The difference between tansig and logsig: why do BP networks favor tansig?

2022-07-07 01:30:00 【Old cake explanation BP neural network】

Original article. When reprinting, please credit 《Old cake explains neural networks》: bp.bbbdata.com

About 《Old cake explains neural networks》:

This website gives a structured explanation of neural network theory, principles, and code.

It reproduces the algorithms of the MATLAB Neural Network Toolbox and is a useful aid for learning neural networks.

Why do BP neural networks generally use tansig? Many people find this puzzling.

Below we examine the formulas, characteristics, and derivatives of tansig and logsig,

and try to find out why tansig tends to be preferred.

01. Formula analysis

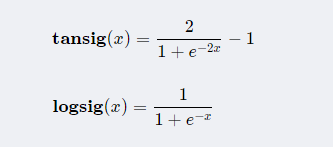

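The formula

The formulas for tansig and logsig are as follows:

$$\mathrm{tansig}(x) = \frac{2}{1 + e^{-2x}} - 1$$

$$\mathrm{logsig}(x) = \frac{1}{1 + e^{-x}}$$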

Analysis

Judging from the formulas, the two functions differ very little:

tansig is simply logsig stretched and shifted, since tansig(x) = 2·logsig(2x) − 1.

Both rely on one exponential evaluation, so there is no difference in computational cost.

Therefore, at the formula level, there is no reason to prefer tansig.
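The stretch-and-shift relationship can be checked numerically. The following is a minimal sketch, assuming the standard definitions tansig(x) = 2/(1+e^(-2x)) − 1 and logsig(x) = 1/(1+e^(-x)); the Python functions `tansig` and `logsig` are stand-ins written for illustration, not the MATLAB toolbox functions themselves.

```python
import math

def logsig(x):
    """Logistic sigmoid: 1 / (1 + exp(-x)), with range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def tansig(x):
    """Hyperbolic tangent sigmoid: 2 / (1 + exp(-2x)) - 1, with range (-1, 1)."""
    return 2.0 / (1.0 + math.exp(-2.0 * x)) - 1.0

# Sample points chosen arbitrarily for the check.
samples = [-3.0, -1.0, 0.0, 0.5, 2.0]

# Largest gap between tansig(x) and the stretched, shifted logsig: 2*logsig(2x) - 1.
max_gap = max(abs(tansig(x) - (2.0 * logsig(2.0 * x) - 1.0)) for x in samples)

# Largest gap between tansig(x) and the standard library tanh(x).
max_gap_tanh = max(abs(tansig(x) - math.tanh(x)) for x in samples)

print(max_gap, max_gap_tanh)
```

Both gaps should be at the level of floating-point rounding, which is consistent with tansig being a rescaled logsig (and numerically the same as tanh).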

02. Characteristic analysis

Characteristics

For a one-dimensional input, tansig is an S-shaped curve.

● Its range is (-1, 1).

● Its nonlinear part is concentrated mainly in [-1.7, 1.7].

● Outside [-1.7, 1.7], tansig gradually saturates.

For a one-dimensional input, logsig is also an S-shaped curve.

● Its range is (0, 1).

● Its nonlinear part is concentrated mainly in [-1.7, 1.7].

● Outside [-1.7, 1.7], logsig gradually saturates.

Analysis

Comparing their characteristics reveals no qualitative difference between the two,

because tansig is just logsig stretched and shifted so that its range becomes (-1, 1).

The only real difference is the output range: (-1, 1) for tansig versus (0, 1) for logsig.
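To make the ranges and the saturation behavior concrete, the sketch below evaluates both functions at zero, at the ±1.7 boundary quoted above, and at a point well outside it. It assumes the standard definitions of the two functions; the sample points and the `rows` list are chosen here purely for illustration.

```python
import math

def logsig(x):
    """Logistic sigmoid: 1 / (1 + exp(-x)), with range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def tansig(x):
    """Hyperbolic tangent sigmoid: 2 / (1 + exp(-2x)) - 1, with range (-1, 1)."""
    return 2.0 / (1.0 + math.exp(-2.0 * x)) - 1.0

# Illustrative sample points: the centre, the quoted +/-1.7 boundary, and +/-5.
points = [-5.0, -1.7, 0.0, 1.7, 5.0]

# Each row holds (x, tansig(x), logsig(x)).
rows = [(x, tansig(x), logsig(x)) for x in points]

for x, t, s in rows:
    print(f"x={x:+.1f}  tansig={t:+.4f}  logsig={s:.4f}")
```

The printed values show tansig staying inside (-1, 1) and centred on 0, while logsig stays inside (0, 1) and is centred on 0.5.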

03. Derivative Analysis

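Derivative

The derivative of tansig is:

$$\mathrm{tansig}'(x) = 1 - \mathrm{tansig}(x)^2$$

The derivative of logsig is:

$$\mathrm{logsig}'(x) = \mathrm{logsig}(x)\,\bigl(1 - \mathrm{logsig}(x)\bigr)$$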

Analysis

Comparing the derivatives,

both can be computed directly from the function's own output value,

and the amount of computation is the same.

Therefore tansig has no notable advantage in terms of derivatives either,

and this is not a reason to prefer tansig.
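The point that each derivative can be obtained from the function's own output can be sketched as follows. This assumes the standard identities tansig'(x) = 1 − tansig(x)² and logsig'(x) = logsig(x)(1 − logsig(x)); the helper names `dtansig_from_output`, `dlogsig_from_output`, and `central_diff` are hypothetical and exist only for this check.

```python
import math

def logsig(x):
    """Logistic sigmoid: 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

def tansig(x):
    """Hyperbolic tangent sigmoid: 2 / (1 + exp(-2x)) - 1."""
    return 2.0 / (1.0 + math.exp(-2.0 * x)) - 1.0

def dtansig_from_output(y):
    """Derivative of tansig expressed through its output y = tansig(x)."""
    return 1.0 - y * y

def dlogsig_from_output(y):
    """Derivative of logsig expressed through its output y = logsig(x)."""
    return y * (1.0 - y)

def central_diff(f, x, h=1e-6):
    """Numerical derivative of f at x by central differences."""
    return (f(x + h) - f(x - h)) / (2.0 * h)

x = 0.8  # arbitrary test point

# Compare the output-based derivative with the numerical one.
err_tansig = abs(dtansig_from_output(tansig(x)) - central_diff(tansig, x))
err_logsig = abs(dlogsig_from_output(logsig(x)) - central_diff(logsig, x))

print(err_tansig, err_logsig)
```

In both cases the derivative costs one multiplication and one subtraction once the forward output is known, which matches the observation that neither function is cheaper to differentiate.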

The author's view

From the analysis above, it is hard to see what advantage tansig has over logsig.

So why use tansig?

The author's view is:

First, it unifies the input range.

Second, it makes full use of the active interval of the activation function.

We know that each layer's input is the output of the layer before it,

and the active interval of both tansig and logsig is roughly [-1.7, 1.7].

At the input layer, we normally normalize the inputs to [-1, 1],

which makes effective use of the active interval of the first hidden layer's activation function.

With tansig, when there are multiple hidden layers,

the output of each layer, that is, the input of the next layer, still lies within (-1, 1).

In this way the input range of every layer is unified,

and every layer makes effective use of the active interval of its activation function.
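The argument about a unified range can be illustrated with a toy chain of one-neuron layers. This is only a sketch under stated assumptions: the weights and biases in `weights` are hypothetical values picked for illustration, and `layer_output_ranges` is a helper invented here, not part of any toolbox.

```python
import math

def logsig(x):
    """Logistic sigmoid: 1 / (1 + exp(-x)), with range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))

def tansig(x):
    """Hyperbolic tangent sigmoid: 2 / (1 + exp(-2x)) - 1, with range (-1, 1)."""
    return 2.0 / (1.0 + math.exp(-2.0 * x)) - 1.0

def layer_output_ranges(act, inputs, weights):
    """Pass scalar inputs through a chain of one-neuron layers.

    Returns the (min, max) of the outputs after each layer.
    """
    ranges = []
    values = list(inputs)
    for w, b in weights:
        values = [act(w * v + b) for v in values]
        ranges.append((min(values), max(values)))
    return ranges

# Inputs normalized to [-1, 1], as described in the text.
inputs = [i / 10.0 for i in range(-10, 11)]

# Hypothetical (weight, bias) pairs for three stacked layers.
weights = [(1.5, 0.0), (-1.2, 0.1), (0.8, -0.2)]

tansig_ranges = layer_output_ranges(tansig, inputs, weights)
logsig_ranges = layer_output_ranges(logsig, inputs, weights)

for k, (t, s) in enumerate(zip(tansig_ranges, logsig_ranges), start=1):
    print(f"layer {k}: tansig outputs in [{t[0]:+.3f}, {t[1]:+.3f}], "
          f"logsig outputs in [{s[0]:.3f}, {s[1]:.3f}]")
```

With tansig, every layer hands the next one values inside (-1, 1), the same interval as the normalized network input. With logsig, the values handed on are always positive, so later layers see a different input range from the first one.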

This uniformity is very beneficial.

At least in theoretical analysis, it brings a lot of convenience;

otherwise the input layer and the hidden layers would have to be discussed separately.

The above is only the author's view. It is not backed by a literature survey and is for reference only.

边栏推荐

- Atomic in golang and CAS operations

- According to the analysis of the Internet industry in 2022, how to choose a suitable position?

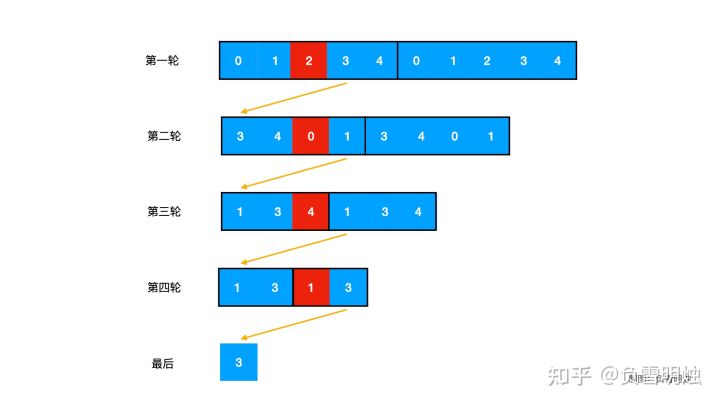

- 剑指 Offer II 035. 最小时间差-快速排序加数据转换

- C语言实例_2

- Sword finger offer II 035 Minimum time difference - quick sort plus data conversion

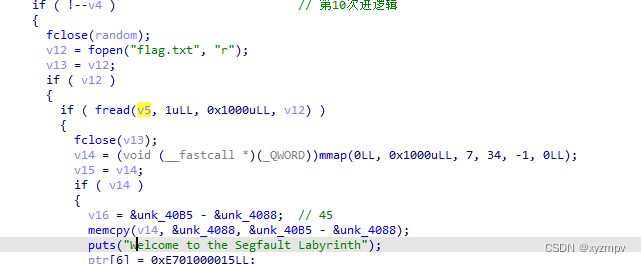

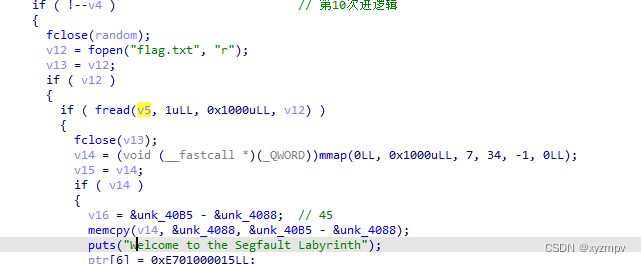

- 2022 Google CTF SEGFAULT LABYRINTH wp

- LeetCode. 剑指offer 62. 圆圈中最后剩下的数

- 树莓派/arm设备上安装火狐Firefox浏览器

- THREE. AxesHelper is not a constructor

- 云呐|工单管理软件,工单管理软件APP

猜你喜欢

AI automatically generates annotation documents from code

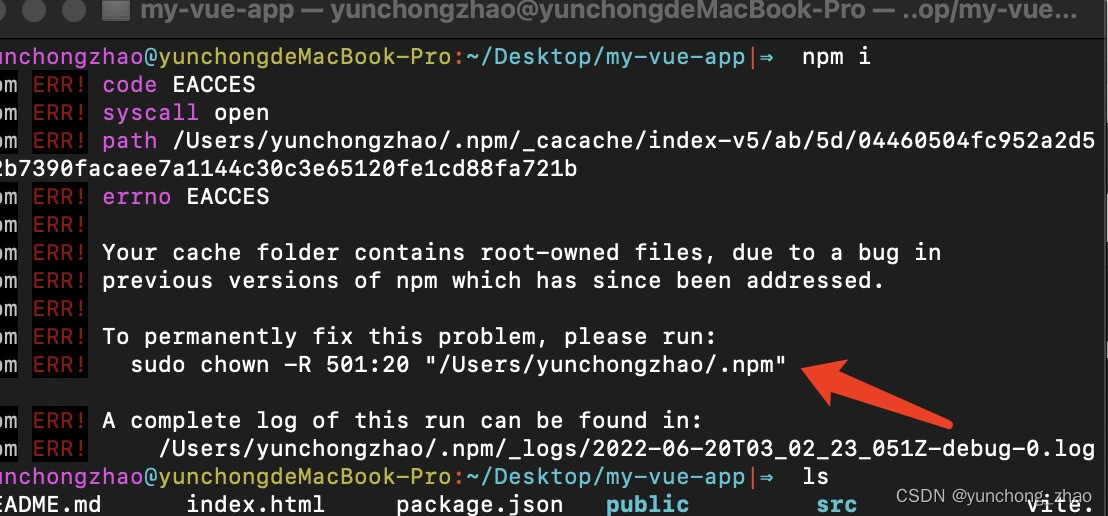

Your cache folder contains root-owned files, due to a bug in npm ERR! previous versions of npm which

2022 Google CTF SEGFAULT LABYRINTH wp

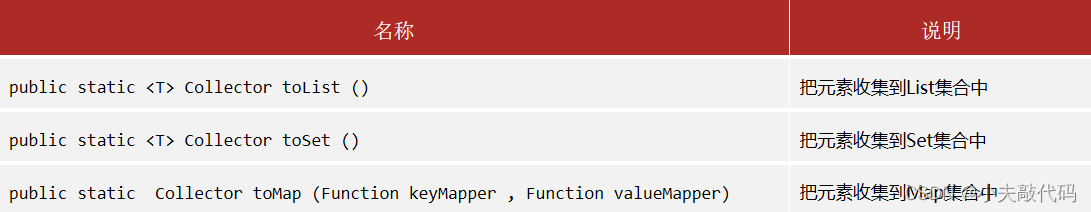

黑马笔记---创建不可变集合与Stream流

Byte P7 professional level explanation: common tools and test methods for interface testing, Freeman

2022 Google CTF SEGFAULT LABYRINTH wp

微信公众号发送模板消息

![[advanced C language] 8 written questions of pointer](/img/d4/c9bb2c8c9fd8f54a36e463e3eb2fe0.png)

[advanced C language] 8 written questions of pointer

LeetCode. 剑指offer 62. 圆圈中最后剩下的数

云呐|工单管理软件,工单管理软件APP

随机推荐

Comparison of picture beds of free white whoring

Neon Optimization: an optimization case of log10 function

Let's see through the network i/o model from beginning to end

Instructions for using the domain analysis tool bloodhound

云呐|工单管理办法,如何开展工单管理

一起看看matlab工具箱内部是如何实现BP神经网络的

[chip scheme design] pulse oximeter

Yunna | work order management software, work order management software app

接收用户输入,身高BMI体重指数检测小业务入门案例

swiper组件中使用video导致全屏错位

tansig和logsig的差异,为什么BP喜欢用tansig

Go zero micro service practical series (IX. ultimate optimization of seckill performance)

THREE.AxesHelper is not a constructor

C language instance_ three

从底层结构开始学习FPGA----FIFO IP的定制与测试

增加 pdf 标题浮窗

The MySQL database in Alibaba cloud was attacked, and finally the data was found

Oracle: Practice of CDB restricting PDB resources

Table table setting fillet

How to evaluate load balancing performance parameters?