
[Deep Learning] A Review of PyTorch's 19 Loss Functions

2022-07-04 20:27:00 【Demeanor 78】

Shared for academic purposes only; this does not represent the position of this official account. Please contact us for removal in case of infringement.

Reprinted from: author mingo_ Sensitive

Original article: https://blog.csdn.net/shanglianlm/article/details/85019768

Reading guide

This article summarizes nineteen loss functions and introduces their mathematical formulas and code usage. We hope it helps you master them.

01

Basic usage

criterion = LossCriterion()  # the constructor takes its own arguments

loss = criterion(x, y)  # calling the criterion also takes arguments

02

Loss function

2-1 L1 Norm loss L1Loss

Computes the absolute value of the difference between output and target.

torch.nn.L1Loss(reduction='mean')

Parameters:

reduction (str, optional) – one of three values. none: no reduction is applied; mean: returns the mean of the losses; sum: returns the sum of the losses. Default: mean.
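To make the formula concrete, here is a minimal plain-Python sketch of what L1Loss computes for 1-D inputs, including the three reduction modes. The helper name `l1_loss` is hypothetical and this is not PyTorch's implementation; `torch.nn.L1Loss()` should give the same value as the default mean reduction for the same inputs.

```python
def l1_loss(output, target, reduction="mean"):
    """Sketch of L1Loss for 1-D lists: elementwise |output - target|, then reduced."""
    losses = [abs(o - t) for o, t in zip(output, target)]
    if reduction == "none":
        return losses                 # one loss per element
    if reduction == "sum":
        return sum(losses)            # total loss
    return sum(losses) / len(losses)  # "mean" (the default)


print(l1_loss([1.0, 2.0, 3.0], [2.0, 2.0, 5.0]))  # prints 1.0
```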

2-2 Mean squared error loss MSELoss

Computes the mean squared error between output and target.

torch.nn.MSELoss(reduction='mean')

Parameters:

reduction (str, optional) – one of three values. none: no reduction is applied; mean: returns the mean of the losses; sum: returns the sum of the losses. Default: mean.

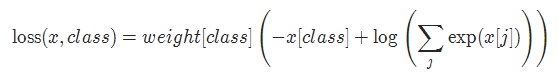
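A minimal plain-Python sketch of the mean squared error (hypothetical helper `mse_loss`, mean reduction only). Because each difference is squared, a single large error dominates the loss far more than it would in L1Loss.

```python
def mse_loss(output, target):
    """Sketch of MSELoss with reduction='mean': average of (output - target) ** 2."""
    squared = [(o - t) ** 2 for o, t in zip(output, target)]
    return sum(squared) / len(squared)


# The single error of 2 contributes 4 to the sum, so the mean is 4 / 2 = 2.0.
print(mse_loss([1.0, 2.0], [3.0, 2.0]))  # prints 2.0
```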

2-3 Cross entropy loss CrossEntropyLoss

Useful when training a classification problem with C classes. The optional weight argument, if given, must be a 1-D Tensor that assigns a weight to each class, which is very effective for imbalanced training sets.

In multi-class tasks, a softmax activation is typically paired with the cross-entropy loss. Cross-entropy describes the difference between two probability distributions, but a neural network outputs a vector of scores, not a probability distribution. Softmax therefore "normalizes" the vector into a probability distribution, and the cross-entropy loss is then computed on it. Note that torch.nn.CrossEntropyLoss applies log-softmax internally (it combines LogSoftmax and NLLLoss), so it expects raw, unnormalized scores (logits), not softmax outputs.

torch.nn.CrossEntropyLoss(weight=None, ignore_index=-100, reduction='mean')

Parameters:

weight (Tensor, optional) – a manual weight for each class. Must be a Tensor of length C.

ignore_index (int, optional) – a target value that is ignored and does not contribute to the input gradient.

reduction (str, optional) – one of three values. none: no reduction is applied; mean: returns the mean of the losses; sum: returns the sum of the losses. Default: mean.
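The softmax-plus-cross-entropy combination can be sketched in plain Python as a log-softmax followed by picking the log-probability of the target class. This is an illustrative sketch with hypothetical helper names (no weight or ignore_index), not PyTorch's code; like torch.nn.CrossEntropyLoss, it takes raw logits.

```python
import math


def log_softmax(logits):
    """Numerically stable log-softmax: subtract the max before exponentiating."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum_exp for z in logits]


def cross_entropy(batch_logits, targets):
    """Mean over the batch of -log(softmax(logits)[target])."""
    losses = [-log_softmax(logits)[t] for logits, t in zip(batch_logits, targets)]
    return sum(losses) / len(losses)


# Equal scores over 4 classes give the target probability 1/4, so the loss is log(4).
print(cross_entropy([[0.0, 0.0, 0.0, 0.0]], [2]))
```

Subtracting the maximum logit keeps `exp()` from overflowing: with logits such as `[1000.0, 0.0]`, a naive `math.exp(1000.0)` raises `OverflowError` in Python.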

2-4 KL Divergence loss KLDivLoss

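Computes the KL divergence between input and target. KL divergence measures the distance between continuous distributions and is effective for direct regression over a space of continuous output distributions (sampled discretely). The input is expected to contain log-probabilities and the target to contain probabilities.

torch.nn.KLDivLoss(reduction='mean')

Parameters:

reduction (str, optional) – none: no reduction is applied; mean: returns the mean over all elements; sum: returns the sum of the losses. Default: mean. PyTorch also accepts batchmean (the sum divided by the batch size), which matches the mathematical definition of KL divergence; mean averages over every element instead.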
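A minimal plain-Python sketch of the pointwise formula PyTorch documents for KLDivLoss, l = target * (log(target) - input), shows why the input must already be log-probabilities and how mean differs from batchmean. The helper name is hypothetical; this is not PyTorch's implementation.

```python
import math


def kl_div_loss(log_input, target, reduction="mean"):
    """Sketch of KLDivLoss: pointwise target * (log(target) - log_input).

    log_input holds log-probabilities, target holds probabilities; both are
    lists of rows (one distribution per batch element). Terms with target == 0
    contribute 0, following the convention 0 * log(0) = 0.
    """
    pointwise = [
        [t * (math.log(t) - x) if t > 0 else 0.0 for x, t in zip(row_x, row_t)]
        for row_x, row_t in zip(log_input, target)
    ]
    total = sum(sum(row) for row in pointwise)
    if reduction == "sum":
        return total
    if reduction == "batchmean":
        return total / len(pointwise)  # KL divergence averaged per sample
    if reduction == "mean":
        return total / sum(len(row) for row in pointwise)  # averaged per element
    return pointwise  # "none"


p = [[0.5, 0.5]]                            # target distribution
log_q = [[math.log(0.25), math.log(0.75)]]  # model output as log-probabilities
print(kl_div_loss(log_q, p, "batchmean"))   # KL(p || q)
```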

2-5 Binary cross entropy loss BCELoss

Computes the cross-entropy for binary classification tasks. It is also used to measure reconstruction error, for example in autoencoders. The input must contain probabilities (for example, the output of a Sigmoid), and the target values t[i] must lie between 0 and 1.

torch.nn.BCELoss(weight=None, reduction='mean')

Parameters:

weight (Tensor, optional) – a manual weight for the loss of each batch element. Must be a Tensor of length nbatch.

BCELoss takes no pos_weight argument; per-class weighting of positive samples is available in BCEWithLogitsLoss.
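A minimal plain-Python sketch of the binary cross-entropy with an optional per-element weight (hypothetical helper `bce_loss`, mean reduction only). It assumes the inputs are already probabilities strictly between 0 and 1.

```python
import math


def bce_loss(probs, targets, weight=None):
    """Sketch of BCELoss (mean reduction):
    -w_i * [t_i * log(x_i) + (1 - t_i) * log(1 - x_i)], averaged over elements.

    probs must lie strictly between 0 and 1 (e.g. sigmoid outputs); PyTorch
    additionally clamps the log terms at -100, which is omitted here.
    """
    if weight is None:
        weight = [1.0] * len(probs)
    losses = [
        -w * (t * math.log(x) + (1 - t) * math.log(1 - x))
        for x, t, w in zip(probs, targets, weight)
    ]
    return sum(losses) / len(losses)


# A confident correct prediction costs little; a confident wrong one costs a lot.
print(bce_loss([0.9], [1.0]), bce_loss([0.9], [0.0]))
```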

2-6 BCEWithLogitsLoss

BCEWithLogitsLoss integrates a Sigmoid layer into the BCELoss class. This version is numerically more stable than a plain Sigmoid followed by a separate BCELoss, because merging the two operations into one layer lets the implementation use the log-sum-exp trick for numerical stability.

torch.nn.BCEWithLogitsLoss(weight=None, reduction='mean', pos_weight=None)

Parameters:

weight (Tensor, optional) – a manual weight for the loss of each batch element. Must be a Tensor of length nbatch.

pos_weight (Tensor, optional) – a weight for the loss of positive samples. Must be a Tensor whose length equals the number of classes.

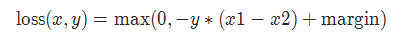
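The stability argument can be made concrete. With a positive-sample weight pw, the loss -[pw * t * log(sigmoid(x)) + (1 - t) * log(1 - sigmoid(x))] rewrites to (1 - t) * x + (1 + (pw - 1) * t) * log(1 + exp(-x)), and log(1 + exp(-x)) can be evaluated as max(-x, 0) + log1p(exp(-|x|)) without overflow. Below is a minimal plain-Python sketch for a single class with a scalar pos_weight (hypothetical helper names; PyTorch applies pos_weight per class).

```python
import math


def log1p_exp_neg(x):
    """log(1 + exp(-x)) computed without overflow for large |x|."""
    return max(-x, 0.0) + math.log1p(math.exp(-abs(x)))


def bce_with_logits(logits, targets, pos_weight=1.0):
    """Sketch of BCEWithLogitsLoss (mean reduction, one class, scalar pos_weight)."""
    losses = []
    for x, t in zip(logits, targets):
        log_weight = 1.0 + (pos_weight - 1.0) * t  # pos_weight only scales positives
        losses.append((1.0 - t) * x + log_weight * log1p_exp_neg(x))
    return sum(losses) / len(losses)


# sigmoid(1000.0) rounds to 1.0, so a separate sigmoid + log(1 - p) would break down;
# the fused form returns the exact loss.
print(bce_with_logits([1000.0], [0.0]))  # prints 1000.0
```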

2-7 MarginRankingLoss

torch.nn.MarginRankingLoss(margin=0.0, reduction='mean')

For each sample in a mini-batch, the loss is computed as follows:

Parameters:

margin: The default value is 0

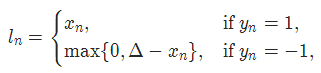
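For each pair, the loss documented for MarginRankingLoss is max(0, -y * (x1 - x2) + margin), where y in {1, -1} says which input should rank higher. A minimal plain-Python sketch (hypothetical helper name, mean reduction only):

```python
def margin_ranking_loss(x1, x2, y, margin=0.0):
    """Sketch of MarginRankingLoss (mean reduction): max(0, -y * (x1 - x2) + margin).

    y = 1 means x1 should be ranked higher than x2; y = -1 means the opposite.
    """
    losses = [max(0.0, -yi * (a - b) + margin) for a, b, yi in zip(x1, x2, y)]
    return sum(losses) / len(losses)


print(margin_ranking_loss([2.0], [1.0], [1]))   # correct order: 0.0
print(margin_ranking_loss([2.0], [1.0], [-1]))  # wrong order: 1.0
```

A positive margin keeps penalizing correctly ordered pairs until they are separated by at least that amount.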

2-8 HingeEmbeddingLoss

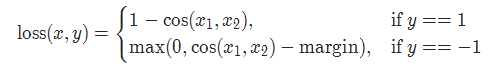

torch.nn.HingeEmbeddingLoss(margin=1.0, reduction='mean')

For each sample in a mini-batch, the loss is computed as follows:

Parameters:

margin: The default value is 1

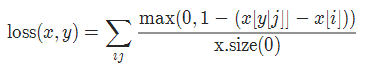

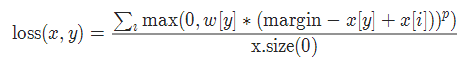
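HingeEmbeddingLoss penalizes the input value itself for positive pairs (y = 1) and pushes it above the margin for negative pairs (y = -1): l = x if y = 1, and l = max(0, margin - x) if y = -1. A minimal plain-Python sketch with a hypothetical helper name:

```python
def hinge_embedding_loss(x, y, margin=1.0):
    """Sketch of HingeEmbeddingLoss (mean reduction).

    x is typically a distance (e.g. the L1 distance between two embeddings);
    y = 1 marks similar pairs, y = -1 dissimilar ones.
    """
    losses = [xi if yi == 1 else max(0.0, margin - xi) for xi, yi in zip(x, y)]
    return sum(losses) / len(losses)


# A similar pair at distance 0.5 costs 0.5; a dissimilar pair at 0.25 costs 1 - 0.25.
print(hinge_embedding_loss([0.5, 0.25], [1, -1]))  # prints 0.625
```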

2-9 Multi label classification loss MultiLabelMarginLoss

torch.nn.MultiLabelMarginLoss(reduction='mean')

For each sample in a mini-batch, the loss is computed as follows:

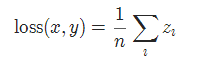
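The per-sample formula documented for MultiLabelMarginLoss sums a hinge term max(0, 1 - (x[y[j]] - x[i])) over every pair of a target class y[j] and a non-target class i, then divides by the number of classes. The target y lists class indices and is terminated by the first -1. A minimal plain-Python sketch for one sample (hypothetical helper name):

```python
def multilabel_margin_loss(x, y):
    """Sketch of MultiLabelMarginLoss for a single sample.

    x: scores for C classes; y: target class indices padded with -1.
    Entries from the first -1 onward are ignored.
    """
    targets = []
    for j in y:
        if j < 0:
            break
        targets.append(j)
    target_set = set(targets)
    total = 0.0
    for j in targets:                # each target class ...
        for i in range(len(x)):      # ... against each non-target class
            if i not in target_set:
                total += max(0.0, 1.0 - (x[j] - x[i]))
    return total / len(x)


# Targets are classes 3 and 0 (the trailing 1 after -1 is ignored).
print(multilabel_margin_loss([0.1, 0.2, 0.4, 0.8], [3, 0, -1, 1]))  # approx 0.85
```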

2-10 Smooth L1 loss SmoothL1Loss

Also known as the Huber loss (the two are identical with the default threshold of 1).

torch.nn.SmoothL1Loss(reduction='mean')

where:

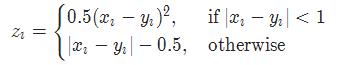
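SmoothL1Loss is quadratic for small errors and linear for large ones: 0.5 * d^2 / beta when |d| < beta, otherwise |d| - 0.5 * beta, with d = output - target. Recent PyTorch versions expose this threshold as `beta` (default 1.0), although the signature above lists only reduction. A minimal plain-Python sketch with a hypothetical helper name, mean reduction only:

```python
def smooth_l1_loss(output, target, beta=1.0):
    """Sketch of SmoothL1Loss (mean reduction)."""
    losses = []
    for o, t in zip(output, target):
        d = abs(o - t)
        if d < beta:
            losses.append(0.5 * d * d / beta)  # quadratic zone: smooth near 0
        else:
            losses.append(d - 0.5 * beta)      # linear zone: robust to outliers
    return sum(losses) / len(losses)


# Error 0.5 falls in the quadratic zone (0.125), error 3 in the linear zone (2.5).
print(smooth_l1_loss([0.5, 3.0], [0.0, 0.0]))  # prints 1.3125
```

The two branches meet at |d| = beta with equal value, which is what keeps the loss continuous.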

2-11 Two-class logistic loss SoftMarginLoss

torch.nn.SoftMarginLoss(reduction='mean')

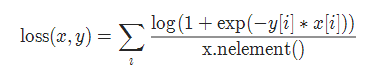

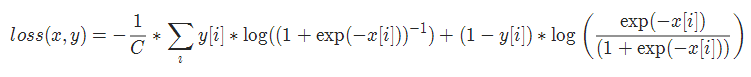
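SoftMarginLoss is the two-class logistic loss: for labels y in {1, -1} it averages log(1 + exp(-y * x)). A minimal plain-Python sketch (hypothetical helper names), written with a stable softplus so that large negative margins do not overflow `exp()`:

```python
import math


def softplus(z):
    """log(1 + exp(z)) computed without overflow for large z."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def soft_margin_loss(x, y):
    """Sketch of SoftMarginLoss: mean of log(1 + exp(-y_i * x_i)), y_i in {1, -1}."""
    return sum(softplus(-yi * xi) for xi, yi in zip(x, y)) / len(x)


print(soft_margin_loss([2.0, -2.0], [1, -1]))  # both correct: small loss
print(soft_margin_loss([2.0, -2.0], [-1, 1]))  # both wrong: large loss
```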

2-12 Multi-label one-versus-all loss MultiLabelSoftMarginLoss

torch.nn.MultiLabelSoftMarginLoss(weight=None, reduction='mean')
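MultiLabelSoftMarginLoss treats each of the C classes as an independent binary problem (one-versus-all) and averages a sigmoid cross-entropy over the classes, -(1/C) * sum_i w_i * [y_i * log(sigmoid(x_i)) + (1 - y_i) * log(1 - sigmoid(x_i))], then averages over the batch. A minimal plain-Python sketch with hypothetical helper names:

```python
import math


def softplus(z):
    """log(1 + exp(z)) computed without overflow."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def multilabel_soft_margin_loss(batch_x, batch_y, weight=None):
    """Sketch of MultiLabelSoftMarginLoss (mean reduction).

    batch_x: rows of C logits; batch_y: rows of C labels in {0, 1}.
    Uses -log(sigmoid(x)) = softplus(-x) and -log(1 - sigmoid(x)) = softplus(x).
    """
    per_sample = []
    for x, y in zip(batch_x, batch_y):
        w = weight if weight is not None else [1.0] * len(x)
        total = sum(
            wi * (yi * softplus(-xi) + (1 - yi) * softplus(xi))
            for xi, yi, wi in zip(x, y, w)
        )
        per_sample.append(total / len(x))     # average over the C classes
    return sum(per_sample) / len(per_sample)  # average over the batch


# Classes 0 and 2 are present, class 1 is absent.
print(multilabel_soft_margin_loss([[3.0, -3.0, 3.0]], [[1, 0, 1]]))
```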

2-13 Cosine loss CosineEmbeddingLoss

torch.nn.CosineEmbeddingLoss(margin=0.0, reduction='mean')

Parameters:

margin: The default value is 0
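CosineEmbeddingLoss judges whether two inputs are similar by their cosine similarity: 1 - cos(x1, x2) for y = 1, and max(0, cos(x1, x2) - margin) for y = -1. A minimal plain-Python sketch with hypothetical helper names:

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (no guard against zero-length vectors)."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def cosine_embedding_loss(batch_x1, batch_x2, y, margin=0.0):
    """Sketch of CosineEmbeddingLoss (mean reduction).

    y = 1: pull the pair together, loss = 1 - cos(x1, x2).
    y = -1: push the pair apart, loss = max(0, cos(x1, x2) - margin).
    """
    losses = []
    for a, b, label in zip(batch_x1, batch_x2, y):
        cos = cosine_similarity(a, b)
        losses.append(1.0 - cos if label == 1 else max(0.0, cos - margin))
    return sum(losses) / len(losses)


# A parallel pair labelled similar costs 0; an orthogonal pair labelled similar costs 1.
print(cosine_embedding_loss([[1.0, 0.0], [1.0, 0.0]], [[2.0, 0.0], [0.0, 3.0]], [1, 1]))  # prints 0.5
```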

2-14 Multi-class hinge loss MultiMarginLoss

torch.nn.MultiMarginLoss(p=1, margin=1.0, weight=None, reduction='mean')

Parameters:

p: 1 or 2. Default: 1

margin: The default value is 1

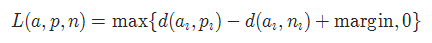

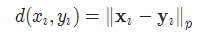
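For a sample with true class y, MultiMarginLoss adds max(0, margin - x[y] + x[i]) ** p for every wrong class i and divides by the number of classes, so the true class must beat every other score by at least the margin. A minimal plain-Python sketch (hypothetical helper name, mean reduction):

```python
def multi_margin_loss(batch_x, targets, p=1, margin=1.0, weight=None):
    """Sketch of MultiMarginLoss (mean reduction).

    For each sample, every wrong class i contributes
    max(0, margin - x[y] + x[i]) ** p, optionally scaled by weight[y];
    the sum is divided by the number of classes C.
    """
    losses = []
    for x, y in zip(batch_x, targets):
        w = 1.0 if weight is None else weight[y]
        total = sum(
            w * max(0.0, margin - x[y] + x[i]) ** p
            for i in range(len(x))
            if i != y
        )
        losses.append(total / len(x))
    return sum(losses) / len(losses)


scores = [[0.1, 0.2, 0.4, 0.8]]  # the correct class 3 wins, but not by margin 1
print(multi_margin_loss(scores, [3]))       # approx 0.325
print(multi_margin_loss(scores, [3], p=2))  # approx 0.1525
```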

2-15 Triplet loss TripletMarginLoss

torch.nn.TripletMarginLoss(margin=1.0, p=2.0, eps=1e-06, swap=False, reduction='mean')

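where, for an anchor a_i, a positive sample p_i and a negative sample n_i, the loss is L(a, p, n) = max{d(a_i, p_i) - d(a_i, n_i) + margin, 0}, with d(x_i, y_i) = ||x_i - y_i||_p.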
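TripletMarginLoss pulls an anchor toward a positive sample and away from a negative one: max(d(anchor, positive) - d(anchor, negative) + margin, 0), with d the p-norm distance. The minimal plain-Python sketch below (hypothetical helper names) omits the small eps PyTorch adds inside the distance and the swap option.

```python
def p_norm_distance(u, v, p=2.0):
    """||u - v||_p for two equal-length vectors."""
    return sum(abs(a - b) ** p for a, b in zip(u, v)) ** (1.0 / p)


def triplet_margin_loss(anchors, positives, negatives, margin=1.0, p=2.0):
    """Sketch of TripletMarginLoss (mean reduction, no eps, no swap)."""
    losses = [
        max(p_norm_distance(a, pos, p) - p_norm_distance(a, neg, p) + margin, 0.0)
        for a, pos, neg in zip(anchors, positives, negatives)
    ]
    return sum(losses) / len(losses)


anchor = [[0.0, 0.0]]
near, far = [[0.0, 1.0]], [[3.0, 4.0]]  # distances 1 and 5 from the anchor
print(triplet_margin_loss(anchor, near, far))  # well separated: 0.0
print(triplet_margin_loss(anchor, far, near))  # positive and negative swapped: 5.0
```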

2-16 Connectionist temporal classification loss CTCLoss

CTC (connectionist temporal classification) loss can automatically align data that is not aligned. It is mainly used to train on sequence data that has not been aligned in advance, such as in speech recognition and OCR.

torch.nn.CTCLoss(blank=0, reduction='mean')

Parameters:

reduction (str, optional) – none: no reduction is applied; mean: each sequence's loss is divided by its target length and the results are averaged over the batch; sum: returns the sum of the losses. Default: mean.
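CTC sums the probability of every frame-level alignment that collapses to the target once repeated labels are merged and blanks are removed. That sum is computed with the forward (alpha) recursion over the target interleaved with blanks. A minimal plain-Python sketch for a single sequence (hypothetical helper names; log_probs are per-frame log-probabilities, as torch.nn.CTCLoss expects from a log_softmax):

```python
import math

NEG_INF = -math.inf


def log_add(a, b):
    """log(exp(a) + exp(b)), safe when either side is -inf."""
    if a == NEG_INF:
        return b
    if b == NEG_INF:
        return a
    m = max(a, b)
    return m + math.log(math.exp(a - m) + math.exp(b - m))


def ctc_nll(log_probs, target, blank=0):
    """Negative log-likelihood of `target` under CTC for one sequence.

    log_probs: T rows, each holding log-probabilities over C classes.
    target: label indices, without blanks.
    """
    ext = [blank]                        # target interleaved with blanks: b l1 b l2 ... b
    for label in target:
        ext += [label, blank]
    S = len(ext)
    alpha = [NEG_INF] * S
    alpha[0] = log_probs[0][ext[0]]      # start with a blank ...
    if S > 1:
        alpha[1] = log_probs[0][ext[1]]  # ... or with the first label
    for t in range(1, len(log_probs)):
        new = [NEG_INF] * S
        for s in range(S):
            acc = alpha[s]                                # stay on the same symbol
            if s >= 1:
                acc = log_add(acc, alpha[s - 1])          # advance by one
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                acc = log_add(acc, alpha[s - 2])          # skip a blank between distinct labels
            new[s] = acc + log_probs[t][ext[s]]
        alpha = new
    # Valid alignments end on the last label or on the trailing blank.
    total = alpha[-1] if S == 1 else log_add(alpha[-1], alpha[-2])
    return -total


probs = [[0.2, 0.5, 0.3], [0.4, 0.4, 0.2]]  # T = 2 frames, C = 3 (class 0 = blank)
log_probs = [[math.log(p) for p in row] for row in probs]
# Alignments for target [1]: (1, 1), (blank, 1), (1, blank) -> 0.2 + 0.08 + 0.2 = 0.48
print(ctc_nll(log_probs, [1]))  # equals -log(0.48)
```

A target such as [1, 1] needs a blank between the repeated labels, so it cannot fit in two frames and the sketch returns infinity.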

2-17 Negative log likelihood loss NLLLoss

Negative log-likelihood loss. Useful for training a classification problem with C classes.

torch.nn.NLLLoss(weight=None, ignore_index=-100, reduction='mean')

Parameters:

weight (Tensor, optional) – a manual weight for each class. Must be a Tensor of length C.

ignore_index (int, optional) – a target value that is ignored and does not contribute to the input gradient.
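NLLLoss expects log-probabilities (for example from log_softmax). With the default mean reduction it returns a weighted average: the sum of -weight[y_n] * input[n][y_n] divided by the sum of weight[y_n], skipping samples whose target equals ignore_index. A minimal plain-Python sketch (hypothetical helper name):

```python
def nll_loss(log_probs, targets, weight=None, ignore_index=-100):
    """Sketch of NLLLoss with reduction='mean'."""
    numerator = 0.0
    denominator = 0.0
    for row, y in zip(log_probs, targets):
        if y == ignore_index:
            continue  # ignored samples add nothing to the loss or the normalizer
        w = 1.0 if weight is None else weight[y]
        numerator += -w * row[y]
        denominator += w
    return numerator / denominator


log_probs = [[-1.0, -2.0], [-3.0, -0.5], [-0.1, -4.0]]
targets = [0, 1, -100]  # the third sample is ignored
print(nll_loss(log_probs, targets))                     # (1.0 + 0.5) / 2 = 0.75
print(nll_loss(log_probs, targets, weight=[1.0, 3.0]))  # (1.0 + 1.5) / 4 = 0.625
```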

2-18 NLLLoss2d

Negative log-likelihood loss for image inputs: it computes the negative log-likelihood loss for every pixel. NLLLoss2d is deprecated in recent PyTorch versions; torch.nn.NLLLoss accepts (N, C, H, W) input directly and can be used in its place.

torch.nn.NLLLoss2d(weight=None, ignore_index=-100, reduction='mean')

Parameters:

weight (Tensor, optional) – a manual weight for each class. Must be a Tensor of length C.

reduction (str, optional) – one of three values. none: no reduction is applied; mean: returns the mean of the losses; sum: returns the sum of the losses. Default: mean.
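Per-pixel NLL is the same formula applied at every spatial position: with input of shape (N, C, H, W) and a target map of shape (N, H, W), the unweighted mean reduction averages -input[n][target[n][h][w]][h][w] over all N*H*W pixels. A minimal plain-Python sketch with nested lists (hypothetical helper name, no weight or ignore_index):

```python
def nll_loss_2d(log_probs, targets):
    """Sketch of per-pixel NLL (unweighted mean over all pixels).

    log_probs[n][c][h][w]: log-probability of class c at pixel (h, w) of image n.
    targets[n][h][w]: the true class index at that pixel.
    """
    total = 0.0
    count = 0
    for n, target_map in enumerate(targets):
        for h, row in enumerate(target_map):
            for w, c in enumerate(row):
                total += -log_probs[n][c][h][w]
                count += 1
    return total / count


# One 1x2 image, two classes: pixel 0 is class 0, pixel 1 is class 1.
log_probs = [[[[-1.0, -2.0]], [[-3.0, -4.0]]]]
targets = [[[0, 1]]]
print(nll_loss_2d(log_probs, targets))  # (1.0 + 4.0) / 2 = 2.5
```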

2-19 PoissonNLLLoss

Negative log-likelihood loss for targets that follow a Poisson distribution.

torch.nn.PoissonNLLLoss(log_input=True, full=False, eps=1e-08, reduction='mean')

Parameters:

log_input (bool, optional) – if True, the loss is computed as exp(input) - target * input; if False, it is computed as input - target * log(input + eps).

full (bool, optional) – whether to compute the full loss, i.e. to add the Stirling approximation term target * log(target) - target + 0.5 * log(2 * pi * target).

eps (float, optional) – a small value that avoids evaluating log(0) when log_input=False. Default: 1e-8.
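The two log_input modes and the optional Stirling term can be written out directly. Below is a minimal plain-Python sketch (hypothetical helper name, mean reduction) following the formulas above. PyTorch adds the Stirling term only where target > 1, and the sketch does the same.

```python
import math


def poisson_nll_loss(inputs, targets, log_input=True, full=False, eps=1e-8):
    """Sketch of PoissonNLLLoss (mean reduction).

    log_input=True: inputs are log-rates, loss = exp(input) - target * input.
    log_input=False: inputs are rates, loss = input - target * log(input + eps).
    """
    losses = []
    for x, t in zip(inputs, targets):
        if log_input:
            loss = math.exp(x) - t * x
        else:
            loss = x - t * math.log(x + eps)
        if full and t > 1:
            # Stirling approximation of log(target!), only for target > 1
            loss += t * math.log(t) - t + 0.5 * math.log(2 * math.pi * t)
        losses.append(loss)
    return sum(losses) / len(losses)


# Predicting log-rate 0 (rate 1) for an observed count of 2.
print(poisson_nll_loss([0.0], [2.0]))             # exp(0) - 2 * 0 = 1.0
print(poisson_nll_loss([0.0], [2.0], full=True))  # adds the Stirling estimate of log(2!)
```

The Stirling term does not depend on the input, so it changes the reported loss value but not the gradient with respect to the input.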


Past highlights

It is suitable for beginners to download the route and materials of artificial intelligence ( Image & Text + video ) Introduction to machine learning series download Chinese University Courses 《 machine learning 》( Huang haiguang keynote speaker ) Print materials such as machine learning and in-depth learning notes 《 Statistical learning method 》 Code reproduction album machine learning communication qq Group 955171419, Please scan the code to join wechat group

边栏推荐

- repeat_ P1002 [NOIP2002 popularization group] cross the river pawn_ dp

- 2022 version of stronger jsonpath compatibility and performance test (snack3, fastjson2, jayway.jsonpath)

- Swagger suddenly went crazy

- [QNX hypervisor 2.2 user manual]6.3.1 factory page and control page

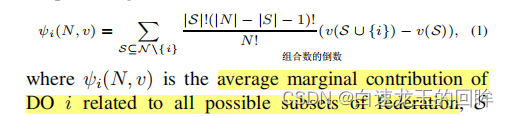

- 关于联邦学习和激励的相关概念(1)

- 更强的 JsonPath 兼容性及性能测试之2022版(Snack3,Fastjson2,jayway.jsonpath)

- Regular replacement [JS, regular expression]

- What financial products can you buy with a deposit of 100000 yuan?

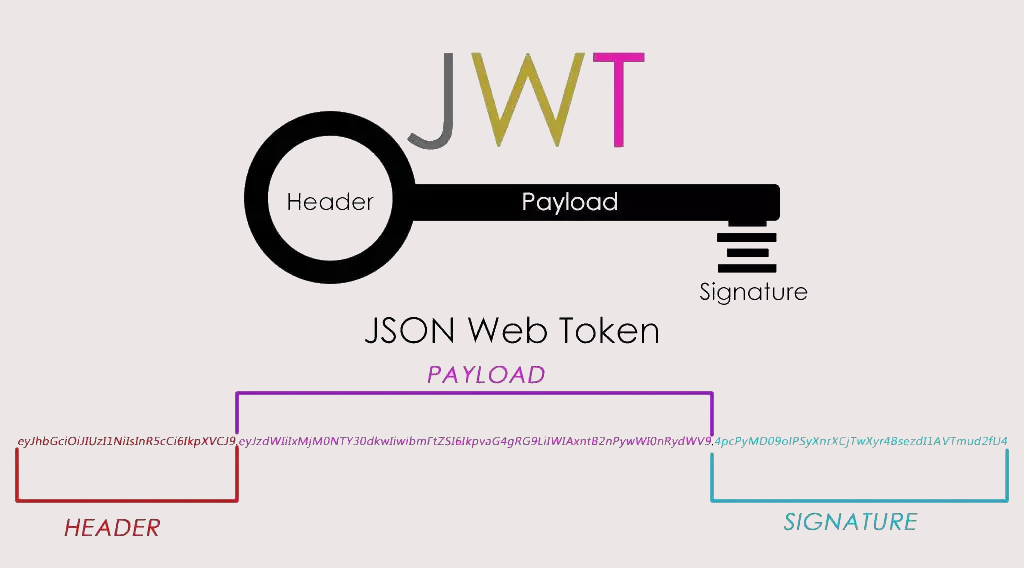

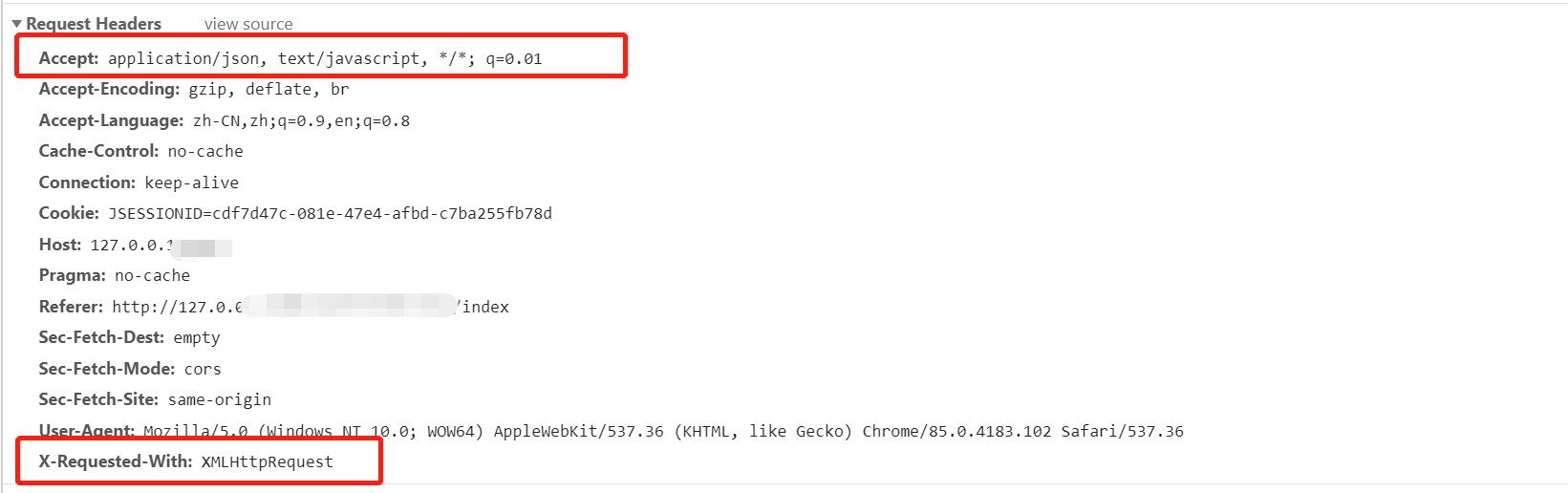

- 实战模拟│JWT 登录认证

- On communication bus arbitration mechanism and network flow control from the perspective of real-time application

猜你喜欢

![[Beijing Xunwei] i.mx6ull development board porting Debian file system](/img/46/abceaebd8fec2370beec958849de18.jpg)

[Beijing Xunwei] i.mx6ull development board porting Debian file system

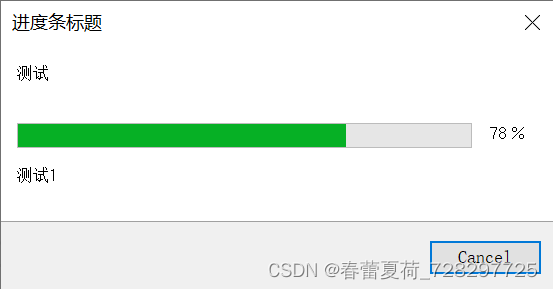

Cbcgpprogressdlg progress bar used by BCG

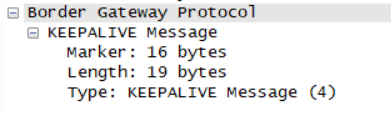

原来这才是 BGP 协议

什么叫内卷?

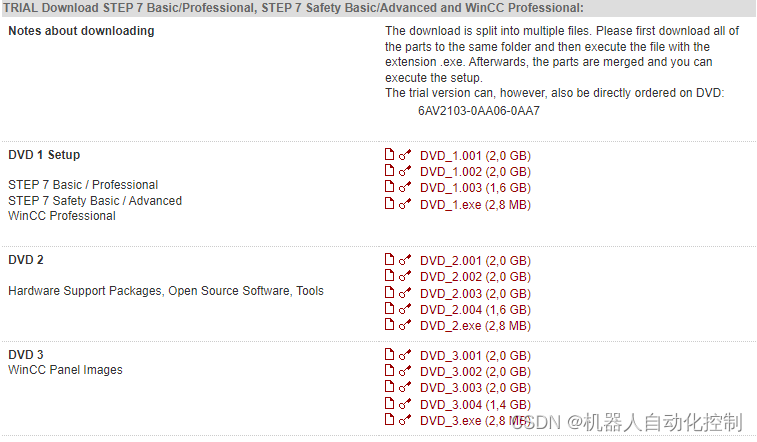

Siemens HMI download prompts lack of panel image solution

Actual combat simulation │ JWT login authentication

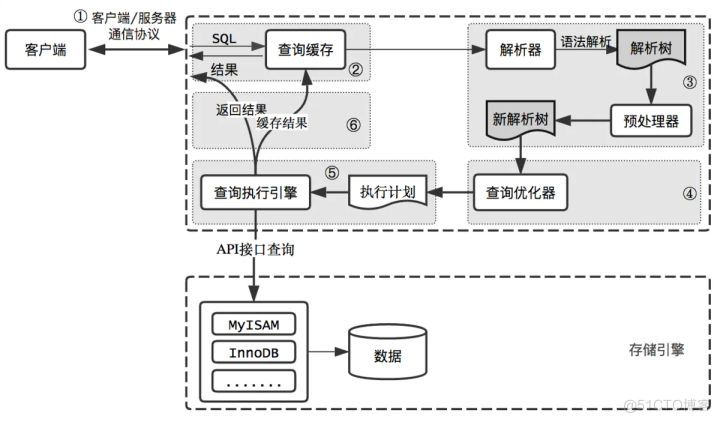

输入的查询SQL语句,是如何执行的?

Chrome开发工具:VMxxx文件是什么鬼

关于联邦学习和激励的相关概念(1)

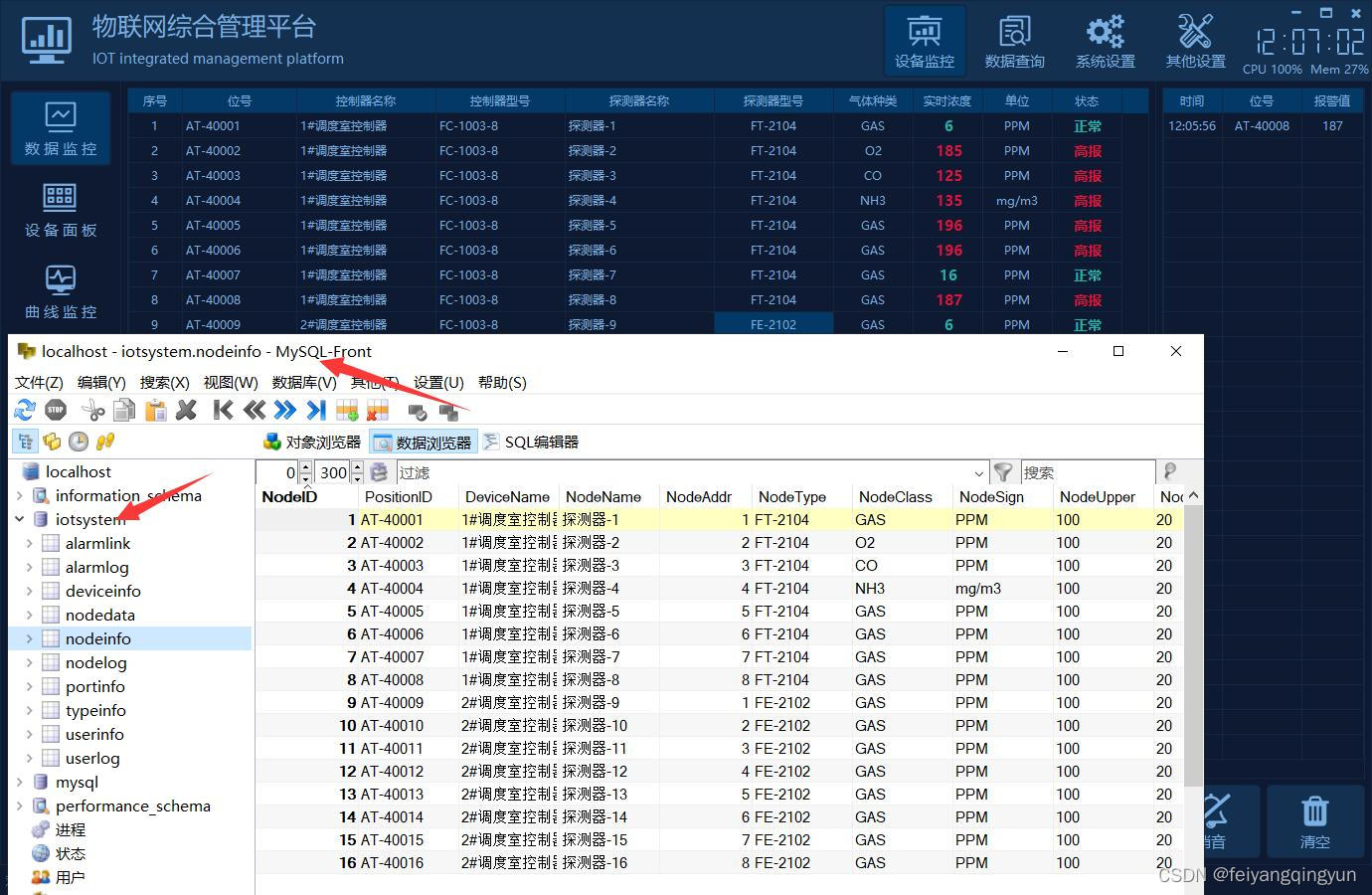

QT writing the Internet of things management platform 38- multiple database support

随机推荐

#夏日挑战赛#带你玩转HarmonyOS多端钢琴演奏

2022 version of stronger jsonpath compatibility and performance test (snack3, fastjson2, jayway.jsonpath)

多表操作-内连接查询

CANN算子:利用迭代器高效实现Tensor数据切割分块处理

Cann operator: using iterators to efficiently realize tensor data cutting and blocking processing

多表操作-外连接查询

凌云出海记 | 一零跃动&华为云:共助非洲普惠金融服务

Niuke Xiaobai month race 7 F question

原来这才是 BGP 协议

Lingyun going to sea | 10 jump &huawei cloud: jointly help Africa's inclusive financial services

九齐单片机NY8B062D单按键控制4种LED状态

kotlin 基本数据类型

C language - Introduction - Foundation - grammar - process control (VII)

Small hair cat Internet of things platform construction and application model

1500万员工轻松管理,云原生数据库GaussDB让HR办公更高效

Template_ Large integer subtraction_ Regardless of size

On communication bus arbitration mechanism and network flow control from the perspective of real-time application

复杂因子计算优化案例:深度不平衡、买卖压力指标、波动率计算

Cbcgpprogressdlg progress bar used by BCG

需求开发思考