当前位置:网站首页>Decision tree of machine learning

Decision tree of machine learning

2022-07-03 06:10:00 【Master core technology】

The decision tree algorithm represents the classification results of data in a tree structure , Each leaf node corresponds to the decision result .

Divide and choose : We hope that the branch nodes of the decision tree contain samples that belong to the same category as much as possible , That is, the purity of the node is high

ID3 Decision tree Information gain

“ Information entropy ”(information entropy) It is the most commonly used index to measure the purity of sample set , Suppose the current sample set D pass the civil examinations k The proportion of class samples is p k p^k pk(k=1,2,…|y|)( In the second category |y|=2), be D The entropy of information is defined as

E n t ( D ) = − ∑ k = 1 ∣ y ∣ p k l o g 2 p k Ent(D)=-\sum\limits_{k=1}^{|y|}p_klog_2p_k Ent(D)=−k=1∑∣y∣pklog2pk

Ent(D) The smaller the value of , be D The higher the purity .

Suppose discrete properties a Yes V Possible values { a 1 , a 2 , … . , a V a^1,a^2,….,a^V a1,a2,….,aV}, If you use a To the sample set D division , Will produce V Branch nodes , Among them the first v Branch nodes contain D All in attributes a The upper value is a V a^V aV The sample of , Write it down as D v D^v Dv. Considering that the number of samples contained in different branch nodes is different , Give weight to the branch ∣ D v ∣ / ∣ D ∣ |D^v|/|D| ∣Dv∣/∣D∣. Calculation a For the sample set D Divide the obtained “ Information gain ”(information gain):

G a i n ( D , a ) = E n t ( D ) − ∑ v = 1 V ∣ D v ∣ ∣ D ∣ E n t ( D v ) Gain(D,a)=Ent(D)-\sum\limits_{v=1}^V\frac{|D^v|}{|D|}Ent(D^v) Gain(D,a)=Ent(D)−v=1∑V∣D∣∣Dv∣Ent(Dv)

generally speaking , The larger the information gain , Use attributes a To divide the “ Purity improvement ” The bigger it is

C4.5 Decision tree Gain rate

actually , The information gain criterion has a preference for attributes with more values , In order to reduce the possible adverse effects of this preference, use the gain rate (gain ratio) To divide the optimal attributes

G a i n _ r a t i o ( D , a ) = G a i n ( D , a ) I V ( a ) Gain\_ratio(D,a)=\frac{Gain(D,a)}{IV(a)} Gain_ratio(D,a)=IV(a)Gain(D,a)

among I V ( a ) = − ∑ v = 1 V ∣ D v ∣ ∣ D ∣ l o g 2 ∣ D v ∣ ∣ D ∣ IV(a)=-\sum\limits_{v=1}^V\frac{|D^v|}{|D|}log_2\frac{|D^v|}{|D|} IV(a)=−v=1∑V∣D∣∣Dv∣log2∣D∣∣Dv∣

Be careful , The information rate criterion has a preference for attributes with a small number of values , therefore ,C4.5 Instead of directly selecting the candidate partition attribute with the largest gain rate , Instead, we first find the attributes with higher than average information gain from the candidate partition attributes , Then select the one with high gain rate .

CART Decision tree Classification and Regression Tree The gini coefficient

Data sets D The purity of can be measured by Gini :

G i n i ( D ) = ∑ k = 1 ∣ y ∣ ∑ k ′ ≠ k p k p k ′ = 1 − ∑ k = 1 ∣ y ∣ p k 2 Gini(D)=\sum\limits_{k=1}^{|y|}\sum\limits_{k'\neq k}p_kp_{k'}=1-\sum\limits_{k=1}^{|y|}p_k^2 Gini(D)=k=1∑∣y∣k′=k∑pkpk′=1−k=1∑∣y∣pk2

Gini(D) It reflects that two samples are randomly selected from the data set , The probability of different category marks , therefore ,Gini(D) The smaller it is , Data sets D The higher the purity .

attribute a The Gini coefficient of is defined as :

G i n i _ i n d e x ( D , a ) = ∑ v = 1 V ∣ D v ∣ ∣ D ∣ G i n i ( D v ) Gini\_index(D,a)=\sum\limits_{v=1}^V\frac{|D^v|}{|D|}Gini(D^v) Gini_index(D,a)=v=1∑V∣D∣∣Dv∣Gini(Dv)

The attribute that minimizes the Gini coefficient after partition is selected as the optimal partition attribute

prune : It's a decision tree “ Over fitting ” The primary means , There are mainly pre pruning (prepruning) And after pruning (postpruning) Two strategies

边栏推荐

- tabbar的设置

- Mysql database table export and import with binary

- Cesium Click to obtain the longitude and latitude elevation coordinates (3D coordinates) of the model surface

- Intel's new GPU patent shows that its graphics card products will use MCM Packaging Technology

- 使用 Abp.Zero 搭建第三方登录模块(一):原理篇

- Zhiniu stock project -- 04

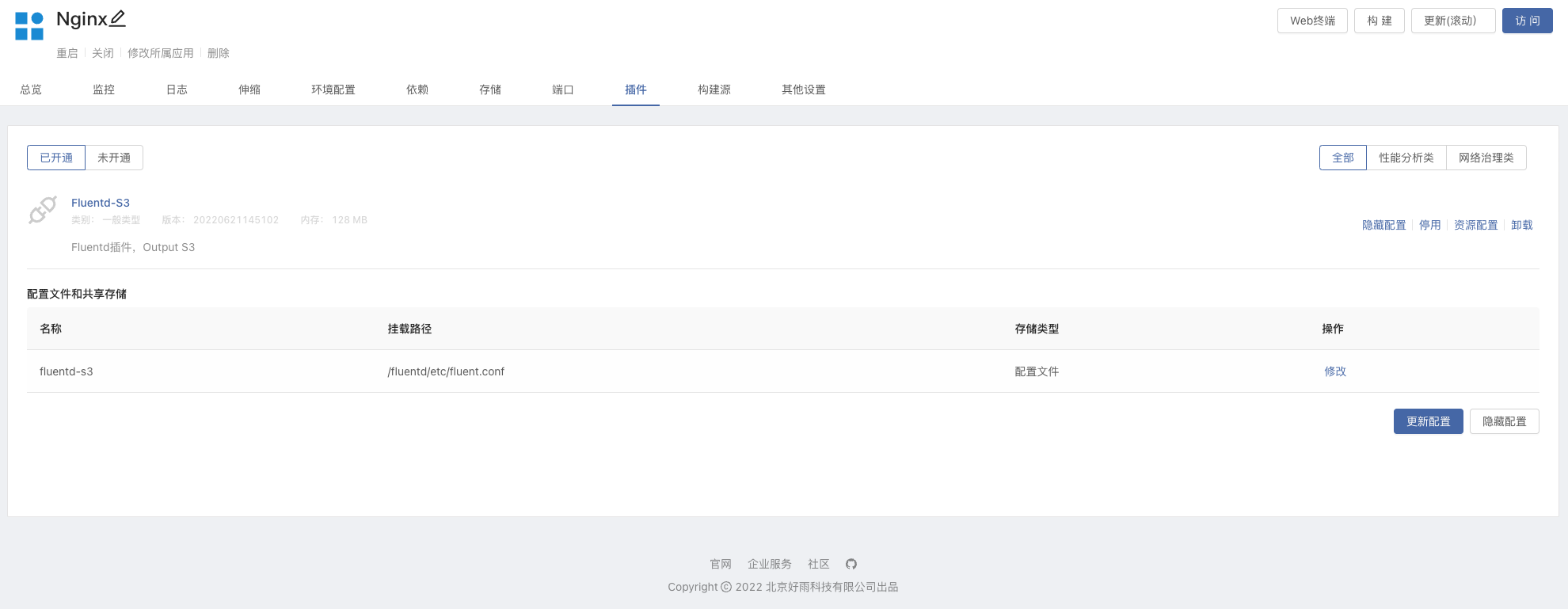

- Fluentd is easy to use. Combined with the rainbow plug-in market, log collection is faster

- Jedis source code analysis (I): jedis introduction, jedis module source code analysis

- BeanDefinitionRegistryPostProcessor

- BeanDefinitionRegistryPostProcessor

猜你喜欢

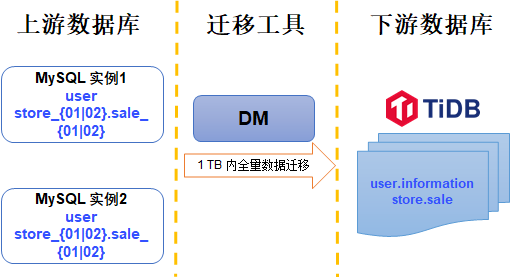

Merge and migrate data from small data volume, sub database and sub table Mysql to tidb

Skywalking8.7 source code analysis (I): agent startup process, agent configuration loading process, custom class loader agentclassloader, plug-in definition system, plug-in loading

智牛股项目--05

项目总结--04

Understand expectations (mean / estimate) and variances

Simple understanding of ThreadLocal

![[teacher Zhao Yuqiang] MySQL high availability architecture: MHA](/img/a7/2140744ebad9f1dc0a609254cc618e.jpg)

[teacher Zhao Yuqiang] MySQL high availability architecture: MHA

Fluentd facile à utiliser avec le marché des plug - ins rainbond pour une collecte de journaux plus rapide

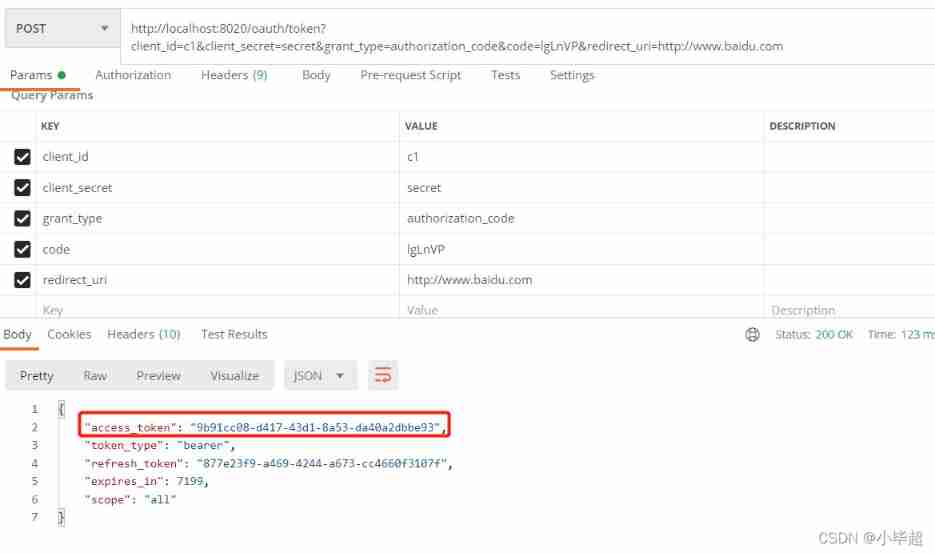

Oauth2.0 - Introduction and use and explanation of authorization code mode

The most responsible command line beautification tutorial

随机推荐

Intel's new GPU patent shows that its graphics card products will use MCM Packaging Technology

Kubernetes notes (IX) kubernetes application encapsulation and expansion

pytorch 多分类中的损失函数

Kubernetes notes (II) pod usage notes

Installation du plug - in CAD et chargement automatique DLL, Arx

Simple solution of small up main lottery in station B

When PHP uses env to obtain file parameters, it gets strings

Project summary --04

Clickhouse learning notes (I): Clickhouse installation, data type, table engine, SQL operation

Jedis source code analysis (I): jedis introduction, jedis module source code analysis

从 Amazon Aurora 迁移数据到 TiDB

[teacher Zhao Yuqiang] Cassandra foundation of NoSQL database

Pytorch dataloader implements minibatch (incomplete)

Cesium entity(entities) 实体删除方法

Cesium Click to obtain the longitude and latitude elevation coordinates (3D coordinates) of the model surface

Mysql database table export and import with binary

Kubernetes notes (V) configuration management

[teacher Zhao Yuqiang] index in mongodb (Part 2)

[video of Teacher Zhao Yuqiang's speech on wot] redis high performance cache and persistence

深入解析kubernetes controller-runtime