当前位置:网站首页>Optimizing the feed flow encountered obstacles, who helped Baidu break the "memory wall"?

Optimizing the feed flow encountered obstacles, who helped Baidu break the "memory wall"?

2022-08-05 02:17:00 【Intel Edge Computing Community】

Feed The concept of streams is foreign to many people,But in the era of mobile Internet, almost everyone's work and life are inseparable from it.通俗讲,It is a kind of information commonly used in all kinds of social and content information app ,Main attack information、Internet service methods for content aggregation and push.It can push you the most interesting and long-term attention content to you,成为“最懂你”的信息助手.同时,Intelligent automatic aggregation、The function of accurate push information,Today it has become a merchant to advertise the important channel and achieve better marketing effect.

Relying on a great personalized app experience,Feed The number and frequency of users of streaming services are increasing day by day,The accuracy with which users push their content、The demand for timeliness is also increasing,That's right Feed Platform performance for streaming services、Database performance in particular poses a serious challenge,Even veteran players in the field of related、身为全球 IT and Baidu, a leading company in the Internet industry,It also needs to be continuously optimized and innovated.

“成长的烦恼”

百度 Feed streaming service hit“内存墙”

此前,Everyone at the mention of IT platform performance,如果没有特指,Basically, it corresponds to the performance of its main computing unit.,但为什么在 Feed streaming service,Database performance will also be so critical?

这一点,其实是由 Feed The nature and architecture of streaming services,正如上文所说,Its main function is to automatically aggregate and accurately push various information and content,And all the information and content to type in the massive data Feed Stored in the database behind the streaming service,also needs to be accessed and processed as efficiently as possible,Only in this way can fast and accurate push results be achieved.

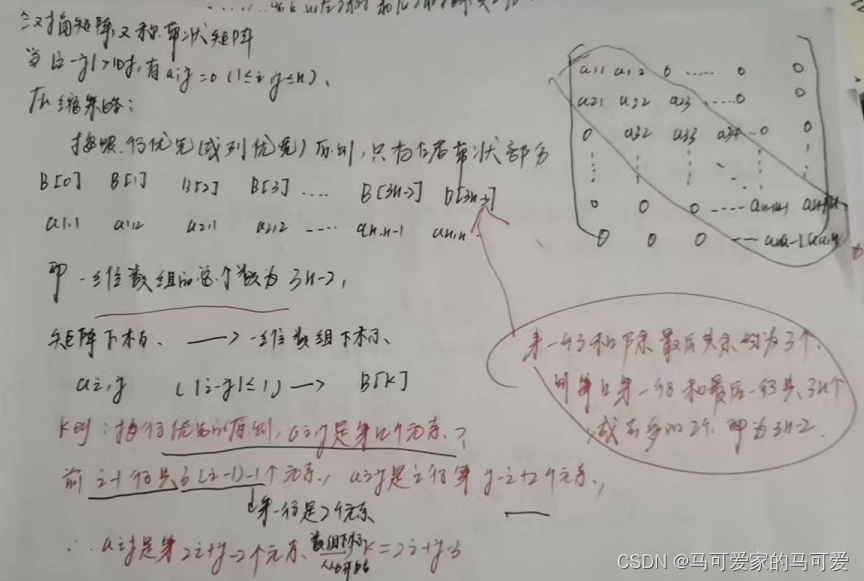

图一 百度 Feed-Cube 示意图

Therefore, no matter which company Feed 流服务,They are basically built around the core database behind them.百度也是如此,It is constructed as early as a few years ago Feed Database required for streaming services Feed-Cube.And from your own business situation,In order to meet the scale of hundreds of millions of users、Tens of millions of concurrent services,and lower latency data processing performance,百度还在 Feed-Cube It was built as an in-memory database at the beginning of the build,并采用了 KV (Key- Value ,键值对) 的存储结构.在这个结构中,Key 值,以及 Value The storage offset value of the data file where the value is located is stored in the hash table,而 Value Values are stored separately in different data files.此外,All hash tables and data files are stored in memory,so as to take full advantage of the high-speed memory I/O ability to provide excellent read and write performance and ultra-low latency.

Despite such a forward-looking architectural design,Feed-Cube Will meet the challenge——Although the high concurrency of tens of millions of queries per second and PB Its performance has been excellent in the environment of massive data storage,但在百度 Feed Streaming service scale continues to expand、Under the condition of data size also continues to grow,It still encounters the problem that the expansion of memory capacity cannot keep up with the development of data storage requirements,或者说,撞上了“内存墙”的考验.

the word memory wall,It was originally used to describe the performance bottleneck caused by the technological development gap between memory and computing power units.,in big data and AI The data processing needs of the era are more and more real-time,It also adds a capacity dimension,That is, in order to maximize the efficiency of data reading, writing and processing,Having to move larger volumes of data from storage closer to computing power、带宽和 I/O better performance in memory,However, the expansion of memory capacity is not easy and the cost is too high.,But it makes it difficult to carry larger amounts of data,这种情形,It's like the memory has a layer of capacity that can't be seen or touched.,but a real wall.

If you want to ask why it is difficult to expand the memory capacity,成本也高?那就要谈到 DRAM 身上.As a medium commonly used in current memory,DRAM The mainstream capacity configuration on a single server memory is mostly 32GB 或 64GB,128GB rare and expensive,Anyone who wants to use DRAM Memory to greatly expanded memory capacity,then there is a very high cost,And spent a lot of money,In the end, it may still be difficult to achieve the level of memory capacity you want to achieve.

Baidu has considered using heap DRAM 的方式为 Feed-Cube Build a larger memory pool,But this aspect will make TCO A sharp rise,另一方面,Still can't keep up with this Feed-Cube The development requirements of data carrying capacity.

relying on verification DRAM Hard to break through the memory wall,Baidu has also experimented with using ever-increasing performance、Based on non-volatile storage (non-volatile memory, NVM) technical storage devices,如 NVMe SSD for storage Feed-Cube data files and hash tables in.但经测试发现,这一方案在 QoS、IO Speed and other aspects are difficult to meet service requirements.

英特尔 傲腾 Persistent memory is broken“墙”利器

兼顾性能、Capacity and Cost Effectiveness

求助 DRAM 和 NVMe Solid state disk is not smooth,那么 Feed-Cube Where are the paths and solutions to break through the memory wall??Baidu holds the original intention of seeking breakthrough solutions,The eye sight the cooperation partner of Intel for many years,Aiming for it is intended to subvert traditional memory-Intel Storage Architecture 傲腾 持久内存.

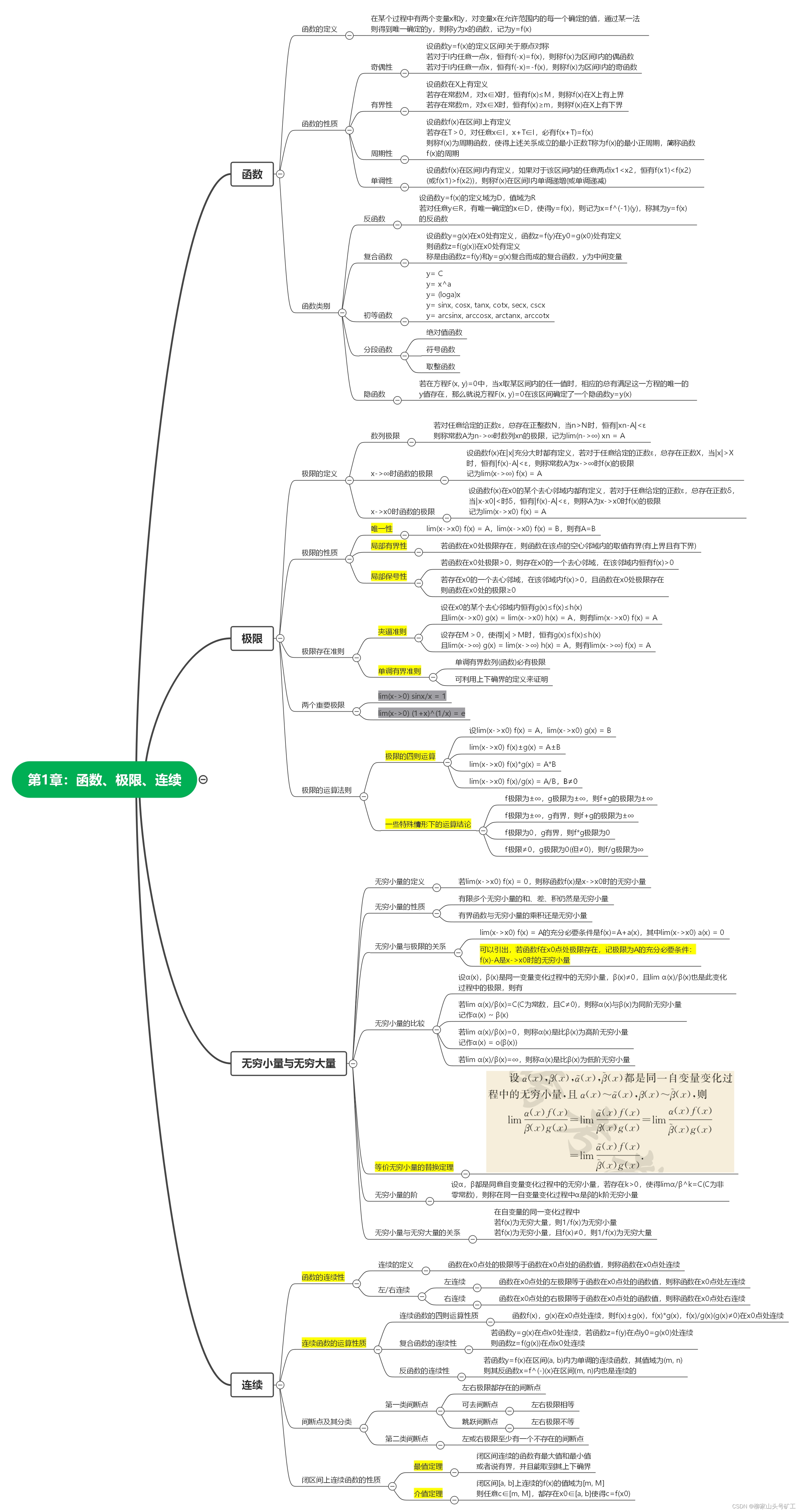

图二 英特尔 傲腾 persistent memory in memory-Location and role in the storage hierarchy

英特尔 傲腾 The reason why persistent memory enters Baidu's vision is actually very simple,That is, it takes into account the existing DRAM Advantages of memory and storage products,At the same time, they do not have their own obvious shortcomings like them.——It relies on innovative media,available close to DRAM read and write performance and access latency、Storage capacity closer to SSD,并支持 DRAM Data persistence and higher price-to-capacity ratio not available in memory,以及 NAND Durability unmatched by SSDs.This makes it a multi-user、High concurrency and large capacity scenario has a very prominent advantages,It is also especially suitable for expanding memory to carry larger volumes、Data that requires higher read and write speeds and latency to process.

有鉴于此,Baidu starts experimenting with Intel 傲腾 persistent memory to hack Feed-Cube In the face of the memory wall problem.Its approach is to use persistent memory to store Feed-Cube data file section in,and still use DRAM to store the hash table.The purpose of using this hybrid configuration,On the one hand, to verify that Intel 傲腾 持久内存在 Feed-Cube 中的性能表现;另一方面,Feed-Cube 在查询 Value 值的过程中,The number of reads of the hash table is much larger than the number of reads of the data file,So first replace in the data file part,reduce as much as possible Feed-Cube 性能的影响.

与此同步,Baidu also partners with Intel,根据 Feed-Cube 应用场景的需求,在服务器 BIOS added support for Intel 傲腾 Persistent memory support driver,Still in Baidu self-research Linux Added related patches to the kernel,In order to realize the hardware、操作系统、Comprehensive optimization of components such as the kernel,This in turn fully unlocks the performance potential of the entire system.

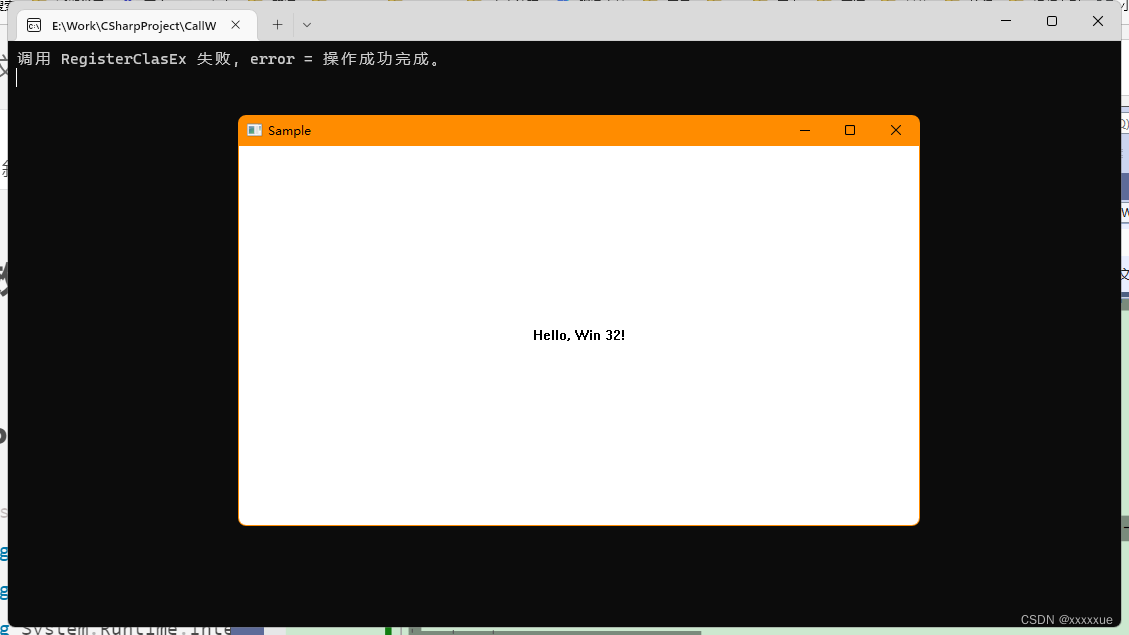

经过这一系列操作后,Baidu simulates the large concurrent access pressure that may occur in actual scenarios,对纯 DRAM The memory mode was tested against the mixed configuration mode described above.测试中,每秒查询次数 ( Query Per Second, QPS) 设为 20 万次,Every visit requires a query 100 组 Key-Value 组,The total access pressure is 2 千万级.结果显示,Under the pressure of such a large visit,平均访问耗时仅上升约 24% (30 微秒),The proportion of processor consumption in the whole machine only increased7%,The performance fluctuation is also within the acceptable range of Baidu.而与此相对应的是,单服务器的 DRAM 内存使用量下降过半,这对于 Feed-Cube PB level of storage capacity,Undoubtedly, the cost can be greatly reduced.

Inspired by this achievement,Baidu is further trying to Feed-Cube The hash table and data files are stored in Intel 傲腾 持久内存中,以每秒 50 万次查询 (QPS) access pressure to test,It turns out that this mode is the same as configuring only DRAM 内存的方案相比,The average latency rises only about 9.66%,The performance fluctuation is also within the acceptable range of Baidu.

图三 百度 Feed-Cube Memory hardware change path

After these explorations and practices,Baidu finally verified its Feed The core module of the streaming service Feed-Cube configure from onlyDRAM 内存的模式,Migrate to concurrent use DRAM Memory and Intel 傲腾 Hybrid Configuration Mode for Persistent Memory,Then to fully rely on Intel 傲腾 Feasibility of Persistent Memory Mode.The excellent performance of this series of innovative measures under the pressure of large concurrent access and the resource consumption that meets Baidu's expectations,Full display of Intel 傲腾 The Key Role of Persistent Memory in Breaking the Memory Wall.

打破内存墙

Enlightenment for the digital and intelligent transformation of the industry

毫无疑问,With the cloudification of various industries、Acceleration of digital and intelligent transformation,More and more big data and AI 应用的落地,everyone as a whole,Or individuals face the scale of data、Massive growth in data dimension and complexity,In addition, the user's demand for service timeliness continues to increase,All related systems and applications not only present more diverse and more severe challenges to computing power,also requires memory-The storage architecture can keep up with the evolution of computing power and data in terms of performance and capacity.If we take into account the low cost of enterprises、The infinite pursuit of high efficiency,All this makes the traditional usually added DRAM way to expand memory capacity is almost a thing of the past,All those facing the memory wall challenge,All need to be like Baidu,Transformative technologies must be sought、Subversive solutions can only break through the wall,A new chapter in the open and efficient service.

边栏推荐

猜你喜欢

2022 EdgeX中国挑战赛8月3日即将盛大开幕

特殊矩阵的压缩存储

![[Unity Entry Plan] Handling of Occlusion Problems in 2D Games & Pseudo Perspective](/img/de/944b31c68cc5b9ffa6a585530e7be9.png)

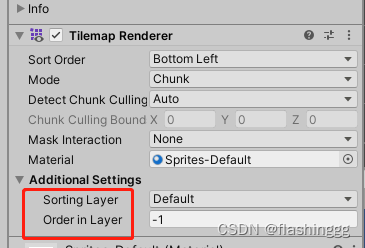

[Unity Entry Plan] Handling of Occlusion Problems in 2D Games & Pseudo Perspective

开篇-开启全新的.NET现代应用开发体验

Advanced Numbers_Review_Chapter 1: Functions, Limits, Continuity

.Net C# Console Create a window using Win32 API

<开发>实用工具

Utilities

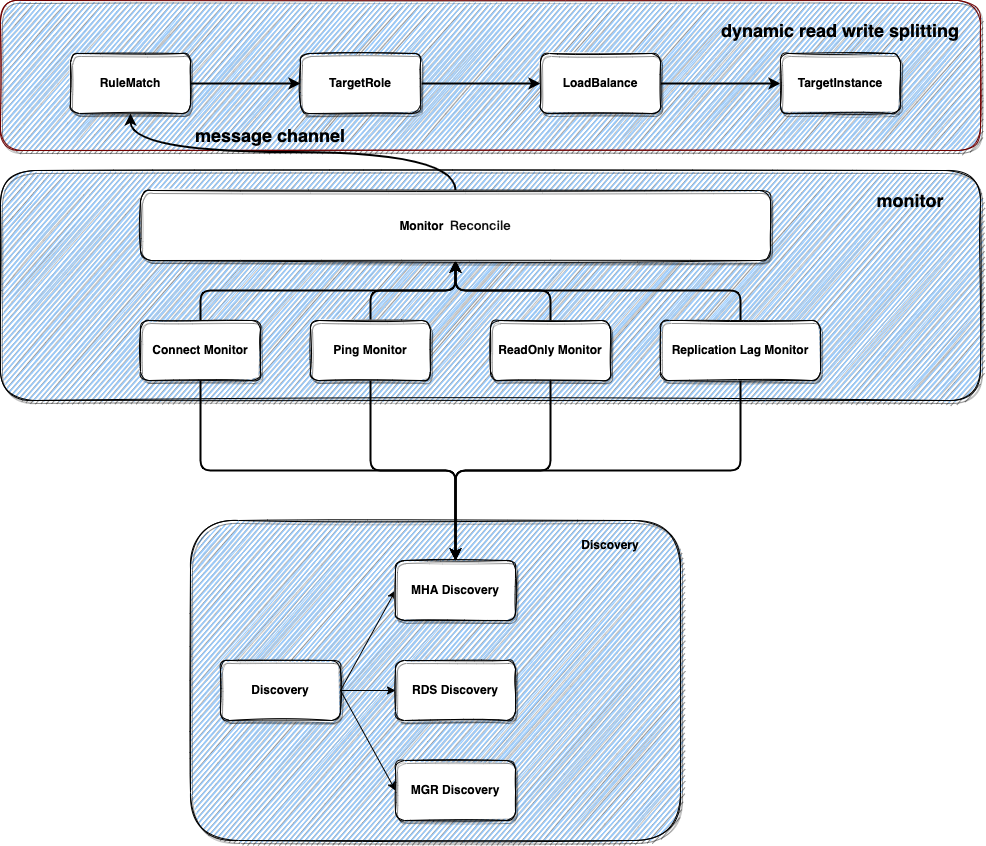

Pisanix v0.2.0 发布|新增动态读写分离支持

【Unity入门计划】2D游戏中遮挡问题的处理方法&伪透视

随机推荐

回顾51单片机

汇编语言之源程序

Leetcode brushing questions - 22. Bracket generation

Why is this problem reported when installing oracle11

the mechanism of ideology

[parameters of PyQT5 binding functions]

Pisanix v0.2.0 发布|新增动态读写分离支持

Xunrui cms website cannot be displayed normally after relocation and server change

2022杭电多校第一场

Intel XDC 2022 Wonderful Review: Build an Open Ecosystem and Unleash the Potential of "Infrastructure"

如何逐步执行数据风险评估

Flink 1.15.1 集群搭建(StandaloneSession)

Hypervisor related knowledge points

如何模拟后台API调用场景,很细!

亚马逊云科技 + 英特尔 + 中科创达为行业客户构建 AIoT 平台

程序员失眠时的数羊列表 | 每日趣闻

Simple implementation of YOLOv7 pre-training model deployment based on OpenVINO toolkit

直播预告|30分钟快速入门!来看可信分布式AI链桨的架构设计

Programmer's list of sheep counting when insomnia | Daily anecdote

The use of pytorch: temperature prediction using neural networks