
Deep Learning Framework PyTorch: Rapid Development and Practice, Chapter 4

2022-08-02 14:18:00 【weixin_50862344】

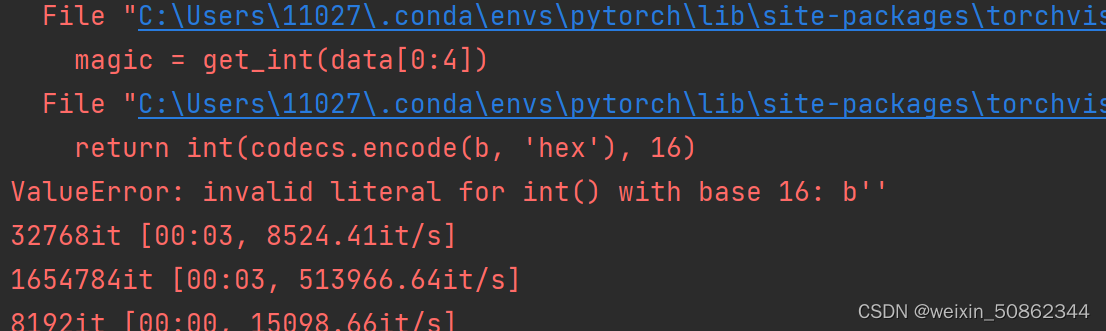

Errors

First error

This error was probably caused by my network connection dropping earlier, during the dataset download. I deleted the `data` folder, ran the script again, and it worked.

Second error

The same old problem: replace `loss.data[0]` with `loss.item()`.
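Here is a minimal sketch of why the old idiom breaks, assuming PyTorch 0.4 or later. A loss function returns a 0-dim (scalar) tensor, which current versions refuse to index with `[0]`, while `.item()` converts it to a plain Python number. The logits and target below are made-up values.

```python
import torch
import torch.nn as nn

criterion = nn.CrossEntropyLoss()
logits = torch.tensor([[2.0, 0.5, -1.0]])  # made-up scores for 1 sample, 3 classes
target = torch.tensor([0])                 # made-up true class
loss = criterion(logits, target)

print(loss.dim())    # 0 -> the loss is a 0-dim (scalar) tensor
value = loss.item()  # converts the 0-dim tensor to a Python float

old_style_failed = False
try:
    loss.data[0]     # old (PyTorch 0.3-era) way to read a scalar loss
except IndexError:
    old_style_failed = True  # current PyTorch: a 0-dim tensor cannot be indexed
print(value, old_style_failed)
```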

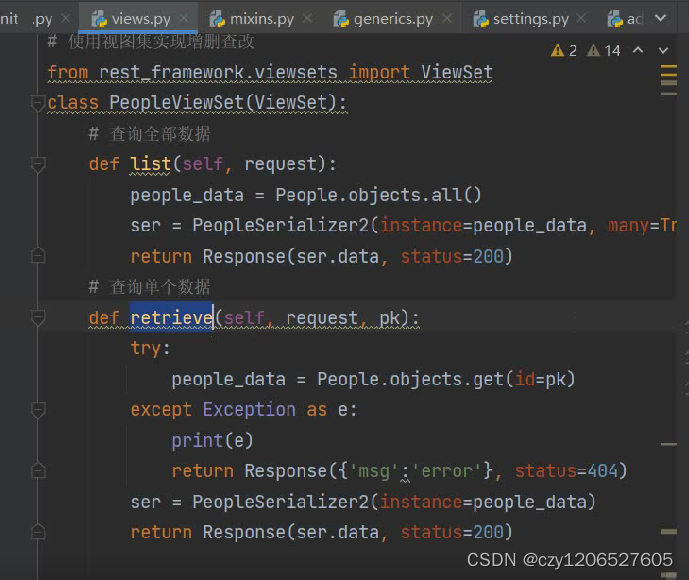

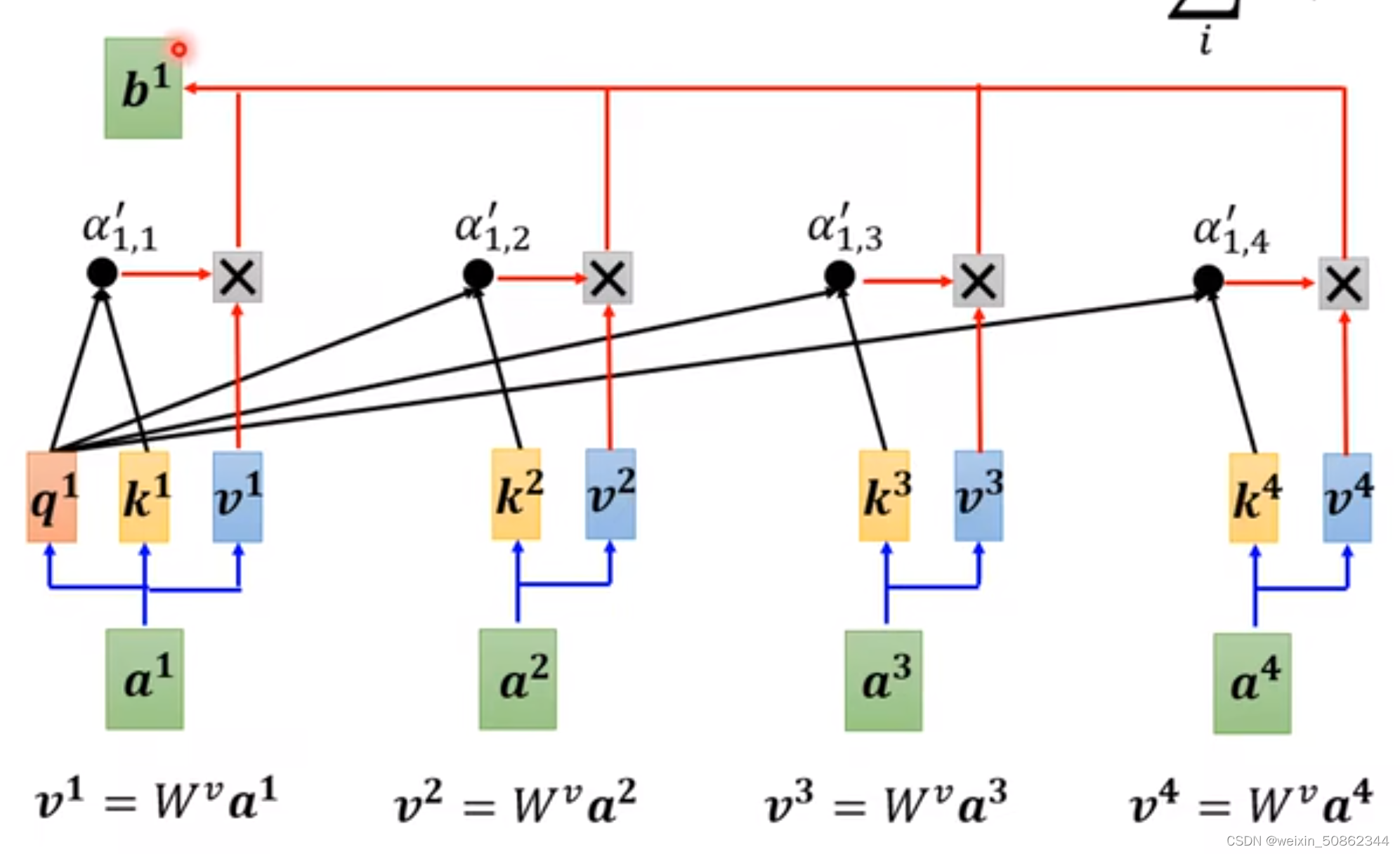

Feedforward neural network

```python
import torch
import torch.nn as nn
import torchvision.datasets as dsets
import torchvision.transforms as transforms
import matplotlib.pyplot as plt

# Hyper Parameters
input_size = 784      # 28 * 28 pixels, flattened
hidden_size = 500
num_classes = 10
num_epochs = 5
batch_size = 100
learning_rate = 0.001

# MNIST Dataset (downloaded into ./data on the first run)
train_dataset = dsets.MNIST(root='./data',
                            train=True,
                            transform=transforms.ToTensor(),
                            download=True)

test_dataset = dsets.MNIST(root='./data',
                           train=False,
                           transform=transforms.ToTensor())

# Data Loader (Input Pipeline)
train_loader = torch.utils.data.DataLoader(dataset=train_dataset,
                                           batch_size=batch_size,
                                           shuffle=True)

test_loader = torch.utils.data.DataLoader(dataset=test_dataset,
                                          batch_size=batch_size,
                                          shuffle=False)

# test_labels is deprecated; current torchvision stores labels in .targets
test_y = test_dataset.targets

# Neural Network Model (1 hidden layer)
class Net(nn.Module):
    def __init__(self, input_size, hidden_size, num_classes):
        super(Net, self).__init__()
        self.fc1 = nn.Linear(input_size, hidden_size)
        self.relu = nn.ReLU()
        self.fc2 = nn.Linear(hidden_size, num_classes)

    def forward(self, x):
        out = self.fc1(x)
        out = self.relu(out)
        out = self.fc2(out)
        return out

net = Net(input_size, hidden_size, num_classes)

# Loss and Optimizer
criterion = nn.CrossEntropyLoss()
optimizer = torch.optim.Adam(net.parameters(), lr=learning_rate)

# Train the Model
for epoch in range(num_epochs):
    for i, (images, labels) in enumerate(train_loader):
        # Flatten each 1x28x28 image into a 784-dim vector
        # (Variable wrappers are unnecessary since PyTorch 0.4)
        images = images.view(-1, 28 * 28)

        # Forward + Backward + Optimize
        optimizer.zero_grad()  # zero the gradient buffer
        outputs = net(images)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        if (i + 1) % 100 == 0:
            print('Epoch [%d/%d], Step [%d/%d], Loss: %.4f'
                  % (epoch + 1, num_epochs, i + 1,
                     len(train_dataset) // batch_size, loss.item()))

# Test the Model (no gradients are needed for evaluation)
correct = 0
total = 0
with torch.no_grad():
    for images, labels in test_loader:
        images = images.view(-1, 28 * 28)
        outputs = net(images)
        _, predicted = torch.max(outputs, 1)  # index of the largest score = predicted class
        total += labels.size(0)
        correct += (predicted == labels).sum().item()

print('Accuracy of the network on the 10000 test images: %d %%' % (100 * correct / total))

# Show a few training images with their labels
# (train_data / train_labels are deprecated in favor of data / targets)
for i in range(1, 4):
    plt.imshow(train_dataset.data[i].numpy(), cmap='gray')
    plt.title('%i' % train_dataset.targets[i].item())
    plt.show()

# Save the Model
torch.save(net.state_dict(), 'model.pkl')

# Predict the first 20 test images and compare them with their true labels.
# They are re-read from test_dataset because, after the loop above, `images`
# holds the *last* test batch, which does not line up with test_y[:20].
test_images = torch.stack([test_dataset[k][0] for k in range(20)]).view(-1, 28 * 28)
with torch.no_grad():
    test_output = net(test_images)
pred_y = torch.max(test_output, 1)[1].numpy().squeeze()
print('prediction number', pred_y)
print('real number', test_y[:20].numpy())
```
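The test loop calls `torch.max(outputs, 1)` and keeps only the second of its two return values. This small sketch uses made-up logits to show what each return value holds:

```python
import torch

# Made-up logits for a batch of 2 samples over 3 classes
outputs = torch.tensor([[0.1, 2.0, -1.0],
                        [1.5, 0.3, 0.2]])

values, indices = torch.max(outputs, 1)  # reduce over dim 1, the class axis
print(values)   # largest score in each row
print(indices)  # column of that score, i.e. the predicted class per sample

# argmax returns only the indices, which is all the accuracy computation needs
same = torch.equal(outputs.argmax(1), indices)
```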

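- `torch.max` returns both the maximum values and their indices when called with a dimension, e.g. `torch.max(outputs, 1)`

- To use `torch.optim`, you must first construct an Optimizer object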

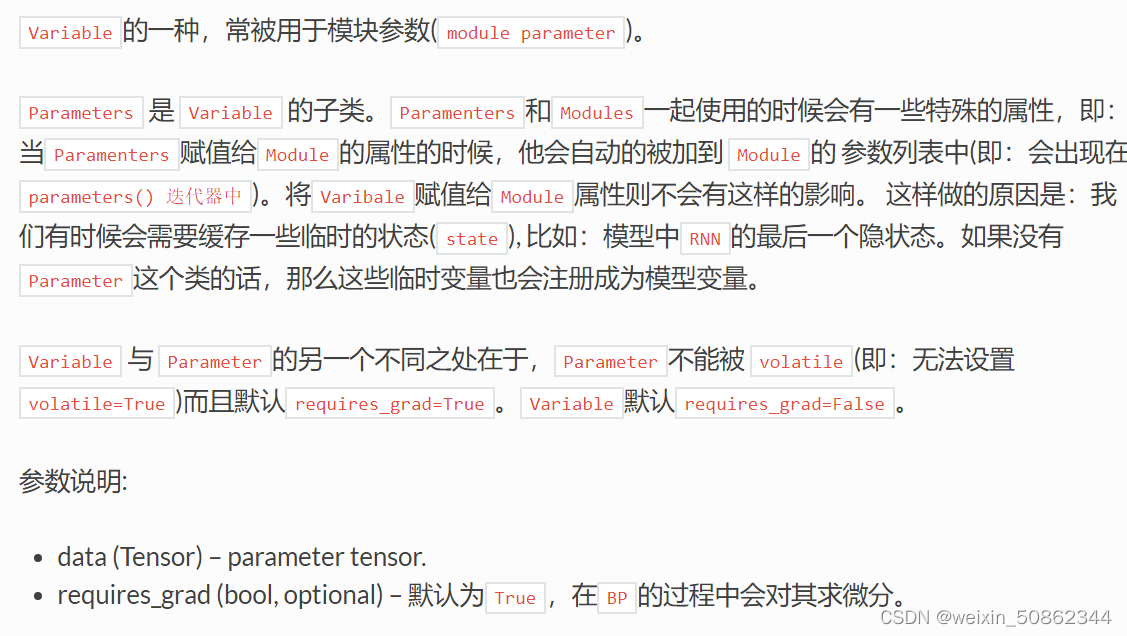

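(1) Pass it an iterable containing the parameters to optimize. The book says they must be `Variable` objects; in current PyTorch they are tensors that require gradients. For example:

`net.parameters()`

(2) You can then specify optimizer options such as the learning rate. These options can also be set individually for each parameter group.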
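This minimal sketch shows both ways of constructing an optimizer. It assumes a small stand-in model (`model` is hypothetical and not from the book). The list-of-dicts form is PyTorch's per-parameter-group syntax, and any option a group does not set falls back to the keyword default:

```python
import torch
import torch.nn as nn

# Hypothetical stand-in for the Net defined above
model = nn.Sequential(nn.Linear(784, 500), nn.ReLU(), nn.Linear(500, 10))

# (1) Simplest form: an iterable of parameters plus global options
opt = torch.optim.Adam(model.parameters(), lr=0.001)

# (2) Per-parameter-group options: a list of dicts
opt = torch.optim.SGD([
    {"params": model[0].parameters()},               # inherits lr=0.01 from below
    {"params": model[2].parameters(), "lr": 0.001},  # overrides lr for the output layer
], lr=0.01, momentum=0.9)

print([g["lr"] for g in opt.param_groups])
```

Per-group options are mainly useful for fine-tuning. A typical use is a smaller learning rate for pretrained layers than for a newly added output layer.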

- torchvision

(1) `torchvision.datasets` contains ready-made datasets (p78)

(2) `torchvision.models` contains model architectures, optionally with pretrained weights

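```python
import torchvision.models as models

# Load ResNet-18 with pretrained ImageNet weights.
# torchvision >= 0.13 uses `weights=`; the older form
# models.resnet18(pretrained=True) is deprecated.
resnet18 = models.resnet18(weights=models.ResNet18_Weights.DEFAULT)

# ResNet-18 with randomly initialized weights
resnet18 = models.resnet18()
```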

(3) Image transformations (`torchvision.transforms`)

Custom ConvNet

```python
#!/usr/bin/env python3
# -*- coding: utf-8 -*-
"""
Created on Mon Jan 1 22:03:51 2018

@author: pc
"""
import torch
import torch.nn as nn
import torch.nn.functional as F


class MNISTConvNet(nn.Module):
    def __init__(self):
        super(MNISTConvNet, self).__init__()
        self.conv1 = nn.Conv2d(1, 10, 5)   # 1x28x28 -> 10x24x24
        self.pool1 = nn.MaxPool2d(2, 2)    # -> 10x12x12
        self.conv2 = nn.Conv2d(10, 20, 5)  # -> 20x8x8
        self.pool2 = nn.MaxPool2d(2, 2)    # -> 20x4x4
        self.fc1 = nn.Linear(320, 50)      # 20 * 4 * 4 = 320 inputs
        self.fc2 = nn.Linear(50, 10)       # 10 class scores

    def forward(self, x):
        x = self.pool1(F.relu(self.conv1(x)))
        x = self.pool2(F.relu(self.conv2(x)))
        x = x.view(x.size(0), -1)          # flatten to (batch, 320)
        x = F.relu(self.fc1(x))
        x = self.fc2(x)
        return x


net = MNISTConvNet()
print(net)

x = torch.randn(1, 1, 28, 28)  # one random single-channel 28x28 input
out = net(x)
print(out.size())  # torch.Size([1, 10])
```
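The input size of `fc1` (320) follows from the convolution and pooling stack. Instead of working it out by hand, you can measure it with one dummy forward pass. This sketch rebuilds the same feature layers with `nn.Sequential`, which is a restructuring for illustration and not how the book defines the network:

```python
import torch
import torch.nn as nn

# Same feature extractor as MNISTConvNet: conv1 -> relu -> pool1 -> conv2 -> relu -> pool2
features = nn.Sequential(
    nn.Conv2d(1, 10, 5), nn.ReLU(), nn.MaxPool2d(2, 2),
    nn.Conv2d(10, 20, 5), nn.ReLU(), nn.MaxPool2d(2, 2),
)

with torch.no_grad():
    dummy = torch.zeros(1, 1, 28, 28)  # one fake 28x28 grayscale image
    feat = features(dummy)
    n_flat = feat[0].numel()           # elements per sample after flattening

print(feat.shape, n_flat)
```

The measured `n_flat` can then be used in `nn.Linear(n_flat, 50)`, so the classifier stays correct if the kernel sizes or input resolution change.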

(2) torch.nn

Layers

(1) Convolution

(2) Pooling
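For both layers, the spatial output size is `floor((n + 2*padding - kernel) / stride) + 1`, assuming dilation 1 and the default floor mode. The helper `out_size` below is hypothetical and not a PyTorch function. The sketch checks it against real `nn.Conv2d` and `nn.MaxPool2d` layers:

```python
import torch
import torch.nn as nn

def out_size(n, kernel, stride=1, padding=0):
    """Hypothetical helper: output width/height of Conv2d or MaxPool2d (dilation=1)."""
    return (n + 2 * padding - kernel) // stride + 1

x = torch.zeros(1, 1, 28, 28)
conv = nn.Conv2d(1, 10, kernel_size=5)                  # no padding: 28 -> 24
conv_same = nn.Conv2d(1, 10, kernel_size=5, padding=2)  # padding 2 keeps 28
pool = nn.MaxPool2d(2, 2)                               # stride 2 halves: 24 -> 12

with torch.no_grad():
    w_conv = conv(x).shape[-1]
    w_same = conv_same(x).shape[-1]
    w_pool = pool(conv(x)).shape[-1]

print(w_conv, out_size(28, 5))
print(w_same, out_size(28, 5, padding=2))
print(w_pool, out_size(24, 2, stride=2))
```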

Functions

These are in the `torch.nn.functional` package (p86).
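Most layers in `torch.nn` have a functional counterpart in `torch.nn.functional`. The module form stores its parameters and settings as an object. The functional form is a plain function call, so any weights must be passed in explicitly. This sketch, using a random input, checks that the two forms give the same results:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
x = torch.randn(1, 1, 8, 8)

# Parameter-free ops: module objects vs. function calls
y_module = nn.MaxPool2d(2, 2)(nn.ReLU()(x))
y_functional = F.max_pool2d(F.relu(x), kernel_size=2, stride=2)

# Ops with weights: the functional call needs the weight and bias passed in
conv = nn.Conv2d(1, 4, 3)
with torch.no_grad():
    c_module = conv(x)
    c_functional = F.conv2d(x, conv.weight, conv.bias)

print(torch.equal(y_module, y_functional), torch.allclose(c_module, c_functional))
```

This is why `MNISTConvNet` above keeps `Conv2d` and `Linear` as modules created in `__init__`, since they own learnable weights. It calls `F.relu` directly in `forward` because ReLU has no parameters.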

边栏推荐

- The bad policy has no long-term impact on the market, and the bull market will continue 2021-05-19

- About the development forecast of the market outlook?2021-05-23

- mysql的case when如何用

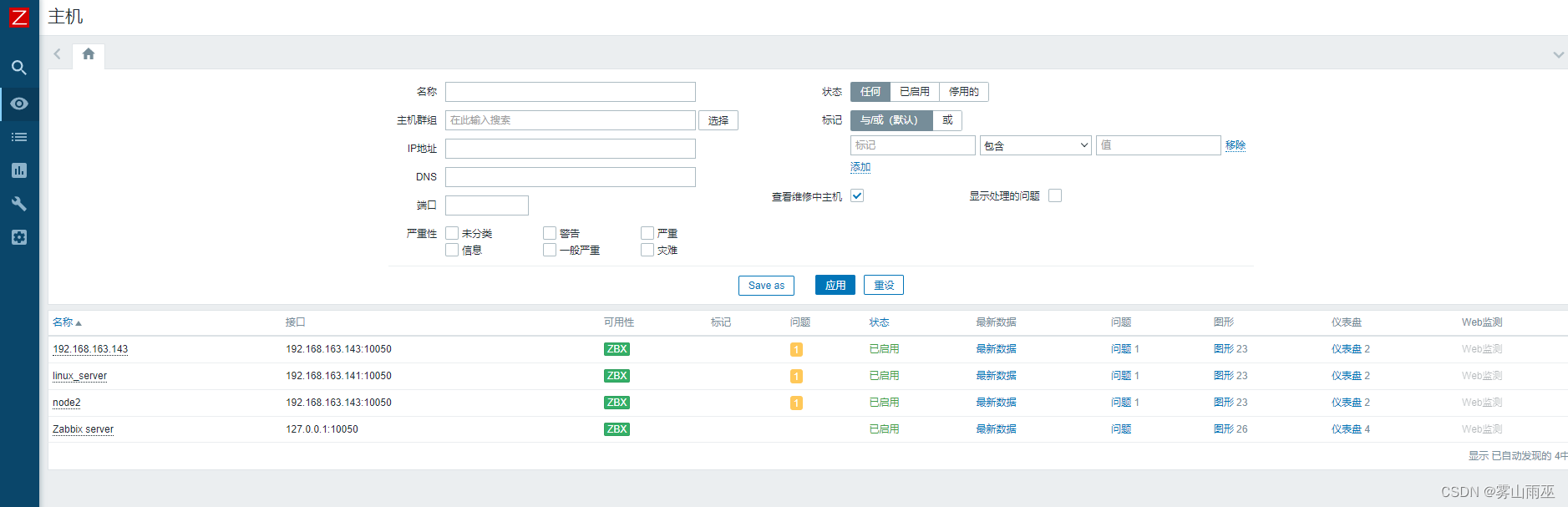

- 第八单元 中间件

- 【ROS】编译软件包packages遇到进度缓慢或卡死,使用swap

- Chapter6 visualization (don't want to see the version)

- [ROS](04)package.xml详解

- 此次519暴跌的几点感触 2021-05-21

- ping命令的使用及代码_通过命令查看ping路径

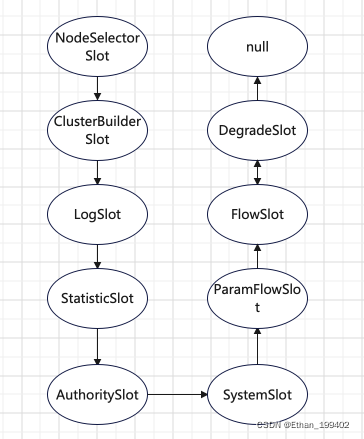

- Sentinel源码(五)FlowSlot以及限流控制器源码分析

猜你喜欢

随机推荐

[ROS](02)创建&编译ROS软件包Package

第十二单元 关联序列化处理

xshell连接虚拟机步骤_建立主机与vm虚拟机的网络连接

世界上最大的开源基金会 Apache 是如何运作的?

8583 顺序栈的基本操作

动态刷新日志级别

云片网案例

[ROS](03)CMakeLists.txt详解

第四单元 路由层

RowBounds[通俗易懂]

【Tensorflow】AttributeError: ‘_TfDeviceCaptureOp‘ object has no attribute ‘_set_device_from_string‘

Flask框架

Raft协议图解,缺陷以及优化

Unit 15 Paging, Filtering

泡利不相容原理适用的空间范围(系统)是多大?

window10下半自动标注

Flask项目的完整创建 七牛云与容联云

Cloin 控制台乱码

Flask上下文,蓝图和Flask-RESTful

paddle window10环境下使用conda安装

![[ROS]roscd和cd的区别](/img/a8/a1347568170821e8f186091b93e52a.png)