
Adding a column to a Spark DataFrame

2022-07-06 00:28:00 【The south wind knows what I mean】

Table of contents

- Method 1: Use createDataFrame; the new column is computed while building the RDD and the schema

- Method 2: Use withColumn; the new column is computed in a UDF

- Method 3: Use SQL; the new column is computed directly in the SQL query

- Method 4: The three methods above add a column derived from a condition; to add a unique ID column, use monotonically_increasing_id

Method 1: Use createDataFrame; the new column is computed while building the RDD and the schema

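    import org.apache.spark.sql.Row
    import org.apache.spark.sql.types.{DoubleType, StringType, StructField, StructType}

    // Compute the flag while mapping each row of the RDD
    val trdd = input.select(targetColumns).rdd.map { x =>
      val v = x.get(0).toString.toDouble
      if (v > critValueR || v < critValueL) Row(v, "F") else Row(v, "T")
    }
    // The schema must match the Row contents, so declare the value column as DoubleType
    val schema = StructType(Seq(
      StructField(targetColumns, DoubleType, nullable = true),
      StructField("flag", StringType, nullable = true)))
    val sample3 = ss.createDataFrame(trdd, schema).distinct().withColumnRenamed(targetColumns, "idx")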
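If the other columns of the input should be kept, the same createDataFrame approach can append the flag to every `Row` and extend the full input schema with `schema.add`. The sketch below assumes spark-sql is on the classpath; the object name, the local session, the `id`/`value` columns, the sample rows and the bounds are all hypothetical.

```scala
import org.apache.spark.sql.{Row, SparkSession}
import org.apache.spark.sql.types.StringType

object RddSchemaAddColumn {
  // Returns (id, flag) pairs so the result is easy to inspect
  def run(): Seq[(Int, String)] = {
    // Hypothetical local session and sample data, for illustration only
    val spark = SparkSession.builder().master("local[1]").appName("rdd-add-column").getOrCreate()
    import spark.implicits._
    val input = Seq((1, 0.5), (2, 5.0), (3, 9.5)).toDF("id", "value")
    val critValueL = 1.0 // hypothetical lower bound
    val critValueR = 9.0 // hypothetical upper bound

    // Append the flag to every Row while keeping all original fields
    val flagged = input.rdd.map { r =>
      val v = r.getAs[Double]("value")
      val flag = if (v > critValueR || v < critValueL) "F" else "T"
      Row.fromSeq(r.toSeq :+ flag)
    }
    // The new field goes last, matching its position in each Row
    val schema = input.schema.add("flag", StringType, nullable = true)
    val result = spark.createDataFrame(flagged, schema)

    try result.orderBy("id").collect().map(r => (r.getInt(0), r.getString(2))).toSeq
    finally spark.stop()
  }

  def main(args: Array[String]): Unit = run().foreach(println)
}
```

With these hypothetical bounds, `run()` returns `(1,F)`, `(2,T)`, `(3,F)`. The work is kept inside a method of an object so the closures capture only local values.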

Method 2: Use withColumn; the new column is computed in a UDF

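    import org.apache.spark.sql.functions.{col, udf}

    // Plain Scala function holding the flag rule
    val code: Int => String = (arg: Int) =>
      if (arg > critValueR || arg < critValueL) "F" else "T"
    // Wrap it as a Spark UDF and apply it to the target column
    val addCol = udf(code)
    val sample3 = input.select(targetColumns)
      .withColumn("flag", addCol(col(targetColumns)))
      .withColumnRenamed(targetColumns, "idx")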
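When the rule can be written with Spark's built-in column functions, `when`/`otherwise` produces the same flag without a UDF. Spark's optimizer treats a UDF as a black box, so built-in expressions are usually the simpler choice. This is an alternative to the method above, not part of it; the sketch assumes spark-sql is on the classpath, and the object name, session, column name, data and bounds are hypothetical.

```scala
import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, when}

object WhenOtherwiseAddColumn {
  def run(): Seq[(Double, String)] = {
    val spark = SparkSession.builder().master("local[1]").appName("when-add-column").getOrCreate()
    import spark.implicits._
    // Hypothetical column name, bounds and data
    val targetColumns = "value"
    val critValueL = 1.0
    val critValueR = 9.0
    val input = Seq(0.5, 5.0, 9.5).toDF(targetColumns)

    // Same rule as the UDF, expressed with built-in column functions
    val c = col(targetColumns)
    val sample3 = input
      .withColumn("flag", when(c > critValueR || c < critValueL, "F").otherwise("T"))
      .withColumnRenamed(targetColumns, "idx")

    // Tuple encoders map columns by position: idx -> _1, flag -> _2
    try sample3.orderBy("idx").as[(Double, String)].collect().toSeq
    finally spark.stop()
  }

  def main(args: Array[String]): Unit = run().foreach(println)
}
```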

Method 3: Use SQL; the new column is computed directly in the SQL query

    input.select(targetColumns).createOrReplaceTempView("tmp")
    // The CASE WHEN expression that computes the new column is part of the query string
    val sample3 = ss.sql(
      s"""select distinct $targetColumns as idx,
         |  case when $targetColumns > $critValueR then 'F'
         |       when $targetColumns < $critValueL then 'F'
         |       else 'T' end as flag
         |from tmp""".stripMargin)
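The same CASE WHEN expression can also be passed to `selectExpr`, which skips registering a temporary view. This is an alternative, not the author's code; the sketch assumes spark-sql is on the classpath, and the object name, session, column name, data (with a duplicate value to show `distinct`) and bounds are hypothetical.

```scala
import org.apache.spark.sql.SparkSession

object SelectExprAddColumn {
  def run(): Seq[(Double, String)] = {
    val spark = SparkSession.builder().master("local[1]").appName("selectexpr-add-column").getOrCreate()
    import spark.implicits._
    // Hypothetical column name, bounds and data; 5.0 appears twice
    val targetColumns = "value"
    val critValueL = 1.0
    val critValueR = 9.0
    val input = Seq(0.5, 5.0, 5.0, 9.5).toDF(targetColumns)

    // SQL expressions evaluated directly on the DataFrame, no temp view needed
    val sample3 = input.selectExpr(
      s"$targetColumns as idx",
      s"case when $targetColumns > $critValueR or $targetColumns < $critValueL then 'F' else 'T' end as flag"
    ).distinct()

    try sample3.orderBy("idx").as[(Double, String)].collect().toSeq
    finally spark.stop()
  }

  def main(args: Array[String]): Unit = run().foreach(println)
}
```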

Method 4: The three methods above add a column derived from a condition. To add a unique ID column instead, use monotonically_increasing_id. The generated IDs are unique and monotonically increasing, but not consecutive.

    // Method 4: add a unique ID column
    import org.apache.spark.sql.functions.monotonically_increasing_id

    val inputnew = input.withColumn("idx", monotonically_increasing_id())
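The layout of these IDs explains why they are not consecutive. Spark's API documentation describes the current implementation as putting the partition ID in the upper 31 bits and the record number within the partition in the lower 33 bits. The plain-Scala sketch below only mimics that documented layout for hypothetical partition sizes; it does not call Spark, the object and method names are hypothetical, and the layout is an implementation detail that may change.

```scala
object MonotonicIdLayout {
  // Mimics the documented layout: partition ID in the upper 31 bits,
  // record number within the partition in the lower 33 bits
  def id(partitionId: Int, recordInPartition: Long): Long =
    (partitionId.toLong << 33) + recordInPartition

  // IDs for hypothetical partitions, given the record count of each partition
  def ids(recordsPerPartition: Seq[Int]): Seq[Long] =
    recordsPerPartition.zipWithIndex.flatMap { case (n, p) =>
      (0 until n).map(i => id(p, i.toLong))
    }

  def main(args: Array[String]): Unit = println(ids(Seq(3, 3)).mkString(", "))
}
```

For two partitions of three rows each, `ids(Seq(3, 3))` gives 0, 1, 2, 8589934592, 8589934593, 8589934594, which matches the example in the Spark docs. If consecutive numbers are needed, `RDD.zipWithIndex` or `row_number()` over a window are the usual alternatives.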

边栏推荐

- 多线程与高并发(8)—— 从CountDownLatch总结AQS共享锁(三周年打卡)

- The global and Chinese markets of dial indicator calipers 2022-2028: Research Report on technology, participants, trends, market size and share

- wx. Getlocation (object object) application method, latest version

- uniapp开发,打包成H5部署到服务器

- FFMPEG关键结构体——AVFrame

- Go learning --- structure to map[string]interface{}

- Global and Chinese markets for pressure and temperature sensors 2022-2028: Research Report on technology, participants, trends, market size and share

- Data analysis thinking analysis methods and business knowledge -- analysis methods (II)

- Atcoder beginer contest 258 [competition record]

- Room cannot create an SQLite connection to verify the queries

猜你喜欢

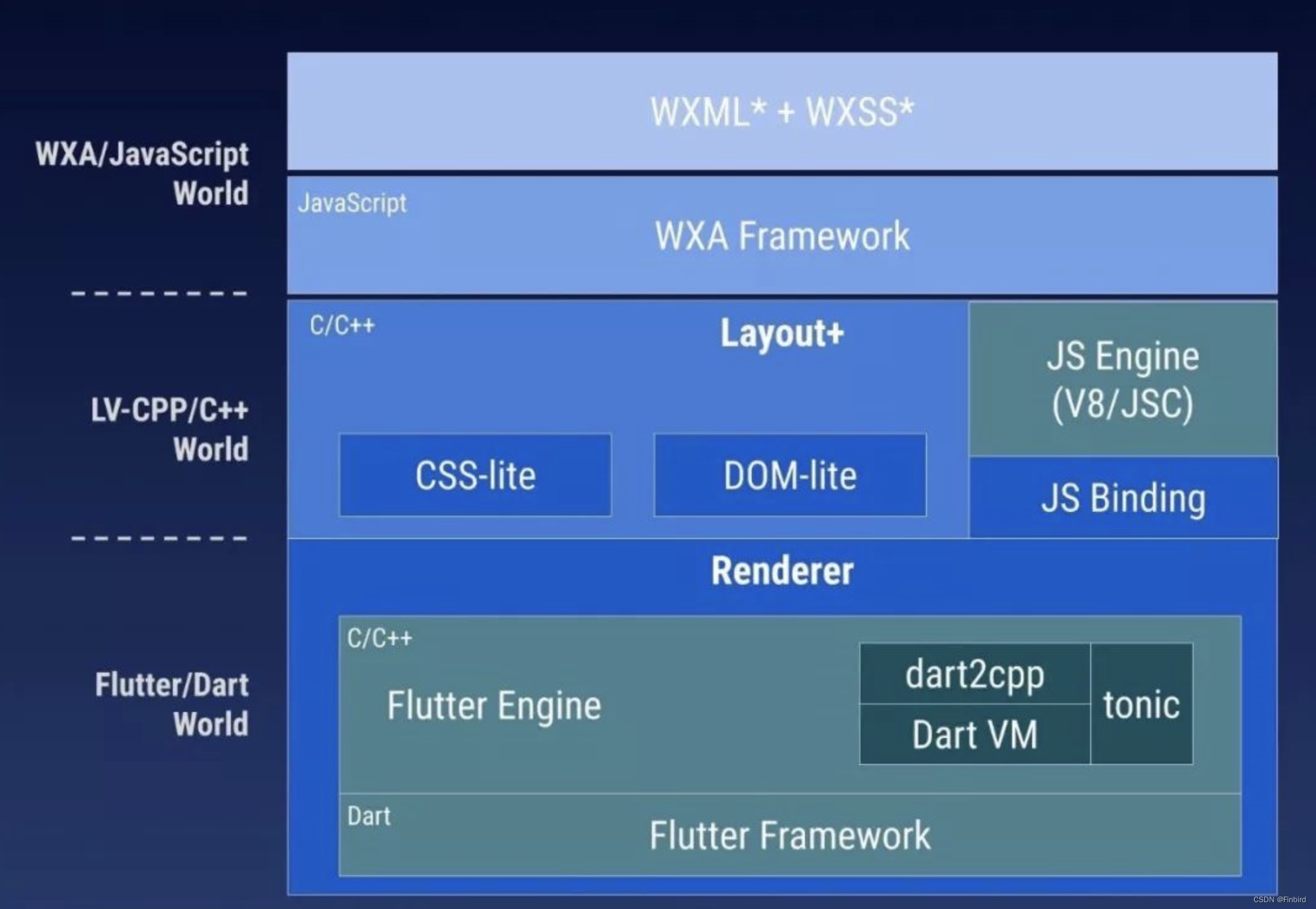

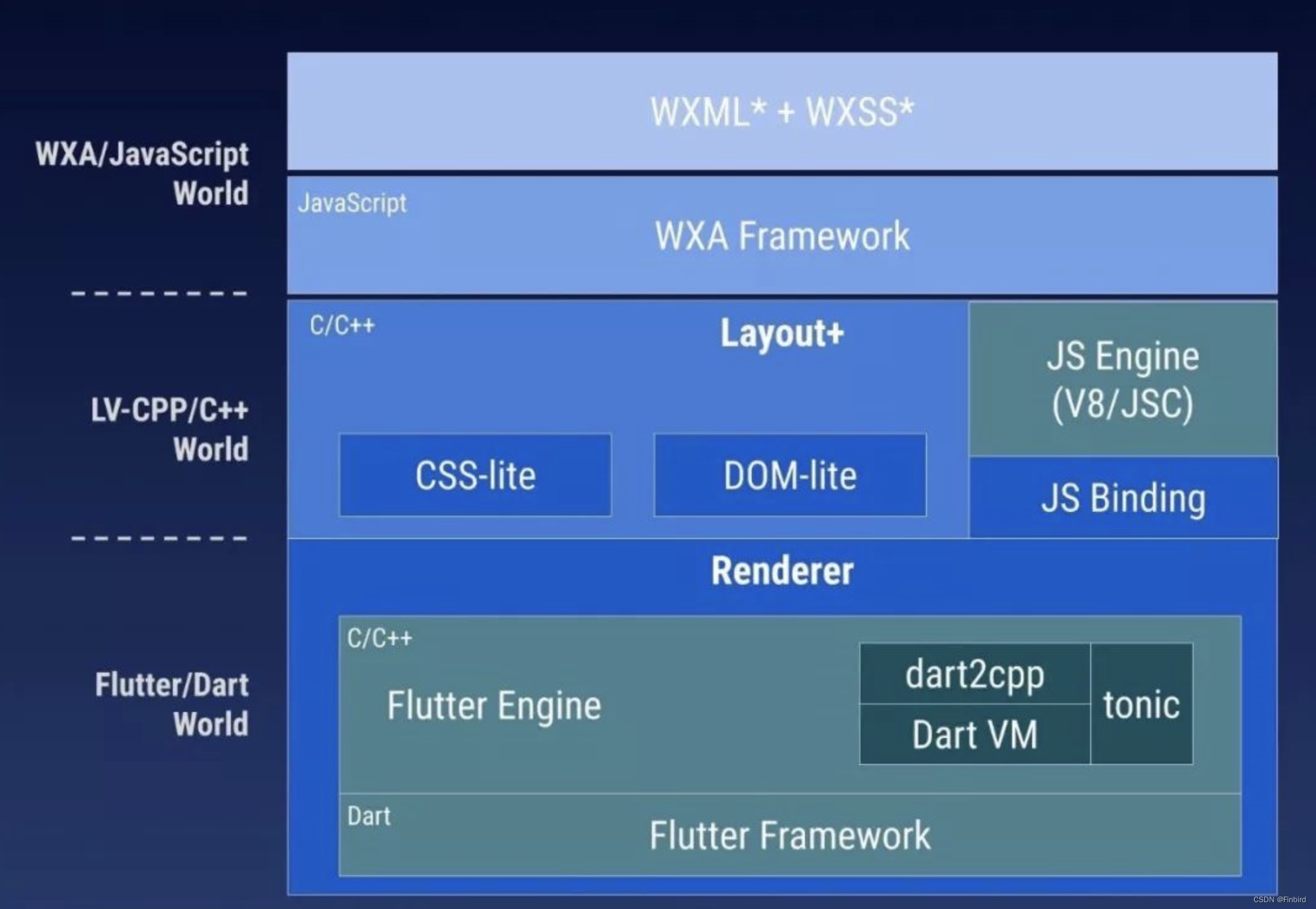

如何利用Flutter框架开发运行小程序

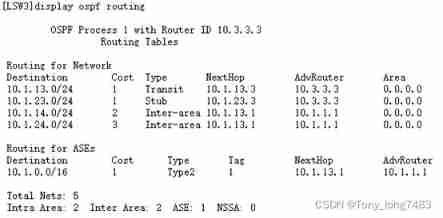

Configuring OSPF load sharing for Huawei devices

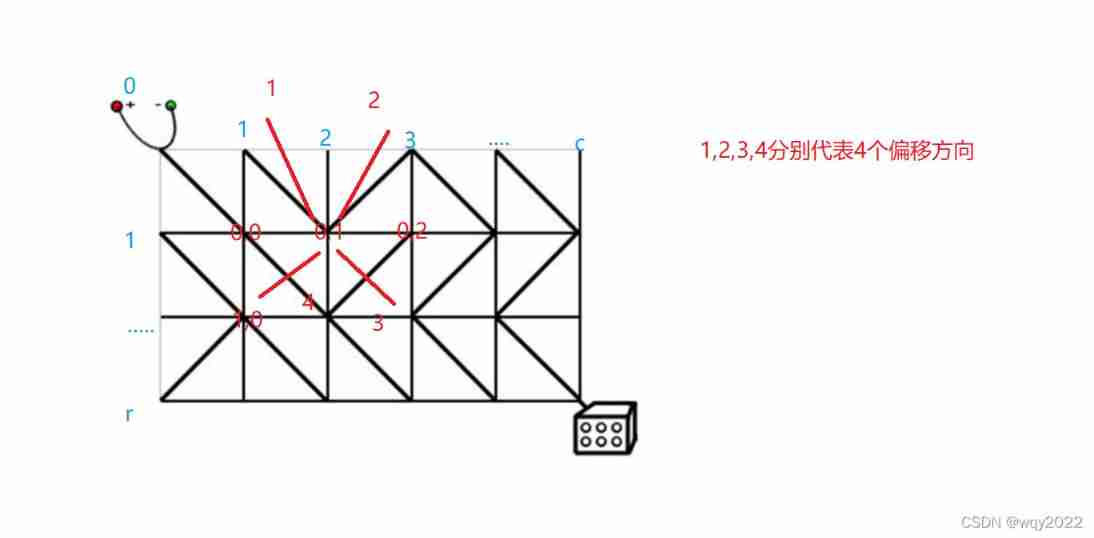

Search (DFS and BFS)

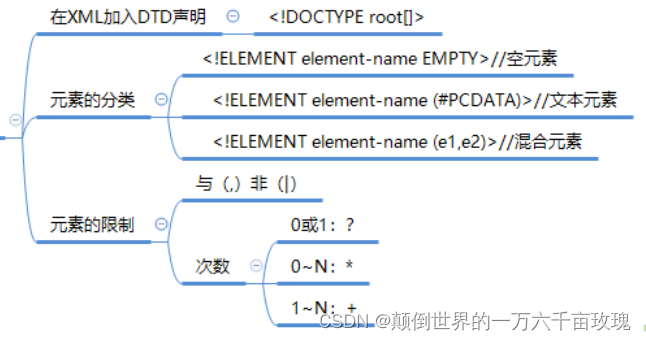

XML Configuration File

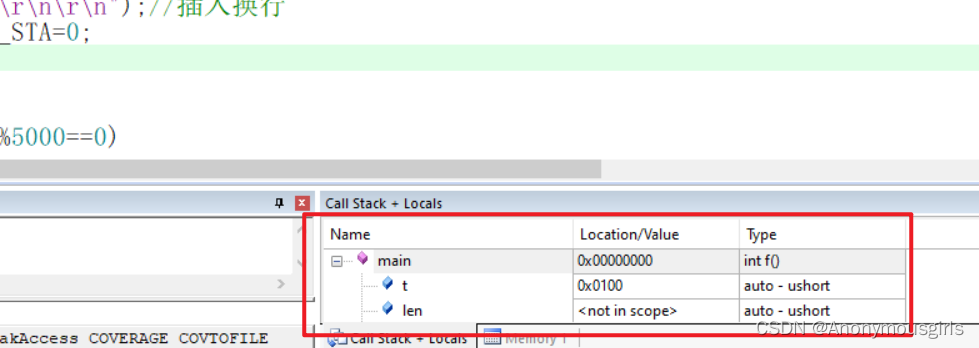

Set data real-time update during MDK debug

How to use the flutter framework to develop and run small programs

FFmpeg抓取RTSP图像进行图像分析

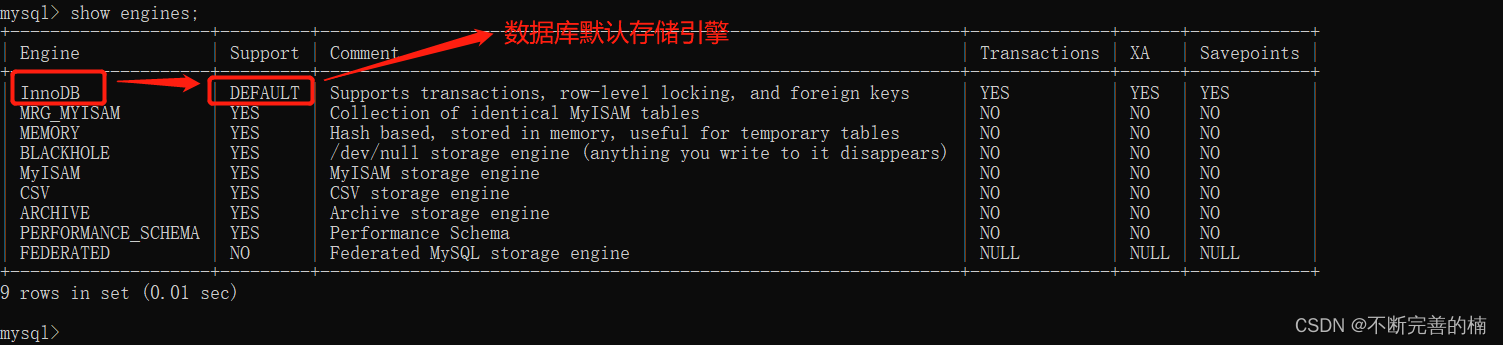

MySQL存储引擎

NSSA area where OSPF is configured for Huawei equipment

Configuring OSPF GR features for Huawei devices

随机推荐

Leetcode Fibonacci sequence

LeetCode 1189. Maximum number of "balloons"

Global and Chinese markets of POM plastic gears 2022-2028: Research Report on technology, participants, trends, market size and share

Chapter 16 oauth2authorizationrequestredirectwebfilter source code analysis

DEJA_ Vu3d - cesium feature set 055 - summary description of map service addresses of domestic and foreign manufacturers

Single merchant v4.4 has the same original intention and strength!

State mode design procedure: Heroes in the game can rest, defend, attack normally and attack skills according to different physical strength values.

Shardingsphere source code analysis

提升工作效率工具:SQL批量生成工具思想

Global and Chinese market of digital serial inverter 2022-2028: Research Report on technology, participants, trends, market size and share

Model analysis of establishment time and holding time

How to use the flutter framework to develop and run small programs

FFmpeg抓取RTSP图像进行图像分析

Go learning --- structure to map[string]interface{}

Spark SQL空值Null,NaN判断和处理

Gd32f4xx UIP protocol stack migration record

如何利用Flutter框架开发运行小程序

STM32按键消抖——入门状态机思维

LeetCode 8. String conversion integer (ATOI)

7.5模拟赛总结