Ways to get a column from a DataFrame in Spark: col, $, column, apply

2022-07-06 00:23:00 【南风知我意丿】

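1 Official description

df("columnName") // a column on a specific DataFrame

col("columnName") // a generic column not yet associated with a DataFrame

col("columnName.field") // extracts a struct field

col("`a.column.with.dots`") // escapes `.` in a column name

$"columnName" // Scala shorthand for a named column

expr("a + 1") // a column constructed from a parsed SQL expression

lit("abc") // a column that produces a literal (constant) value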
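The forms listed above can be tried together in a single `select`. The following is a minimal sketch, not an official example: the object name `ColumnFormsSketch`, the sample data, and the column names `a`, `name`, and `info` are all made up for illustration, and it assumes a local Spark installation is on the classpath.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.{col, expr, lit, struct}

// Hypothetical sketch: every name below is illustrative, not from the article.
object ColumnFormsSketch {
  def build(spark: SparkSession): DataFrame = {
    import spark.implicits._ // needed for toDF and the $"..." syntax

    // Sample data, including a column whose name contains dots.
    val df = Seq((1, "x", 0), (2, "y", 0))
      .toDF("a", "name", "a.column.with.dots")
      .withColumn("info", struct(col("name").as("field"))) // struct column with one field

    df.select(
      df("a"),                          // column bound to this DataFrame
      col("name"),                      // generic column, resolved by name
      col("info.field"),                // struct field access
      col("`a.column.with.dots`"),      // backticks escape the dots
      $"a".as("a_again"),               // Scala shorthand
      expr("a + 1").as("a_plus_one"),   // parsed SQL expression
      lit("abc").as("constant")         // literal value
    )
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[*]")
      .appName("column-forms-sketch")
      .getOrCreate()
    build(spark).show()
    spark.stop()
  }
}
```

Without the backticks, `col("a.column.with.dots")` would be read as a struct field path rather than a single column name, which is why the escaped form exists.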

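2 Required imports

import spark.implicits._ // enables the $"columnName" syntax; spark is the SparkSession instance

import org.apache.spark.sql.functions._ // provides col, column, expr, lit

import org.apache.spark.sql.Column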

3 Demo

scala> val idCol = $"id"

idCol: org.apache.spark.sql.ColumnName = id

scala> val idCol = col("id")

idCol: org.apache.spark.sql.Column = id

scala> val idCol = column("id")

idCol: org.apache.spark.sql.Column = id

scala> val dataset = spark.range(5).toDF("text")

dataset: org.apache.spark.sql.DataFrame = [text: bigint]

scala> val textCol = dataset.col("text")

textCol: org.apache.spark.sql.Column = text

scala> val textCol = dataset.apply("text")

textCol: org.apache.spark.sql.Column = text

scala> val textCol = dataset("text")

textCol: org.apache.spark.sql.Column = text
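The difference between a column taken from a DataFrame (`dataset("text")`, `dataset.col`, `dataset.apply`) and a generic one (`col`, `column`, `$`) shows up when two DataFrames share a column name. The sketch below is only an illustration under assumptions: the object name `BoundColumnSketch`, the DataFrames `left` and `right`, and their columns are invented here, and it assumes a local Spark installation.

```scala
import org.apache.spark.sql.{DataFrame, SparkSession}
import org.apache.spark.sql.functions.col
import scala.util.Try

// Hypothetical sketch: names and data are illustrative, not from the article.
object BoundColumnSketch {
  // Returns the rows selected through bound columns, plus a flag telling
  // whether looking up the shared name with an unbound col(...) failed.
  def run(spark: SparkSession): (DataFrame, Boolean) = {
    import spark.implicits._

    val left  = Seq((1, "a"), (2, "b")).toDF("id", "l")
    val right = Seq((1, "x"), (3, "y")).toDF("id", "r")

    // left("id") and right("id") each refer to one specific DataFrame,
    // so the join condition is unambiguous.
    val joined = left.join(right, left("id") === right("id"))

    // Bound column for the shared name, generic columns for the unique ones.
    val resolved = joined.select(left("id"), col("l"), col("r"))

    // col("id") is not tied to either side; after the join both inputs
    // contribute an "id" column, so analysis of this select is expected to fail.
    val unboundFails = Try(joined.select(col("id"))).isFailure

    (resolved, unboundFails)
  }

  def main(args: Array[String]): Unit = {
    val spark = SparkSession.builder()
      .master("local[*]")
      .appName("bound-column-sketch")
      .getOrCreate()
    val (resolved, unboundFails) = run(spark)
    resolved.show()
    println(s"unbound col(\"id\") failed: $unboundFails")
    spark.stop()
  }
}
```

For columns whose names are unique, the generic forms are usually the more convenient choice, since they can be built before any DataFrame exists.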

边栏推荐

- Room cannot create an SQLite connection to verify the queries

- Yolov5、Pycharm、Anaconda环境安装

- Global and Chinese market of valve institutions 2022-2028: Research Report on technology, participants, trends, market size and share

- MySql——CRUD

- 建立时间和保持时间的模型分析

- Power query data format conversion, Split Merge extraction, delete duplicates, delete errors, transpose and reverse, perspective and reverse perspective

- Ffmpeg learning - core module

- State mode design procedure: Heroes in the game can rest, defend, attack normally and attack skills according to different physical strength values.

- Atcoder beginer contest 258 [competition record]

- 小程序技术优势与产业互联网相结合的分析

猜你喜欢

About the slmgr command

![[designmode] Decorator Pattern](/img/65/457e0287383d0ca9a28703a63b4e1a.png)

[designmode] Decorator Pattern

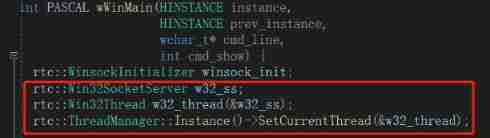

Basic introduction and source code analysis of webrtc threads

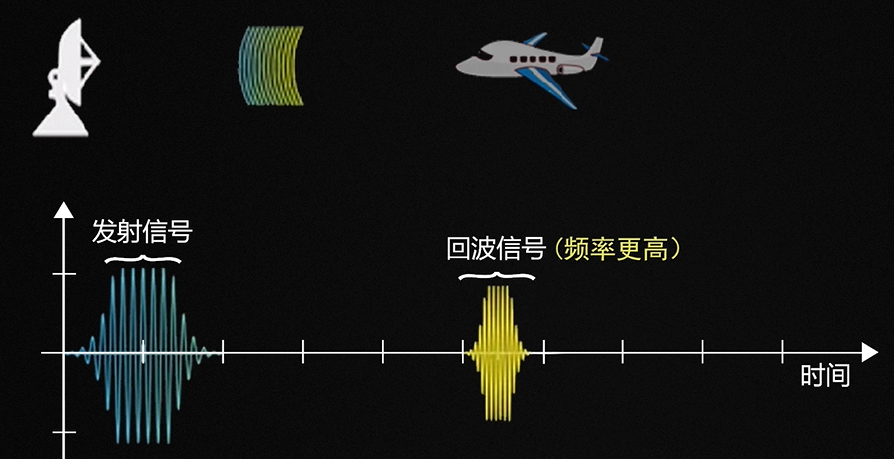

Doppler effect (Doppler shift)

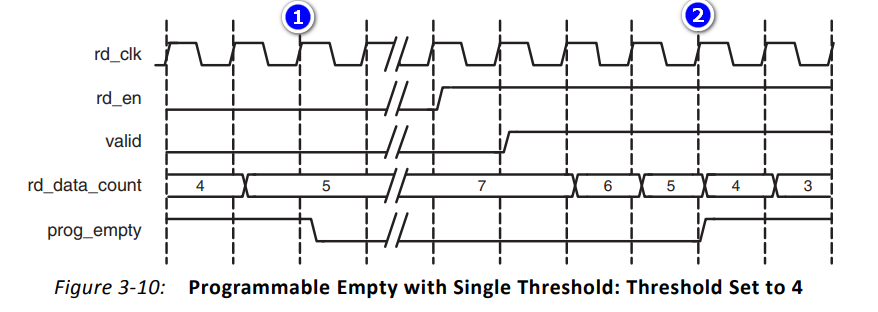

Start from the bottom structure and learn the introduction of fpga---fifo IP core and its key parameters

Hardware and interface learning summary

![[designmode] composite mode](/img/9a/25c7628595c6516ac34ba06121e8fa.png)

[designmode] composite mode

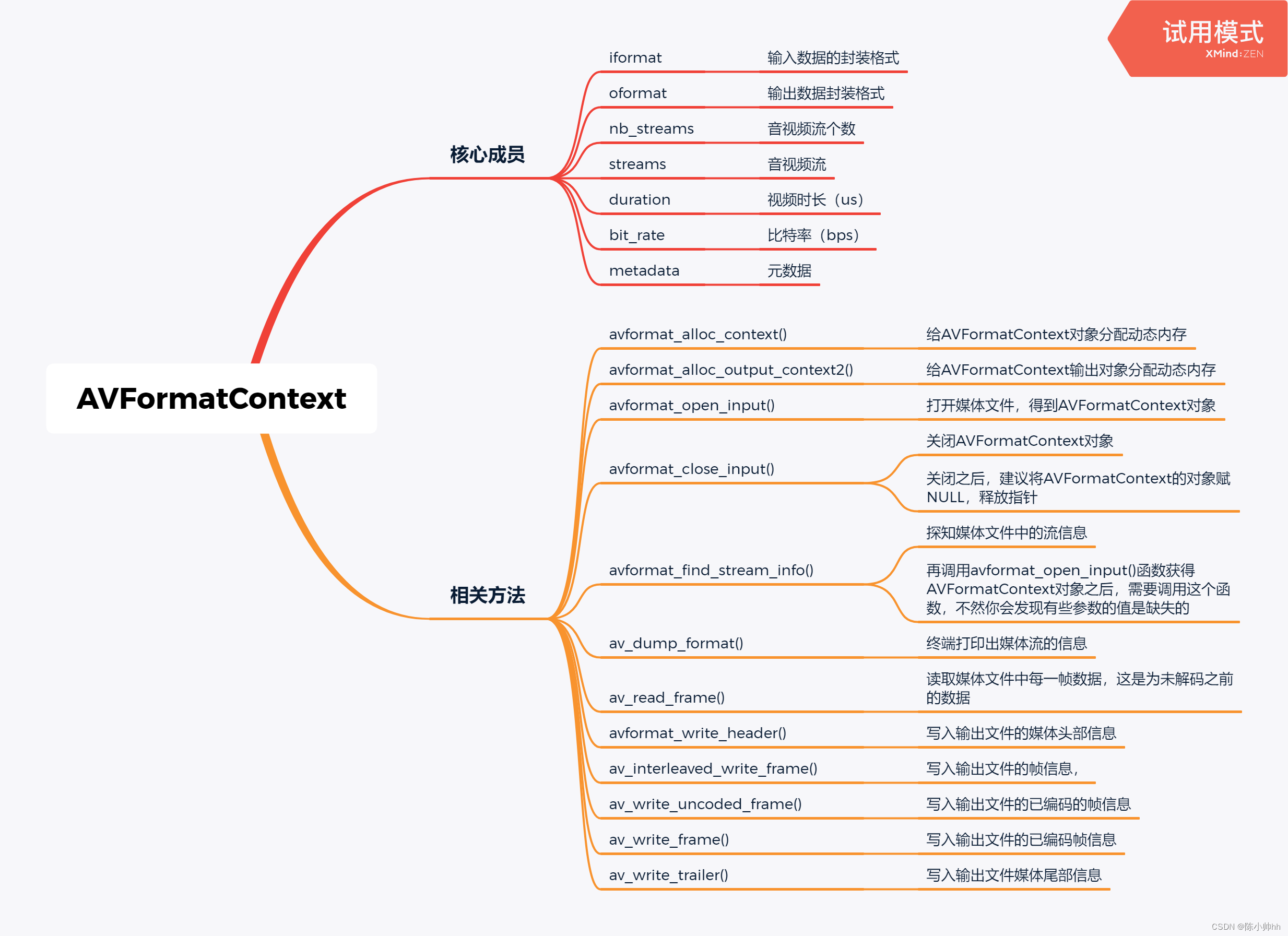

FFMPEG关键结构体——AVFormatContext

Configuring OSPF GR features for Huawei devices

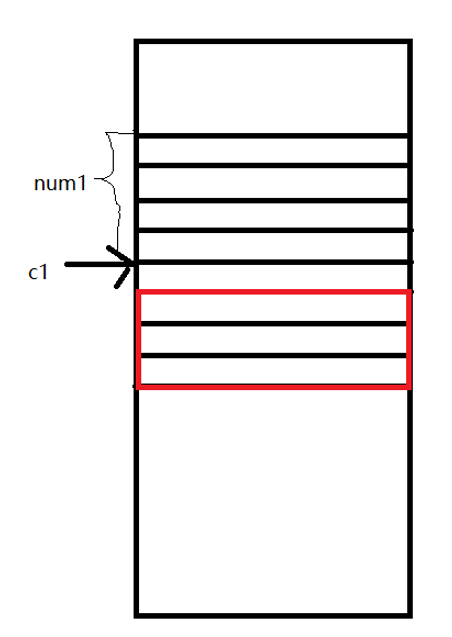

关于结构体所占内存大小知识

随机推荐

The global and Chinese markets of dial indicator calipers 2022-2028: Research Report on technology, participants, trends, market size and share

Codeforces gr19 D (think more about why the first-hand value range is 100, JLS yyds)

[designmode] adapter pattern

Yunna | what are the main operating processes of the fixed assets management system

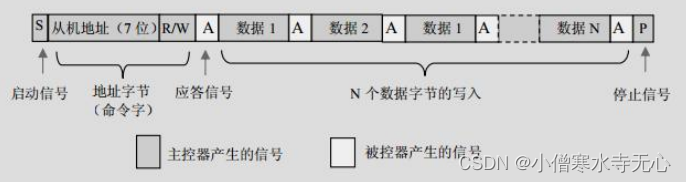

USB Interface USB protocol

Opencv classic 100 questions

[designmode] Decorator Pattern

免费的聊天机器人API

建立时间和保持时间的模型分析

Transport layer protocol ----- UDP protocol

FFMPEG关键结构体——AVCodecContext

多线程与高并发(8)—— 从CountDownLatch总结AQS共享锁(三周年打卡)

MySql——CRUD

Codeforces Round #804 (Div. 2)【比赛记录】

Teach you to run uni app with simulator on hbuilderx, conscience teaching!!!

Classical concurrency problem: the dining problem of philosophers

uniapp开发,打包成H5部署到服务器

Determinant learning notes (I)

LeetCode 1189. Maximum number of "balloons"

行列式学习笔记(一)