
Key FFmpeg structures: AVFrame

2022-07-06 00:00:00 【Chen Xiaoshuai HH】

One. The AVFrame structure

The AVFrame structure is generally used to store raw data (that is, uncompressed data: YUV or RGB for video, PCM for audio), together with some related information.

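For example, during decoding it stores data such as the macroblock type table, the QP table and the motion vector table; related data is also stored during encoding. This makes AVFrame a very important structure when using FFmpeg for bitstream analysis. (These per-macroblock tables were fields of AVFrame in older FFmpeg releases; newer releases have removed them, and motion vectors, for instance, can instead be exported as frame side data, AV_FRAME_DATA_MOTION_VECTORS.)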

During decoding, AVFrame usually carries many bitstream parameters; during encoding it mainly carries image data or audio sample data. The structure is defined in libavutil/frame.h. The main fields used in decoding are described below.

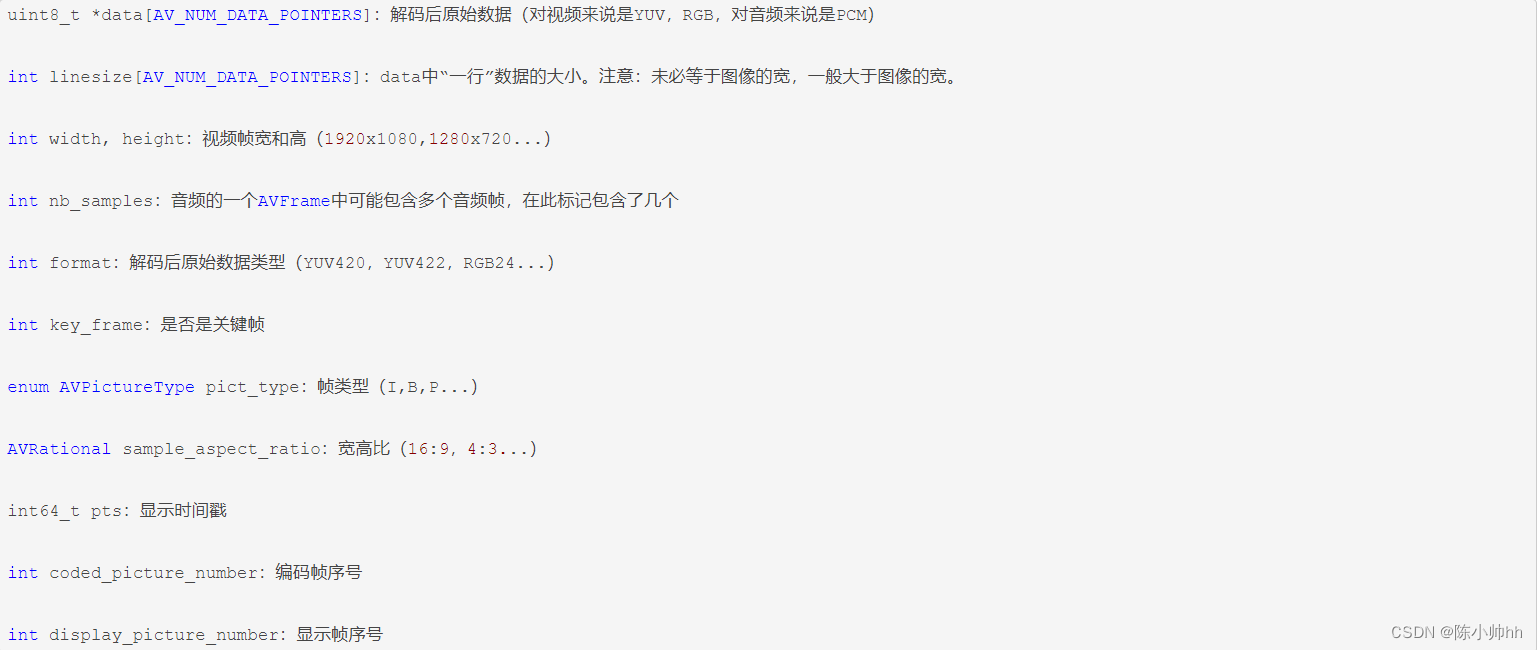

1. Field descriptions

①uint8_t *data[AV_NUM_DATA_POINTERS];

(1) Image data:

For packed formats (e.g. RGB24), all components are stored together in data[0].

For planar formats (e.g. YUV420P), each component gets its own plane in data[0], data[1], data[2], … (for YUV420P, data[0] holds Y, data[1] holds U and data[2] holds V).

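(2) Audio data:

PCM samples are stored the same way as image data: packed (interleaved) audio uses data[0] only, while planar audio uses one pointer per channel. Because data[] has only AV_NUM_DATA_POINTERS (8) entries, planar audio with more than 8 channels must be accessed through extended_data, which holds pointers to all channels.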
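To make the packed/planar difference concrete, here is a minimal plain-C sketch of how a pixel is located through data[i] and linesize[i]. The helper names are hypothetical (not FFmpeg API), and the code only computes byte offsets, assuming 8-bit RGB24 and YUV420P.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helpers (not FFmpeg API): byte offset of a pixel inside the
 * buffer that data[i] points to, stepping rows by linesize[i]. */

/* Packed RGB24: all three components live in data[0], 3 bytes per pixel. */
static size_t rgb24_offset(int x, int y, int linesize0)
{
    assert(x >= 0 && y >= 0 && linesize0 > 0);
    return (size_t)y * (size_t)linesize0 + 3u * (size_t)x;
}

/* Planar YUV420P, Y plane (data[0]): one byte per pixel, full resolution. */
static size_t yuv420p_luma_offset(int x, int y, int linesize0)
{
    assert(x >= 0 && y >= 0);
    return (size_t)y * (size_t)linesize0 + (size_t)x;
}

/* Planar YUV420P, U or V plane (data[1] / data[2]): subsampled by 2 in both
 * directions, so one chroma sample covers a 2x2 block of luma samples. */
static size_t yuv420p_chroma_offset(int x, int y, int linesize12)
{
    assert(x >= 0 && y >= 0);
    return (size_t)(y / 2) * (size_t)linesize12 + (size_t)(x / 2);
}
```

For a decoded YUV420P frame, the U sample covering pixel (x, y) would then be frame->data[1][yuv420p_chroma_offset(x, y, frame->linesize[1])]. Rows are always stepped with linesize, never with the image width.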

②int linesize[AV_NUM_DATA_POINTERS];

Bytes per row, i.e. the stride: for data[i], the number of bytes that one row of pixels occupies. In theory it is {w*3, 0, …} for RGB24 and {w, w/2, w/2, 0, …} for yuv420p. However, FFmpeg pads rows for memory alignment, so the actual linesize is greater than or equal to the theoretical value.

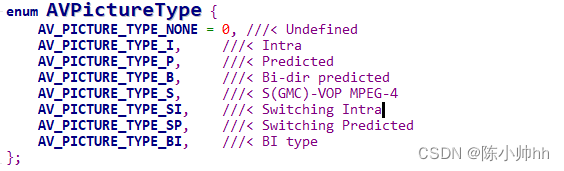
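The alignment padding can be pictured as rounding each theoretical row size up to the alignment. The sketch below assumes a power-of-two alignment and uses the same rounding formula as FFmpeg's FFALIGN macro; the helper names are hypothetical, and real decoders may add further padding, so the actual linesize can be larger still.

```c
#include <assert.h>

/* Round n up to a multiple of align (a power of two). Same formula as
 * FFmpeg's FFALIGN macro, written out so the sketch is self-contained. */
static int align_up(int n, int align)
{
    assert(align > 0 && (align & (align - 1)) == 0);
    return (n + align - 1) & ~(align - 1);
}

/* Theoretical (unpadded) bytes per row, i.e. the minimum linesize. */
static int rgb24_row_bytes(int w)          { return w * 3; }
static int yuv420p_luma_row_bytes(int w)   { return w; }
static int yuv420p_chroma_row_bytes(int w) { return (w + 1) / 2; } /* odd widths round up */
```

With 32-byte alignment, a 638-pixel-wide frame gives 640 bytes for a Y row and 320 for a U/V row (319 rounded up), and an RGB24 row of 1914 bytes becomes 1920.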

③enum AVPictureType pict_type;

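The picture type of the frame, a value of enum AVPictureType (declared in libavutil/avutil.h), for example AV_PICTURE_TYPE_I, AV_PICTURE_TYPE_P or AV_PICTURE_TYPE_B.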
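For reference, the enum values and the one-letter names FFmpeg prints for them (via av_get_picture_type_char()) are mirrored locally below so the sketch compiles without FFmpeg. The PICTURE_TYPE_* names and picture_type_char() are renamed copies for illustration only; real code should use AV_PICTURE_TYPE_* from <libavutil/avutil.h>.

```c
#include <assert.h>

/* Local mirror of enum AVPictureType, for illustration only. */
enum PictureType {
    PICTURE_TYPE_NONE = 0, /* undefined */
    PICTURE_TYPE_I,        /* intra */
    PICTURE_TYPE_P,        /* predicted */
    PICTURE_TYPE_B,        /* bi-directionally predicted */
    PICTURE_TYPE_S,        /* S(GMC)-VOP, MPEG-4 */
    PICTURE_TYPE_SI,       /* switching intra */
    PICTURE_TYPE_SP,       /* switching predicted */
    PICTURE_TYPE_BI,       /* BI type */
};

/* Same letters that av_get_picture_type_char() returns. */
static char picture_type_char(enum PictureType t)
{
    switch (t) {
    case PICTURE_TYPE_I:  return 'I';
    case PICTURE_TYPE_P:  return 'P';
    case PICTURE_TYPE_B:  return 'B';
    case PICTURE_TYPE_S:  return 'S';
    case PICTURE_TYPE_SI: return 'i';
    case PICTURE_TYPE_SP: return 'p';
    case PICTURE_TYPE_BI: return 'b';
    default:              return '?';
    }
}
```

In real FFmpeg code, printing av_get_picture_type_char(frame->pict_type) after each decoded frame is a quick way to see the I/P/B pattern of a stream.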

Two. Common functions

1、av_frame_alloc()

Allocates an AVFrame and initializes its fields to default values. Note that it only allocates the AVFrame structure itself: the buffers that uint8_t *data[AV_NUM_DATA_POINTERS] should point to are not allocated, and the pointers are NULL at this point.

2、av_frame_free()

Frees an AVFrame. It does more than free the structure: it also deals with the buffers behind uint8_t *data[AV_NUM_DATA_POINTERS]. It first unreferences the frame (as av_frame_unref() does), so if this frame held the last reference to the data buffers (reference count == 1), they are freed as well. It takes an AVFrame ** and sets the caller's pointer to NULL.

3、int av_frame_ref(AVFrame *dst, const AVFrame *src)

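Creates a new reference to an existing AVFrame. It does two things: 1) copies the properties of src to dst; 2) adds a reference to the buffers behind src's uint8_t *data[AV_NUM_DATA_POINTERS] (their reference count goes up by 1) instead of copying the pixel data. If src is not reference-counted, new buffers are allocated and the data is copied. dst must be clean (freshly allocated or unreferenced) before the call.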

4、void av_frame_unref(AVFrame *frame)

Releases the frame's references. It does two things: 1) resets all fields of frame to their defaults; 2) unreferences the buffers behind uint8_t *data[AV_NUM_DATA_POINTERS]. If this was the last reference (count == 1), the buffers are freed; if the count is > 1, other frames still use them, and the memory is freed only when the last of those frames releases its reference.
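The reference-counting rules of av_frame_ref() and av_frame_unref() can be illustrated with a toy model. Everything here (SharedBuf, ToyFrame, the functions and the g_buffers_freed counter) is hypothetical and much simpler than FFmpeg's AVBufferRef; it only shows when the underlying memory is actually released.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Toy model of the reference counting behind AVFrame.buf[] (AVBufferRef).
 * All names are hypothetical; this is not FFmpeg's implementation. */
typedef struct SharedBuf {
    int refcount;
    unsigned char *data;
} SharedBuf;

typedef struct ToyFrame {
    SharedBuf *buf;      /* plays the role of AVFrame.buf[0]  */
    unsigned char *data; /* plays the role of AVFrame.data[0] */
    int width, height;
} ToyFrame;

static int g_buffers_freed = 0; /* counts real deallocations, for the demo */

static SharedBuf *shared_buf_alloc(size_t size)
{
    SharedBuf *b = malloc(sizeof *b);
    if (!b)
        return NULL;
    b->data = calloc(size, 1);
    if (!b->data) {
        free(b);
        return NULL;
    }
    b->refcount = 1;
    return b;
}

/* Like av_frame_ref(): copy the properties, share the buffer, count + 1. */
static void toy_frame_ref(ToyFrame *dst, const ToyFrame *src)
{
    assert(dst->buf == NULL && src->buf != NULL); /* dst must be clean */
    *dst = *src;
    dst->buf->refcount++;
}

/* Like av_frame_unref(): count - 1, free only if this was the last
 * reference, then reset every field to its default. */
static void toy_frame_unref(ToyFrame *f)
{
    if (f->buf && --f->buf->refcount == 0) {
        free(f->buf->data);
        free(f->buf);
        g_buffers_freed++;
    }
    *f = (ToyFrame){0};
}
```

In this model, av_frame_free() corresponds to toy_frame_unref() followed by freeing the frame structure itself.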

5、av_frame_get_buffer()

Allocates the buffers for uint8_t *data[AV_NUM_DATA_POINTERS]. Before calling it you must set format, width and height on the frame (for audio: format, nb_samples and the channel layout); otherwise the function has no way of knowing how many bytes to allocate.
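A simplified sketch of why format, width and height must be set first: the allocator needs all three to size each plane. The ToyPixFmt enum and toy_video_buffer_size() are hypothetical. FFmpeg's av_frame_get_buffer() also returns an error when these fields are missing, but its real allocation typically adds extra padding, so its sizes are larger, and unlike this toy it accepts align = 0 as "choose an alignment automatically".

```c
#include <assert.h>

/* Hypothetical pixel-format tags for this sketch (not FFmpeg's enum). */
enum ToyPixFmt { TOY_FMT_NONE = -1, TOY_FMT_YUV420P, TOY_FMT_RGB24 };

static int toy_align(int n, int a)
{
    assert(a > 0);
    return (n + a - 1) / a * a;
}

/* Bytes an allocator would need for one video frame, or -1 when the
 * parameters it depends on are missing. Simplified: no extra padding. */
static long toy_video_buffer_size(enum ToyPixFmt fmt, int w, int h, int align)
{
    if (w <= 0 || h <= 0 || align <= 0)
        return -1;
    switch (fmt) {
    case TOY_FMT_RGB24: /* one packed plane, 3 bytes per pixel */
        return (long)toy_align(w * 3, align) * h;
    case TOY_FMT_YUV420P: { /* Y plane plus two quarter-size chroma planes */
        int cw = (w + 1) / 2, ch = (h + 1) / 2;
        return (long)toy_align(w, align) * h + 2L * toy_align(cw, align) * ch;
    }
    default: /* format not set: nothing to size */
        return -1;
    }
}
```

For a 100x100 yuv420p frame this gives 15000 bytes with align = 1 and 19200 bytes with align = 32 (rows of 128 and 64 bytes).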

Three. Typical decoding workflow

The workflow has three steps:

① AVFrame *pFrame = av_frame_alloc(); allocates an AVFrame object. Its data[] buffers are not allocated yet.

② Decode with avcodec_receive_frame(), which gives pFrame data[] buffers and stores the decoded data in them. When you are done with each frame, release its buffers with av_frame_unref(). (avcodec_receive_frame() also unreferences the frame itself before filling it, but unreferencing explicitly keeps ownership clear and avoids holding decoded buffers longer than necessary.)

③ Finally, release the whole object with av_frame_free().

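    AVFrame *pFrame = av_frame_alloc();            // [1] data[] not allocated yet

    while (…) {
        …
        int ret = avcodec_receive_frame(ctx, pFrame);
        if (ret == AVERROR(EAGAIN))
            continue;                              // decoder needs more input
        if (ret < 0)
            break;                                 // AVERROR_EOF or a decoding error
        …  // process pFrame
        av_frame_unref(pFrame);                    // [2] release this frame's buffers
    }

    av_frame_free(&pFrame);                        // [3] takes AVFrame **, sets pFrame to NULL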

Four. Image processing

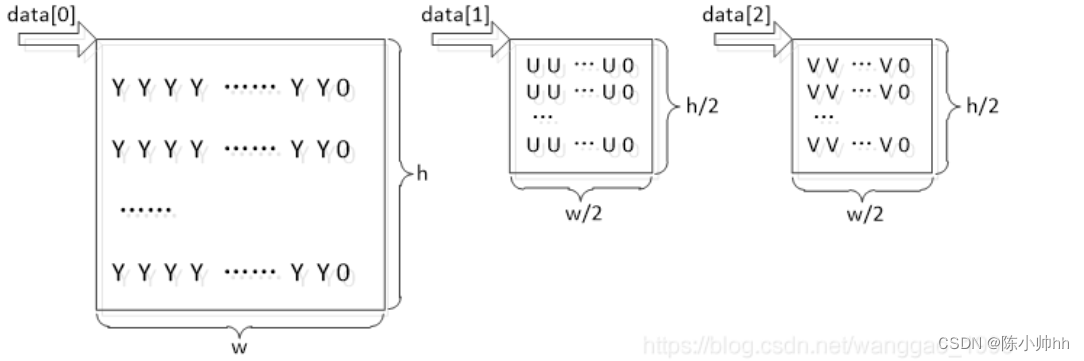

As mentioned earlier, to make optimized processing easier, FFmpeg's codecs usually allocate image buffers larger than the image itself, and only part of each buffer holds valid data. This shows up directly in linesize, which is aligned to 8, 16 or 32 bytes depending on the platform.

For a 638*272 video, the decoded yuv420p frame has linesize {640, 320, 320, …}. The memory layout is shown in the figure.

In the figure, w = 640 and h = 320. You can see that padding is inserted after each row in all three planes: a row of the Y plane is not 638 bytes and a row of the U/V planes is not 319 bytes. Compare data[2], data[1] and data[0].

If you need yuv420p data with no padding in any of the three components, you can allocate a buffer of w*h*3/2 bytes yourself (w and h being the real image width and height, assuming both are even) and copy the rows out of the decoded AVFrame's data[].
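A minimal plain-C sketch of that copy: it works row by row so that the padding at the end of each source row is skipped. The helper names are hypothetical; FFmpeg's own av_image_copy_plane() and av_image_copy_to_buffer() (libavutil/imgutils.h) do the same job. Chroma sizes are rounded up here, so the buffer size equals w*h*3/2 only when w and h are both even.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Copy `height` rows of `bytewidth` bytes each, skipping the padding at the
 * end of every source row. Same idea as FFmpeg's av_image_copy_plane(). */
static void copy_plane(uint8_t *dst, int dst_linesize,
                       const uint8_t *src, int src_linesize,
                       int bytewidth, int height)
{
    assert(bytewidth <= dst_linesize && bytewidth <= src_linesize);
    for (int y = 0; y < height; y++)
        memcpy(dst + (size_t)y * dst_linesize,
               src + (size_t)y * src_linesize, (size_t)bytewidth);
}

/* Bytes needed for unpadded YUV420P. Chroma planes round up for odd sizes,
 * so this equals w*h*3/2 only when w and h are both even. */
static size_t yuv420p_packed_size(int w, int h)
{
    size_t cw = (size_t)(w + 1) / 2, ch = (size_t)(h + 1) / 2;
    return (size_t)w * h + 2 * cw * ch;
}

/* Pack the planes described by data[]/linesize[] (e.g. a decoded AVFrame's)
 * into out, laid out as Y, then U, then V, with no padding. */
static void pack_yuv420p(uint8_t *out, uint8_t *const data[3],
                         const int linesize[3], int w, int h)
{
    int cw = (w + 1) / 2, ch = (h + 1) / 2;
    uint8_t *u = out + (size_t)w * h;
    uint8_t *v = u + (size_t)cw * ch;
    copy_plane(out, w, data[0], linesize[0], w, h);
    copy_plane(u, cw, data[1], linesize[1], cw, ch);
    copy_plane(v, cw, data[2], linesize[2], cw, ch);
}
```

With a decoded frame, out would be allocated with yuv420p_packed_size(frame->width, frame->height) bytes and filled with pack_yuv420p(out, frame->data, frame->linesize, frame->width, frame->height). For 638*272 the size is 260304 bytes, exactly 638*272*3/2.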

1、Allocating the buffer

① Method 1: native malloc

    uint8_t *yuv_buf = malloc(w * h * 3 / 2);  // <stdlib.h>; w and h even; release with free(yuv_buf)

② Method 2: FFmpeg's memory allocation functions

    // AVFrame that will hold the yuv420p data
    AVFrame *frame_yuv = av_frame_alloc();
    // Allocate a buffer for the converted yuv420p data with 1-byte alignment; the resolution is unchanged
    av_image_alloc(frame_yuv->data, frame_yuv->linesize,
                   video_decoder_ctx->width, video_decoder_ctx->height, AV_PIX_FMT_YUV420P, 1);
    // For encoding, the AVFrame needs the matching parameters
    frame_yuv->width = video_decoder_ctx->width;
    frame_yuv->height = video_decoder_ctx->height;
    frame_yuv->format = AV_PIX_FMT_YUV420P;
    // This buffer is not reference-counted: release it with av_freep(&frame_yuv->data[0])
    // before calling av_frame_free(&frame_yuv)

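③ Method 3: a raw pointer array plus FFmpeg's allocation function

    uint8_t *yuvbuf[4];   // av_image_alloc() writes four plane pointers, so this must be an array
    int linesize[4];
    av_image_alloc(yuvbuf, linesize, video_decoder_ctx->width, video_decoder_ctx->height,
                   AV_PIX_FMT_YUV420P, 1);
    // yuvbuf[0], yuvbuf[1], yuvbuf[2] point to Y, U, V; release with av_freep(&yuvbuf[0])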

④ Method 4: av_frame_get_buffer() (watch out for whether the memory is contiguous)

    AVFrame *frame_yuv = av_frame_alloc();
    frame_yuv->width = video_decoder_ctx->width;
    frame_yuv->height = video_decoder_ctx->height;
    frame_yuv->format = AV_PIX_FMT_YUV420P;
    // The frame's video (or audio) parameters must be set before this call
    av_frame_get_buffer(frame_yuv, 1);  // align = 1 here; align = 0 lets FFmpeg choose a suitable alignment

Note that av_frame_get_buffer(frame_yuv, 1) guarantees that each of the Y, U and V planes is 1-byte aligned and contiguous within itself (its rows carry no padding), but the three planes are not necessarily placed back to back in one contiguous block. For example, allocate the data planes of a 100*100 yuv420p image manually:

    AVFrame *frame_yuv = av_frame_alloc();
    frame_yuv->width = 100;
    frame_yuv->height = 100;
    frame_yuv->format = AV_PIX_FMT_YUV420P;
    av_frame_get_buffer(frame_yuv, 1);

In the author's test, the three plane pointers were ordered u < y < v, and the gaps between them equalled neither w*h nor w*h/2. The exact layout can differ between FFmpeg versions, so code should not assume that the planes are contiguous.

Note: of methods 2 and 3, one goes through an AVFrame and the other uses bare pointers. Although the buffer is accessed and managed differently, both use av_image_alloc(), which needs the array of plane pointers and an array to receive linesize.
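Because av_frame_get_buffer() does not promise that the planes sit back to back, code that wants to hand frame->data[0] to something expecting one flat yuv420p buffer should check first. A minimal sketch of such a check, assuming 8-bit YUV420P; yuv420p_is_tightly_packed() is a hypothetical helper, not an FFmpeg API.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Returns 1 if the YUV420P planes described by data[]/linesize[] form one
 * unpadded block (Y, then U, then V, rows exactly w or ceil(w/2) bytes),
 * so data[0] could be treated as a single flat buffer; 0 otherwise.
 * Illustrative helper only, not part of FFmpeg. */
static int yuv420p_is_tightly_packed(uint8_t *const data[3],
                                     const int linesize[3], int w, int h)
{
    assert(w > 0 && h > 0);
    int cw = (w + 1) / 2, ch = (h + 1) / 2;
    if (linesize[0] != w || linesize[1] != cw || linesize[2] != cw)
        return 0; /* at least one plane has row padding */
    return data[1] == data[0] + (size_t)w * h &&
           data[2] == data[1] + (size_t)cw * ch;
}
```

Buffers from methods 2 and 3 (av_image_alloc() with align = 1) would be expected to pass this check; when it fails, copy the planes into a separate flat buffer instead of reading past the end of data[0].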

边栏推荐

- GFS distributed file system

- Russian Foreign Ministry: Japan and South Korea's participation in the NATO summit affects security and stability in Asia

- Research notes I software engineering and calculation volume II (Chapter 1-7)

- Hardware and interface learning summary

- 【luogu P3295】萌萌哒(并查集)(倍增)

- MySQL之函数

- FFMPEG关键结构体——AVFormatContext

- NSSA area where OSPF is configured for Huawei equipment

- VBA fast switching sheet

- Gd32f4xx UIP protocol stack migration record

猜你喜欢

Senparc. Weixin. Sample. MP source code analysis

选择致敬持续奋斗背后的精神——对话威尔价值观【第四期】

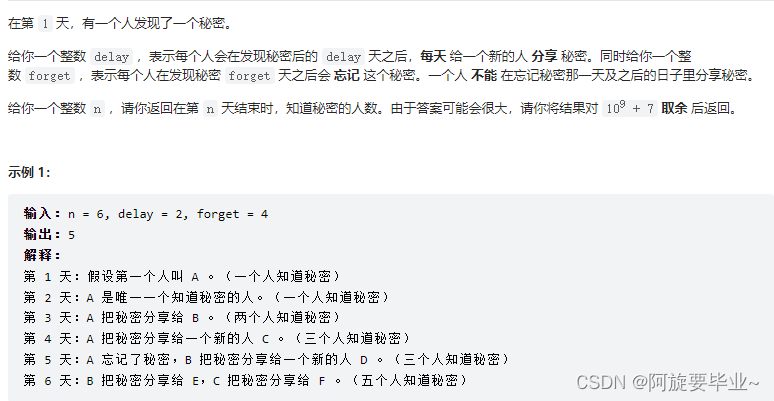

20220703 week race: number of people who know the secret - dynamic rules (problem solution)

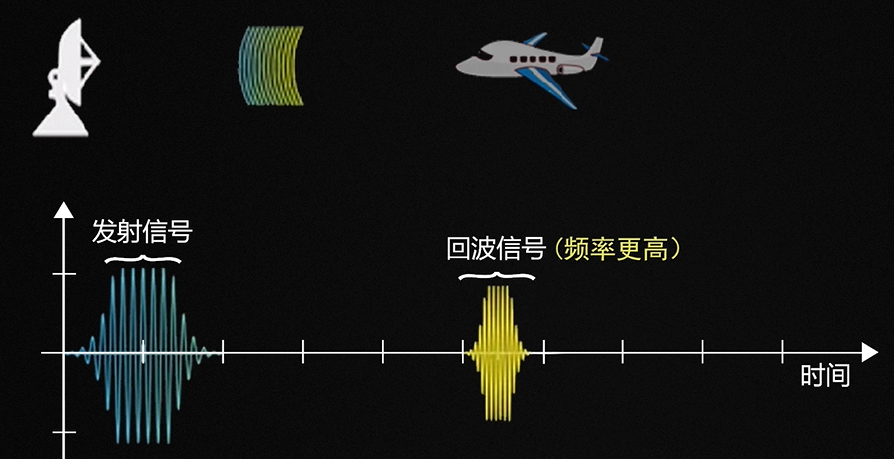

多普勒效应(多普勒频移)

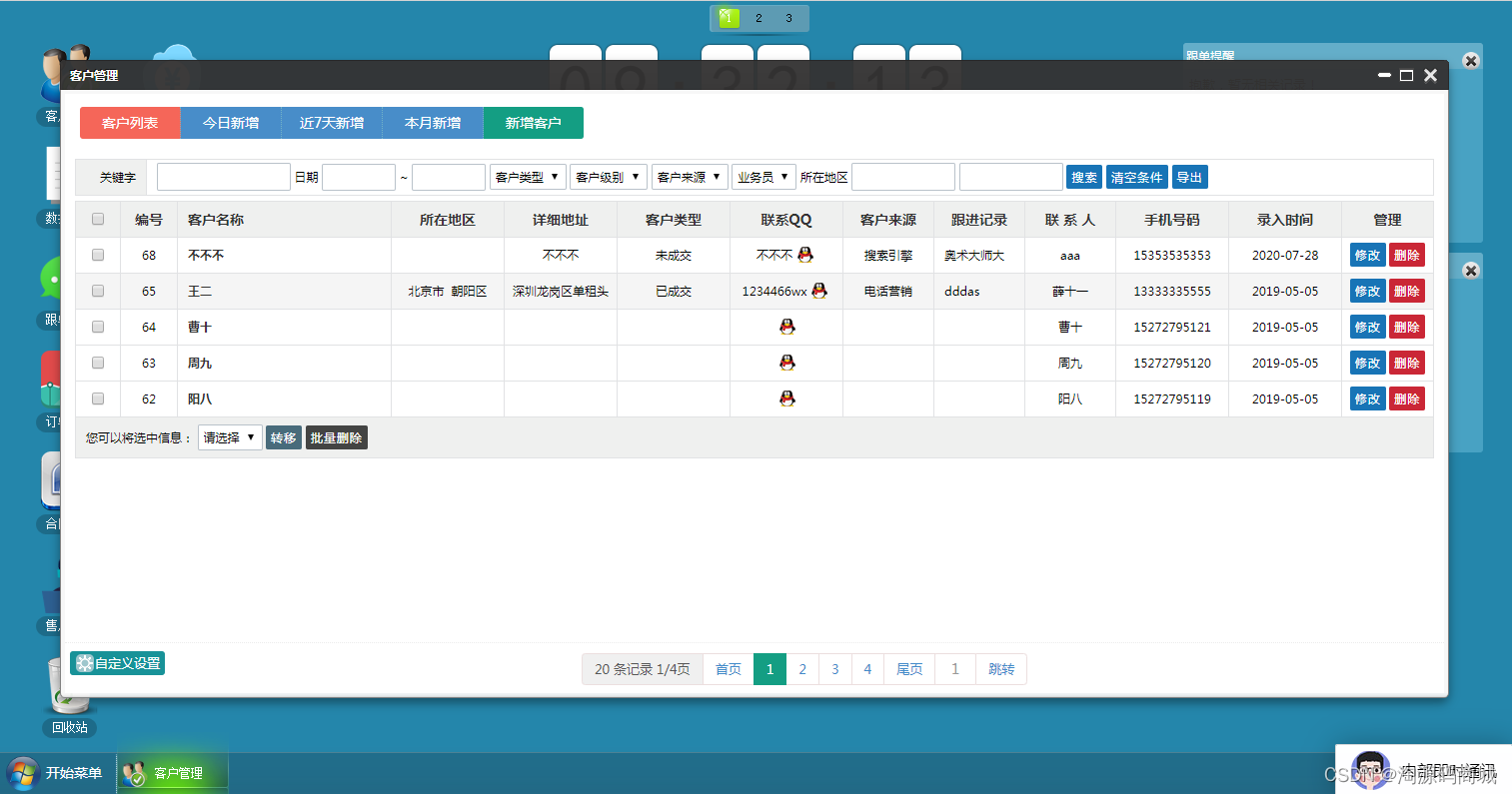

Open source CRM customer relationship system management system source code, free sharing

Bao Yan notes II software engineering and calculation volume II (Chapter 13-16)

单商户V4.4,初心未变,实力依旧!

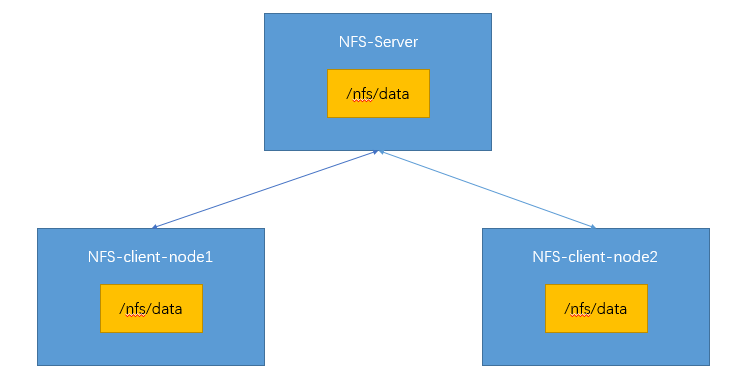

PV静态创建和动态创建

Use mapper: --- tkmapper

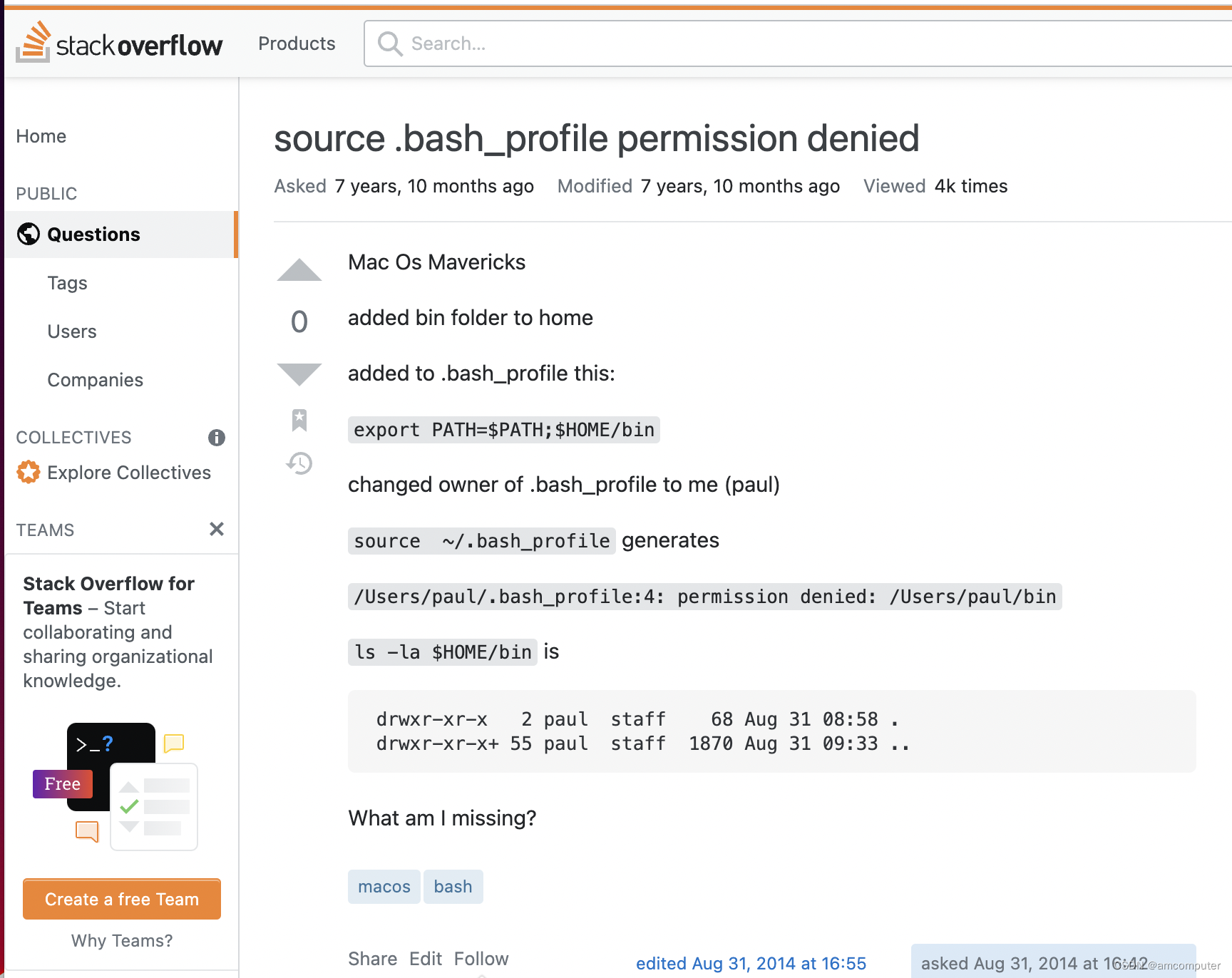

Permission problem: source bash_ profile permission denied

随机推荐

微信小程序---WXML 模板语法(附带笔记文档)

硬件及接口学习总结

[noi simulation] Anaid's tree (Mobius inversion, exponential generating function, Ehrlich sieve, virtual tree)

时区的区别及go语言的time库

C# 反射与Type

Asynchronous task Whenall timeout - Async task WhenAll with timeout

14 MySQL-视图

Fiddler Everywhere 3.2.1 Crack

Mathematical model Lotka Volterra

第16章 OAuth2AuthorizationRequestRedirectWebFilter源码解析

PADS ROUTER 使用技巧小记

Chapter 16 oauth2authorizationrequestredirectwebfilter source code analysis

从底层结构开始学习FPGA----FIFO IP核及其关键参数介绍

[Luogu p3295] mengmengda (parallel search) (double)

GFS distributed file system

How to rotate the synchronized / refreshed icon (EL icon refresh)

Single merchant v4.4 has the same original intention and strength!

Initialize your vector & initializer with a list_ List introduction

Russian Foreign Ministry: Japan and South Korea's participation in the NATO summit affects security and stability in Asia

上门预约服务类的App功能详解