
Scheduling system of kubernetes cluster

2022-07-05 04:05:00 【Fate in the Jianghu】


One. Introduction to kube-scheduler

1. Overview of kube-scheduler

1. The Kubernetes scheduler (kube-scheduler) is one of the core components of the Kubernetes control plane.

2. The scheduler runs in the control plane and assigns workloads (Pods) to the nodes of the Kubernetes cluster.

3. kube-scheduler selects the best node on which to run each Pod, based on Kubernetes scheduling principles and the configuration options we provide.

2. Goals of the Kubernetes scheduling system

1. Maximize resource utilization

2. Satisfy user-specified scheduling requirements

3. Satisfy custom priority requirements

4. Schedule efficiently, making fast decisions based on available resources

5. Adjust the scheduling strategy as the load changes

6. Take fairness into account at all levels

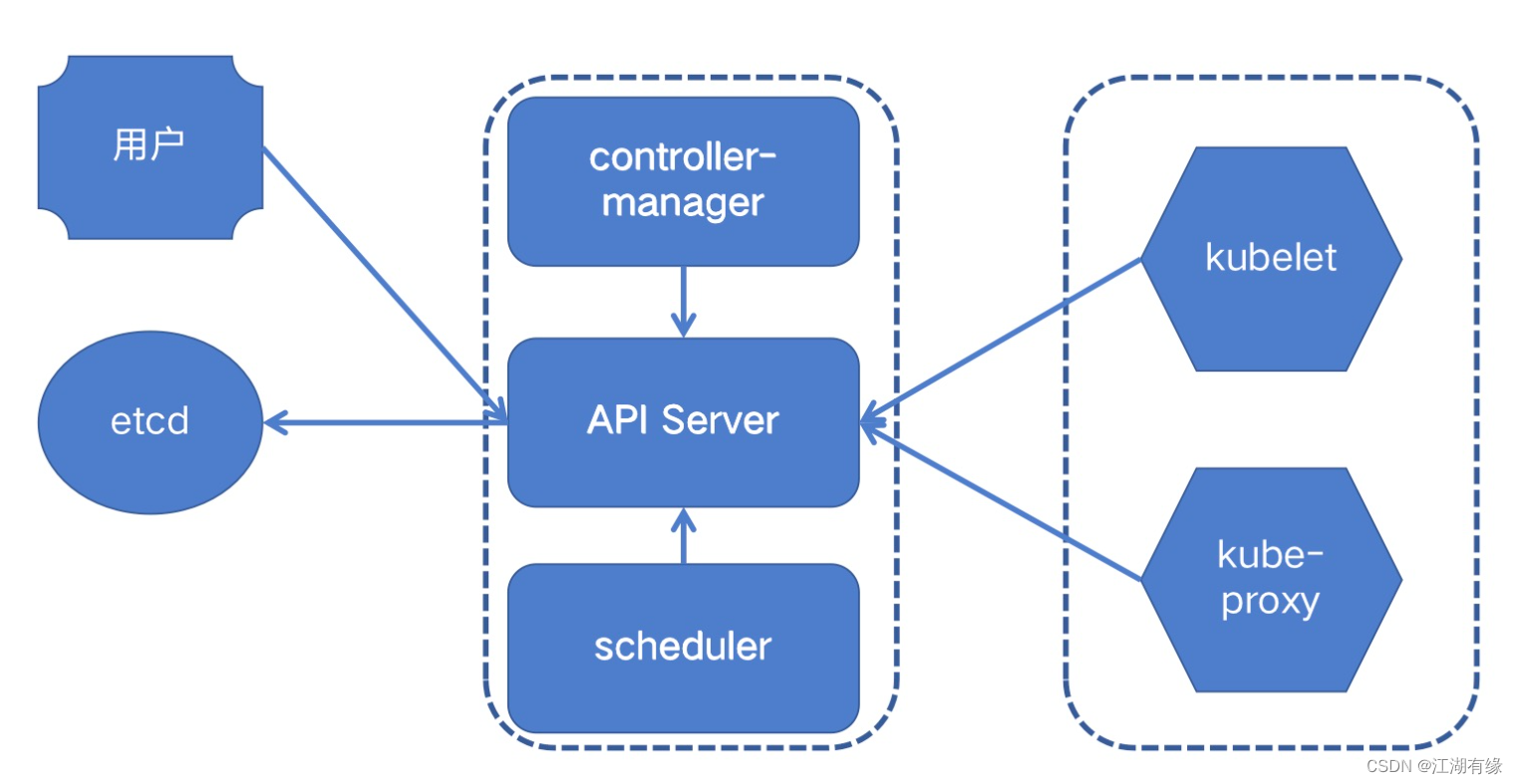

3. Kubernetes component diagram

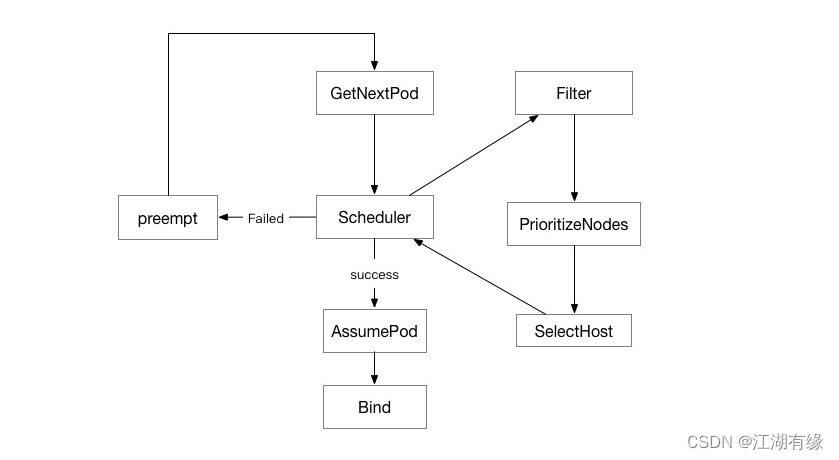

4. How the scheduler works (diagram)

Two. Checking the status of the Kubernetes cluster

```
[root@k8s-master ~]# kubectl get nodes -owide
NAME         STATUS   ROLES                  AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION          CONTAINER-RUNTIME
k8s-master   Ready    control-plane,master   39h   v1.23.1   192.168.3.201   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   containerd://1.6.6
k8s-node01   Ready    worker                 39h   v1.23.1   192.168.3.202   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   containerd://1.6.6
k8s-node02   Ready    <none>                 39h   v1.23.1   192.168.3.203   <none>        CentOS Linux 7 (Core)   3.10.0-957.el7.x86_64   containerd://1.6.6
```

Three. How kube-scheduler selects a node

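1. Filtering (pre-selection)

The filtering phase excludes nodes that do not satisfy the Pod's requirements. For example, if a container in the Pod requests the following resources, any node whose unrequested allocatable capacity is less than 1 CPU or 1 GiB of memory is excluded: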

```yaml
# Resources requested by a container in the Pod
resources:
  requests:        # the field name is "requests" (plural)
    cpu: 1
    memory: 1Gi
```
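The filtering step can be sketched in a few lines of Python. This is a minimal model, not kube-scheduler's code: the Node class, the fits and filter_nodes helpers and the node capacities below are hypothetical, and only CPU and memory requests are checked, whereas the real scheduler runs many filter plugins (node selectors, taints, ports, volumes and so on).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Node:
    name: str
    alloc_cpu_m: int       # allocatable CPU in millicores (hypothetical values)
    alloc_mem_mi: int      # allocatable memory in MiB
    req_cpu_m: int = 0     # CPU already requested by Pods on this node
    req_mem_mi: int = 0    # memory already requested by Pods on this node


def fits(node: Node, cpu_m: int, mem_mi: int) -> bool:
    """True if the node's unrequested capacity covers the Pod's requests."""
    return (node.alloc_cpu_m - node.req_cpu_m >= cpu_m
            and node.alloc_mem_mi - node.req_mem_mi >= mem_mi)


def filter_nodes(nodes: List[Node], cpu_m: int, mem_mi: int) -> List[Node]:
    """Keep only the nodes that can accommodate the Pod."""
    return [n for n in nodes if fits(n, cpu_m, mem_mi)]


if __name__ == "__main__":
    nodes = [
        Node("k8s-node01", 2000, 4096, req_cpu_m=1500, req_mem_mi=1024),
        Node("k8s-node02", 4000, 8192, req_cpu_m=1000, req_mem_mi=2048),
    ]
    # cpu: 1 is 1000 millicores, memory: 1Gi is 1024 MiB
    print([n.name for n in filter_nodes(nodes, 1000, 1024)])  # prints ['k8s-node02']
```

Here k8s-node01 has only 500m of CPU left unrequested, so it is filtered out before any scoring happens.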

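2. Scoring (optimization)

The scoring phase assigns a score to each node that passed filtering.

Factors that can be taken into account when scoring include:

1. Resources already occupied on the node

2. The number of Pods on the node

3. The CPU load on the node

4. The memory usage on the node

...

Note that the default kube-scheduler plugins compute resource-based scores from the resource requests of the Pods assigned to a node, not from live CPU or memory metrics.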
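A scoring function can be sketched as follows. It is loosely modeled on the "least allocated" idea (prefer the node that keeps the most capacity free after the Pod is placed) and simplified: the function name, the equal CPU/memory weighting and the node numbers are assumptions for illustration, not kube-scheduler's exact formula.

```python
from typing import Dict, Tuple


def least_allocated_score(alloc_cpu_m: int, alloc_mem_mi: int,
                          used_cpu_m: int, used_mem_mi: int,
                          pod_cpu_m: int, pod_mem_mi: int) -> int:
    """Score 0-100: higher means more capacity is left after placing the Pod."""
    def free_ratio(alloc: int, used: int, pod: int) -> float:
        # Fraction of allocatable capacity still unrequested once the Pod lands
        return max(alloc - used - pod, 0) / alloc

    cpu = free_ratio(alloc_cpu_m, used_cpu_m, pod_cpu_m)
    mem = free_ratio(alloc_mem_mi, used_mem_mi, pod_mem_mi)
    return int(100 * (cpu + mem) / 2)   # CPU and memory weighted equally


if __name__ == "__main__":
    # (allocatable CPU m, allocatable MiB, requested CPU m, requested MiB), hypothetical
    nodes: Dict[str, Tuple[int, int, int, int]] = {
        "k8s-node01": (4000, 8192, 3000, 1024),
        "k8s-node02": (4000, 8192, 1000, 2048),
    }
    scores = {name: least_allocated_score(*v, 1000, 1024) for name, v in nodes.items()}
    print(scores)  # prints {'k8s-node01': 37, 'k8s-node02': 56}
```

Real scheduling combines several such scores (from different plugins, each with a weight) into one total per node.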

3. Final selection

In the final selection phase, the nodes are ranked by score and the Pod is scheduled to the node with the highest score. If several nodes share the highest score, kube-scheduler picks one of them at random.
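The selection step amounts to "take the maximum, break ties randomly". A minimal sketch, assuming scores have already been computed per node; select_host is a hypothetical helper name, and the empty-input case models a Pod that no node could accept.

```python
import random
from typing import Dict, Optional


def select_host(scores: Dict[str, int], rng: Optional[random.Random] = None) -> str:
    """Return a node with the highest score, choosing randomly among ties."""
    if not scores:
        # No node survived filtering: the Pod would stay Pending
        raise ValueError("no feasible nodes")
    rng = rng or random.Random()
    best = max(scores.values())
    top = sorted(name for name, s in scores.items() if s == best)
    return rng.choice(top)


if __name__ == "__main__":
    print(select_host({"k8s-node01": 37, "k8s-node02": 56}))  # prints k8s-node02
```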

Four. Influencing scheduling: label selectors

1. Introduction to label selectors

1. Add labels to specific nodes

2. Specify in the Pod that it must be scheduled onto nodes carrying particular labels

2. Writing a label selector in YAML

① View the labels on all nodes

```
[root@k8s-master ~]# kubectl get nodes --show-labels
NAME         STATUS   ROLES                  AGE   VERSION   LABELS
k8s-master   Ready    control-plane,master   40h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-master,kubernetes.io/os=linux,node-role.kubernetes.io/control-plane=,node-role.kubernetes.io/master=,node.kubernetes.io/exclude-from-external-load-balancers=
k8s-node01   Ready    worker                 40h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node01,kubernetes.io/os=linux,node-role.kubernetes.io/worker=
k8s-node02   Ready    <none>                 40h   v1.23.1   beta.kubernetes.io/arch=amd64,beta.kubernetes.io/os=linux,kubernetes.io/arch=amd64,kubernetes.io/hostname=k8s-node02,kubernetes.io/os=linux,type=dell730
```

② Select a label in the YAML file

```yaml
volumes:
- name: rootdir
  hostPath:
    path: /data/mysql
nodeSelector:
  #disk: ssd
  kubernetes.io/hostname: k8s-node01   # built-in labels that are unique to each node can also be used
containers:
- name: mysql
  image: mysql:5.7
  volumeMounts:
  - name: rootdir
    mountPath: /var/lib/mysql
```
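The matching rule behind nodeSelector is simple: a node qualifies only if it carries every key in the selector with exactly the same value. The sketch below illustrates this using (a subset of) the labels from the --show-labels output above; matches_node_selector is a hypothetical helper, not a Kubernetes API.

```python
from typing import Dict


def matches_node_selector(node_labels: Dict[str, str], node_selector: Dict[str, str]) -> bool:
    """True if the node has every selector key with the same value (logical AND)."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


if __name__ == "__main__":
    node01 = {"kubernetes.io/hostname": "k8s-node01", "node-role.kubernetes.io/worker": ""}
    node02 = {"kubernetes.io/hostname": "k8s-node02", "type": "dell730"}
    selector = {"kubernetes.io/hostname": "k8s-node01"}
    print(matches_node_selector(node01, selector))             # prints True
    print(matches_node_selector(node02, selector))             # prints False
    print(matches_node_selector(node02, {"type": "dell730"}))  # prints True
```

Because all keys must match, adding more entries to nodeSelector can only narrow the set of eligible nodes.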

3. A complete example that runs a Pod

cat ./label.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  creationTimestamp: null
  labels:
    app: mysql
  name: mysql
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mysql
  strategy: {}
  template:
    metadata:
      creationTimestamp: null
      labels:
        app: mysql
    spec:
      volumes:
      - name: datadir
        hostPath:
          path: /data/mysql
      nodeSelector:
        disk: ssd
        nodeType: cpu
      containers:
      - image: mysql:5.7
        name: mysql
        env:
        - name: MYSQL_ROOT_PASSWORD
          value: "redhat"
        volumeMounts:
        - name: datadir
          mountPath: /var/lib/mysql
```

4. Add labels to the k8s-node02 node

```
[root@k8s-master ~]# kubectl label nodes k8s-node02 disk=ssd
node/k8s-node02 labeled
[root@k8s-master ~]# kubectl label nodes k8s-node02 nodeType=cpu
node/k8s-node02 labeled
```

5. Create the Pod

```
[root@k8s-master ~]# kubectl apply -f ./label.yaml
deployment.apps/mysql created
```

6. Check which node the Pod is running on

```
[root@k8s-master ~]# kubectl get pod -owide |grep node02
elasticsearch-master-0             1/1   Running   2 (7m54s ago)   29h   10.244.58.224   k8s-node02   <none>   <none>
fb-filebeat-lj5p7                  1/1   Running   3 (7m54s ago)   28h   10.244.58.221   k8s-node02   <none>   <none>
kb-kibana-5c46dbc5dd-htw7n         1/1   Running   1 (7m54s ago)   25h   10.244.58.222   k8s-node02   <none>   <none>
metric-metricbeat-5h5g5            1/1   Running   2 (7m53s ago)   27h   192.168.3.203   k8s-node02   <none>   <none>
metric-metricbeat-758c5c674-ldgg4  1/1   Running   2 (7m54s ago)   27h   10.244.58.225   k8s-node02   <none>   <none>
mysql-59c6fc696d-qrjx9             1/1   Running   0               13m   10.244.58.223   k8s-node02   <none>   <none>
```

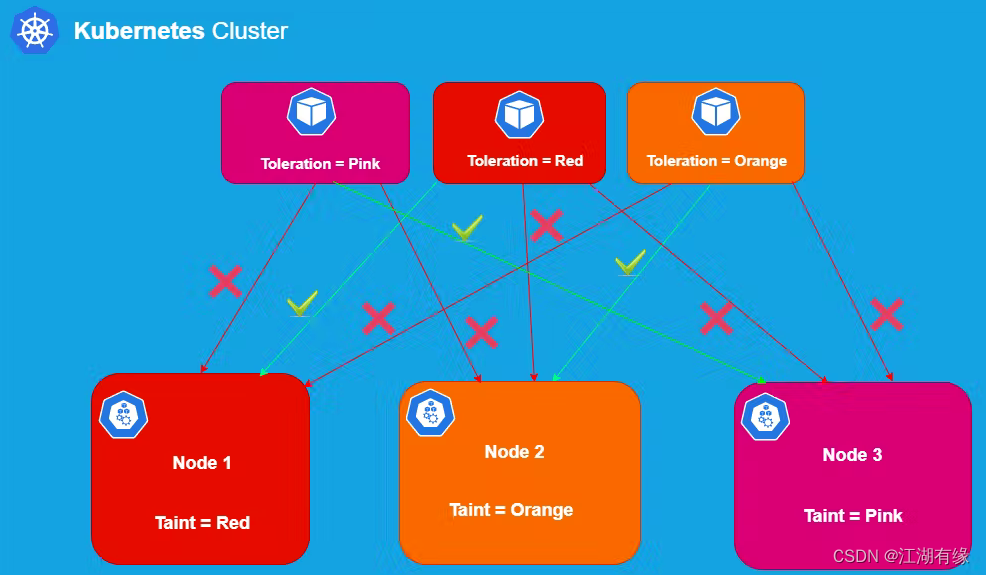

Five. Influencing scheduling: taints

1. Introduction to taints

Taint: once a node carries a taint, by default no Pod will be scheduled onto it unless the Pod has a matching toleration. Even if a Pod's label selector explicitly selects this node, the Pod will not run there; instead it stays in the Pending state.

2. Taint effects

* PreferNoSchedule: try not to schedule Pods onto the node (the effect name is case-sensitive).

* NoSchedule: do not schedule.

Pods that were already running on the node before the taint was added are not affected: new Pods will not be scheduled onto the node, but running Pods are not evicted.

* NoExecute: do not schedule, and evict.

Pods that were already running on the node before the taint was added are evicted immediately, unless they tolerate the taint.
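The three effects can be summarized, for a Pod that tolerates none of the node's taints, as two yes/no questions: may new Pods be scheduled here, and may already-running Pods stay? The sketch below encodes the list above; taint_outcome is a hypothetical helper, and it treats PreferNoSchedule as a soft preference that does not block scheduling (in the real scheduler it only lowers the node's score).

```python
from typing import Iterable, Tuple

VALID_EFFECTS = {"PreferNoSchedule", "NoSchedule", "NoExecute"}


def taint_outcome(effects: Iterable[str]) -> Tuple[bool, bool]:
    """For an untolerating Pod: (new Pods may be scheduled, running Pods keep running)."""
    effects = list(effects)
    for effect in effects:
        if effect not in VALID_EFFECTS:
            # Effect names are case-sensitive, e.g. "preferNoSchedule" is rejected
            raise ValueError(f"unknown taint effect: {effect!r}")
    schedulable = not any(e in ("NoSchedule", "NoExecute") for e in effects)
    keeps_running = "NoExecute" not in effects
    return schedulable, keeps_running


if __name__ == "__main__":
    for e in ("PreferNoSchedule", "NoSchedule", "NoExecute"):
        print(e, taint_outcome([e]))
```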

3. Add taints to a worker node

```
[root@k8s-master ~]# kubectl taint node k8s-node02 key1=value:NoSchedule
node/k8s-node02 tainted
[root@k8s-master ~]# kubectl taint node k8s-node02 key2=value:NoExecute
node/k8s-node02 tainted
```

4. Remove a taint from a worker node

```
kubectl taint node k8s-node02 key1-
```
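The taint arguments used above follow the pattern KEY[=VALUE]:EFFECT when adding a taint, and KEY[=VALUE][:EFFECT] with a trailing "-" when removing one. The parser below is a simplified sketch of that format as we understand it, written only to make the syntax explicit; it is not kubectl's own implementation, and parse_taint_arg and TaintArg are hypothetical names.

```python
from typing import NamedTuple, Optional

VALID_EFFECTS = {"NoSchedule", "PreferNoSchedule", "NoExecute"}


class TaintArg(NamedTuple):
    key: str
    value: str
    effect: Optional[str]  # None when a removal argument omits the effect
    remove: bool


def parse_taint_arg(arg: str) -> TaintArg:
    """Parse 'key=value:Effect' (add) or 'key[=value][:Effect]-' (remove)."""
    remove = arg.endswith("-")
    body = arg[:-1] if remove else arg
    if ":" in body:
        key_value, effect = body.rsplit(":", 1)
    else:
        key_value, effect = body, None
    key, _, value = key_value.partition("=")
    if not key:
        raise ValueError(f"missing taint key in {arg!r}")
    if effect is None and not remove:
        raise ValueError(f"adding a taint requires an effect: {arg!r}")
    if effect is not None and effect not in VALID_EFFECTS:
        raise ValueError(f"unknown taint effect {effect!r} in {arg!r}")
    return TaintArg(key, value, effect, remove)


if __name__ == "__main__":
    print(parse_taint_arg("key1=value:NoSchedule"))
    print(parse_taint_arg("key1-"))  # removes every taint with key "key1"
```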

5. Check the taints on a node

```
[root@k8s-master ~]# kubectl describe nodes k8s-node02 |grep -i tain -A2 -B2
                    nodeType=cpu
                    type=dell730
Annotations:        kubeadm.alpha.kubernetes.io/cri-socket: /run/containerd/containerd.sock
                    node.alpha.kubernetes.io/ttl: 0
                    projectcalico.org/IPv4Address: 192.168.3.203/24
--
                    volumes.kubernetes.io/controller-managed-attach-detach: true
CreationTimestamp:  Sun, 03 Jul 2022 01:14:11 +0800
Taints:             key2=value:NoExecute
                    key1=value:NoSchedule
Unschedulable:      false
--
  Operating System:           linux
  Architecture:               amd64
  Container Runtime Version:  containerd://1.6.6
  Kubelet Version:            v1.23.1
  Kube-Proxy Version:         v1.23.1
```

Six. Influencing scheduling: tolerations

1. Introduction to tolerations

1. Toleration: when a Pod tolerates the taints on a node, that does not mean the Pod will necessarily be scheduled onto that node. It only means that, for this Pod, the node is treated the same as any other node without taints.

2. A Pod can tolerate multiple taints. When a node has several taints, the node behaves like an untainted node for a Pod only if the Pod tolerates every taint on it.
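The matching rules can be sketched as follows: a toleration matches a taint when the effects agree (an empty toleration effect matches any effect) and either the operator is Equal with the same key and value, or the operator is Exists with the same key (an empty key with Exists matches every key). This is a simplified model for illustration; the Taint and Toleration classes and the tolerates/tolerates_all helpers are hypothetical, and the example taints are the ones placed on k8s-node02 earlier.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Taint:
    key: str
    value: str
    effect: str              # "NoSchedule", "PreferNoSchedule" or "NoExecute"


@dataclass(frozen=True)
class Toleration:
    key: str = ""
    operator: str = "Equal"  # "Equal" (default) or "Exists"
    value: str = ""
    effect: str = ""         # empty effect matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """Does a single toleration match a single taint?"""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.operator == "Exists":
        # Exists ignores the value; an empty key matches every taint key
        return tol.key in ("", taint.key)
    # Equal: key and value must both match
    return tol.key == taint.key and tol.value == taint.value


def tolerates_all(tolerations: Iterable[Toleration], taints: Iterable[Taint]) -> bool:
    """True only if every taint on the node is matched by at least one toleration."""
    tols = list(tolerations)
    return all(any(tolerates(tol, t) for tol in tols) for t in taints)


if __name__ == "__main__":
    node02 = [Taint("key2", "value", "NoExecute"), Taint("key1", "value", "NoSchedule")]
    only_key1 = [Toleration("key1", "Equal", "value", "NoSchedule")]
    both = only_key1 + [Toleration("key2", "Exists", effect="NoExecute")]
    print(tolerates_all(only_key1, node02))  # prints False: key2 is not tolerated
    print(tolerates_all(both, node02))       # prints True
```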

2. How taints and tolerations affect Pod placement

3. Using tolerations in a YAML file

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx
  labels:
    app: nginx
spec:
  containers:
  - name: nginx
    image: nginx:1.5.2
  tolerations:
  - key: "check"
    operator: "Equal"
    value: "xtaint"
    effect: "NoExecute"
    tolerationSeconds: 3600
```

4. Toleration parameters explained

tolerations: -----------> the list of tolerations

- key: "check" -----------> the taint key being tolerated

operator: "Equal" -----------> the operator "equal to": both the key and the value must match the taint. The other operator, "Exists", matches any value of the key and takes no value.

value: "xtaint" -----------> the value of the tolerated key

effect: "NoExecute" -----------> the effect of the tolerated taint

tolerationSeconds: 3600 -----------> tolerate for 3600 seconds. When a matching NoExecute taint is added to the node, this Pod is not evicted immediately like an ordinary Pod; it keeps running for 3600 seconds and is then evicted.
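The timing rule for a Pod already running on a node when a NoExecute taint is added can be written down directly: without a matching toleration it is evicted at once, with a matching toleration but no tolerationSeconds it stays bound indefinitely, and with tolerationSeconds it is evicted after that many seconds. The sketch below assumes that model; eviction_time is a hypothetical helper, and clamping zero or negative values to immediate eviction is our reading of how such values are treated.

```python
from datetime import datetime, timedelta
from typing import Optional


def eviction_time(taint_added_at: datetime, tolerated: bool,
                  toleration_seconds: Optional[int]) -> Optional[datetime]:
    """When a running Pod is evicted after a NoExecute taint is added (None = never)."""
    if not tolerated:
        return taint_added_at                  # no matching toleration: evicted immediately
    if toleration_seconds is None:
        return None                            # tolerated with no time limit: stays bound
    return taint_added_at + timedelta(seconds=max(toleration_seconds, 0))


if __name__ == "__main__":
    t = datetime(2022, 7, 5, 4, 5, 0)
    print(eviction_time(t, True, 3600))  # prints 2022-07-05 05:05:00
```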

边栏推荐

- [C language] address book - dynamic and static implementation

- 面试字节,过关斩将直接干到 3 面,结果找了个架构师来吊打我?

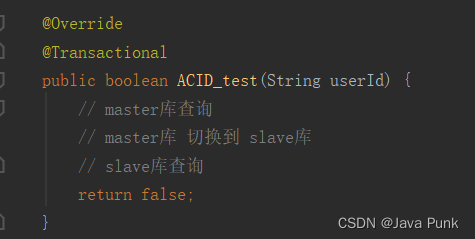

- @The problem of cross database query invalidation caused by transactional annotation

- About the recent experience of writing questions

- Test d'automatisation de l'interface utilisateur télécharger manuellement le pilote du navigateur à partir de maintenant

- Threejs loads the city obj model, loads the character gltf model, and tweetjs realizes the movement of characters according to the planned route

- Why is there a reincarnation of 60 years instead of 120 years in the tiangan dizhi chronology

- Threejs realizes rain, snow, overcast, sunny, flame

- MindFusion. Virtual Keyboard for WPF

- 快手、抖音、视频号交战内容付费

猜你喜欢

Web components series (VII) -- life cycle of custom components

Common features of ES6

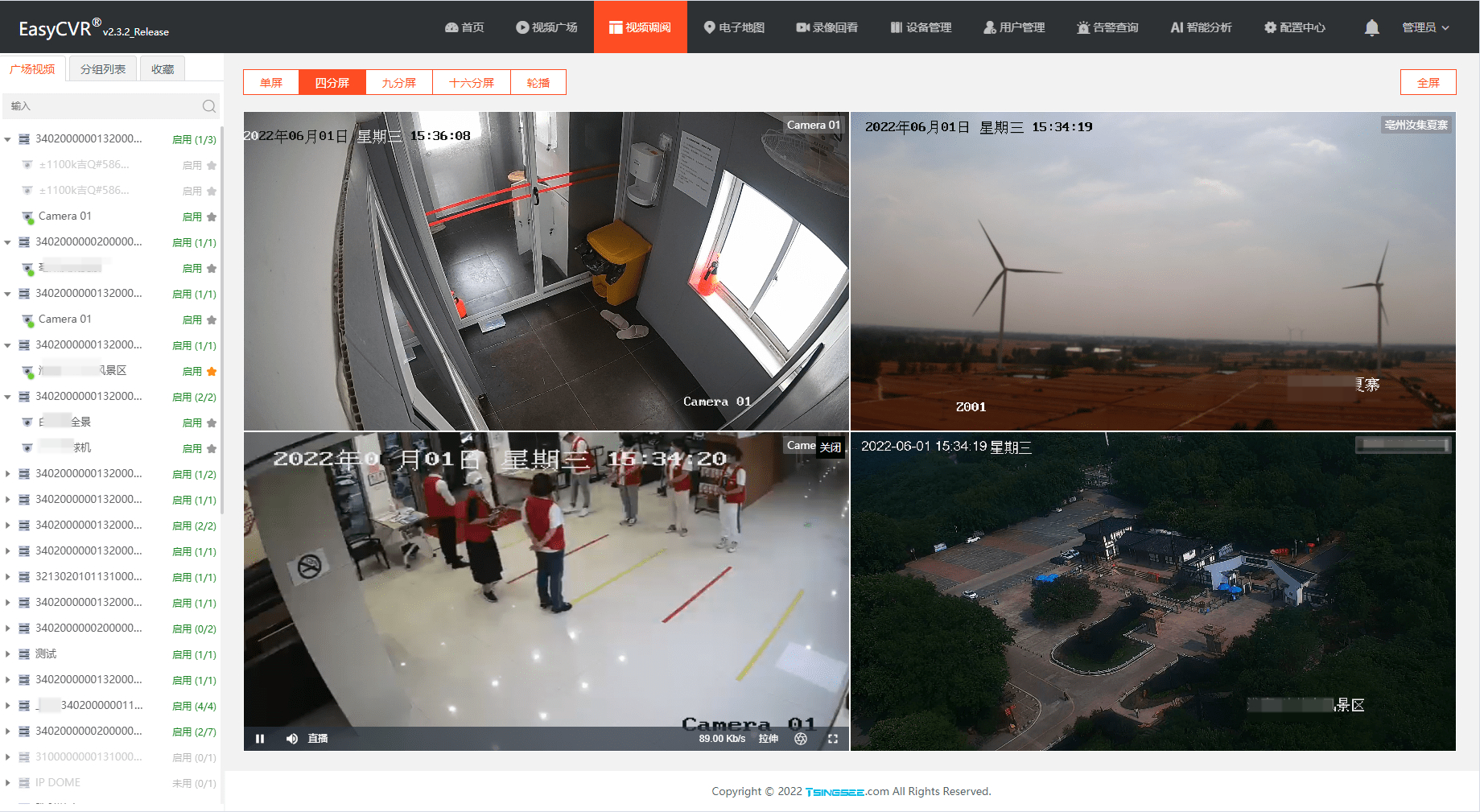

EasyCVR平台出现WebRTC协议视频播放不了是什么原因?

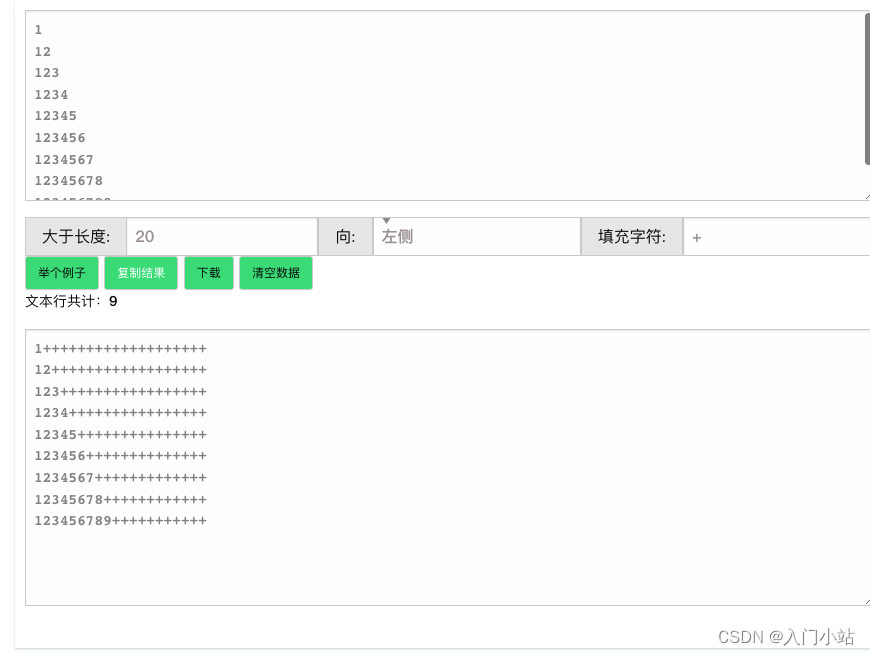

Online text line fixed length fill tool

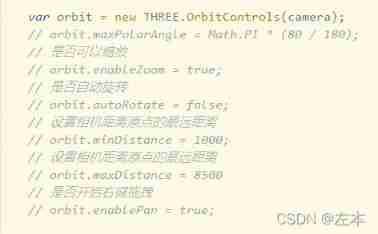

Threejs Internet of things, 3D visualization of farm (III) model display, track controller setting, model moving along the route, model adding frame, custom style display label, click the model to obt

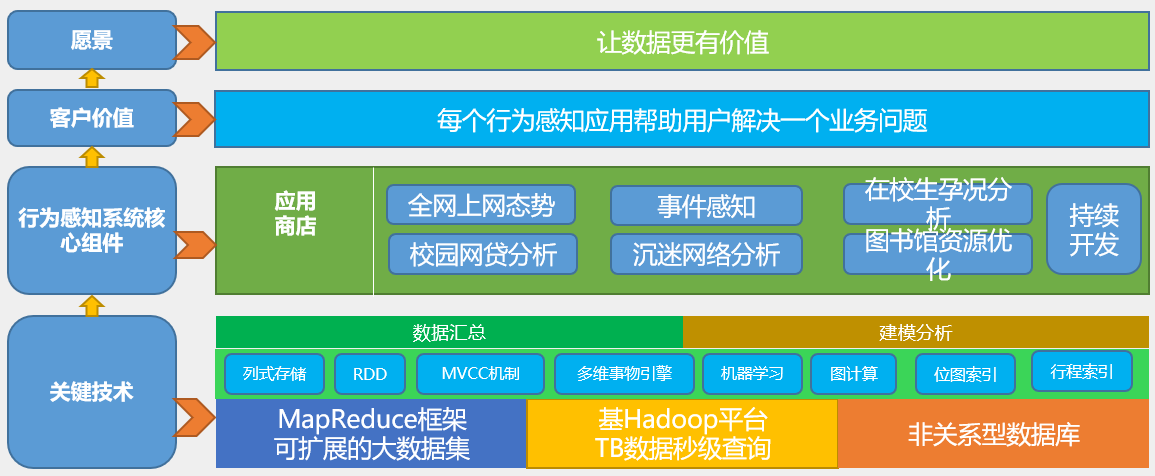

行为感知系统

Basic function learning 02

@The problem of cross database query invalidation caused by transactional annotation

![[C language] address book - dynamic and static implementation](/img/eb/07e7a32a172e5ae41457cf8a49c130.jpg)

[C language] address book - dynamic and static implementation

Special Edition: spreadjs v15.1 vs spreadjs v15.0

随机推荐

The new project Galaxy token just announced by coinlist is gal

Why can't all browsers on my computer open web pages

UI automation test farewell to manual download of browser driver

我国算力规模排名全球第二:计算正向智算跨越

灵魂三问:什么是接口测试,接口测试怎么玩,接口自动化测试怎么玩?

[brush questions] BFS topic selection

How does the applet solve the rendering layer network layer error?

Use object composition in preference to class inheritance

Deflocculant aminoiodotide eye drops

Online sql to excel (xls/xlsx) tool

Wechat applet development process (with mind map)

Redis source code analysis: redis cluster

Number of possible stack order types of stack order with length n

mysql的七种join连接查询

Analysis of glibc strlen implementation mode

C语言课设:影院售票管理系统

Rome chain analysis

Threejs factory model 3DMAX model obj+mtl format, source file download

[array]566 Reshape the matrix - simple

基于TCP的移动端IM即时通讯开发仍然需要心跳保活