
About LDA model

2022-07-05 12:39:00 【NLP journey】

Recently I have been studying recommendation systems, and one of the models involved is a latent topic model called LDA. I read many blogs, materials, and papers. For someone with a weak math background like me, I now understand roughly what it is. I am writing it down because summarizing has always been a good way for me to learn, and I hope it gives later readers at least a little insight.

Setting the tedious mathematics aside, what is this model actually for? Many materials and blog posts describe it only in general terms. After reading them my head was full of complex, hard-to-follow formulas, and I still did not know what the model was for. So here is my own understanding.

For a computer program, it helps to be clear about the input, the intermediate process, and the output. The input of LDA is a corpus plus the model's initial parameter settings. Concretely, the corpus is a set of documents, for example several txt files that each contain one article. The intermediate process trains the parameters of the LDA model on that corpus. The trained model can then be applied to a document and outputs that document's topic distribution, that is, the probability that the document belongs to each topic.

As with other statistical learning methods, LDA is trained first, and the trained model is then used for analysis and prediction. Unlike supervised methods, however, LDA needs no topic labels in the training corpus: it is an unsupervised model.
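The input side can be made concrete with a small example. Below is a minimal Python sketch that turns a few raw documents, standing in for the txt files, into lists of integer word ids, the form that LDA trainers usually consume. The helper name `build_corpus` and the naive lowercase whitespace tokenization are assumptions for illustration only. Real pipelines typically also remove stop words and rare terms.

```python
from typing import Dict, List, Tuple


def build_corpus(texts: List[str]) -> Tuple[List[List[int]], Dict[str, int]]:
    """Map each document to a list of integer word ids (hypothetical helper).

    Tokenization is a naive lowercase whitespace split, an assumption made
    only to keep the sketch short.
    """
    vocab: Dict[str, int] = {}
    docs: List[List[int]] = []
    for text in texts:
        ids: List[int] = []
        for token in text.lower().split():
            # Assign the next free id the first time a word is seen.
            if token not in vocab:
                vocab[token] = len(vocab)
            ids.append(vocab[token])
        docs.append(ids)
    return docs, vocab


if __name__ == "__main__":
    docs, vocab = build_corpus(["apple banana apple", "banana cherry"])
    print(docs)   # [[0, 1, 0], [1, 2]]
    print(vocab)  # {'apple': 0, 'banana': 1, 'cherry': 2}
```

Training then takes `docs`, the vocabulary size `len(vocab)`, the number of topics, and the two Dirichlet parameters.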

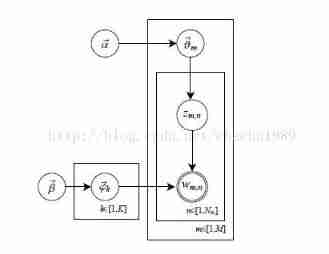

Although the process above is not complicated, the model as a whole involves a lot of mathematics. It is easy to get dizzy if you do not understand what each piece is for. The main pieces are the Dirichlet-multinomial conjugate pair, the LDA generative process, and the Gibbs sampling method. The LDA generative process is usually drawn as a probabilistic graphical model.
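The Dirichlet and multinomial distributions are paired because they are conjugate. Suppose a probability vector p has a Dirichlet prior, and we then observe multinomial counts drawn with parameter p. The posterior over p is again Dirichlet, with the observed counts simply added to the prior parameters. The formula below uses standard textbook notation for a K-dimensional vector with N = n_1 + … + n_K; the notation is not taken from the original post.

```latex
p \sim \mathrm{Dir}(\alpha_1,\dots,\alpha_K), \qquad
(n_1,\dots,n_K) \mid p \sim \mathrm{Mult}(N, p)
\;\Longrightarrow\;
p \mid (n_1,\dots,n_K) \sim \mathrm{Dir}(\alpha_1 + n_1,\dots,\alpha_K + n_K),
\qquad
\mathbb{E}[p_k \mid n_1,\dots,n_K] = \frac{n_k + \alpha_k}{N + \sum_{j=1}^{K} \alpha_j}.
```

This "counts plus prior" form explains why Gibbs sampling for LDA can work with nothing more than topic counts.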

From the probabilistic graphical model you can imagine the following process. Suppose a strange writer is writing an article. Before writing each word, it first picks a topic for that word. The topic is drawn from a multinomial distribution. You can think of this distribution as a many-sided die in which each face is a topic. That multinomial distribution is itself drawn from a Dirichlet distribution.

This is the upper part of the graph. The Dirichlet distribution with parameter α produces the document's topic distribution θ_m, one die per document. Rolling that die gives the topic z_{m,n} of the n-th word in document m.

Choosing the word from the topic works the same way. A Dirichlet distribution with parameter β produces a topic-word multinomial distribution φ_k, one die per topic. The word w_{m,n} is then rolled with the die of topic z_{m,n}.
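The writer's process can be simulated directly. Below is a minimal Python sketch. It assumes symmetric priors (one scalar α and one scalar β) and a fixed document length, and the function names are hypothetical. Each Dirichlet draw is obtained by normalizing independent Gamma(α_i, 1) draws, which is a standard construction.

```python
import random
from typing import List, Tuple


def sample_dirichlet(alphas: List[float], rng: random.Random) -> List[float]:
    """Draw from Dirichlet(alphas) by normalizing independent Gamma(alpha_i, 1) draws."""
    draws = [rng.gammavariate(a, 1.0) for a in alphas]
    total = sum(draws)
    return [d / total for d in draws]


def generate_corpus(
    num_docs: int,
    doc_len: int,
    num_topics: int,
    vocab_size: int,
    alpha: float,
    beta: float,
    seed: int = 0,
) -> Tuple[List[List[int]], List[List[int]], List[List[float]], List[List[float]]]:
    """Simulate the LDA "strange writer" with symmetric alpha and beta (assumed).

    Returns (docs, topic_assignments, theta, phi).
    """
    rng = random.Random(seed)
    # One topic-word die phi_k per topic, each drawn from Dirichlet(beta).
    phi = [sample_dirichlet([beta] * vocab_size, rng) for _ in range(num_topics)]
    docs, assignments, theta = [], [], []
    for _ in range(num_docs):
        # One document-topic die theta_m per document, drawn from Dirichlet(alpha).
        theta_m = sample_dirichlet([alpha] * num_topics, rng)
        words, topics = [], []
        for _ in range(doc_len):
            # Roll the document's topic die to get z_{m,n} ...
            z = rng.choices(range(num_topics), weights=theta_m)[0]
            # ... then roll that topic's word die to get w_{m,n}.
            w = rng.choices(range(vocab_size), weights=phi[z])[0]
            topics.append(z)
            words.append(w)
        docs.append(words)
        assignments.append(topics)
        theta.append(theta_m)
    return docs, assignments, theta, phi
```

Training reverses this process. Only `docs` is observed, and the goal is to recover plausible values for the assignments, θ, and φ.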

That is the LDA model. Next comes Gibbs sampling, whose job is to estimate the model's hidden variables from the input corpus. The inputs to the whole LDA procedure are the two Dirichlet parameters α and β, the corpus, and the number of topics K. From these inputs, Gibbs sampling computes the three hidden quantities in the middle:

- the topic assignments z_{m,n};
- θ, the parameters of the document-topic multinomial distribution;
- φ, the parameters of the topic-word multinomial distribution.
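The sampling step can be sketched in Python. This sketch uses the common collapsed Gibbs sampler, in which θ and φ are integrated out during sampling and recovered from counts at the end. It assumes symmetric α and β, and the function name and signature are hypothetical.

For each word, the sampler removes the word's current topic from the counts. It then draws a new topic k with probability proportional to (n_{m,k} + α)(n_{k,t} + β) / (n_k + Vβ), where:

- n_{m,k} counts the words in document m assigned to topic k;
- n_{k,t} counts the occurrences of word t assigned to topic k;
- n_k is the total number of words assigned to topic k;
- V is the vocabulary size.

```python
import random
from typing import List, Tuple


def train_lda_gibbs(
    docs: List[List[int]],
    vocab_size: int,
    num_topics: int,
    alpha: float,
    beta: float,
    iterations: int = 200,
    seed: int = 0,
) -> Tuple[List[List[float]], List[List[float]], List[List[int]]]:
    """Collapsed Gibbs sampling for LDA with symmetric alpha and beta (a sketch).

    Returns (theta, phi, z): document-topic estimates, topic-word estimates,
    and the final topic assignment of every word.
    """
    rng = random.Random(seed)
    K, V = num_topics, vocab_size
    n_mk = [[0] * K for _ in docs]       # words in doc m assigned to topic k
    n_kt = [[0] * V for _ in range(K)]   # times word t is assigned to topic k
    n_k = [0] * K                        # total words assigned to topic k
    z: List[List[int]] = []
    # Random initial topic for every word, with the counts updated to match.
    for m, doc in enumerate(docs):
        z_m = []
        for t in doc:
            k = rng.randrange(K)
            z_m.append(k)
            n_mk[m][k] += 1
            n_kt[k][t] += 1
            n_k[k] += 1
        z.append(z_m)

    for _ in range(iterations):
        for m, doc in enumerate(docs):
            for n, t in enumerate(doc):
                # Remove the current assignment to get the "all other words" counts.
                k = z[m][n]
                n_mk[m][k] -= 1
                n_kt[k][t] -= 1
                n_k[k] -= 1
                # Full conditional p(z = j | rest), up to a normalizing constant.
                weights = [
                    (n_mk[m][j] + alpha) * (n_kt[j][t] + beta) / (n_k[j] + V * beta)
                    for j in range(K)
                ]
                k = rng.choices(range(K), weights=weights)[0]
                # Add the new assignment back into the counts.
                z[m][n] = k
                n_mk[m][k] += 1
                n_kt[k][t] += 1
                n_k[k] += 1

    # Posterior-mean estimates: counts plus prior, normalized per row.
    theta = [
        [(n_mk[m][k] + alpha) / (len(doc) + K * alpha) for k in range(K)]
        for m, doc in enumerate(docs)
    ]
    phi = [
        [(n_kt[k][t] + beta) / (n_k[k] + V * beta) for t in range(V)]
        for k in range(K)
    ]
    return theta, phi, z
```

This sketch reads θ and φ off the final sampler state. Practical implementations usually discard burn-in iterations and may average over several samples.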

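After LDA has been trained, you can find the topic distribution of a new document by running Gibbs sampling again. Keep the trained topic-word distribution φ fixed and sample the topic assignments z for the new document's words. Then compute the new document's topic distribution θ from those assignments.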
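Below is a minimal Python sketch of that inference step. It assumes φ is available from training as a K × V list of probabilities and that α is symmetric. The name `infer_theta` is hypothetical. This sketch follows one common variant in which φ stays fixed. Because φ does not change, the full conditional for a word t reduces to (n_k + α)·φ_{k,t}, where n_k counts only the new document's words assigned to topic k.

```python
import random
from typing import List


def infer_theta(
    doc: List[int],
    phi: List[List[float]],
    alpha: float,
    iterations: int = 100,
    seed: int = 0,
) -> List[float]:
    """Estimate the topic distribution of a new document with phi held fixed.

    Only the new document's topic assignments are resampled; phi[k][t] is
    the trained probability of word t under topic k (symmetric alpha assumed).
    """
    rng = random.Random(seed)
    K = len(phi)
    n_k = [0] * K  # words of this document currently assigned to each topic
    z: List[int] = []
    for _ in doc:
        k = rng.randrange(K)
        z.append(k)
        n_k[k] += 1

    for _ in range(iterations):
        for n, t in enumerate(doc):
            n_k[z[n]] -= 1
            # With phi fixed, p(z = k | rest) is proportional to (n_k + alpha) * phi[k][t].
            weights = [(n_k[k] + alpha) * phi[k][t] for k in range(K)]
            k = rng.choices(range(K), weights=weights)[0]
            z[n] = k
            n_k[k] += 1

    # Same "counts plus prior" estimate as in training.
    return [(n_k[k] + alpha) / (len(doc) + K * alpha) for k in range(K)]
```

For example, let φ = [[1.0, 0.0], [0.0, 1.0]], so that topic 0 only emits word 0. Then the document [0, 0, 0] ends up with every word assigned to topic 0, and with α = 0.5 the result is θ = [0.875, 0.125].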

This post contains no mathematical derivation. For the details, see the excellent blog series by yangliuy at http://blog.csdn.net/yangliuy/article/details/8302599. If there are any mistakes, corrections are welcome.

边栏推荐

- MySQL constraints

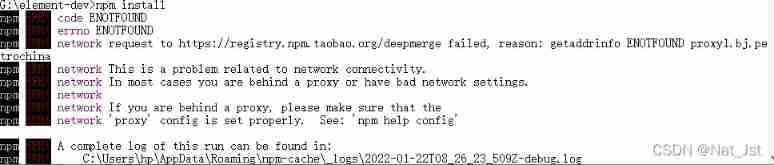

- NPM install reports an error

- Distributed solution - distributed lock solution - redis based distributed lock implementation

- A new WiFi option for smart home -- the application of simplewifi in wireless smart home

- Handwriting blocking queue: condition + lock

- Swift - add navigation bar

- A guide to threaded and asynchronous UI development in the "quick start fluent Development Series tutorials"

- MySQL storage engine

- Select drop-down box realizes three-level linkage of provinces and cities in China

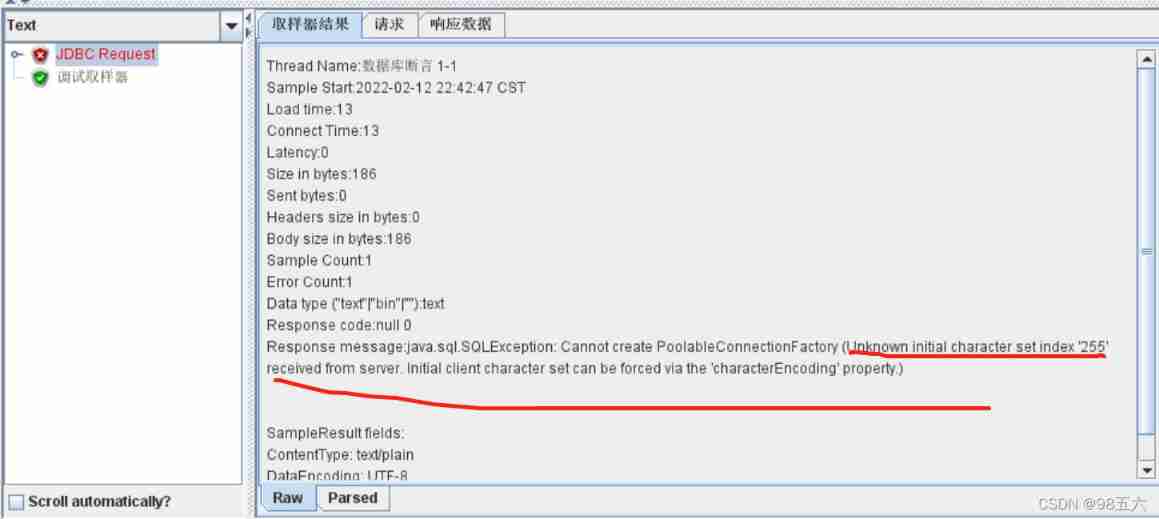

- Get data from the database when using JMeter for database assertion

猜你喜欢

前几年外包干了四年,秋招感觉人生就这样了..

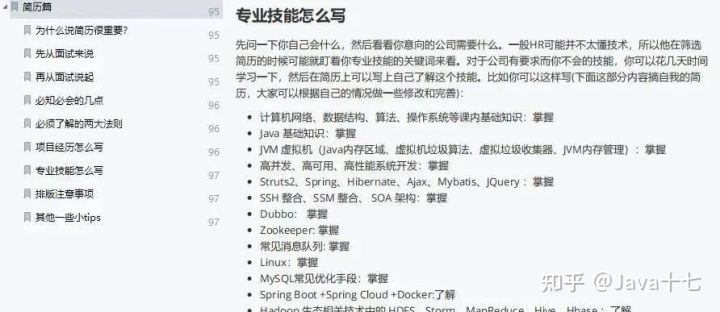

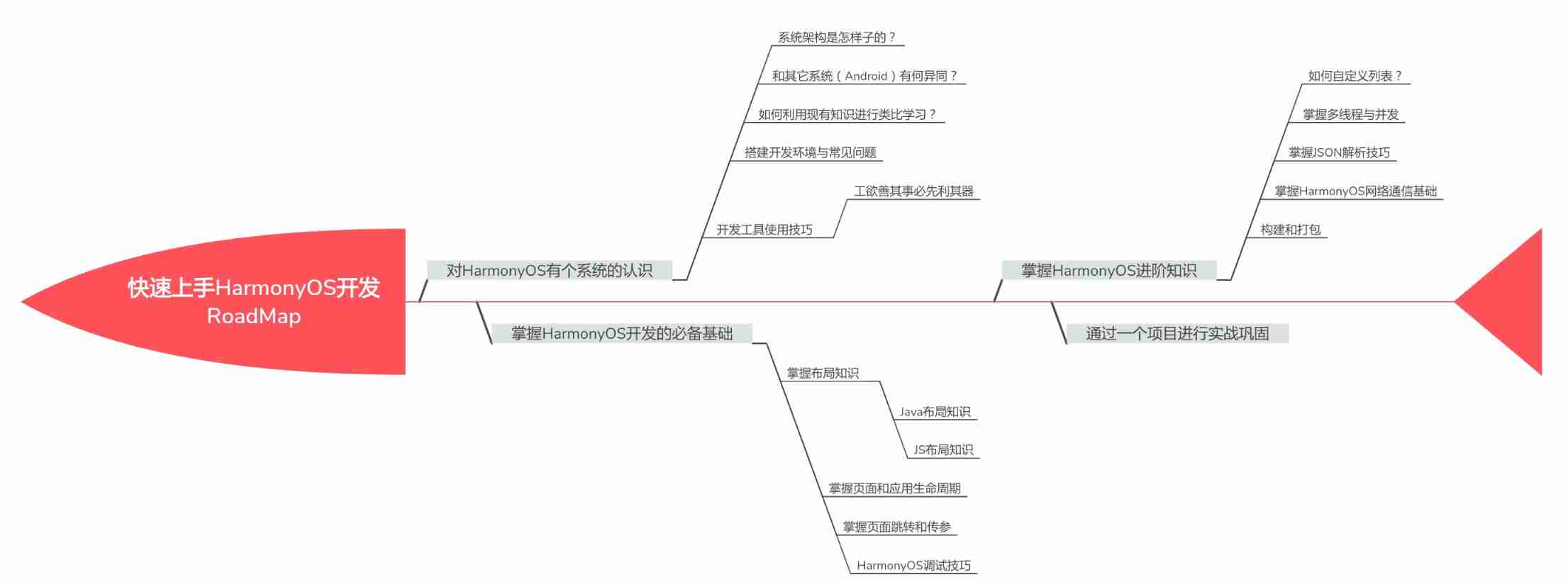

Why learn harmonyos and how to get started quickly?

Principle of universal gbase high availability synchronization tool in Nanjing University

NPM install reports an error

Yum only downloads the RPM package of the software to the specified directory without installing it

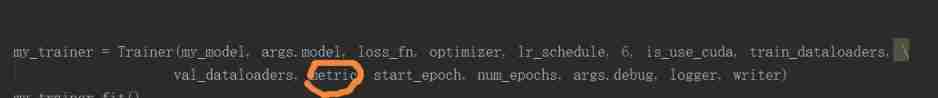

Pytoch uses torchnet Classerrormeter in meter

Get data from the database when using JMeter for database assertion

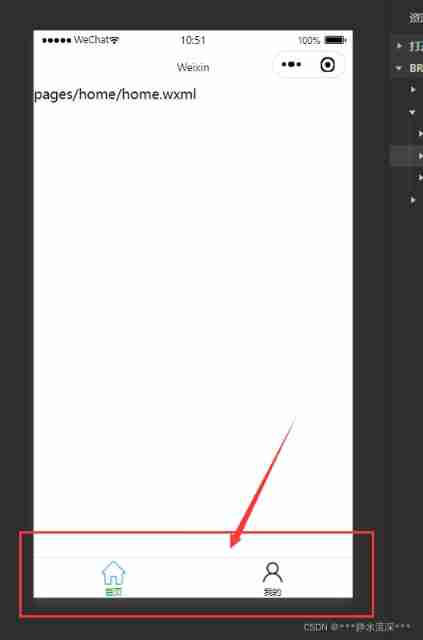

Tabbar configuration at the bottom of wechat applet

Take you two minutes to quickly master the route and navigation of flutter

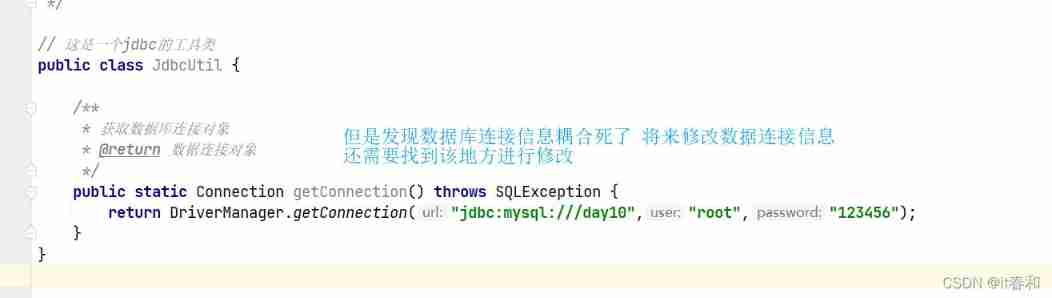

JDBC -- extract JDBC tool classes

随机推荐

Keras implements verification code identification

Add a new cloud disk to Huawei virtual machine

Array cyclic shift problem

MySQL installation, Windows version

C language structure is initialized as a function parameter

Programming skills for optimizing program performance

Want to ask, how to choose a securities firm? Is it safe to open an account online?

Understand kotlin from the perspective of an architect

Image hyperspectral experiment: srcnn/fsrcnn

NPM install reports an error

Iterator details in list... Interview pits

Distributed solution - Comprehensive decryption of distributed task scheduling platform -xxljob

Detailed steps for upgrading window mysql5.5 to 5.7.36

How does MySQL execute an SQL statement?

Solution to order timeout unpaid

Flutter2 heavy release supports web and desktop applications

MySQL index (1)

Automated test lifecycle

Differences between IPv6 and IPv4 three departments including the office of network information technology promote IPv6 scale deployment

Docker configures redis and redis clusters