当前位置:网站首页>Torch learning notes (7) -- take lenet as an example for dataload operation (detailed explanation + reserve knowledge supplement)

Torch learning notes (7) -- take lenet as an example for dataload operation (detailed explanation + reserve knowledge supplement)

2022-07-03 18:22:00 【ZRX_ GIS】

python Reserve knowledge supplement

OS Operation supplement

(1)os.path.abspath(file)&os.path.dirname

import os

# os.path.dirname function : Remove the filename , Return directory

# os.path.abspath(__file__) effect : Get the full path of the current script

BASE_DIR = os.path.abspath(__file__)

print(BASE_DIR)

BASE_DIR = os.path.dirname(os.path.abspath(__file__))

print(BASE_DIR)

dataset_dir = os.path.join(BASE_DIR, "data", "RMB_data")

(2)os.walk(filedir)

import os

# os.walk(filedir) This function will return three objects

# root( Directory path , Tuple format )

# dirs( Subdirectory name , It's a list , Because there will be many subdirectories under a directory path ,, Tuple format )

# files( file name , It is also a list , Because there are multiple files in the same directory , Tuple format )

BASE_DIR = os.path.dirname(os.path.abspath(__file__))

dataset_dir = os.path.abspath(os.path.join(BASE_DIR, "data", "RMB_data")) # Data set path to be split

print(dataset_dir)

root, dirs, files = os.walk(dataset_dir)

print(root, type(root))

print(dirs, type(dirs))

print(files, type(files))

(3)lambda Anonymous functions &x.endswith()&filter()&list()

import os

import random

import shutil

BASE_DIR = os.path.dirname(os.path.abspath(__file__))

dataset_dir = os.path.abspath(os.path.join(BASE_DIR, "data", "RMB_data")) # Data set path to be split

print(dataset_dir)

root, dirs, files = os.walk(dataset_dir)

for root, dirs, files in os.walk(dataset_dir):

# Traverse file by file

for sub_dir in dirs:

imgs = os.listdir(os.path.join(root, sub_dir)) # Get the absolute path of the image

# lambda To create anonymous functions ,lambda As an expression , An anonymous function is defined .x Is the function entry parameter ,x.endswith('.jpg') It's a function body .

# x.endswith('.jpg') Determine whether the string is based on '.jpg' ending

# filter( function , Sequence ) Function to filter the sequence , Filter out the elements that do not meet the conditions , Returns a new list of eligible elements

# list() Method is used to iterate objects ( character string 、 list 、 Yuan Zu 、 Dictionaries ) Convert to list

imgs = list(filter(lambda x: x.endswith('.jpg'), imgs))

print(imgs)

DataLoad Detailed explanation of mechanism

# data: collect (Img,Label)、 Divide (train: Training models 、valid: Verify whether the model is over fitted 、test: Test model performance )、 Read (DataLoader)、 Preprocessing (transforms)

# DataLoad Include Sample and DataSet, among ,Sample Generate index;DataSet For use in accordance with Index Read Img、Label

# torch.utils.data.DataLoader It is used to build an iterative data loader

# Parameters :dataset:Dataset class , Decide where and how to read the data ;batchsize:batch size ;num_works: Whether to read data by multiple processes ;shuffle: Every epoch Is it out of order ;drop_last: When the number of samples can be supplemented batchsize Divisible time , Whether to discard the last batch of data

# epoch: All training samples are input into the model

# iteraction: A batch of samples are input into the model

# batchsize:batch size , decision epoch How many iteration

# torch.utils.data.DataSet()Dataset abstract class , All custom Dataset Need to inherit him , And pass __getitem__() make carbon copies ,getitem Used to receive a index, Return to one sample

Case study

Data set partitioning

# Data set partitioning

import os

import random

import shutil

BASE_DIR = os.path.dirname(os.path.abspath(__file__)) # Store code .py In the folder

# Used to generate storage folders

def makedir(new_dir):

if not os.path.exists(new_dir):

os.makedirs(new_dir)

if __name__ == '__main__':

dataset_dir = os.path.abspath(os.path.join(BASE_DIR, "data", "RMB_data")) # Data set path to be split

split_dir = os.path.abspath(os.path.join(BASE_DIR, "data", "rmb_split")) # First level folder path after segmentation

train_dir = os.path.join(split_dir, "train") # Training set path

valid_dir = os.path.join(split_dir, "valid") # Verify set path

test_dir = os.path.join(split_dir, "test") # Test set path

train_pct = 0.8 # Training set proportion

valid_pct = 0.1 # Verification of specific gravity

test_pct = 0.1 # Test set specific gravity

# Traverse dataset_dir Download all folders and sub files

for root, dirs, files in os.walk(dataset_dir):

# Traverse folder by folder

for sub_dir in dirs:

imgs = os.listdir(os.path.join(root, sub_dir)) # Get the absolute path of the image

imgs = list(filter(lambda x: x.endswith('.jpg'), imgs)) # Get image list

random.shuffle(imgs) # The image list is out of order

img_count = len(imgs) # Record list length , Used for subsequent dataset segmentation

train_point = int(img_count * train_pct) # Training set length

valid_point = int(img_count * (train_pct + valid_pct)) # Validation set length

# warning Mechanism , No image traversed

if img_count == 0:

print("{} Under the table of contents , No pictures , Please check ".format(os.path.join(root, sub_dir)))

import sys

sys.exit(0)

# Traverse the internal image of the dataset according to the length of the dataset

for i in range(img_count):

# First fill in the training set

if i < train_point:

# Store image address

out_dir = os.path.join(train_dir, sub_dir)

# secondly , Verification set

elif i < valid_point:

out_dir = os.path.join(valid_dir, sub_dir)

# Last , Prediction set

else:

out_dir = os.path.join(test_dir, sub_dir)

# Create output path

makedir(out_dir)

# Create image output address

target_path = os.path.join(out_dir, imgs[i])

src_path = os.path.join(dataset_dir, sub_dir, imgs[i])

# Copy the picture

shutil.copy(src_path, target_path)

# Display the data set division

print('Class:{}, train:{}, valid:{}, test:{}'.format(sub_dir, train_point, valid_point - train_point,

img_count - valid_point))

Model structures,

lenet.py

import torch.nn as nn

import torch.nn.functional as F

class LeNet(nn.Module):

def __init__(self, classes):

super(LeNet, self).__init__()

self.conv1 = nn.Conv2d(3, 6, 5)

self.conv2 = nn.Conv2d(6, 16, 5)

self.fc1 = nn.Linear(16*5*5, 120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, classes)

def forward(self, x):

out = F.relu(self.conv1(x))

out = F.max_pool2d(out, 2)

out = F.relu(self.conv2(out))

out = F.max_pool2d(out, 2)

out = out.view(out.size(0), -1)

out = F.relu(self.fc1(out))

out = F.relu(self.fc2(out))

out = self.fc3(out)

return out

def initialize_weights(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.xavier_normal_(m.weight.data)

if m.bias is not None:

m.bias.data.zero_()

elif isinstance(m, nn.BatchNorm2d):

m.weight.data.fill_(1)

m.bias.data.zero_()

elif isinstance(m, nn.Linear):

nn.init.normal_(m.weight.data, 0, 0.1)

m.bias.data.zero_()

class LeNet2(nn.Module):

def __init__(self, classes):

super(LeNet2, self).__init__()

self.features = nn.Sequential(

nn.Conv2d(3, 6, 5),

nn.ReLU(),

nn.MaxPool2d(2, 2),

nn.Conv2d(6, 16, 5),

nn.ReLU(),

nn.MaxPool2d(2, 2)

)

self.classifier = nn.Sequential(

nn.Linear(16*5*5, 120),

nn.ReLU(),

nn.Linear(120, 84),

nn.ReLU(),

nn.Linear(84, classes)

)

def forward(self, x):

x = self.features(x)

x = x.view(x.size()[0], -1)

x = self.classifier(x)

return x

class LeNet_bn(nn.Module):

def __init__(self, classes):

super(LeNet_bn, self).__init__()

self.conv1 = nn.Conv2d(3, 6, 5)

self.bn1 = nn.BatchNorm2d(num_features=6)

self.conv2 = nn.Conv2d(6, 16, 5)

self.bn2 = nn.BatchNorm2d(num_features=16)

self.fc1 = nn.Linear(16 * 5 * 5, 120)

self.bn3 = nn.BatchNorm1d(num_features=120)

self.fc2 = nn.Linear(120, 84)

self.fc3 = nn.Linear(84, classes)

def forward(self, x):

out = self.conv1(x)

out = self.bn1(out)

out = F.relu(out)

out = F.max_pool2d(out, 2)

out = self.conv2(out)

out = self.bn2(out)

out = F.relu(out)

out = F.max_pool2d(out, 2)

out = out.view(out.size(0), -1)

out = self.fc1(out)

out = self.bn3(out)

out = F.relu(out)

out = F.relu(self.fc2(out))

out = self.fc3(out)

return out

def initialize_weights(self):

for m in self.modules():

if isinstance(m, nn.Conv2d):

nn.init.xavier_normal_(m.weight.data)

if m.bias is not None:

m.bias.data.zero_()

elif isinstance(m, nn.BatchNorm2d):

m.weight.data.fill_(1)

m.bias.data.zero_()

elif isinstance(m, nn.Linear):

nn.init.normal_(m.weight.data, 0, 1)

m.bias.data.zero_()

commen_tools.py

import torch

import random

import psutil

import numpy as np

from PIL import Image

import torchvision.transforms as transforms

def transform_invert(img_, transform_train):

""" take data Carry out counter-measures transfrom operation :param img_: tensor :param transform_train: torchvision.transforms :return: PIL image """

if 'Normalize' in str(transform_train):

norm_transform = list(filter(lambda x: isinstance(x, transforms.Normalize), transform_train.transforms))

mean = torch.tensor(norm_transform[0].mean, dtype=img_.dtype, device=img_.device)

std = torch.tensor(norm_transform[0].std, dtype=img_.dtype, device=img_.device)

img_.mul_(std[:, None, None]).add_(mean[:, None, None])

img_ = img_.transpose(0, 2).transpose(0, 1) # C*H*W --> H*W*C

if 'ToTensor' in str(transform_train) or img_.max() < 1:

img_ = img_.detach().numpy() * 255

if img_.shape[2] == 3:

img_ = Image.fromarray(img_.astype('uint8')).convert('RGB')

elif img_.shape[2] == 1:

img_ = Image.fromarray(img_.astype('uint8').squeeze())

else:

raise Exception("Invalid img shape, expected 1 or 3 in axis 2, but got {}!".format(img_.shape[2]) )

return img_

def set_seed(seed=1):

random.seed(seed)

np.random.seed(seed)

torch.manual_seed(seed)

torch.cuda.manual_seed(seed)

def get_memory_info():

virtual_memory = psutil.virtual_memory()

used_memory = virtual_memory.used/1024/1024/1024

free_memory = virtual_memory.free/1024/1024/1024

memory_percent = virtual_memory.percent

memory_info = "Usage Memory:{:.2f} G,Percentage: {:.1f}%,Free Memory:{:.2f} G".format(

used_memory, memory_percent, free_memory)

return memory_info

my_dataset.py

import numpy as np

import torch

import os

import random

from PIL import Image

from torch.utils.data import Dataset

random.seed(1)

rmb_label = {

"1": 0, "100": 1}

class RMBDataset(Dataset):

def __init__(self, data_dir, transform=None):

""" rmb Denomination classification task Dataset :param data_dir: str, The path where the dataset is located :param transform: torch.transform, Data preprocessing """

self.label_name = {

"1": 0, "100": 1}

self.data_info = self.get_img_info(data_dir) # data_info Store all picture paths and labels , stay DataLoader Pass through index Read sample

self.transform = transform

def __getitem__(self, index):

path_img, label = self.data_info[index]

img = Image.open(path_img).convert('RGB') # 0~255

if self.transform is not None:

img = self.transform(img) # Do it here transform, To tensor wait

return img, label

def __len__(self):

return len(self.data_info)

@staticmethod

def get_img_info(data_dir):

data_info = list()

for root, dirs, _ in os.walk(data_dir):

# Traversal category

for sub_dir in dirs:

img_names = os.listdir(os.path.join(root, sub_dir))

img_names = list(filter(lambda x: x.endswith('.jpg'), img_names))

# Traverse images

for i in range(len(img_names)):

img_name = img_names[i]

path_img = os.path.join(root, sub_dir, img_name)

label = rmb_label[sub_dir]

data_info.append((path_img, int(label)))

return data_info

class AntsDataset(Dataset):

def __init__(self, data_dir, transform=None):

self.label_name = {

"ants": 0, "bees": 1}

self.data_info = self.get_img_info(data_dir)

self.transform = transform

def __getitem__(self, index):

path_img, label = self.data_info[index]

img = Image.open(path_img).convert('RGB')

if self.transform is not None:

img = self.transform(img)

return img, label

def __len__(self):

return len(self.data_info)

def get_img_info(self, data_dir):

data_info = list()

for root, dirs, _ in os.walk(data_dir):

# Traversal category

for sub_dir in dirs:

img_names = os.listdir(os.path.join(root, sub_dir))

img_names = list(filter(lambda x: x.endswith('.jpg'), img_names))

# Traverse images

for i in range(len(img_names)):

img_name = img_names[i]

path_img = os.path.join(root, sub_dir, img_name)

label = self.label_name[sub_dir]

data_info.append((path_img, int(label)))

if len(data_info) == 0:

raise Exception("\ndata_dir:{} is a empty dir! Please checkout your path to images!".format(data_dir))

return data_info

class PortraitDataset(Dataset):

def __init__(self, data_dir, transform=None, in_size = 224):

super(PortraitDataset, self).__init__()

self.data_dir = data_dir

self.transform = transform

self.label_path_list = list()

self.in_size = in_size

# obtain mask Of path

self._get_img_path()

def __getitem__(self, index):

path_label = self.label_path_list[index]

path_img = path_label[:-10] + ".png"

img_pil = Image.open(path_img).convert('RGB')

img_pil = img_pil.resize((self.in_size, self.in_size), Image.BILINEAR)

img_hwc = np.array(img_pil)

img_chw = img_hwc.transpose((2, 0, 1))

label_pil = Image.open(path_label).convert('L')

label_pil = label_pil.resize((self.in_size, self.in_size), Image.NEAREST)

label_hw = np.array(label_pil)

label_chw = label_hw[np.newaxis, :, :]

label_hw[label_hw != 0] = 1

if self.transform is not None:

img_chw_tensor = torch.from_numpy(self.transform(img_chw.numpy())).float()

label_chw_tensor = torch.from_numpy(self.transform(label_chw.numpy())).float()

else:

img_chw_tensor = torch.from_numpy(img_chw).float()

label_chw_tensor = torch.from_numpy(label_chw).float()

return img_chw_tensor, label_chw_tensor

def __len__(self):

return len(self.label_path_list)

def _get_img_path(self):

file_list = os.listdir(self.data_dir)

file_list = list(filter(lambda x: x.endswith("_matte.png"), file_list))

path_list = [os.path.join(self.data_dir, name) for name in file_list]

random.shuffle(path_list)

if len(path_list) == 0:

raise Exception("\ndata_dir:{} is a empty dir! Please checkout your path to images!".format(self.data_dir))

self.label_path_list = path_list

class PennFudanDataset(Dataset):

def __init__(self, data_dir, transforms):

self.data_dir = data_dir

self.transforms = transforms

self.img_dir = os.path.join(data_dir, "PNGImages")

self.txt_dir = os.path.join(data_dir, "Annotation")

self.names = [name[:-4] for name in list(filter(lambda x: x.endswith(".png"), os.listdir(self.img_dir)))]

def __getitem__(self, index):

""" return img and target :param idx: :return: """

name = self.names[index]

path_img = os.path.join(self.img_dir, name + ".png")

path_txt = os.path.join(self.txt_dir, name + ".txt")

# load img

img = Image.open(path_img).convert("RGB")

# load boxes and label

f = open(path_txt, "r")

import re

points = [re.findall(r"\d+", line) for line in f.readlines() if "Xmin" in line]

boxes_list = list()

for point in points:

box = [int(p) for p in point]

boxes_list.append(box[-4:])

boxes = torch.tensor(boxes_list, dtype=torch.float)

labels = torch.ones((boxes.shape[0],), dtype=torch.long)

# iscrowd = torch.zeros((num_objs,), dtype=torch.int64)

target = {

}

target["boxes"] = boxes

target["labels"] = labels

# target["iscrowd"] = iscrowd

if self.transforms is not None:

img, target = self.transforms(img, target)

return img, target

def __len__(self):

if len(self.names) == 0:

raise Exception("\ndata_dir:{} is a empty dir! Please checkout your path to images!".format(self.data_dir))

return len(self.names)

class CelebADataset(Dataset):

def __init__(self, data_dir, transforms):

self.data_dir = data_dir

self.transform = transforms

self.img_names = [name for name in list(filter(lambda x: x.endswith(".jpg"), os.listdir(self.data_dir)))]

def __getitem__(self, index):

path_img = os.path.join(self.data_dir, self.img_names[index])

img = Image.open(path_img).convert('RGB')

if self.transform is not None:

img = self.transform(img)

return img

def __len__(self):

if len(self.img_names) == 0:

raise Exception("\ndata_dir:{} is a empty dir! Please checkout your path to images!".format(self.data_dir))

return len(self.img_names)

Architecture building

import os

BASE_DIR = os.path.dirname(os.path.abspath(__file__))

import numpy as np

import torch

import torch.nn as nn

from torch.utils.data import DataLoader

import torchvision.transforms as transforms

import torch.optim as optim

from matplotlib import pyplot as plt

path_lenet = os.path.abspath(os.path.join(BASE_DIR, "model", "lenet.py"))

path_tools = os.path.abspath(os.path.join(BASE_DIR, "tools", "common_tools.py"))

import sys

# os.path.sep: Path separator

hello_pytorch_DIR = os.path.abspath(os.path.dirname(__file__)+os.path.sep+".."+os.path.sep+"..")

sys.path.append(hello_pytorch_DIR)

from model.lenet import LeNet

from tools.my_dataset import RMBDataset

from tools.common_tools import set_seed

set_seed() # Set random seeds

rmb_label = {

"1": 0, "100": 1}

# Parameter setting

MAX_EPOCH = 10

BATCH_SIZE = 16

LR = 0.01

log_interval = 10

val_interval = 1

# ============================ step 1/5 data ============================

split_dir = os.path.abspath(os.path.join(BASE_DIR, "..", "..", "data", "rmb_split"))

if not os.path.exists(split_dir):

raise Exception(r" data {} non-existent , go back to lesson-06\1_split_dataset.py Generate the data ".format(split_dir))

train_dir = os.path.join(split_dir, "train")

valid_dir = os.path.join(split_dir, "valid")

norm_mean = [0.485, 0.456, 0.406]

norm_std = [0.229, 0.224, 0.225]

train_transform = transforms.Compose([

transforms.Resize((32, 32)),

transforms.RandomCrop(32, padding=4),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

valid_transform = transforms.Compose([

transforms.Resize((32, 32)),

transforms.ToTensor(),

transforms.Normalize(norm_mean, norm_std),

])

# structure MyDataset example

train_data = RMBDataset(data_dir=train_dir, transform=train_transform)

valid_data = RMBDataset(data_dir=valid_dir, transform=valid_transform)

# structure DataLoder

train_loader = DataLoader(dataset=train_data, batch_size=BATCH_SIZE, shuffle=True)

valid_loader = DataLoader(dataset=valid_data, batch_size=BATCH_SIZE)

# ============================ step 2/5 Model ============================

net = LeNet(classes=2)

net.initialize_weights()

# ============================ step 3/5 Loss function ============================

criterion = nn.CrossEntropyLoss() # Choose the loss function

# ============================ step 4/5 Optimizer ============================

optimizer = optim.SGD(net.parameters(), lr=LR, momentum=0.9) # Choose the optimizer

scheduler = torch.optim.lr_scheduler.StepLR(optimizer, step_size=10, gamma=0.1) # Set learning rate reduction strategy

# ============================ step 5/5 Training ============================

train_curve = list()

valid_curve = list()

for epoch in range(MAX_EPOCH):

loss_mean = 0.

correct = 0.

total = 0.

net.train()

for i, data in enumerate(train_loader):

# forward

inputs, labels = data

outputs = net(inputs)

# backward

optimizer.zero_grad()

loss = criterion(outputs, labels)

loss.backward()

# update weights

optimizer.step()

# Statistical classification

_, predicted = torch.max(outputs.data, 1)

total += labels.size(0)

correct += (predicted == labels).squeeze().sum().numpy()

# Print training information

loss_mean += loss.item()

train_curve.append(loss.item())

if (i+1) % log_interval == 0:

loss_mean = loss_mean / log_interval

print("Training:Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, MAX_EPOCH, i+1, len(train_loader), loss_mean, correct / total))

loss_mean = 0.

scheduler.step() # Update learning rate

# validate the model

if (epoch+1) % val_interval == 0:

correct_val = 0.

total_val = 0.

loss_val = 0.

net.eval()

with torch.no_grad():

for j, data in enumerate(valid_loader):

inputs, labels = data

outputs = net(inputs)

loss = criterion(outputs, labels)

_, predicted = torch.max(outputs.data, 1)

total_val += labels.size(0)

correct_val += (predicted == labels).squeeze().sum().numpy()

loss_val += loss.item()

loss_val_epoch = loss_val / len(valid_loader)

valid_curve.append(loss_val_epoch)

print("Valid:\t Epoch[{:0>3}/{:0>3}] Iteration[{:0>3}/{:0>3}] Loss: {:.4f} Acc:{:.2%}".format(

epoch, MAX_EPOCH, j+1, len(valid_loader), loss_val_epoch, correct_val / total_val))

train_x = range(len(train_curve))

train_y = train_curve

train_iters = len(train_loader)

valid_x = np.arange(1, len(valid_curve)+1) * train_iters*val_interval - 1 # because valid It's recorded in epochloss, Record points need to be converted to iterations

valid_y = valid_curve

plt.plot(train_x, train_y, label='Train')

plt.plot(valid_x, valid_y, label='Valid')

plt.legend(loc='upper right')

plt.ylabel('loss value')

plt.xlabel('Iteration')

plt.show()

# ============================ inference ============================

BASE_DIR = os.path.dirname(os.path.abspath(__file__))

test_dir = os.path.join(BASE_DIR, "test_data")

test_data = RMBDataset(data_dir=test_dir, transform=valid_transform)

valid_loader = DataLoader(dataset=test_data, batch_size=1)

for i, data in enumerate(valid_loader):

# forward

inputs, labels = data

outputs = net(inputs)

_, predicted = torch.max(outputs.data, 1)

rmb = 1 if predicted.numpy()[0] == 0 else 100

print(" The model gets {} element ".format(rmb))

边栏推荐

- Closure and closure function

- [combinatorics] generating function (example of using generating function to solve the number of solutions of indefinite equation)

- Sepconv (separable revolution) code recurrence

- SQL injection -day16

- Supervisor monitors gearman tasks

- 网格图中递增路径的数目[dfs逆向路径+记忆dfs]

- [enumeration] annoying frogs always step on my rice fields: (who is the most hateful? (POJ hundred practice 2812)

- As soon as we enter "remote", we will never regret, and several people will be happy and several people will be sad| Community essay solicitation

- Postfix tips and troubleshooting commands

- 统计图像中各像素值的数量

猜你喜欢

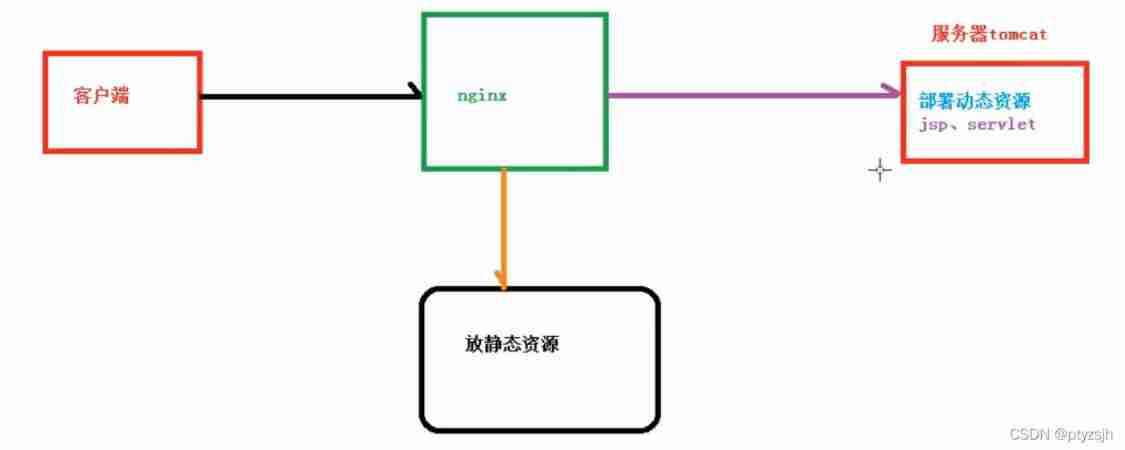

4. Load balancing and dynamic static separation

English语法_形容词/副词3级 - 倍数表达

模块九作业

Why can deeplab v3+ be a God? (the explanation of the paper includes super detailed notes + Chinese English comparison + pictures)

Naoqi robot summary 27

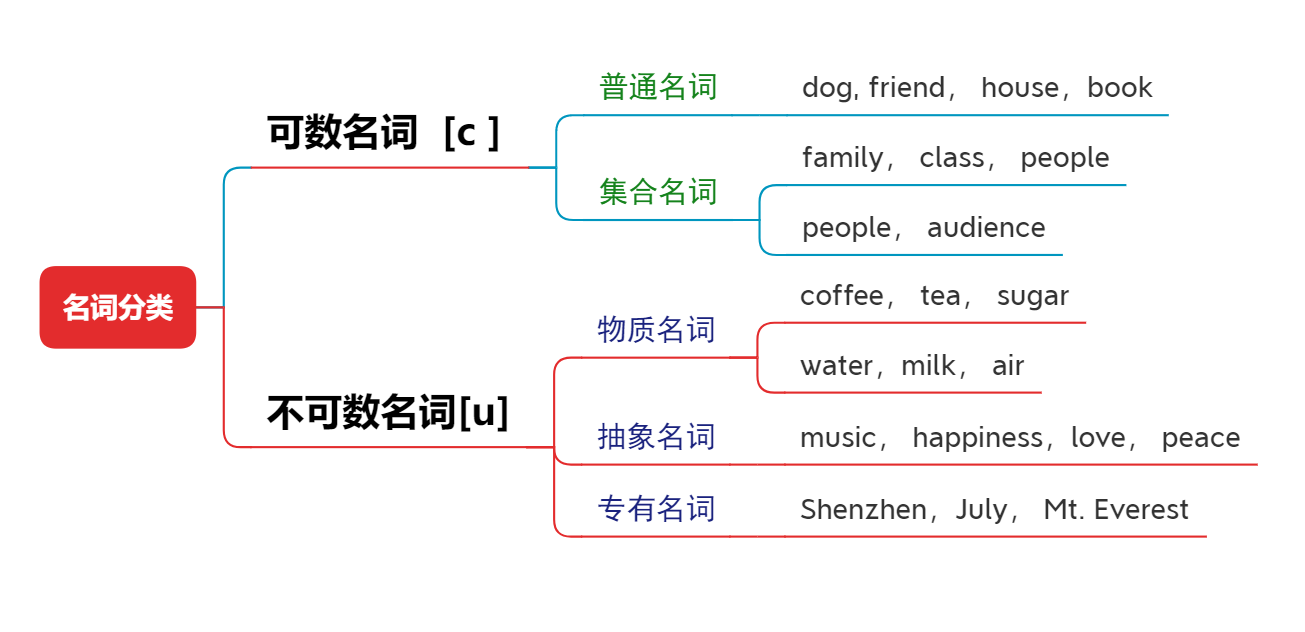

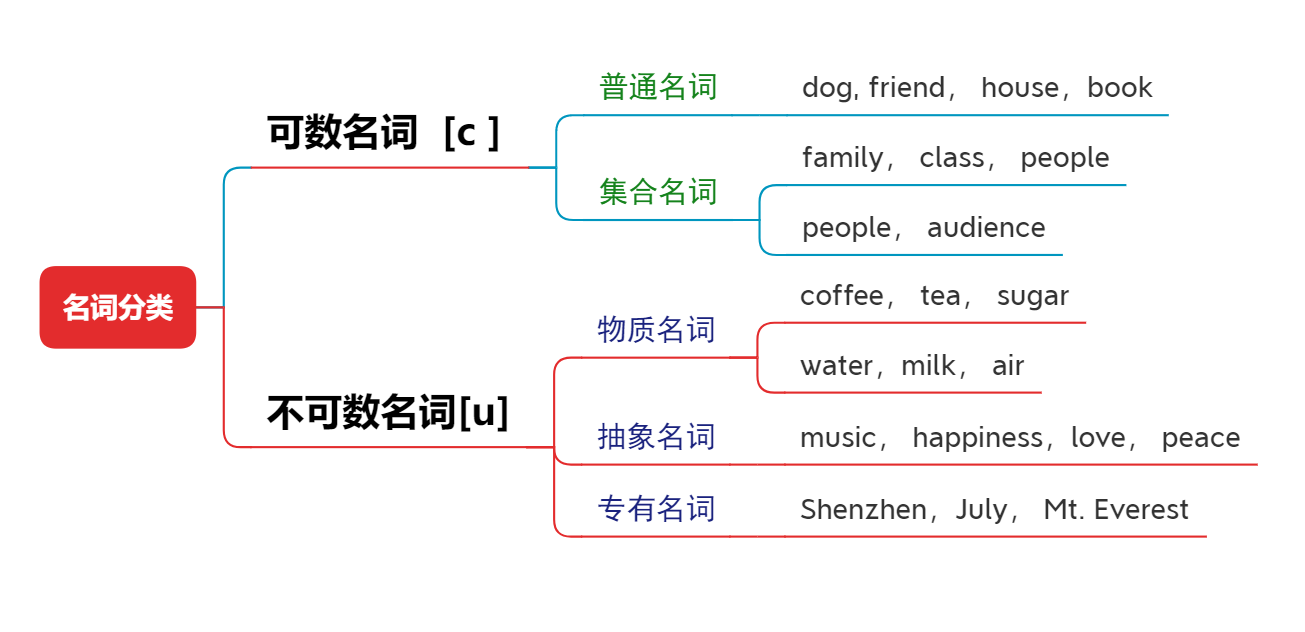

English grammar_ Noun classification

English语法_名词 - 分类

Mature port AI ceaspectus leads the world in the application of AI in terminals, CIMC Feitong advanced products go global, smart terminals, intelligent ports, intelligent terminals

Have you learned the correct expression posture of programmers on Valentine's day?

An academic paper sharing and approval system based on PHP for computer graduation design

随机推荐

PHP MySQL preprocessing statement

统计图像中各像素值的数量

Redis core technology and practice - learning notes (VI) how to achieve data consistency between master and slave Libraries

2022-2028 global physiotherapy clinic industry research and trend analysis report

[combinatorics] generating function (summation property)

Fedora 21 安装 LAMP 主机服务器

[tutorial] build your first application on coreos

[Godot] add menu button

Codeforces Round #803 (Div. 2) C. 3SUM Closure

Supervisor monitors gearman tasks

[combinatorics] exponential generating function (example 2 of solving multiple set permutation with exponential generating function)

PHP MySQL Update

[combinatorics] generating function (use generating function to solve the combination number of multiple sets R)

Data analysis is popular on the Internet, and the full version of "Introduction to data science" is free to download

How do microservices aggregate API documents? This wave of operation is too good

Win 11 major updates, new features love love.

Redis on local access server

Class exercises

How to expand the capacity of golang slice slice

Niuke monthly race 31 minus integer