
ViT paper details

2022-07-06 21:41:00 【Murmur and smile】

AN IMAGE IS WORTH 16X16 WORDS: TRANSFORMERS FOR IMAGE RECOGNITION AT SCALE

Bilibili explanation: https://www.bilibili.com/video/BV1GB4y1X72R/?spm_id_from=333.788&vd_source=d2733c762a7b4f17d4f010131fbf1834

1.Introduction

Self-attention-based architectures, in particular Transformers (Vaswani et al., 2017), have become the model of choice in natural language processing (NLP). The dominant approach is to pre-train on a large text corpus and then fine-tune on a smaller task-specific dataset (Devlin et al., 2019). Thanks to the computational efficiency and scalability of Transformers, it has become possible to train models of unprecedented size, with over 100B parameters (Brown et al., 2020; Lepikhin et al., 2020). As models and datasets grow, there is still no sign of saturating performance.

In computer vision, however, convolutional architectures still dominate. Inspired by the successes in NLP, multiple works have tried combining CNN-like architectures with self-attention, and some replace the convolutions entirely. The latter models, while theoretically efficient, have not yet been scaled effectively on modern hardware because they rely on specialized attention patterns, so classic ResNet-like architectures remain the preferred choice.

Inspired by the scaling successes of Transformers in NLP, we apply a standard Transformer directly to images, with as few modifications as possible. To do so, we split an image into patches and provide the sequence of linear embeddings of these patches as the input to the Transformer. Image patches are treated the same way as tokens (words) in an NLP application (a sentence has as many tokens as it has words; an image has as many tokens as it has patches). We train the image classification model in a supervised fashion (whereas pre-training in NLP is usually done without labels).

When trained on mid-sized datasets such as ImageNet without strong regularization, Transformers reach accuracies a few percentage points below ResNets of comparable size. This is because Transformers lack some of the inductive biases inherent to CNNs, such as translation equivariance and locality, and therefore do not generalize well when trained on insufficient amounts of data.

However, the picture changes if the models are trained on larger datasets (14M-300M images). We find that large-scale training trumps inductive bias.

2.Related Work

Naively applying self-attention to images would require each pixel to attend to every other pixel. Because the cost is quadratic in the number of pixels, this does not scale to realistic input sizes. Therefore, to apply Transformers to image processing, several approximations have been tried: applying self-attention only in a local neighborhood of each query pixel rather than globally, or using scalable approximations to global self-attention (sparse attention). Another way to scale attention is to apply it in blocks of varying sizes (Weissenborn et al., 2019), in the extreme case only along individual axes (horizontal or vertical) (Ho et al., 2019; Wang et al., 2020a). Many of these specialized attention architectures show promising results on computer vision tasks, but they require complex engineering to be implemented efficiently on hardware accelerators.
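To make the quadratic cost concrete, here is a small back-of-the-envelope sketch. It assumes a 224x224 input and 16x16 patches (illustrative values), and counts only the pairwise attention weights of a single head in a single layer; `attention_entries` is a hypothetical helper name.

```python
# Compare the size of the attention matrix for pixel-level vs. patch-level tokens.
# Assumed setup: a 224x224 input and 16x16 patches (illustrative values).

def attention_entries(num_tokens: int) -> int:
    """Number of pairwise attention weights for one head in one layer."""
    return num_tokens * num_tokens

image_side = 224
patch_side = 16

pixel_tokens = image_side * image_side            # one token per pixel
patch_tokens = (image_side // patch_side) ** 2    # one token per 16x16 patch

pixel_cost = attention_entries(pixel_tokens)
patch_cost = attention_entries(patch_tokens)

print(pixel_tokens, pixel_cost)    # 50176 2517630976
print(patch_tokens, patch_cost)    # 196 38416
print(pixel_cost // patch_cost)    # 65536
```

Under these assumptions, attending over pixels needs about 65,000 times more attention weights than attending over patches, which is why pixel-level global attention is impractical at this resolution.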

Most closely related to ours is the model of Cordonnier et al. (2020), which extracts patches of size 2x2 from the input image and applies full self-attention on top. This model is very similar to ViT, but our work goes further and demonstrates that large-scale pre-training makes vanilla Transformers competitive with (or even better than) state-of-the-art CNNs. Moreover, Cordonnier et al. (2020) use a small patch size of 2x2 pixels, which makes the model applicable only to small-resolution images, whereas we also handle medium-resolution images.

Another recent related model is image GPT (iGPT) (Chen et al., 2020a), which applies Transformers to image pixels after reducing image resolution and color space. The model is trained in an unsupervised fashion as a generative model, and the resulting representation can then be fine-tuned or probed linearly for classification performance, achieving a maximal accuracy of 72% on ImageNet.

3.Method

In model design we follow the original Transformer (Vaswani et al., 2017) as closely as possible. An advantage of this intentionally simple setup is that scalable NLP Transformer architectures, and their efficient implementations, can be used almost out of the box.

Figure 1: Model overview. We split an image into fixed-size patches, linearly embed each of them, add position embeddings, and feed the resulting sequence of vectors to a standard Transformer encoder. To perform classification, we use the standard approach of adding an extra learnable "classification token" to the sequence. The illustration of the Transformer encoder was inspired by Vaswani et al. (2017).

Steps: image ---> split into patches ---> linear projection of each patch ---> add position embedding ---> Transformer Encoder ---> MLP Head ---> class

Taking a 224x224x3 input as an example, the diagram can be read as follows.

Input X: 224x224x3. Patch size: 16x16. Number of patches: N = 224^2/16^2 = 196. Each patch flattened to a 1D vector: 16x16x3 = 768.

Linear projection layer E: 768x768 (the output dimension is called D in the paper). Input to the projection: 196x768; each token: 1x768.

Through the linear projection layer: [196x768] x [768x768] = [196x768] (matrix multiplication).

Add one cls token, which can learn classification features from the other 196 embeddings. It is prepended to the sequence: torch.cat([1,768],[196,768]) ==> [197,768]

Add the position embedding: [197,768] + [197,768] ==> [197,768]. The two are summed element-wise rather than concatenated (following the 2017 Transformer, where summation is said to work as well as concatenation).

Multi-head attention: with, e.g., 12 heads, 768/12 = 64, so each head works on 197x64, and concatenating the heads gives 197x768 again.

MLP: the dimension is usually expanded by a factor of four, to 197x3072, and then projected back down to 197x768.

Layer norm: unlike BN, which normalizes each channel across all samples in a batch, layer norm normalizes each sample on its own over its feature dimensions (C, H, W for an image tensor).
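The shape bookkeeping in the walkthrough above can be checked with a few lines of arithmetic. This is only a sketch: `vit_shapes` and its dictionary keys are hypothetical names, and the defaults (224x224x3 input, 16x16 patches, D = 768, 12 heads, 4x MLP expansion) are the values used in the example.

```python
def vit_shapes(image_size=224, channels=3, patch_size=16,
               dim=768, heads=12, mlp_ratio=4):
    """Return the tensor shapes at each stage of the walkthrough (no batch axis)."""
    if image_size % patch_size:
        raise ValueError("image size must be divisible by patch size")
    if dim % heads:
        raise ValueError("embedding dim must be divisible by the number of heads")

    grid = image_size // patch_size                   # patches per side
    num_patches = grid * grid                         # N
    patch_dim = patch_size * patch_size * channels    # P^2 * C
    seq_len = num_patches + 1                         # patches plus the cls token

    return {
        "flattened_patches": (num_patches, patch_dim),
        "projection_E": (patch_dim, dim),
        "patch_embeddings": (num_patches, dim),
        "with_cls_token": (seq_len, dim),
        "after_position_embedding": (seq_len, dim),   # element-wise sum, shape unchanged
        "per_attention_head": (seq_len, dim // heads),
        "mlp_hidden": (seq_len, mlp_ratio * dim),
        "encoder_output": (seq_len, dim),
    }

shapes = vit_shapes()
for name, shape in shapes.items():
    print(f"{name:26s} {shape}")
```

Note that the MLP hidden width comes out as 4 x 768 = 3072, and that the encoder output has the same shape as its input, which is what allows identical blocks to be stacked.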

3.1 Vision Transformer

The standard Transformer receives a 1D sequence of token embeddings as input. To handle 2D images, we reshape the image $x \in \mathbb{R}^{H \times W \times C}$ into a sequence of flattened 2D patches $x_p \in \mathbb{R}^{N \times (P^2 \cdot C)}$, where H and W are the height and width of the image, C is the number of channels, (P, P) is the resolution of each image patch, and $N = HW/P^2$ is the resulting number of patches, which also serves as the effective input sequence length for the Transformer. The Transformer uses a constant latent vector size D through all of its layers, so we flatten the patches and map them to D dimensions with a trainable linear projection (Equation 1). We refer to the output of this projection as the patch embeddings.
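As an illustration of the reshaping step, here is a minimal pure-Python sketch that cuts an H x W x C image (stored as nested lists) into flattened patches of length P*P*C. The function name `patchify` and the raster ordering of patches are assumptions made for this example; real implementations typically do this with tensor reshapes or a strided convolution.

```python
def patchify(image, patch_size):
    """Split an H x W x C image (nested lists) into N flattened patches.

    Returns a list of N = (H/P) * (W/P) lists, each of length P*P*C,
    ordered row by row (raster order).
    """
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image sides must be divisible by the patch size")

    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            flat = []
            for row in range(top, top + patch_size):
                for col in range(left, left + patch_size):
                    flat.extend(image[row][col])   # append the C channel values
            patches.append(flat)
    return patches

# Toy input: a 4x4 image with 3 channels where every pixel stores (row, col, 0).
toy = [[[r, c, 0] for c in range(4)] for r in range(4)]
toy_patches = patchify(toy, patch_size=2)
print(len(toy_patches), len(toy_patches[0]))   # 4 12
```

With H = W = 4, P = 2 and C = 3 this gives N = 4 patches of length 12; each flattened patch would then be multiplied by the projection matrix to obtain a D-dimensional patch embedding.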

Similar to BERT's [class] token, we prepend a learnable embedding to the sequence of embedded patches ($z_0^0 = x_\text{class}$), whose state at the output of the Transformer encoder ($z_L^0$) serves as the image representation y (Equation 4). Both during pre-training and fine-tuning, a classification head is attached to $z_L^0$. The classification head is implemented by an MLP with one hidden layer at pre-training time and by a single linear layer at fine-tuning time.

Position embeddings are added to the patch embeddings to retain positional information. We use standard learnable 1D position embeddings, since we have not observed significant performance gains from using more advanced 2D-aware position embeddings (Appendix D.4). The resulting sequence of embedding vectors serves as input to the encoder.

The Transformer encoder (Vaswani et al., 2017) consists of alternating layers of multi-head self-attention (MSA, see Appendix A) and MLP blocks (Equations 2 and 3). Layernorm (LN) is applied before every block, and residual connections after every block (Wang et al., 2019; Baevski & Auli, 2019).
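Equations 1 to 4 are referenced in this section but not reproduced in the text. As given in the ViT paper, with $x_p^i$ the i-th flattened patch, $E$ the patch projection and $E_{pos}$ the position embeddings, they read:

```latex
\begin{align}
z_0 &= [\, x_\text{class};\; x_p^1 E;\; x_p^2 E;\; \cdots;\; x_p^N E \,] + E_{pos},
      & E \in \mathbb{R}^{(P^2 \cdot C) \times D},\; E_{pos} \in \mathbb{R}^{(N+1) \times D} \tag{1} \\
z'_\ell &= \mathrm{MSA}(\mathrm{LN}(z_{\ell-1})) + z_{\ell-1}, & \ell = 1 \ldots L \tag{2} \\
z_\ell &= \mathrm{MLP}(\mathrm{LN}(z'_\ell)) + z'_\ell, & \ell = 1 \ldots L \tag{3} \\
y &= \mathrm{LN}(z_L^0) \tag{4}
\end{align}
```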

The MLP contains two layers with a GELU non-linearity.
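The "LN before the block, residual connection after the block" pattern can be sketched for a single token vector as follows. This is a simplified illustration, not the paper's implementation: the learnable scale and shift of LayerNorm are omitted, the `eps` value is an assumption, `pre_norm_residual` is a hypothetical helper name, and an element-wise GELU stands in for the real MSA or MLP sub-layer.

```python
import math

def layer_norm(x, eps=1e-6):
    """Normalize one token vector to zero mean and unit variance (no scale/shift)."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

def gelu(v):
    """Exact GELU: v * Phi(v), with Phi the standard normal CDF."""
    return 0.5 * v * (1.0 + math.erf(v / math.sqrt(2.0)))

def pre_norm_residual(x, sublayer):
    """Compute x + sublayer(LN(x)) for a single token vector x."""
    normed = layer_norm(x)
    return [a + b for a, b in zip(x, sublayer(normed))]

token = [1.0, 2.0, 3.0, 4.0]
# Stand-in sub-layer: element-wise GELU (the real block would be MSA or the MLP).
out = pre_norm_residual(token, lambda v: [gelu(t) for t in v])
print(out)
```

Because the residual path bypasses the normalization, a sub-layer that outputs zeros leaves the token unchanged.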

Inductive bias. Vision Transformer has much less image-specific inductive bias than CNNs. In CNNs, locality, two-dimensional neighborhood structure, and translation equivariance are baked into each layer throughout the whole model. In ViT, only the MLP layers are local and translationally equivariant, while the self-attention layers are global. The two-dimensional neighborhood structure is used very sparingly: at the beginning of the model, when the image is cut into patches, and at fine-tuning time, when the position embeddings are adjusted for images of a different resolution. Other than that, the position embeddings at initialization carry no information about the 2D positions of the patches, and all spatial relations between the patches have to be learned from scratch.

Hybrid Architecture. As an alternative to raw image patches, the input sequence can be formed from the feature maps of a CNN (LeCun et al., 1989). In this hybrid model, the patch embedding projection E (Equation 1) is applied to patches extracted from a CNN feature map. As a special case, the patches can have spatial size 1x1, which means that the input sequence is obtained by simply flattening the spatial dimensions of the feature map and projecting to the Transformer dimension. The classification input embedding and the position embeddings are added as described above.

3.2 Fine-tuning and higher resolution

Typically, we pre-train ViT on large datasets and fine-tune to (smaller) downstream tasks. For this, we remove the pre-trained prediction head and attach a zero-initialized D x K feedforward layer, where K is the number of downstream classes. It is often beneficial to fine-tune at a higher resolution than pre-training (Touvron et al., 2019; Kolesnikov et al., 2020). When feeding images of higher resolution, we keep the patch size the same, which results in a larger effective sequence length. The Vision Transformer can handle arbitrary sequence lengths (up to memory constraints); however, the pre-trained position embeddings may then no longer be meaningful. We therefore perform 2D interpolation of the pre-trained position embeddings according to their location in the original image. Note that this resolution adjustment and the patch extraction are the only points at which an inductive bias about the 2D structure of images is manually injected into the Vision Transformer.
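One way to picture the 2D interpolation of position embeddings is the sketch below, which resizes a square grid of embedding vectors bilinearly. Several things here are assumptions rather than details from the paper: the corner-aligned bilinear scheme (the text only says "2D interpolation"), the fine-tuning resolution of 384, the toy 2-dimensional embeddings, and the convention that the class-token embedding is kept aside and not interpolated. `resize_grid` is a hypothetical helper name.

```python
def resize_grid(grid, new_size):
    """Bilinearly resize a square grid of embedding vectors to new_size x new_size.

    grid[r][c] is a list of floats (one position embedding).
    Corner-aligned sampling is an assumption made for simplicity.
    """
    old_size = len(grid)
    dim = len(grid[0][0])
    scale = (old_size - 1) / (new_size - 1) if new_size > 1 else 0.0

    out = []
    for r in range(new_size):
        y = r * scale
        y0 = min(int(y), old_size - 1)
        y1 = min(y0 + 1, old_size - 1)
        wy = y - y0
        row = []
        for c in range(new_size):
            x = c * scale
            x0 = min(int(x), old_size - 1)
            x1 = min(x0 + 1, old_size - 1)
            wx = x - x0
            # Blend the four neighbouring embeddings, dimension by dimension.
            vec = [
                (1 - wy) * ((1 - wx) * grid[y0][x0][d] + wx * grid[y0][x1][d])
                + wy * ((1 - wx) * grid[y1][x0][d] + wx * grid[y1][x1][d])
                for d in range(dim)
            ]
            row.append(vec)
        out.append(row)
    return out

# Pre-training at 224 with 16x16 patches gives a 14x14 grid; 384 gives 24x24.
old_side, new_side = 224 // 16, 384 // 16
pretrained = [[[float(r), float(c)] for c in range(old_side)] for r in range(old_side)]
resized = resize_grid(pretrained, new_side)
print(len(resized) * len(resized[0]) + 1)   # 577 tokens including the class token
```

The patch size stays at 16, so the sequence grows from 196 + 1 to 576 + 1 tokens, and each new grid position receives an embedding blended from its nearest pre-trained neighbours.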

4.Experiments

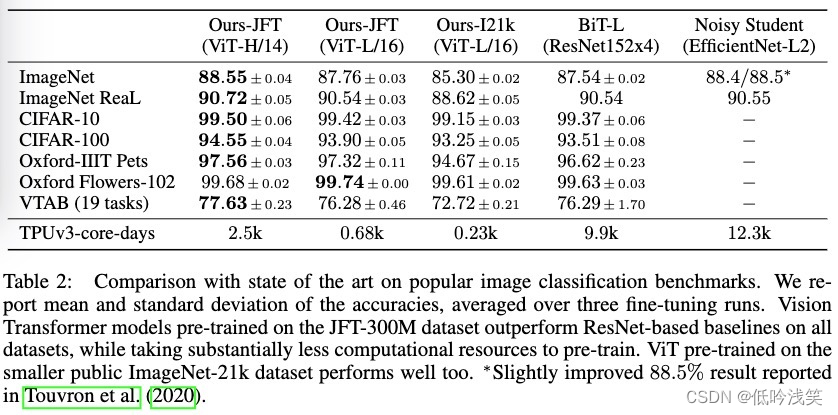

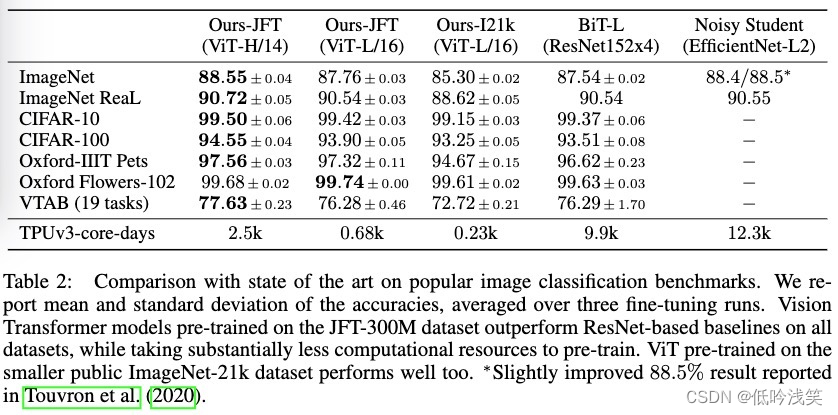

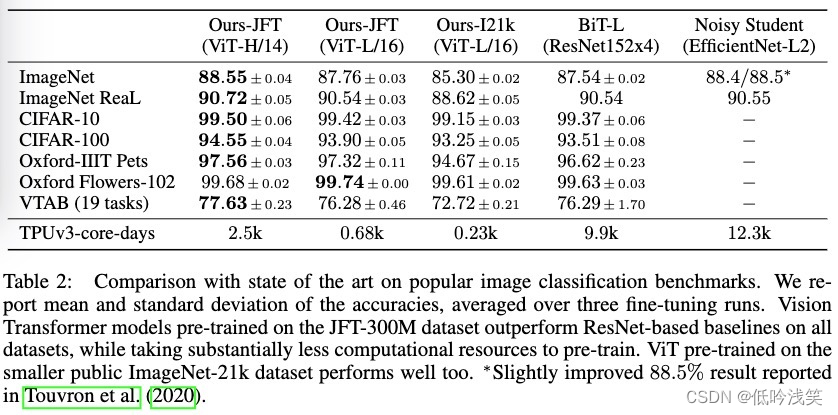

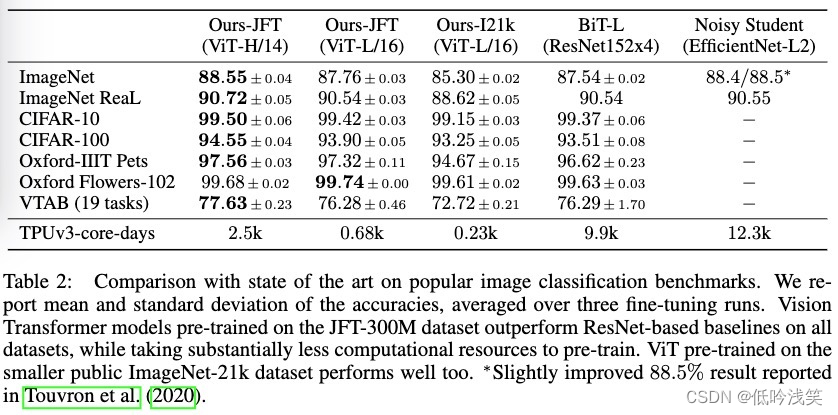

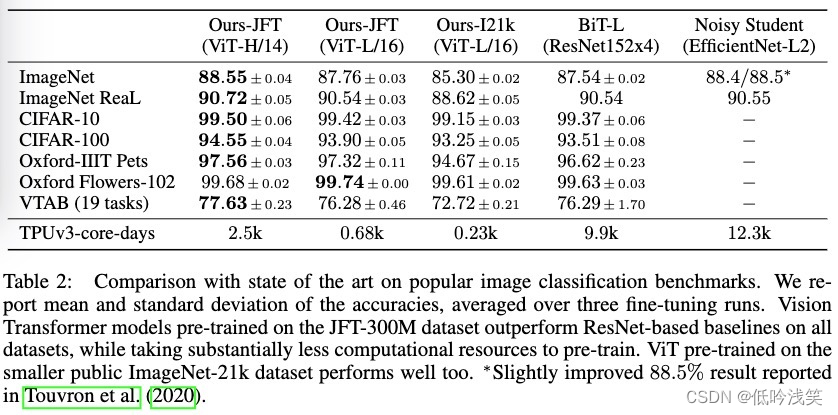

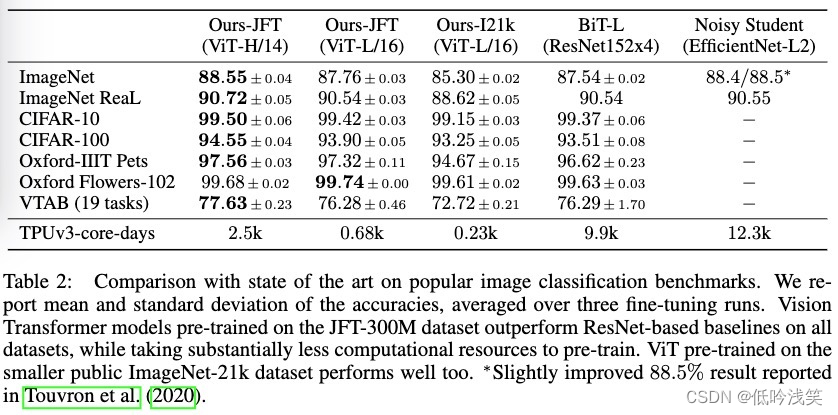

Compared with other methods, ViT performs better and takes less time to train.

The gray area shows the range of performance achieved by ResNets. On small datasets ViT does worse than ResNet; on large datasets ViT does better than ResNet.

The figure shows three kinds of model: Transformer, ResNet, and Hybrid. Performance improves as FLOPs increase, and no saturation is visible yet.

For models with the same FLOPs, Transformers and hybrid models outperform ResNets.

The hybrid model improves on the pure Transformer at smaller model sizes, but the advantage vanishes for larger models.

4.5 Inspecting Vision Transformer

The first layer of the Vision Transformer linearly projects the flattened patches into a lower-dimensional space. Figure 7 (left) shows the top principal components of the learned embedding filters. The components resemble plausible basis functions for a low-dimensional representation of the fine structure within each patch.

After the projection, a learned position embedding is added to the patch representations. Figure 7 (center) shows that the model learns to encode distance within the image in the similarity of position embeddings, i.e. closer patches tend to have more similar position embeddings. Further, a row-column structure appears: patches in the same row or column have similar embeddings. Finally, a sinusoidal structure is sometimes apparent for larger grids (Appendix D). That the position embeddings learn to represent 2D image topology explains why hand-crafted 2D-aware embedding variants do not yield improvements (Appendix D.4): the Transformer has already learned a representation of neighboring positions from the 1D position encoding.
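The similarity analysis described above boils down to comparing one position embedding against all the others. The sketch below assumes cosine similarity as the measure and uses made-up 2-dimensional embeddings; `similarity_map` is a hypothetical helper name.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def similarity_map(pos_embed, index):
    """Similarity of the embedding at `index` to every position embedding."""
    return [cosine_similarity(pos_embed[index], other) for other in pos_embed]

# Toy embeddings for three positions (illustrative values, not learned ones).
toy_pos_embed = [[1.0, 0.0], [1.0, 1.0], [0.0, 1.0]]
sims = similarity_map(toy_pos_embed, index=0)
print([round(s, 3) for s in sims])   # [1.0, 0.707, 0.0]
```

Reshaping such a similarity list back into the patch grid gives one tile of the kind of plot the text describes, where nearby patches show higher similarity.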

Self-attention allows ViT to integrate information across the entire image even in the lowest layers. We investigate to what degree the network makes use of this capability. Specifically, we compute the average distance in image space across which information is integrated, based on the attention weights (Figure 7, right). This "attention distance" is analogous to receptive field size in CNNs. Some heads attend to most of the image already in the lowest layers, showing that the model does use its ability to integrate information globally. Other attention heads have consistently small attention distances in the low layers. This highly localized attention is less pronounced in hybrid models that apply a ResNet before the Transformer (Figure 7, right), suggesting that it may serve a similar function to early convolutional layers in CNNs. Further, the attention distance increases with network depth. Globally, we find that the model attends to image regions that are semantically relevant for classification.
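A rough sketch of how such an attention distance could be computed for one query patch and one head is shown below. It assumes distances are measured between patch centers in pixels and that patches are indexed in raster order; the exact procedure used for the figure may differ, and `mean_attention_distance` is a hypothetical helper name.

```python
import math

def mean_attention_distance(weights, grid_side, patch_size, query_index):
    """Attention-weighted mean pixel distance from one query patch to all patches.

    weights: grid_side**2 non-negative attention weights that sum to 1,
    one per key patch, in raster order.
    """
    q_row, q_col = divmod(query_index, grid_side)
    total = 0.0
    for key_index, weight in enumerate(weights):
        k_row, k_col = divmod(key_index, grid_side)
        # Distance between patch centers, converted from patch units to pixels.
        total += weight * patch_size * math.hypot(k_row - q_row, k_col - q_col)
    return total

# Toy 2x2 grid of 16x16 patches, query is the top-left patch.
local_head = [1.0, 0.0, 0.0, 0.0]        # attends only to itself
global_head = [0.25, 0.25, 0.25, 0.25]   # attends uniformly to every patch

print(mean_attention_distance(local_head, 2, 16, 0))
print(mean_attention_distance(global_head, 2, 16, 0))
```

Averaging this quantity over query patches and images would give one number per head, so a head with localized attention ends up with a small distance and a head with global attention with a large one.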

5.Conclusion

We have explored the direct application of Transformers to image recognition. Unlike prior works using self-attention in computer vision, we do not introduce image-specific inductive biases into the architecture apart from the initial patch extraction step. Instead, we interpret an image as a sequence of patches and process it with a standard Transformer encoder as used in NLP. This simple yet scalable strategy works surprisingly well when coupled with pre-training on large datasets. As a result, Vision Transformer matches or exceeds the state of the art on many image classification datasets, while being relatively cheap to pre-train.

边栏推荐

- [Li Kou brushing questions] one dimensional dynamic planning record (53 change exchanges, 300 longest increasing subsequence, 53 largest subarray and)

- @Detailed differences among getmapping, @postmapping and @requestmapping, with actual combat code (all)

- [in depth learning] pytorch 1.12 was released, officially supporting Apple M1 chip GPU acceleration and repairing many bugs

- Start the embedded room: system startup with limited resources

- C# 如何在dataGridView里设置两个列comboboxcolumn绑定级联事件的一个二级联动效果

- 抖音将推独立种草App“可颂”,字节忘不掉小红书?

- JS学习笔记-OO创建怀疑的对象

- The underlying implementation of string

- 一行代码可以做些什么?

- 缓存更新策略概览(Caching Strategies Overview)

猜你喜欢

![Happy sound 2[sing.2]](/img/ca/1581e561c427cb5b9bd5ae2604b993.jpg)

Happy sound 2[sing.2]

guava:Collections. The collection created by unmodifiablexxx is not immutable

3D face reconstruction: from basic knowledge to recognition / reconstruction methods!

Fastjson parses JSON strings (deserialized to list, map)

![Leetcode topic [array] -118 Yang Hui triangle](/img/77/d8a7085968cc443260b4c0910bd04b.jpg)

Leetcode topic [array] -118 Yang Hui triangle

![[redis design and implementation] part I: summary of redis data structure and objects](/img/2e/b147aa1e23757519a5d049c88113fe.png)

[redis design and implementation] part I: summary of redis data structure and objects

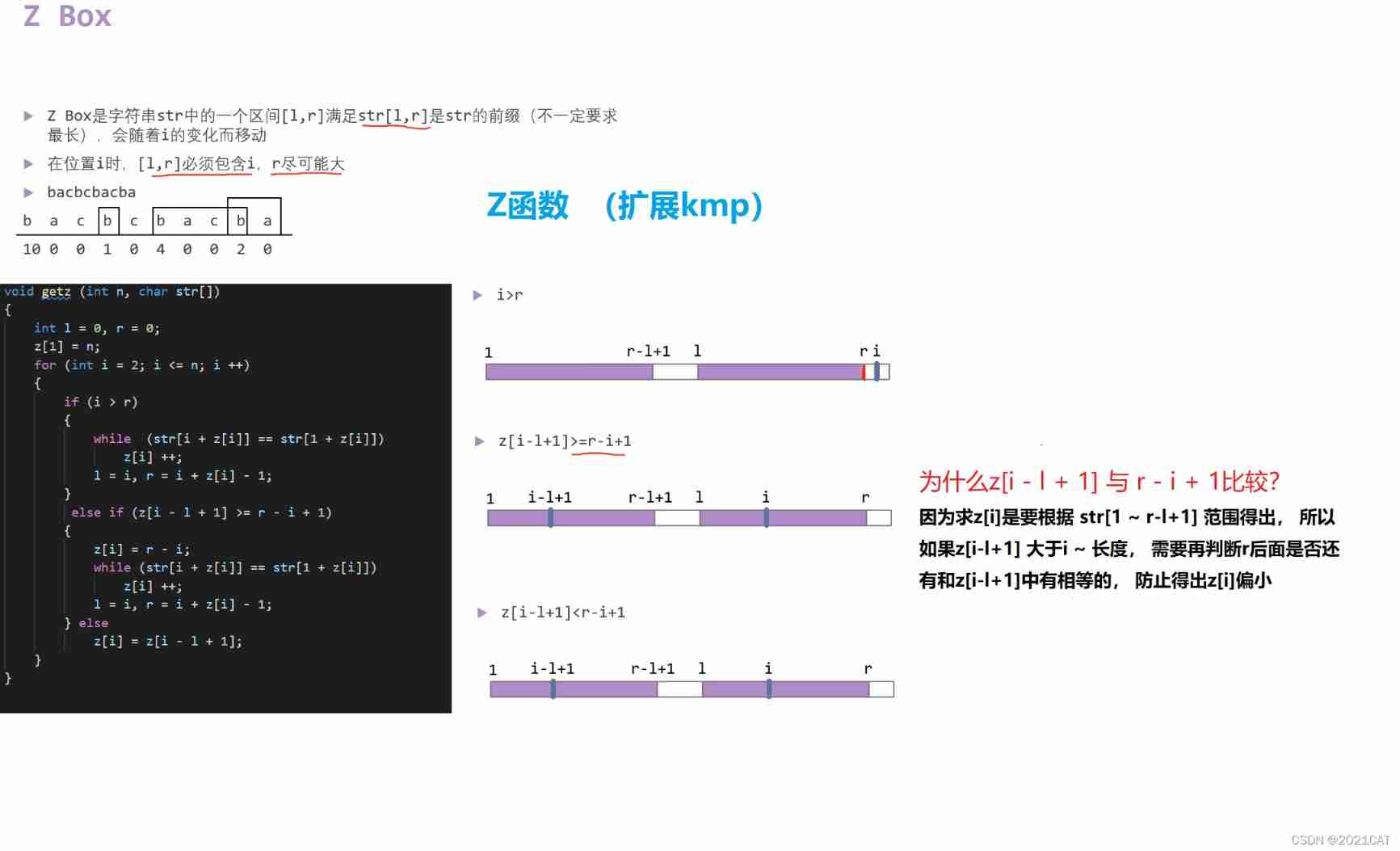

Z function (extended KMP)

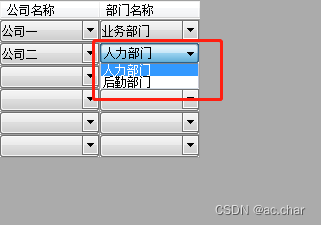

C# 如何在dataGridView里设置两个列comboboxcolumn绑定级联事件的一个二级联动效果

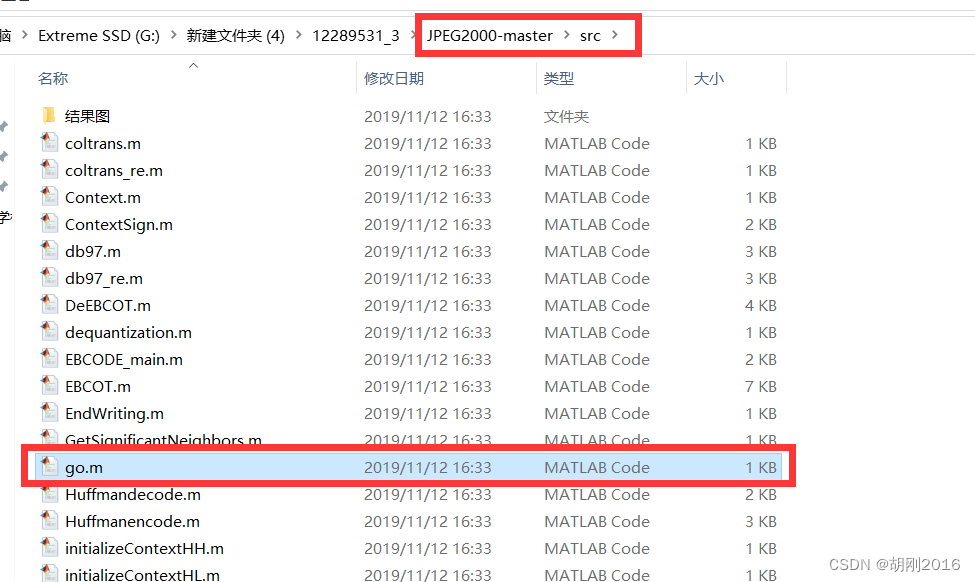

JPEG2000 matlab source code implementation

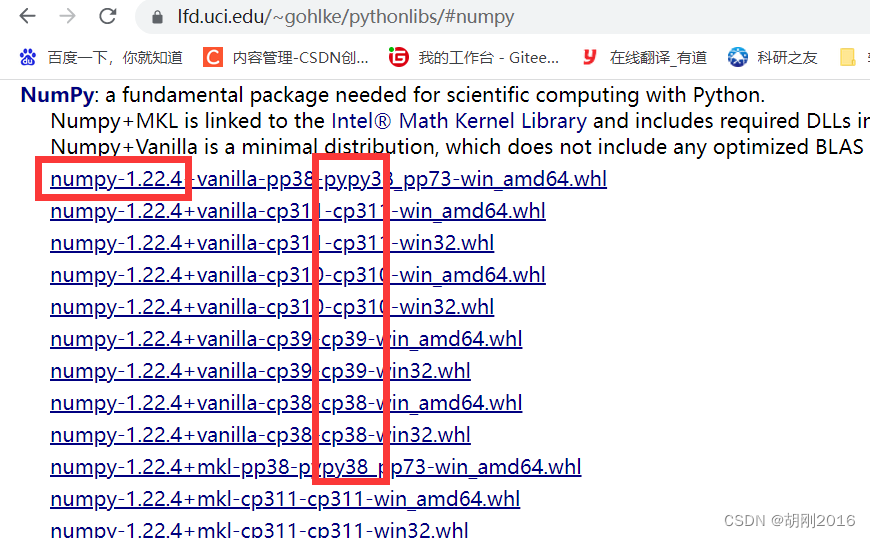

Numpy download and installation

随机推荐

【滑动窗口】第九届蓝桥杯省赛B组:日志统计

Technology sharing | packet capturing analysis TCP protocol

After working for 5 years, this experience is left when you reach P7. You have helped your friends get 10 offers

js之遍历数组、字符串

Search map website [quadratic] [for search map, search fan, search book]

SQL:存储过程和触发器~笔记

The underlying implementation of string

JS according to the Chinese Alphabet (province) or according to the English alphabet - Za sort &az sort

Numpy download and installation

[Digital IC manual tearing code] Verilog automatic beverage machine | topic | principle | design | simulation

It's not my boast. You haven't used this fairy idea plug-in!

【力扣刷题】一维动态规划记录(53零钱兑换、300最长递增子序列、53最大子数组和)

FZU 1686 龙之谜 重复覆盖

This year, Jianzhi Tencent

guava: Multiset的使用

[interpretation of the paper] machine learning technology for Cataract Classification / classification

Five wars of Chinese Baijiu

Efficiency tool +wps check box shows the solution to the sun problem

Torch Cookbook

Michael smashed the minority milk sign