当前位置:网站首页>UCORE lab7 synchronous mutual exclusion experiment report

UCORE lab7 synchronous mutual exclusion experiment report

2022-07-06 15:06:00 【Wuhu hanjinlun】

The experiment purpose

- Understand the design and implementation of synchronous mutual exclusion of operating system ;

- Understand the underlying support technology : Disable interrupt 、 Timer 、 Waiting in line ;

- stay ucore Understand semaphores (semaphore) Specific implementation of the mechanism ;

- Understand the management mechanism , stay ucore Add tube based in the kernel (monitor) The conditional variable of (condition variable) Support for ;

- Understand the classic process synchronization problem , And can use the synchronization mechanism to solve the problem of process synchronization .

Experimental content

Experiment 6 completed the scheduling framework and specific scheduling algorithm of user processes , Multiple processes can be scheduled to run . If multiple processes need to work together or access shared resources , Then there is the problem of how to synchronize and orderly compete . This experiment , Mainly familiar ucore Process synchronization mechanism — Semaphore (semaphore) Mechanism , And the solution to the dining problem of philosophers based on semaphores . Then master the concept and principle of tube pass , And refer to the semaphore mechanism , Realize the conditional variable mechanism based on management and solve the dining problem of philosophers based on conditional variables .

In this experiment , stay kern/sync/check_sync.c Provides a semaphore based solution to the dining problem of philosophers . At the same time, you need to complete the exercise , That is to say, it is based on the pipe process ( It mainly uses conditional variables and mutually exclusive semaphores flexibly ) The solution to the dining problem of philosophers . The philosopher's dining problem is described as follows : There are five philosophers , Their lifestyle alternates between thinking and eating . Philosophers share a round table , There are five chairs around , Take a seat each . There are five bowls and five chopsticks on the round table , When a philosopher thinks , He doesn't talk to others , When I'm hungry, I try to use the left side 、 Right is closest to his chopsticks , But he may not get any . Only when he gets two chopsticks , Before eating , After dinner , Put down your chopsticks and continue to think .

practice

practice 0: Fill in the existing experiment

This experiment relies on experiments 1/2/3/4/5/6. Please take your experiment 1/2/3/4/5/6 Fill in the code in this experiment. There are “LAB1”/“LAB2”/“LAB3”/“LAB4”/“LAB5”/“LAB6” The corresponding part of the notes . And ensure that the compilation passes . Be careful : In order to execute correctly lab7 Test application for , It may be necessary to test the completed experiments 1/2/3/4/5/6 Code for further improvements .

Use meld The software will lab6 and lab7 After comparing the files , The following files need to be copied :

- proc.c

- default_pmm.c

- pmm.c

- swap_fifo.c

- vmm.c

- trap.c

- sched.c

Other documents can be used directly without modification

practice 1: Understand the implementation of kernel level semaphores and the dining problem of philosophers based on kernel level semaphores ( No coding required )

Finish the exercise 0 after , I suggest you compare ( You can use meld Wait for the documents diff Comparison software ) Completed by individuals lab6 And practice 0 Just revised after completion lab7 The difference between , Analysis and understanding lab7 Implementation process using semaphores . perform make grade, Most test cases should pass .

Please give the design description of kernel level semaphore in the experimental report , And explain the general implementation process .

Please give the user status process in the experiment report / Thread provides the design scheme of semaphore mechanism , It also compares and explains the similarities and differences of semaphore providing mechanisms at the kernel level .

The dining problem of philosophers based on kernel semaphores

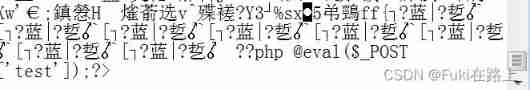

The dining problem of philosophers is given in the experimental content , Let's analyze the code of this problem , Mainly in the check_sync.c Realization

The main function of this problem is as follows , The content is that every philosopher picks up a fork , Eat , Then put down the fork , Go on indefinitely :

int state_sema[N]; /* An array that records everyone's status */

/* A semaphore is a special integer variable */

semaphore_t mutex; /* Critical regions are mutually exclusive */

semaphore_t s[N]; /* Every philosopher has a semaphore */

struct proc_struct *philosopher_proc_sema[N];

int philosopher_using_semaphore(void * arg) /* i: Philosopher number , from 0 To N-1 */

{

int i, iter=0;

i=(int)arg;

cprintf("I am No.%d philosopher_sema\n",i);

while(iter++<TIMES)// Simulate many experiments

{

/* Infinite loop */

cprintf("Iter %d, No.%d philosopher_sema is thinking\n",iter,i); /* Philosophers are thinking */

do_sleep(SLEEP_TIME);

phi_take_forks_sema(i);

/* You need two forks , Or blocked */

cprintf("Iter %d, No.%d philosopher_sema is eating\n",iter,i); /* eating */

do_sleep(SLEEP_TIME);

phi_put_forks_sema(i);

/* Put both forks back on the table at the same time */

}

cprintf("No.%d philosopher_sema quit\n",i);

return 0;

}

Be careful : Pick up / When you put down the fork , Due to the need to modify the status of the current philosopher , At the same time, the status is Global shared variables , So you need to acquire locks to prevent conditional competition .

You can see two important functions phi_take_forks_sema and phi_put_forks_sema

void phi_take_forks_sema(int i) /* i: Philosophers number from 0 To N-1 */

{

down(&mutex); /* Enter the critical area */

state_sema[i]=HUNGRY; /* Record the philosophers i The fact of hunger */

phi_test_sema(i); /* Trying to get two forks */

up(&mutex); /* Get out of the critical area */

down(&s[i]); /* If you don't get a fork, it will block */

}

void phi_put_forks_sema(int i) /* i: Philosophers number from 0 To N-1 */

{

down(&mutex); /* Enter the critical area */

state_sema[i]=THINKING; /* The philosopher's meal is over */

phi_test_sema(LEFT); /* Let's see if the left neighbor can have dinner now */

phi_test_sema(RIGHT); /* Let's see if the right neighbor can have dinner now */

up(&mutex); /* Get out of the critical area */

}

When putting the fork back on the table , If the two philosophers on the left and right of the current philosopher are in Starvation , That is, when you are ready to eat but do not have a knife and fork , If the conditions are met , Then awaken these two philosophers and let them continue to eat .

phi_test_sema The function is used to set the philosopher's eating state . If the current philosopher meets the eating conditions , Then update the philosopher status , Execute the corresponding of philosopher lock V operation , To wake up the thread corresponding to the philosopher waiting for the fork .

void phi_test_sema(i) /* i: Philosophers number from 0 To N-1 */

{

if(state_sema[i]==HUNGRY&&state_sema[LEFT]!=EATING

&&state_sema[RIGHT]!=EATING)

{

state_sema[i]=EATING;

up(&s[i]);

}

}

When trying to get chopsticks , The input parameter of the function is i, That is, the number of philosophers , here , He did it for himself HUNGRY, And try to check whether the two next to you are eating . If you are not eating , Then you can get EATING The state of .

Design description and general execution flow of kernel level semaphore

The core of semaphore can be roughly represented by the following short code :

struct semaphore {

int count;

queueType queue;

};

void semWait(semaphore s)

{

s.count--;

if (s.count < 0) {

/* place this process in s.queue */;

/* block this process */;

}

}

void semSignal(semaphore s)

{

s.count++;

if (s.count<= 0) {

/* remove a process P from s.queue */;

/* place process P on ready list */;

}

}

When more than one (>1) When processes can mutually exclusive or cooperate synchronously , A process will stop at a certain position because it cannot meet a certain condition set by the semaphore , Until it receives a specific The signal ( Indicates that the conditions are met ). To signal , You need to use a called Semaphore The special variable . Is the passing semaphore s Transmitting signals , Semaphore V The operation adopts the process executable primitive semSignal(s); Is the passing semaphore s Received signal , Semaphore P The operation adopts the process executable primitive semWait(s); If the corresponding signal is still not sent , Then the process is Obstruction or sleep , Until it is sent .

Let's analyze how to implement kernel semaphores :

Structure definition of semaphore as follows :

typedef struct {

int value; // Current value of semaphore

wait_queue_t wait_queue; // The waiting queue corresponding to the semaphore

} semaphore_t;

Waiting in line wait_queue Defined in another structure :

// For waiting queue , Storing the thread currently waiting PCB And wake-up reason and wait queue and wait queue flag for restoring structure

typedef struct {

struct proc_struct *proc; // Wait for the pointer of the process

uint32_t wakeup_flags; // The reason why the process was put into the waiting queue is marked

wait_queue_t *wait_queue; // Point to this wait The structure belongs to wait_queue

list_entry_t wait_link; // To organize wait_queue in wait Connection of nodes

} wait_t;

In the semaphore structure value The meaning is as follows :

- value>0, Express Number of free shared resources

- vlaue<0, That represents the semaphore The number of processes in the waiting queue

- value=0, Express The wait queue is empty

Semaphore initialization .

take value Set to the entered value , take wq Initialize to an empty linked list .

void sem_init(semaphore_t *sem, int value)

{

// take value Set to a specific value

sem->value = value;

// Will wait for the queue to initialize

wait_queue_init(&(sem->wait_queue));

}

stay phi_take_forks_sema and phi_put_forks_sema Two core functions are called in :up and down, Through these two functions , You can enter or leave the critical zone .

_down Function will decrement the current semaphore value value . If value Before decreasing, it is 0, Then add it to the waiting queue wait_queue in , And make the current thread immediately abandon CPU resources , Schedule to other threads . Notice the atomic operation . The source code of this function is as follows :

// Indicates that a resource corresponding to this semaphore is requested

static __noinline uint32_t __down(semaphore_t *sem, uint32_t wait_state) {

bool intr_flag;

local_intr_save(intr_flag);

// Query integer variables to see if there are redundant allocable resources ,

// If there are redundant allocable resources , Take out resources ( Integer variable minus 1), Then the current process can proceed normally ;

if (sem->value > 0) {

sem->value --;

local_intr_restore(intr_flag);

return 0;

}

wait_t __wait, *wait = &__wait;

wait_current_set(&(sem->wait_queue), wait, wait_state);

local_intr_restore(intr_flag);

// If there are no available resources , Integer variables are not positive , The resource requirements of the current process cannot be met ,

// So change its status to SLEEPING state , Then hang it to the waiting queue of the corresponding semaphore ,

wait_t __wait, *wait = &__wait;

wait_current_set(&(sem->wait_queue), wait, wait_state);

local_intr_restore(intr_flag);

// call schedule Function to yield CPU

schedule();

local_intr_save(intr_flag);

// When resources are met , After being awakened again , Delete itself from the waiting queue ;

wait_current_del(&(sem->wait_queue), wait);

local_intr_restore(intr_flag);

if (wait->wakeup_flags != wait_state) {

return wait->wakeup_flags;

}

return 0;

}

_up The function is a little simpler : If there is no waiting thread value++, Otherwise wake up the first waiting thread .

// It means releasing a resource corresponding to the semaphore ,

static __noinline void __up(semaphore_t *sem, uint32_t wait_state)

{

bool intr_flag;

local_intr_save(intr_flag);

{

wait_t *wait;

Query whether the waiting queue is empty , If it's empty , Add 1;

if ((wait = wait_queue_first(&(sem->wait_queue))) == NULL) {

sem->value ++;

}

// If the waiting queue is not empty , Take out one of the processes to wake up ;

else

{

assert(wait->proc->wait_state == wait_state);

wakeup_wait(&(sem->wait_queue), wait, wait_state, 1);

}

}

local_intr_restore(intr_flag);

}

Question answering

- Please give the user status process in the experiment report / Thread provides the design scheme of semaphore mechanism , It also compares and explains the similarities and differences of semaphore providing mechanisms at the kernel level .

User mode process / The data structure of thread semaphore is the same as that of kernel state . User state process / The semaphore related operations of threads are completed through system calls . Whenever a user process calls a semaphore correlation function , Will enter the system call , Processed by the kernel , Then return to user mode to continue execution . Compared with the semaphore mechanism provided for the kernel , User state process / Threads need to execute privileged instructions such as interrupt operations , You need to enter the kernel state through system calls and use the kernel semaphore mechanism .

Therefore, we want to complete the semaphore mechanism design in the user state , In fact, we only need to complete the design of kernel semaphore mechanism , Add some system calls , Include :

- Apply for a system call to create a semaphore

- A semaphore is P operation

- A semaphore is V operation

- Release the specified semaphore

The same thing : The code implementation logic that provides the semaphore mechanism is the same

Difference :

- Kernel semaphore mechanism can directly call kernel services , User mode services need to access kernel mode services through the interface provided by the kernel , This involves the relevant mechanism of user state to kernel state .

- Kernel semaphores are stored in the kernel stack ; But the semaphore of user state is stored in the user stack .

practice 2: Complete the kernel level conditional variables and the philosophers' dining problem based on kernel level conditional variables ( Need to code )

First, master the management mechanism , Then, based on the semaphore implementation, complete the conditional variable implementation , Then the solution to the dining problem of philosophers is realized by using the management mechanism ( Based on conditional variables ).

perform :make grade . If the displayed application tests all output ok, Is basically correct . If it's just a program that can't pass , such as matrix.c, Can be carried out

make run-matrix

Command to debug it separately . See the appendix for the general implementation results .

Please give the design description of kernel level condition variables in the experimental report , And explain the general implementation process .

Please give the user status process in the experiment report / Thread provides the design scheme of conditional variable mechanism , It also compares and explains the similarities and differences of the mechanism of providing conditional variables to the kernel level .

Please answer in the experiment report : Whether the conditional variable can be completed without semaphore mechanism ? If not , Please give reasons , If you can , Please give the design description and specific implementation .

Tube side

Check out the lab instructions , It can be seen that a management process defines a data structure and can be executed by concurrent processes ( In this data structure ) A set of operations , This set of operations can synchronize the process and change the data in the pipe

The tube side consists of four parts :

- Shared variables inside the tube ;

- The condition variables inside the tube side ;

- Processes executed concurrently inside the management process ;

- A statement that sets the initial value of shared data locally within the pipe process .

The tube side is equivalent to an isolation zone , It encloses shared variables and several processes that operate on them , When all processes want to access critical resources , All have to go through the tube to get into , And only one process is allowed to enter the process at a time , Therefore, it is necessary to ensure that processes are mutually exclusive .

But it is not enough to have mutual exclusion operation in the pipe . The process may need to wait for a condition C If it is true, it can continue .

The so-called conditional variable , The waiting queue and sleep conditions are packaged together , A new synchronization mechanism is formed , It is called conditional variable . A conditional variable CV It can be understood as the waiting queue of a process , The process in the queue is waiting for a condition C Become true . Each conditional variable is associated with an assertion “ Assertion ” PC. When a process waits for a condition variable , This process does not count as occupying the pipe , Therefore, other processes can enter the pipe and execute , Change the state of the tube side , Notification condition variable CV Its associated assertion Pc True in current state .

Therefore, the two operations of conditional variables are as follows :

wait_cv: Called by a process , Wait for assertion Pc After being satisfied, the process can resume execution . When the process hangs on the condition variable and waits , It is not considered to occupy the pipe . If the conditions are not met , We need to wait .signal_cv: Called by a process , To point out the assertion Pc Now it's true , Thus, the waiting assertion can be awakened Pc The satisfied process continues . If the conditions can be met , So it can run .

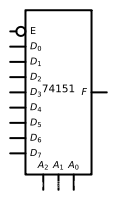

The tube side data structure is defined in monitor.h in :

typedef struct monitor{

semaphore_t mutex; // Binary semaphore Used to mutually exclusive access procedures

semaphore_t next; // For conditional synchronization Used to send out signal Enter sleep before the process of operation and other conditions are true

int next_count; // Record sleeping in signal Number of processes for operation

condvar_t *cv; // Condition variables,

} monitor_t;

Condition variables, cv The data structure of is defined in monitor.h in :

typedef struct condvar{

semaphore_t sem; // For conditional synchronization Used to send out wait The process of the operation waits for the condition to be true before entering sleep

int count; // Record sleeping in wait Number of processes for operation ( Wait for the condition variable to come true )

monitor_t * owner; // Pipe process

} condvar_t;

The realization of conditional variable mechanism is mainly cond_signal, cond_wait Of the two functions , Respectively, it means to remind the process waiting on this condition variable to resume execution , And wait on this condition variable , Until something else wakes it up

cond_signal: Wake up a thread waiting in the queue on the specified condition variable , And transfer control to this process . This operation realizes the mutex of accessing shared variables , The code is as follows :

void

cond_signal (condvar_t *cvp) {

//LAB7 EXERCISE1: YOUR CODE

cprintf("cond_signal begin: cvp %x, cvp->count %d, cvp->owner->next_count %d\n", cvp, cvp->count, cvp->owner->next_count);

// Judge whether there is a waiting process on the waiting queue of the current condition variable , If not, no operation is required ;

// If there is a process waiting

if(cvp->count>0)

{

// Wake up one of them

up(&(cvp->sem));

// Belonging to the pipeline next Count plus 1, Indicates that the current process will be blocked by the waiting

cvp->owner->next_count ++;

// Blocking , Wait for condition synchronization

down(&(cvp->owner->next));

// The current process is awakened , recovery next Waiting process count on

cvp->owner->next_count --;

}

cprintf("cond_signal end: cvp %x, cvp->count %d, cvp->owner->next_count %d\n", cvp, cvp->count, cvp->owner->next_count);

}

in general : perform cond_signal Function time , First, test the current process cv.count, If not greater than 0, It means that there is currently no implementation cond_wait And the process that goes into sleep , Function directly returns . If cv.count Greater than 0, This indicates that there is currently implementation cond_wait And the process that goes into sleep , Therefore, we need to wake up in cv.sem Waiting for the process to enter and exit the sleep state . Because only one process is allowed to execute in the pipe , So once the current process wakes up other processes , It will go into sleep , That is, increase monitor.next_count, Let the current process in semaphore monitor.next Go to sleep on , Until awakened , Reduce monitor.next_count.

cond_wait: The function of this function is to wait for the current process on the specified semaphore , The operation process is to add the count of the waiting queue 1, Then release the lock of the tube side or wake up a next To release the lock , Then wait yourself in the waiting queue of condition variables , Until there is signal The signal wakes it up , Normal exit function ;

void

cond_wait (condvar_t *cvp) {

//LAB7 EXERCISE1: YOUR CODE

cprintf("cond_wait begin: cvp %x, cvp->count %d, cvp->owner->next_count %d\n", cvp, cvp->count, cvp->owner->next_count);

cvp->count ++; // Modify the process count waiting on the waiting queue of the condition variable

// When the tube side next_count Greater than 0, It means that there is a process sleeping signal Operationally, we wake it up

if (cvp->owner->next_count > 0)

{

// Release the lock

up(&cvp->owner->next);

}

else // Currently, no process is sleeping signal Operands Just release the mutex

{

up(&cvp->owner->mutex);

}

// Block yourself , Wait for the condition of the condition variable to be true , After being awakened, reduce the count of sleep processes when the condition is not established 1

down(&cvp->sem); // Wait for yourself on the condition variable

cvp->count --; // Awakened , Fix the process count on the waiting queue

cprintf("cond_wait end: cvp %x, cvp->count %d, cvp->owner->next_count %d\n", cvp, cvp->count, cvp->owner->next_count);

}

therefore , If a process executes cond_wait function , It indicates that the process needs to enter the sleep state because a required condition variable does not meet the demand . The number of dormant processes waiting for this condition variable cv.count increase . If at this time monitor.next_count Greater than 0, Means at least 1 Process execution cond_signal Function and enter sleep state , wait for monitor.next Semaphore time , You need to wake up another process waiting for the condition quantity , Then the process is cv.sem Sleep on . When a process A When awakened , Reduce cv.count, Indicates that the number of sleep processes waiting for this condition variable has been reduced by one , Can continue . If monitor.next_count Less than or equal to 0, Indicates that there is currently no process execution cond_signal Function goes to sleep , What needs to be awakened is the process that cannot enter the pipe due to mutual exclusion conditions , Wake up in monitor.mutex The process of sleep on . Then the current process is cv.sem Sleep on , Until awakened , Reduce cv.count, Indicates that the number of sleep processes waiting for this condition decreases , Can continue .

The realization of philosophers' dining problem based on conditional variables and management

The problem of realizing philosophers mainly lies in check_sync.c in

So here we create 5 Thread Representation 5 Philosophers , Philosophers try 4 Think twice -> Take a fork -> having dinner -> Put down the fork .

Specific analysis and implementation : Mainly phi_take_forks_condvar Function and phi_put_forks_condvar function

phi_take_forks_condvar The function means that the specified philosopher tries to get two forks for the meal he needs , If not, block , The specific implementation process is :

- Lock the tube , Change the status of philosophers to HUNGER;

- Judge whether the neighboring philosophers are eating ;

- If you can eat , Change your status to EATING, Then release the lock , Just leave the tube ;

- If you can't eat , Wait on your corresponding condition variable , Wait for the neighboring philosophers to awaken themselves when they release resources ;

void phi_take_forks_condvar(int i) {

// adopt P The operation enters the critical zone

down(&(mtp->mutex));

// Record the philosophers i Whether you are hungry , That is, in a waiting state, take a fork

state_condvar[i]=HUNGRY;

phi_test_condvar(i);

while (state_condvar[i] != EATING)

{

cprintf("phi_take_forks_condvar: %d didn't get fork and will wait\n",i);

cond_wait(&mtp->cv[i]);// If you can't get a fork, sleep

}

// If there is a process of sleep, then wake it up

if(mtp->next_count>0)

up(&(mtp->next));

else

up(&(mtp->mutex));

}

phi_put_forks_condvar The function means to release the fork occupied by the current philosopher , And wake up neighboring philosophers who wait because they don't have resources :

- First, get the lock of the tube , Change your status to THINKING;

- Check whether the neighboring philosophers meet the eating conditions after releasing the occupation of the fork , If meet , Wake it up from waiting

- Release the lock , Leave the tube

void phi_put_forks_condvar(int i) {

// adopt P The operation enters the critical zone

down(&(mtp->mutex));

// Record the status at the end of the meal

state_condvar[i]=THINKING;

// See if the philosopher on the left can eat now

phi_test_condvar(LEFT);

// See if the philosopher on the right can eat now

phi_test_condvar(RIGHT);

// If there are philosophers sleeping, wake them up

if(mtp->next_count>0)

up(&(mtp->next));

else

up(&(mtp->mutex));

}

Because every philosopher can only occupy all the necessary resources or not occupy resources at all , Therefore, there will be no partial occupation of resources , Thus, deadlock is avoided ; Ultimately, all philosophy will succeed

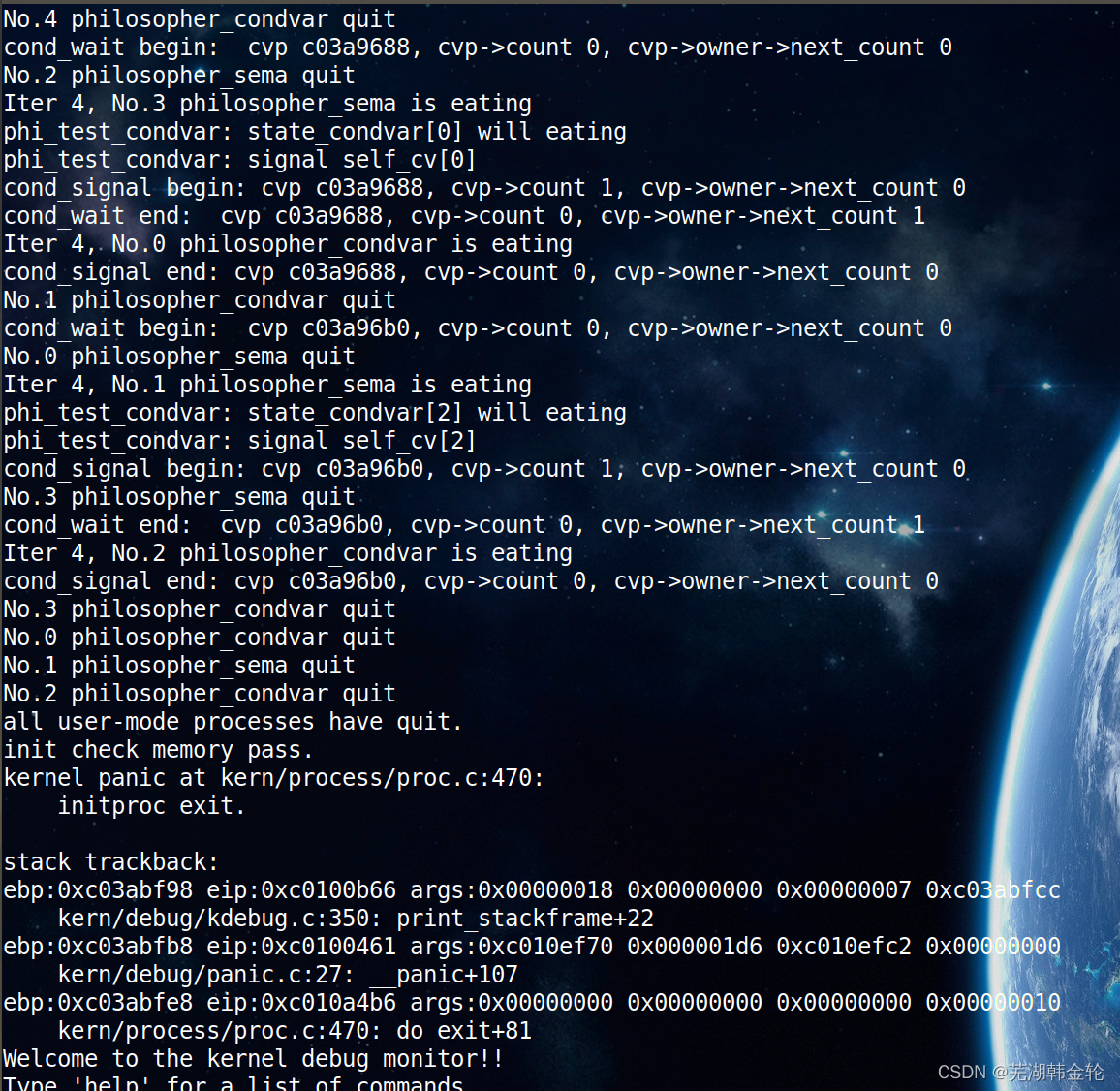

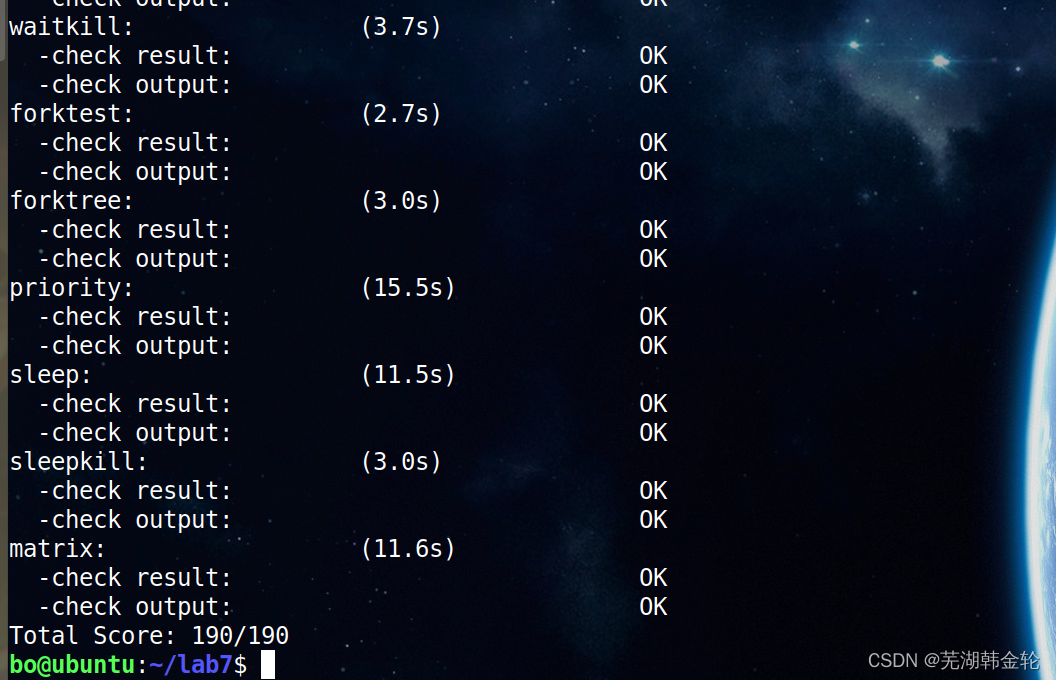

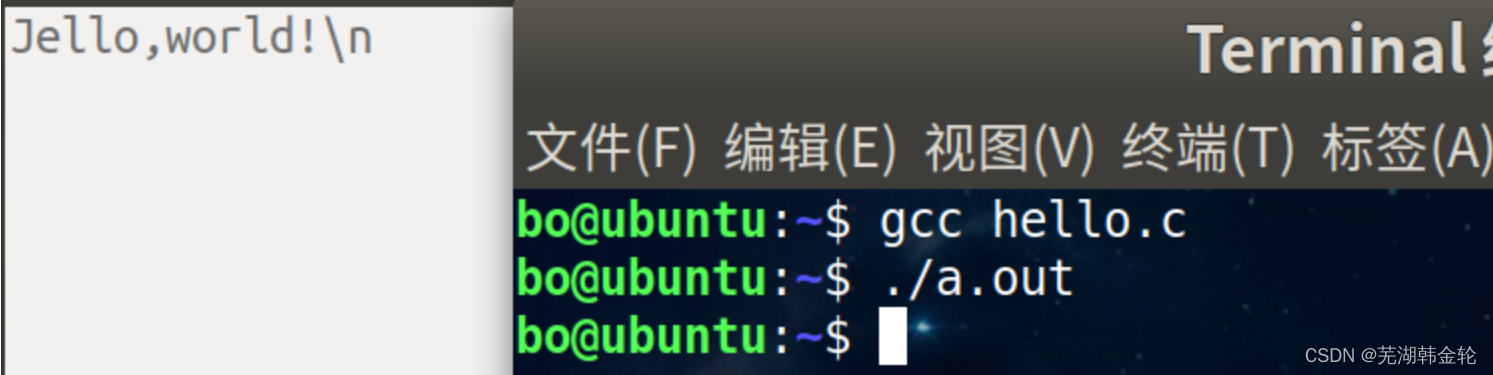

function make qemu View the run results :

function make grade Check your grades :

Extended exercises Challenge : stay ucore Implements simplified deadlock and reentry detection mechanisms

stay ucore Implement a detection mechanism under , Be able to work in multiple processes / When a thread runs a synchronous mutual exclusion problem , It is necessary to dynamically judge whether the current system has deadlock , Whether multiple processes enter the critical zone . If you find that , Let the system enter monitor state , Print out your detection information .

Extended exercises Challenge : Reference resources Linux Of RCU Mechanism , stay ucore Implementation of simplified RCU Mechanism

stay ucore Next realize Linux Of RCU Synchronous mutual exclusion mechanism . Can read related Linux Kernel books or query online materials , Can understand RCU Design and implementation details of , Then simplify the implementation in ucore in . An experimental report is required to explain your design idea , And provide test cases . Here are some references :

- http://www.ibm.com/developerworks/cn/linux/l-rcu/

- http://www.diybl.com/course/6_system/linux/Linuxjs/20081117/151814.html

RCU The full name is (Read-Copy-Update), Intended to read and write - Copy - to update , stay Linux All the kernel mutually exclusive facilities provided belong to a lock free mechanism . Read write spin locks discussed earlier (rwlock)、 Sequential lock (seqlock) equally ,RCU The applicable model of is also a read-write coexistence system .

- Read write spin lock : Readers and writers are mutually exclusive , Readers and readers coexist , The writer and the writer are mutually exclusive .( Favor readers )

- Sequential lock : The writer and the writer are mutually exclusive , The writer directly interrupts the reader ( Prefer the writer )

RCU Different from them , Its read and write operations do not need to consider the mutual exclusion between the two .

In the previous lock analysis , You can know , Lock 、 Unlocking all involves memory operations , At the same time, memory barrier is introduced , All of these will cause the system overhead of lock operation to become larger , On top of that , Kernel in Kernel Of 2.5 Version introduced RCU Lock free and mutually exclusive access mechanism . therefore RCU It's actually an improved rwlock, Readers have little synchronization overhead , It doesn't need a lock , Not using atomic instructions . No locks also make it easier to use , Because the deadlock problem does not need to be considered . The writer's synchronization overhead is relatively large , It needs to delay the release of data structures , Copy the modified data structure , It must also use some kind of locking mechanism to synchronize the modification operations of other writers in parallel . The reader must provide a signal to the writer so that the writer can determine when the data can be safely released or modified . There is a special garbage collector to detect the reader's signal , Once all the readers have signaled that they are not in use RCU Protected data structures , The garbage collector calls the callback function to complete the final data release or modification operation .

in general ,RCU Behavior of :

- You can get the read lock at any time , That is, the read operation of the critical area can be met at any time

- Only one person can get the write lock at a time , Multiple write locks need to be mutually exclusive , The actions of writing include Copy – modify – Delete the original value after the grace window expires

- The original value of the critical zone is m1, If someone gets the write lock and changes the critical area to m2, Then the value of the critical area obtained by the read lock after the write lock modifies the critical area is m2, Previously obtained is m1, This is guaranteed by atomic operation

Compared with RCU The read operation will be satisfied at any time , However, the write operation after the write lock consumes relatively more system resources , And only delete the original resource after the grace period .

Target :

RCU The protected object is the pointer . This is especially important . Because pointer assignment is a single instruction . That is to say, it is an atomic operation . Because it changes the pointer, there is no need to consider its synchronization . Just think about it cache Influence .

All about in the kernel RCU All operations should be provided by the kernel RCU Of APIs Function completion , these APIs It mainly focuses on the operation of pointers and linked lists .

Realization principle :

stay RCU During the implementation of , We mainly solve the following problems :

- In the process of reading , Another thread deleted a node . Delete thread can remove this node from the linked list , But it cannot destroy this node directly , You must wait until all the reading threads have finished reading , Before destruction .RCU This process is called grace period (Grace period).

- In the process of reading , Another thread inserts a new node , And the reading thread reads this node , Then you need to ensure that the read node is complete . This involves publishing - Subscribe mechanism (Publish-Subscribe Mechanism).

- Ensure the integrity of reading the linked list . Add or delete a node , It will not cause traversal of a linked list to be disconnected from the middle . however RCU There is no guarantee that the newly added node or the node to be deleted will be read .

1. Grace period

void foo_read(void)

{

rcu_read_lock();

foo *fp = gbl_foo;

if ( fp != NULL )

dosomething(fp->a,fp->b,fp->c);

rcu_read_unlock();

}

void foo_update( foo* new_fp )

{

spin_lock(&foo_mutex);

foo *old_fp = gbl_foo;

gbl_foo = new_fp;

spin_unlock(&foo_mutex);

synchronize_rcu();

kfee(old_fp);

}

among foo_ read Added in rcu_read_lock and rcu_read_unlock, These two functions are used to mark a RCU The beginning and end of the reading process . Its function is to help detect whether the grace period is over .foo_update Added a function synchronize_rcu(), Calling this function means the beginning of a grace period , And until the grace period ends , This function will return . Let's compare the picture again , Threads 1 and 2, stay synchronize_rcu You may have got the old one before gbl_foo, That is to say foo_update Medium old_fp, If you don't wait for them to finish running , Just call kfee(old_fp), It is very likely to cause system crash . and 3,4,6 stay synchronize_rcu And then run , At this time, it is impossible for them to get old_fp, This time kfee Will not affect them .

2. subscribe —— Publishing mechanism

#define rcu_assign_pointer(p, v)

__rcu_assign_pointer((p), (v), __rcu)

#define __rcu_assign_pointer(p, v, space)

do {

smp_wmb();

(p) = (typeof(*v) __force space *)(v);

} while (0)

We can see that its implementation only adds optimization barrier before assignment smp_wmb To ensure the execution order of the code . In addition, it is used in macros __rcu, It is only used as a detection condition in the compilation process .

3、 Integrity of data reading

To solve the problem of read integrity , We call a native interface for publishing semantics ,rcu_assign_ pointer() :

#define rcu_assign_pointer(p, v)

{

uintptr_t _r_a_p__v = (uintptr_t)(v);

if (__builtin_constant_p(v) && (_r_a_p__v) == (uintptr_t)NULL) // Insert a memory barrier if necessary

{

WRITE_ONCE((p), (typeof(p))(_r_a_p__v));

}

else {

// Turn off the compiler's non sequential compilation optimization when assigning values , Ensure that the assignment has been initialized .

smp_store_release(&p, RCU_INITIALIZER((typeof(p))_r_a_p__v));

}

}

This interface can ensure that from the compiler and CPU On the level gp Before being assigned ,p The field pointed to can be assigned .

Summary of the experiment

Through this experiment, we have a deeper study and understanding of semaphores and conditional variables , Through the acceptance and the questions of the teaching assistants, we can grasp this part more firmly , In terms of code , Semaphores and conditional variables have little difference in the realization of philosophers' dining problems . I believe that the content and harvest of this experiment will be of great help to future learning . After that, there will be another experiment of the operating system course , I hope the experiment of the whole operating system can be successfully completed .

边栏推荐

- Statistics 8th Edition Jia Junping Chapter 2 after class exercises and answer summary

- 1. Payment system

- flask实现强制登陆

- Build your own application based on Google's open source tensorflow object detection API video object recognition system (I)

- [HCIA continuous update] working principle of static route and default route

- 数字电路基础(二)逻辑代数

- “Hello IC World”

- Function: find the root of the equation by Newton iterative method

- 指针:最大值、最小值和平均值

- Mysql的事务是什么?什么是脏读,什么是幻读?不可重复读?

猜你喜欢

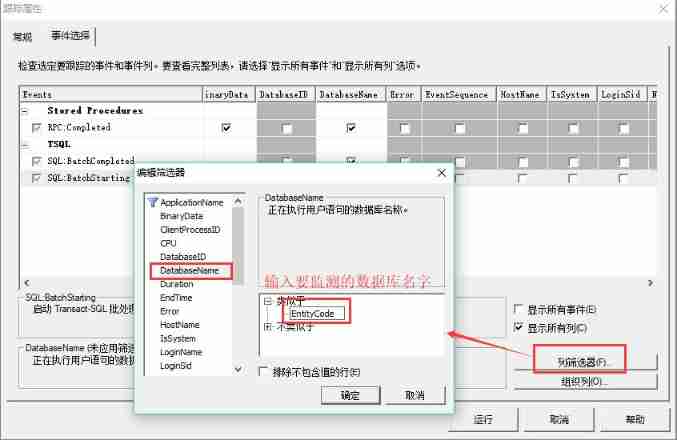

Database monitoring SQL execution

How to learn automated testing in 2022? This article tells you

DVWA exercise 05 file upload file upload

Investment operation steps

CSAPP家庭作業答案7 8 9章

Fundamentals of digital circuit (IV) data distributor, data selector and numerical comparator

The salary of testers is polarized. How to become an automated test with a monthly salary of 20K?

Es full text index

Statistics 8th Edition Jia Junping Chapter XIII Summary of knowledge points of time series analysis and prediction and answers to exercises after class

数字电路基础(一)数制与码制

随机推荐

China's county life record: go upstairs to the Internet, go downstairs' code the Great Wall '

What is an index in MySQL? What kinds of indexes are commonly used? Under what circumstances will the index fail?

函数:求两个正数的最大公约数和最小公倍

【指针】求二维数组中最大元素的值

【指针】统计一字符串在另一个字符串中出现的次数

“Hello IC World”

函数:求方程的根

Global and Chinese market of maleic acid modified rosin esters 2022-2028: Research Report on technology, participants, trends, market size and share

Don't you even look at such a detailed and comprehensive written software test question?

ucore lab7 同步互斥 实验报告

MySQL中什么是索引?常用的索引有哪些种类?索引在什么情况下会失效?

Quaternion -- basic concepts (Reprint)

“Hello IC World”

servlet中 servlet context与 session与 request三个对象的常用方法和存放数据的作用域。

[pointer] solve the last person left

数据库多表链接的查询方式

ByteDance ten years of experience, old bird, took more than half a year to sort out the software test interview questions

Function: string storage in reverse order

Public key box

Vysor uses WiFi wireless connection for screen projection_ Operate the mobile phone on the computer_ Wireless debugging -- uniapp native development 008