Author | Dhwani Mehta

Compiled by | Flin

Source | Medium

Image stylization is an image processing technique that has been studied for decades. This article presents the style-attentional network (SANet), an efficient and novel method that synthesizes high-quality stylized images by balancing global and local style patterns while preserving the content structure.

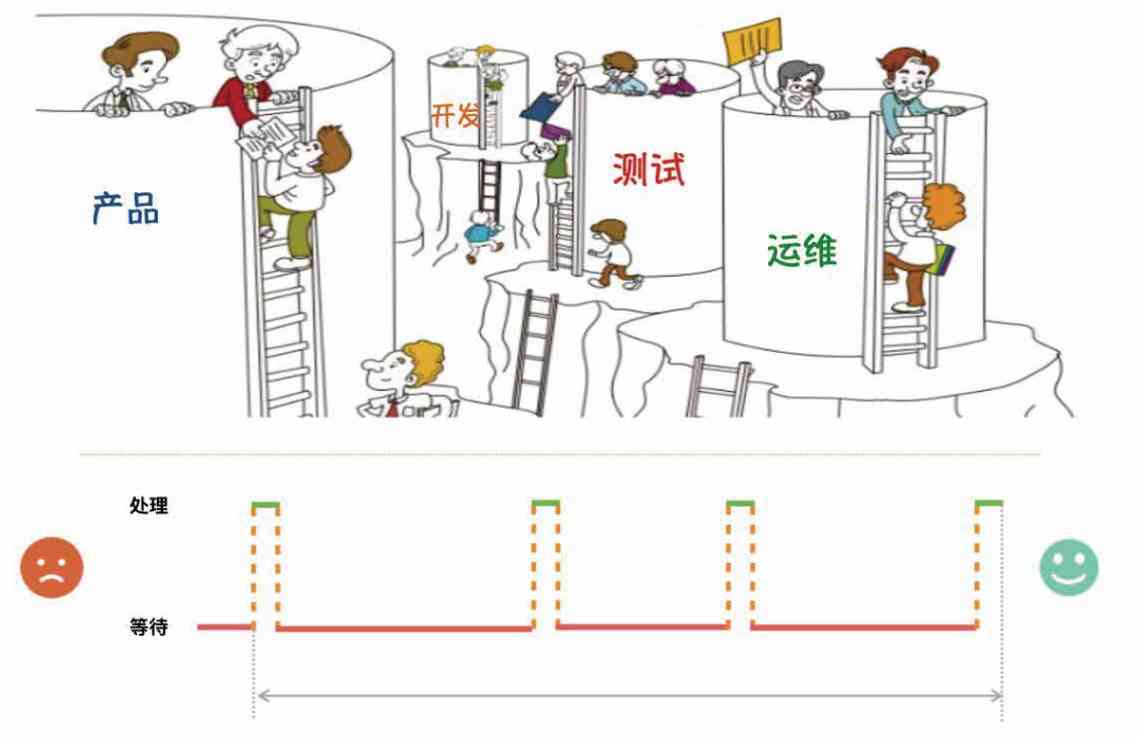

An overview of the style transfer mechanism

Have you ever imagined what a photo would look like if a great artist had painted it? Arbitrary style transfer makes this a reality by blending a content image (the target image) with a style image (the image whose textures, such as brush strokes, angular geometry, patterns, and color transitions, are to be painted onto the content image) to create a third image that has never been seen before.

The novel SANet style transfer method

The ultimate goal of arbitrary style transfer is to achieve generality while maintaining quality and efficiency.

SANet balances global and local style patterns and preserves the content structure for the following reasons:

- It uses a learned similarity kernel instead of a fixed kernel.
- It uses a soft attention-based network instead of hard attention for style decoration.
- It uses an identity loss during training to maintain the content structure without losing the richness of the style.

Building blocks of arbitrary style transfer with SANet

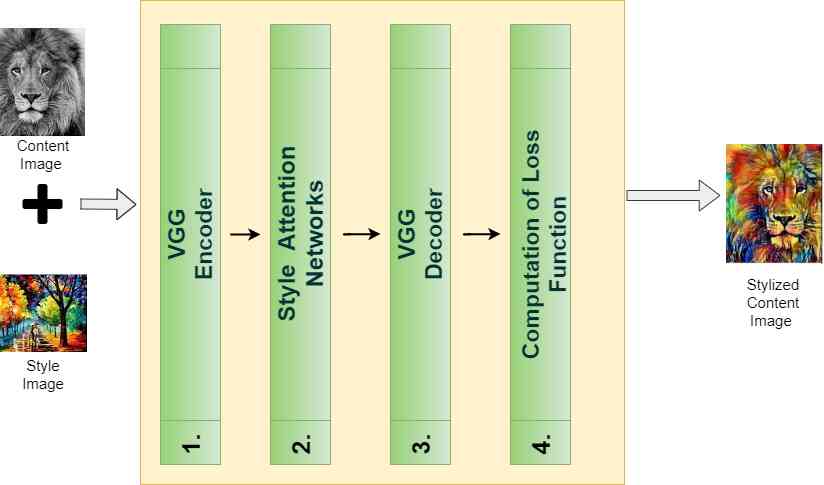

The whole mechanism of style transfer can be summarized as follows :

Let's step through the architecture and build up to a comprehensive overview.

The complete SANet framework

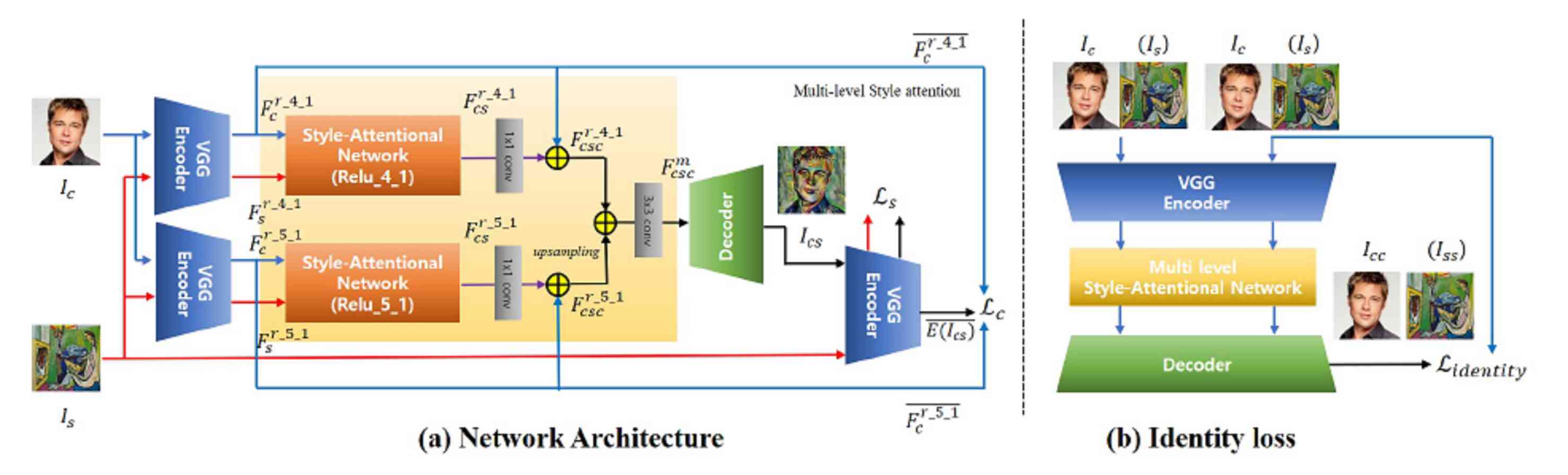

Let's break the architecture down into its parts to understand it better:

- Encoder-decoder module
- Style attention module
- Calculation of the loss function

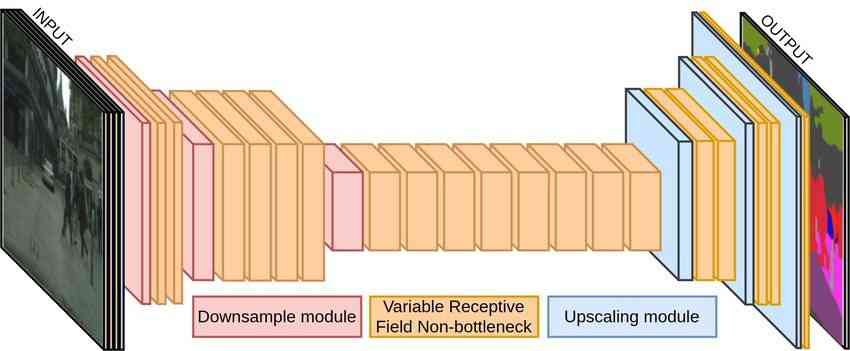

Encoder-decoder module

The most important step in solving the style transfer problem is the encoder-decoder mechanism. A pre-trained VGG-19 network encodes an image into a feature representation and passes it to the decoder, which attempts to reconstruct the original input image.

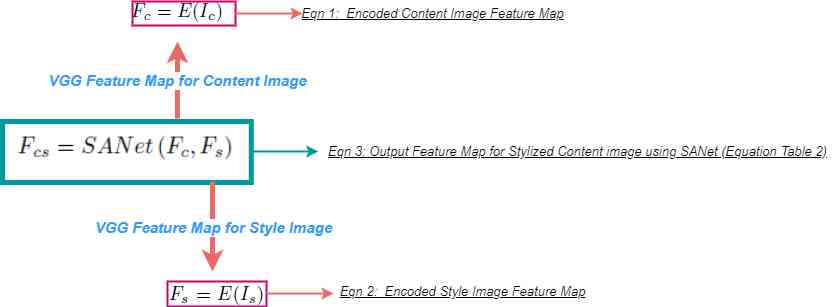

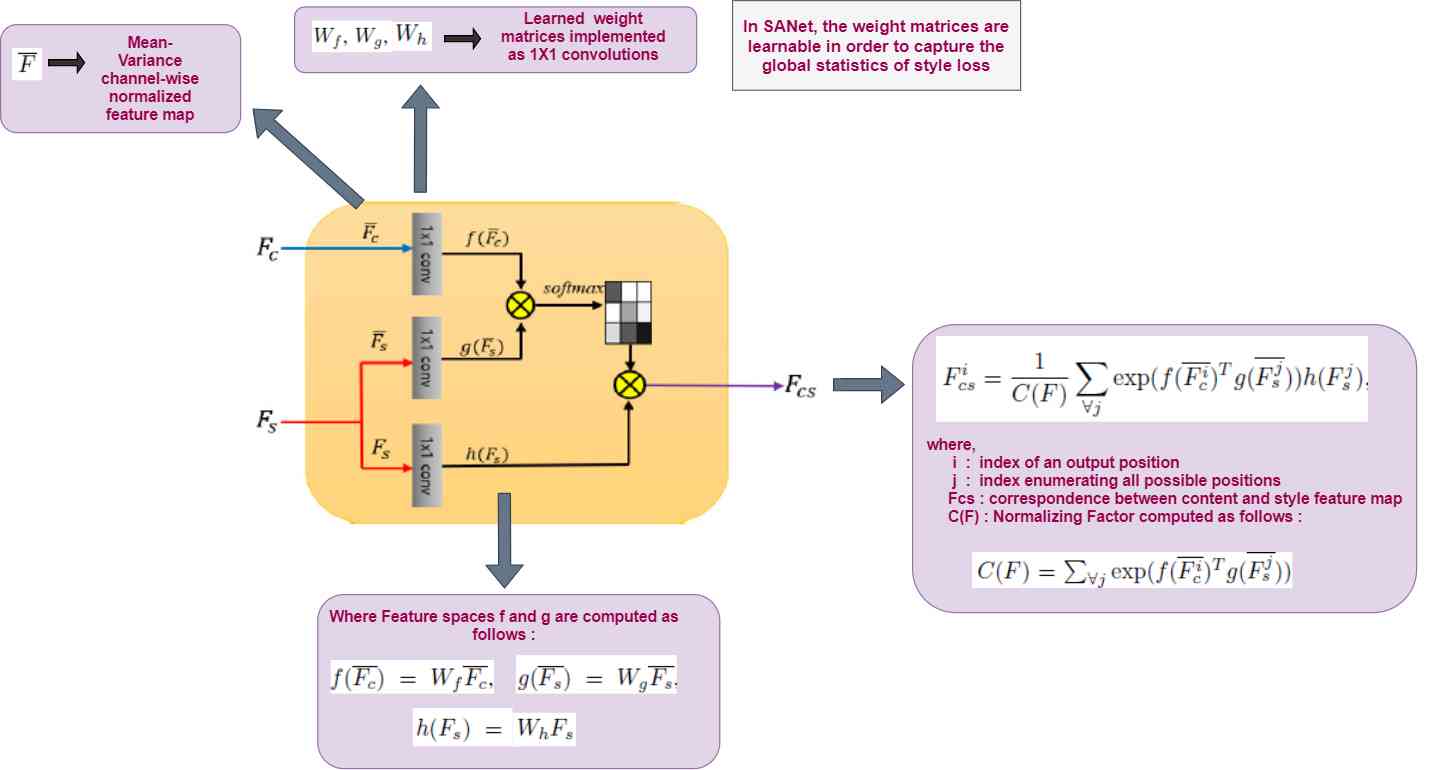

Style attention module

The SANet module takes the content and style feature maps from the VGG-19 encoder as inputs, normalizes them, and transforms them into a feature space in order to compute the attention between the content and style feature maps.
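The attention step described above can be sketched in NumPy. This is only a minimal illustration under stated assumptions, not the authors' implementation: feature maps are flattened to shape `(channels, positions)`, the matrices `w_f`, `w_g`, and `w_h` are random stand-ins for the learned transforms that map features into the attention feature space, and any output convolution or residual connection is left out. All function and variable names are hypothetical.

```python
import numpy as np

def mean_variance_normalize(feat, eps=1e-5):
    """Normalize each channel of a (C, N) feature map to zero mean and unit variance."""
    mean = feat.mean(axis=1, keepdims=True)
    std = feat.std(axis=1, keepdims=True)
    return (feat - mean) / (std + eps)

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def style_attention(content, style, w_f, w_g, w_h):
    """content: (C, Nc), style: (C, Ns), weights: (C, C). Returns (attended, attn)."""
    query = w_f @ mean_variance_normalize(content)  # (C, Nc), from normalized content
    key = w_g @ mean_variance_normalize(style)      # (C, Ns), from normalized style
    value = w_h @ style                             # (C, Ns), style is not normalized here
    attn = softmax(query.T @ key, axis=1)           # (Nc, Ns), soft attention, rows sum to 1
    attended = value @ attn.T                       # (C, Nc), style features per content position
    return attended, attn

# Toy inputs: random arrays stand in for VGG-19 feature maps (assumption).
rng = np.random.default_rng(0)
C, Nc, Ns = 8, 16, 20
content = rng.standard_normal((C, Nc))
style = rng.standard_normal((C, Ns))
w_f, w_g, w_h = (rng.standard_normal((C, C)) * 0.1 for _ in range(3))

attended, attn = style_attention(content, style, w_f, w_g, w_h)
print(attended.shape, attn.shape)  # (8, 16) (16, 20)
```

Because the attention weights come from a softmax rather than an argmax, every content position receives a weighted mixture of all style positions, which is what distinguishes soft attention from hard attention.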

Calculation of loss function

A pre-trained VGG-19 is used to compute the loss functions that train the decoder, as follows:

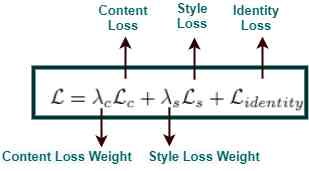

The complete loss formula

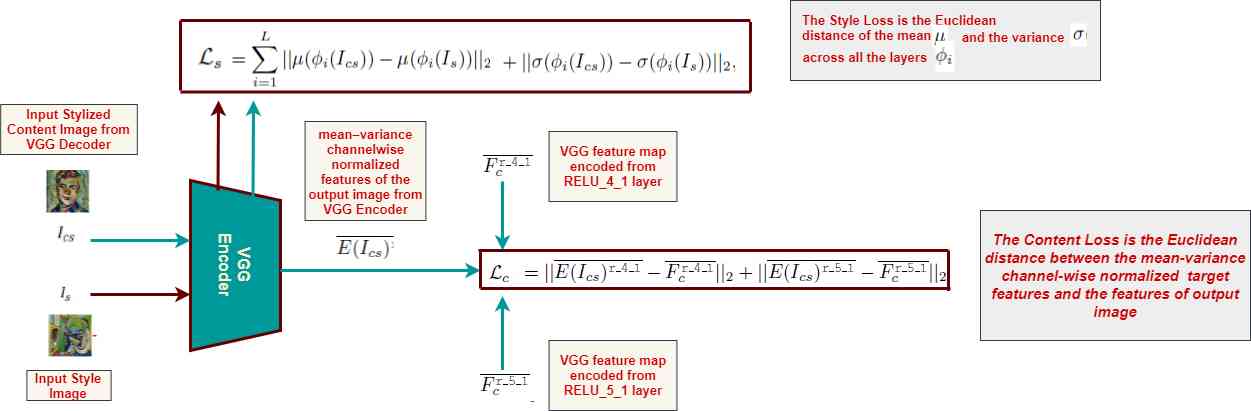

The idea behind the content and style loss computation:

An overview of the computation of the content and style loss components in SANet
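To make the two loss components more concrete, here is a rough NumPy sketch. It assumes a formulation that is common in feed-forward style transfer: the content loss is the distance between channel-normalized features of the output and the content image, and the style loss is the distance between the channel-wise means and standard deviations of the output and style features, summed over several encoder layers. The exact formulas are the ones in the figures above; the function names, the random stand-in features, and the weights `lambda_c` and `lambda_s` are illustrative assumptions.

```python
import numpy as np

def channel_stats(feat, eps=1e-5):
    """Per-channel mean and standard deviation of a (C, N) feature map."""
    mean = feat.mean(axis=1)
    std = np.sqrt(feat.var(axis=1) + eps)
    return mean, std

def normalize(feat, eps=1e-5):
    mean, std = channel_stats(feat, eps)
    return (feat - mean[:, None]) / std[:, None]

def content_loss(out_feat, content_feat):
    """Euclidean distance between channel-normalized feature maps."""
    return np.linalg.norm(normalize(out_feat) - normalize(content_feat))

def style_loss(out_feats, style_feats):
    """Sum over layers of the distances between channel-wise means and stds."""
    total = 0.0
    for out_f, sty_f in zip(out_feats, style_feats):
        mu_o, sd_o = channel_stats(out_f)
        mu_s, sd_s = channel_stats(sty_f)
        total += np.linalg.norm(mu_o - mu_s) + np.linalg.norm(sd_o - sd_s)
    return total

# Random arrays stand in for encoder features at three layers (assumption).
rng = np.random.default_rng(1)
shapes = [(4, 64), (8, 16), (16, 4)]
out_feats = [rng.standard_normal(s) for s in shapes]
style_feats = [rng.standard_normal(s) + 1.0 for s in shapes]
content_feat = rng.standard_normal(shapes[1])

lambda_c, lambda_s = 1.0, 1.0  # placeholder weights, not values from the article
l_c = content_loss(out_feats[1], content_feat)
l_s = style_loss(out_feats, style_feats)
print(lambda_c * l_c + lambda_s * l_s)
```

Both terms drop to zero when the output features match their targets, for example `content_loss(x, x)` and `style_loss(feats, feats)`.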

Calculation of characteristic loss

Loss of function due to novel features ,SANet Architecture can preserve the content structure and enrich the style patterns .

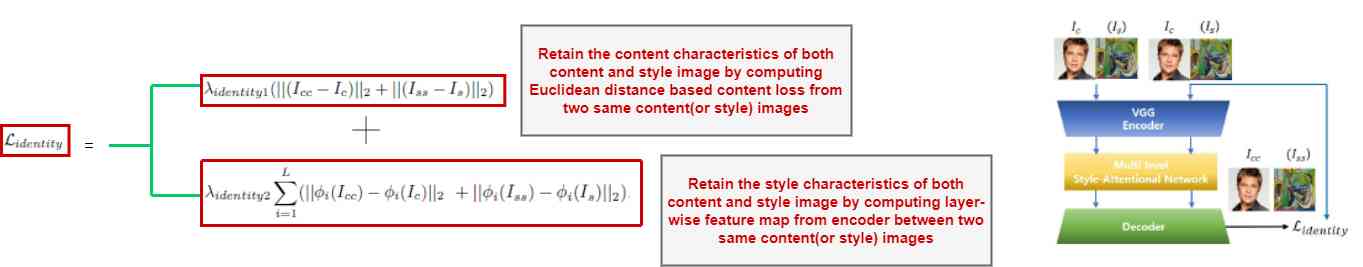

SANet An overview of the calculation of characteristic loss in

Calculate the loss of the same input image without any style blank , It makes the feature loss and realizes the maintenance of content structure and style features at the same time .
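The loss described above, computed on identical input pairs (called the identity loss in the SANet paper), can be sketched as follows. The idea is to feed the same image as both content and style and penalize any difference between the result and the input, in pixel space and in encoder feature space. Everything here is a hypothetical stand-in: `stylize` represents the whole encoder, attention, and decoder pipeline, `encoders` represents feature extractors at several layers, and the weights `w_pixel` and `w_feat` are placeholders.

```python
import numpy as np

def identity_loss(stylize, encoders, image_c, image_s, w_pixel=1.0, w_feat=1.0):
    """Penalize changes when an image is used as both its own content and style."""
    image_cc = stylize(image_c, image_c)  # content image stylized with itself
    image_ss = stylize(image_s, image_s)  # style image stylized with itself
    pixel_term = (np.linalg.norm(image_cc - image_c)
                  + np.linalg.norm(image_ss - image_s))
    feat_term = sum(
        np.linalg.norm(enc(image_cc) - enc(image_c))
        + np.linalg.norm(enc(image_ss) - enc(image_s))
        for enc in encoders
    )
    return w_pixel * pixel_term + w_feat * feat_term

# Toy stand-ins (assumptions): downsampling and row averaging act as "encoder layers".
encoders = [lambda x: x[::2, ::2], lambda x: x.mean(axis=0)]

rng = np.random.default_rng(2)
image_c = rng.random((8, 8))
image_s = rng.random((8, 8))

perfect = lambda c, s: c      # returns the content unchanged when content == style
lossy = lambda c, s: 0.9 * c  # distorts the input, so the loss is positive

print(identity_loss(perfect, encoders, image_c, image_s))  # 0.0
print(identity_loss(lossy, encoders, image_c, image_s) > 0)  # True
```

A network that reproduces its input when there is no style gap incurs zero loss, so minimizing this term pushes the model to keep the content structure intact rather than trading it away for style.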

Conclusion and results

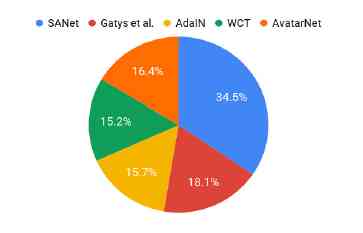

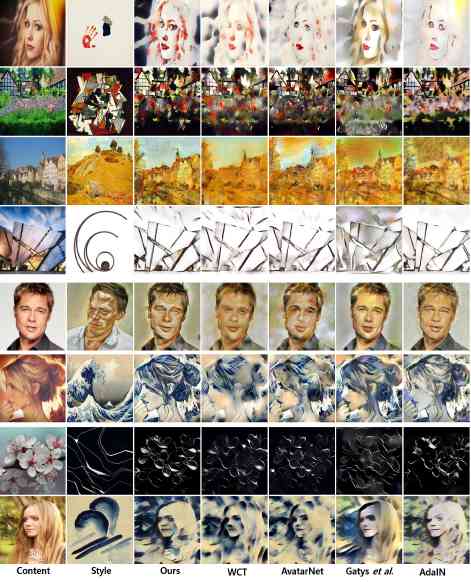

The experiments clearly show that style transfer with SANet captures diverse style characteristics, such as the global color distribution, textures, and local style patterns, while maintaining the content structure. SANet is also good at distinguishing the content structure and transferring the style that corresponds to each semantic content. It can therefore be inferred that SANet is effective not only at maintaining the content structure but also at retaining the structural characteristics of the style, and that it integrates style features readily, enriching both global and local style statistics.

References

[1] Park, Dae Young, and Kwang Hee Lee. "Arbitrary Style Transfer with Style-Attentional Networks." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2019.

[2] Gatys, Leon A., Alexander S. Ecker, and Matthias Bethge. "Image Style Transfer Using Convolutional Neural Networks." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2016.

[3] Huang, Xun, and Serge Belongie. "Arbitrary Style Transfer in Real-Time with Adaptive Instance Normalization." Proceedings of the IEEE International Conference on Computer Vision. 2017.

[4] Li, Yijun, et al. "Universal Style Transfer via Feature Transforms." Advances in Neural Information Processing Systems. 2017.

[5] Sheng, Lu, et al. "Avatar-Net: Multi-Scale Zero-Shot Style Transfer by Feature Decoration." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018.

Link to the original article: https://medium.com/visionwizard/insight-on-style-attentional-networks-for-arbitrary-style-transfer-ade42e551dce
