Step-by-Step Custom FacePose on YOLOv5: YOLO Structure Walkthrough, YOLO Data Format Conversion, and YOLO Pipeline Modifications

2022-08-05 00:51:00 【烧技湾】

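Overview: this post records how to bring a custom dataset and a custom detection task to YOLOv5. Starting from the data format used by the original project, we look at every detail and then redo the custom task in exactly the same format. The goal is to port a project to a new problem on our own.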

wandb: Visualizing the Training Process

tensorboard: Start with 'tensorboard --logdir runs/train', view at http://localhost:6006/

hyperparameters: lr0=0.01, lrf=0.2, momentum=0.937, weight_decay=0.0005, warmup_epochs=3.0, warmup_momentum=0.8, warmup_bias_lr=0.1, box=0.05, kpt=0.1, cls=0.5, cls_pw=1.0, obj=1.0, obj_pw=1.0, iou_t=0.2, anchor_t=4.0, fl_gamma=0.0, hsv_h=0.015, hsv_s=0.7, hsv_v=0.4, degrees=0.0, translate=0.1, scale=0.5, shear=0.0, perspective=0.0, flipud=0.0, fliplr=0.5, mosaic=1.0, mixup=0.0

loggers['wandb'] = wandb_logger.wandb # in train.py: Weights & Biases visualization; requires creating an account

wandb: (1) Create a W&B account

wandb: (2) Use an existing W&B account

wandb: (3) Don't visualize my results

wandb: Enter your choice: 1

wandb: You chose 'Create a W&B account'

wandb: Create an account here: https://wandb.ai/authorize?signup=true

wandb: Paste an API key from your profile and hit enter, or press ctrl+c to quit:

You need to register on the wandb website (signing up through a GitHub account is enough) and obtain an API key.

Model Parsing

This section covers the anchor settings and the output of the detection head.

def parse_model(d, ch): # model_dict, input_channels(3)

logger.info('\n%3s%18s%3s%10s %-40s%-30s' % ('', 'from', 'n', 'params', 'module', 'arguments'))

anchors, nc, nkpt, gd, gw = d['anchors'], d['nc'], d['nkpt'], d['depth_multiple'], d['width_multiple']

# number of anchors; here anchors = [[19, 27, 44, 40, 38, 94], [96, 68, 86, 152, 180, 137], [140, 301, 303, 264, 238, 542], [436, 615, 739, 380, 925, 792]]

na = (len(anchors[0]) // 2) if isinstance(anchors, list) else anchors # number of anchors na = 3

# keypoint extension from the paper: 3×(1+5+2×17)=3×40

no = na * (nc + 5 + 2*nkpt) # number of outputs = anchors * (classes + 5 + 2 * keypoints)
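To make the head-size arithmetic concrete, here is a minimal sketch that evaluates the same `no` formula for the 17-keypoint COCO setup and for a 68-landmark face setup. The helper name `head_outputs` is hypothetical, and using nc=1 for the face case is an assumption carried over from the person case.

```python
def head_outputs(na: int, nc: int, nkpt: int) -> int:
    """Same formula as in parse_model: na * (nc + 5 + 2 * nkpt)."""
    return na * (nc + 5 + 2 * nkpt)


# Anchor list quoted in the comment above: one row per detection scale,
# each row holding (width, height) pairs.
anchors = [
    [19, 27, 44, 40, 38, 94],
    [96, 68, 86, 152, 180, 137],
    [140, 301, 303, 264, 238, 542],
    [436, 615, 739, 380, 925, 792],
]
na = len(anchors[0]) // 2  # anchors per scale

coco_no = head_outputs(na, nc=1, nkpt=17)  # person keypoints
face_no = head_outputs(na, nc=1, nkpt=68)  # assumption: one "face" class
print(na, coco_no, face_no)
```

Moving from 17 to 68 keypoints therefore changes the per-scale output width of the head, which is why `nkpt` has to be updated in the model config.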

Relationship Between Optimizer Parameters and Batch Size

# Optimizer

nbs = 64 # nominal batch size

accumulate = max(round(nbs / total_batch_size), 1) # accumulate loss before optimizing

# no need to retune weight decay by hand when the batch size changes: gradients are accumulated before each optimizer step

hyp['weight_decay'] *= total_batch_size * accumulate / nbs # scale weight_decay

logger.info(f"Scaled weight_decay = {hyp['weight_decay']}")
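The effect of this scaling is easiest to see with numbers. The sketch below repeats the two formulas from the snippet above in a standalone function (the name `scale_weight_decay` is hypothetical) and evaluates them for a few batch sizes, using the `weight_decay=0.0005` value from the hyperparameter log.

```python
def scale_weight_decay(weight_decay: float, total_batch_size: int, nbs: int = 64):
    """Return (accumulate, scaled_weight_decay) using the formulas quoted above."""
    accumulate = max(round(nbs / total_batch_size), 1)
    scaled = weight_decay * total_batch_size * accumulate / nbs
    return accumulate, scaled


for bs in (8, 16, 48, 64, 128):
    acc, wd = scale_weight_decay(0.0005, bs)
    print(f"batch={bs:3d}  accumulate={acc}  weight_decay={wd:.6f}")
```

For batch sizes that divide 64 evenly, `total_batch_size * accumulate` equals the nominal batch size, so the weight decay stays at its configured value; it only changes when the effective batch differs from 64.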

Image Augmentation

# class LoadImagesAndLabels(Dataset): # for training/testing

...

# mosaic augmentation

self.mosaic = self.augment and not self.rect # load 4 images at a time into a mosaic (only during training)

self.mosaic_border = [-img_size // 2, -img_size // 2]

self.stride = stride

self.path = path

self.kpt_label = kpt_label

# keypoint-specific change: index map that swaps left/right keypoints when the image is flipped horizontally

self.flip_index = [0, 2, 1, 4, 3, 6, 5, 8, 7, 10, 9, 12, 11, 14, 13, 16, 15]
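As an illustration of what `flip_index` is for, the following is a minimal sketch of a horizontal flip on normalized keypoints: mirror each x coordinate, then reorder so that left and right body parts trade places. The function name `flip_keypoints_lr` is hypothetical, and leaving unlabeled points (v = 0) at zero is an assumption, not a quote from the project code.

```python
FLIP_INDEX = [0, 2, 1, 4, 3, 6, 5, 8, 7, 10, 9, 12, 11, 14, 13, 16, 15]


def flip_keypoints_lr(kpts, flip_index=FLIP_INDEX):
    """Flip (x, y, v) keypoints left-right; x and y are normalized to [0, 1]."""
    # Mirror x for labeled points; keep unlabeled (v == 0) points untouched.
    mirrored = [(1.0 - x if v > 0 else x, y, v) for x, y, v in kpts]
    # Reorder so that e.g. the slot for "left eye" now holds the old "right eye".
    return [mirrored[i] for i in flip_index]


# Toy input: 17 points spread along x, with point 3 marked as unlabeled.
demo_kpts = [(i / 20, 0.5, 2) for i in range(17)]
demo_kpts[3] = (0.0, 0.0, 0)
flipped = flip_keypoints_lr(demo_kpts)
print(flipped[:5])
```

A 68-landmark face model needs its own index map of length 68, since the COCO map above only covers 17 body keypoints.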

Converting Between COCO and YOLO Formats

Original COCO Format

${POSE_ROOT}
|-- data
`-- |-- coco
    `-- |-- annotations
        |   |-- person_keypoints_train2017.json
        |   `-- person_keypoints_val2017.json
        |-- person_detection_results
        |   |-- COCO_val2017_detections_AP_H_56_person.json
        `-- images
            |-- train2017
            |   |-- 000000000009.jpg
            |   |-- 000000000025.jpg
            |   |-- 000000000030.jpg
            |   |-- ...
            `-- val2017
                |-- 000000000139.jpg
                |-- 000000000285.jpg
                |-- 000000000632.jpg
                |-- ...

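In other words, all keypoint annotations live in the JSON files. Let's take one sample and look at its JSON data.

The image entry in the JSON holds the file name, width, height, id, and similar metadata: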

{

"license": 4,

"file_name": "000000252219.jpg",

"coco_url": "http://images.cocodataset.org/val2017/000000252219.jpg",

"height": 428,"width": 640,

"date_captured": "2013-11-14 22:32:02",

"flickr_url": "http://farm4.staticflickr.com/3446/3232237447_13d84bd0a1_z.jpg",

"id": 252219

}

The image is shown below:

Its manual annotation looks like this:

{

"num_keypoints": 17,

"area": 8511.1568, "iscrowd": 0,

"keypoints": [356,198,2,358,193,2,351,194,2,364,192,2,346,194,2,375,207,2,341,211,2,388,236,2,336,238,2,392,263,2,

343,242,2,373,271,2,347,272,2,372,316,2,348,318,2,372,353,2,355,354,2],

"image_id": 252219,

"bbox": [326.28,174.56,71.24,197.25],

"category_id": 1,"id": 481918

}

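As we can see, the keypoint annotation in COCO is a flat list of 3×17 = 51 values, one triple [x, y, v] per keypoint defined for the category. The num_keypoints field counts only the labeled keypoints (v > 0), which is why the next annotation reports 15. Here v is the visibility flag:

- v=0: not visible and not labeled; in this case x=y=0;

- v=1: labeled but not visible;

- v=2: labeled and visible.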

{

"num_keypoints": 15,

"area": 8349.28485,"iscrowd": 0,

"keypoints": [100,190,2,0,0,0,96,185,2,0,0,0,86,188,2,84,208,2,71,208,2,84,245,2,59,240,2,115,263,2,66,271,2,

64,268,2,71,264,2,59,324,2,99,322,2,18,363,2,101,377,2],

"image_id": 252219,

"bbox": [9.79,167.06,121.94,226.45],

"category_id": 1,

"id": 489768

}
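To check the triple layout against this second annotation, here is a small sketch that splits the flat `keypoints` list into (x, y, v) triples and counts the labeled ones. The helper name `split_keypoints` is hypothetical; the numbers are copied from the annotation above.

```python
def split_keypoints(flat):
    """Split a flat COCO keypoints list into (x, y, v) triples."""
    if len(flat) % 3 != 0:
        raise ValueError("keypoints length must be a multiple of 3")
    return [tuple(flat[i:i + 3]) for i in range(0, len(flat), 3)]


keypoints = [100, 190, 2, 0, 0, 0, 96, 185, 2, 0, 0, 0, 86, 188, 2, 84, 208, 2,
             71, 208, 2, 84, 245, 2, 59, 240, 2, 115, 263, 2, 66, 271, 2,
             64, 268, 2, 71, 264, 2, 59, 324, 2, 99, 322, 2, 18, 363, 2,
             101, 377, 2]

triples = split_keypoints(keypoints)
labeled = sum(1 for _, _, v in triples if v > 0)
print(len(triples), labeled)  # 17 triples, 15 of them labeled
```

The two (0, 0, 0) triples are the unlabeled keypoints, which is consistent with `"num_keypoints": 15` in the annotation.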

The bounding box uses the "xywh" format: top-left corner coordinates plus width and height.

YOLO Format

${POSE_ROOT}
|-- data
`-- |-- coco
    `-- |-- annotations
        |   |-- person_keypoints_train2017.json
        |   `-- person_keypoints_val2017.json
        |-- person_detection_results
        |   |-- COCO_val2017_detections_AP_H_56_person.json
        `-- images
        |   |-- train2017
        |   |   |-- 000000000009.jpg
        |   |   |-- 000000000025.jpg
        |   |   |-- ...
        |   `-- val2017
        |       |-- 000000000139.jpg
        |       |-- 000000000285.jpg
        |       |-- ...
        `-- labels
        |   |-- train2017
        |   |   |-- 000000000009.txt
        |   |   |-- 000000000025.txt  # keypoint annotations for this image, in YOLO format
        |   |   |-- ...
        |   `-- val2017
        |       |-- 000000000139.txt
        |       |-- 000000000285.txt  # keypoint annotations for this image, in YOLO format
        |       |-- ...
        `-- train2017.txt  # contents: relative path + image file name
        `-- val2017.txt    # contents: relative path + image file name

Here again we use "image_id": 252219 and list its YOLO-format labels:

0 0.565469 0.638283 0.111312 0.460864 0.556250 0.462617 2.000000 0.559375 0.450935 2.000000 0.548438 0.453271 2.000000 0.568750

0.448598 2.000000 0.540625 0.453271 2.000000 0.585938 0.483645 2.000000 0.532813 0.492991 2.000000 0.606250 0.551402 2.000000

0.525000 0.556075 2.000000 0.612500 0.614486 2.000000 0.535937 0.565421 2.000000 0.582812 0.633178 2.000000 0.542188 0.635514

2.000000 0.581250 0.738318 2.000000 0.543750 0.742991 2.000000 0.581250 0.824766 2.000000 0.554688 0.827103 2.000000

0 0.110562 0.654871 0.190531 0.529089 0.156250 0.443925 2.000000 0.000000 0.000000 0.000000 0.150000 0.432243 2.000000 0.000000

0.000000 0.000000 0.134375 0.439252 2.000000 0.131250 0.485981 2.000000 0.110937 0.485981 2.000000 0.131250 0.572430 2.000000

0.092188 0.560748 2.000000 0.179688 0.614486 2.000000 0.103125 0.633178 2.000000 0.100000 0.626168 2.000000 0.110937 0.616822

2.000000 0.092188 0.757009 2.000000 0.154688 0.752336 2.000000 0.028125 0.848131 2.000000 0.157812 0.880841 2.000000

0 0.894172 0.652220 0.193219 0.504112 0.837500 0.448598 1.000000 0.840625 0.439252 2.000000 0.000000 0.000000 0.000000 0.862500

0.443925 2.000000 0.000000 0.000000 0.000000 0.887500 0.483645 2.000000 0.867188 0.485981 2.000000 0.873437 0.567757 2.000000

0.865625 0.574766 2.000000 0.846875 0.630841 2.000000 0.859375 0.647196 2.000000 0.895312 0.640187 2.000000 0.873437 0.640187

2.000000 0.920312 0.754673 2.000000 0.845313 0.752336 2.000000 0.964063 0.852804 2.000000 0.828125 0.843458 2.000000

The conversion from COCO JSON to YOLO format follows the JSON2YOLO reference; its core logic is:

img = images['%g' % x['image_id']]

h, w, f = img['height'], img['width'], img['file_name']

# The COCO box format is [top left x, top left y, width, height]

box = np.array(x['bbox'], dtype=np.float64)

box[:2] += box[2:] / 2 # xy top-left corner to center

box[[0, 2]] /= w # normalize x

box[[1, 3]] /= h # normalize y

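This shows that the YOLO format is center-based and normalized: the top-left XYWH box has to be converted to $C_x C_y W H$ (note that every coordinate is then normalized by the image width or height). Starting from the original COCO annotation shown earlier, let's check whether we arrive at the YOLO label: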

"height": 428,"width": 640,

"num_keypoints": 17,

"area": 8511.1568, "iscrowd": 0,

"keypoints": [356,198,2,358,193,2,351,194,2,364,192,2,346,194,2,375,207,2,341,211,2,388,236,2,336,238,2,392,263,2,

343,242,2,373,271,2,347,272,2,372,316,2,348,318,2,372,353,2,355,354,2],

"image_id": 252219,

"bbox": [326.28,174.56,71.24,197.25],

Applying the algorithm above gives:

bbox: (326.28+71.24/2)/640=0.5655, (174.56+197.25/2)/428=0.6383, 71.24/640=0.1113, 197.25/428=0.4609

keypoints[0]: 356/640=0.55625, 198/428=0.4626

This agrees with the converted YOLO-format result:

0 0.565469 0.638283 0.111312 0.460864 0.556250 0.462617 2.000000
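The same check can be run end to end in code. The sketch below is a simplified stand-in for the conversion, not the JSON2YOLO implementation itself: the function name `coco_to_yolo_line` and the fixed class index 0 are assumptions, while the annotation values are the ones quoted above.

```python
def coco_to_yolo_line(ann, img_w, img_h, cls=0):
    """Build one YOLO keypoint label line from a COCO person annotation."""
    x, y, w, h = ann["bbox"]  # COCO: top-left x, top-left y, width, height
    values = [(x + w / 2) / img_w, (y + h / 2) / img_h, w / img_w, h / img_h]
    kpts = ann["keypoints"]
    for i in range(0, len(kpts), 3):
        kx, ky, v = kpts[i:i + 3]
        values += [kx / img_w, ky / img_h, float(v)]  # normalize x, y; keep v
    return f"{cls} " + " ".join(f"{val:.6f}" for val in values)


annotation = {
    "bbox": [326.28, 174.56, 71.24, 197.25],
    "keypoints": [356, 198, 2, 358, 193, 2, 351, 194, 2, 364, 192, 2,
                  346, 194, 2, 375, 207, 2, 341, 211, 2, 388, 236, 2,
                  336, 238, 2, 392, 263, 2, 343, 242, 2, 373, 271, 2,
                  347, 272, 2, 372, 316, 2, 348, 318, 2, 372, 353, 2,
                  355, 354, 2],
}
yolo_line = coco_to_yolo_line(annotation, img_w=640, img_h=428)
print(yolo_line)
```

Each person in an image becomes one such line in the corresponding labels/*.txt file: 1 class index, 4 box values, and 17×3 keypoint values.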

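Converting 300-W to YOLO Format

300-W is a popular face dataset annotated with 68 facial landmarks. Its faces come from several source datasets such as afw and ibug. The file layout is as follows: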

-- data
   |-- data_300W
       |-- afw
       |-- helen
       |-- ibug
       |-- lfpw

|-- data
`-- |-- data_300W
    `-- |-- annotations
        |   |-- afw
        |   |-- helen
        |   |-- ibug
        |   |-- lfpw
        `-- images
        |   |-- train2017
        |   |   |-- 000000000009.jpg
        |   |   |-- 000000000025.jpg
        |   |   |-- ...
        |   `-- val2017
        |       |-- 000000000139.jpg
        |       |-- 000000000285.jpg
        |       |-- ...
        `-- labels
        |   |-- train2017
        |   |   |-- 000000000009.txt
        |   |   |-- 000000000025.txt  # keypoint annotations for this image, in YOLO format
        |   |   |-- ...
        |   `-- val2017
        |       |-- 000000000139.txt
        |       |-- 000000000285.txt  # keypoint annotations for this image, in YOLO format
        |       |-- ...
        `-- train2017.txt  # contents: relative path + image file name
        `-- val2017.txt    # contents: relative path + image file name

300-W Format

Take data_300W/afw/1051618982_1.jpg as an example.

The 68 facial landmarks for this image are stored in a *.pts file, which looks like this when opened:

version: 1

n_points: 68

{

482.866335 268.009351

484.241455 298.524244

487.963820 329.985842

491.613829 359.446370

503.992490 387.443021

523.666182 409.551102

543.708366 429.090358

566.283098 442.751692

……

591.348649 385.406662

580.068281 384.385348

563.609110 379.281936

552.917511 366.852392

580.508062 371.198816

592.309498 371.492218

604.011866 371.855814

634.952400 369.536292

604.011866 371.855814

592.309498 371.492218

580.508062 371.198816

}

There are 68 coordinate pairs $(x_i, y_i)$ in total; the middle pairs are omitted here for brevity. The coco2yolo format, by contrast, looks like this:

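0    xywh    (x, y)
|     |        |
|     |        `-- keypoint coordinates normalized by image width and height (in the COCO sample above each pair is followed by its visibility value)
|     `-- normalized bounding box: center coordinates xy plus box width and height wh
`-- class index (0 for the single class here; this is not the iscrowd flag)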

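Converting the 300-W Format to YOLO Format

In other words, the 68 facial landmarks above have to be converted into the coco2yolo format. Here we follow PIPNet's preprocessing script and convert the 300W folder into a fully COCO-like layout, including the directory structure. The point is to keep code changes inside YOLO to a minimum.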
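For orientation, here is a minimal sketch of such a conversion for a single .pts file. It is not PIPNet's preprocessing: the function names are hypothetical, the bounding box is simply taken as the tight extent of the landmarks (an assumption), and every landmark is written with visibility 2 (also an assumption, since .pts files carry no visibility flag). The demo input is truncated to the first three points of the file shown earlier, and the 1000×800 image size is a placeholder.

```python
def parse_pts(text):
    """Return the (x, y) pairs listed between the braces of a .pts file."""
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    start, end = lines.index("{") + 1, lines.index("}")
    points = [tuple(float(tok) for tok in ln.split()) for ln in lines[start:end]]
    header = next(ln for ln in lines if ln.startswith("n_points"))
    if int(header.split(":")[1]) != len(points):
        raise ValueError("n_points header does not match the number of rows")
    return points


def landmarks_to_yolo_line(points, img_w, img_h, cls=0):
    """Build one YOLO keypoint label line from face landmarks."""
    xs, ys = [p[0] for p in points], [p[1] for p in points]
    # Assumption: the face box is the tight extent of the landmarks.
    bw, bh = max(xs) - min(xs), max(ys) - min(ys)
    cx, cy = (min(xs) + max(xs)) / 2, (min(ys) + max(ys)) / 2
    values = [cx / img_w, cy / img_h, bw / img_w, bh / img_h]
    for x, y in points:
        values += [x / img_w, y / img_h, 2.0]  # assumption: all points visible
    return f"{cls} " + " ".join(f"{v:.6f}" for v in values)


demo_text = """version: 1
n_points: 3
{
482.866335 268.009351
484.241455 298.524244
487.963820 329.985842
}"""
demo_pts = parse_pts(demo_text)
demo_line = landmarks_to_yolo_line(demo_pts, img_w=1000, img_h=800)  # placeholder size
print(demo_line)
```

In practice the image size has to be read from the image itself, and a looser face box (for example, the landmark extent enlarged by a margin) may work better for detection.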

With that, the format conversion is complete.

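Project Modifications (Pitfall Log)

YOLO needs quite a few changes, mainly in the following places:

- dataset loading;
- detection head modification;
- the launch file: update the relevant settings;
- data/coco_kepts.yaml: update the dataset paths;
- the model config under models/hub/cfg, e.g. yolo5s6_kpts.yaml: update the relevant parameters, such as changing nkpt from 17 to 68;
- dataset, line 497: how the txt labels are read;
- dataset, line 987: how the data is transformed;
- dataset, line 365: how the data is flipped;
- loss function, lines 187 and 202: the loss_gain;
- loss function, line 119: the sigmas are hard-coded; for now they are simply all set to 1;
- plots function, lines 76 and 84: the plotting issue is not solved yet, so plotting is skipped for now;
- yolo function, line 90: self.inplace.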

train log

autoanchor: Analyzing anchors... anchors/target = 7.86, Best Possible Recall (BPR) = 1.0000

Image sizes 640 train, 640 test

Using 4 dataloader workers

Logging results to runs/train/exp10

Starting training for 300 epochs...

Epoch gpu_mem box obj cls kpt kptv total labels img_size

0/299 4.22G 0.07731 0.0573 0 0.3465 0.01299 0.4941 10 640: 100%| 787/787 [02:58<00:00, 4.41it/s]

Class Images Labels P R mAP@.5 mAP@.5:.95: 100%| 87/87 [00:14<00:00, 6.05it/s]

all 689 689 0.0073 0.691 0.00784 0.00137

……

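One epoch takes about 3 minutes, and there are 300 epochs in total. Looking forward to the results!

To Be Continued

The project finally runs end to end! More details will be published over time.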

边栏推荐

猜你喜欢

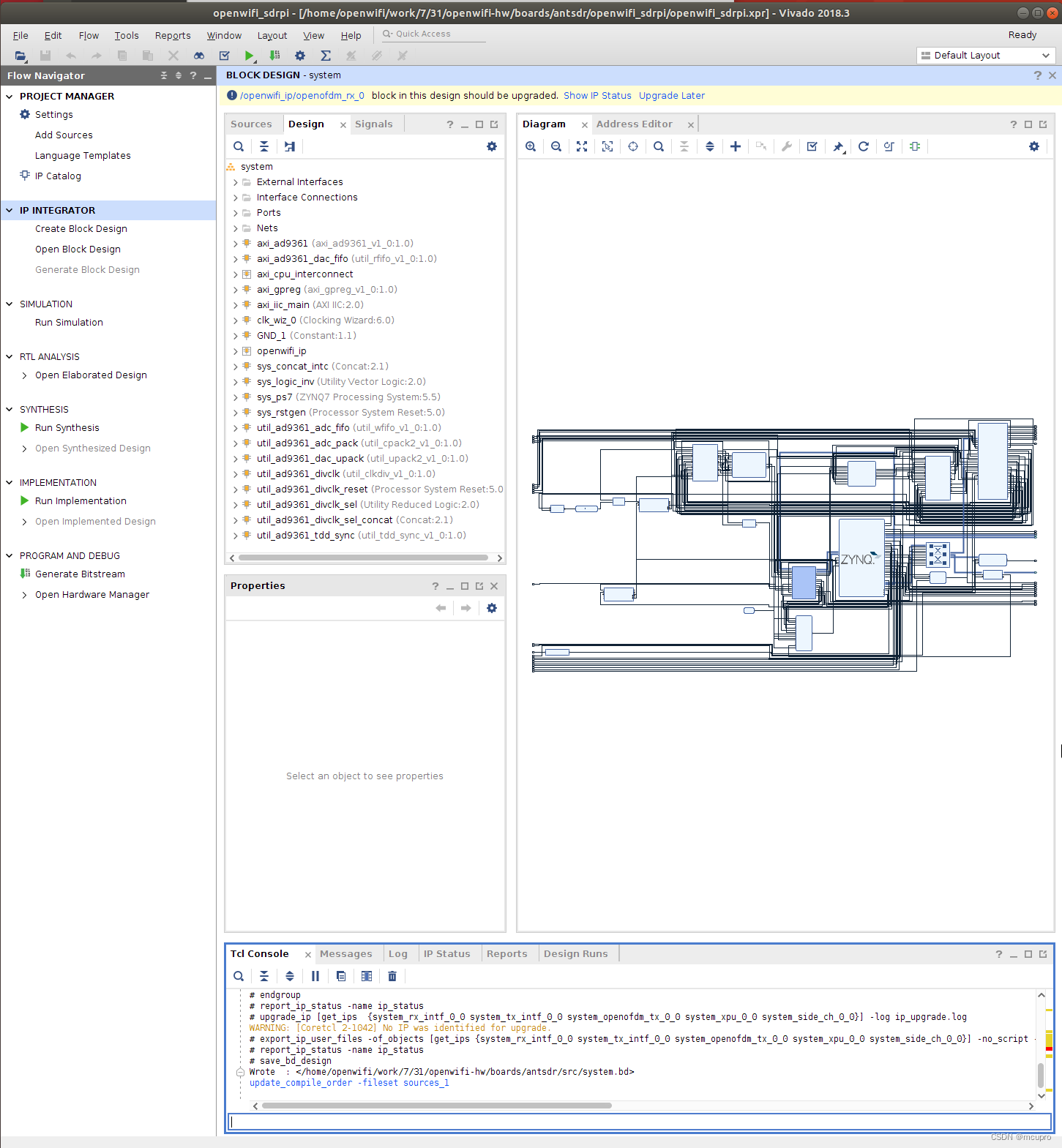

OPENWIFI实践1:下载并编译SDRPi的HDL源码

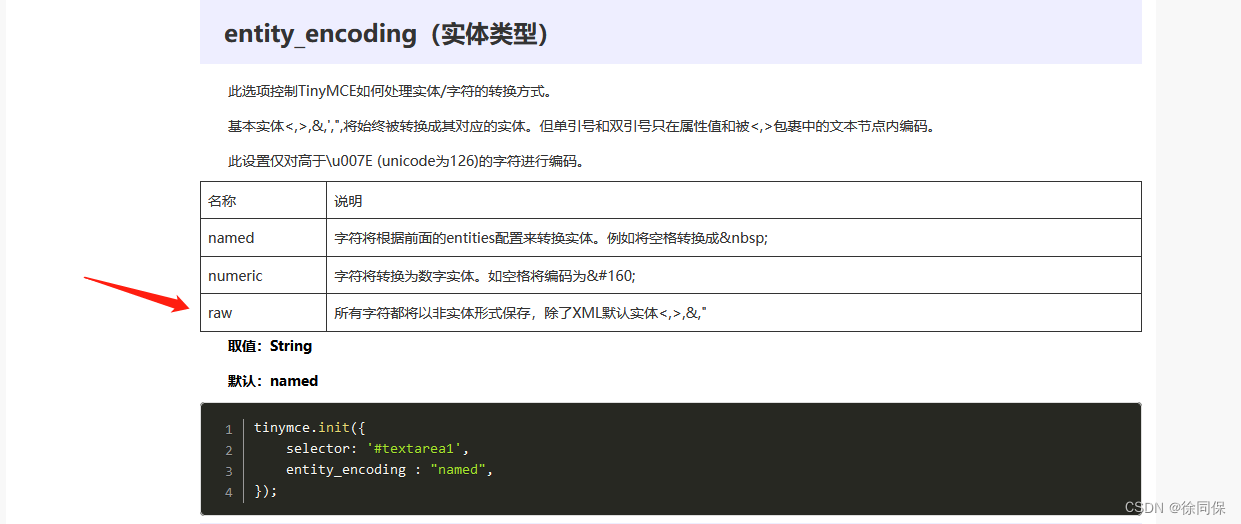

TinyMCE disable escape

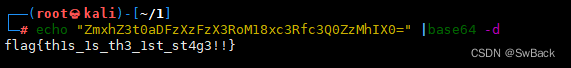

Memory Forensics Series 1

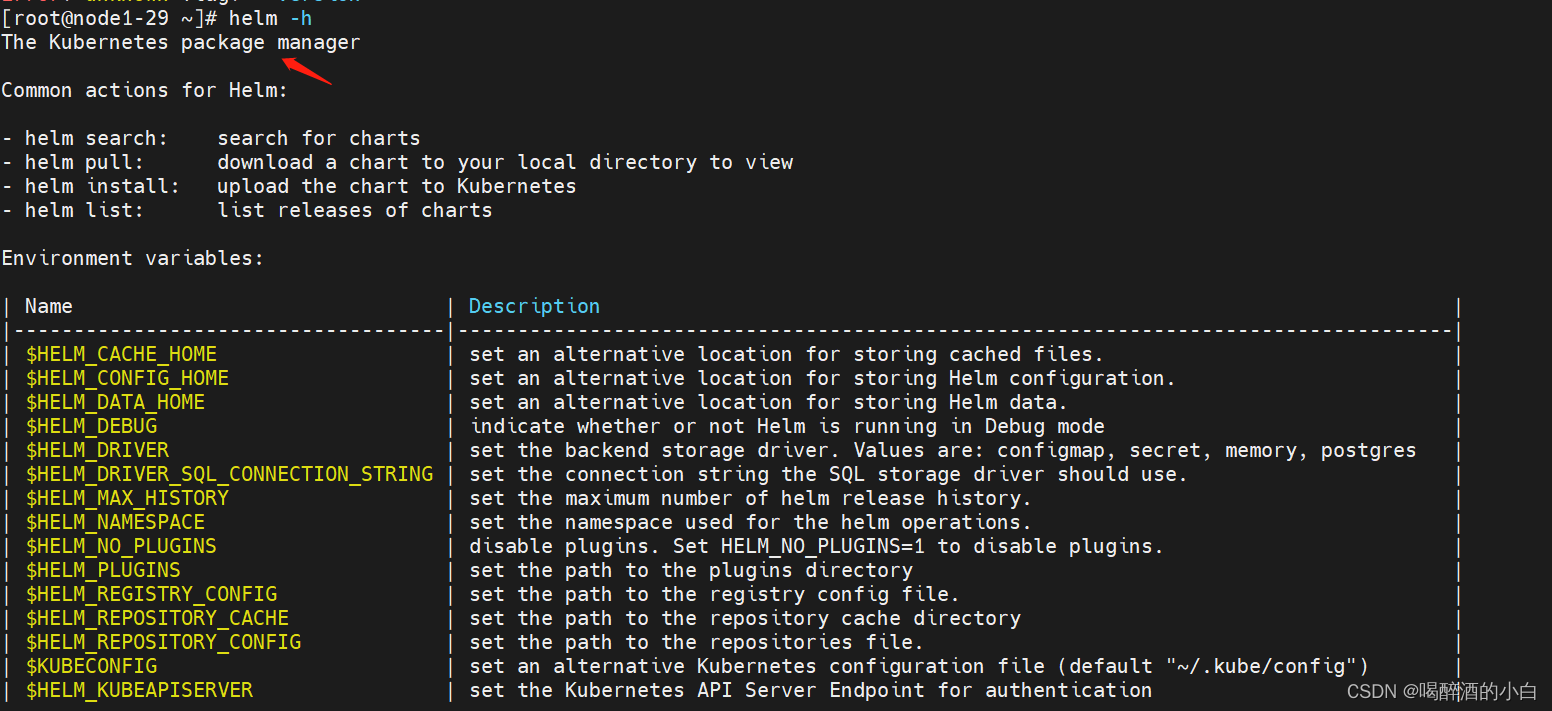

Helm Chart

Countdown to 1 day!From August 2nd to 4th, I will talk with you about open source and employment!

Inter-process communication and inter-thread communication

新唐NUC980使用记录:在用户应用中使用GPIO

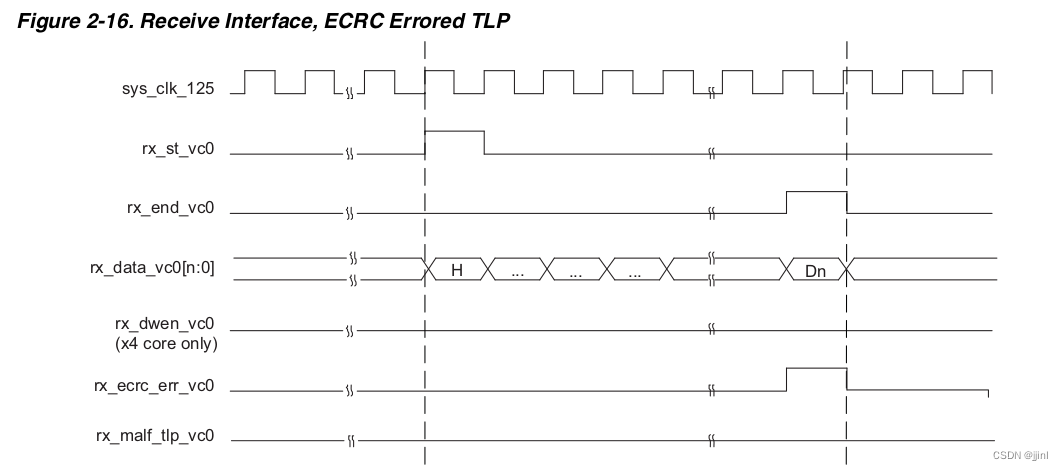

4. PCIe interface timing

![[FreeRTOS] FreeRTOS and stm32 built-in stack occupancy](/img/33/3177b4c3de34d4920d741fed7526ee.png)

[FreeRTOS] FreeRTOS and stm32 built-in stack occupancy

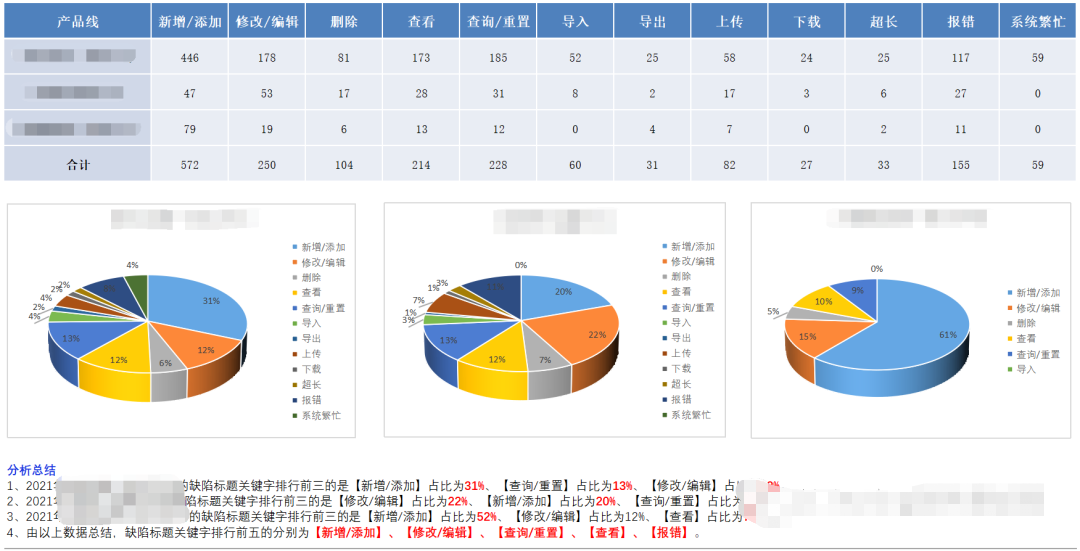

After the staged testing is complete, have you performed defect analysis?

随机推荐

2022牛客多校训练第二场 H题 Take the Elevator

gorm joint table query - actual combat

2022牛客多校训练第二场 L题 Link with Level Editor I

JWT简单介绍

2022杭电多校训练第三场 1009 Package Delivery

第十一章 开关级建模

Software testing interview questions: Have you used some tools for software defect (Bug) management in your past software testing work? If so, please describe the process of software defect (Bug) trac

FSAWS 的全球基础设施和网络

软件测试面试题:设计测试用例时应该考虑哪些方面,即不同的测试用例针对那些方面进行测试?

Will domestic websites use Hong Kong servers be blocked?

仅3w报价B站up主竟带来1200w播放!品牌高性价比B站投放标杆!

2022 Hangzhou Electric Power Multi-School Session 3 K Question Taxi

Inter-process communication and inter-thread communication

自定义线程池

2022 Hangzhou Electric Power Multi-School Session 3 Question L Two Permutations

The principle of NMS and its code realization

深度学习训练前快速批量修改数据集中的图片名

2022 Nioke Multi-School Training Session 2 J Question Link with Arithmetic Progression

2022 The Third J Question Journey

Knowledge Points for Network Planning Designers' Morning Questions in November 2021 (Part 2)