
Semantic segmentation | Learning record (2): Transposed convolution

2022-07-08 02:09:00 【coder_ sure】


Note: this content comes from the Bilibili uploader 霹雳吧啦Wz; I am only taking study notes. Original video


Preface

Many of the networks covered later use transposed convolution, so let's first introduce its basic concepts.

This part covers:

- What transposed convolution is

- The steps of the transposed convolution operation

- Common parameters of transposed convolution

- An example of transposed convolution

1. What is transposed convolution?

Transposed convolution is mainly used for upsampling, i.e. enlarging the spatial size of a feature map.

First look at the ordinary convolution on the left (padding=0, strides=1, conv): a 3×3 kernel turns a 4×4 image into a 2×2 image.

Now look at the transposed convolution on the right (padding=0, strides=1, transposed conv). Note that padding here does not mean zeros added directly around the 2×2 input; it describes the corresponding ordinary convolution, i.e. whether the original map was padded. The transposed convolution restores the feature map to the size it had before the convolution (here 2×2 back to 4×4).

To emphasize: transposed convolution is NOT the inverse of convolution! It restores the size, but the values are different from the original ones!

Source of the figures: A guide to convolution arithmetic for deep learning

2. Steps of the transposed convolution operation

- Insert s-1 rows and columns of 0 between the elements of the input feature map (s is the stride)

- Pad k-p-1 rows and columns of 0 around the input feature map (k is the kernel size, p is the padding)

- Flip the convolution kernel vertically and horizontally

- Perform an ordinary convolution (padding 0, stride 1)
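The four steps can be sketched in plain Python. This is a minimal illustration, not a library implementation: it assumes a single-channel square input, a square k×k kernel, no bias, and 0 ≤ p ≤ k-1, and the function name `transposed_conv2d` is hypothetical.

```python
def transposed_conv2d(x, kernel, s, p):
    """Transposed convolution via the four steps (single channel, no bias).

    Assumes a square n x n input, a square k x k kernel and 0 <= p <= k - 1.
    """
    n, k = len(x), len(kernel)
    assert 0 <= p <= k - 1, "this sketch only handles 0 <= p <= k - 1"

    # Step 1: insert s-1 rows/columns of zeros between neighbouring elements.
    m = (n - 1) * s + 1
    up = [[0] * m for _ in range(m)]
    for i in range(n):
        for j in range(n):
            up[i * s][j * s] = x[i][j]

    # Step 2: pad k-p-1 rows/columns of zeros around the border.
    pad = k - p - 1
    size = m + 2 * pad
    padded = [[0] * size for _ in range(size)]
    for i in range(m):
        for j in range(m):
            padded[i + pad][j + pad] = up[i][j]

    # Step 3: flip the kernel upside down and left to right.
    flipped = [row[::-1] for row in kernel[::-1]]

    # Step 4: ordinary convolution with padding 0 and stride 1.
    out = size - k + 1
    return [[sum(padded[i + a][j + b] * flipped[a][b]
                 for a in range(k) for b in range(k))
             for j in range(out)]
            for i in range(out)]


y = transposed_conv2d([[1, 2], [3, 4]], [[1, 1, 1], [1, 1, 1], [1, 1, 1]], s=1, p=0)
print(y)  # [[1, 3, 3, 2], [4, 10, 10, 6], [4, 10, 10, 6], [3, 7, 7, 4]]
```

A 2×2 input becomes 4×4 with s=1, p=0, k=3. With an all-ones kernel the flip in step 3 has no visible effect; with an asymmetric kernel it does. The same function with s=2, p=1 turns a 3×3 input into a 5×5 output.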

No padding, no strides, transposed: s=1, p=0, k=3

No padding, strides, transposed: s=2, p=0, k=3

Padding, strides, transposed: s=2, p=1, k=3

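The height of the output feature map is H_out = (H_in - 1) × s - 2p + k (with dilation 1 and no output padding), and the width is computed the same way. Taking Padding, strides, transposed (s=2, p=1, k=3) as an example, a 3×3 input gives (3 - 1) × 2 - 2 × 1 + 3 = 5, i.e. a 5×5 output.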
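The output-size formula is easy to check in code. A small sketch, assuming dilation 1 and no output padding; the helper names are hypothetical and the input sizes for the three figure cases (2×2, 2×2, 3×3) are assumed. Running the matching ordinary convolution on the result always returns the original input size, which is the sense in which transposed convolution "restores the size".

```python
def transposed_conv_out(h_in, s, p, k):
    """Output height (or width) of a transposed convolution."""
    return (h_in - 1) * s - 2 * p + k


def conv_out(h_in, s, p, k):
    """Output height (or width) of the matching ordinary convolution."""
    return (h_in + 2 * p - k) // s + 1


# (input size, s, p, k) for the three figure cases; input sizes are assumed
for h_in, s, p, k in [(2, 1, 0, 3), (2, 2, 0, 3), (3, 2, 1, 3)]:
    h_out = transposed_conv_out(h_in, s, p, k)
    print(h_in, "->", h_out, "| ordinary conv back ->", conv_out(h_out, s, p, k))
# 2 -> 4 | ordinary conv back -> 2
# 2 -> 5 | ordinary conv back -> 2
# 3 -> 5 | ordinary conv back -> 3
```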

3. An example of transposed convolution

- After inserting zeros between the elements of the input feature map and padding zeros around it, we get the leftmost feature map, which is the input to the next step (bias is ignored)

- Next, flip the convolution kernel vertically and horizontally

- Then perform an ordinary convolution

That's all: we have completed the transposed convolution operation.

But why does it work this way? A further explanation is given later.

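The parameters of transposed convolution are described in the official PyTorch documentation for torch.nn.ConvTranspose2d (in_channels, out_channels, kernel_size, stride, padding, output_padding, groups, bias, dilation).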
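PyTorch's documentation for torch.nn.ConvTranspose2d gives a fuller output-size formula that also includes dilation and output_padding. The sketch below only re-implements the documented formulas for Conv2d and ConvTranspose2d in plain Python (the helper names are hypothetical, not PyTorch functions); with dilation=1 and output_padding=0 the transposed formula reduces to H_out = (H_in - 1) × s - 2p + k. It also shows why output_padding exists: with stride 2, a 5×5 and a 6×6 input both convolve down to 3×3, so the transposed convolution needs an extra hint about which size to restore.

```python
def conv_transpose_out(h_in, kernel_size, stride=1, padding=0,
                       output_padding=0, dilation=1):
    """Output size per the formula in the PyTorch ConvTranspose2d docs."""
    return ((h_in - 1) * stride - 2 * padding
            + dilation * (kernel_size - 1) + output_padding + 1)


def conv_out(h_in, kernel_size, stride=1, padding=0, dilation=1):
    """Output size per the formula in the PyTorch Conv2d docs."""
    return (h_in + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


# With stride 2, two different input sizes collapse to the same output size...
print(conv_out(5, 3, stride=2, padding=1), conv_out(6, 3, stride=2, padding=1))  # 3 3
# ...so output_padding selects which size the transposed convolution restores.
print(conv_transpose_out(3, 3, stride=2, padding=1))                    # 5
print(conv_transpose_out(3, 3, stride=2, padding=1, output_padding=1))  # 6
```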

4. Another, more in-depth example of transposed convolution

First, recall the ordinary convolution operation; I won't go over it again here.

The convolution above is the form we usually see, but in practice it is usually not computed by sliding a window step by step, because that is too inefficient!

Instead, the convolution kernel is converted into equivalent matrices:

- With the kernel at the top-left corner, build a 4×4 matrix (the same size as the input) like the red one: the kernel weights at that position and 0 everywhere else

- Likewise, for every position the kernel slides to, build the corresponding zero-filled matrix

- Then multiply the input feature map element-wise with each equivalent matrix and sum, which gives the output feature map

The final result is the same as with the sliding window.

We flatten the input feature map, giving I in the figure below.

Next, the equivalent matrices of the kernel are flattened as well, giving C in the figure below.

Multiplying I by C gives the output feature map O (O is the flattened output feature layer).
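The matrix form of convolution can be checked numerically. A minimal sketch, assuming row-major flattening, I as a 1×16 row vector and each flattened 4×4 equivalent matrix as one column of C (so C is 16×4 and O = I·C); the input and kernel values are hypothetical. The result matches the sliding-window computation.

```python
def conv2d(x, kernel):
    """Sliding-window convolution with padding 0 and stride 1."""
    n, k = len(x), len(kernel)
    return [[sum(x[i + a][j + b] * kernel[a][b]
                 for a in range(k) for b in range(k))
             for j in range(n - k + 1)]
            for i in range(n - k + 1)]


def build_C(kernel, n):
    """Flatten each n x n equivalent matrix and use it as one column of C."""
    k = len(kernel)
    columns = []
    for i in range(n - k + 1):          # one equivalent matrix per kernel position
        for j in range(n - k + 1):
            eq = [[0] * n for _ in range(n)]          # zeros everywhere...
            for a in range(k):
                for b in range(k):
                    eq[i + a][j + b] = kernel[a][b]   # ...except the kernel window
            columns.append([v for row in eq for v in row])
    return [list(r) for r in zip(*columns)]  # shape (n*n) x (number of positions)


def row_times(v, M):
    """Multiply a row vector v (1 x r) by a matrix M (r x c)."""
    return [sum(v[r] * M[r][c] for r in range(len(v))) for c in range(len(M[0]))]


x = [[4 * r + c for c in range(4)] for r in range(4)]   # hypothetical 4x4 input
kernel = [[1, 0, 1], [0, 1, 0], [1, 0, 1]]              # hypothetical 3x3 kernel

I = [v for row in x for v in row]   # flattened input, 1 x 16
C = build_C(kernel, 4)              # 16 x 4
O = row_times(I, C)                 # flattened output, 1 x 4

print(len(C), len(C[0]))                                   # 16 4
print(O)                                                   # [25, 30, 45, 50]
print(O == [v for row in conv2d(x, kernel) for v in row])  # True
```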

Now let's reverse the process: given O and C, can we recover the matrix I? The answer is no; in other words, convolution is not invertible. The reason: a matrix can only have an inverse if it is square, and C (16×4) is not square.

Although I cannot be recovered, multiplying O by C^T gives a matrix P of the same size as the original I. After a reshape, P becomes a 4×4 map, the same size as before the convolution. This is what was said earlier: transposed convolution is essentially an upsampling process.
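Multiplying by C^T gives a result of the right size but not the original values. A sketch assuming row-major flattening, I as a 1×16 row vector and C as the 16×4 matrix whose columns are the flattened equivalent matrices; the input and kernel values are hypothetical.

```python
def build_C(kernel, n):
    """Flatten each n x n equivalent matrix and use it as one column of C."""
    k = len(kernel)
    columns = []
    for i in range(n - k + 1):
        for j in range(n - k + 1):
            eq = [[0] * n for _ in range(n)]
            for a in range(k):
                for b in range(k):
                    eq[i + a][j + b] = kernel[a][b]
            columns.append([v for row in eq for v in row])
    return [list(r) for r in zip(*columns)]  # (n*n) x (number of positions)


def row_times(v, M):
    """Multiply a row vector v (1 x r) by a matrix M (r x c)."""
    return [sum(v[r] * M[r][c] for r in range(len(v))) for c in range(len(M[0]))]


x = [[4 * r + c for c in range(4)] for r in range(4)]   # hypothetical 4x4 input
kernel = [[1, 0, 1], [0, 1, 0], [1, 0, 1]]              # hypothetical 3x3 kernel

C = build_C(kernel, 4)                               # 16 x 4
O = row_times([v for row in x for v in row], C)      # forward convolution, 1 x 4
C_T = [list(r) for r in zip(*C)]                     # 4 x 16
P = row_times(O, C_T)                                # 1 x 16, the same size as I
P_img = [P[4 * r:4 * r + 4] for r in range(4)]       # reshape back to 4 x 4

print(len(P_img), len(P_img[0]))  # 4 4
print(P_img == x)                 # False: the size is restored, the values are not
```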

Now let's carry out the reverse process in another form:

- Reshape O back into a 2×2 feature map

- Reshape each column of C^T into a 2×2 equivalent matrix; there are 16 of them

- Multiply O element-wise with each of these 16 small matrices and sum, which gives the 16 elements of P

In this process, multiplying O by each small (white) matrix is equivalent to sliding the green convolution kernel over the zero-padded O. This green kernel is exactly the original kernel flipped vertically and horizontally, and this is the computational principle behind transposed convolution.
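The two views can be compared directly. This sketch (stride 1, padding 0, hypothetical values) assumes C is the 16×4 matrix whose columns are the flattened equivalent matrices. It computes P once by reshaping each column of C^T to 2×2 and summing its element-wise product with O, and once by padding O with k-1 = 2 zeros on each side and sliding the vertically and horizontally flipped kernel over it. An asymmetric kernel is used so that the flip actually matters.

```python
def build_C(kernel, n):
    """Flatten each n x n equivalent matrix and use it as one column of C."""
    k = len(kernel)
    columns = []
    for i in range(n - k + 1):
        for j in range(n - k + 1):
            eq = [[0] * n for _ in range(n)]
            for a in range(k):
                for b in range(k):
                    eq[i + a][j + b] = kernel[a][b]
            columns.append([v for row in eq for v in row])
    return [list(r) for r in zip(*columns)]  # (n*n) x (number of positions)


kernel = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]  # hypothetical asymmetric kernel
O = [[1, 2], [3, 4]]                        # hypothetical 2x2 map O
n, k = 4, 3
C_T = [list(r) for r in zip(*build_C(kernel, n))]   # 4 x 16

# View 1: reshape each of the 16 columns of C^T to 2x2,
# multiply it element-wise with O and sum.
P = []
for col in zip(*C_T):                       # 16 columns, each of length 4
    small = [list(col[0:2]), list(col[2:4])]
    P.append(sum(small[a][b] * O[a][b] for a in range(2) for b in range(2)))

# View 2: pad O with k-1 zeros on every side, then slide the flipped kernel over it.
flipped = [row[::-1] for row in kernel[::-1]]
pad = k - 1
size = 2 + 2 * pad
padded = [[0] * size for _ in range(size)]
for i in range(2):
    for j in range(2):
        padded[i + pad][j + pad] = O[i][j]
Q = [sum(padded[i + a][j + b] * flipped[a][b] for a in range(k) for b in range(k))
     for i in range(size - k + 1) for j in range(size - k + 1)]

print(P == Q)  # True
```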

By now you should have a deeper understanding of transposed convolution!

边栏推荐

- 微软 AD 超基础入门

- 给刚入门或者准备转行网络工程师的朋友一些建议

- [recommendation system paper reading] recommendation simulation user feedback based on Reinforcement Learning

- The way fish and shrimp go

- 软件测试笔试题你会吗?

- Many friends don't know the underlying principle of ORM framework very well. No, glacier will take you 10 minutes to hand roll a minimalist ORM framework (collect it quickly)

- BizDevOps与DevOps的关系

- JVM memory and garbage collection-3-runtime data area / heap area

- leetcode 869. Reordered Power of 2 | 869. 重新排序得到 2 的幂(状态压缩)

- In depth analysis of ArrayList source code, from the most basic capacity expansion principle, to the magic iterator and fast fail mechanism, you have everything you want!!!

猜你喜欢

Key points of data link layer and network layer protocol

Talk about the realization of authority control and transaction record function of SAP system

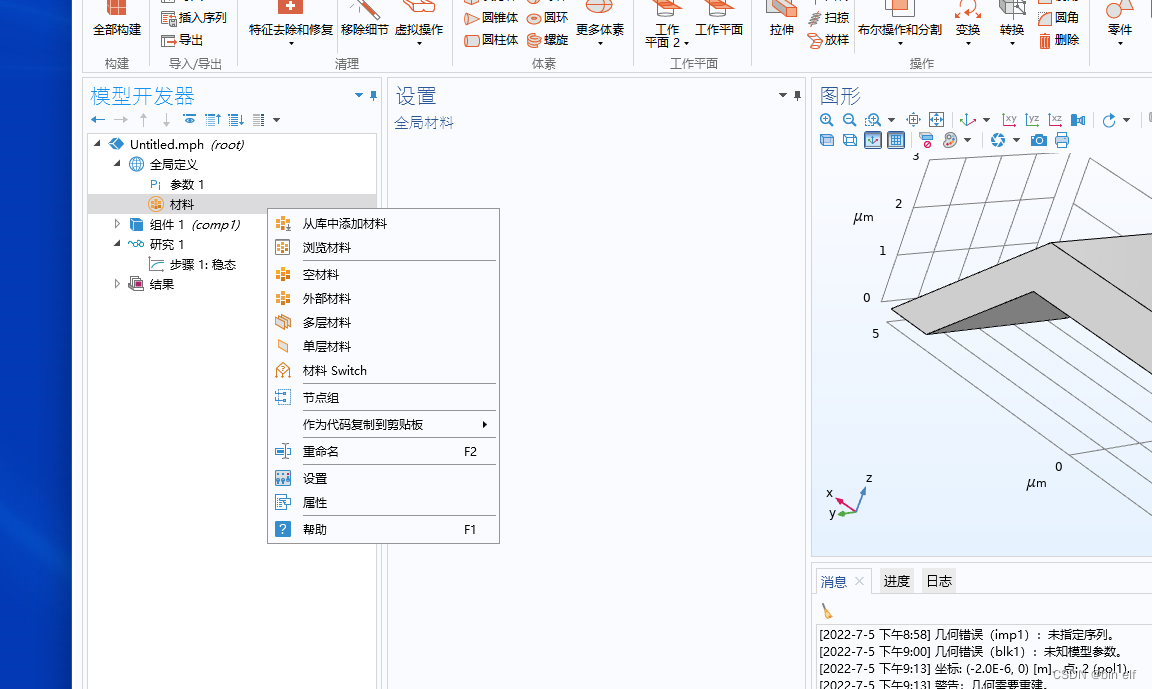

COMSOL --- construction of micro resistance beam model --- final temperature distribution and deformation --- addition of materials

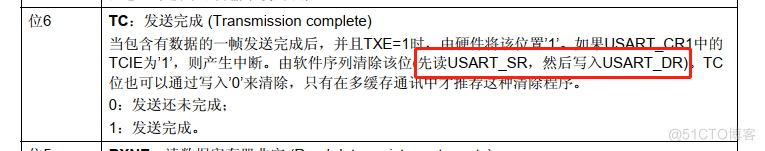

关于TXE和TC标志位的小知识

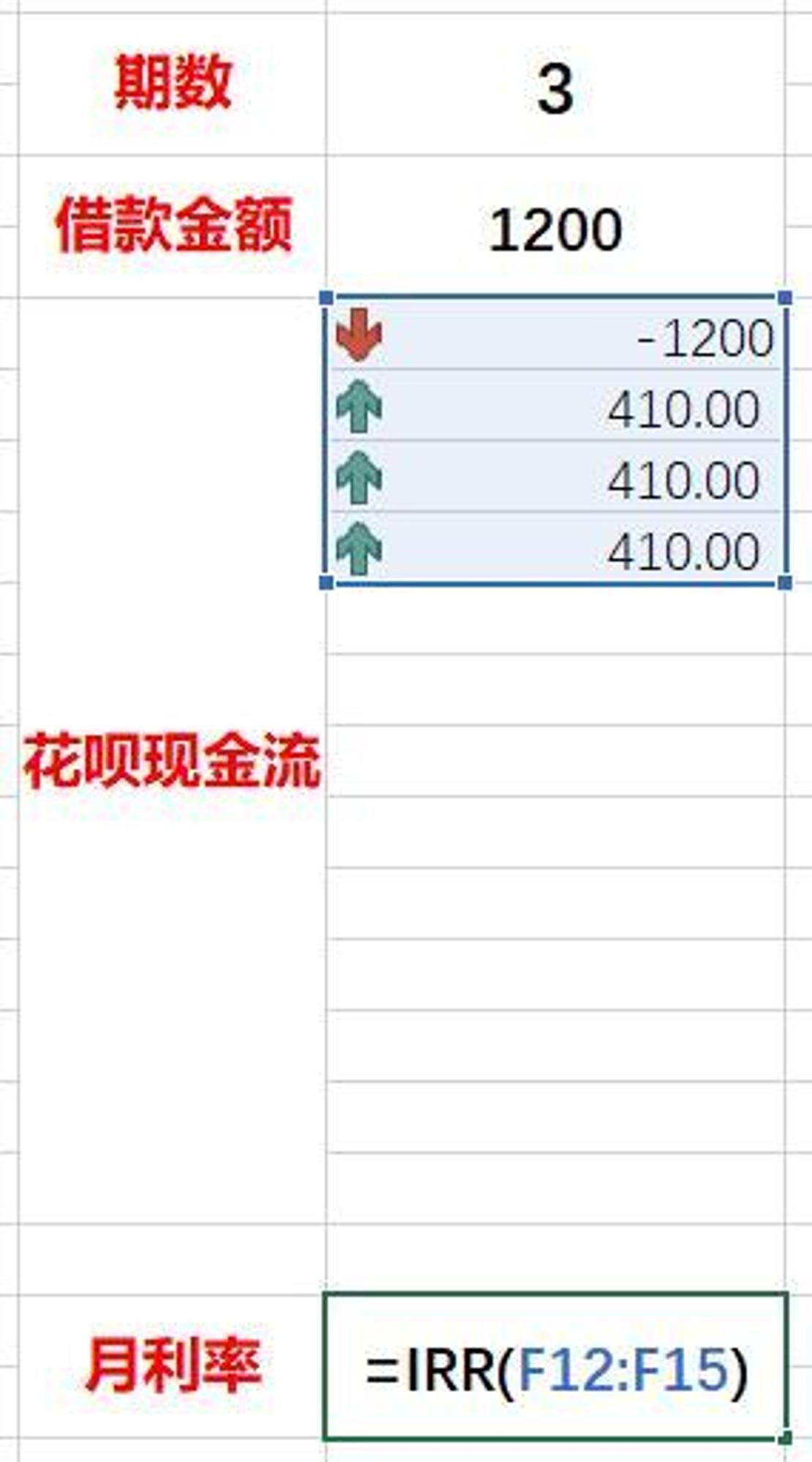

I don't know. The real interest rate of Huabai installment is so high

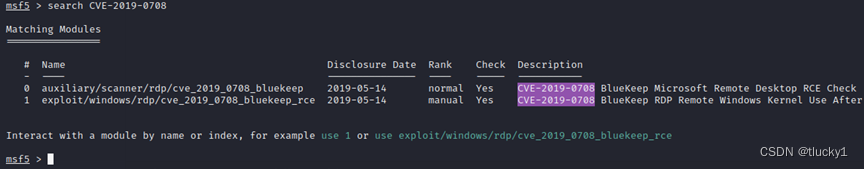

metasploit

burpsuite

快手小程序担保支付php源码封装

Neural network and deep learning-5-perceptron-pytorch

分布式定时任务之XXL-JOB

随机推荐

QT -- create QT program

#797div3 A---C

leetcode 865. Smallest Subtree with all the Deepest Nodes | 865. The smallest subtree with all the deepest nodes (BFs of the tree, parent reverse index map)

Introduction to grpc for cloud native application development

鱼和虾走的路

Reading notes of Clickhouse principle analysis and Application Practice (7)

很多小夥伴不太了解ORM框架的底層原理,這不,冰河帶你10分鐘手擼一個極簡版ORM框架(趕快收藏吧)

The method of using thread in PowerBuilder

nmap工具介紹及常用命令

Beaucoup d'enfants ne savent pas grand - chose sur le principe sous - jacent du cadre orm, non, ice River vous emmène 10 minutes à la main "un cadre orm minimaliste" (collectionnez - le maintenant)

[error] error loading H5 weight attributeerror: 'STR' object has no attribute 'decode‘

Where to think

数据链路层及网络层协议要点

2022年5月互联网医疗领域月度观察

CorelDRAW2022下载安装电脑系统要求技术规格

力扣6_1342. 将数字变成 0 的操作次数

Clickhouse principle analysis and application practice "reading notes (8)

2022国内十大工业级三维视觉引导企业一览

I don't know. The real interest rate of Huabai installment is so high

leetcode 866. Prime Palindrome | 866. 回文素数