Heima (itheima) MySQL notes

MySQL Architecture

Connection layer

At the top are the clients and connection services. This layer mainly handles connection processing, authentication and authorization, and related security schemes. The server also verifies the operation permissions of every client that connects securely.

Service layer

The second layer implements most of the core service functionality, such as the SQL interface, the query cache, SQL parsing and optimization, and the execution of some built-in functions. All features that span storage engines, such as stored procedures and functions, are also implemented in this layer.

Engine layer

The storage engine is what actually stores and retrieves the data in MySQL; the server communicates with the storage engines through an API. Different storage engines offer different features, so we can choose the one that fits our needs.

Storage layer

This layer stores the data on the file system and handles the interaction with the storage engine.

Use show engines; to view the storage engines supported by the current database.

Storage engine features

InnoDB underlying files

xxx.ibd: xxx is the table name. Every InnoDB table has a corresponding tablespace file of this kind, which stores the table's structure (frm in older versions, sdi in newer ones), data, and indexes.

Whether independent (file-per-table) tablespaces are used is controlled by innodb_file_per_table.

Set it in the configuration file (my.cnf): innodb_file_per_table = 1 # 1 enables it, 0 disables it

Query the current status with show variables like '%per_table%';

It can also be changed temporarily with set global innodb_file_per_table = OFF; the change is lost after a restart.

MyISAM underlying files

xxx.sdi: stores the table structure information

xxx.MYD: stores the data

xxx.MYI: stores the indexes

Indexes

Slow query log

# The show [session|global] status command provides server status information. The following statement shows how often INSERT, UPDATE, DELETE, and SELECT statements are executed:

SHOW GLOBAL STATUS LIKE 'Com_______';

The slow query log records every SQL statement whose execution time exceeds long_query_time and that examines at least min_examined_row_limit rows. It is off by default. long_query_time defaults to 10 seconds, has a minimum of 0, and has microsecond resolution.

# Check whether the slow query log is enabled

show variables like 'slow_query_log';

Configure the following in the MySQL configuration file (/etc/my.cnf):

# Enable the MySQL slow query log

slow_query_log=1

# Set the slow query threshold to 2 seconds: any SQL statement that runs longer than 2 seconds is treated as a slow query and logged

long_query_time=2

Restart the MySQL service for the change to take effect.

Default slow query log file location: /var/lib/mysql/localhost-slow.log

By default, administrative statements are not logged, and neither are queries that do not use an index for lookups. This behavior can be changed with log_slow_admin_statements and log_queries_not_using_indexes.
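
The two switches named above can be sketched as runtime settings. This is a minimal example, assuming an account with the privileges needed for set global; like other global settings changed this way, they do not survive a restart unless they are also added to my.cnf.

```sql
-- Also log administrative statements (ALTER TABLE, ANALYZE TABLE, and so on)
set global log_slow_admin_statements = ON;

-- Also log statements that do not use an index for lookups
set global log_queries_not_using_indexes = ON;

-- Confirm the current values
show variables like 'log_slow_admin_statements';
show variables like 'log_queries_not_using_indexes';
```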

Profile details

show profiles helps with SQL optimization by showing where the time is spent.

- The have_profiling parameter shows whether the current MySQL supports profiling.

- Check whether profiling is currently enabled; the default is 0, meaning off.

Enable profiling
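
The notes list these three steps without the statements. A minimal sketch of what they might look like in a mysql session follows; note that profiling is deprecated in recent MySQL versions, so treat this as illustrative.

```sql
-- Does this server support profiling? (YES / NO)
select @@have_profiling;

-- Is profiling enabled for the current session? (0 = off, 1 = on)
select @@profiling;

-- Enable profiling for the current session
set profiling = 1;
```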

View the time spent by SQL statements

# View the time spent by each SQL statement

show profiles;

# View the time spent in each stage of the statement with the given query_id

show profile for query 2;

# View the CPU usage in each stage of the statement with the given query_id

show profile cpu for query 2;

explain execution plan

The EXPLAIN or DESC command shows how MySQL executes a SELECT statement, including how the tables are joined and in what order during execution.

Meaning of each field in the EXPLAIN execution plan:

* id: the sequence number of the select query, indicating the order in which select clauses or table operations are executed (same id: executed from top to bottom; different id: the larger the value, the earlier it is executed).

* select_type: the type of the SELECT. Common values are SIMPLE (a simple table, with no joins or subqueries), PRIMARY (the main, outermost query), UNION (the second or a later query in a UNION), SUBQUERY (a subquery contained after SELECT/WHERE), etc.

* type: the join type. From best to worst performance: NULL, system, const, eq_ref, ref, range, index, all.

* possible_keys: the indexes that might be applied to this table, one or more.

* key: the index actually used; NULL means no index is used.

* key_len: the number of bytes used in the index. This is the maximum possible length of the indexed fields, not the actual length used; as long as accuracy is not lost, shorter is better.

* rows: the number of rows MySQL believes it must examine to execute the query. For InnoDB tables this is an estimate and may not always be accurate.

* filtered: the number of rows returned as a percentage of the rows read; the larger the value, the better.
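
As a small illustration of reading these fields, the statement below asks for the plan of a primary key lookup. It assumes that id is the primary key of the tb_user table used elsewhere in these notes; under that assumption, and when a matching row exists, one would expect type to be const and key to be PRIMARY.

```sql
-- Plan for a lookup by primary key (id is assumed to be the primary key of tb_user)
explain select * from tb_user where id = 1;

-- DESC is a synonym for EXPLAIN when it is followed by a statement
desc select * from tb_user where id = 1;
```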

Index usage

The leftmost prefix rule

If an index covers multiple columns (a composite index), the leftmost prefix rule applies. The rule means that a query must start from the leftmost column of the index and must not skip columns in it. If a column is skipped, the index is only partially used (the columns after the skipped one cannot use the index).
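
The rule can be sketched with a hypothetical composite index. The columns profession, age, and status and the index name are assumptions for illustration only; they are not defined anywhere in these notes.

```sql
-- Hypothetical composite index on three assumed columns of tb_user
create index idx_user_pro_age_sta on tb_user(profession, age, status);

-- Starts at the leftmost column and skips nothing: all three index columns can be used
explain select * from tb_user where profession = 'Software' and age = 31 and status = '0';

-- Skips age: only the profession part of the index can be used for the lookup
explain select * from tb_user where profession = 'Software' and status = '0';

-- Leftmost column is missing: the index cannot be used for the lookup
explain select * from tb_user where age = 31 and status = '0';
```

Comparing key and key_len across the three plans is one way to see how much of the index each query actually uses.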

Range queries

In a composite index, when a range condition (>, <) appears, the index columns to the right of the range column cannot be used.

Operations on indexed columns

Do not apply operations (functions or expressions) to indexed columns, or the index will not be used.

Strings without quotes

When a string column is compared with a value that is not quoted, the index will not be used.

Fuzzy matching

If only the tail is fuzzy (a trailing wildcard), the index is still used. If the head is fuzzy (a leading wildcard), the index is not used.

Conditions joined by or

For conditions separated by or, if the column in the condition before or has an index but the column after it does not, none of the indexes involved will be used.

Effect of data distribution

If MySQL estimates that using the index would be slower than a full table scan, it does not use the index.
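
Several of the cases above can be compared side by side with explain. This sketch assumes that tb_user has a varchar column phone; the column, the index name, and the sample values are hypothetical.

```sql
-- Assumed single-column index on an assumed varchar column
create index idx_user_phone on tb_user(phone);

explain select * from tb_user where phone = '17799990015';           -- plain equality: index can be used
explain select * from tb_user where substring(phone, 10, 2) = '15';  -- function on the column: index not used
explain select * from tb_user where phone = 17799990015;             -- no quotes, implicit conversion: index not used
explain select * from tb_user where phone like '177%';               -- trailing wildcard: index can be used
explain select * from tb_user where phone like '%15';                -- leading wildcard: index not used
```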

SQL hints

use index: suggests that MySQL use the specified index (a suggestion only)

ignore index: ignores the specified index

force index: forces the use of the specified index
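
The hint goes directly after the table name. In the sketch below, idx_user_pro is a hypothetical index on an assumed profession column.

```sql
-- Suggest an index
explain select * from tb_user use index (idx_user_pro) where profession = 'Software';

-- Exclude an index from consideration
explain select * from tb_user ignore index (idx_user_pro) where profession = 'Software';

-- Force an index
explain select * from tb_user force index (idx_user_pro) where profession = 'Software';
```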

Covering index

Prefer covering indexes (the query uses an index, and all the columns it needs to return can be found in that index), and reduce the use of select *.

Prefix index

When the column type is a string (varchar, text, etc.), you sometimes need to index very long strings. This makes the index large and wastes a lot of disk I/O during queries, which hurts query efficiency. In that case you can index only a prefix of the string, which saves a great deal of index space and improves index efficiency.

* Syntax

create index index_name on table_name(column(n));

* Prefix length

The length can be chosen based on index selectivity. Selectivity is the ratio of distinct index values (cardinality) to the total number of rows in the table. The higher the selectivity, the more efficient the query. A unique index has a selectivity of 1, which is the best possible selectivity and gives the best performance.
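
One way to pick n is to compare the selectivity of candidate prefixes with that of the full column. The sketch below assumes a varchar column email on tb_user; the column name, index name, and the prefix length 5 are illustrative.

```sql
-- Selectivity of the full column
select count(distinct email) / count(*) from tb_user;

-- Selectivity of the first 5 characters
select count(distinct substring(email, 1, 5)) / count(*) from tb_user;

-- If the prefix is selective enough, index only that prefix
create index idx_email_5 on tb_user(email(5));
```

A prefix whose selectivity is close to that of the full column keeps most of the filtering power at a fraction of the index size.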

Single-column and composite indexes

Single-column index: an index that contains only one column.

Composite index: an index that contains multiple columns.

In business scenarios with multiple query conditions, a composite index is recommended over single-column indexes when deciding how to index the columns.

Index design principles

- Index tables that hold a large amount of data and are queried frequently.

- Index columns that are often used in query conditions (where), sorting (order by), and grouping (group by).

- Prefer highly selective columns for indexes, and build unique indexes where possible; the higher the selectivity, the more efficient the index.

- For long string columns, consider a prefix index based on the characteristics of the column.

- Prefer composite indexes over single-column indexes. A composite index can often act as a covering index, which saves storage space, avoids lookups back to the table, and improves query efficiency.

- Keep the number of indexes under control. More is not better: the more indexes there are, the higher the cost of maintaining them, which slows down inserts, updates, and deletes.

- If an indexed column cannot hold NULL values, declare it NOT NULL when creating the table. When the optimizer knows whether each column can contain NULL, it can better determine which index is most effective for a query.

SQL optimization

Inserting data

insert optimization

* Batch inserts

* Commit transactions manually

* Insert in primary key order
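
The three points above can be combined in one short sketch. It assumes that tb_user can be populated from just id and name (any other columns are nullable or have defaults) and that the ids shown are unused; the values are made up.

```sql
-- Batch insert: one multi-row statement instead of many single-row statements
insert into tb_user (id, name) values (1, 'Tom'), (2, 'Cat'), (3, 'Jerry');

-- Manual transaction: several batches committed once, with ids in ascending order
start transaction;
insert into tb_user (id, name) values (4, 'Ann'), (5, 'Bob'), (6, 'Eve');
insert into tb_user (id, name) values (7, 'Joe'), (8, 'Kim'), (9, 'Lee');
commit;
```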

Bulk data insertion

If a large amount of data must be inserted at once, insert statements perform poorly. Use the load command that MySQL provides instead, as follows:

# When connecting the client to the server, add the --local-infile option

mysql --local-infile -u root -p

# Set the global parameter local_infile to 1 to allow importing data from local files

set global local_infile=1;

# Run the load command to load the prepared data into the table

load data local infile '/root/load_user_100w_sort.sql' into table tb_user fields terminated by ',' lines terminated by '\n';


Inserting in primary key order performs better than inserting out of order.

The main reason is that the underlying data pages are stored on the physical disk in ascending primary key order. If rows are inserted in primary key order, they are written to disk in roughly that order; if they are inserted out of order, the data already written on disk has to be rearranged.

Primary key optimization

How data is organized

In the InnoDB storage engine, table data is organized and stored in primary key order. Tables stored this way are called index-organized tables (IOT).

Page splitting

A page can be empty, half full, or 100% full. Each page holds 2 to N rows (a row that is too large overflows the page), arranged by primary key.

Page merging

When a row is deleted, the record is not physically removed. It is only flagged as deleted, and its space becomes available for other records to claim.

When the deleted records in a page reach MERGE_THRESHOLD (50% of the page by default), InnoDB looks at the nearest pages (before or after) to see whether the two pages can be merged to optimize space usage.

Primary key design principles

* Keep the primary key as short as possible while meeting business needs.

* Insert data in order where possible, preferring an AUTO_INCREMENT primary key.

* Avoid using UUIDs or other natural keys, such as ID card numbers, as the primary key.

* Avoid modifying the primary key during business operations.

order by optimization

① Using filesort:

Rows that satisfy the conditions are read through a table index or a full table scan and then sorted in the sort buffer. Any sort that does not return ordered results directly through an index is called a filesort.

② Using index:

Ordered data is returned directly by scanning an ordered index sequentially. This case is Using index: no extra sorting is needed, so it is efficient.

* Build appropriate indexes on the sort columns; when sorting by multiple columns, the leftmost prefix rule also applies.

* Prefer covering indexes.

* When sorting by multiple columns with one ascending and one descending, pay attention to the ordering rules (ASC/DESC) when creating the composite index.

* If a filesort is unavoidable and a large amount of data is sorted, you can increase the sort buffer size sort_buffer_size (default 256k) appropriately.
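
The mixed ascending/descending case can be sketched as follows. This assumes MySQL 8.0, where index columns can be declared descending, and hypothetical columns age and phone on tb_user.

```sql
-- Composite index whose column order and direction match the sort
create index idx_user_age_phone_ad on tb_user(age asc, phone desc);

-- Selecting only indexed columns (plus the primary key) keeps the query covered by the index
explain select id, age, phone from tb_user order by age asc, phone desc;
```

If the index matches the sort, the Extra column of the plan would be expected to show Using index rather than Using filesort.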

group by optimization

* Indexes can improve the efficiency of grouping operations.

* Index usage in grouping also follows the leftmost prefix rule.

limit optimization

A common and troublesome problem is limit 2000000,10: MySQL has to sort the first 2000010 records, return only records 2000000 to 2000010, and discard the rest, so the cost of the query is very high.

Optimization idea: for ordinary paged queries, a covering index improves performance; the query can be optimized with a covering index plus a subquery.

# This is time-consuming

select * from tb_user limit 10000,10;

# This is relatively fast

select s.* from tb_user s,(select id from tb_user order by id limit 10000,10) a where s.id=a.id;

count optimization

* The MyISAM engine stores a table's total row count on disk, so count(*) (with no where condition) returns this number directly, which is very efficient;

* InnoDB is more troublesome: when it executes count(*), it has to read the rows from the engine one by one and accumulate the count.

Optimization idea: keep the count yourself.

Usages of count

* count() is an aggregate function. It evaluates the returned result set row by row: if the argument to count is not NULL, the running total is incremented by 1; otherwise it is not. The running total is returned at the end.

* Usages: count(*), count(primary key), count(column), count(1)

How each count usage works

* count(primary key)

InnoDB traverses the whole table, reads the primary key id of every row, and returns it to the service layer. The service layer accumulates row by row directly (the primary key cannot be null).

* count(column)

Without a not null constraint: InnoDB traverses the whole table, reads the column value of every row, and returns it to the service layer. The service layer checks whether the value is null and counts it only if it is not.

With a not null constraint: InnoDB traverses the whole table, reads the column value of every row, and returns it to the service layer, which accumulates row by row directly.

* count(1)

InnoDB traverses the whole table but reads no values. For each row returned, the service layer puts in the number 1 and accumulates row by row directly.

* count(*)

InnoDB does not read out all the columns. This case is specially optimized: no values are read, and the service layer accumulates row by row directly.

In order of efficiency: count(column) < count(primary key id) < count(1) ≈ count(*), so prefer count(*).

update optimization

InnoDB row locks are placed on indexes, not on records, and the index must not be invalidated, otherwise the row lock is upgraded to a table lock.

Note: this statement is probably not accurate. It only looks as if the row lock is upgraded to a table lock. For details, see the article on how InnoDB takes locks on Juejin (juejin.cn).

Views

Introduction

A view is a virtual table. The data in a view does not actually exist in the database; its rows and columns come from the tables used in the query that defines the view, and they are generated dynamically when the view is used.

Put simply, a view stores only the SQL logic of the query, not the query results. So when creating a view, the main work lies in writing the SQL query.

Create

# Syntax

CREATE [OR REPLACE] VIEW view_name [(column_list)] AS select_statement [WITH [CASCADED | LOCAL] CHECK OPTION]

# Example

create or replace view view_tb_user as select id,name from tb_user where id<10;

Query

# View the statement that created the view

SHOW CREATE VIEW view_name;

# Query the view's data

SELECT * FROM view_name ...;

Modify

# Method 1

CREATE [OR REPLACE] VIEW view_name [(column_list)] AS select_statement [WITH [CASCADED | LOCAL] CHECK OPTION]

# Method 2

ALTER VIEW view_name [(column_list)] AS select_statement [WITH [CASCADED | LOCAL] CHECK OPTION]

Delete

DROP VIEW [IF EXISTS] view_name [, view_name] ...

View check options

When a view is created with the WITH CHECK OPTION clause, MySQL checks every row that is being changed through the view (inserted, updated, or deleted) to make sure it conforms to the view's definition. MySQL also allows a view to be created on top of another view, and it checks the rules of the views it depends on for consistency.

To determine the scope of the check, MySQL provides two options, CASCADED and LOCAL; the default is CASCADED.
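
A minimal sketch of the check in action follows. The view name v_user_low and the sample rows are hypothetical; it assumes that tb_user can accept a row consisting of just id and name and that the ids shown are unused.

```sql
-- Rows changed through this view must satisfy id <= 20
create or replace view v_user_low as
    select id, name from tb_user where id <= 20
    with cascaded check option;

insert into v_user_low values (6, 'Tom');     -- accepted: id 6 satisfies id <= 20
insert into v_user_low values (30, 'Jerry');  -- rejected: CHECK OPTION failed
```

Without the check option, the second insert would succeed and the new row would simply not be visible through the view.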

Updating views

For a view to be updatable, there must be a one-to-one relationship between the rows in the view and the rows in the underlying table.

A view is not updatable if it contains any of the following:

- Aggregate functions or window functions (SUM(), MIN(), MAX(), COUNT(), etc.)

- DISTINCT

- GROUP BY

- HAVING

- UNION or UNION ALL

Purpose

* Simplicity

Views simplify not only the user's understanding of the data but also their operations. Frequently used queries can be defined as views, so users do not have to specify all the conditions for every later operation.

* Security

Database privileges can be granted, but not down to specific rows of a table. With views, users can query and modify only the data they are allowed to see.

* Data independence

Views can shield users from the impact of changes to the underlying table structure.

System variables

Viewing system variables

SHOW [SESSION|GLOBAL] VARIABLES; # View all system variables

SHOW [SESSION|GLOBAL] VARIABLES LIKE '...'; # Find system variables by pattern (LIKE)

SELECT @@[SESSION.|GLOBAL.]system_variable_name; # View the value of the specified variable

Setting system variables

SET [SESSION|GLOBAL] system_variable_name = value;

SET @@[SESSION.|GLOBAL.]system_variable_name = value;

Note:

If neither SESSION nor GLOBAL is specified, the default is SESSION (session variables).

After the MySQL service restarts, global parameters set this way are lost. To make them persist, configure them in /etc/my.cnf.
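
The syntax forms above can be tried with the autocommit variable, chosen here only as a familiar example.

```sql
-- Find session variables whose names start with "auto"
show session variables like 'auto%';

-- Read one variable at global and at session scope
select @@global.autocommit;
select @@session.autocommit;

-- Change it for the current session only
set session autocommit = 0;
select @@session.autocommit;
```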

Locks

Global lock

A global lock locks the entire database instance. Once locked, the instance is read-only: subsequent DML write statements, DDL statements, and commits of transactions that made updates are all blocked.

Its typical use case is a logical backup of the whole database: all tables are locked to obtain a consistent view and guarantee data integrity.

Syntax

flush tables with read lock; # Acquire the global lock

...... # Only read-only statements can run; write statements in all sessions block

unlock tables; # Release the global lock

Backing up the database

mysqldump -uroot -p123456 database_name > xxx.sql

Characteristics

Adding a global lock to the database is a heavyweight operation and has the following problems:

- If the backup runs on the primary, no updates can be performed during the backup, so the business essentially has to stop.

- If the backup runs on a replica, the replica cannot apply the binary logs (binlog) synchronized from the primary during the backup, which causes replication lag.

With the InnoDB engine, adding the --single-transaction option to the backup produces a consistent backup without locking.

mysqldump --single-transaction -uroot -p123456 database_name > xxx.sql

Table-level locks

Table-level locks lock the whole table on each operation. The locking granularity is large, the probability of lock conflicts is the highest, and concurrency is the lowest. They are used by storage engines such as MyISAM, InnoDB, and BDB.

Table-level locks fall into three categories:

- Table locks

- Metadata locks (meta data lock, MDL)

- Intention locks

Table locks

There are two kinds of table locks:

- Shared read lock (read lock)

- Exclusive write lock (write lock)

Syntax:

- Acquire: lock tables table_name ... read/write.

- Release: unlock tables, or the client disconnects.
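
A short single-session sketch of a read lock, using the tb_user table from earlier examples (the update values are made up):

```sql
-- Take a shared read lock on the table
lock tables tb_user read;

select * from tb_user;                         -- allowed: reads are permitted under a read lock
update tb_user set name = 'Tom' where id = 1;  -- fails in this session: table was locked with a READ lock

-- Release all table locks held by this session
unlock tables;
```

While the read lock is held, other sessions can still read the table, but their writes wait until unlock tables is executed.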

Metadata locks (meta data lock, MDL)

MDL locking is controlled automatically by the system and does not need to be used explicitly; it is added automatically when a table is accessed. MDL mainly protects the consistency of table metadata: while there are active transactions on a table, its metadata cannot be written. This avoids conflicts between DML and DDL and keeps reads and writes correct.

MDL was introduced in MySQL 5.5. When rows in a table are inserted, deleted, updated, or queried, a shared MDL read lock is taken; when the table structure is changed, an exclusive MDL write lock is taken.

| SQL | Lock type | Description |
|---|---|---|
| lock tables xxx read / write | SHARED_READ_ONLY / SHARED_NO_READ_WRITE | |
| select, select ... lock in share mode | SHARED_READ | Compatible with SHARED_READ and SHARED_WRITE; mutually exclusive with EXCLUSIVE |
| insert, update, delete, select ... for update | SHARED_WRITE | Compatible with SHARED_READ and SHARED_WRITE; mutually exclusive with EXCLUSIVE |
| alter table ... | EXCLUSIVE | Mutually exclusive with all other MDLs |

View metadata locks

select object_type,object_schema,object_name,lock_type,lock_duration from performance_schema.metadata_locks;

Intention locks

To avoid conflicts between row locks and table locks while DML executes, InnoDB introduces intention locks, so that a table lock does not have to check whether each row is locked. Intention locks reduce the checks a table lock needs.

Intention shared lock (IS):

Added by select ... lock in share mode.

Compatible with the table-level shared lock (read), mutually exclusive with the table-level exclusive lock (write).

Intention exclusive lock (IX):

Added by insert, update, delete, and select ... for update.

Mutually exclusive with both the table-level shared lock (read) and the table-level exclusive lock (write). Intention locks are not mutually exclusive with one another.

View intention locks and row locks

select object_schema,object_name,index_name,lock_type,lock_mode,lock_data from performance_schema.data_locks;

Row-level locks

Row-level locks lock the affected rows on each operation. The locking granularity is the smallest, the probability of lock conflicts is the lowest, and concurrency is the highest. They are used by the InnoDB storage engine.

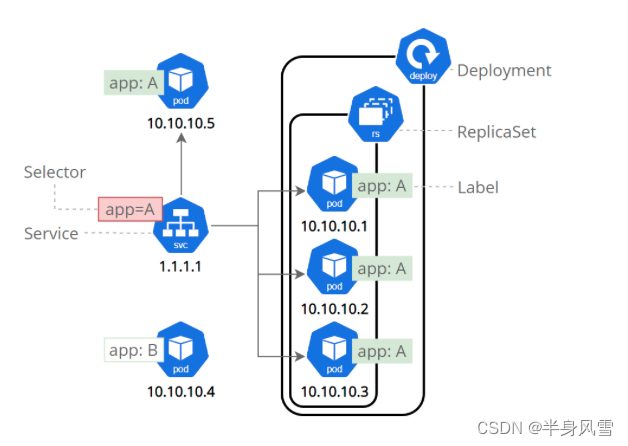

InnoDB organizes data by index, and row locks are implemented by locking index entries rather than records. Row-level locks fall into three categories:

Record lock: locks a single row, preventing other transactions from updating or deleting it. Supported at both the RC and RR isolation levels.

- Shared lock (S): allows a transaction to read a row and prevents other transactions from obtaining an exclusive lock on the same data.

- Exclusive lock (X): allows the transaction holding the lock to update the data and prevents other transactions from obtaining shared or exclusive locks on the same data.

By default, InnoDB runs at the REPEATABLE READ isolation level and uses next-key locks for searches and index scans to prevent phantom reads.

- For an equality match on an existing record through a unique index, the lock is automatically optimized to a record lock.

- InnoDB row locks are locks on indexes. If data is retrieved without an indexed condition, InnoDB locks every record in the table, which in effect escalates to a table lock.

Gap lock: locks the gap between index records (not the records themselves), keeping the gap unchanged and preventing other transactions from inserting into it, which would produce phantom reads. Supported at the RR isolation level.

Next-key lock: a combination of a record lock and a gap lock. It locks the record and the gap in front of it. Supported at the RR isolation level.

By default, InnoDB runs at the REPEATABLE READ isolation level and uses next-key locks for searches and index scans to prevent phantom reads.

- Equality query on an index (unique index): when locking a record that does not exist, the lock is optimized to a gap lock.

- Equality query on an index (non-unique index): when traversing to the right and the last value does not satisfy the condition, the next-key lock degrades to a gap lock.

- Range query on an index (unique index): locks up to the first value that does not satisfy the condition.

Note: the only purpose of a gap lock is to prevent other transactions from inserting into the gap. Gap locks can coexist: a gap lock held by one transaction does not prevent another transaction from taking a gap lock on the same gap.
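
The first gap-lock case (equality on a unique index for a record that does not exist) can be sketched with two sessions. The table stu(id int primary key, age int), its existing ids 1, 3, and 8, and the REPEATABLE READ isolation level are all assumptions made for this illustration.

```sql
-- Session A
begin;
update stu set age = 10 where id = 5;      -- id 5 does not exist: a gap lock covers the gap between 3 and 8

-- Session B
begin;
insert into stu (id, age) values (7, 20);  -- falls inside the locked gap: blocks until session A finishes

-- Session A
commit;                                    -- releases the gap lock; session B's insert can now proceed
```

While session A is still open, the data_locks query shown earlier can be run from a third session to inspect the lock on the gap.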

InnoDB engine

Logical storage structure

Architecture

Since MySQL 5.5, InnoDB has been the default storage engine. It excels at transaction processing, supports crash recovery, and is widely used in day-to-day development. Below is the InnoDB architecture diagram: memory structures on the left, disk structures on the right.

Architecture - memory structures

Architecture - disk structures

Example:

- Run the following SQL:

create tablespace ts_itheima add datafile 'myitheima.ibd' engine=innodb;

create table a(id int primary key auto_increment,name varchar(32) ) engine=innodb tablespace ts_itheima;

- Check the MySQL data directory (by default /var/lib/mysql). It will contain the file myitheima.ibd.

Architecture - background threads

View InnoDB status information

show engine innodb status;

Transaction internals

A transaction is a set of operations that forms an indivisible unit of work. It submits or rolls back its operations to the system as a whole, so they either all succeed or all fail.

Properties

• Atomicity: a transaction is the smallest indivisible unit of operation; it either succeeds entirely or fails entirely.

• Consistency: when a transaction completes, all data must remain in a consistent state.

• Isolation: the isolation mechanism provided by the database system ensures that transactions run in an independent environment, unaffected by external concurrent operations.

• Durability: once a transaction is committed or rolled back, its changes to the data in the database are permanent.

Atomicity, consistency, and durability are implemented with the redo log and the undo log.

Isolation is implemented with locks and MVCC.

The redo log records the physical modifications made to data pages when a transaction commits, and is used to achieve durability.

It consists of two parts: the redo log buffer, which is in memory, and the redo log file, which is on disk. After a transaction commits, all of its modifications are saved in the log file, which is used for data recovery if an error occurs while dirty pages are being flushed to disk.

The undo log (rollback log) records information about data before it is modified. It serves two purposes: providing rollback and MVCC (multi-version concurrency control).

Unlike the redo log, which is a physical log, the undo log is a logical log. You can think of it this way: when a record is deleted, the undo log records a corresponding insert, and vice versa; when a record is updated, it records the opposite update. When a rollback is executed, the corresponding content can be read from the logical records in the undo log and rolled back.

Undo log destruction: the undo log is generated while the transaction executes. It is not deleted immediately when the transaction commits, because these logs may still be needed for MVCC.

Undo log storage: the undo log is managed and recorded in segments and stored in the rollback segment described earlier, which contains 1024 undo log segments.

MVCC - basic concepts

Current read

A current read reads the latest version of the record and locks it, so that other concurrent transactions cannot modify the record while it is being read.

In everyday operations, select ... lock in share mode (shared lock) and select ... for update, update, insert, and delete (exclusive lock) are all current reads.

Snapshot read

A plain select (without locking) is a snapshot read. A snapshot read reads the visible version of the record, which may be historical data. It takes no locks and is a non-blocking read.

- Read Committed: every select generates a snapshot read.

- Repeatable Read: the first select after the transaction starts is where the snapshot is taken.

- Serializable: snapshot reads degrade to current reads.

MVCC

Short for Multi-Version Concurrency Control. It maintains multiple versions of a piece of data so that reads and writes do not conflict; snapshot reads give MySQL's MVCC implementation a non-blocking read capability. The concrete implementation of MVCC relies on three hidden fields in each record, the undo log, and the ReadView.

MVCC - implementation

Hidden fields in records

The ibd2sdi command can be used to inspect an ibd file (by default under /var/lib/mysql/) and see the table structure, including hidden fields such as DB_TRX_ID and DB_ROLL_PTR.

ibd2sdi xxx.ibd

undo log

The rollback log: a log generated during insert, update, and delete to make rolling back data possible.

The undo log generated by an insert is needed only for rollback and can be deleted immediately after the transaction commits.

The undo log generated by update and delete is needed not only for rollback but also for snapshot reads, so it is not deleted immediately.

undo log version chain

As shown in the figure:

- When transaction 2 executes, the data as it was before the modification is recorded for later use.

When transaction 3 executes, transaction 2 has already committed, so it operates on the result of transaction 2. The record in its undo log is therefore the record produced by transaction 2.

When transaction 4 executes, transaction 3 has already committed, so it operates on the result of transaction 3. The record in its undo log is therefore the record produced by transaction 3.

When the same record is modified by different transactions or by the same transaction, the undo log forms a linked list of versions of that record. The head of the list is the most recent old record and the tail is the oldest old record.

ReadView

A ReadView (read view) is the basis on which MVCC picks data when a snapshot-read SQL statement executes. It records and maintains the ids of the transactions that are currently active (uncommitted) in the system.

A ReadView contains four core fields:

| Field | Meaning |
|---|---|
| m_ids | Set of IDs of the currently active transactions |
| min_trx_id | Smallest active transaction ID |
| max_trx_id | Preallocated transaction ID: the current largest transaction ID + 1 (transaction IDs auto-increment) |
| creator_trx_id | ID of the transaction that created the ReadView |

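The DB_TRX_ID of a record version (written trx_id below) is compared with the ReadView according to four rules:

- Rule 1: trx_id == creator_trx_id. The version was written by the current transaction, so it is visible.

- Rule 2: trx_id < min_trx_id. The writing transaction had already committed when the ReadView was created, so the version is visible.

- Rule 3: trx_id >= max_trx_id. The writing transaction started after the ReadView was created, so the version is not visible.

- Rule 4: min_trx_id <= trx_id < max_trx_id. The version is visible only if trx_id is not in m_ids, that is, the writing transaction has already committed.

A version is accessible as long as it satisfies any one of rules 1, 2, or 4; a version that falls under rule 3 must be rejected. When a version is rejected, the next older version on the undo log chain is checked in the same way.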
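The four rules compare a record version's DB_TRX_ID with the ReadView fields. The following is a minimal Python sketch of that check, assuming the usual InnoDB-style comparisons. The class `ReadView` and the function `is_visible` are illustrative names, and treating a trx_id equal to max_trx_id as not visible is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class ReadView:
    m_ids: FrozenSet[int]   # IDs of transactions active when the view was created
    min_trx_id: int         # smallest active transaction ID
    max_trx_id: int         # next transaction ID to be allocated
    creator_trx_id: int     # transaction that created this view


def is_visible(trx_id: int, view: ReadView) -> bool:
    """Return True if a version written by trx_id is visible to this view."""
    if trx_id == view.creator_trx_id:   # rule 1: written by the reader itself
        return True
    if trx_id < view.min_trx_id:        # rule 2: committed before the view existed
        return True
    if trx_id >= view.max_trx_id:       # rule 3: started after the view was created
        return False
    return trx_id not in view.m_ids     # rule 4: visible only if already committed


# Hypothetical view: transactions 3, 4 and 5 are active, and 5 is the reader.
view = ReadView(m_ids=frozenset({3, 4, 5}), min_trx_id=3,
                max_trx_id=6, creator_trx_id=5)
for trx_id in (2, 3, 5, 6):
    print(trx_id, is_visible(trx_id, view))
```

Rule 3 is checked before rule 4 so that the membership test only runs for IDs inside the range covered by the view.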

The ReadView is generated at different times under different isolation levels:

* READ COMMITTED: a new ReadView is generated for every snapshot read in the transaction.

* REPEATABLE READ: a ReadView is generated only on the first snapshot read in the transaction and is reused by every later read.

In the example, under READ COMMITTED, the first query in transaction 5 returns the record committed by transaction 2.

Still under READ COMMITTED, the second query in transaction 5 returns the record committed by transaction 3, because transaction 3 has committed in the meantime and the second query gets a fresh ReadView.

Under REPEATABLE READ, both queries in transaction 5 use the same ReadView, so both return the record committed by transaction 2.
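The difference between the two isolation levels can be replayed in a small simulation. This Python sketch is illustrative only. The timeline is an assumption chosen to match the example: transaction 2 has committed, transactions 3, 4, and 5 are active at the first query, and transaction 3 commits and transaction 4 updates the row before the second query. The function names and the visibility comparisons are likewise assumptions of the sketch.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional, Set


@dataclass(frozen=True)
class ReadView:
    m_ids: FrozenSet[int]
    min_trx_id: int
    max_trx_id: int
    creator_trx_id: int


def make_view(active: Set[int], next_trx_id: int, creator: int) -> ReadView:
    """Build a ReadView from the set of active transaction IDs."""
    return ReadView(frozenset(active), min(active), next_trx_id, creator)


def is_visible(trx_id: int, view: ReadView) -> bool:
    if trx_id == view.creator_trx_id or trx_id < view.min_trx_id:
        return True
    if trx_id >= view.max_trx_id:
        return False
    return trx_id not in view.m_ids


def snapshot_read(chain: List[int], view: ReadView) -> Optional[int]:
    """chain holds DB_TRX_ID values, newest first; return the first visible one."""
    for trx_id in chain:
        if is_visible(trx_id, view):
            return trx_id
    return None


def run(isolation: str) -> List[Optional[int]]:
    """Return which transaction's version each of the two queries in trx 5 sees."""
    results = []
    # First query: current row written by trx 3; trx 3, 4 and 5 are active.
    view = make_view({3, 4, 5}, next_trx_id=6, creator=5)
    results.append(snapshot_read([3, 2, 1], view))
    # Between the queries: trx 3 commits, then trx 4 updates the row.
    if isolation == "READ COMMITTED":
        # A fresh ReadView is generated for every snapshot read.
        view = make_view({4, 5}, next_trx_id=6, creator=5)
    # REPEATABLE READ keeps reusing the ReadView from the first read.
    results.append(snapshot_read([4, 3, 2, 1], view))
    return results


print("READ COMMITTED :", run("READ COMMITTED"))
print("REPEATABLE READ:", run("REPEATABLE READ"))
```

Under READ COMMITTED the second read picks up transaction 3's version, while under REPEATABLE READ both reads stay on transaction 2's version.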

MySQL management

After installation, MySQL ships with four system databases. Their roles are as follows:

| Database | Meaning |
|---|---|
| mysql | Stores the information the MySQL server needs to run normally (time zones, replication, users, privileges, etc.) |
| information_schema | Provides tables and views for accessing database metadata, including databases, tables, column types, and access privileges |
| performance_schema | Provides low-level monitoring of the MySQL server's runtime state, mainly used to collect database server performance parameters |
| sys | Contains a set of views that make it easier for DBAs and developers to use the performance_schema database for performance tuning and diagnosis |

Commonly used tools :

mysql

Here mysql refers to the mysql client tool, not the MySQL service.

The -e option executes an SQL statement directly from the mysql client, without opening an interactive session to the MySQL database first. This is especially convenient in batch scripts.

mysqladmin

mysqladmin is a client program for administrative operations. It can check the server's configuration and current status, create and drop databases, and so on.

Run mysqladmin --help to see all of its options.

mysqlbinlog

The binary log files generated by the server are stored in a binary format, so to read them as text you need the mysqlbinlog log management tool.

For example, to view a log file (located in the /var/lib/mysql directory):

mysqlbinlog binlog.000001

mysqlshow

mysqlshow is a client tool for looking up objects. It quickly shows which databases exist, the tables in a database, and the columns or indexes in a table.

mysqldump

The mysqldump client tool backs up databases or migrates data between databases. The backup contains the SQL statements that create the tables and insert the data.

mysqldump -uroot -p123456 test > test.sql # back up database test to test.sql

mysqldump -uroot -p123456 -T /var/lib/mysql-files test # export the table structure and data of database test to the /var/lib/mysql-files directory

mysqlimport/source

mysqlimport is the client data import tool. It imports the text files that mysqldump exports with the -T option.

To import an .sql file, use the source command inside the mysql client.
