
Cloud Detection 2020: Self-Attentive Generative Adversarial Network for Cloud Detection in High-Resolution Remote Sensing Images

2022-07-07 13:02:00 【HheeFish】

Self-Attentive Generative Adversarial Network for Cloud Detection in High Resolution Remote Sensing Images

0. Abstract

Cloud detection is an important step in remote sensing image processing. Most cloud detection methods based on convolutional neural networks (CNNs) require pixel-level labels, which are time-consuming and expensive to annotate. To overcome this challenge, this paper proposes a new semi-supervised cloud detection algorithm. It trains a self-attentive generative adversarial network (SAGAN) to extract the feature differences between cloudy and cloud-free images. The main idea is to introduce visual attention into the process of generating "real" cloud-free images. SAGAN training follows three guiding principles:

- expand the attention map over cloud regions, which are replaced by the translated cloud-free image;
- shrink the attention map so that it coincides with the cloud boundary;
- optimize the self-attention network to handle extreme cases.

The inputs to SAGAN training are images and image-level labels. Compared with the labels required by existing CNN-based methods, these are easier, cheaper, and faster to obtain. Experiments on Sentinel-2A Level-1C image data were carried out to test the performance of SAGAN. They show that the method achieves good results while requiring only image-level labels for the training samples.

1. Introduction

With the rapid development of satellite technology, remote sensing images can be acquired in near real time and in large quantities. However, the global annual mean cloud cover is about 66% [1]. Clouds obscure surface features and thereby reduce the usability of optical images in applications [2]. Clouds are bright from the visible to the near-infrared bands. As a result, many bright surfaces, such as bare land, exposed rock, and concrete, are easily confused with clouds. Thin clouds also carry spectral features of the land surface beneath them, which makes them difficult to separate from clear-sky objects [3].

In recent years, deep learning (DL) has been applied to classification, object detection, and image segmentation, and many DL-based cloud detection methods for remote sensing images have been proposed.

- Mateo-García et al. [4] designed a simple convolutional neural network (CNN) architecture to detect clouds in Proba-V multispectral images.
- Le Goff et al. [5] proposed an end-to-end convolutional network for cloud detection in SPOT6 images.
- Zhan et al. [6] designed a CNN to distinguish clouds from snow in remote sensing images.
- Zhang et al. [7] used U-Net for on-board cloud detection on small satellites.
- Xie et al. [8] proposed a DL-based multilevel cloud detection method.
- Li et al. [9] proposed a DL-based cloud detection method for medium- and high-resolution remote sensing images from different sensors.

Previous DL-based methods have been applied successfully to cloud detection in remote sensing images. However, training a CNN usually requires manually annotated pixel-level labels, which is time-consuming and expensive. Unsupervised feature extraction is therefore more attractive. Recently, the generative adversarial network (GAN) was proposed as an unsupervised DL model. It generates fake samples through a two-player minimax game between a generative model (G) and a discriminative model (D) [10]. GANs have been used for image generation [11] and image translation [12], [13]. Because of their effectiveness, GANs are among the most promising methods for unsupervised learning of complex distributions.

In recent years, more and more researchers have added attention mechanisms to deep learning models. Visual attention is a signal-processing mechanism specific to the human visual system. Human vision first scans the whole image quickly and then concentrates on a target region, often called the focus of attention. To obtain more information, it attends to the details of that target and ignores other, less useful information.

This paper proposes a new GAN-based self-attentive cloud detection method, in which the GAN architecture is used to detect cloud regions. The method is inspired by Zhu et al. [13] and Qian et al. [14]. It uses unpaired remote sensing images with corresponding image-level labels (0 for a cloudy image, 1 for a cloud-free image). The main contributions are as follows.

1) We propose a new cloud detection method, the self-attentive GAN (SAGAN), which needs only images and image-level labels. Annotating these labels takes less than 1% of the time needed for pixel-level labels. To the best of our knowledge, this is the first time such a framework has been used for cloud detection.

2) We introduce an attention mechanism into the method. The attention network extracts cloud features and generates cloud masks. Once it is well trained, it can detect cloud regions automatically.

2. Method

2.1. Framework of the proposed approach

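The GAN was originally used to generate fake data. It consists of two networks, a generation network (G) and a discrimination network (D), which compete with each other in a minimax game [10]. G tries to produce "real" samples, while D tries to distinguish real samples from generated ones. The objective is:

$$\min_G \max_D V(D,G)=\mathbb{E}_{x\sim d_x}\left[\log D(x)\right]+\mathbb{E}_{z\sim p_z}\left[\log\left(1-D(G(z))\right)\right]$$

Here D and G play the minimax game, x is a real sample drawn from the data distribution d_x, and z is a noise vector drawn from a prior p_z. After several rounds of this game, the distribution of G(z) approaches d_x, and D can no longer distinguish G(z) from x.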
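The sketch below makes the minimax value concrete. It evaluates the empirical value of the objective in [10], mean log D(x) plus mean log(1 - D(G(z))), from lists of D's output probabilities. It is a plain-Python illustration of the standard GAN objective, not code from the paper, and the function name `gan_value` is hypothetical.

```python
import math


def gan_value(d_real, d_fake):
    """Empirical minimax value V(D, G) of the standard GAN objective.

    d_real: discriminator outputs D(x) on real samples, each in (0, 1).
    d_fake: discriminator outputs D(G(z)) on generated samples, each in (0, 1).
    D tries to maximize this value; G tries to minimize it.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# A fully confused D outputs 0.5 everywhere, giving 2 * log(0.5) = -log(4).
print(gan_value([0.5, 0.5], [0.5, 0.5]))  # about -1.3863
```

When D cannot tell the two sources apart, the value is -log 4, which is the equilibrium value in the original GAN analysis. A confident D, for example one that outputs 0.9 on real samples and 0.1 on fake ones, pushes the value higher.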

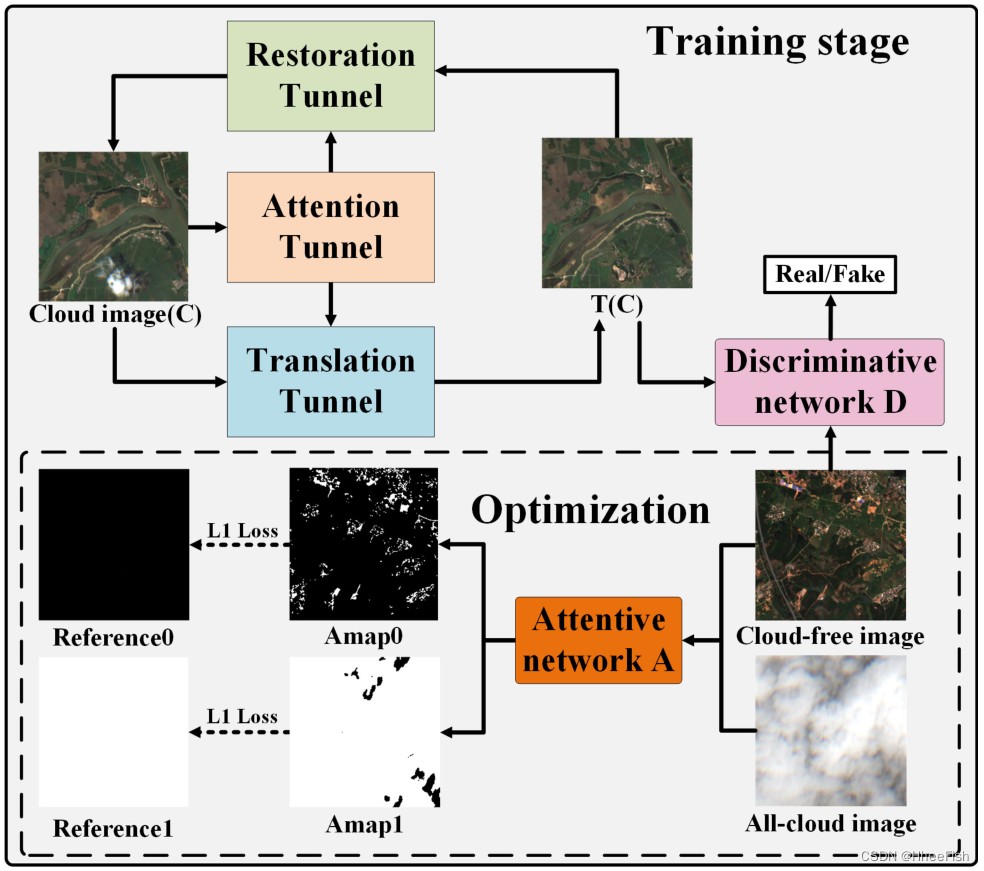

The flowchart of the proposed SAGAN is shown in Fig. 1. The training phase consists of three components: translation, recovery, and attention. An additional optimization step improves the recognition ability of A. In the test phase, only the attention network is applied to the input image to detect clouds.

Fig. 1. Flowchart of cloud detection based on the proposed SAGAN method.
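Only the attention network is used at test time. The sketch below shows that final step under two assumptions not given in this summary: A outputs a per-pixel attention map in [0, 1], and a fixed threshold of 0.5 turns that map into a binary cloud mask. Both the threshold and the name `cloud_mask` are illustrative.

```python
def cloud_mask(attention, threshold=0.5):
    """Turn an attention map (values in [0, 1]) into a binary cloud mask.

    The map is a single-band image stored as a list of rows. The 0.5
    threshold is an illustrative assumption, not a value from the paper.
    """
    return [[1 if a >= threshold else 0 for a in row] for row in attention]


attention_map = [[0.1, 0.8], [0.6, 0.3]]  # hypothetical output of A
print(cloud_mask(attention_map))  # [[0, 1], [1, 0]]
```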

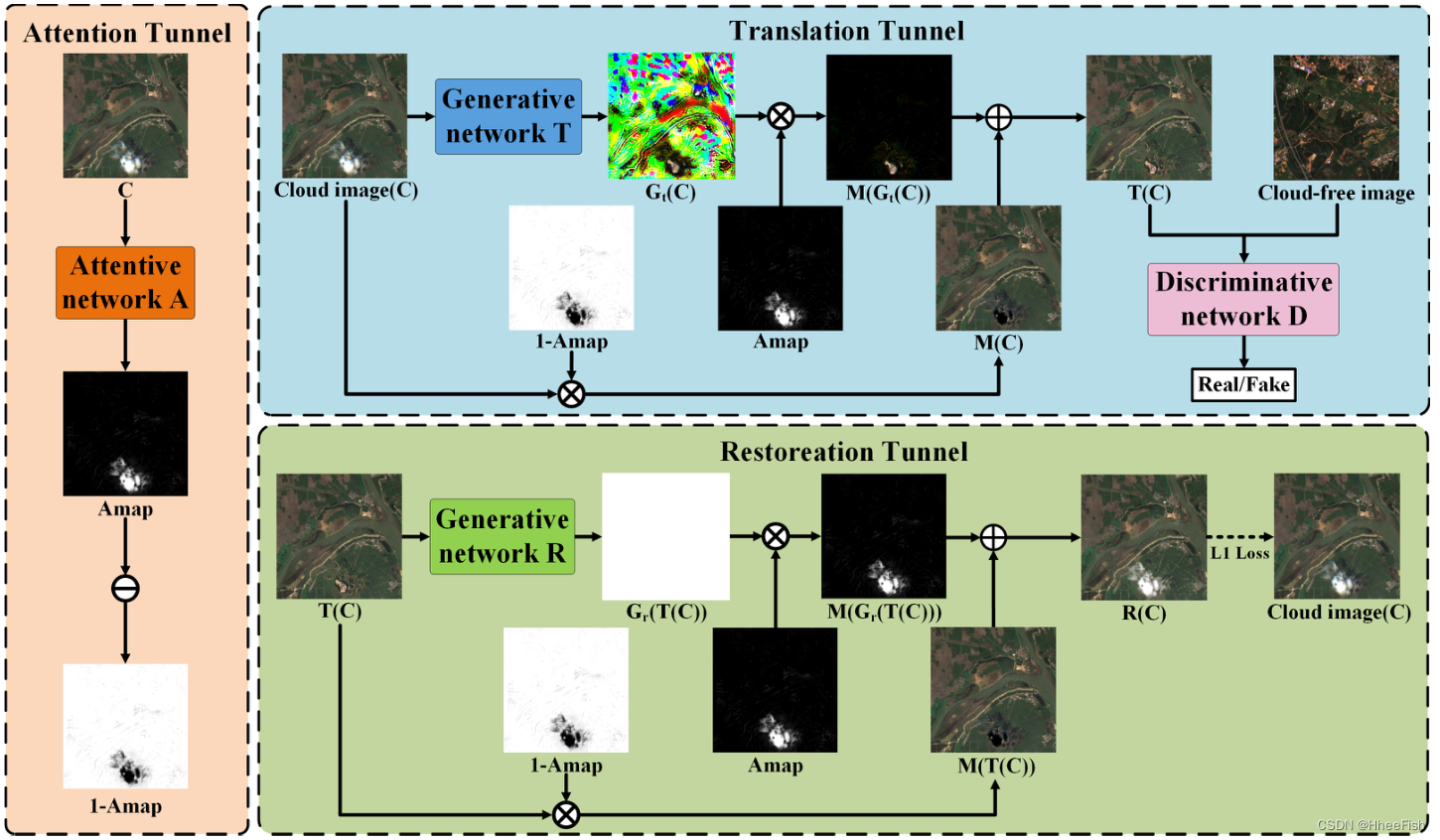

Fig. 2. Details of translation, recovery, and attention. The translation and recovery tracks use the same fusion operation. Translation replaces the detected region of the input image (the white area in Amap) with background, and recovery replaces it with cloud.

The details of translation, recovery, and attention are shown in Fig. 2. For attention, we designed an attention network (A) that produces the cloud attention map P, a matrix whose entries lie in (0, 1). This map guides the translation and recovery processes to focus on cloud regions. Translation converts the attended region into background, and recovery restores the translated region to the original image.

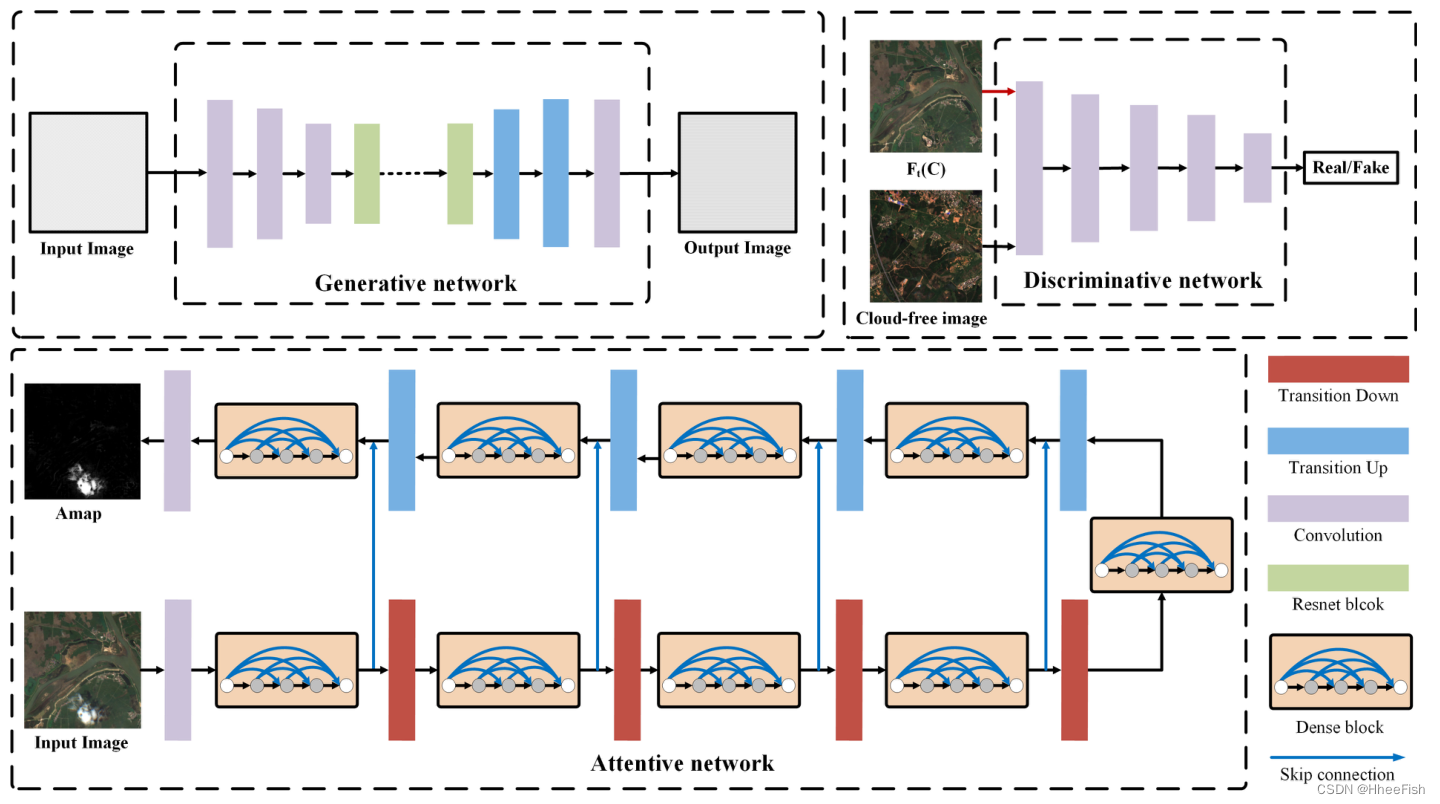

Fig. 3. Detailed composition of the generation, attention, and discrimination networks. T and R use the same network architecture to convert the input image into the target image.

The networks used in SAGAN are detailed in Fig. 3. The self-attention network (A) is inspired by the image segmentation work of Jégou et al. [15] and uses the fully convolutional (FC) DenseNet architecture, which consists of a contracting path and an expanding path.

- The contracting path consists of several basic units, each made of a dense block followed by a transition-down layer.
- The expanding path mirrors the contracting path, except that the transition-down layers are replaced by transition-up layers.
- Feature maps of the same size in the down and up paths are concatenated along the channel dimension.

Both generation networks are based on ResNet [16], which avoids vanishing or exploding gradients and preserves the integrity of the input information. The discrimination network (D) is a patch-based GAN discriminator [12], which has a large receptive field in the original image.
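A patch-based discriminator scores overlapping patches, and each output score depends on one receptive field of the input. This summary does not give D's layer configuration. The sketch below therefore assumes, as a hypothetical example, the 70×70 PatchGAN of Isola et al. [12]: three 4×4 stride-2 convolutions followed by two 4×4 stride-1 convolutions. It then computes the receptive field with the standard recursion over (kernel, stride) pairs.

```python
def receptive_field(layers):
    """Receptive field, in input pixels, of one output unit of a conv stack.

    layers: list of (kernel_size, stride) pairs, ordered from input to output.
    Uses the recursion r <- r + (k - 1) * j, then j <- j * s, where j is the
    cumulative stride (the spacing of the current layer's units in pixels).
    """
    r, j = 1, 1
    for k, s in layers:
        r += (k - 1) * j
        j *= s
    return r


# Hypothetical configuration: the 70x70 PatchGAN layout from [12].
patchgan = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]
print(receptive_field(patchgan))  # 70
```

Adding stride-2 layers grows the receptive field quickly. This is why a shallow patch discriminator can still judge fairly large image regions.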

2.2. Expansion and Translation

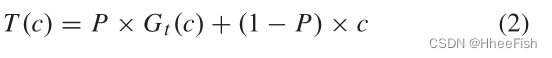

At this stage, the translation track (T) converts the input cloudy image into a cloud-free image. The attention map produced by the attention network determines which regions should be translated. The two structures are combined as follows:

where c denotes the input image, Gt(c) denotes the translated image, and T denotes the translated cloud-free image after the attention operation is applied.
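The fusion equation appears only as an image. This sketch assumes the common attention-guided form T = A(c) ⊙ Gt(c) + (1 - A(c)) ⊙ c. Under this form, attended pixels take the generator output and all other pixels keep the input. The name `attention_fuse` and the pixel values are hypothetical, and the images are single-band lists of rows.

```python
def attention_fuse(attention, generated, original):
    """Assumed attention-guided fusion: A * generated + (1 - A) * original.

    All arguments are single-band images (lists of rows) of equal shape.
    Pixels with A near 1 take the generator output; pixels with A near 0
    keep the original image.
    """
    return [
        [a * g + (1.0 - a) * o for a, g, o in zip(a_row, g_row, o_row)]
        for a_row, g_row, o_row in zip(attention, generated, original)
    ]


c = [[0.9, 0.2], [0.9, 0.2]]     # cloudy input: the bright left column is cloud
gt_c = [[0.2, 0.2], [0.2, 0.2]]  # hypothetical Gt(c): background everywhere
a = [[1.0, 0.0], [1.0, 0.0]]     # attention on the cloud column only
t = attention_fuse(a, gt_c, c)
print(t)  # [[0.2, 0.2], [0.2, 0.2]]
```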

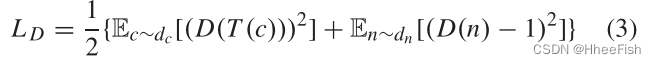

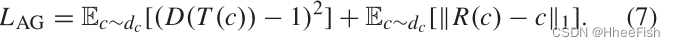

The discrimination network (D) assesses the quality of T. The loss function of D is expressed as follows:

where dc is the distribution of cloudy images and dn is the distribution of cloud-free images.
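The text states that D uses a least-squares loss. This sketch assumes the usual least-squares form, L_D = E_{n~dn}[(D(n) - 1)^2] + E_{c~dc}[D(T)^2], with target 1 for real cloud-free images and 0 for translated ones. Any weighting used in the paper is not reproduced. For simplicity, each D output is one score per image; a patch discriminator would produce a map of scores to average. The helper names are hypothetical.

```python
def mean(values):
    return sum(values) / len(values)


def lsgan_d_loss(d_on_cloud_free, d_on_translated):
    """Least-squares discriminator loss (assumed form, targets 1 and 0).

    d_on_cloud_free: D scores on real cloud-free images n ~ dn (target 1).
    d_on_translated: D scores on translated images T, with c ~ dc (target 0).
    """
    real_term = mean([(d - 1.0) ** 2 for d in d_on_cloud_free])
    fake_term = mean([d ** 2 for d in d_on_translated])
    return real_term + fake_term


print(lsgan_d_loss([1.0, 1.0], [0.0, 0.0]))  # 0.0, a perfect discriminator
print(lsgan_d_loss([0.5], [0.5]))            # 0.5, an undecided discriminator
```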

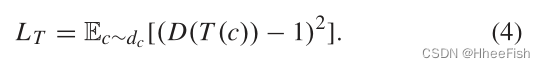

The loss function of T, which is composed of Gt and A, can be written as

We use the least-squares function as the loss function of D. To minimize LT, the translation track tries to translate as much of c as possible into n to confuse D. This means that A expands the attention area and Gt generates high-quality images.
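The "expansion" argument can be checked on a toy example. This sketch makes three assumptions. LT takes the least-squares form E_c[(D(T) - 1)^2]. D is replaced by a hypothetical stand-in that scores an image by its fraction of non-bright pixels. Gt is a hypothetical generator that outputs background everywhere. Under these assumptions, every cloud pixel left outside the attention map raises LT.

```python
def translation_loss(d_scores):
    """L_T in least-squares form (assumed): translated images should be
    scored as real cloud-free images, i.e. pushed toward target 1."""
    return sum((d - 1.0) ** 2 for d in d_scores) / len(d_scores)


def toy_discriminator(image, cloud_level=0.5):
    """Hypothetical stand-in for D: the fraction of pixels that do not look
    like cloud (brightness below cloud_level). Not the paper's network."""
    return sum(1 for p in image if p < cloud_level) / len(image)


def fuse(attention, generated, original):
    """Assumed fusion T = A * Gt(c) + (1 - A) * c on flattened images."""
    return [a * g + (1.0 - a) * o for a, g, o in zip(attention, generated, original)]


c = [0.9, 0.9, 0.2, 0.2]     # two bright cloud pixels, two background pixels
gt_c = [0.2, 0.2, 0.2, 0.2]  # hypothetical Gt(c): background everywhere

for attention in ([0, 0, 0, 0], [1, 0, 0, 0], [1, 1, 0, 0]):
    t = fuse(attention, gt_c, c)
    print(attention, translation_loss([toy_discriminator(t)]))
# Covering more of the cloud lowers L_T: 0.25, then 0.0625, then 0.0
```

On its own, this pressure would push A to grow. The recovery loss in the next subsection is what stops it from covering everything.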

2.3. Reduction and Recovery

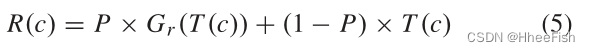

To restrict A to cloud regions only, we designed a recovery structure (R). The recovery structure restores the translated cloud-free image to the original cloudy image. The attention map generated by the attention structure (A) determines which regions should be restored. The process can be combined as follows:

where Gr(T) denotes the restored image and R denotes the restored image after the attention operation is applied.

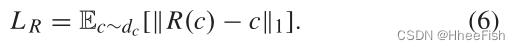

We then compare the restored image R with the original image c. The recovery process is evaluated as follows:

We use the absolute (L1) loss as the loss function of R, which is composed of A and Gr. The absolute loss measures the absolute difference between R and c.
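The recovery track and its absolute loss are sketched below. The sketch assumes the recovery fusion mirrors the translation one, R = A ⊙ Gr(T) + (1 - A) ⊙ T, since Fig. 2 says the two tracks share the same fusion operation. It also assumes LR is the mean absolute difference between R and c. The images are flattened single-band lists, and all values are hypothetical.

```python
def fuse(attention, generated, base):
    """Assumed recovery fusion: R = A * Gr(T) + (1 - A) * T."""
    return [a * g + (1.0 - a) * b for a, g, b in zip(attention, generated, base)]


def recovery_loss(restored, original):
    """L_R as the mean absolute (L1) difference between R and c."""
    return sum(abs(r - o) for r, o in zip(restored, original)) / len(original)


c = [1.0, 1.0, 0.25, 0.25]     # original cloudy image (flattened, one band)
t = [0.25, 0.25, 0.25, 0.25]   # translated cloud-free image T
a = [1.0, 1.0, 0.0, 0.0]       # attention on the two cloud pixels
gr_t = [0.75, 1.0, 0.5, 0.5]   # hypothetical, imperfect Gr(T)

r = fuse(a, gr_t, t)           # only attended pixels are restored
print(r)                       # [0.75, 1.0, 0.25, 0.25]
print(recovery_loss(r, c))     # 0.0625: Gr(T) gives 0.75 instead of 1.0
```

The unattended pixels of Gr(T) (0.5 here) never reach R. Only the restoration error inside the attention map counts toward LR.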

Under the constraint of LT, the attention area never shrinks to zero. Part of c is therefore always changed by translation and has to be restored by Gr, so LR never reaches its minimum. The best way to reduce LR is to keep as much of c unchanged as possible. Because the changed area of c is determined by the attention map in this cycle, A shrinks the attention area. Meanwhile, Gr tries to restore the changed area to the original image c to minimize LR.

Under the constraints of LT and LR, the attention network plays a "minimax" game that guides the attention map to match the cloud boundary closely. The loss functions of A, Gt, and Gr can be combined as follows:

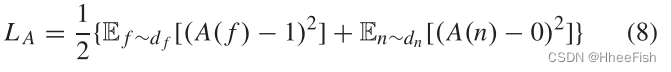
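The toy brute-force search below illustrates how the two pressures balance. It uses two hypothetical stand-in losses, not the paper's networks. The first is a toy LT in which every cloud pixel left outside the attention map lowers the discriminator score. The second is a toy LR in which an imperfect Gr adds a fixed error E for every attended pixel. Over all binary attention maps on a six-pixel image, the combined cost is smallest exactly at the cloud mask.

```python
from itertools import product

N = 6
cloud = (0, 1, 1, 1, 0, 0)  # hypothetical ground-truth cloud pixels
E = 0.05                    # hypothetical per-pixel restoration error of Gr


def toy_lt(attention):
    """Toy L_T: a hypothetical D scores T by its fraction of cloud-free
    pixels, so every cloud pixel left outside the attention raises L_T."""
    uncovered = sum(1 for m, a in zip(cloud, attention) if m and not a)
    return (uncovered / N) ** 2


def toy_lr(attention):
    """Toy L_R: Gr is imperfect, so every attended pixel adds error E."""
    return E * sum(attention) / N


# Exhaustively search all 2**N binary attention maps.
best = min(product((0, 1), repeat=N), key=lambda a: toy_lt(a) + toy_lr(a))
print(best)  # (0, 1, 1, 1, 0, 0): the attention settles on the cloud mask
```

In this toy, the balance depends on the relative size of the two terms. With E above 1/6, leaving one cloud pixel uncovered becomes cheaper than covering it.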

2.4. Optimization

The above process considers neither cloud-free images nor fully cloud-covered images. A is therefore not suited to these two extreme cases and cannot generate accurate attention maps for them. To avoid this problem, the method introduces an additional algorithm that optimizes A to make full use of spectral information. The optimization process is shown in Fig. 1. The attention map of a cloud-free image should be an all-zero matrix, and the attention map of a fully cloudy image should be an all-one matrix. The optimization function is as follows:

where df is the distribution of fully cloud-covered images.

By minimizing LA, A learns spatial and spectral information about clouds and background and can therefore generate accurate attention maps.
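The exact form of LA is only given as an image. This sketch assumes a mean absolute error that pulls A(n) toward all zeros for cloud-free images n ~ dn and A(f) toward all ones for fully cloudy images f ~ df. The function names are hypothetical, and each attention map is flattened to a list.

```python
def mean_abs(values, target):
    """Mean absolute deviation of a flattened attention map from a constant."""
    return sum(abs(v - target) for v in values) / len(values)


def attention_prior_loss(attn_cloud_free, attn_full_cloud):
    """Assumed form of L_A for the two extreme cases.

    attn_cloud_free: attention maps A(n) for cloud-free images (target 0).
    attn_full_cloud: attention maps A(f) for fully cloudy images (target 1).
    """
    zero_term = sum(mean_abs(m, 0.0) for m in attn_cloud_free) / len(attn_cloud_free)
    one_term = sum(mean_abs(m, 1.0) for m in attn_full_cloud) / len(attn_full_cloud)
    return zero_term + one_term


print(attention_prior_loss([[0.0, 0.0]], [[1.0, 1.0]]))  # 0.0
print(attention_prior_loss([[0.5, 0.0]], [[1.0, 0.5]]))  # 0.5
```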

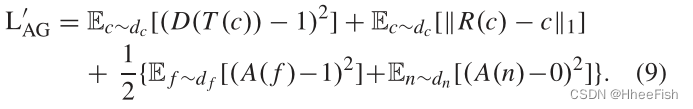

In the proposed SAGAN method, Gr, Gt, and A cooperate with each other, and their parameters are updated together. The final loss function is as follows:
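The final objective and its weights are not reproduced in this text. As an illustration only, the generator-side terms could be combined as a weighted sum. The weights `lambda_r = 10` and `lambda_a = 1` below are placeholder values chosen for the example, not values from the paper. D would still be trained separately with its own least-squares loss.

```python
def total_loss(l_t, l_r, l_a, lambda_r=10.0, lambda_a=1.0):
    """Hypothetical combination of the objectives shared by A, Gt and Gr.

    lambda_r and lambda_a are illustrative placeholder weights, not the
    paper's values; D is updated separately with its own loss.
    """
    return l_t + lambda_r * l_r + lambda_a * l_a


print(total_loss(0.0625, 0.0625, 0.5))  # 1.1875
```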

References

[1] Y. Zhang, W. B. Rossow, A. A. Lacis, V. Oinas, and M. I. Mishchenko, “Calculation of radiative fluxes from the surface to top of atmosphere based on ISCCP and other global data sets: Refinements of the radiative transfer model and the input data,” J. Geophys. Res., vol. 109, Oct. 2004, Art. no. D19105.

[2] A. Fisher, “Cloud and cloud-shadow detection in SPOT5 HRG imagery with automated morphological feature extraction,” Remote Sens., vol. 6, no. 1, pp. 776–800, 2014.

[3] R. Cahalan, L. Oreopoulos, G. Wen, A. Marshak, S.-C. Tsay, and T. DeFelice, “Cloud characterization and clear-sky correction from Landsat-7,” Remote Sens. Environ., vol. 78, nos. 1–2, pp. 83–98, 2001.

[4] G. Mateo-García, L. Gómez-Chova, and G. Camps-Valls, “Convolutional neural networks for multispectral image cloud masking,” in Proc. IEEE Int. Geosci. Remote Sens. Symp. (IGARSS), Jul. 2017, pp. 2255–2258.

[5] M. L. Goff, J.-Y. Tourneret, H. Wendt, M. Ortner, and M. Spigai, “Deep learning for cloud detection,” in Proc. 8th Int. Conf. Pattern Recognit. Syst. (ICPRS), 2017, pp. 1–6.

[6] Y. Zhan, J. Wang, J. Shi, G. Cheng, L. Yao, and W. Sun, “Distinguishing cloud and snow in satellite images via deep convolutional network,” IEEE Geosci. Remote Sens. Lett., vol. 14, no. 10, pp. 1785–1789, Oct. 2017.

[7] Z. A. Zhang Iwasaki, X. Guodong, and S. Jianing, “Small satellite cloud detection based on deep learning and image compression,” to be published, doi: 10.20944/preprints201802.0103.v1.

[8] F. Xie, M. Shi, Z. Shi, J. Yin, and D. Zhao, “Multilevel cloud detection in remote sensing images based on deep learning,” IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens., vol. 10, no. 8, pp. 3631–3640, Aug. 2017.

[9] Z. Li, H. Shen, Q. Cheng, Y. Liu, S. You, and Z. He, “Deep learning based cloud detection for medium and high resolution remote sensing images of different sensors,” ISPRS J. Photogramm. Remote Sens., vol. 150, pp. 197–212, Apr. 2019.

[10] I. J. Goodfellow et al., “Generative adversarial nets,” in Advances in Neural Information Processing Systems, vol. 27, Z. Ghahramani, M. Welling, C. Cortes, N. D. Lawrence, and K. Q. Weinberger, Eds. Red Hook, NY, USA: Curran Associates, 2014, pp. 2672–2680.

[11] G. J. Qi, “Loss-sensitive generative adversarial networks on Lipschitz densities,” 2017, arXiv:1701.06264. [Online]. Available: https://arxiv.org/abs/1701.06264

[12] P. Isola, J.-Y. Zhu, T. Zhou, and A. A. Efros, “Image-to-image translation with conditional adversarial networks,” in Proc. IEEE Conf. Comput. Vis. Pattern Recognit. (CVPR), Honolulu, HI, USA, Jul. 2017, pp. 5967–5976.

[13] J.-Y. Zhu, T. Park, P. Isola, and A. A. Efros, “Unpaired image-to-image translation using cycle-consistent adversarial networks,” in Proc. IEEE Int. Conf. Comput. Vis. (ICCV), Oct. 2017, pp. 2223–2232.

[14] R. Qian, R. T. Tan, W. Yang, J. Su, and J. Liu, “Attentive generative adversarial network for raindrop removal from a single image,” in Proc. IEEE CVPR, Salt Lake City, UT, USA, Jun. 2018, pp. 2482–2491.

[15] S. Jegou, M. Drozdzal, D. Vazquez, A. Romero, and Y. Bengio, “The one hundred layers Tiramisu: Fully convolutional DenseNets for semantic segmentation,” in Proc. IEEE CVPR, Honolulu, HI, USA, Jul. 2017, pp. 11–19.

[16] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” in Proc. IEEE CVPR, Las Vegas, NV, USA, Jun. 2016, pp. 770–778.

[17] M. Main-Knorn, B. Pflug, J. Louis, V. Debaecker, U. Müller-Wilm, and F. Gascon, “Sen2Cor for Sentinel-2,” Proc. SPIE, vol. 10427, Oct. 2017, Art. no. 1042704.

[18] O. Ronneberger, P. Fischer, and T. Brox, “U-Net: Convolutional networks for biomedical image segmentation,” in Proc. Medical Image Comput. Comput. Assisted Intervention—MICCAI, vol. 9351. Munich, Germany: Springer, Oct. 2015, pp. 234–241.

[19] L.-C. Chen, G. Papandreou, F. Schroff, and H. Adam, “Rethinking Atrous convolution for semantic image segmentation,” 2017, arXiv:1706.05587. [Online]. Available: https://arxiv.org/abs/1706.05587

边栏推荐

- Common text processing tools

- 关于 appium 启动 app 后闪退的问题 - (已解决)

- Sample chapter of "uncover the secrets of asp.net core 6 framework" [200 pages /5 chapters]

- . Net ultimate productivity of efcore sub table sub database fully automated migration codefirst

- Adopt a cow to sprint A shares: it plans to raise 1.85 billion yuan, and Xu Xiaobo holds nearly 40%

- xshell评估期已过怎么办

- Differences between MySQL storage engine MyISAM and InnoDB

- 通过Keil如何查看MCU的RAM与ROM使用情况

- Sample chapter of "uncover the secrets of asp.net core 6 framework" [200 pages /5 chapters]

- [learn wechat from 0] [00] Course Overview

猜你喜欢

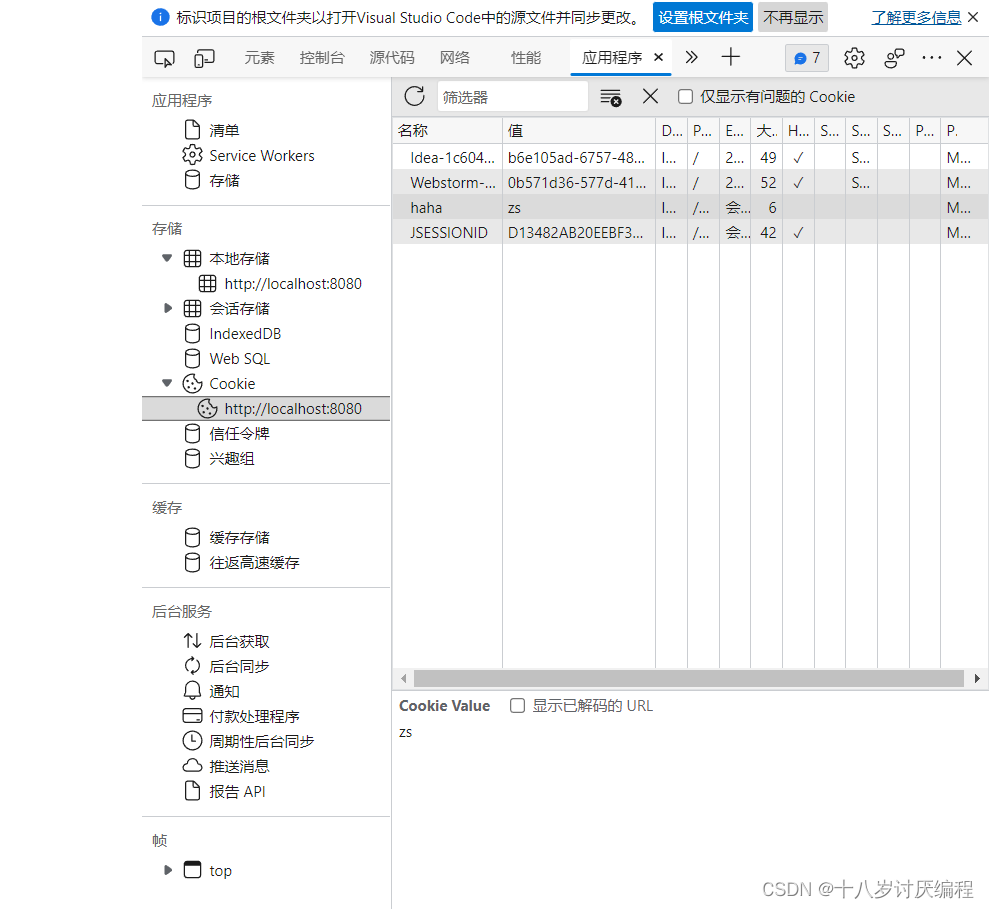

Cookie

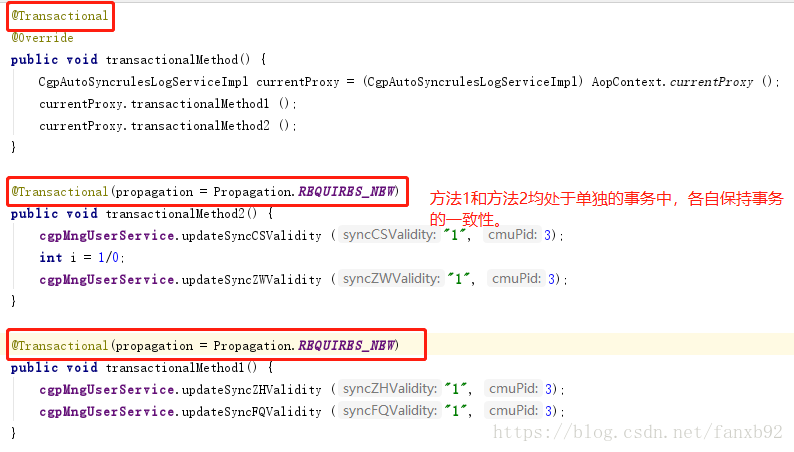

How to apply @transactional transaction annotation to perfection?

![《ASP.NET Core 6框架揭秘》样章[200页/5章]](/img/4f/5688c391dd19129d912a3557732047.jpg)

《ASP.NET Core 6框架揭秘》样章[200页/5章]

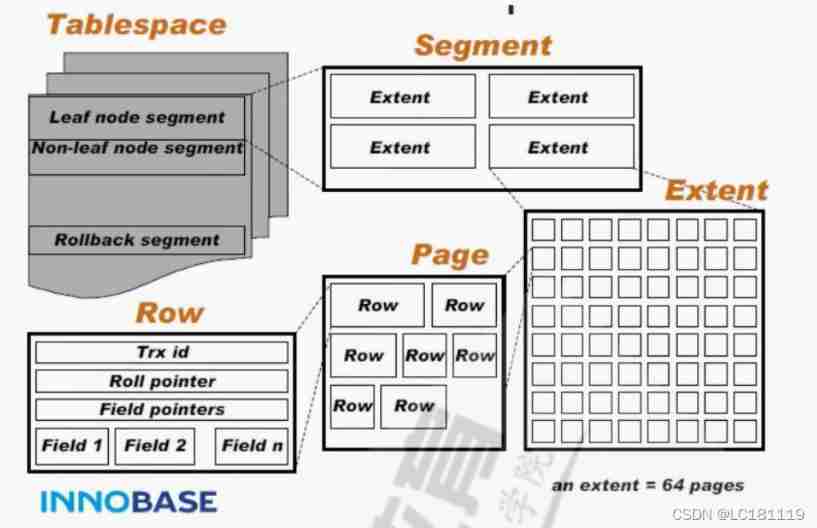

Differences between MySQL storage engine MyISAM and InnoDB

通过Keil如何查看MCU的RAM与ROM使用情况

AUTOCAD——大于180度的角度标注、CAD直径符号怎么输入?

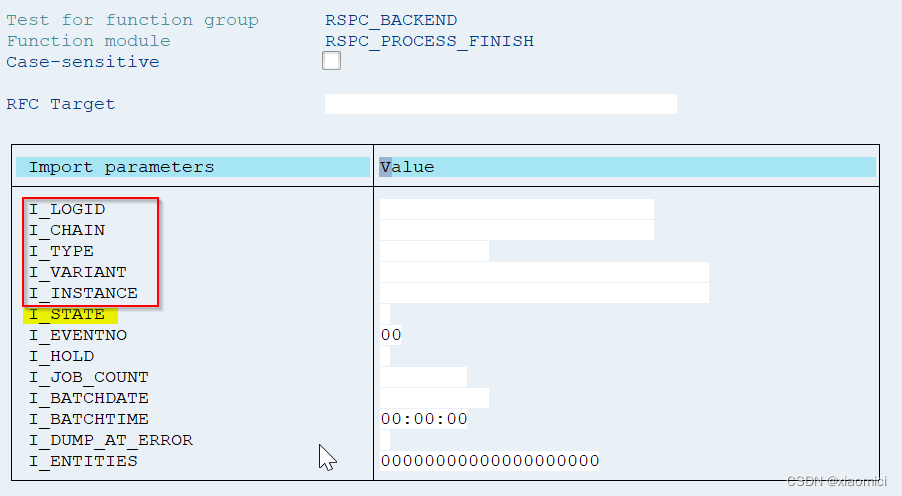

处理链中断后如何继续/子链出错removed from scheduling

为租客提供帮助

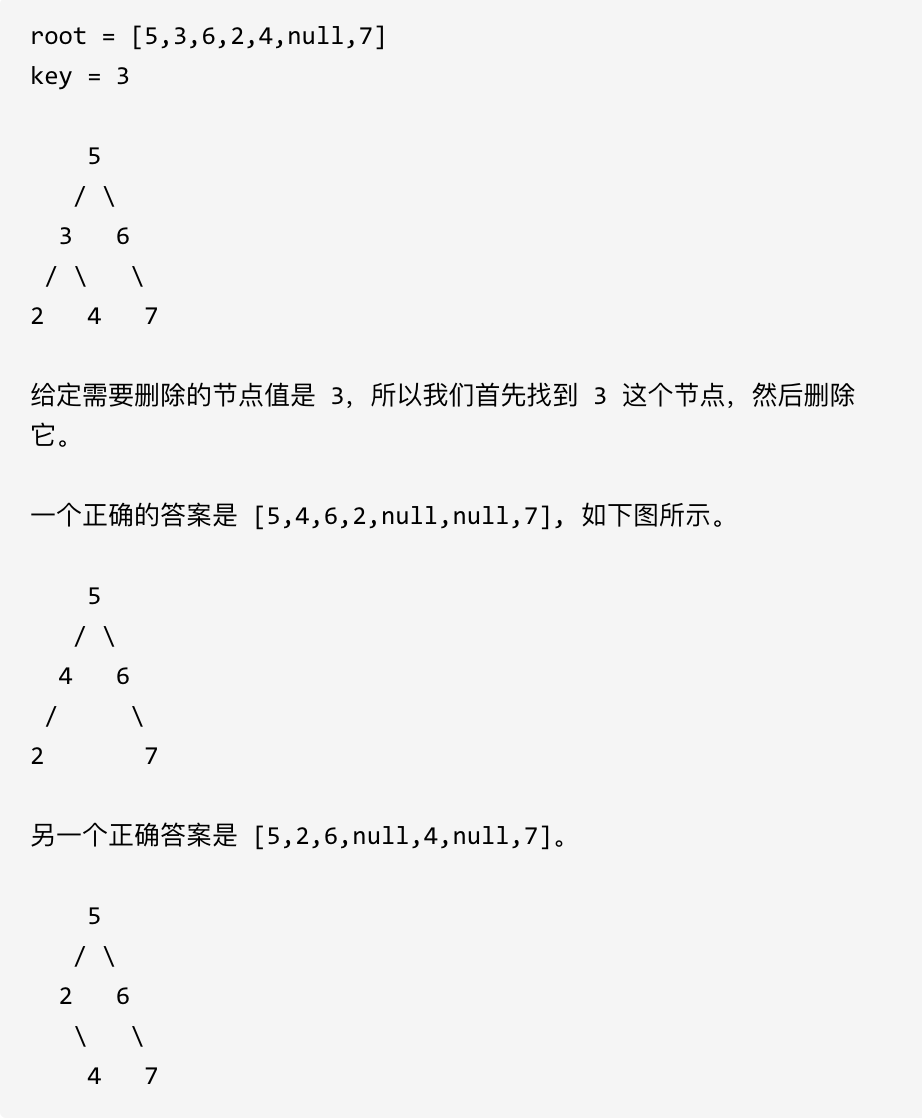

Leetcode skimming: binary tree 27 (delete nodes in the binary search tree)

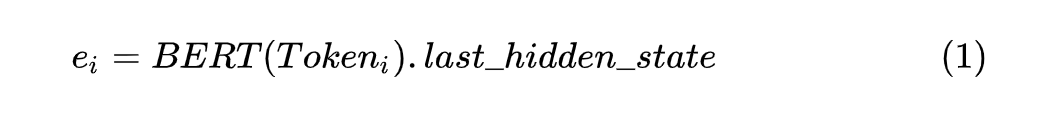

ACL 2022 | small sample ner of sequence annotation: dual tower Bert model integrating tag semantics

随机推荐

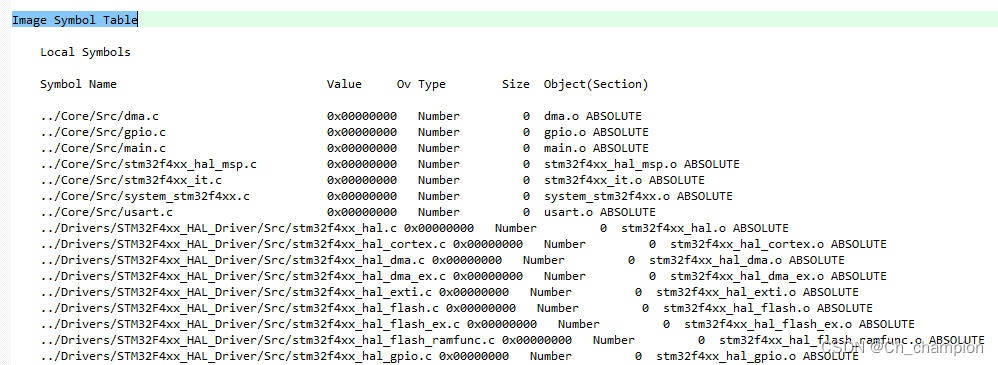

通过Keil如何查看MCU的RAM与ROM使用情况

Leetcode question brushing: binary tree 26 (insertion operation in binary search tree)

Initialization script

Master公式。(用于计算递归的时间复杂度。)

HZOJ #235. 递归实现指数型枚举

Analysis of DHCP dynamic host setting protocol

Cmu15445 (fall 2019) project 2 - hash table details

Steps of building SSM framework

Differences between MySQL storage engine MyISAM and InnoDB

《ASP.NET Core 6框架揭秘》样章[200页/5章]

在字符串中查找id值MySQL

Day22 deadlock, thread communication, singleton mode

ICLR 2022 | 基于对抗自注意力机制的预训练语言模型

处理链中断后如何继续/子链出错removed from scheduling

The URL modes supported by ThinkPHP include four common modes, pathinfo, rewrite and compatibility modes

关于 appium 启动 app 后闪退的问题 - (已解决)

Find ID value MySQL in string

谷歌浏览器如何重置?谷歌浏览器恢复默认设置?

Leetcode brush question: binary tree 24 (the nearest common ancestor of binary tree)

.Net下极限生产力之efcore分表分库全自动化迁移CodeFirst