
How does Kubernetes support stateful applications through StatefulSet? (07)

2022-07-06 20:17:00 【wzlinux】

In the previous lesson we learned about stateless workloads in Kubernetes and put the Deployment object into practice. I believe you have gradually fallen in love with Kubernetes by now.

In this lesson, let's look at another Kubernetes workload, the StatefulSet. As the name suggests, this workload is mainly used to run stateful services. For the difference between stateful and stateless services, you can refer to the previous chapter.

Let's get to know StatefulSet step by step through a concrete example. On the kubectl command line, StatefulSet is usually abbreviated as sts. Deploying a StatefulSet has one prerequisite object, the Headless Service. We will see what role this object plays for a StatefulSet later in this lesson; its other features are explained in detail in a later lesson, so for now you can set aside the details of Service. Let's first look at the following Headless Service:
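
The original listing is not preserved in this copy of the article. A minimal sketch reconstructed from the description in this section (namespace demo, name nginx-demo, port 80, selector app=nginx) might look like the following. The port name and the metadata label are assumptions, and clusterIP: None is the setting that makes a Service headless:

```yaml
# Sketch only: reconstructed from the article's description, not the author's original file.
apiVersion: v1
kind: Service
metadata:
  name: nginx-demo
  namespace: demo        # the demo namespace must already exist
  labels:
    app: nginx           # assumption: label on the Service itself
spec:
  clusterIP: None        # "None" makes this a headless Service
  ports:
    - name: web          # assumption: the port name is illustrative
      port: 80
  selector:
    app: nginx           # selects Pods carrying the label app=nginx
```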

The YAML above creates a Service named nginx-demo in the demo namespace. The Service exposes port 80 and gives access to Pods carrying the label app=nginx.

Now let's use this YAML to create the Service in the cluster:

With the prerequisite Service created, we can now create the StatefulSet object itself. Refer to the following YAML file:
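
The manifest is not preserved in this copy either. A minimal sketch consistent with the rest of the article (name web-demo, namespace demo, two replicas, label app=nginx, spec.serviceName pointing at nginx-demo) might look like this. The container name and the image tag are assumptions:

```yaml
# Sketch only: reconstructed from the article's description, not the author's original file.
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: web-demo
  namespace: demo
spec:
  serviceName: nginx-demo    # must name the headless Service created earlier
  replicas: 2                # yields Pods web-demo-0 and web-demo-1
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx           # must match spec.selector and the Service selector
    spec:
      containers:
        - name: nginx        # assumption: container name
          image: nginx:1.25  # assumption: any nginx image tag would do
          ports:
            - name: web
              containerPort: 80
```

Saved to a file, it can be created with kubectl apply -f followed by the file name.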

You can see that the StatefulSet named web-demo has been deployed.

Now let's explore what makes a StatefulSet special: what features it has, and how it supports running stateful services.

Characteristics of StatefulSet

With kubectl's watch feature (add the -w flag on the command line), we can observe the Pod states changing step by step.

Pods created by a StatefulSet are named according to a fixed rule, $(statefulset name)-$(ordinal), for example web-demo-0 and web-demo-1 in this example.

There is another interesting point here: web-demo-0 is created before web-demo-1, and web-demo-1 is created only after web-demo-0 has reached the Running state. To verify this conjecture, we watch the StatefulSet's Pods in one terminal window:

In another terminal window, we watch the events in this namespace:

Now let's change the replica count of this StatefulSet to 5:
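
The command used in the original is not preserved here. One declarative way to make the same change, shown as a sketch, is to edit the replica count in the StatefulSet manifest and re-apply it. Only the changed field is shown; the rest of the spec is assumed to stay as it was, and the previous value of 2 is inferred from the Pod names mentioned in this article:

```yaml
# Fragment of the web-demo StatefulSet manifest; re-apply the full file with kubectl apply -f.
spec:
  replicas: 5   # previously 2 (inferred); web-demo-2, web-demo-3, web-demo-4 are added in that order
```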

Then we observe the output in the other two terminal windows:

Once again, the Pods managed by the StatefulSet are created in order (2, 3, 4) with predictable names, which is quite different from the random Pod names generated by the Deployment in the previous section.

The corresponding events confirm our conjecture again.

Now let's try scaling in:

At this point, the other two terminal windows show the following:

You can see that during scale-in, the Pods associated with the StatefulSet are deleted in the order 4, 3, 2.

So, for a StatefulSet with N replicas, Pods are created in ordinal order {0 ... N-1} when deploying and deleted one by one in reverse order when removed. This is the first characteristic I want to highlight.

Next, the Pods created by a StatefulSet have fixed, predictable hostnames, for example:

Look at the API object definition of the StatefulSet above. Have you noticed that it is very similar to the Deployment definition from the previous section? The main difference is the spec.serviceName field. This field is very important: based on it, each Pod of the StatefulSet gets a DNS name in the format $(podname).$(headless service name). Let's look at an example.
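
To make the naming rule concrete, here is roughly what the identity-related fields of the first Pod look like, assuming the StatefulSet is named web-demo, the headless Service nginx-demo, and the namespace demo, as elsewhere in this article. This is a trimmed sketch, not captured output, and the fully qualified name in the comments additionally assumes the default cluster domain cluster.local:

```yaml
# Trimmed sketch of the fields you would find via: kubectl get pod web-demo-0 -n demo -o yaml
metadata:
  name: web-demo-0
  namespace: demo
  labels:
    app: nginx
    statefulset.kubernetes.io/pod-name: web-demo-0   # label added by the StatefulSet controller
spec:
  hostname: web-demo-0     # set to the Pod name
  subdomain: nginx-demo    # set to the StatefulSet's spec.serviceName
# Resulting DNS names:
#   web-demo-0.nginx-demo                          (resolvable from within namespace demo)
#   web-demo-0.nginx-demo.demo.svc.cluster.local   (fully qualified, assuming cluster.local)
```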

The current mapping between Pods and IPs is as follows:

The IP address of Pod web-demo-0 is 10.244.0.39, and that of web-demo-1 is 10.244.0.40. Here we use kubectl run to create a Pod named dns-test in the same namespace, demo, and attach to its container, similar to docker run -it --rm.

Inside the container, we run nslookup to query their in-cluster DNS addresses, as shown below:

You can see that every Pod has a corresponding A record.

Now let's delete these Pods and see what changes:

After the deletion succeeds, you will find that the StatefulSet has created new Pods with the same names. The only thing that has changed is their IP addresses.

Let's check the DNS records again:

As you can see, the Pods' DNS names have not changed; only the IP addresses have. So when a Pod drifts to another node because its node failed, or is deleted and recreated after a failure, its IP changes but its DNS name does not. This means services can communicate reliably through the stable Pod DNS names instead of relying on Pod IPs.

It is the spec.serviceName field that ensures the Pods associated with a StatefulSet have a stable network identity: the Pod's ordinal, hostname, DNS record name, and so on.

The last point I want to make is that, for stateful services, each replica uses persistent storage, and each replica's data is different.

Through PersistentVolumeClaims (PVCs), a StatefulSet guarantees a one-to-one binding between each Pod and its storage volume. Also, deleting a Pod associated with a StatefulSet does not delete its associated PVC.
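
As a brief illustration of how this binding is usually declared, a StatefulSet can carry a volumeClaimTemplates section. The fragment below is a sketch under assumptions: the template name data, the 1Gi size, the mount path, and the image tag are all illustrative, and a default StorageClass is assumed to exist in the cluster:

```yaml
# Fragment of a StatefulSet spec; names, size, and mount path are illustrative assumptions.
spec:
  template:
    spec:
      containers:
        - name: nginx
          image: nginx:1.25
          volumeMounts:
            - name: data                        # refers to the claim template below
              mountPath: /usr/share/nginx/html
  volumeClaimTemplates:
    - metadata:
        name: data
      spec:
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 1Gi
# Each Pod gets its own PVC named <template name>-<pod name>,
# e.g. data-web-demo-0 and data-web-demo-1, and is re-bound to it after being recreated.
```

Note that volumeClaimTemplates cannot be changed on an existing StatefulSet, so this section has to be part of the manifest when the StatefulSet is created.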

We will cover this in the later chapters on storage, so we skip the details here.

How to update a StatefulSet

So what should you do if you want to upgrade a StatefulSet?

StatefulSet supports two update strategies, RollingUpdate and OnDelete.

RollingUpdate is the default update strategy. It performs a rolling upgrade of the Pods, similar in spirit to the RollingUpdate strategy of the Deployment introduced in the last lesson. For example, if we update the image, the upgrade proceeds roughly as follows: working in reverse ordinal order, the controller deletes each Pod and recreates it with the new image, one Pod at a time. You can watch this with kubectl get pod -n demo -w -l app=nginx.

The RollingUpdate strategy also supports a partition parameter for updating only part of a StatefulSet: all Pods whose ordinal is greater than or equal to partition are updated. You can experiment with this by manually editing the StatefulSet configuration.
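
A sketch of what such a configuration could look like is shown below. The partition value 3 is a hypothetical choice, and the comment assumes the StatefulSet currently has 5 replicas:

```yaml
# Fragment of a StatefulSet spec; the partition value is a hypothetical example.
spec:
  updateStrategy:
    type: RollingUpdate
    rollingUpdate:
      partition: 3   # with 5 replicas, only web-demo-3 and web-demo-4 are updated;
                     # web-demo-0 to web-demo-2 keep the old Pod template
```

Lowering partition step by step, down to 0, then rolls the change out to the remaining Pods, which makes this a simple way to stage an upgrade.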

When the update strategy is set to OnDelete, we must delete a Pod manually before a new, updated Pod is created.
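
For comparison, the corresponding setting is a single field. This is a minimal sketch of the fragment, with the rest of the StatefulSet spec assumed unchanged:

```yaml
# Fragment of a StatefulSet spec.
spec:
  updateStrategy:
    type: OnDelete   # the controller does not replace Pods after a template change;
                     # each Pod picks up the new template only when it is deleted and recreated
```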

Summary

Now let's summarize the characteristics of StatefulSet:

- It provides a fixed network identity, such as the hostname and DNS name;

- It supports persistent storage, bound one-to-one to each instance;

- It deploys and scales out in order;

- It terminates and deletes in order;

- During a rolling upgrade, it also follows a defined order.

With these capabilities, we can use StatefulSet to deploy stateful services such as MySQL, ZooKeeper, and MongoDB. You can follow this tutorial to build a ZooKeeper cluster on Kubernetes.

Welcome to scan the code to pay attention to , For more information

边栏推荐

- Example of applying fonts to flutter

- 【云原生与5G】微服务加持5G核心网

- Qinglong panel white screen one key repair

- 持续测试(CT)实战经验分享

- Node.js: express + MySQL实现注册登录,身份认证

- Continuous test (CT) practical experience sharing

- [network planning] Chapter 3 data link layer (3) channel division medium access control

- Ideas and methods of system and application monitoring

- 【Yann LeCun点赞B站UP主使用Minecraft制作的红石神经网络】

- Poj3617 best cow line

猜你喜欢

Tencent architects first, 2022 Android interview written examination summary

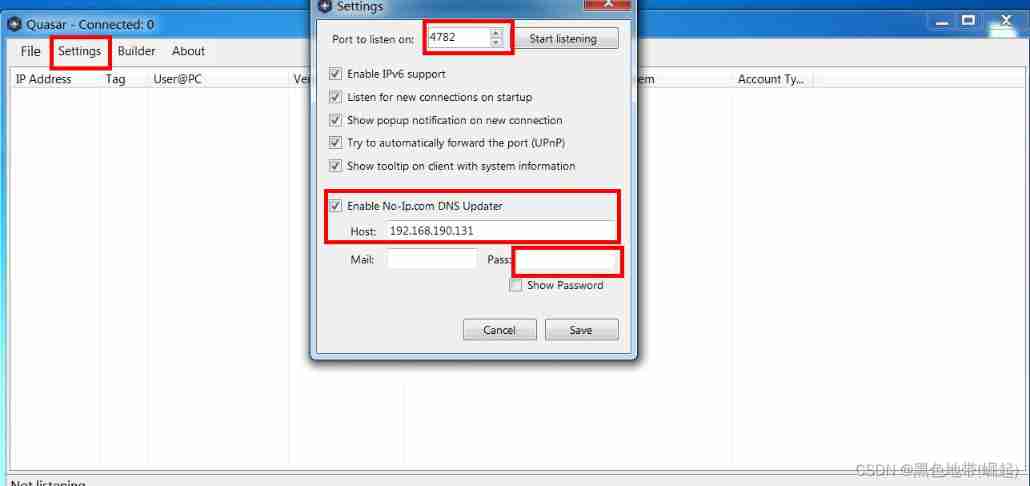

Configuration and simple usage of the EXE backdoor generation tool quasar

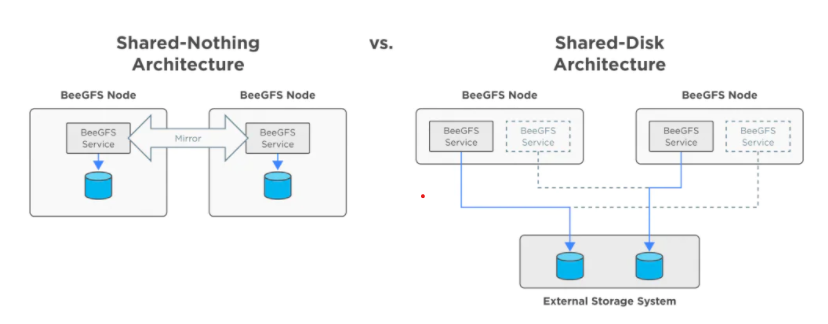

beegfs高可用模式探讨

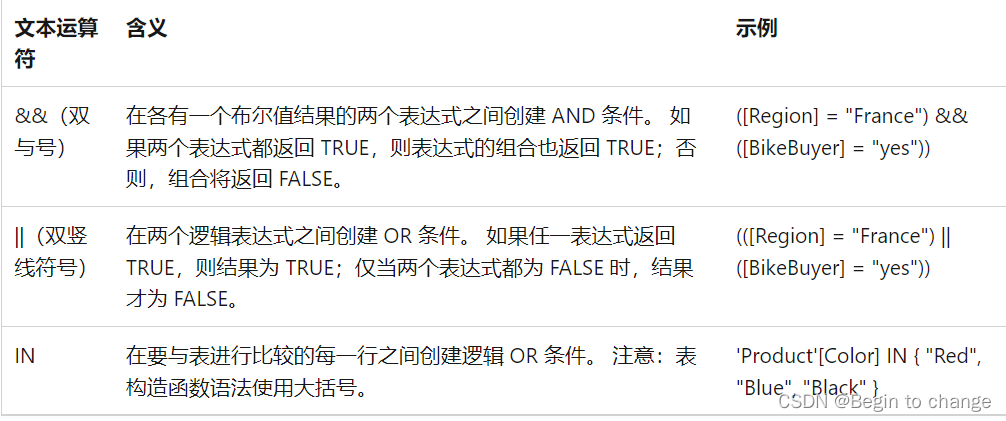

PowerPivot——DAX(初识)

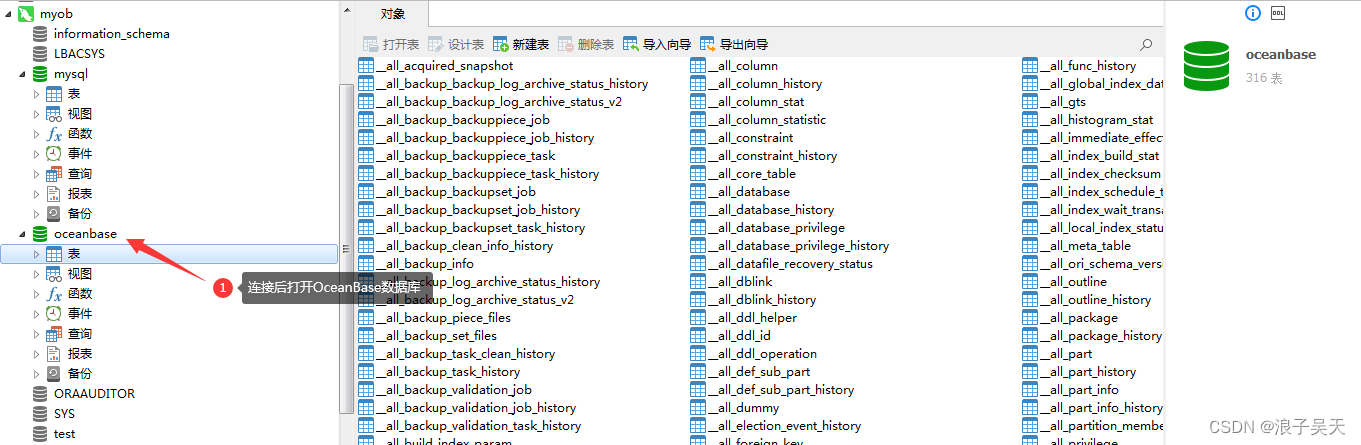

OceanBase社区版之OBD方式部署方式单机安装

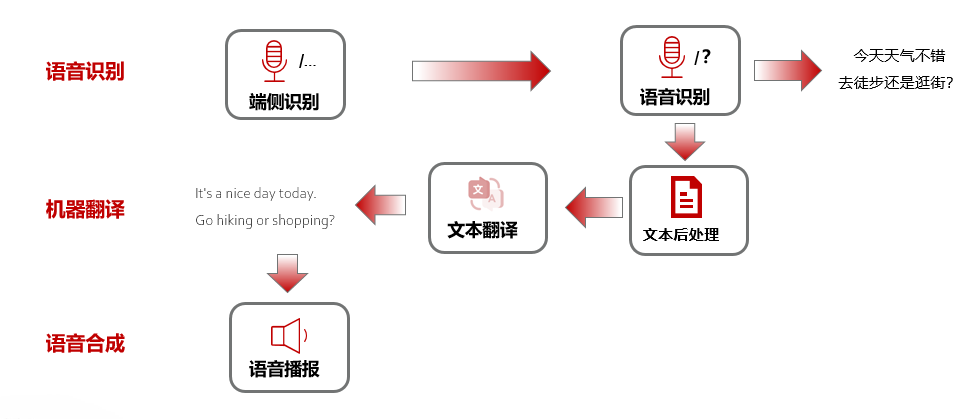

HMS Core 机器学习服务打造同传翻译新“声”态,AI让国际交流更顺畅

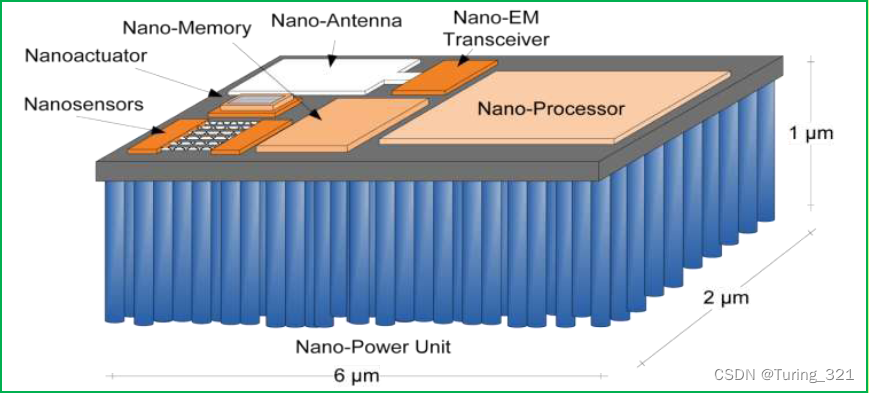

5. 无线体内纳米网:十大“可行吗?”问题

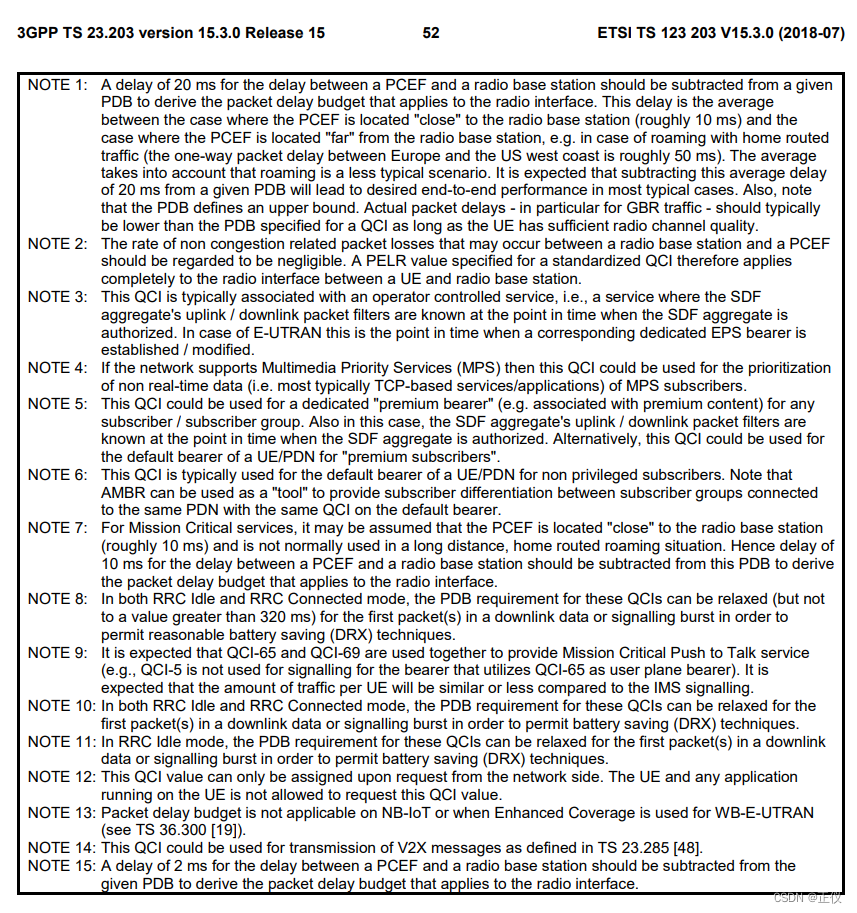

Standardized QCI characteristics

![[network planning] Chapter 3 data link layer (4) LAN, Ethernet, WLAN, VLAN](/img/b8/3d48e185bb6eafcdd49889f0a90657.png)

[network planning] Chapter 3 data link layer (4) LAN, Ethernet, WLAN, VLAN

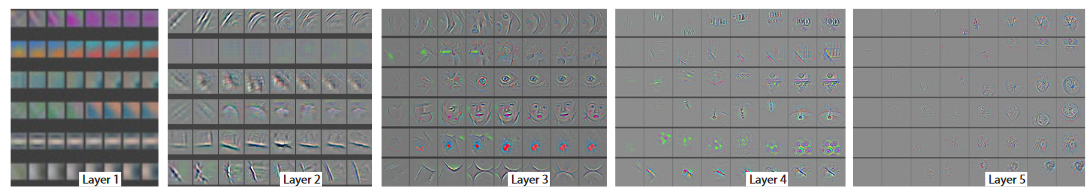

Deep learning classification network -- zfnet

随机推荐

Special topic of rotor position estimation of permanent magnet synchronous motor -- fundamental wave model and rotor position angle

范式的数据库具体解释

Method keywords deprecated, externalprocname, final, forcegenerate

永磁同步电机转子位置估算专题 —— 基波模型类位置估算概要

2022年6月语音合成(TTS)和语音识别(ASR)论文月报

Catch ball game 1

OceanBase社区版之OBD方式部署方式单机安装

AddressSanitizer 技术初体验

爬虫(14) - Scrapy-Redis分布式爬虫(1) | 详解

Tencent cloud database public cloud market ranks top 2!

棋盘左上角到右下角方案数(2)

Standardized QCI characteristics

【计网】第三章 数据链路层(3)信道划分介质访问控制

句号压缩过滤器

A5000 vgpu display mode switching

Cesium Click to draw a circle (dynamically draw a circle)

Leetcode question 283 Move zero

HDU 1026 search pruning problem within the labyrinth of Ignatius and the prince I

Guangzhou's first data security summit will open in Baiyun District

Tencent T4 architect, Android interview Foundation