
MongoDB Meets Spark (Integration)

2022-07-07 13:12:00 【cui_yonghua】


One. Advantages of MongoDB compared with HDFS

1. Storage granularity: HDFS stores data as files split into large blocks (64 MB to 128 MB each by default), whereas MongoDB works at the finer granularity of individual documents;

2. MongoDB supports indexes, a concept HDFS lacks, so reads that look up specific records are faster;

3. MongoDB makes it easier to modify data, whereas HDFS files are write-once and can only be appended to;

4. HDFS response times are on the order of minutes, while MongoDB's are on the order of milliseconds;

5. MongoDB's powerful aggregation framework can be used to filter or preprocess data;

6. With MongoDB there is no need for the traditional pattern of computing results in an in-memory database such as Redis and then saving them to HDFS.
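Point 5 can be illustrated with a minimal sketch of an aggregation pipeline, which is just a list of stage documents. It assumes documents with `name` and `age` fields; the helper `build_age_filter_pipeline` is hypothetical. The list can be passed as-is to PyMongo's `Collection.aggregate()`; the Spark connector (2.x/3.x releases, per its documentation) accepts a JSON string through a `pipeline` read option, so the filtering runs inside MongoDB before Spark receives the data. Treat the option name as an assumption and check it against the connector version you use.

```python
import json


def build_age_filter_pipeline(min_age):
    """Build a MongoDB aggregation pipeline (hypothetical helper).

    Keeps documents whose "age" is >= min_age and returns only the
    "name" and "age" fields.
    """
    return [
        {"$match": {"age": {"$gte": min_age}}},        # filter on the server
        {"$project": {"_id": 0, "name": 1, "age": 1}},  # drop unneeded fields
    ]


pipeline = build_age_filter_pipeline(100)

# With PyMongo (requires a running server), the list is passed directly:
#   from pymongo import MongoClient
#   coll = MongoClient("mongodb://127.0.0.1")["test"]["coll"]
#   for doc in coll.aggregate(pipeline):
#       print(doc)

# With the Spark connector, the pipeline is serialized to JSON
# (assumed "pipeline" read option; verify for your connector version):
#   df = spark.read.format("com.mongodb.spark.sql") \
#       .option("pipeline", json.dumps(pipeline)).load()
pipeline_json = json.dumps(pipeline)
print(pipeline_json)
```

Pushing `$match` and `$project` into MongoDB means only the matching documents and fields cross the network, which is the preprocessing benefit the list above refers to.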

Two. Layered architecture of a big data platform

MongoDB can replace HDFS as the core of a big data platform, which can then be layered as follows:

Layer 1 (storage): MongoDB or HDFS;

Layer 2 (resource management): e.g. YARN, Mesos, Kubernetes (K8s);

Layer 3 (compute engine): e.g. MapReduce, Spark;

Layer 4 (programming interfaces): e.g. Pig, Hive, Spark SQL, Spark Streaming, DataFrame.
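To make the layering concrete, the sketch below assembles a `spark-submit` command line in which each argument maps to one layer: the MongoDB URIs are the storage layer, `--master` selects the resource manager, `spark-submit` runs the compute engine, and the script is the program interface. It assumes the 2.x connector settings and package coordinate used in this article's source code; the helper `build_spark_submit` and the host names are hypothetical placeholders, while the master URL formats (`local[N]`, `yarn`, `mesos://`, `k8s://`) are the ones Spark documents.

```python
import shlex


def build_spark_submit(master, script, input_uri, output_uri,
                       package="org.mongodb.spark:mongo-spark-connector_2.11:2.0.0"):
    """Assemble a spark-submit argument list (hypothetical helper).

    - input_uri / output_uri: storage layer (MongoDB)
    - master: resource-management layer
    - spark-submit: compute engine (Spark)
    - script: program-interface layer (a PySpark / Spark SQL job)
    """
    return [
        "./bin/spark-submit",
        "--master", master,
        "--conf", f"spark.mongodb.input.uri={input_uri}",
        "--conf", f"spark.mongodb.output.uri={output_uri}",
        "--packages", package,
        script,
    ]


# Master URLs for the resource-management layer; host names are placeholders.
MASTERS = {
    "local": "local[4]",
    "yarn": "yarn",
    "mesos": "mesos://mesos-master:5050",
    "k8s": "k8s://https://k8s-apiserver:6443",
}

for name, master in MASTERS.items():
    cmd = build_spark_submit(master, "introduction.py",
                             "mongodb://127.0.0.1/test.coll",
                             "mongodb://127.0.0.1/test.coll")
    # shlex.join (Python 3.8+) quotes arguments such as "local[4]" for the shell
    print(f"{name}: {shlex.join(cmd)}")
```

Swapping only the `--master` value moves the same job between resource managers, which is the point of keeping the layers independent.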

References:

mongo-python-driver: https://github.com/mongodb/mongo-python-driver/

Official documentation: https://www.mongodb.com/docs/spark-connector/current/

Three. Source code walkthrough

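The example below is `mongo-spark/examples/src/test/python/introduction.py` from the MongoDB Spark connector repository. It targets the 2.x connector (the `com.mongodb.spark.sql` data source and the `spark.mongodb.input.uri` / `spark.mongodb.output.uri` settings); the 10.x connector covered by the current official documentation uses the `mongodb` format and different configuration keys instead.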

```python
# -*- coding: UTF-8 -*-
#
# Copyright 2016 MongoDB, Inc.
#
# Licensed to the Apache Software Foundation (ASF) under one or more
# contributor license agreements. See the NOTICE file distributed with
# this work for additional information regarding copyright ownership.
# The ASF licenses this file to You under the Apache License, Version 2.0
# (the "License"); you may not use this file except in compliance with
# the License. You may obtain a copy of the License at
#
# http://www.apache.org/licenses/LICENSE-2.0
#
# Unless required by applicable law or agreed to in writing, software
# distributed under the License is distributed on an "AS IS" BASIS,
# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
# See the License for the specific language governing permissions and
# limitations under the License.

# To run this example use:
# ./bin/spark-submit --master "local[4]" \
#   --conf "spark.mongodb.input.uri=mongodb://127.0.0.1/test.coll?readPreference=primaryPreferred" \
#   --conf "spark.mongodb.output.uri=mongodb://127.0.0.1/test.coll" \
#   --packages org.mongodb.spark:mongo-spark-connector_2.11:2.0.0 \
#   introduction.py

from pyspark.sql import SparkSession

if __name__ == "__main__":
    spark = SparkSession.builder.appName("Python Spark SQL basic example").getOrCreate()

    # Raise the log4j root level so Spark's logging does not drown out the prints
    logger = spark._jvm.org.apache.log4j
    logger.LogManager.getRootLogger().setLevel(logger.Level.FATAL)

    # Save some data to the collection named in spark.mongodb.output.uri
    characters = spark.createDataFrame(
        [("Bilbo Baggins", 50), ("Gandalf", 1000), ("Thorin", 195), ("Balin", 178),
         ("Kili", 77), ("Dwalin", 169), ("Oin", 167), ("Gloin", 158),
         ("Fili", 82), ("Bombur", None)],
        ["name", "age"])
    characters.write.format("com.mongodb.spark.sql").mode("overwrite").save()

    # Print the schema of the DataFrame that was written
    print("Schema:")
    characters.printSchema()

    # Read from the collection named in spark.mongodb.input.uri;
    # the connector infers the schema by sampling documents
    df = spark.read.format("com.mongodb.spark.sql").load()

    # SQL: expose the DataFrame as a temporary view
    # (registerTempTable is deprecated since Spark 2.0)
    df.createOrReplaceTempView("temp")
    centenarians = spark.sql("SELECT name, age FROM temp WHERE age >= 100")
    print("Centenarians:")
    centenarians.show()

    spark.stop()
```
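The SQL query keeps only rows whose predicate is TRUE. The pure-Python sketch below (it does not touch Spark or MongoDB) mirrors `WHERE age >= 100` over the same rows as the example's `characters` DataFrame, to show which names should appear and why `Bombur` does not: his `age` is null, and in SQL `NULL >= 100` evaluates to NULL rather than TRUE. The helper `sql_gte` is hypothetical, and Spark may print the rows in a different order, since reading from MongoDB does not guarantee ordering.

```python
# Same rows as the example's `characters` DataFrame
characters = [
    ("Bilbo Baggins", 50), ("Gandalf", 1000), ("Thorin", 195), ("Balin", 178),
    ("Kili", 77), ("Dwalin", 169), ("Oin", 167), ("Gloin", 158),
    ("Fili", 82), ("Bombur", None),
]


def sql_gte(value, threshold):
    """Mimic SQL's `value >= threshold`: a NULL (None) operand yields NULL (None)."""
    if value is None:
        return None
    return value >= threshold


# A WHERE clause keeps a row only when the predicate is TRUE (not FALSE, not NULL)
centenarians = [(name, age) for name, age in characters if sql_gte(age, 100) is True]

for name, age in centenarians:
    print(name, age)
```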

边栏推荐

- 如何让electorn打开的新窗口在window任务栏上面

- Japanese government and enterprise employees got drunk and lost 460000 information USB flash drives. They publicly apologized and disclosed password rules

- Common text processing tools

- Sequoia China completed the new phase of $9billion fund raising

- shell 批量文件名(不含扩展名)小写改大写

- PCAP学习笔记二:pcap4j源码笔记

- 将数学公式在el-table里面展示出来

- 飞桨EasyDL实操范例:工业零件划痕自动识别

- COSCon'22 社区召集令来啦!Open the World,邀请所有社区一起拥抱开源,打开新世界~

- [untitled]

猜你喜欢

随机推荐

《ASP.NET Core 6框架揭秘》样章[200页/5章]

The difference between cache and buffer

MongoDB内部的存储原理

Sample chapter of "uncover the secrets of asp.net core 6 framework" [200 pages /5 chapters]

基于鲲鹏原生安全,打造安全可信的计算平台

【无标题】

Adopt a cow to sprint A shares: it plans to raise 1.85 billion yuan, and Xu Xiaobo holds nearly 40%

How did Guotai Junan Securities open an account? Is it safe to open an account?

将数学公式在el-table里面展示出来

How to reset Firefox browser

Practical example of propeller easydl: automatic scratch recognition of industrial parts

货物摆放问题

【黑马早报】华为辟谣“军师”陈春花;恒驰5预售价17.9万元;周杰伦新专辑MV 3小时播放量破亿;法华寺回应万元月薪招人...

高端了8年,雅迪如今怎么样?

[learning notes] zkw segment tree

PACP学习笔记三:PCAP方法说明

RecyclerView的数据刷新

. Net ultimate productivity of efcore sub table sub database fully automated migration codefirst

error LNK2019: 无法解析的外部符号

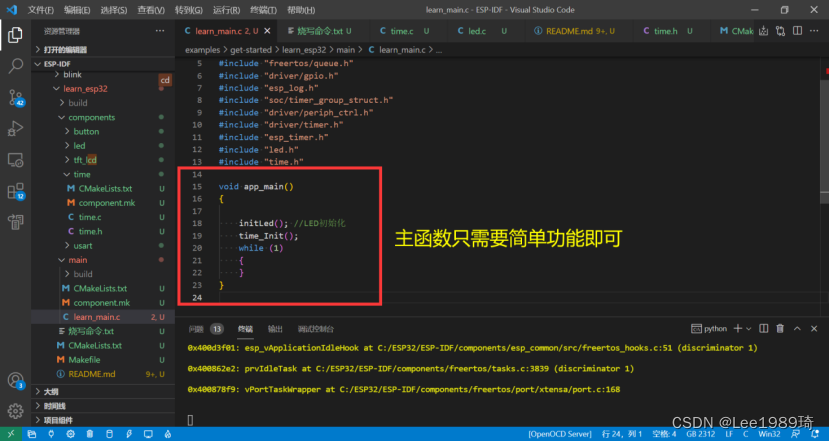

ESP32系列专栏