Which matters more for a detector, the backbone or the neck? New work from DAMO Academy offers a different answer

2022-07-08 02:20:00 【pogg_】

Paper title: "GiraffeDet: A Heavy-Neck Paradigm for Object Detection"

Paper link: https://arxiv.org/pdf/2202.04256.pdf

Abstract

In conventional object detection frameworks, the model extracts deep latent features with a backbone, and a neck module then fuses these features to capture information at different scales. Because object detection requires much higher input resolution than image recognition, the backbone usually accounts for most of the inference cost. This heavy-backbone paradigm is a legacy of carrying image-recognition models over to object detection; it is not an end-to-end design optimized for detection. In this work, we show that this paradigm leads to sub-optimal object detection models. We therefore propose a new heavy-neck paradigm, GiraffeDet, an efficient giraffe-like object detection network. GiraffeDet uses an extremely lightweight backbone and a very deep and large neck module, a structure that enables dense information exchange across different spatial scales and different levels of latent semantics. This design lets the detector process high-level semantic information and low-level spatial information with the same priority from the early stages of the network, which makes it more effective for detection. Evaluations on several popular detection benchmarks show that it consistently outperforms previous SOTA models.

Introduction

In recent years, deep-learning-based object detection has made significant progress. Although detection networks have grown stronger in architecture design, training strategy and other respects, the goal of detecting objects under large scale variation has not changed. We address this problem by designing an effective and robust method. An intuitive way to alleviate the problems caused by large scale variation is to train and test with a multi-scale image pyramid. Although this improves the detection performance of most existing CNN-based detectors, it is impractical, because an image pyramid has to process every image at several scales, which is computationally expensive. The feature pyramid network was later proposed to approximate the image pyramid at lower cost. Recent work still relies on superior backbone design, which leaves the information exchange between high-level and low-level features insufficient.

Motivated by these challenges, this work raises two questions:

• Is the image-classification backbone indispensable in a detection model?

• Which kinds of multi-scale representation are effective for detection?

These two questions prompted us to design a new framework built around two sub-tasks: efficient feature down-sampling and sufficient multi-scale fusion. First, conventional backbones are computationally expensive for feature extraction and suffer from a domain-shift problem. Second, information fusion between high-level semantics and low-level spatial features is crucial for a detector. Based on these observations, we designed a giraffe-like network named GiraffeDet with the following characteristics: (1) a new lightweight backbone that extracts multi-scale features without a large computational cost; (2) sufficient cross-scale connections, called Queen-fusion by analogy with the queen's moves in chess, to fuse features from different levels; (3) building on the lightweight backbone and the flexible FPN, we list a GiraffeDet series for each FLOPs level, and experiments show that the series achieves higher accuracy and better efficiency at every FLOPs level.

In summary, the main contributions of our work are as follows:

• To the best of our knowledge, we are the first to propose combining a lightweight alternative backbone with a flexible FPN as a detector. The proposed GiraffeDet family consists of a lightweight S2D-chain and a Generalized-FPN, and demonstrates state-of-the-art performance.

• We design a lightweight space-to-depth chain (S2D-chain) in place of a conventional CNN-based backbone. Experiments show that, in object detection, the FPN plays a more important role than the conventional backbone.

• In our proposed Generalized-FPN (GFPN), we introduce a new Queen-fusion as the cross-scale connection. It fuses level features from both the previous layer and the current layer, and $\log_2 n$ skip-layer links provide more efficient information transmission, so the approach can scale to deeper structures. Based on the light-backbone, heavy-neck paradigm, the GiraffeDet family performs well on the FLOPs-performance trade-off. GiraffeDet-D29 reaches 54.1% mAP on the COCO dataset and outperforms other SOTA models.

Related work

Recognizing objects by learning scale features is key to localizing them. Conventional solutions to the large scale variation problem mainly rely on improved CNN networks. CNN-based object detectors are mainly divided into two-stage and one-stage detectors. In recent years, the main line of research has been the pyramid strategy, including image pyramids and feature pyramids. The image pyramid strategy detects instances by scaling the image. For example, Singh et al. (2018) proposed a fast multi-scale training method that samples foreground regions around ground-truth objects together with background regions and trains on them at different scales. Unlike image pyramid methods, feature pyramid methods fuse pyramid representations from different scales and different semantic levels. For example, PANet enhances the feature hierarchy on top of the feature pyramid network with an additional bottom-up path, and NAS-FPN uses neural architecture search to explore the topology of the feature pyramid network. Our work focuses on the feature pyramid strategy and proposes a method for fusing high-level semantic and low-level spatial information. Some researchers have also begun to design new CNN architectures for the large scale variation problem: FishNet designs an encoder-decoder architecture with skip connections to fuse multi-scale features, and SpineNet is a backbone with scale-permuted intermediate features and cross-scale connections, learned through neural architecture search. Inspired by these methods, we propose a lightweight space-to-depth backbone, and our "light backbone, heavy neck" architecture has proved effective for detection.

3 THE GIRAFFEDET

Large scale variation remains a challenge. To exchange multi-scale information sufficiently and efficiently, we propose GiraffeDet for object detection. The overall framework is shown in Figure 1 and largely follows the one-stage detector paradigm.

![Figure 1](/img/d0/80ff1ded29c94c93f5a7c27360b486.jpg)

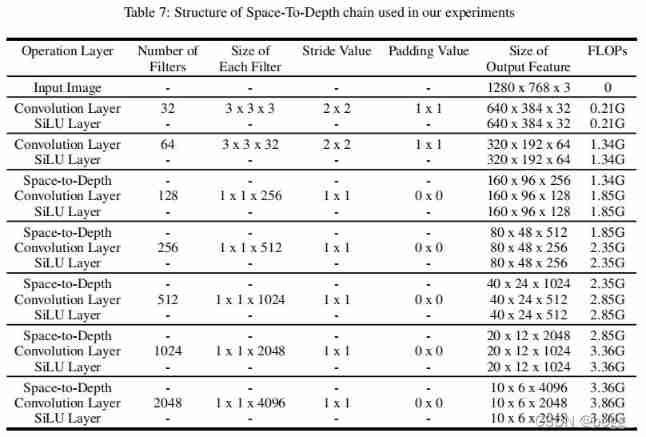

3.1 LIGHTWEIGHT SPACE-TO-DEPTH CHAIN(S2D chain)

Most feature pyramid networks use a conventional CNN as the backbone to extract multi-scale feature maps and then exchange information among them. However, as CNNs have developed, backbones have become heavier and more expensive to run. Moreover, backbones are mostly pre-trained on classification datasets, for example ResNet50 pre-trained on ImageNet. We argue that such pre-trained backbones are not well suited to detection and still suffer from the domain-shift problem. By contrast, the FPN focuses on exchanging high-level semantic and low-level spatial information. We therefore hypothesize that the FPN matters more than the conventional backbone in an object detection model.

We propose the space-to-depth chain (S2D chain) as our lightweight backbone. It consists of two 3x3 convolution layers followed by stacked S2D blocks. Specifically, the 3x3 convolutions perform the initial down-sampling and introduce more non-linear transformations. Each S2D block consists of an S2D layer and a 1x1 convolution. The S2D layer moves spatial information into the depth (channel) dimension through uniform sampling and reorganization, down-sampling the features without any additional parameters. A 1x1 convolution then provides channel-wise pooling to produce feature maps of fixed dimension.

![image](/img/91/fbff6d5c69e568662860ce1ba7c460.jpg)

To test our hypothesis, in Section 4 we run controlled experiments on the same detection task with different backbones and necks. The results show that the neck matters more than the conventional backbone in object detection.
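The parameter-free down-sampling performed by an S2D layer can be illustrated with a small sketch. It assumes a block size of 2 and one particular channel ordering, neither of which is specified in this article; the function name `space_to_depth` is hypothetical, and nested lists stand in for tensors.

```python
def space_to_depth(feature, block=2):
    """Rearrange a C x H x W nested list into (C*block*block) x (H/block) x (W/block)."""
    channels = len(feature)
    height, width = len(feature[0]), len(feature[0][0])
    if height % block or width % block:
        raise ValueError("height and width must be divisible by block")
    out = []
    # One output channel per (row offset, column offset, input channel).
    # This ordering is an assumption made for illustration.
    for dy in range(block):
        for dx in range(block):
            for c in range(channels):
                out.append([
                    [feature[c][y][x] for x in range(dx, width, block)]
                    for y in range(dy, height, block)
                ])
    return out


# A single-channel 4x4 map becomes four 2x2 channels; no value is dropped.
x = [[[0, 1, 2, 3],
      [4, 5, 6, 7],
      [8, 9, 10, 11],
      [12, 13, 14, 15]]]
y = space_to_depth(x)
```

The spatial size is halved while every input value survives in some channel, which is why the layer needs no learned parameters; the 1x1 convolution that follows is what brings the channel count back to a fixed size.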

3.2 GENERALIZED-FPN

In a feature pyramid network, multi-scale feature fusion aims to aggregate the feature maps of different resolutions extracted by the backbone. Figure 3 shows the evolution of feature pyramid network designs. The conventional FPN introduces a top-down path to fuse multi-scale features from level 3 to level 7. Considering the limitation of one-way information flow, PANet adds an extra bottom-up path aggregation network, at a higher computational cost. BiFPN removes nodes that have only one input edge and adds an extra edge from the original input at the same level. However, we observe that previous methods focus only on feature fusion and lack connections inside the block. We therefore design a new path fusion that includes skip-layer and cross-scale connections, as shown in Figure 3(d).

![Figure 3](/img/c0/567d3291f736306f3fae07f550d3e9.jpg)

**Skip-layer connection.** Compared with other connection methods, skip connections leave a shorter distance between feature layers during back-propagation. To reduce gradient vanishing in such a heavy neck, we propose two feature link methods, dense-link and $\log_2 n$-link, as shown in Figure 4:

![Figure 4](/img/c0/8841c33836267222d8a1acdcff8938.jpg)

**dense-link:** Inspired by DenseNet, for each scale feature $P_k^l$ at level $k$, the $l$-th layer receives the feature maps of all preceding layers:

![dense-link equation](/img/ec/6cc606bb3c3d0452fcf50b326d2ee2.jpg)

where $\mathrm{Concat}()$ denotes the concatenation of the feature maps produced in all preceding layers, and $\mathrm{Conv}()$ denotes a 3x3 convolution.
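For reference, the dense-link rule can be written compactly as below. This is a reconstruction from the definitions in the text, assuming layers are indexed from 0; the equation image in the post remains the authoritative form.

$$
P_k^l = \mathrm{Conv}\bigl(\mathrm{Concat}(P_k^0, P_k^1, \ldots, P_k^{l-1})\bigr)
$$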

**$\log_2 n$-link:** Specifically, at each level $k$, the $l$-th layer receives feature maps from at most $\log_2 l + 1$ preceding layers, and these input layers are exponentially spaced (base 2) from depth $i$:

![log2n-link equation](/img/82/2b16aea0ffc09b7d0827c5868b392e.jpg)

where $l - 2^n \geq 0$, and $\mathrm{Concat}()$ and $\mathrm{Conv}()$ again denote concatenation and a 3x3 convolution. Compared with dense-link at depth $l$, the complexity is only $O(l \cdot \log_2 l)$ instead of $O(l^2)$. Moreover, during back-propagation, $\log_2 n$-link only increases the shortest distance between layers from 1 to $1 + \log_2 l$, so it can be extended to deeper networks.
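To make the difference in connection count concrete, the sketch below lists which earlier layers feed layer $l$ under each rule, reading the $\log_2 n$-link condition as "every layer $l - 2^n$ with $l - 2^n \geq 0$". The function names and the 0-based layer indexing are assumptions made for illustration, not part of the paper's code.

```python
def dense_link_inputs(l):
    """Layers feeding layer l under dense-link: every earlier layer."""
    return list(range(l))


def log2n_link_inputs(l):
    """Layers feeding layer l under log2n-link: l - 2**n for every n with l - 2**n >= 0."""
    inputs = []
    step = 1
    while l - step >= 0:
        inputs.append(l - step)
        step *= 2
    return inputs


# Total number of skip links in a 16-layer neck under each rule.
depth = 16
dense_total = sum(len(dense_link_inputs(l)) for l in range(1, depth + 1))
log2n_total = sum(len(log2n_link_inputs(l)) for l in range(1, depth + 1))
```

Under this reading, layer 8 is fed by layers 7, 6, 4 and 0, which matches the bound of $\log_2 l + 1$ inputs, and the total link count grows roughly as $l \cdot \log_2 l$ rather than quadratically.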

**Cross-scale connection:** Following our hypothesis, the information exchange module should contain not only skip-layer connections but also cross-scale connections, in order to cope with large scale variation. We therefore propose a new cross-scale fusion called Queen-fusion, which considers features from both the same level and neighboring levels, as shown in Figure 3(d). As the example in Figure 5(b) shows, the concatenation in Queen-fusion includes down-sampled features from the previous layer. In this work we use bilinear interpolation and max-pooling as the up-sampling and down-sampling functions, respectively. In cases of extreme scale variation, the model thus needs sufficient exchange between high-level and low-level information. Based on our skip-layer and cross-scale connection mechanisms, the proposed Generalized-FPN can be extended as long as possible, just like a "giraffe neck". With this heavy neck and a lightweight backbone, GiraffeDet can achieve higher accuracy and better efficiency.

![image](/img/2b/64d8d8c30cb8b0d155e5eccd1ed3c9.jpg)
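The two resampling functions named above can be sketched on a single 2-D feature map. This is an illustration only: the 2x2 pooling window and the corner-aligned bilinear sampling are assumptions (the article does not state either), the function names are hypothetical, and a real implementation would use the resampling operators of a deep learning framework.

```python
def max_pool_2x(fmap):
    """Down-sample an H x W map by 2 with non-overlapping 2x2 max pooling.

    A trailing odd row or column is dropped.
    """
    return [
        [max(fmap[y][x], fmap[y][x + 1], fmap[y + 1][x], fmap[y + 1][x + 1])
         for x in range(0, len(fmap[0]) - 1, 2)]
        for y in range(0, len(fmap) - 1, 2)
    ]


def bilinear_resize(fmap, out_h, out_w):
    """Resize an H x W map with bilinear interpolation (corner-aligned sampling)."""
    in_h, in_w = len(fmap), len(fmap[0])
    out = []
    for i in range(out_h):
        # Map the output row back to a fractional input row.
        sy = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(sy)
        y1 = min(y0 + 1, in_h - 1)
        wy = sy - y0
        row = []
        for j in range(out_w):
            sx = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(sx)
            x1 = min(x0 + 1, in_w - 1)
            wx = sx - x0
            # Blend horizontally on the two neighboring rows, then vertically.
            top = fmap[y0][x0] * (1 - wx) + fmap[y0][x1] * wx
            bottom = fmap[y1][x0] * (1 - wx) + fmap[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out


down = max_pool_2x([[1, 2, 3, 4],
                    [5, 6, 7, 8],
                    [9, 10, 11, 12],
                    [13, 14, 15, 16]])
up = bilinear_resize([[0, 2], [4, 6]], 3, 3)
```

Resampling neighboring levels to a common resolution in this way is what allows features from different scales to be concatenated at one node.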

3.3 GIRAFFEDET FAMILY

Using the proposed S2D-chain and Generalized-FPN, we can develop a family of GiraffeDet models at different scales. Previous work scaled up detectors inefficiently, for example by switching to a larger backbone such as ResNeXt or by stacking FPN blocks. In particular, EfficientDet uses a joint compound coefficient $\phi$ to scale up all dimensions of the backbone. Unlike EfficientDet, we only scale the GFPN layers rather than the whole framework including the lightweight backbone. Specifically, we apply two coefficients, $\phi_d$ and $\phi_w$, to flexibly adjust the depth and width of the GFPN.

![GFPN scaling equation](/img/63/031a0d7e1dcb5f9341e3589011e544.jpg)

Following the equation above, we developed six GiraffeDet architectures, as shown in Table 1. D7, D11, D14 and D16 are at a level comparable to the ResNet-series models, and we compare the GiraffeDet family with SOTA models below. Note that a GFPN layer differs from the layers of other FPN designs, as shown in Figure 3: in our GFPN each layer represents one depth, whereas one layer of PANet or BiFPN contains two depths.

4 Experiments

In this section, we first introduce the implementation details and present our experimental results on the COCO dataset. We then compare the proposed GiraffeDet family with other state-of-the-art methods, and finally provide an in-depth analysis to better understand our network.

4.1 Data sets and implementation details

For a fair comparison, all results are produced with the mmdetection framework and the standard COCO evaluation protocol. All models are trained from scratch to reduce the influence of ImageNet pre-training of the backbone. The short side of the input image is resized to 800, and the long side is capped at 1333. To improve training stability, we use multi-scale training for all models, with three schedules: a 2x ImageNet-pretrained (p-2x) schedule (24 epochs, decayed at epochs 16 and 22) for the R2-101-DCN backbone experiments; a 3x scratch (s-3x) schedule (36 epochs, decayed at epochs 28 and 33); and a 6x scratch (s-6x) schedule (72 epochs, decayed at epochs 65 and 71) for the comparison with SOTA networks.
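The resizing rule and the three schedules can be summarized in a short sketch. The image sizes, epoch counts and decay epochs come from the text; the base learning rate, the decay factor `gamma`, the 0-based epoch counting and all function names are placeholders chosen for illustration, not values from the paper.

```python
def resize_keep_ratio(width, height, short_side=800, max_side=1333):
    """Scale so the short side reaches short_side unless the long side would exceed max_side."""
    scale = min(short_side / min(width, height), max_side / max(width, height))
    return round(width * scale), round(height * scale)


# Schedule name -> (total epochs, epochs at which the learning rate is decayed).
SCHEDULES = {
    "p-2x": (24, (16, 22)),
    "s-3x": (36, (28, 33)),
    "s-6x": (72, (65, 71)),
}


def learning_rate(epoch, schedule, base_lr=0.02, gamma=0.1):
    """Step decay: multiply base_lr by gamma once per milestone already reached.

    base_lr and gamma are placeholder values, not taken from the article.
    """
    _, milestones = SCHEDULES[schedule]
    passed = sum(1 for m in milestones if epoch >= m)
    return base_lr * gamma ** passed
```

For example, a 640x480 image is scaled until its short side is 800, while a very wide 2000x500 image is limited by the 1333 cap on the long side instead.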

4.2 Evaluation on the COCO dataset

For a fair comparison, we also trained RetinaNet, FCOS, HRNet, GFLV2 and other models with the 6x schedule, recorded as seven variances. As Figure 6 shows, the proposed GiraffeDet achieves the best performance in every pixel scale range, which indicates that the light-backbone, heavy-neck paradigm and our proposed GFPN can effectively handle large scale variation. Moreover, with skip-layer and cross-scale connections, high-level semantic information and low-level spatial information can be fully exchanged. Many instances occupy less than 1% of the image area, which makes them hard to detect, yet in the 0-32 pixel range our method is still 5.7 mAP higher than RetinaNet, and it has the same mAP in the middle 80-144 pixel range. Notably, in the 192-256 pixel range the proposed GiraffeDet outperforms the other methods, which shows that our design learns features at different scales effectively.

![image](/img/e2/e5c87cecf911f187007f76d9a295c6.jpg)

As Table 2 shows, GiraffeDet outperforms every detector at the same level, which indicates that our method detects objects effectively.

1) Compared with ResNet-based methods at low FLOPs levels, we find that even when the overall performance is not significantly improved, our method performs markedly better on small and large objects. This shows that our method performs better on datasets with large scale variation.

2) Compared with ResNeXt-based methods, GiraffeDet achieves higher performance at lower FLOPs, which indicates that a good FPN design matters more than the backbone.

3) Compared with other methods, the proposed GiraffeDet also achieves SOTA performance, which shows that our design attains higher accuracy and efficiency at every FLOPs level. In addition, NAS-based methods consume large amounts of computing resources during training, so we do not compare our method with them. Finally, with multi-scale testing, GiraffeDet reaches 54.1% mAP; in particular, $AP_S$ increases by 2.8% and $AP_L$ by 2.3%, both larger than the 1.9% increase in $AP_M$.

![image](/img/6b/9a99b52ef6fcfc8712d9b5bf6aa7a2.jpg)

**Effect of depth and width.** To compare further with different necks on a fair basis, we run two groups of experiments against FPN, PANet and BiFPN at the same FLOPs level, to analyze the effectiveness of depth and width in our proposed Generalized-FPN. Note that, as shown in Figure 3, each GFPN layer contains one depth, whereas each layer of PANet and BiFPN contains two depths.

![image](/img/a2/fad62fbe2d6c09db9d90e93dbf088d.jpg)

As Table 4 shows, the proposed GFPN outperforms the other FPNs at all depths and widths, which also shows that $\log_2 n$-link and Queen-fusion provide effective information transmission and exchange. Moreover, GFPN can reach higher performance with a smaller design.

**Backbone effect.** Figure 7 shows the performance of different neck depths and different backbones at the same FLOPs level. The results show that the combination of S2D-chain and GFPN outperforms the other backbone models, which supports our hypothesis: **the FPN is more critical, and a conventional backbone does not improve performance as its depth increases.** In particular, performance even drops as the backbone gets heavier. This may be because the domain-shift problem becomes more severe in a large backbone.

![image](/img/50/8a8bb045d3d142803a0cfbcc9e28b5.jpg)

Results with DCN

Table 5: Results of GiraffeDet-D11 with deformable convolution networks applied (val 2017). *‡* denotes GFPN with synchronized batch normalization (SyncBN) for multi-GPU training.

![Table 5](/img/ac/ae90938ed3ecb7b82f1b8846495af9.jpg)

We introduce deformable convolution networks (DCN), which have recently been widely used to improve detection performance, into GiraffeDet. As Table 5 shows, DCN significantly improves the performance of GiraffeDet. In particular, compared with Table 2, GiraffeDet-D11 with DCN outperforms GiraffeDet-D16. Within an acceptable inference time, we observe that a DCN backbone combined with a shallow GFPN-tiny can improve performance, and that performance keeps improving as the GFPN depth grows, as shown in Table 6.

Table 6: Results of the Res2Net-101-DCN (R2-101-DCN) backbone with multiple GFPN necks (val 2017). GFPN-tiny refers to a GFPN with depth 8 and width 122 (the same FLOPs level as FPN).

![Table 6](/img/ac/2d04671a3a04157644020468c49211.jpg)

5 Conclusion

In this paper, we propose GiraffeDet, a new design paradigm and giraffe-like network, to address the problem of large scale variation. Specifically, GiraffeDet uses a lightweight space-to-depth chain as the backbone and the Generalized-FPN as the neck. The lightweight space-to-depth chain extracts multi-scale image features, and the GFPN handles the exchange of high-level semantic and low-level spatial information. Extensive results show that the proposed GiraffeDet achieves higher accuracy and better efficiency, especially when detecting small and large objects.

边栏推荐

- #797div3 A---C

- Learn CV two loss function from scratch (4)

- [knowledge map paper] r2d2: knowledge map reasoning based on debate dynamics

- Leetcode question brushing record | 27_ Removing Elements

- "Hands on learning in depth" Chapter 2 - preparatory knowledge_ 2.2 data preprocessing_ Learning thinking and exercise answers

- Flutter 3.0框架下的小程序运行

- 需要思考的地方

- From starfish OS' continued deflationary consumption of SFO, the value of SFO in the long run

- Keras深度学习实战——基于Inception v3实现性别分类

- Semantic segmentation | learning record (5) FCN network structure officially implemented by pytoch

猜你喜欢

![[knowledge map paper] Devine: a generative anti imitation learning framework for knowledge map reasoning](/img/c1/4c147a613ba46d81c6805cdfd13901.jpg)

[knowledge map paper] Devine: a generative anti imitation learning framework for knowledge map reasoning

Neural network and deep learning-5-perceptron-pytorch

谈谈 SAP iRPA Studio 创建的本地项目的云端部署问题

th:include的使用

Applet running under the framework of fluent 3.0

excel函数统计已存在数据的数量

Introduction to grpc for cloud native application development

C language -cmake cmakelists Txt tutorial

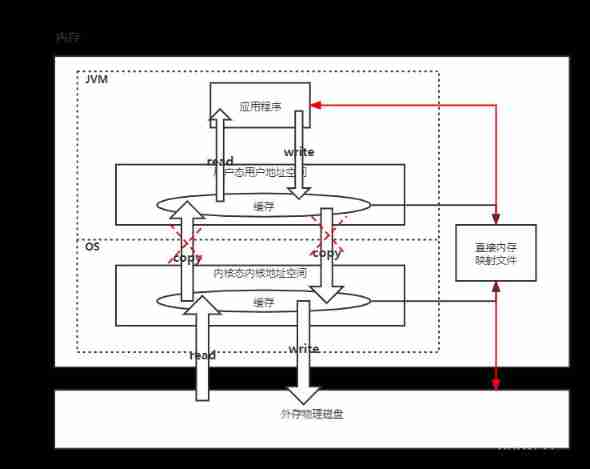

JVM memory and garbage collection-3-direct memory

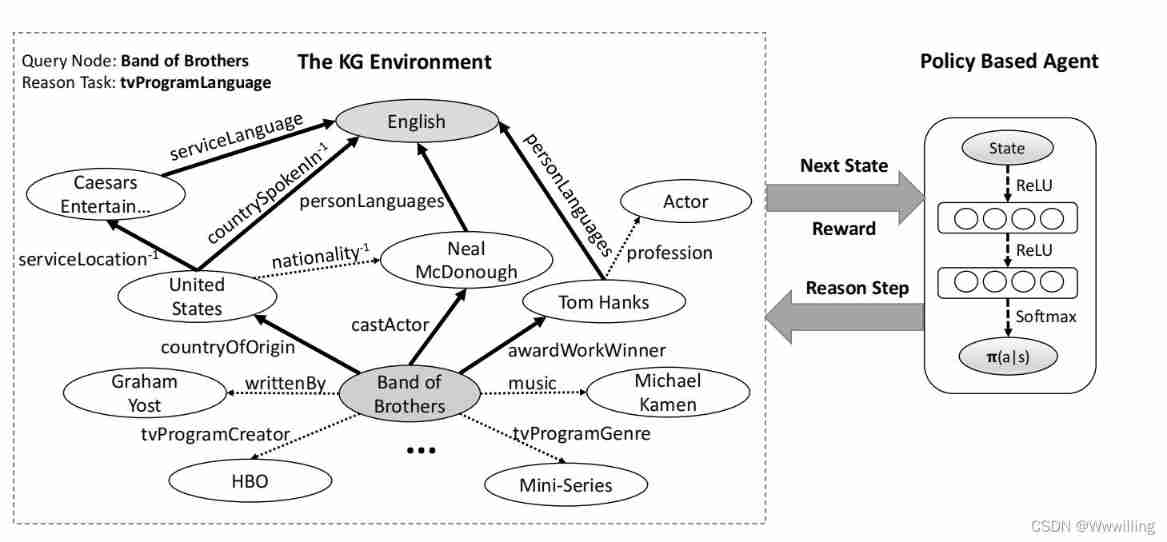

Deeppath: a reinforcement learning method of knowledge graph reasoning

随机推荐

[reinforcement learning medical] deep reinforcement learning for clinical decision support: a brief overview

Thread deadlock -- conditions for deadlock generation

leetcode 865. Smallest Subtree with all the Deepest Nodes | 865. The smallest subtree with all the deepest nodes (BFs of the tree, parent reverse index map)

Infrared dim small target detection: common evaluation indicators

EMQX 5.0 发布:单集群支持 1 亿 MQTT 连接的开源物联网消息服务器

《通信软件开发与应用》课程结业报告

Leetcode question brushing record | 485_ Maximum number of consecutive ones

The bank needs to build the middle office capability of the intelligent customer service module to drive the upgrade of the whole scene intelligent customer service

Ncnn+int8+yolov4 quantitative model and real-time reasoning

COMSOL --- construction of micro resistance beam model --- final temperature distribution and deformation --- addition of materials

JVM memory and garbage collection-3-direct memory

Anan's judgment

Applet running under the framework of fluent 3.0

Direct addition is more appropriate

阿锅鱼的大度

金融业数字化转型中,业务和技术融合需要经历三个阶段

[knowledge map paper] attnpath: integrate the graph attention mechanism into knowledge graph reasoning based on deep reinforcement

cv2-drawline

1331:【例1-2】后缀表达式的值

How does the bull bear cycle and encryption evolve in the future? Look at Sequoia Capital