
Migrate Data from CSV Files to TiDB

2022-07-06 08:01:00 【Tianxiang shop】

This document describes how to migrate data from CSV files to TiDB.

TiDB Lightning supports reading CSV files, as well as other delimiter-separated formats such as TSV (tab-separated values). You can also follow this document to import other kinds of "flat file" data.

Prerequisites

Step 1: Prepare the CSV files

Place all CSV files to be imported in the same directory. TiDB Lightning recognizes a CSV file only if its name follows one of these formats:

- A CSV file that contains the data of an entire table must be named `${db_name}.${table_name}.csv`.

- If the data of one table is spread across multiple CSV files, add a numeric suffix to each file name, such as `${db_name}.${table_name}.003.csv`. The numbers do not need to be consecutive, but they must increase. Pad them with leading zeros so that every suffix has the same length.
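The naming rules above can be sketched in Python as follows. The helpers `csv_file_names` and `parse_csv_file_name` are hypothetical and are not part of TiDB Lightning. The default suffix width of 3 only mirrors the `003` example, and the regular expression assumes that database and table names contain no dots.

```python
import re


def csv_file_names(db_name: str, table_name: str, num_parts: int, width: int = 3) -> list[str]:
    """Build CSV file names for one table stored in num_parts files (hypothetical helper)."""
    if num_parts == 1:
        # The whole table is in one file: ${db_name}.${table_name}.csv
        return [f"{db_name}.{table_name}.csv"]
    if len(str(num_parts)) > width:
        raise ValueError("width is too small for the number of parts")
    # Zero-pad the increasing numeric suffix so all suffixes have the same length.
    return [f"{db_name}.{table_name}.{i:0{width}d}.csv" for i in range(1, num_parts + 1)]


# Matches ${db_name}.${table_name}.csv and ${db_name}.${table_name}.<digits>.csv,
# assuming neither name contains a dot.
CSV_NAME = re.compile(r"^(?P<db>[^.]+)\.(?P<table>[^.]+)(?:\.(?P<num>\d+))?\.csv$")


def parse_csv_file_name(name: str):
    """Return (db_name, table_name, suffix or None), or None if the name does not match."""
    m = CSV_NAME.match(name)
    return None if m is None else (m["db"], m["table"], m["num"])


print(csv_file_names("shop", "orders", 12)[:3])
# ['shop.orders.001.csv', 'shop.orders.002.csv', 'shop.orders.003.csv']
```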

Step 2: Create the target table schema

CSV files do not contain table schema information. To import CSV data into TiDB, you must provide a table schema for the data. You can create the schema in either of the following ways:

Method 1: Use TiDB Lightning to create the table schema.

Write SQL files that contain the DDL statements, as follows:

- A file named `${db_name}-schema-create.sql` that contains the `CREATE DATABASE` statement.

- A file named `${db_name}.${table_name}-schema.sql` that contains the `CREATE TABLE` statement.
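As a sketch of Method 1, the hypothetical helper below writes both kinds of schema files into a data directory. The `CREATE DATABASE IF NOT EXISTS` statement and the example table are assumptions for illustration. In practice, the files contain whatever DDL your tables need.

```python
from pathlib import Path


def write_schema_files(data_dir: str, db_name: str, tables: dict[str, str]) -> list[Path]:
    """Hypothetical helper. `tables` maps a table name to its CREATE TABLE statement."""
    out = Path(data_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    # ${db_name}-schema-create.sql holds the CREATE DATABASE statement.
    db_file = out / f"{db_name}-schema-create.sql"
    db_file.write_text(f"CREATE DATABASE IF NOT EXISTS `{db_name}`;\n")
    written.append(db_file)
    # ${db_name}.${table_name}-schema.sql holds one CREATE TABLE statement per table.
    for table_name, ddl in tables.items():
        table_file = out / f"{db_name}.{table_name}-schema.sql"
        table_file.write_text(ddl.rstrip().rstrip(";") + ";\n")
        written.append(table_file)
    return written
```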

Method 2: Manually create the databases and tables in the downstream TiDB cluster.

Step 3: Write the configuration file

Create a file named `tidb-lightning.toml` with the following content:

```toml
[lightning]
# Logging
level = "info"
file = "tidb-lightning.log"

[tikv-importer]
# "local": the default mode. Suitable for data volumes of TB level and above, but the downstream TiDB cannot serve requests during the import.
# "tidb": data volumes below TB level can also use the `tidb` backend, in which the downstream TiDB continues to serve requests normally.
# For more information about backend modes, see https://docs.pingcap.com/tidb/stable/tidb-lightning-backends
backend = "local"
# Temporary storage directory for sorted key-value pairs. The target path must be an empty directory,
# and its free space must be larger than the size of the dataset to be imported.
# For better import performance, use a directory on a different disk from `data-source-dir`,
# preferably on flash storage with exclusive I/O.
sorted-kv-dir = "/mnt/ssd/sorted-kv-dir"

[mydumper]
# Source data directory.
data-source-dir = "${data-path}" # A local or S3 path, for example: 's3://my-bucket/sql-backup?region=us-west-2'

# CSV format definition
[mydumper.csv]
# Field separator. Must not be empty. If the source files contain fields of non-string or non-numeric
# types (such as binary, blob, or bit), the default "," is not recommended; use an unusual
# character combination such as "|+|" instead.
separator = ','
# Quoting delimiter. Can be zero or more characters.
delimiter = '"'
# Whether the CSV files contain a header row.
# If true, Lightning uses the first line to determine the mapping between fields and columns.
header = true
# Whether the CSV files contain NULL values.
# If true, no column in the CSV files can be parsed as NULL.
not-null = false
# If `not-null` is false (that is, the CSV files can contain NULL),
# fields with the following value are parsed as NULL.
null = '\N'
# Whether to treat backslash ('\') characters in strings as escape characters.
backslash-escape = true
# Whether to remove the trailing separator at the end of each line.
trim-last-separator = false

[tidb]
# Information about the target cluster
host = "${host}"             # for example: 172.16.32.1
port = ${port}               # for example: 4000
user = "${user_name}"        # for example: "root"
password = "${password}"     # for example: "rootroot"
status-port = ${status-port} # During the import, Lightning fetches table schema information from TiDB's status port, for example: 10080
pd-addr = "${ip}:${port}"    # PD address of the cluster. Lightning obtains some information through PD, for example: 172.16.31.3:2379
# When backend = "local", status-port and pd-addr must be set correctly; otherwise the import fails.
```
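Some of the constraints stated in the comments above can be checked before the import starts. The following is a minimal sketch. It assumes the configuration has already been loaded into a Python dict, for example with `tomllib` on Python 3.11+ after the `${...}` placeholders have been replaced with real values. `check_config` is a hypothetical helper that covers only the rules written in this file, so it does not replace TiDB Lightning's own validation.

```python
import os


def check_config(cfg: dict) -> list[str]:
    """Hypothetical helper: return problems found in a parsed tidb-lightning.toml."""
    problems = []
    importer = cfg.get("tikv-importer", {})
    backend = importer.get("backend", "local")  # "local" is the default mode
    if backend == "local":
        tidb = cfg.get("tidb", {})
        # With the local backend, status-port and pd-addr must be set.
        for key in ("status-port", "pd-addr"):
            if not tidb.get(key):
                problems.append(f'[tidb] {key} must be set when backend = "local"')
        # sorted-kv-dir must be an empty directory.
        kv_dir = importer.get("sorted-kv-dir")
        if kv_dir and os.path.isdir(kv_dir) and os.listdir(kv_dir):
            problems.append(f"[tikv-importer] sorted-kv-dir {kv_dir!r} is not empty")
    # The field separator must not be empty.
    if cfg.get("mydumper", {}).get("csv", {}).get("separator", ",") == "":
        problems.append("[mydumper.csv] separator must not be empty")
    return problems
```

Usage on Python 3.11+: `import tomllib`, then `check_config(tomllib.load(open("tidb-lightning.toml", "rb")))`.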

For more information about the configuration file, see TiDB Lightning Configuration Parameters.

Step 4: Tune import performance (optional)

TiDB Lightning performs best when the imported files are of a uniform size of about 256 MiB. If you import a single large CSV file, TiDB Lightning uses only one thread to process it under the default configuration, which slows down the import.

To solve this problem, you can first split the CSV file into multiple files. For a CSV file in a general format, the start and end positions of rows cannot be determined quickly without reading the entire file. Therefore, TiDB Lightning does not split CSV files automatically by default. However, if you are sure that the CSV files to be imported meet certain restrictions, you can enable the `strict-format` mode. In this mode, TiDB Lightning splits a single large CSV file into multiple chunks of about 256 MiB each and processes them in parallel.
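The following simplified sketch shows why `strict-format` makes splitting cheap. It is not TiDB Lightning's actual implementation. If no field spans lines, every `\n` ends a row, so a large file can be cut into pieces of roughly `chunk_size` bytes by moving each cut point forward to the next newline. The name `split_points` is hypothetical, and the default `chunk_size` of 256 MiB matches the figure above.

```python
def split_points(data: bytes, chunk_size: int = 256 * 1024 * 1024) -> list[tuple[int, int]]:
    """Hypothetical helper: (start, end) byte ranges that each end on a row boundary."""
    chunks = []
    start = 0
    while start < len(data):
        end = min(start + chunk_size, len(data))
        if end < len(data):
            # Move the cut forward to just after the next newline. If data[end - 1]
            # is already '\n', the cut stays where it is.
            nl = data.find(b"\n", end - 1)
            end = len(data) if nl == -1 else nl + 1
        chunks.append((start, end))
        start = end
    return chunks


data = b"1,a\n2,b\n3,c\n4,d\n"
print(split_points(data, chunk_size=6))
# [(0, 8), (8, 16)]
```

This shortcut is safe only because strict format guarantees that no newline sits inside a field. If a quoted field contained a newline, a cut point could land in the middle of it.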

Note

If a CSV file is not strictly formatted but `strict-format` is mistakenly set to `true`, a single field that spans multiple lines can be split into two parts. This causes parsing to fail, or even causes corrupted data to be imported without any error.

In a strictly formatted CSV file, each field occupies only one line. That is, at least one of the following conditions must be met:

- `delimiter` is empty;

- No field contains CR (`\r`) or LF (`\n`).
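You can check these two conditions before you enable `strict-format`. The function below is a hypothetical pre-check, not a TiDB Lightning feature. It uses Python's `csv` module with `delimiter` as the quote character. This assumes that `separator` and `delimiter` are each at most one character long, because `csv` supports only single-character separators and quote characters.

```python
import csv
import io


def is_strict_format(text: str, separator: str = ",", delimiter: str = '"') -> bool:
    """Hypothetical pre-check: True if `text` satisfies one of the strict-format conditions."""
    if delimiter == "":
        # Condition 1: with an empty delimiter, quotes are never special,
        # so every line break ends a row.
        return True
    reader = csv.reader(io.StringIO(text, newline=""), delimiter=separator, quotechar=delimiter)
    # Condition 2: after quoted fields are parsed, no field may contain CR or LF.
    return all("\r" not in field and "\n" not in field for row in reader for field in row)
```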

If you have confirmed that the conditions are met, you can enable `strict-format` mode with the following configuration to speed up the import:

```toml
[mydumper]
strict-format = true
```

Step 5: Run the import

Run `tidb-lightning`. If you start the program directly from the command line, it might exit when it receives a SIGHUP signal. It is recommended to run it with a tool such as `nohup` or `screen`, for example:

```shell
nohup tiup tidb-lightning -config tidb-lightning.toml > nohup.out 2>&1 &
```

After the import starts, you can check its progress in any of the following ways:

- Use `grep` to search the log for the keyword `progress`. The progress is updated every 5 minutes by default.

- Check the progress on the monitoring dashboard. For details, see TiDB Lightning Monitoring.

- Check the progress on the web page. For details, see Web Interface.

When the import finishes, TiDB Lightning exits automatically. If the last 5 lines of the log contain `the whole procedure completed`, the import succeeded.

Note

Whether or not the import succeeds, the last line of the log shows `tidb lightning exit`. This line only means that TiDB Lightning exited normally. It does not mean that the task completed.
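The log checks described above can be combined in a small script. The script greps for `progress` and looks for `the whole procedure completed` in the last 5 lines, rather than relying on `tidb lightning exit`. `check_lightning_log` is a hypothetical helper. Its default path matches the `file` setting in the example configuration, and it does plain substring matching without assuming anything else about the log format.

```python
from collections import deque


def check_lightning_log(log_path: str = "tidb-lightning.log"):
    """Hypothetical helper: return (progress_lines, succeeded) for a TiDB Lightning log."""
    progress = []
    tail = deque(maxlen=5)  # keeps only the last 5 lines seen
    with open(log_path, encoding="utf-8", errors="replace") as f:
        for raw in f:
            line = raw.rstrip("\n")
            tail.append(line)
            if "progress" in line:  # same keyword as `grep progress`
                progress.append(line)
    # "tidb lightning exit" is logged whether or not the import succeeded,
    # so success is judged only by "the whole procedure completed".
    succeeded = any("the whole procedure completed" in line for line in tail)
    return progress, succeeded
```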

If you encounter problems during the import, see TiDB Lightning FAQ.

Files in other formats

If the data source is in another format, the file names must still end with `.csv`, and you must also modify the format definition in the `[mydumper.csv]` section of `tidb-lightning.toml` accordingly. The settings for common formats are as follows:
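For example, you can prepare a directory of TSV files by giving each file the `.csv` extension. The helper below is a hypothetical sketch. It assumes the files already follow the `${db_name}.${table_name}` naming rules. It replaces only the last suffix and refuses to overwrite an existing `.csv` file.

```python
from pathlib import Path


def rename_to_csv(data_dir: str, old_suffix: str = ".tsv") -> list[Path]:
    """Hypothetical helper: rename *<old_suffix> files in data_dir to *.csv."""
    renamed = []
    for path in sorted(Path(data_dir).glob(f"*{old_suffix}")):
        target = path.with_suffix(".csv")  # replaces only the last suffix
        if target.exists():
            raise FileExistsError(f"refusing to overwrite {target}")
        path.rename(target)
        renamed.append(target)
    return renamed
```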

TSV:

```toml
# Format example
# ID    Region    Count
# 1     East      32
# 2     South     NULL
# 3     West      10
# 4     North     39

# Format configuration
[mydumper.csv]
separator = "\t"
delimiter = ''
header = true
not-null = false
null = 'NULL'
backslash-escape = false
trim-last-separator = false
```
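To see how these TSV settings interpret the example data, here is a rough approximation that uses Python's `csv` module. It is for illustration only: TiDB Lightning has its own parser, and `read_tsv` is a hypothetical name.

```python
import csv
import io


def read_tsv(text: str):
    """Approximates: separator = "\\t", delimiter = '', header = true, null = 'NULL'."""
    # delimiter = '' means no quoting, so QUOTE_NONE disables quote handling.
    reader = csv.reader(io.StringIO(text), delimiter="\t", quoting=csv.QUOTE_NONE)
    header, *rows = list(reader)
    # not-null = false, so a field equal to 'NULL' is read as NULL (None here).
    return header, [[None if v == "NULL" else v for v in row] for row in rows]


sample = "ID\tRegion\tCount\n1\tEast\t32\n2\tSouth\tNULL\n"
print(read_tsv(sample))
# (['ID', 'Region', 'Count'], [['1', 'East', '32'], ['2', 'South', None]])
```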

TPC-H DBGEN:

```toml
# Format example
# 1|East|32|
# 2|South|0|
# 3|West|10|
# 4|North|39|

# Format configuration
[mydumper.csv]
separator = '|'
delimiter = ''
header = false
not-null = true
backslash-escape = false
trim-last-separator = true
```
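The effect of `trim-last-separator = true` on the DBGEN example can be shown with a simplified sketch. `read_dbgen` is a hypothetical helper, not TiDB Lightning's parser. Each line ends with the `|` separator, which is removed before the line is split. Without trimming, `split` would produce an extra empty column at the end of every row.

```python
def read_dbgen(text: str, separator: str = "|") -> list[list[str]]:
    """Hypothetical helper approximating the DBGEN settings above."""
    rows = []
    for line in text.splitlines():
        if not line:
            continue
        if line.endswith(separator):
            line = line[: -len(separator)]  # trim-last-separator = true
        # header = false: every line is data. not-null = true: no value becomes NULL.
        rows.append(line.split(separator))
    return rows


print(read_dbgen("1|East|32|\n2|South|0|\n"))
# [['1', 'East', '32'], ['2', 'South', '0']]
```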

边栏推荐

- 1204 character deletion operation (2)

- Artcube information of "designer universe": Guangzhou implements the community designer system to achieve "great improvement" of urban quality | national economic and Information Center

- Launch APS system to break the problem of decoupling material procurement plan from production practice

- Leetcode question brushing record | 203_ Remove linked list elements

- (lightoj - 1410) consistent verbs (thinking)

- Hackathon ifm

- WebRTC系列-H.264预估码率计算

- 上线APS系统,破除物料采购计划与生产实际脱钩的难题

- DataX self check error /datax/plugin/reader/_ drdsreader/plugin. Json] does not exist

- "Designer universe" APEC design +: the list of winners of the Paris Design Award in France was recently announced. The winners of "Changsha world center Damei mansion" were awarded by the national eco

猜你喜欢

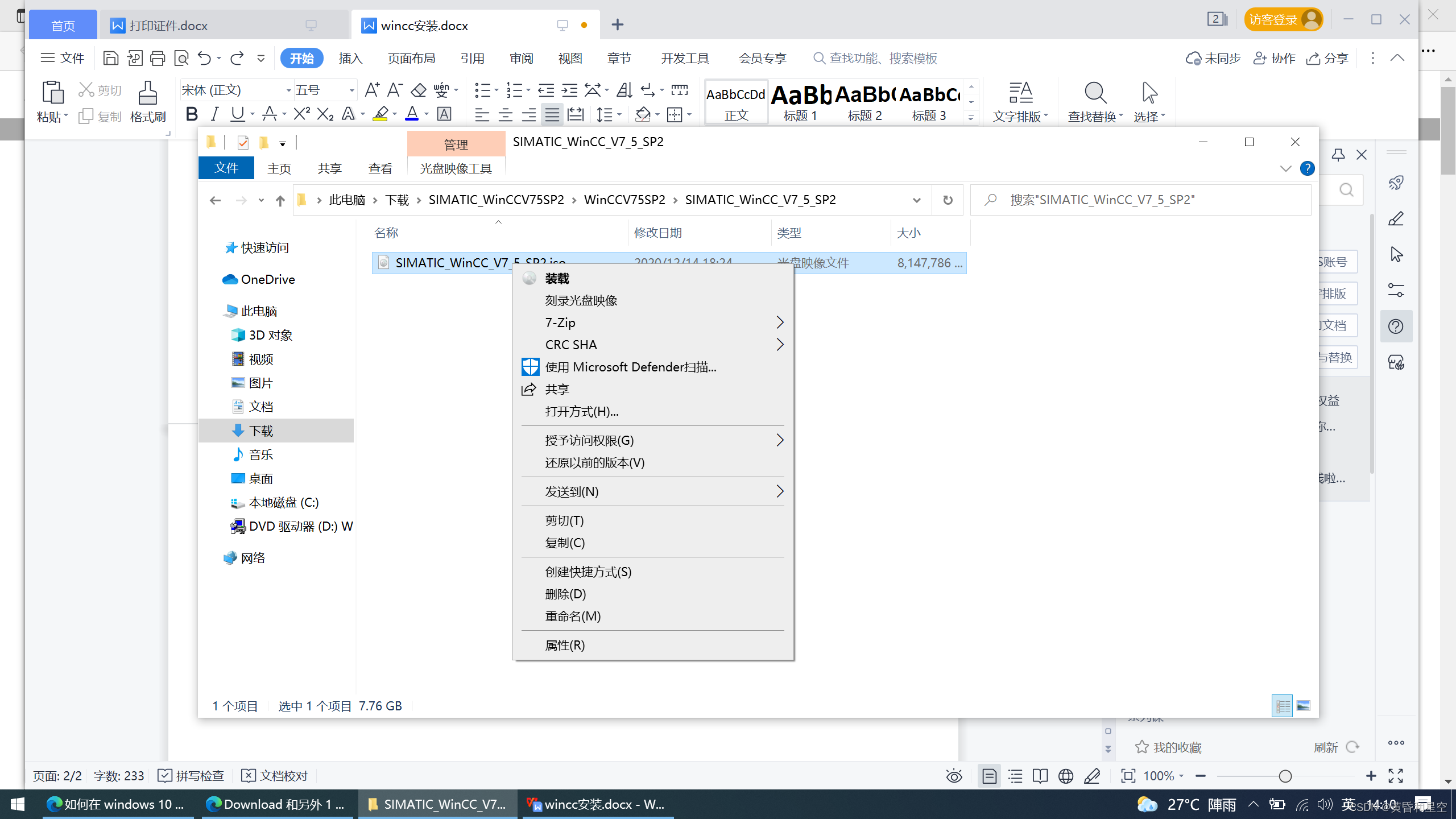

wincc7.5下载安装教程(Win10系统)

Asia Pacific Financial Media | designer universe | Guangdong responds to the opinions of the national development and Reform Commission. Primary school students incarnate as small community designers

![[1. Delphi foundation] 1 Introduction to Delphi Programming](/img/14/272f7b537eedb0267a795dba78020d.jpg)

[1. Delphi foundation] 1 Introduction to Delphi Programming

. Net 6 learning notes: what is NET Core

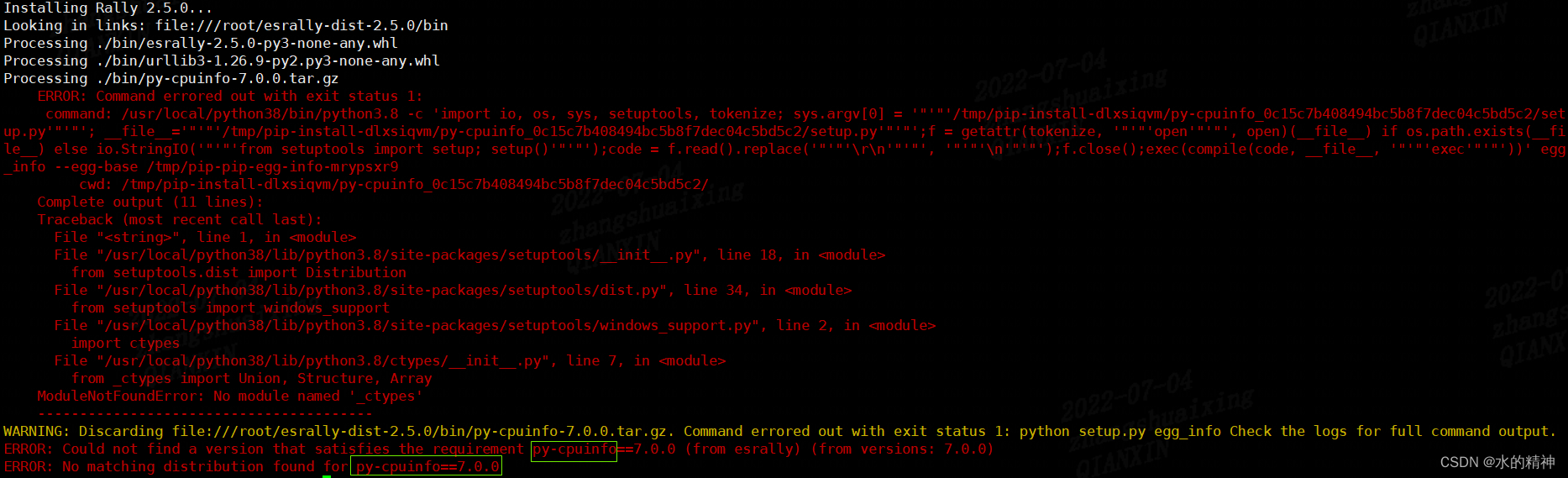

esRally国内安装使用避坑指南-全网最新

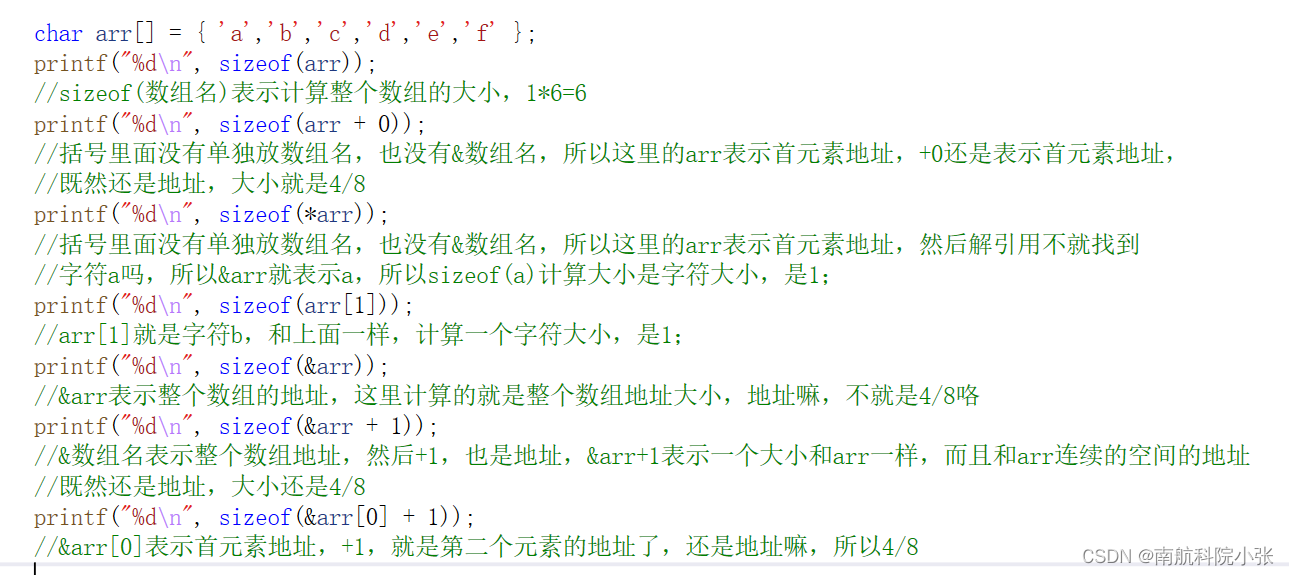

Make learning pointer easier (3)

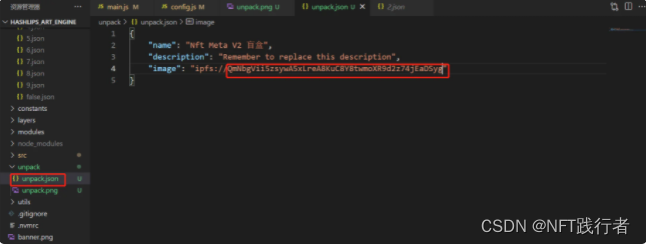

NFT smart contract release, blind box, public offering technology practice -- jigsaw puzzle

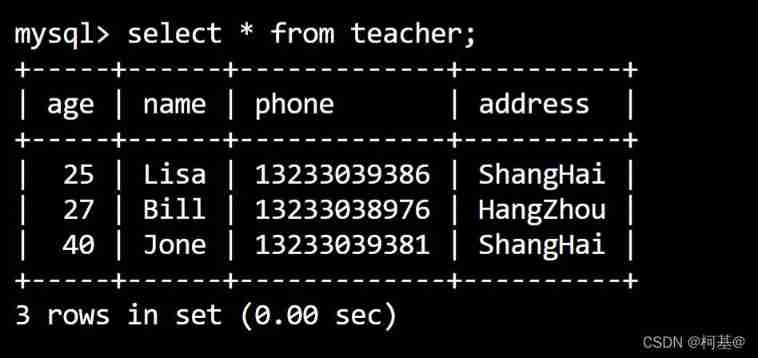

21. Delete data

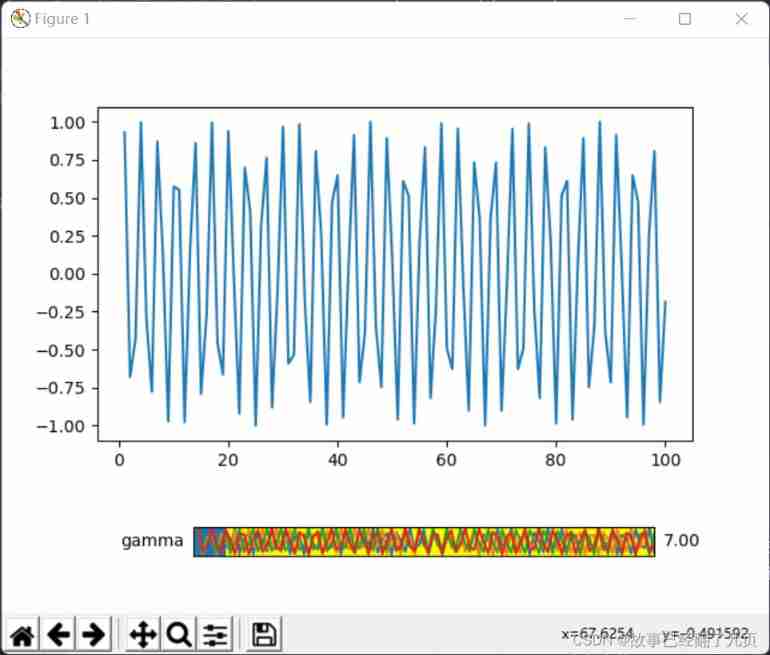

matplotlib. Widgets are easy to use

Hcip day 16

随机推荐

Generator Foundation

How to prevent Association in cross-border e-commerce multi account operations?

It's hard to find a job when the industry is in recession

Iterator Foundation

On why we should program for all

Yu Xia looks at win system kernel -- message mechanism

Artcube information of "designer universe": Guangzhou implements the community designer system to achieve "great improvement" of urban quality | national economic and Information Center

C语言 - 位段

Artcube information of "designer universe": Guangzhou implements the community designer system to achieve "great improvement" of urban quality | national economic and Information Center

MySQL view tablespace and create table statements

Nacos Development Manual

[factorial inverse], [linear inverse], [combinatorial counting] Niu Mei's mathematical problems

onie支持pice硬盘

National economic information center "APEC industry +": economic data released at the night of the Spring Festival | observation of stable strategy industry fund

P3047 [usaco12feb]nearby cows g (tree DP)

Webrtc series-h.264 estimated bit rate calculation

Document 2 Feb 12 16:54

MES, APS and ERP are essential to realize fine production

WebRTC系列-H.264预估码率计算

Database addition, deletion, modification and query