当前位置:网站首页>Analysis of high frequency interview questions in massive data processing

Analysis of high frequency interview questions in massive data processing

2022-06-11 09:24:00 【Menon research monk】

Catalog

Preface

Hardware expansion is difficult to meet the needs of massive data processing , How to use the existing conditions for massive information processing

Massive information processing has increasingly become a new highlight in the current written interview for programmers

The main reference books are :《java Programmer interview book 》

The basic method

By querying all aspects of online knowledge points , And read some related books

Common methods are Hash Law 、Bit - map Law 、Bloom filter Law 、 Database optimization method 、 Inverted index 、 External ranking method 、Trie Trees 、 Pile up 、 Double bucket method and MapRe-duce FA et al

1.1 The hash algorithm

Also known as hashing ( The mapping relationship ). The key in the data element is key, Calculate by hash function hash ( key), That is to calculate its storage address , So as to perform some operations on the data

Conflict means that two keywords correspond to the same function value , That is, the same address is mapped

To reduce conflict , Hash functions are also very particular . Try to be simple , The function range should be within its range , Also reduce their conflicts

Data structures are also mentioned in this book

- The commonly used methods for constructing hash functions are : direct addressing ( According to the linear relationship of keywords )、 Take the mold 、 Digital analysis 、 Square middle method, etc

- Common conflict resolution methods mainly include : Open address method ( When encountering conflict, it should detect in some way )、 Chain address method and so on

1.2 Bitmap method

B Use digit groups to indicate whether certain elements exist ( It can be used for duplicate checking , It can also be used to judge whether a certain data exists ) Fast search for massive data 、 Weight determination 、 Delete etc. .

The specific operation is to generate N Bit character , If any number is marked as 1, No numbers are marked as 0.( Trade space for time , The time complexity of sorting is O(n))

1.3 Bloom Filter

Check whether an element belongs to a collection . Sacrifice accuracy to improve space efficiency and time efficiency .

The basic principle is Digit group and Hash function The combination of , contain m Digit group ( Initialize to 0), Definition k Different hash function ( Each function maps to the... Of the bit set k A place ) A combination of . When inserting elements into a collection , according to k individual Hash Function to get the number of bits in a group k bits ( Set to 1), Query queries whether an element belongs to a collection ,k A for 1( There is ),k A bit is not for 1( non-existent ). When inserting other elements , Other locations may be 1, There was a mistake .

1.4 Database optimization

You can use database tools 、 Data partition 、 Indexes 、 Caching mechanisms 、 Optimized query 、 Sort, etc

1.5 Inverted index

The index is built by sorting according to some values of keywords

The mapping of the storage location of a word in a document or a group of documents under full-text search , It is the most commonly used data structure in document retrieval system

There are two different forms of reverse indexing :

The first form is a horizontal reverse index of a record ( Or reverse file index ) A list of documents containing each reference word ;

The second form is the horizontal reverse index of a word ( Or completely reverse indexing ) It also contains the position of each word in a document .

The second form provides more compatibility ( For example, phrase search ), But it takes more time and space to create .

In general, matrix storage can be used to store , But it wastes a lot of space

1.6 External ranking method

Definition : You cannot process too many objects in memory at once , It must be put out in the form of documents . Sorting requires step-by-step memory processing ( Relatively large files , Cannot load into memory at one time , Data needs to be exchanged many times )

Merge sort or two-way merge sort can be adopted

It is applicable to sorting and duplicate checking of big data , But it's less efficient , Because we need to use it io The operation of

1.7 Dictionary tree

Use the common prefix of the string to reduce the time and space cost ( Space for time ). Multi tree structure for fast string retrieval

Count and sort a large number of strings ( But it's not just about strings ), So it is often used in text word frequency statistics by search engine system .

Advantage is : Minimize unnecessary string comparisons , Query efficiency is higher than hash table .

Trie Trees generally have 3 Basic characteristics :

- The root node does not contain characters , Except the root node, each node contains only one character .

- From the root node to a node , The characters passing through the path are concatenated , Is the string corresponding to the node .

- All child nodes of each node contain different characters .

2. Analysis of classical problems

2.1 top k problem

The most frequent k Number or find the largest k Number, etc , At present, the better method is Divide and conquer +Trie Trees /hash + Small cap pile .

- hash Method is decomposed into multiple small data sets ( If there are duplicate numbers , Reduce a lot of data sets by de duplication )

- Trie Trees or hash Count the... In each small data set query Word frequency

- The small top stack calculates the highest frequency front in each data set K Number

- Finally, in all top K Find the final top K

in application , There may be enough memory , Then directly throw the data into the memory for one-time processing , It's also possible that the machine has multiple cores , This allows multithreading of the entire dataset .

2.2 Repetitive questions

This problem can be solved by bitmap method , This method is optimal

The specific execution code can be shown in the book , This is the display code of the book

2.3 Scheduling problem

- Database sorting ( The database specifications are required to be good )

- Divide and conquer method ( Although the memory is reduced , But the coding is complicated , Slow down )

- Bitmap method

边栏推荐

- Exclusive interview - dialogue on open source Zhai Jia: excellent open source projects should be seen by more people. I am honored to participate in them

- Comparison and introduction of OpenCV oak cameras

- [C language - data storage] how is data stored in memory?

- Install jupyter in the specified environment

- Day 47 how to query a table

- [TiO websocket] III. The TiO websocket server can send messages to users anywhere

- 工厂出产流程中的这些问题,应该怎么处理?

- Identifier keyword literal data type base conversion character encoding variable data type explanation operator

- Sword finger offer II 036 Postfix Expression

- Augmented reality experiment IV of Shandong University

猜你喜欢

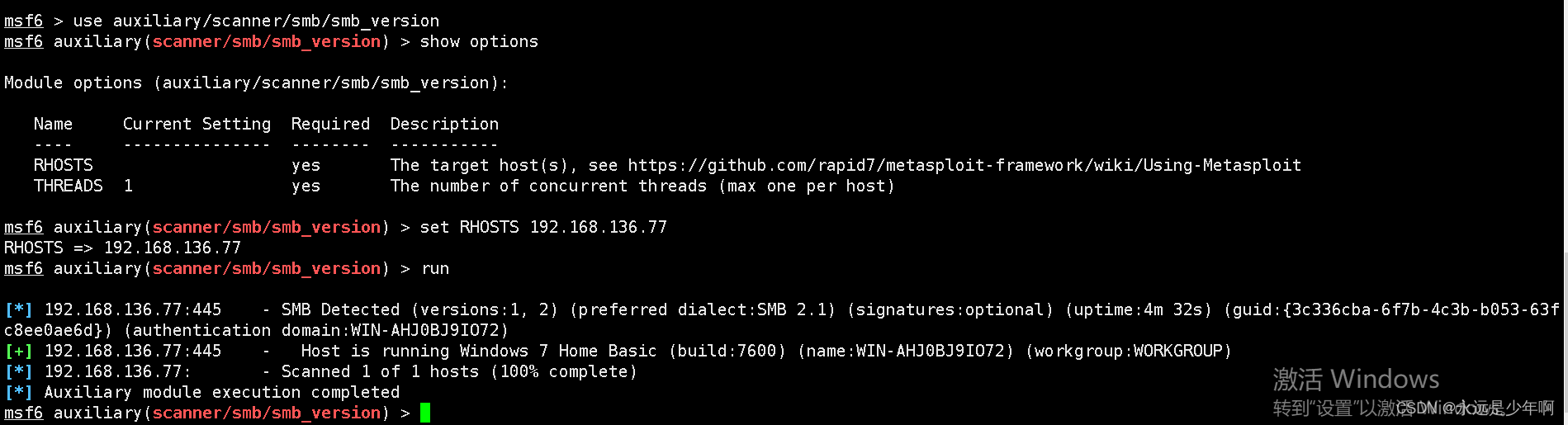

Use of MSF evaluation module

Complexity analysis of matrix inversion operation (complexity analysis of inverse matrix)

Machine learning notes - convolutional neural network memo list

MSF SMB based information collection

shell脚本之sed详解 (sed命令 , sed -e , sed s/ new / old / ... )

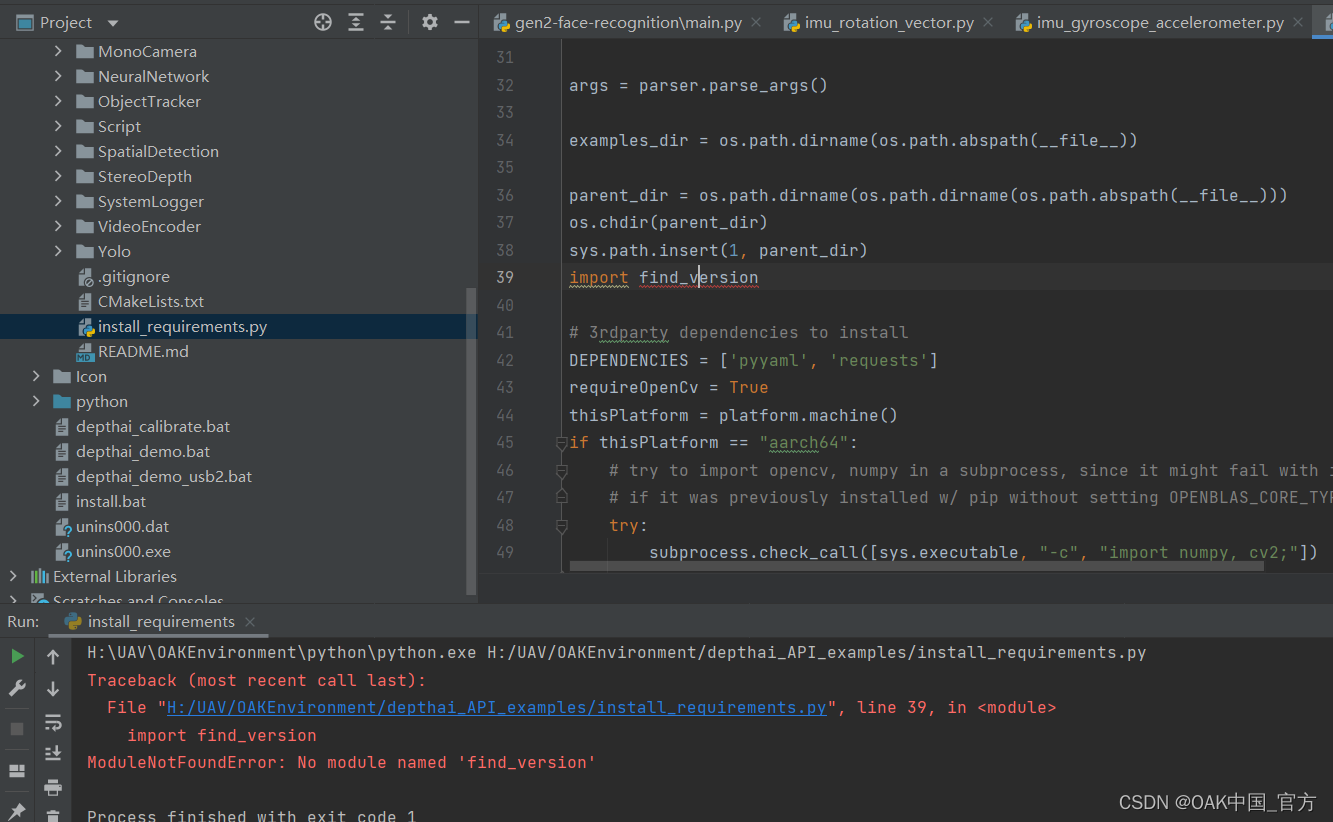

Modularnotfounderror: no module named 'find_ version’

Sed explanation of shell script (SED command, sed -e, sed s/ new / old /...)

keyboard entry.

Day41 process pool and thread pool

Pytorch installation for getting started with deep learning

随机推荐

面试题 17.10. 主要元素

Sed explanation of shell script (SED command, sed -e, sed s/ new / old /...)

affair

Leveldb simple use example

Machine learning notes - the story of master kaggle Janio Martinez Bachmann

MySQL startup error "bind on tcp/ip port: address already in use"

Day45 storage engine data type integer floating point character type date type enumeration and set type constraints table to table relationships

Type-C扩展坞自适应供电专利维权案例

Tissu. JS définit dynamiquement la taille de la police

1854. 人口最多的年份

[scheme development] sphygmomanometer scheme pressure sensor sic160

[scheme design] scheme of household oximeter based on single chip microcomputer

Control statement if switch for while while break continue

【智能开发】血压计方案设计与硬件开发

企业决议时,哪个部分应该主导ERP项目?

[image processing] spatial domain image enhancement

Augmented reality experiment IV of Shandong University

[FAQ for novices on the road] about data visualization

【方案开发】红外体温计测温仪方案

基于SIC32F911RET6设计的腕式血压计方案