
Ascend 910: LeNet MNIST Handwritten Digit Recognition with TensorFlow 1.15

2022-07-07 14:40:00 (Huawei Cloud)

1. Environment and Preparation

CPU/GPU reproduction: Huawei Cloud ModelArts CodeLab platform

Ascend reproduction: Huawei Cloud ModelArts development environment (Notebook)

Original LeNet code: https://gitee.com/lai-pengfei/LeNet

2. Running the Original Code on CPU/GPU

Step 1: Open CodeLab

Note: if you need GPU resources, you can switch to them.

Select the GPU resources. A GPU session can be used for 1 hour; after that, it must be renewed manually.

Click Terminal to open the terminal:

Terminal interface:

Step 2: Enter the work directory and git clone the code

git clone https://gitee.com/lai-pengfei/LeNet

The cloned code appears in the file browser on the left.

Step 3: Switch to the TensorFlow runtime environment

source activate /home/ma-user/anaconda3/envs/TensorFlow-1.13.1/

This environment provides TensorFlow 1.13 rather than 1.15. The difference is small, and the code runs essentially the same as it does on 1.15.
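Train.py relies on APIs that exist only in TensorFlow 1.x (tf.Session, tensorflow.examples.tutorials). You may therefore want to confirm which TensorFlow version the activated environment actually provides. The following is a minimal sketch. The helper names (major_version, uses_tf1_api, installed_tf_version) are hypothetical and not part of any ModelArts tooling.

```python
import importlib.util
from typing import Optional


def major_version(version: str) -> int:
    """Major component of a version string, e.g. '1.15.0' -> 1."""
    return int(version.split(".", 1)[0])


def uses_tf1_api(version: str) -> bool:
    """True for TensorFlow 1.x, where tf.Session and tf.ConfigProto are top-level APIs."""
    return major_version(version) == 1


def installed_tf_version() -> Optional[str]:
    """Version of the TensorFlow visible to this interpreter, or None if it is not installed."""
    if importlib.util.find_spec("tensorflow") is None:
        return None
    import tensorflow as tf  # imported lazily: slow to load, absent outside the TF environments
    return tf.__version__
```

After `source activate ...`, calling `installed_tf_version()` from that environment's Python should report the 1.13.x or 1.15.x version suggested by the environment name. `uses_tf1_api()` then confirms that the TF 1.x session code will work.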

Step 4: Enter the folder and run the original code

cd LeNet
python Train.py

Running:

Running results:

3. Model Migration

The environment is the Huawei Cloud ModelArts development environment (Notebook).

Create the environment.

For the image, select the one that is checked.

The specifications and other settings are shown in the figure below:

For environment setup and code download, follow the CPU/GPU section.

Switch to the TensorFlow 1.15 runtime environment on Ascend:

source activate /home/ma-user/anaconda3/envs/TensorFlow-1.15.0/

Code changes

Modify Train.py

Reference documentation: https://www.hiascend.com/document/detail/zh/CANNCommunityEdition/51RC2alpha007/moddevg/tfmigr/atlasmprtg_13_0011.html

Add the import for the npu_bridge package:

from npu_bridge.npu_init import *

Modify the code that creates the session and initializes resources

This step mainly consists of adding the following lines before sess.run(tf.initialize_all_variables()):

config = tf.ConfigProto()
custom_op = config.graph_options.rewrite_options.custom_optimizers.add()
custom_op.name = "NpuOptimizer"
config.graph_options.rewrite_options.remapping = RewriterConfig.OFF  # must be explicitly disabled
config.graph_options.rewrite_options.memory_optimization = RewriterConfig.OFF  # must be explicitly disabled
sess = tf.Session(config=config)

This demo also requires changing the first line of Train.py, which imports a related library.
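The npu_bridge import and session-config edits above can also be applied to Train.py as a text transformation. The following is a minimal sketch under stated assumptions:

- `add_npu_session` is a hypothetical helper, not a Huawei tool.
- The initializer appears exactly as `sess.run(tf.initialize_all_variables())` at the start of its line.
- Only text is edited, so neither TensorFlow nor npu_bridge needs to be installed to run it.

```python
NPU_IMPORT = "from npu_bridge.npu_init import *"
INIT_CALL = "sess.run(tf.initialize_all_variables())"  # assumed spelling of the initializer line

# The session-config lines from the step above, inserted verbatim.
NPU_SESSION_LINES = [
    "config = tf.ConfigProto()",
    "custom_op = config.graph_options.rewrite_options.custom_optimizers.add()",
    'custom_op.name = "NpuOptimizer"',
    "config.graph_options.rewrite_options.remapping = RewriterConfig.OFF",
    "config.graph_options.rewrite_options.memory_optimization = RewriterConfig.OFF",
    "sess = tf.Session(config=config)",
]


def add_npu_session(source: str) -> str:
    """Return Train.py source with the npu_bridge import and NPU session config added."""
    lines = source.splitlines()
    if NPU_IMPORT in lines:
        return source  # already migrated: leave the text unchanged
    # 1) Put the import before the first ordinary import (after any `from __future__`
    #    lines, which must stay first); fall back to the top of the file.
    pos = 0
    for i, line in enumerate(lines):
        if line.startswith(("import ", "from ")) and not line.startswith("from __future__"):
            pos = i
            break
    lines.insert(pos, NPU_IMPORT)
    # 2) Insert the config lines just before the initializer, keeping its indentation.
    for i, line in enumerate(lines):
        stripped = line.lstrip()
        if stripped.startswith(INIT_CALL):
            indent = line[: len(line) - len(stripped)]
            lines[i:i] = [indent + cfg for cfg in NPU_SESSION_LINES]
            return "\n".join(lines) + "\n"
    raise ValueError("initializer call not found: " + INIT_CALL)
```

Inserting the new `sess = tf.Session(config=config)` immediately before the initializer means any session created earlier in the script is simply superseded. This mirrors the manual edit described above.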

Original first line:

import tensorflow.examples.tutorials.mnist.input_data as input_data

Change it to:

from tensorflow.examples.tutorials.mnist import input_data

Run the code:

python Train.py

If the output contains lines similar to "W tf_adapt", NPU resources are being used.
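To check a saved log for these lines instead of reading the terminal, here is a minimal sketch. It makes three assumptions:

- The output was saved to a file, for example with `python Train.py 2>&1 | tee train.log`. The file name is an assumption; TensorFlow-style warnings go to stderr, hence `2>&1`.
- The check looks only for the substring `tf_adapt`, because the exact log format is not documented here.
- `tf_adapt_lines` and `npu_in_use` are hypothetical helper names.

```python
from pathlib import Path
from typing import Iterable, List


def tf_adapt_lines(log_lines: Iterable[str]) -> List[str]:
    """Return the log lines that mention tf_adapt (e.g. warnings starting with 'W tf_adapt')."""
    return [line.rstrip("\n") for line in log_lines if "tf_adapt" in line]


def npu_in_use(log_path: str) -> bool:
    """Heuristic: True if the saved training log contains at least one tf_adapt line."""
    text = Path(log_path).read_text(encoding="utf-8", errors="replace")
    return bool(tf_adapt_lines(text.splitlines()))
```

This is only a heuristic. The `npu-smi info` check described next is a more direct confirmation.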

Training progress:

To confirm that Ascend is really being used, open another Terminal and run the following command in it:

npu-smi info
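To run the same check from Python, the following minimal sketch shells out to `npu-smi info` and returns its text. It returns None when the tool is not on PATH (for example on the CPU/GPU CodeLab machine) or the command fails. `query_npu_smi` is a hypothetical helper. It does not parse the output, since the table layout is not described here.

```python
import shutil
import subprocess
from typing import Optional


def query_npu_smi(timeout: float = 10.0) -> Optional[str]:
    """Return the text printed by `npu-smi info`, or None if it cannot be run."""
    exe = shutil.which("npu-smi")
    if exe is None:
        return None  # npu-smi is not on PATH, e.g. on a CPU/GPU machine
    try:
        result = subprocess.run(
            [exe, "info"], capture_output=True, text=True, timeout=timeout, check=False
        )
    except (OSError, subprocess.TimeoutExpired):
        return None  # could not start the tool, or it hung
    if result.returncode != 0:
        return None
    return result.stdout
```

Calling it while Train.py is running in the first Terminal lets you inspect the same information that the command prints.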

Running results:

Summary

This completes the code changes needed to port a simple TensorFlow model to the Ascend platform. The process as a whole is fairly simple: problems you have already encountered are not hard, while unfamiliar ones may be. The main difficulties in model migration are that some operators may not be supported, and that accuracy and performance may need tuning.

边栏推荐

- 【愚公系列】2022年7月 Go教学课程 005-变量

- PD virtual machine tutorial: how to set the available shortcut keys in the parallelsdesktop virtual machine?

- ES日志报错赏析-maximum shards open

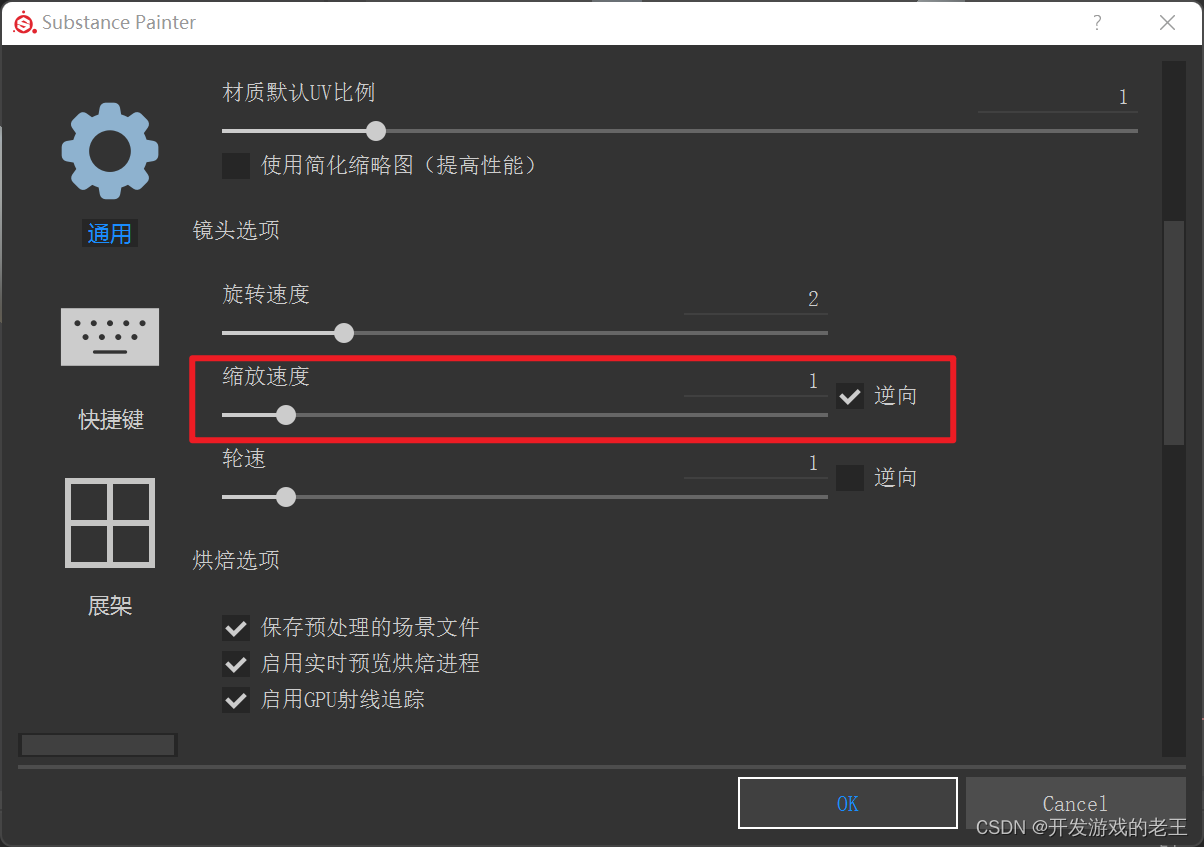

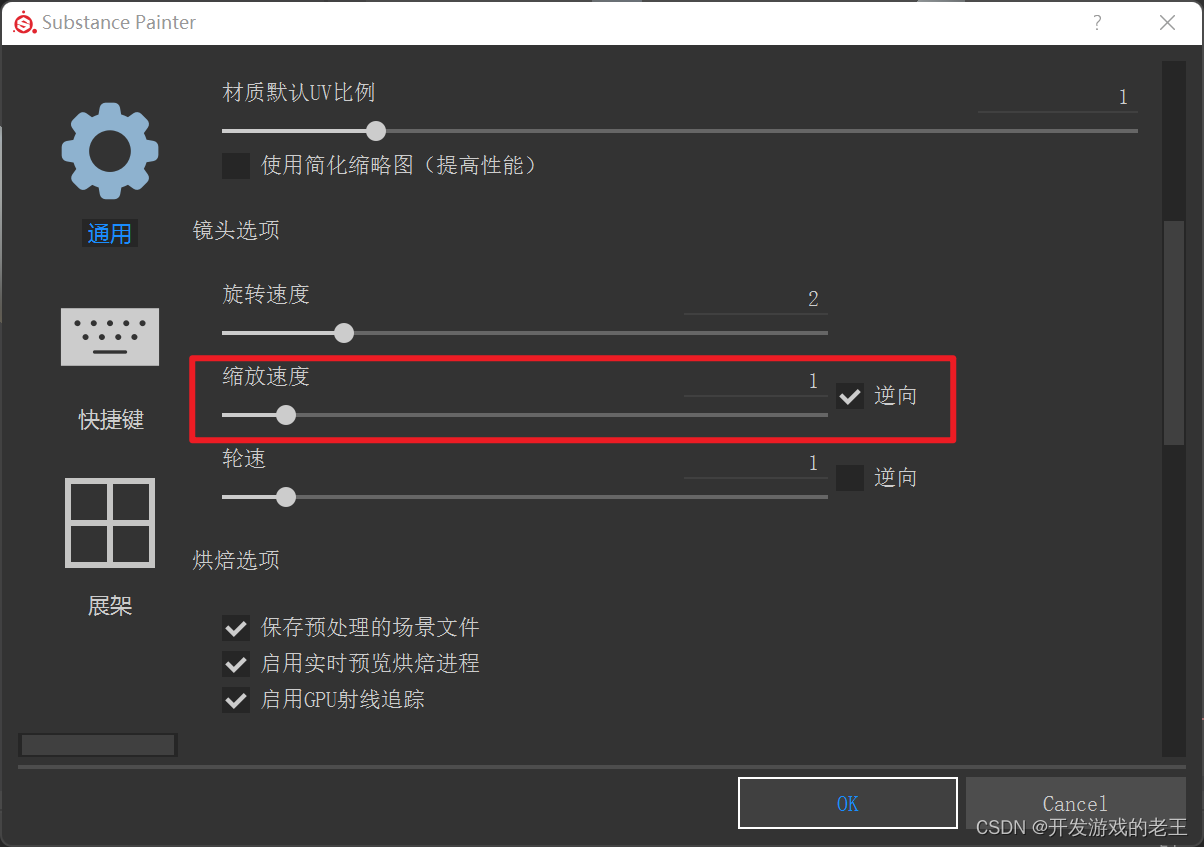

- Substance painter notes: settings for multi display and multi-resolution displays

- Cascading update with Oracle trigger

- Summary on adding content of background dynamic template builder usage

- The world's first risc-v notebook computer is on pre-sale, which is designed for the meta universe!

- 杭电oj2092 整数解

- MicTR01 Tester 振弦采集模块开发套件使用说明

- JS get the current time, month, day, year, and the uniapp location applet opens the map to select the location

猜你喜欢

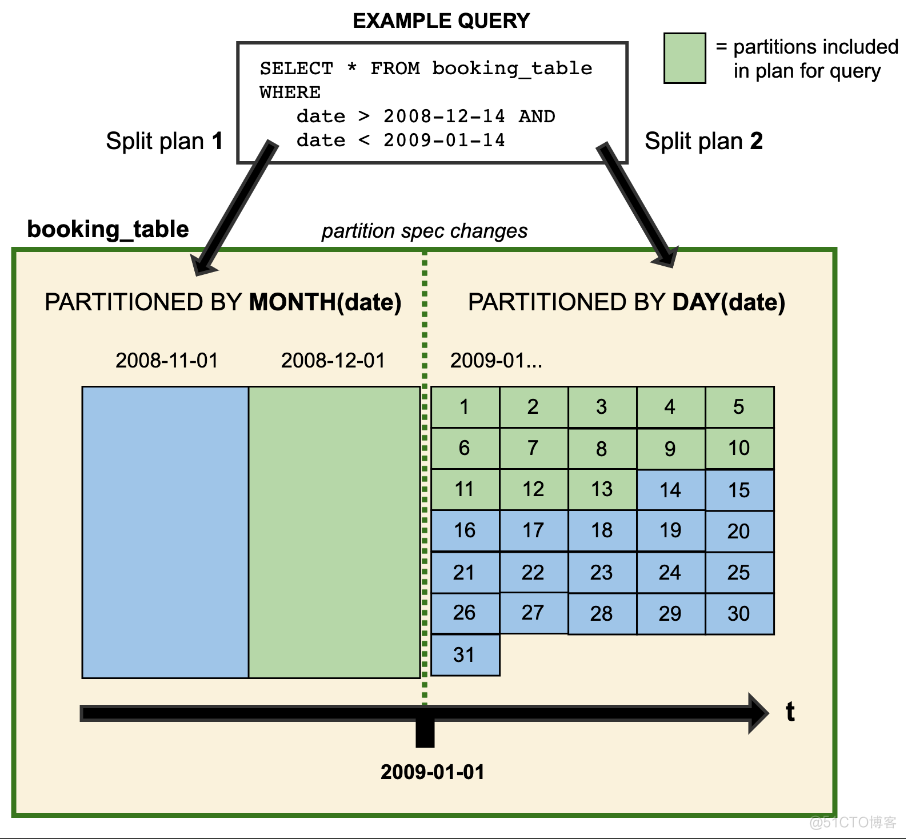

Data Lake (IX): Iceberg features and data types

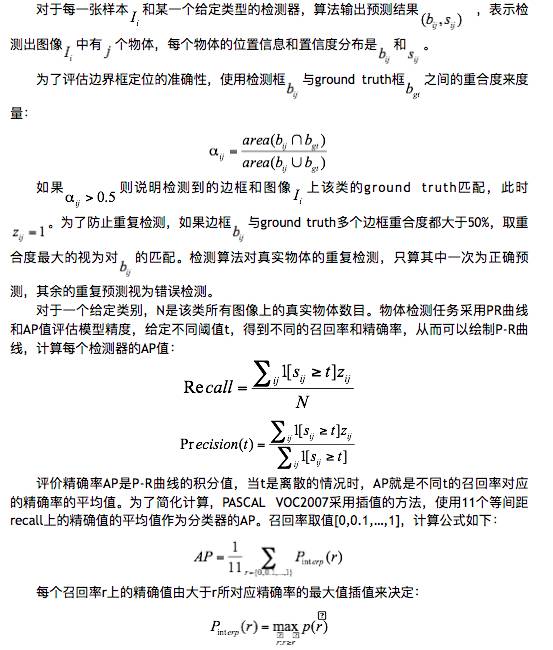

KITTI数据集简介与使用

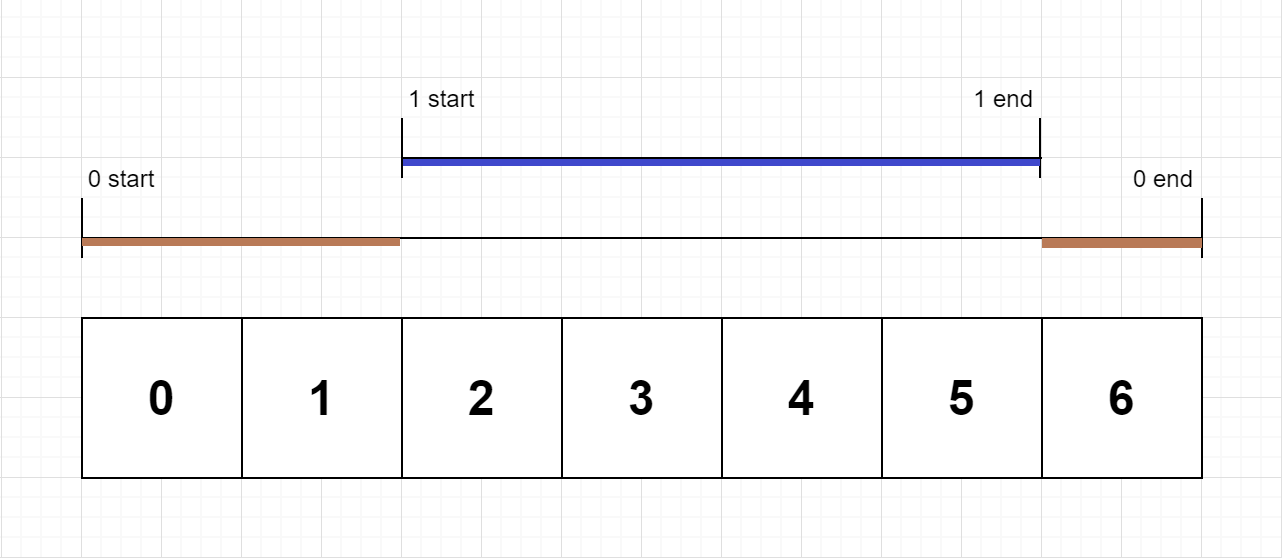

LeetCode每日一题(636. Exclusive Time of Functions)

在软件工程领域,搞科研的这十年!

Substance Painter笔记:多显示器且多分辨率显示器时的设置

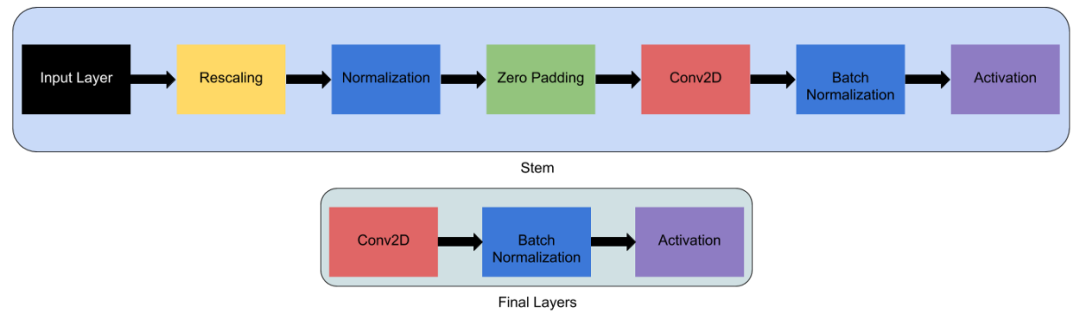

EfficientNet模型的完整细节

![[server data recovery] a case of RAID data recovery of a brand StorageWorks server](/img/8c/77f0cbea54730de36ce7b625308d2f.png)

[server data recovery] a case of RAID data recovery of a brand StorageWorks server

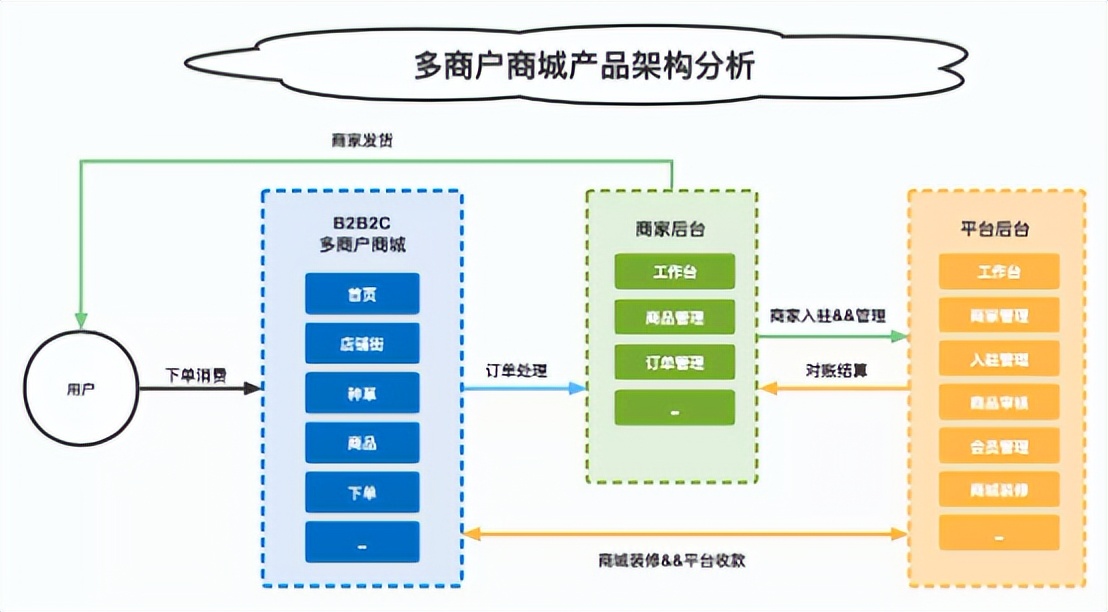

多商戶商城系統功能拆解01講-產品架構

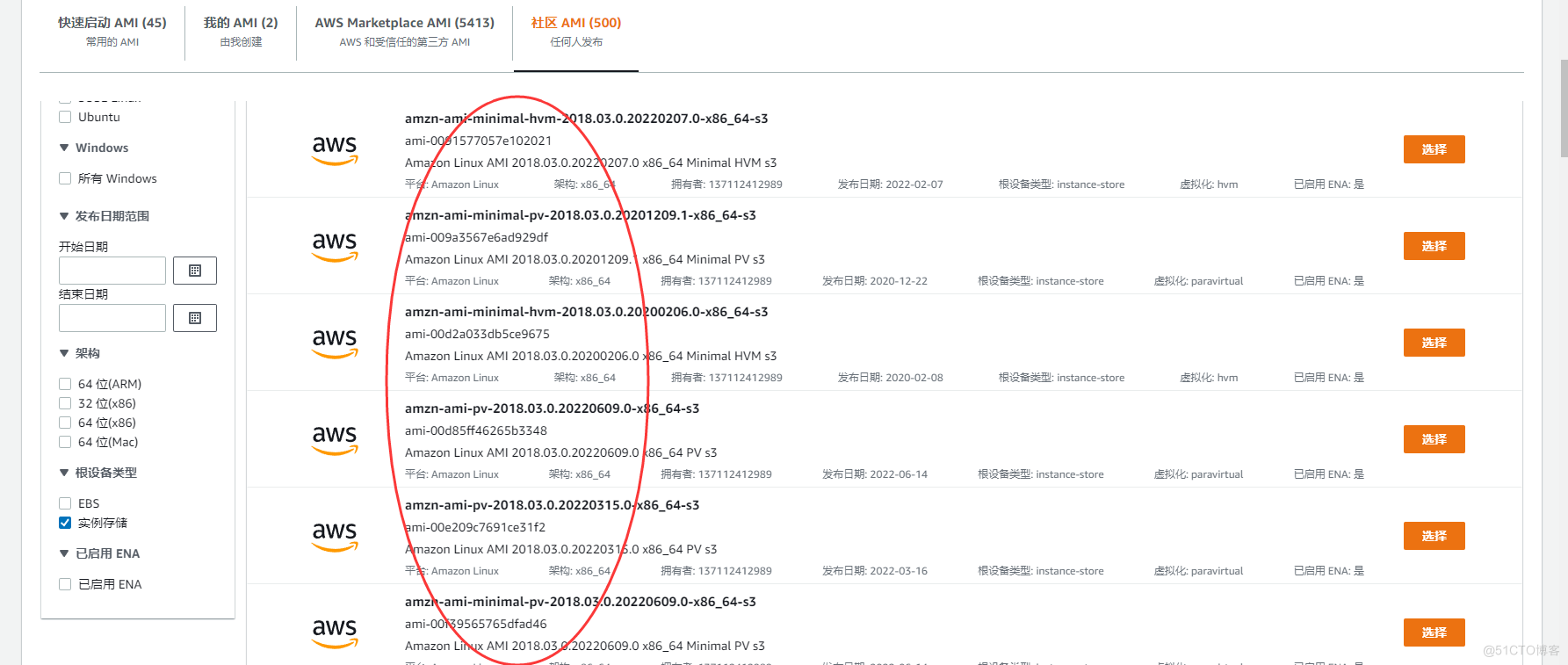

AWS学习笔记(三)

Substance painter notes: settings for multi display and multi-resolution displays

随机推荐

解析PHP跳出循环的方法以及continue、break、exit的区别介绍

MLGO:Google AI发布工业级编译器优化机器学习框架

Es log error appreciation -- allow delete

小程序目录结构

Use case diagram

搜索引擎接口

PD virtual machine tutorial: how to set the available shortcut keys in the parallelsdesktop virtual machine?

Oracle Linux 9.0 officially released

关于后台动态模板添加内容的总结 Builder使用

2022 cloud consulting technology series high availability special sharing meeting

Ascend 910实现Tensorflow1.15实现LeNet网络的minist手写数字识别

LeetCode 648. Word replacement

Hangdian oj2092 integer solution

Substance Painter筆記:多顯示器且多分辨率顯示器時的設置

Because the employee set the password to "123456", amd stolen 450gb data?

属性关键字ServerOnly,SqlColumnNumber,SqlComputeCode,SqlComputed

Half an hour of hands-on practice of "live broadcast Lianmai construction", college students' resume of technical posts plus points get!

Leetcode one question per day (636. exclusive time of functions)

NDK beginner's study (1)

数据库如何进行动态自定义排序?