
Introduction to and use of the KITTI dataset

2022-07-07 14:32:00 【Xiaobai learns vision】


Abstract: This article draws on two papers, "Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite" and "Vision meets Robotics: The KITTI Dataset". It covers an overview of the KITTI dataset, the data acquisition platform, a detailed description of the dataset, the evaluation metrics, and a practical use case. The aim is a fairly detailed and complete introduction, with a focus on using KITTI for research and experiments.

1. KITTI dataset overview

The KITTI dataset was created jointly by the Karlsruhe Institute of Technology in Germany and the Toyota Technological Institute at Chicago. It is described as the largest computer vision benchmark dataset for autonomous driving scenarios. The dataset is used to evaluate stereo, optical flow, visual odometry, 3D object detection and 3D tracking methods in an in-vehicle setting. KITTI contains real image data collected in urban, rural and highway scenes, with up to 15 cars and 30 pedestrians per image and varying degrees of occlusion and truncation. The whole dataset consists of 389 stereo and optical flow image pairs, 39.2 km of visual odometry sequences and more than 200k 3D object annotations [1], sampled and synchronized at 10 Hz. Overall, the raw data is grouped into the categories 'Road', 'City', 'Residential', 'Campus' and 'Person'. For 3D object detection, the labels are subdivided into car, van, truck, pedestrian, person (sitting), cyclist, tram and misc.

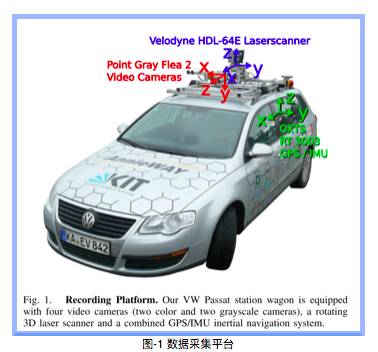

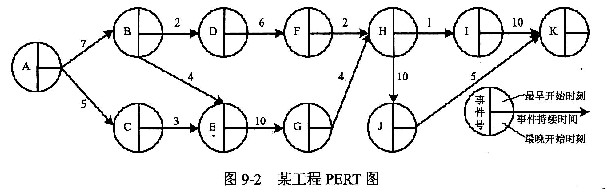

2. Data acquisition platform

As shown in Fig. 1, the KITTI data acquisition platform is equipped with 2 grayscale cameras, 2 color cameras, one Velodyne 64-beam 3D lidar, 4 optical lenses and 1 GPS navigation system. The sensor specifications are as follows [2]:

• 2 × PointGray Flea2 grayscale cameras (FL2-14S3M-C), 1.4 Megapixels, 1/2” Sony ICX267 CCD, global shutter

• 2 × PointGray Flea2 color cameras (FL2-14S3C-C), 1.4 Megapixels, 1/2” Sony ICX267 CCD, global shutter

• 4 × Edmund Optics lenses, 4 mm, opening angle ~90°, vertical opening angle of region of interest (ROI) ~35°

• 1 × Velodyne HDL-64E rotating 3D laser scanner, 10 Hz, 64 beams, 0.09° angular resolution, 2 cm distance accuracy, collecting ~1.3 million points/second, field of view: 360° horizontal, 26.8° vertical, range: 120 m

• 1 × OXTS RT3003 inertial and GPS navigation system, 6 axis, 100 Hz, L1/L2 RTK, resolution: 0.02 m / 0.1°

Fig. 1 Data acquisition platform

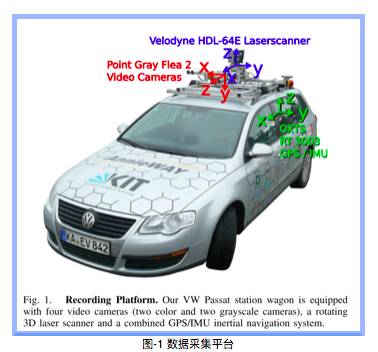

Fig. 2 shows the sensor layout. To produce stereo image pairs, cameras of the same type are mounted 54 cm apart. Because the resolution and contrast of the color cameras are not good enough, two grayscale stereo cameras are also used, each mounted 6 cm from a color camera. To make calibration of the sensor data easier, the coordinate system axes are defined as follows [2]:

• Camera: x = right, y = down, z = forward

• Velodyne: x = forward, y = left, z = up

• GPS/IMU: x = forward, y = left, z = up

Fig. 2 Sensor setup
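The axis conventions listed above imply a fixed re-labelling of axes between the Velodyne and camera frames. The sketch below shows only that re-labelling. It deliberately ignores the physical offset between the sensors and the calibrated rotation, which in practice come from the calibration files. The function names are hypothetical and not part of any KITTI tool.

```python
def velo_axes_to_cam_axes(point):
    """Re-express a point from Velodyne axes (x forward, y left, z up)
    in camera axes (x right, y down, z forward).

    Assumption: pure axis swap only; the sensor offset and the
    calibrated rotation are NOT applied.
    """
    x_forward, y_left, z_up = point
    return (-y_left, -z_up, x_forward)


def cam_axes_to_velo_axes(point):
    """Inverse axis swap: camera axes back to Velodyne axes."""
    x_right, y_down, z_forward = point
    return (z_forward, -x_right, -y_down)


if __name__ == "__main__":
    # A point 10 m ahead, 2 m to the left and 1 m above the sensor.
    print(velo_axes_to_cam_axes((10.0, 2.0, 1.0)))
```

A point to the left of and above the lidar ends up with negative x and negative y in camera axes, which matches the "x = right, y = down" convention.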

3. Dataset details

Fig. 3 shows typical samples from the KITTI dataset, which are grouped into five categories: 'Road', 'City', 'Residential', 'Campus' and 'Person'. The raw data was collected over 5 days in 2011 and totals 180 GB.

Fig. 3 Samples from the KITTI dataset, illustrating its diversity.

3.1 Data organization

The data organization described in [2] may reflect an early release and differs from what the KITTI website currently publishes, so it is only introduced briefly here.

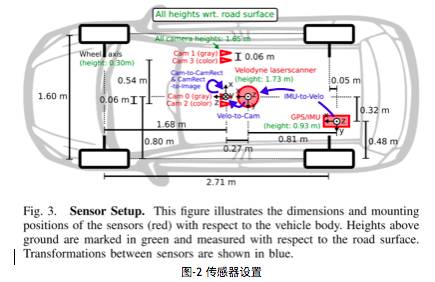

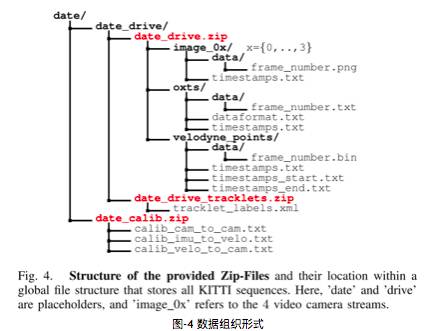

As shown in Fig. 4, all sensor data of one video sequence is stored under the date_drive folder, where date and drive are placeholders for the recording date and the sequence number. Timestamps are recorded in the timestamps.txt file.

Fig. 4 Data organization

The per-task datasets downloaded from the KITTI website have a simpler file layout. Taking object detection as an example, the directory structure of the left color images archive of the Object Detection Evaluation 2012 benchmark is shown below. Samples are split into a testing set and a training set.

data_object_image_2

├── testing

│   └── image_2

└── training

    └── image_2

The directory structure of the label folder of the training set is shown below.

training/

└── label_2
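Given the two layouts above, a loader usually starts by pairing each training image with its label file. The sketch below assumes that the image and label archives were unpacked under one common root, that images use the .png extension, and that an image and its label share the same file stem. The helper name list_training_samples is hypothetical.

```python
from pathlib import Path


def list_training_samples(root):
    """Return (image_path, label_path) pairs for the training split.

    Assumptions of this sketch: <root>/training/image_2 holds *.png
    images and <root>/training/label_2 holds *.txt files with the
    same stem. Images without a label file are skipped.
    """
    root = Path(root)
    image_dir = root / "training" / "image_2"
    label_dir = root / "training" / "label_2"
    samples = []
    for image_path in sorted(image_dir.glob("*.png")):
        label_path = label_dir / (image_path.stem + ".txt")
        if label_path.exists():
            samples.append((image_path, label_path))
    return samples


if __name__ == "__main__":
    for image_path, label_path in list_training_samples("."):
        print(image_path.name, label_path.name)
```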

3.2 Annotations

KITTI provides 3D bounding box annotations (in the lidar coordinate system). The annotations fall into 8 classes: 'Car', 'Van', 'Truck', 'Pedestrian', 'Person (sitting)', 'Cyclist', 'Tram' and 'Misc' (e.g., trailers, Segways). According to [2], the 3D annotations are stored in date_drive_tracklets.xml, and each object is annotated with its class and its 3D size (height, width and length). In the current per-task datasets, annotations are instead stored in each task's label folder, in a somewhat different format.

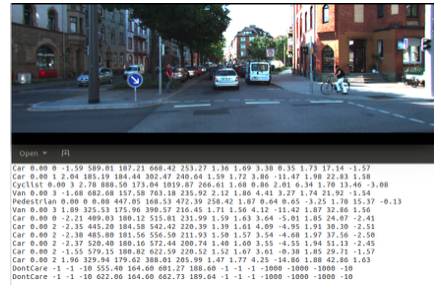

To illustrate the annotation format, this article uses the object detection dataset as an example. The data is described in readme.txt of the object development kit. Download the annotations via the "training labels of object data set (5 MB)" link, unzip the archive and enter the directory. Each image has one corresponding .txt file. Fig. 5 shows one frame and its .txt label file.

Fig. 5 Object detection sample and labels

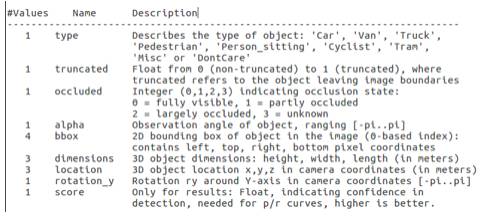

To understand the meaning of each field in a label file, read the accompanying readme.txt, which is included in the object development kit (1 MB). The readme describes in detail the sample count of the sub-dataset, the number of label classes, the file organization, the annotation format, the evaluation method and more, including the format of the label data.

Note that the 'DontCare' label marks regions that were not annotated, for example because the object is too far from the lidar. During evaluation (mainly when computing precision), detections in such regions could be real objects that simply were not labeled. To avoid counting them as false positives, the evaluation script automatically ignores predictions in 'DontCare' regions.
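As an illustration of the label format, the sketch below parses one label file into records and drops 'DontCare' entries by default. The field order (type, truncated, occluded, alpha, 2D box, 3D dimensions, 3D location, rotation_y) follows the layout documented in the object devkit readme as I understand it, so check it against your copy of readme.txt. The class and function names are hypothetical, and the sample lines are illustrative values.

```python
from dataclasses import dataclass


@dataclass
class KittiObject:
    type: str           # class name, e.g. 'Car', 'Pedestrian', 'DontCare'
    truncated: float    # 0 (not truncated) to 1 (fully truncated)
    occluded: int       # occlusion state code
    alpha: float        # observation angle in radians
    bbox: tuple         # 2D box (left, top, right, bottom) in pixels
    dimensions: tuple   # 3D size (height, width, length) in metres
    location: tuple     # 3D position (x, y, z) in camera coordinates
    rotation_y: float   # rotation around the camera y axis in radians


def parse_label_line(line):
    """Parse one whitespace-separated label line (15 fields assumed)."""
    fields = line.split()
    if len(fields) < 15:
        raise ValueError("expected at least 15 fields, got %d" % len(fields))
    v = [float(x) for x in fields[1:15]]
    return KittiObject(
        type=fields[0], truncated=v[0], occluded=int(v[1]), alpha=v[2],
        bbox=tuple(v[3:7]), dimensions=tuple(v[7:10]),
        location=tuple(v[10:13]), rotation_y=v[13],
    )


def parse_label_text(text, keep_dontcare=False):
    """Parse a whole label file; 'DontCare' regions are dropped by default."""
    objects = [parse_label_line(l) for l in text.splitlines() if l.strip()]
    if not keep_dontcare:
        objects = [o for o in objects if o.type != "DontCare"]
    return objects


SAMPLE = """Pedestrian 0.00 0 -0.20 712.40 143.00 810.73 307.92 1.89 0.48 1.20 1.84 1.47 8.41 0.01
DontCare -1 -1 -10 503.89 169.71 590.61 190.13 -1 -1 -1 -1000 -1000 -1000 -10
"""

if __name__ == "__main__":
    for obj in parse_label_text(SAMPLE):
        print(obj.type, obj.bbox)
```

Dropping 'DontCare' at load time is convenient for training, but an evaluation pipeline would normally keep those regions so that predictions inside them can be ignored rather than penalized.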

3.3 Development kit

Each KITTI sub-dataset ships with a development kit, which mainly consists of a cpp folder, a matlab folder, a mapping folder and readme.txt. Take devkit_object, the kit for the object detection task shown below, as an example. The cpp folder mainly contains evaluate_object.cpp, the source code for evaluating models. The files in the mapping folder record the mapping from the training set to the raw dataset, so developers can use multi-modal data such as lidar point clouds, GPS data, right color camera images and grayscale camera images together. The matlab folder contains tools for reading and writing labels, drawing 2D/3D boxes and running demos. readme.txt is particularly important: it describes in detail the data format of the sub-dataset, the benchmark, the evaluation method and other details.

devkit_object

├── cpp

│   ├── evaluate_object.cpp

│   └── mail.h

├── mapping

│   ├── train_mapping.txt

│   └── train_rand.txt

├── matlab

│   ├── computeBox3D.m

│   ├── computeOrientation3D.m

│   ├── drawBox2D.m

│   ├── drawBox3D.m

│   ├── projectToImage.m

│   ├── readCalibration.m

│   ├── readLabels.m

│   ├── run_demo.m

│   ├── run_readWriteDemo.m

│   ├── run_statistics.m

│   ├── visualization.m

│   └── writeLabels.m

└── readme.txt

4. Evaluation metrics

4.1 Stereo and visual odometry tasks

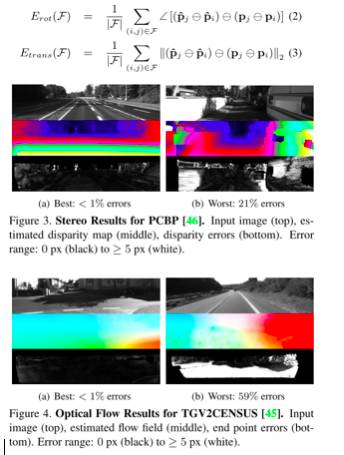

KITTI uses different evaluation metrics for different tasks. For stereo and optical flow, the average number of erroneous pixels is computed from the disparity error and the end-point error, respectively.
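As a rough illustration of an "erroneous pixel" metric for stereo, the sketch below computes the fraction of pixels whose disparity error exceeds a threshold. The 3-pixel default and the convention that a ground truth value of 0 or less means "no ground truth" are assumptions of this sketch, not taken from the text above. The function name is hypothetical.

```python
def bad_pixel_ratio(pred, gt, threshold=3.0):
    """Fraction of valid pixels with |pred - gt| > threshold.

    pred, gt: 2D lists (rows of disparities) of equal shape.
    Assumption: gt <= 0 marks pixels without ground truth, which are
    excluded from the count.
    """
    valid = 0
    bad = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            if g <= 0:
                continue  # no ground truth for this pixel
            valid += 1
            if abs(p - g) > threshold:
                bad += 1
    if valid == 0:
        raise ValueError("no valid ground truth pixels")
    return bad / valid


if __name__ == "__main__":
    predicted = [[10.0, 20.0], [30.0, 40.0]]
    ground_truth = [[10.0, 25.0], [0.0, 41.0]]
    print(bad_pixel_ratio(predicted, ground_truth))
```

For optical flow the same counting applies, with the per-pixel error replaced by the end-point error between the predicted and ground truth flow vectors.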

For the visual odometry/SLAM task, evaluation is based on trajectory error. Traditional methods combine translation and rotation errors into a single measure, whereas KITTI evaluates the two separately [1].

Fig. 6 Stereo and optical flow predictions and their evaluation

4.2 3D object detection and orientation estimation

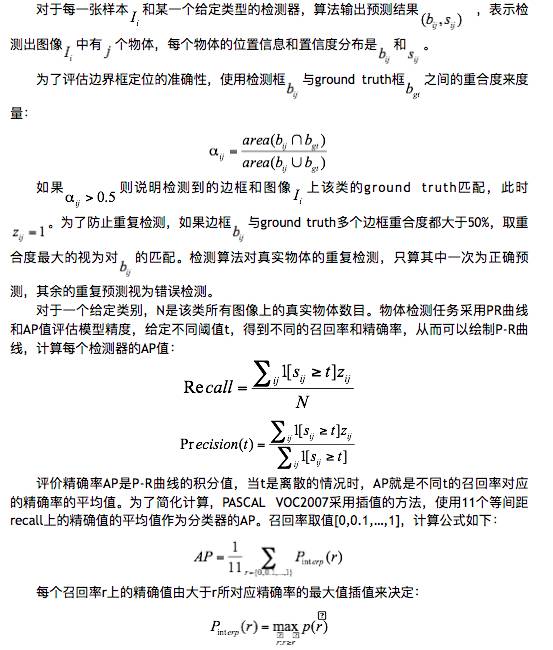

Object detection requires both localization and recognition. Localization is judged by comparing the overlap (Intersection over Union, IoU) between the predicted box and the ground truth box against a threshold (e.g. 0.5). Recognition is judged by comparing the confidence score against a threshold. Together these two checks decide whether a detection is correct, which turns multi-class detection into a binary problem per class: an object of that class is either detected correctly or detected incorrectly. A confusion matrix can then be built, and the usual classification metrics can be used to evaluate model accuracy.
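The two checks described above can be sketched for axis-aligned 2D boxes as follows. Boxes are assumed to be (left, top, right, bottom) tuples, and both thresholds default to 0.5 purely for illustration. The function names are hypothetical.

```python
def iou_2d(box_a, box_b):
    """Intersection over Union of two axis-aligned boxes.

    Each box is (left, top, right, bottom) with right >= left and
    bottom >= top.
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def is_correct_detection(pred_box, score, gt_box,
                         iou_threshold=0.5, score_threshold=0.5):
    """A detection counts as correct only if it passes both checks."""
    confident = score >= score_threshold
    localized = iou_2d(pred_box, gt_box) >= iou_threshold
    return confident and localized


if __name__ == "__main__":
    print(iou_2d((0, 0, 10, 10), (5, 0, 15, 10)))
```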

KITTI uses the Average Precision (AP) from [3] to evaluate single-class detection results. The PASCAL Visual Object Classes Challenge 2007 (VOC2007) [3] uses the precision-recall curve for qualitative analysis and average precision (AP) for quantitative analysis of model accuracy. This metric penalizes both missed and wrong detections. It also specifies that multiple correct detections of the same object count only once, and the redundant detections are counted as errors (false positives).

For the KITTI detection task, only objects taller than 25 pixels are evaluated, easily confused classes are treated as the same class to reduce the false positive rate, and the classifier's AP is approximated by the mean of the precision values at 41 equally spaced recall levels.
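The AP approximation described above can be sketched as follows. The code averages an interpolated precision (the best precision achieved at any recall at or above the sampled level) over equally spaced recall levels. Using this interpolation is an assumption borrowed from the PASCAL VOC style of AP, and the official evaluation script may differ in detail. The function name is hypothetical.

```python
def average_precision(recalls, precisions, num_points=41):
    """Approximate AP from points on a precision-recall curve.

    recalls, precisions: equal-length lists describing the curve.
    For each of `num_points` equally spaced recall levels in [0, 1],
    take the maximum precision among points whose recall is at least
    that level (0 if there is none), then average.
    """
    if len(recalls) != len(precisions) or not recalls:
        raise ValueError("recalls and precisions must be non-empty and equal length")
    total = 0.0
    for k in range(num_points):
        level = k / (num_points - 1)
        reachable = [p for r, p in zip(recalls, precisions) if r >= level - 1e-12]
        total += max(reachable) if reachable else 0.0
    return total / num_points


if __name__ == "__main__":
    # Precision 1.0 up to recall 0.5, then 0.5 up to recall 1.0.
    print(average_precision([0.5, 1.0], [1.0, 0.5]))
```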

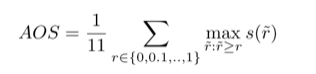

For object orientation estimation, [1] proposes a new metric, Average Orientation Similarity (AOS), which averages an orientation similarity score over recall levels.

Here r denotes the recall of object detection. As a function of r, the orientation similarity s ∈ [0,1] is defined as a normalized variant of the cosine similarity between all predictions and the ground truth.

D(r) denotes the set of all detections at recall r, and ∆θ(i) is the difference between the predicted and the ground truth orientation of detection i. To penalize multiple detections matched to the same ground truth, δi = 1 if detection i has been matched to a ground truth box (IoU of at least 50%), and δi = 0 otherwise.
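Written out, the two definitions can be reconstructed from the symbol descriptions above and from [1] roughly as follows. The symbol R is notation introduced here for the set of equally spaced recall levels used by the benchmark, so treat this as a sketch and check it against the paper.

```latex
\begin{align}
\mathrm{AOS} &= \frac{1}{|R|} \sum_{r \in R} \; \max_{\tilde{r} \,:\, \tilde{r} \ge r} s(\tilde{r}) \\
s(r) &= \frac{1}{|D(r)|} \sum_{i \in D(r)} \frac{1 + \cos \Delta_\theta^{(i)}}{2} \, \delta_i
\end{align}
```

The term $(1 + \cos \Delta_\theta^{(i)})/2$ maps a perfect orientation estimate to 1 and an estimate that is off by 180° to 0, which keeps $s(r)$ in $[0, 1]$.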

5. Using the data in practice

KITTI annotations are fairly rich. In practice you may need only some of the fields, or you may need to convert them to the format of another dataset. For example, converting KITTI to the PASCAL VOC format makes it easier to train detectors such as Faster RCNN or SSD. When converting, pay attention to the formats of the source and target datasets and to how class labels are remapped. For implementation details, see the write-up by Jesse_Mx [4] and the open source project by manutdzou on GitHub [5], both of which cover converting KITTI to the PASCAL VOC format.
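A minimal sketch of such a conversion is shown below: it turns the text of one KITTI label file into a VOC-style XML annotation. The class remapping in CLASS_MAP is a hypothetical choice made for illustration and is not the mapping used in [4] or [5]. The label class name 'Person_sitting', the field positions of the 2D box and the example image size are assumptions to verify against the devkit readme and your data.

```python
import xml.etree.ElementTree as ET

# Hypothetical remapping: merge vehicle-like and person-like classes and
# drop everything not listed here (e.g. 'Misc', 'DontCare').
CLASS_MAP = {
    "Car": "car", "Van": "car", "Truck": "car", "Tram": "car",
    "Pedestrian": "pedestrian", "Person_sitting": "pedestrian",
    "Cyclist": "cyclist",
}


def kitti_to_voc_xml(label_text, filename, width, height, depth=3):
    """Build a VOC-style annotation string from KITTI label text.

    Assumes fields 4..7 of each label line hold the 2D box as
    left, top, right, bottom in pixels.
    """
    root = ET.Element("annotation")
    ET.SubElement(root, "filename").text = filename
    size = ET.SubElement(root, "size")
    for tag, value in (("width", width), ("height", height), ("depth", depth)):
        ET.SubElement(size, tag).text = str(value)
    for line in label_text.splitlines():
        fields = line.split()
        if len(fields) < 15:
            continue  # skip blank or malformed lines
        name = CLASS_MAP.get(fields[0])
        if name is None:
            continue  # class not kept in the target dataset
        coords = [int(round(float(v))) for v in fields[4:8]]
        obj = ET.SubElement(root, "object")
        ET.SubElement(obj, "name").text = name
        box = ET.SubElement(obj, "bndbox")
        for tag, value in zip(("xmin", "ymin", "xmax", "ymax"), coords):
            ET.SubElement(box, tag).text = str(value)
    return ET.tostring(root, encoding="unicode")


EXAMPLE_LABEL = """Pedestrian 0.00 0 -0.20 712.40 143.00 810.73 307.92 1.89 0.48 1.20 1.84 1.47 8.41 0.01
DontCare -1 -1 -10 503.89 169.71 590.61 190.13 -1 -1 -1 -1000 -1000 -1000 -10
"""

if __name__ == "__main__":
    print(kitti_to_voc_xml(EXAMPLE_LABEL, "000000.png", 1242, 375))
```

Keeping the remapping in a single dictionary makes it easy to change which classes are merged or dropped without touching the conversion logic.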

References

[1] Andreas Geiger, Philip Lenz and Raquel Urtasun. Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite. CVPR, 2012.

[2] Andreas Geiger, Philip Lenz, Christoph Stiller and Raquel Urtasun. Vision meets Robotics: The KITTI Dataset. IJRR, 2013.

[3] M. Everingham, L. Van Gool, C. K. I. Williams, J. Winn and A. Zisserman. The PASCAL Visual Object Classes Challenge 2011 (VOC2011) Results.

[4] Jesse_Mx. SSD: Single Shot MultiBox Detector, training on the KITTI dataset (1). http://blog.csdn.net/jesse_mx/article/details/65634482

[5] manutdzou. manutdzou/KITTI_SSD. https://github.com/manutdzou/KITTI_SSD

Appendix

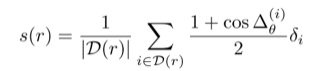

Fig. 7 Frequency of the different object classes in the dataset (top); orientation histograms for the two main classes, vehicles and pedestrians (bottom).

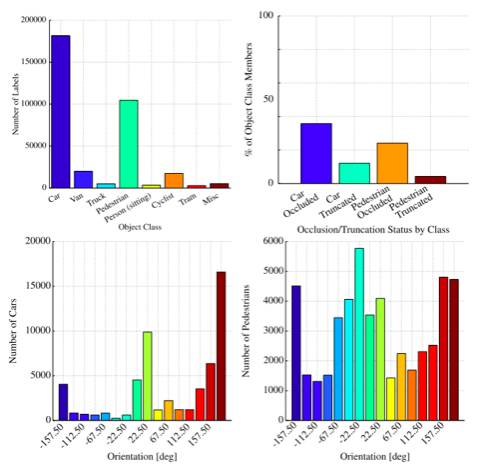

Fig. 8 Number of objects of each class per image.

Fig. 9 Histograms of velocity and acceleration (excluding static states); histogram of video sequence lengths; histogram of the number of frames per scene category (e.g., Campus, City).

The good news !

Xiaobai learns visual knowledge about the planet

Open to the outside world

download 1:OpenCV-Contrib Chinese version of extension module

stay 「 Xiaobai studies vision 」 Official account back office reply : Extension module Chinese course , You can download the first copy of the whole network OpenCV Extension module tutorial Chinese version , Cover expansion module installation 、SFM Algorithm 、 Stereo vision 、 Target tracking 、 Biological vision 、 Super resolution processing and other more than 20 chapters .

download 2:Python Visual combat project 52 speak

stay 「 Xiaobai studies vision 」 Official account back office reply :Python Visual combat project , You can download, including image segmentation 、 Mask detection 、 Lane line detection 、 Vehicle count 、 Add Eyeliner 、 License plate recognition 、 Character recognition 、 Emotional tests 、 Text content extraction 、 Face recognition, etc 31 A visual combat project , Help fast school computer vision .

download 3:OpenCV Actual project 20 speak

stay 「 Xiaobai studies vision 」 Official account back office reply :OpenCV Actual project 20 speak , You can download the 20 Based on OpenCV Realization 20 A real project , Realization OpenCV Learn advanced .

Communication group

Welcome to join the official account reader group to communicate with your colleagues , There are SLAM、 3 d visual 、 sensor 、 Autopilot 、 Computational photography 、 testing 、 Division 、 distinguish 、 Medical imaging 、GAN、 Wechat groups such as algorithm competition ( It will be subdivided gradually in the future ), Please scan the following micro signal clustering , remarks :” nickname + School / company + Research direction “, for example :” Zhang San + Shanghai Jiaotong University + Vision SLAM“. Please note... According to the format , Otherwise, it will not pass . After successful addition, they will be invited to relevant wechat groups according to the research direction . Please do not send ads in the group , Or you'll be invited out , Thanks for your understanding ~边栏推荐

- Oracle Linux 9.0 officially released

- ES日志报错赏析-- allow delete

- 小程序目录结构

- STM32CubeMX,68套组件,遵循10条开源协议

- libSGM的horizontal_path_aggregation程序解读

- JS in the browser Base64, URL, blob mutual conversion

- ndk初学习(一)

- 最长上升子序列模型 AcWing 482. 合唱队形

- The world's first risc-v notebook computer is on pre-sale, which is designed for the meta universe!

- 电脑Win7系统桌面图标太大怎么调小

猜你喜欢

Pert diagram (engineering network diagram)

常用數字信號編碼之反向不歸零碼碼、曼徹斯特編碼、差分曼徹斯特編碼

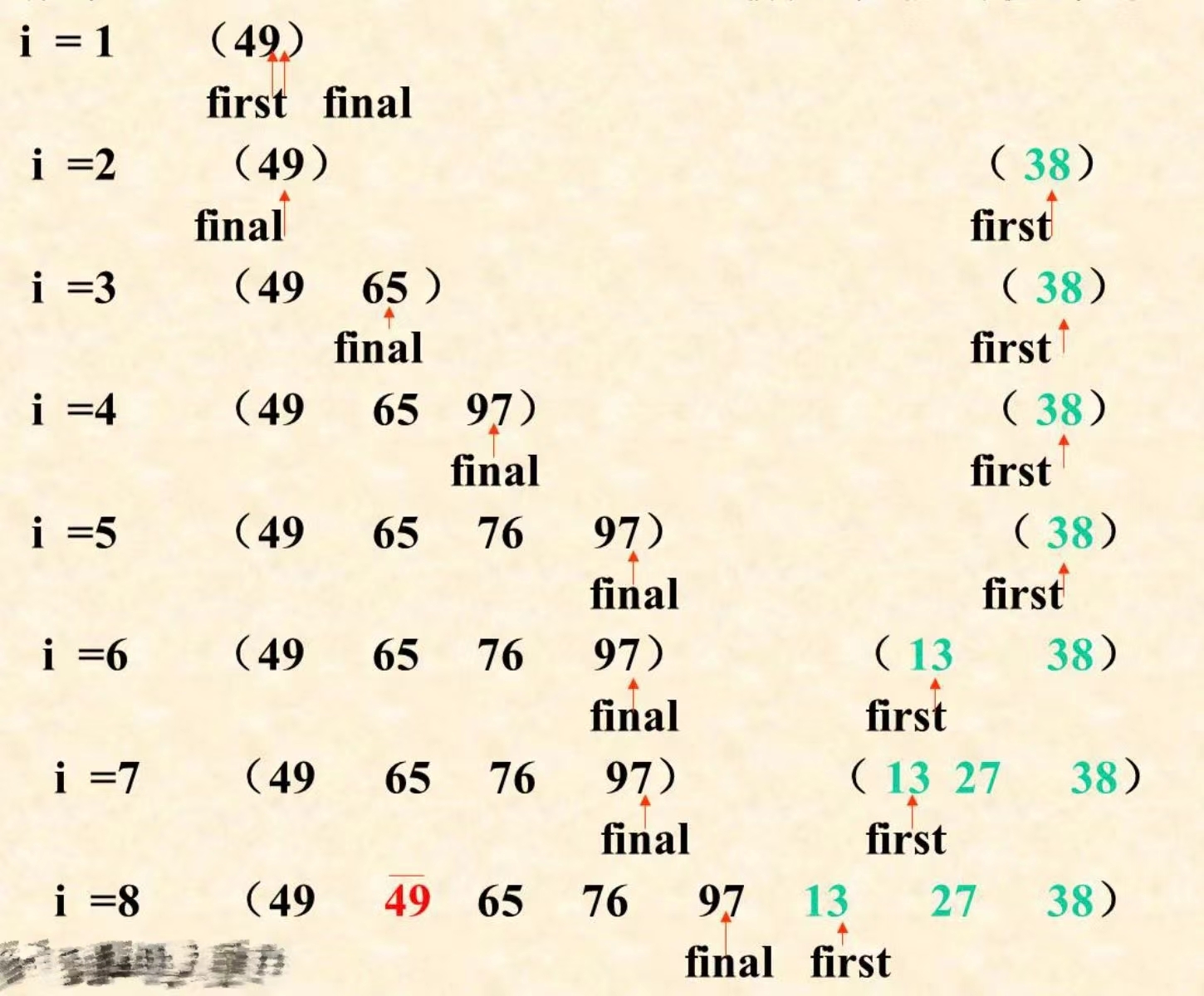

内部排序——插入排序

Instructions d'utilisation de la trousse de développement du module d'acquisition d'accord du testeur mictr01

2022年13个UX/UI/UE最佳创意灵感网站

MicTR01 Tester 振弦采集模块开发套件使用说明

Applet directory structure

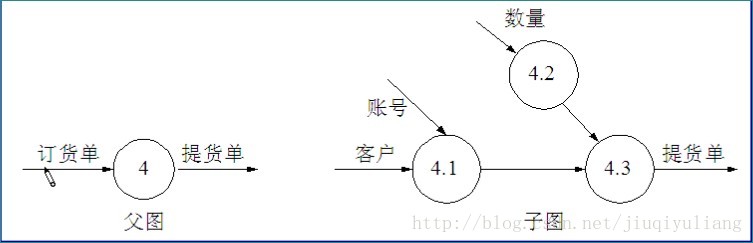

Data flow diagram, data dictionary

UML 状态图

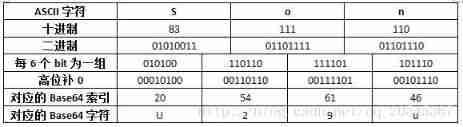

Base64 encoding

随机推荐

Substance Painter筆記:多顯示器且多分辨率顯示器時的設置

ES日志报错赏析-- allow delete

The longest ascending subsequence model acwing 1012 Sister cities

数据流图,数据字典

低代码平台中的数据连接方式(下)

属性关键字OnDelete,Private,ReadOnly,Required

AWS learning notes (III)

一个简单LEGv8处理器的Verilog实现【四】【单周期实现基础知识及模块设计讲解】

Data flow diagram, data dictionary

Es log error appreciation -maximum shards open

Because the employee set the password to "123456", amd stolen 450gb data?

6、Electron无边框窗口和透明窗口 锁定模式 设置窗口图标

Instructions for mictr01 tester vibrating string acquisition module development kit

Nllb-200: meta open source new model, which can translate 200 languages

SAKT方法部分介绍

Cascading update with Oracle trigger

在软件工程领域,搞科研的这十年!

PD虚拟机教程:如何在ParallelsDesktop虚拟机中设置可使用的快捷键?

「2022年7月」WuKong编辑器更版记录

2022PAGC 金帆奖 | 融云荣膺「年度杰出产品技术服务商」