当前位置:网站首页>My colleague didn't understand selenium for half a month, so I figured it out for him in half an hour! Easily showed a wave of operations of climbing Taobao [easy to understand]

My colleague didn't understand selenium for half a month, so I figured it out for him in half an hour! Easily showed a wave of operations of climbing Taobao [easy to understand]

2022-07-05 13:07:00 【Full stack programmer webmaster】

Hello everyone , I meet you again , I'm your friend, Quan Jun .

Because of work needs , Colleagues are just beginning to learn python, Acquire selenium This tool hasn't been understood for half a month , Because this made him bald for half a month , Finally, find me and give him an answer .

So I explained it to him with an example of Taobao crawler , He figured it out in less than an hour . Crawler projects that beginners can understand .

We need to understand some concepts before reptiles start , This reptile will use selenium.

What is? selenium?

selenium It's a web automation testing tool , Can operate the browser automatically . If you need to operate which browser, you need to install the corresponding driver, For example, you need to pass selenium operation chrome, That has to be installed chromedriver, And the version is the same as chrome bring into correspondence with .

When you're done , install selenium:

pip install selenium -i https://pypi.tuna.tsinghua.edu.cn/simpleOne 、 The import module

First, we import the module

from selenium import webdriverWe will use other modules later , I'll let it all out first :

Two 、 Browser initialization

Then there is the initialization of the browser

browser = webdriver.Chrome()You can use many browsers ,android、blackberry、ie wait . Want to use another browser , Just download the corresponding browser driver .

Because I only installed the driver of Google browser , So it uses chrome Google , Drivers can be downloaded by themselves .

chrome Google browser corresponding to driver:

http://npm.taobao.org/mirrors/chromedriver/

3、 ... and 、 Login acquisition page

The first thing to solve is the login problem , When logging in, do not directly enter the account to log in , Because Taobao's anti climbing is particularly serious , If it detects that you are a reptile , You are not allowed to log in , Taobao's measures for logging in are very strict .

So I used another login method , Alipay scan code login , Request to Alipay scan code login page URL .

def loginTB():

browser.get(

'https://auth.alipay.com/login/index.htm?loginScene=7&goto=https%3A%2F%2Fauth.alipay.com%2Flogin%2Ftaobao_trust_login.htm%3Ftarget%3Dhttps%253A%252F%252Flogin.taobao.com%252Fmember%252Falipay_sign_dispatcher.jhtml%253Ftg%253Dhttps%25253A%25252F%25252Fwww.taobao.com%25252F¶ms=VFBMX3JlZGlyZWN0X3VybD1odHRwcyUzQSUyRiUyRnd3dy50YW9iYW8uY29tJTJG')Jump to Alipay scan code login interface .

I set a waiting time here ,180 Seconds later, the search box appears , In fact, I won't wait 180 second , Is a display waiting , As long as the element appears , You won't be waiting .

Then find the search box and enter the keyword search .

# Set display wait Wait for the search box to appear

wait = WebDriverWait(browser, 180)

wait.until(EC.presence_of_element_located((By.ID, 'q')))

# Find the search box , Enter the search keyword and click search

text_input = browser.find_element_by_id('q')

text_input.send_keys(' food ')

btn = browser.find_element_by_xpath('//*[@id="J_TSearchForm"]/div[1]/button')

btn.click()Four 、 Parsing data

After getting the page , Then analyze the data , Climb the required product data to , It's used here lxml Parsing library ,XPath Select child nodes for direct resolution .

5、 ... and 、 Crawl the page

After searching in the search box, the required product page details will appear , But not just a page , Is to constantly crawl to the next page to get more than one page of product information . Here's a dead cycle , It's gone all the way to the product page

def loop_get_data():

page_index = 1

while True:

print("=================== We're grabbing number one {} page ===================".format(page_index))

print(" Current page URL:" + browser.current_url)

# Parsing data

parse_html(browser.page_source)

# Set display wait Wait for the next button

wait = WebDriverWait(browser, 60)

wait.until(EC.presence_of_element_located((By.XPATH, '//a[@class="J_Ajax num icon-tag"]')))

time.sleep(1)

try:

# Through action chain , Scroll to the button element on the next page

write = browser.find_element_by_xpath('//li[@class="item next"]')

ActionChains(browser).move_to_element(write).perform()

except NoSuchElementException as e:

print(" Crawling over , Next page data does not exist !")

print(e)

sys.exit(0)

time.sleep(0.2)

# Click next

a_href = browser.find_element_by_xpath('//li[@class="item next"]')

a_href.click()

page_index += 16、 ... and 、 Crawler complete

The last is the call loginTB(), loop_get_data() These two were written before ,def loop_get_data() stay while Called in the loop , So no more calls are needed .

When the crawler is finished, it is saved to a shop_data.json In the document .

The results of crawling are as follows :

The web pages involved in this crawler can be replaced , Friends need source code , Comment in the comments area :taobao I can post a private letter , Or you can ask me any questions in the process of crawling .

Publisher : Full stack programmer stack length , Reprint please indicate the source :https://javaforall.cn/149590.html Link to the original text :https://javaforall.cn

边栏推荐

- CF:A. The Third Three Number Problem【关于我是位运算垃圾这个事情】

- APICloud Studio3 WiFi真机同步和WiFi真机预览使用说明

- 前缀、中缀、后缀表达式「建议收藏」

- Notion 类笔记软件如何选择?Notion 、FlowUs 、Wolai 对比评测

- 聊聊异步编程的 7 种实现方式

- #从源头解决# 自定义头文件在VS上出现“无法打开源文件“XX.h“的问题

- RHCSA5

- There is no monitoring and no operation and maintenance. The following is the commonly used script monitoring in monitoring

- Realize the addition of all numbers between 1 and number

- The solution of outputting 64 bits from printf format%lld of cross platform (32bit and 64bit)

猜你喜欢

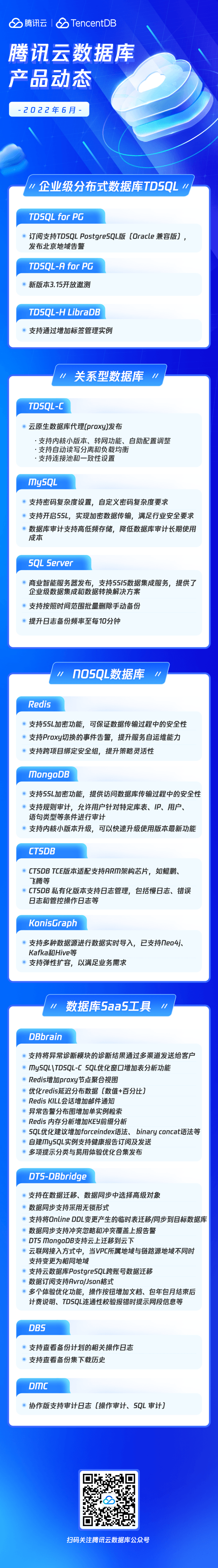

精彩速递|腾讯云数据库6月刊

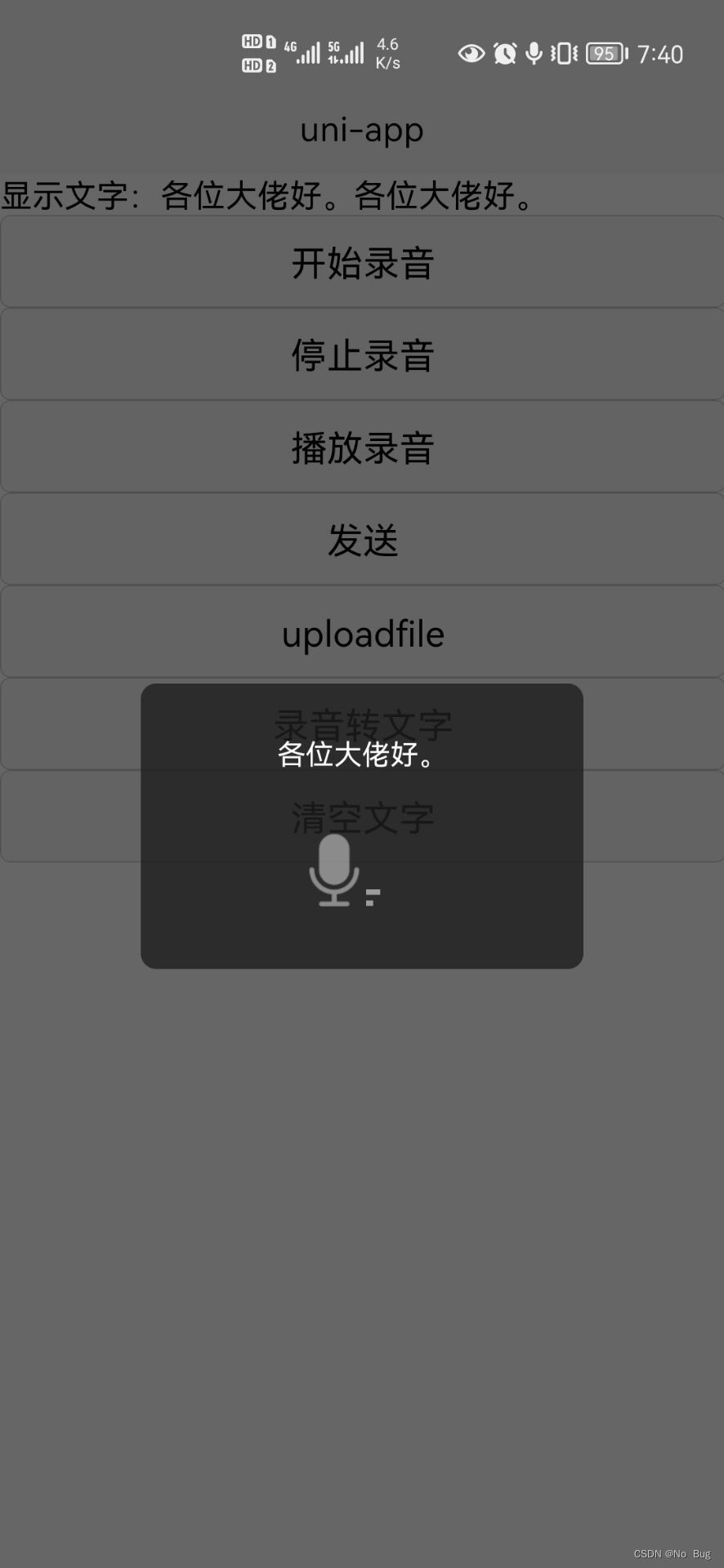

uni-app开发语音识别app,讲究的就是简单快速。

峰会回顾|保旺达-合规和安全双驱动的数据安全整体防护体系

From the perspective of technology and risk control, it is analyzed that wechat Alipay restricts the remote collection of personal collection code

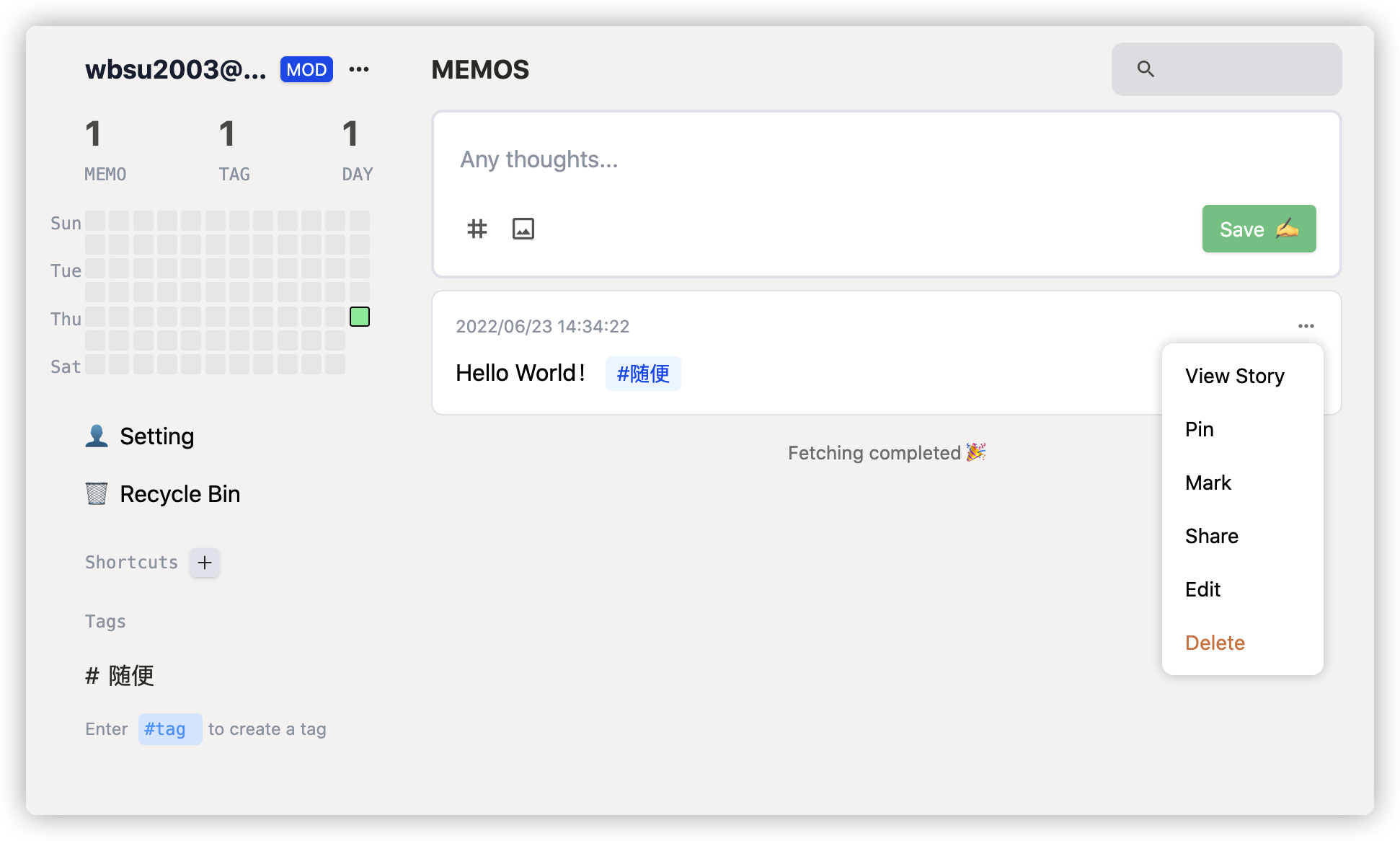

碎片化知识管理工具Memos

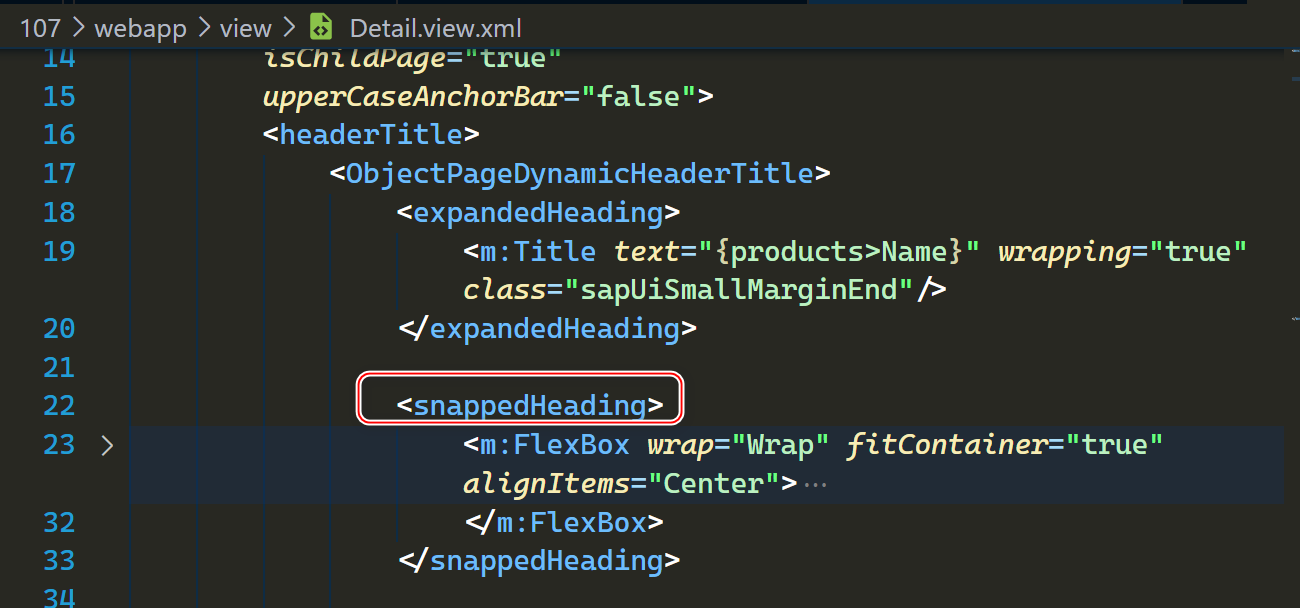

SAP UI5 ObjectPageLayout 控件使用方法分享

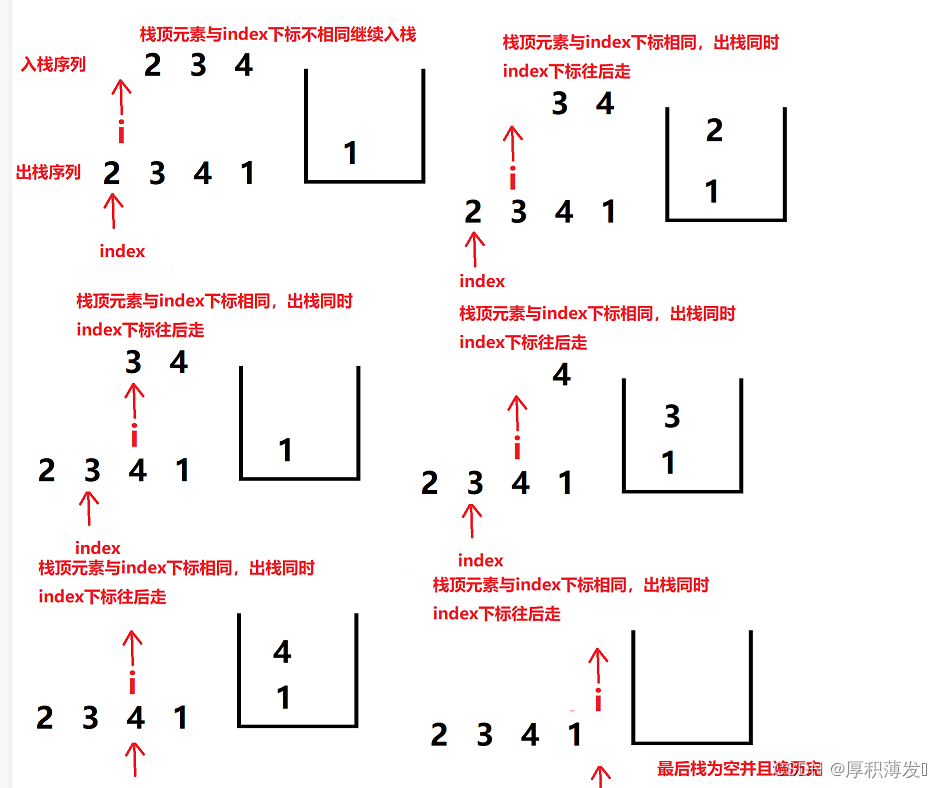

946. 验证栈序列

How can non-technical departments participate in Devops?

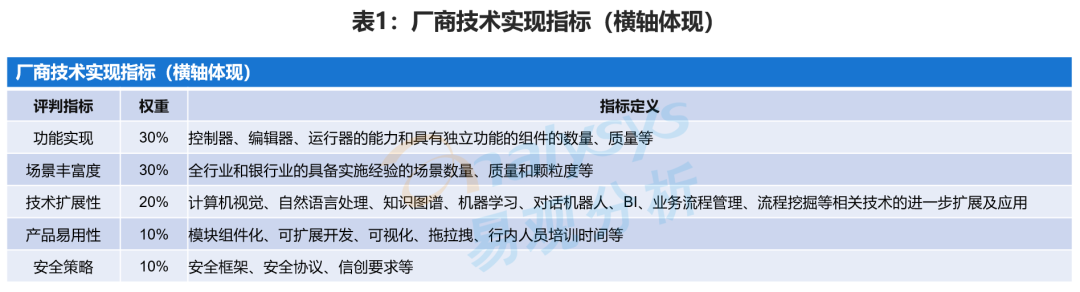

Le rapport de recherche sur l'analyse matricielle de la Force des fournisseurs de RPA dans le secteur bancaire chinois en 2022 a été officiellement lancé.

Taobao short video, why the worse the effect

随机推荐

跨平台(32bit和64bit)的 printf 格式符 %lld 输出64位的解决方式

Principle and performance analysis of lepton lossless compression

MySQL splits strings for conditional queries

SAP SEGW 事物码里的 ABAP 类型和 EDM 类型映射的一个具体例子

Notion 类笔记软件如何选择?Notion 、FlowUs 、Wolai 对比评测

Rocky基础命令3

无密码身份验证如何保障用户隐私安全?

MySQL giant pit: update updates should be judged with caution by affecting the number of rows!!!

Hiengine: comparable to the local cloud native memory database engine

《2022年中國銀行業RPA供應商實力矩陣分析》研究報告正式啟動

自然语言处理系列(一)入门概述

How to realize batch sending when fishing

HiEngine:可媲美本地的云原生内存数据库引擎

[cloud native] event publishing and subscription in Nacos -- observer mode

使用 jMeter 对 SAP Spartacus 进行并发性能测试

Pandora IOT development board learning (HAL Library) - Experiment 7 window watchdog experiment (learning notes)

RHCSA2

解决uni-app配置页面、tabBar无效问题

155. 最小栈

How do e-commerce sellers refund in batches?