Spark Tuning (III): Using Persistence to Avoid Repeated Queries

2022-07-07 16:28:00 【InfoQ】

1. Cause

2. Optimization

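```python
from pyspark.sql import SparkSession

spark = SparkSession.builder.getOrCreate()  # SparkSession entry point (SparkContext has no .sql())

df = spark.sql(sql)         # `sql`: the expensive query string, defined elsewhere
df1 = df.persist()          # mark the result for caching; it is materialized on the first action
df1.createOrReplaceTempView(temp_table_name)  # `temp_table_name`: view name string, defined elsewhere
# Queries on the view reuse the cached data instead of re-running `sql`
subdf = spark.sql(f"select * from {temp_table_name}")
```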

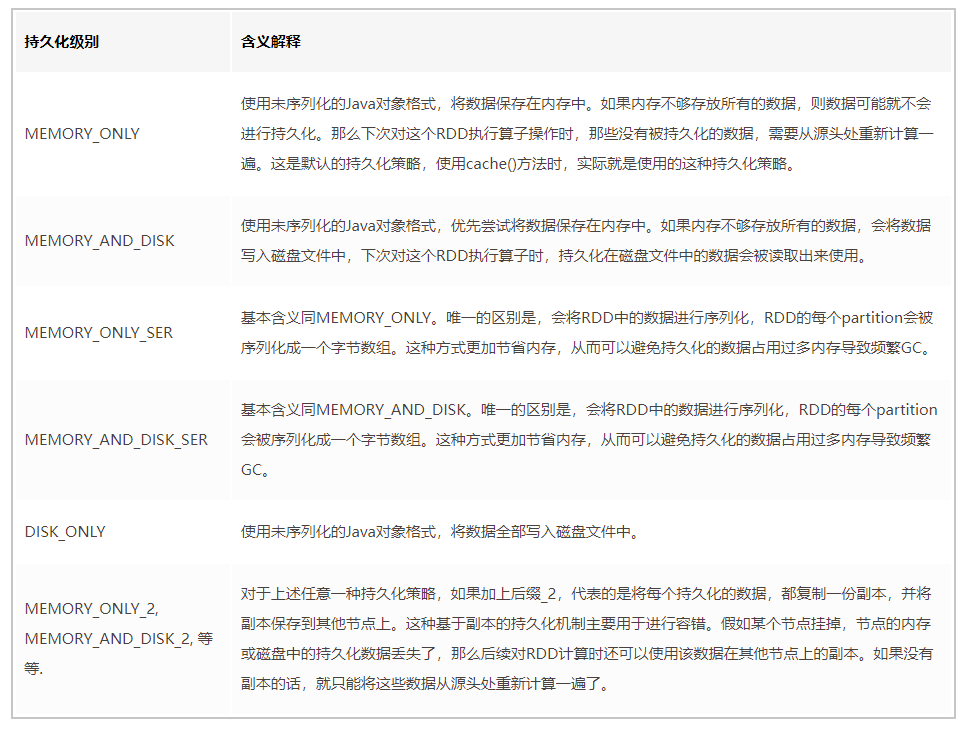
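Why this saves work can be shown with a pure-Python analogy. This is not the Spark API: `LazyTable`, `collect`, and `expensive_query` are hypothetical names for illustration. Like a DataFrame, the toy table is lazy, so every "action" re-runs the underlying query unless the result has been persisted; `persist()` itself only marks the table, and the data is cached on the first action.

```python
class LazyTable:
    """Toy stand-in for a lazily evaluated, optionally persisted table (not Spark's API)."""

    def __init__(self, compute):
        self._compute = compute  # function that produces the rows
        self._persisted = False
        self._cache = None
        self.compute_count = 0  # how many times the source query has run

    def persist(self):
        # As in Spark, persisting is lazy: it only marks the table; nothing runs yet.
        self._persisted = True
        return self

    def collect(self):
        # An "action": return cached rows if available, otherwise run the query.
        if self._cache is not None:
            return self._cache
        self.compute_count += 1
        rows = self._compute()
        if self._persisted:
            self._cache = rows  # materialize on the first action
        return rows


def expensive_query():
    # Hypothetical placeholder for the original `sql` query.
    return [(i, i * i) for i in range(5)]


plain = LazyTable(expensive_query)
plain.collect()
plain.collect()

cached = LazyTable(expensive_query).persist()
cached.collect()
cached.collect()

print(plain.compute_count, cached.compute_count)  # 2 1
```

Without persistence the query runs once per action; with it, the second query on the same data is served from the cache.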

- MEMORY_ONLY, the default level for `RDD.persist()` (`DataFrame.persist()` defaults to a memory-and-disk level instead), gives the best performance, but only if you have enough memory to hold all of the RDD's data with room to spare. The data is stored as deserialized objects, so there is no serialization or deserialization overhead; subsequent operators on the RDD work entirely on in-memory data without reading from disk; and no copy of the data has to be made and sent to other nodes. In production, however, the scenarios where this level can be used directly are limited: if the RDD holds a lot of data (for example, billions of records), using it can make the JVM throw an OutOfMemoryError (OOM).

- If MEMORY_ONLY runs out of memory, try MEMORY_ONLY_SER. This level serializes the RDD data before storing it in memory, so each partition is just a byte array, which greatly reduces the number of objects and the memory footprint. Compared with MEMORY_ONLY, its extra cost is mainly serialization and deserialization. Subsequent operators still work purely in memory, so overall performance remains relatively high. It has the same risk as above, though: if the RDD holds too much data, it can still cause an OOM error.

- If neither pure-memory level works, use MEMORY_AND_DISK_SER rather than MEMORY_AND_DISK. Reaching this step means the RDD holds so much data that it cannot fit entirely in memory. Serialized data is smaller, which saves both memory and disk space. This level still caches as much data in memory as possible and writes to disk only what does not fit.

- DISK_ONLY and the levels with a _2 suffix are generally not recommended. DISK_ONLY reads and writes all data through disk files, which can degrade performance dramatically; sometimes it is faster to recompute the whole RDD. Levels with a _2 suffix store a replica of every partition on another node, and the copying and network transfer add significant overhead, so avoid them unless the job requires high availability.
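The rule of thumb above can be sketched as a small decision function. This is a hypothetical helper, not part of Spark: `Level`, `flags`, `choose_level`, and the boolean inputs (whether the data fits in memory deserialized or serialized) are assumptions for illustration. The flag values mirror the constants of Spark's Scala `StorageLevel`, with the off-heap flag omitted.

```python
from typing import NamedTuple


class Level(NamedTuple):
    use_disk: bool
    use_memory: bool
    deserialized: bool
    replication: int


# Flag values as in Spark's Scala StorageLevel constants (off-heap flag omitted).
BASE_LEVELS = {
    "MEMORY_ONLY": Level(False, True, True, 1),
    "MEMORY_ONLY_SER": Level(False, True, False, 1),
    "MEMORY_AND_DISK": Level(True, True, True, 1),
    "MEMORY_AND_DISK_SER": Level(True, True, False, 1),
    "DISK_ONLY": Level(True, False, False, 1),
}


def flags(name: str) -> Level:
    """Look up a level; a "_2" suffix means each partition is stored on two nodes."""
    if name.endswith("_2"):
        return BASE_LEVELS[name[:-2]]._replace(replication=2)
    return BASE_LEVELS[name]


def choose_level(fits_deserialized: bool, fits_serialized: bool,
                 need_high_availability: bool = False) -> str:
    """Hypothetical helper encoding the rule of thumb above."""
    if fits_deserialized:
        name = "MEMORY_ONLY"          # fastest: no (de)serialization, no disk
    elif fits_serialized:
        name = "MEMORY_ONLY_SER"      # smaller footprint, pays (de)serialization cost
    else:
        name = "MEMORY_AND_DISK_SER"  # spill what does not fit, in compact form
    # DISK_ONLY is never chosen; add a replica only when high availability is required.
    return name + "_2" if need_high_availability else name


print(choose_level(False, True))  # MEMORY_ONLY_SER
print(flags(choose_level(False, False, True)))
# Level(use_disk=True, use_memory=True, deserialized=False, replication=2)
```

In real code you would pass the chosen constant to `persist()`, for example `StorageLevel.MEMORY_AND_DISK_SER` in Scala. Note that PySpark's `StorageLevel` does not expose the `_SER` variants, because data on the Python side is always stored serialized.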

Conclusion

边栏推荐

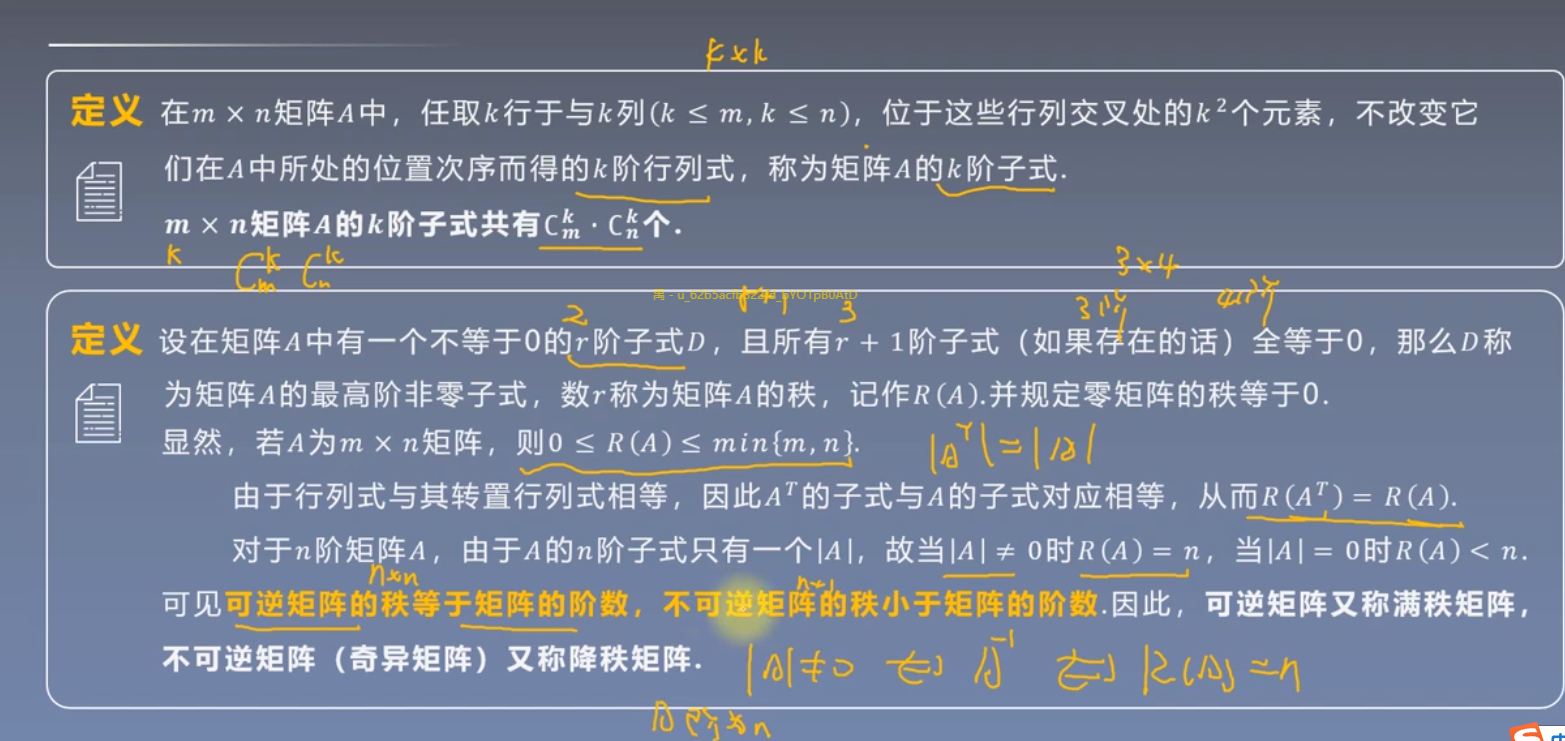

- 深度之眼(七)——矩阵的初等变换(附:数模一些模型的解释)

- 分步式监控平台zabbix

- How does laravel run composer dump autoload without emptying the classmap mapping relationship?

- Usage of config in laravel

- Shandong old age Expo, 2022 China smart elderly care exhibition, smart elderly care and aging technology exhibition

- laravel 是怎么做到运行 composer dump-autoload 不清空 classmap 映射关系的呢?

- You Yuxi, coming!

- 95. (cesium chapter) cesium dynamic monomer-3d building (building)

- Unity3D_ Class fishing project, control the distance between collision walls to adapt to different models

- iptables只允许指定ip地址访问指定端口

猜你喜欢

Unity3D_ Class fishing project, bullet rebound effect is achieved

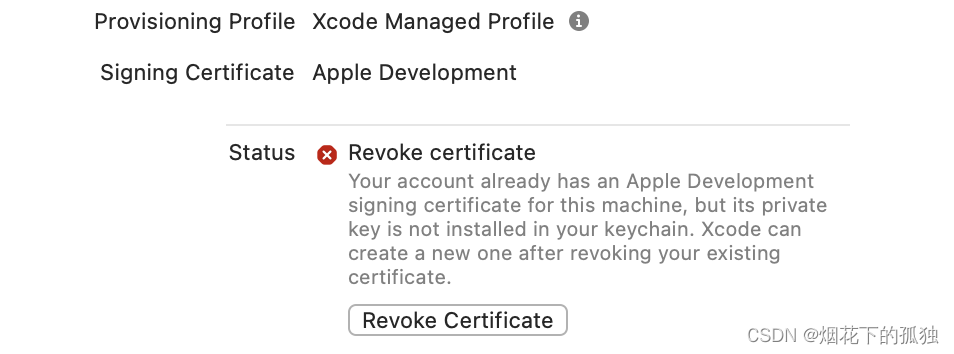

Xcode Revoke certificate

2022 the 4th China (Jinan) International Smart elderly care industry exhibition, Shandong old age Expo

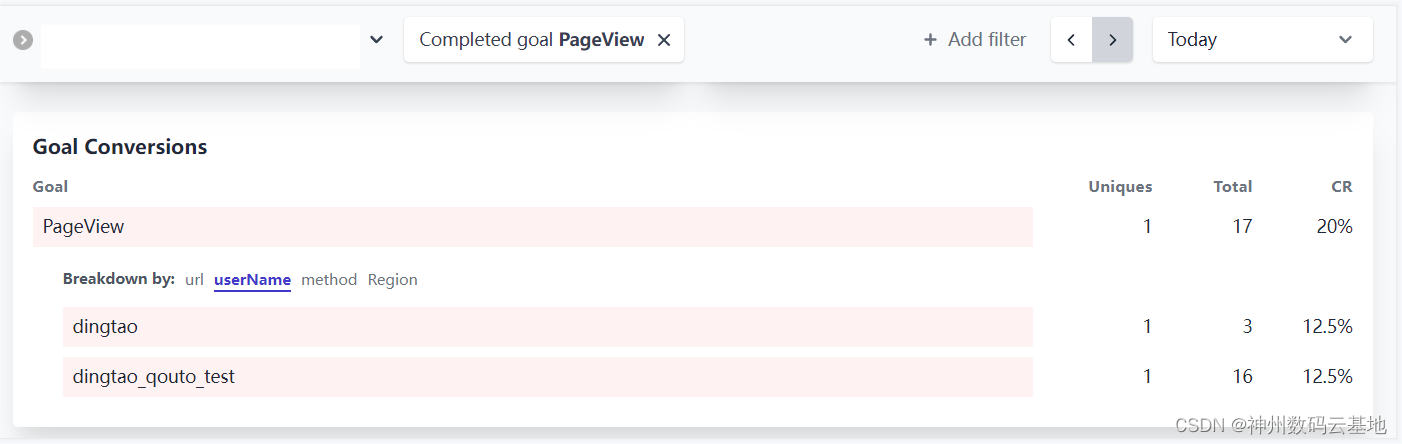

Odoo集成Plausible埋码监控平台

![[vulnhub range] thales:1](/img/fb/721d08697afe9b26c94fede628c4d1.png)

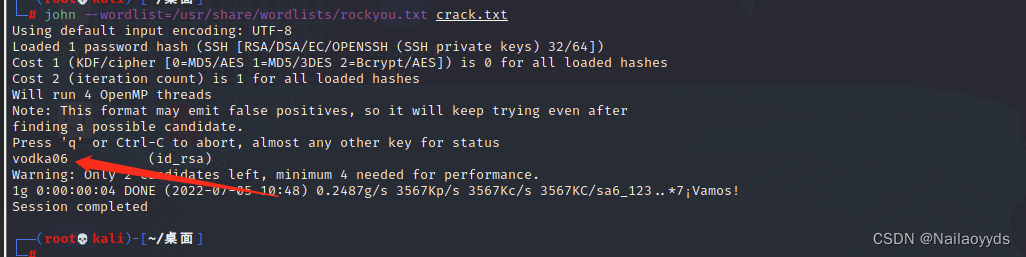

[vulnhub range] thales:1

深度之眼(七)——矩阵的初等变换(附:数模一些模型的解释)

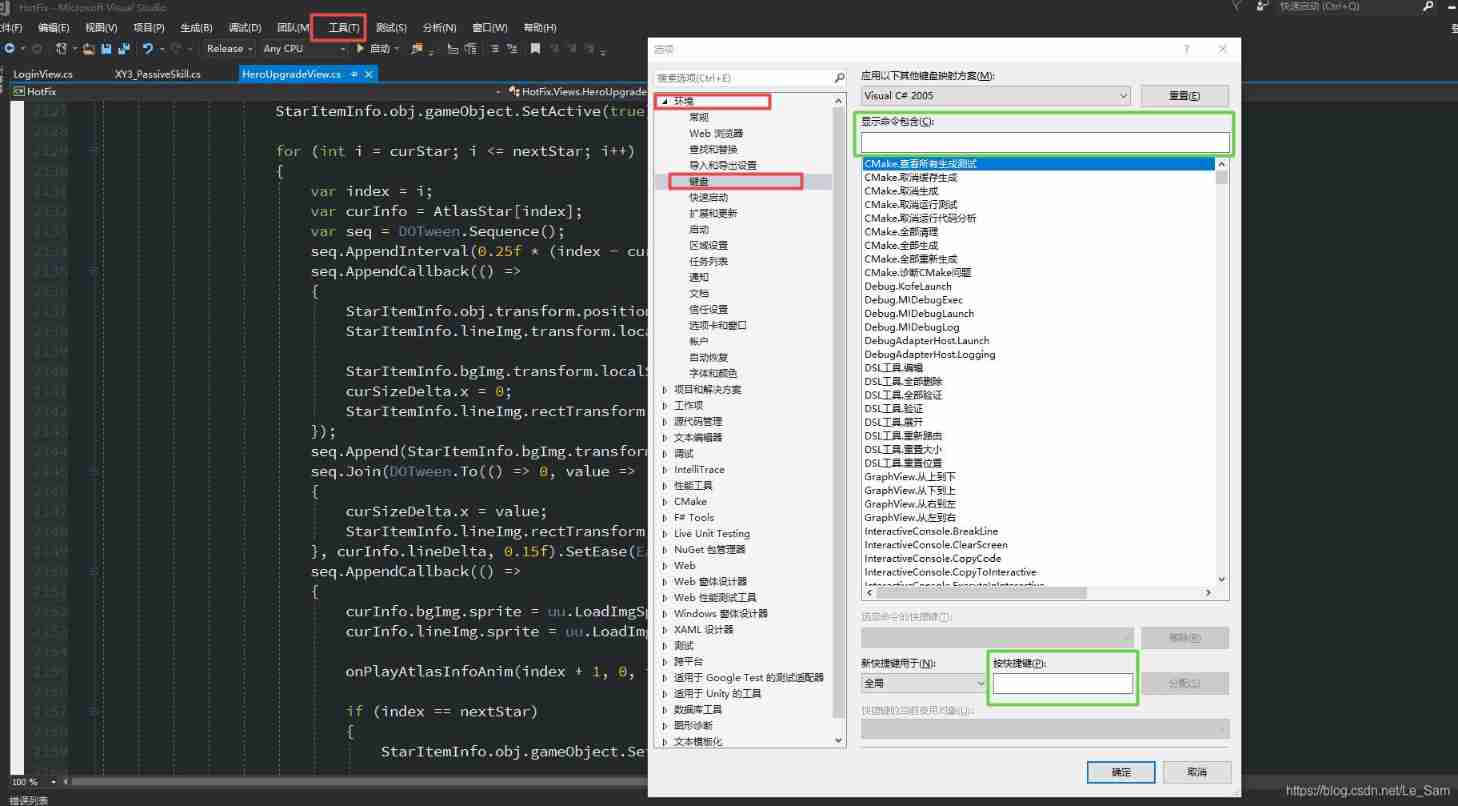

Description of vs common shortcut keys

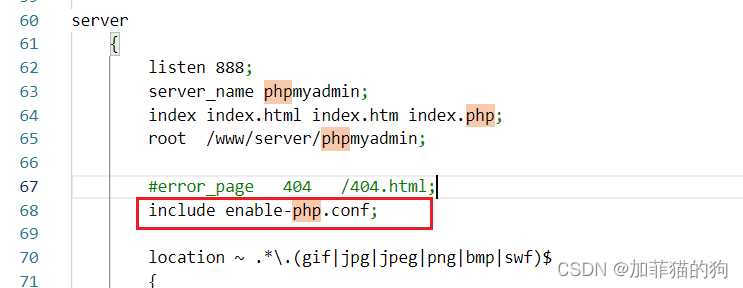

记一次项目的迁移过程

【Vulnhub靶场】THALES:1

Lecturer solicitation order | Apache seatunnel (cultivating) meetup sharing guests are in hot Recruitment!

随机推荐

Step by step monitoring platform ZABBIX

融云斩获 2022 中国信创数字化办公门户卓越产品奖!

Unity3d click events added to 3D objects in the scene

prometheus api删除某个指定job的所有数据

Shader basic UV operations, translation, rotation, scaling

How does laravel run composer dump autoload without emptying the classmap mapping relationship?

2022 the 4th China (Jinan) International Smart elderly care industry exhibition, Shandong old age Expo

Introduction to ThinkPHP URL routing

Laravel5.1 路由 -路由分组

PHP中exit,exit(0),exit(1),exit(‘0’),exit(‘1’),die,return的区别

如何快速检查钢网开口面积比是否符合 IPC7525

U3D_ Infinite Bessel curve

Unity3D_ Class fishing project, bullet rebound effect is achieved

TCP framework___ Unity

MySQL中, 如何查询某一天, 某一月, 某一年的数据

Power of leetcode-231-2

通知Notification使用全解析

Logback logging framework third-party jar package is available for free

Performance comparison of tidb for PostgreSQL and yugabytedb on sysbench

修改配置文件后tidb无法启动