当前位置:网站首页>一文读懂简单查询代价估算

一文读懂简单查询代价估算

2022-07-05 18:33:00 【InfoQ】

查询代价估算——如何选择一条最优的执行路径

- 词法分析:描述词法分析器的*.l文件经Lex工具编译生成lex.yy.c, 再由C编译器生成可执行的词法分析器。基本功能就是将一堆字符串根据设定的保留关键字和非保留关键字,转化成相应的标识符(Tokens)。

- 语法分析:语法规则描述文件*.y经YACC工具编译生成gram.c,再由C编译器生成可执行的语法分析器。基本功能就是将一堆标识符(Tokens)根据设定的语法规则,转化成原始语法树。

- 分析重写:查询分析将原始语法树转换为查询语法树(各种transform);查询重写根据pg_rewrite中的规则改写表和视图,最终得到查询语法树。

- 查询优化:经过逻辑优化和物理优化(生成最优路径)。

- 查询计划生成:将最优的查询路径转化为查询计划。

- 查询执行:通过执行器去执行查询计划生成查询的结果集。

为何需要这些统计信息,有了这些够不够?

- 是不是空数组

- 是不是常量数组

- 是不是唯一无重复

- 是不是有序

- 是不是单调的,等差,等比

- 出现次数最多的数字有哪些

- 数据的分布规律(打点方式描绘数据增长趋势)

如何依据统计信息估算查询代价

- 统计信息只来自部分采样数据,不能精准描述全局特征。

- 统计信息是历史数据,实际数据随时可能在变化。

最简单的表的行数估算(使用relpages和reltuples)

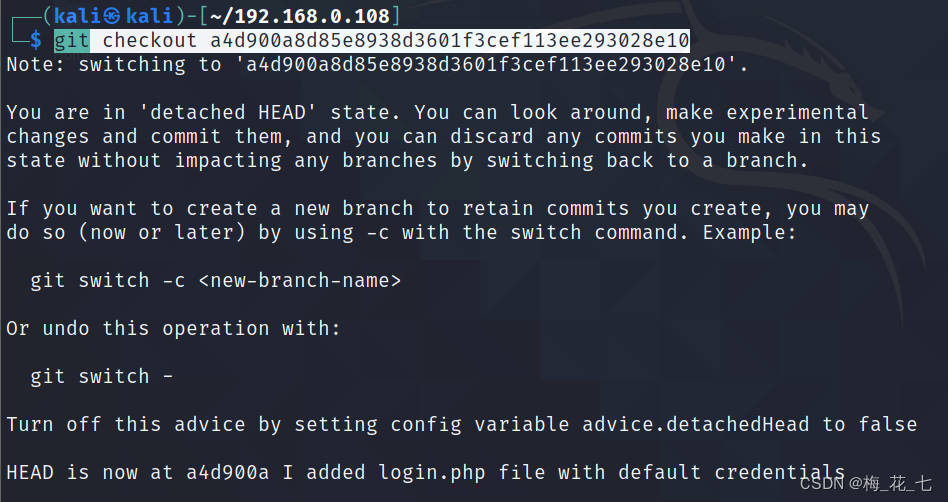

SELECT relpages, reltuples FROM pg_class WHERE relname = 'tenk1'; ---采样估算有358个页面,10000条元组

relpages | reltuples

----------+-----------

358 | 10000

EXPLAIN SELECT * FROM tenk1; ---查询估算该表有10000条元组

QUERY PLAN

-------------------------------------------------------------

Seq Scan on tenk1 (cost=0.00..458.00 rows=10000 width=244)- 先通过表物理文件的大小计算实际页面数actual_pages = file_size / BLOCKSIZE

- 计算页面的元组密度

- a. 如果relpages大于0,密度= reltuples / (double) relpages

- b. 如果relpages为空,density = (BLCKSZ - SizeOfPageHeaderData) / tuple_width, 页面大小 / 元组宽度。

- 估算表元组个数 = 页面元组密度 * 实际页面数 = density * actual_pages

最简单的范围比较(使用直方图)

SELECT histogram_bounds FROM pg_stats WHERE tablename='tenk1' AND attname='unique1';

histogram_bounds

------------------------------------------------------

{0,993,1997,3050,4040,5036,5957,7057,8029,9016,9995}

EXPLAIN SELECT * FROM tenk1 WHERE unique1 < 1000; ---估算小于1000的有1007条

QUERY PLAN

--------------------------------------------------------------------------------

Bitmap Heap Scan on tenk1 (cost=24.06..394.64 rows=1007 width=244)

Recheck Cond: (unique1 < 1000)

-> Bitmap Index Scan on tenk1_unique1 (cost=0.00..23.80 rows=1007 width=0)

Index Cond: (unique1 < 1000)选择率 = (前面桶的总数 + 目标值在当前桶中的范围 / 当前桶的区间范围) / 桶的总数

selectivity = (1 + (1000 - bucket[2].min) / (bucket[2].max - bucket[2].min)) / num_buckets

= (1 + (1000 - 993) / (1997 - 993)) / 10

= 0.100697

估算元组数 = 基表元组数 * 条件选择率

rows = rel_cardinality * selectivity

= 10000 * 0.100697

= 1007 (rounding off)最简单的等值比较(使用MCV)

SELECT null_frac, n_distinct, most_common_vals, most_common_freqs FROM pg_stats WHERE tablename='tenk1' AND attname='stringu1';

null_frac | 0

n_distinct | 676

most_common_vals | {EJAAAA,BBAAAA,CRAAAA,FCAAAA,FEAAAA,GSAAAA,JOAAAA,MCAAAA,NAAAAA,WGAAAA}

most_common_freqs | {0.00333333,0.003,0.003,0.003,0.003,0.003,0.003,0.003,0.003,0.003}

EXPLAIN SELECT * FROM tenk1 WHERE stringu1 = 'CRAAAA'; ---

QUERY PLAN

----------------------------------------------------------

Seq Scan on tenk1 (cost=0.00..483.00 rows=30 width=244)

Filter: (stringu1 = 'CRAAAA'::name)CRAAAA位于MCV中的第3项,占比是0.003

selectivity = mcf[3]

= 0.003

rows = 10000 * 0.003

= 30EXPLAIN SELECT * FROM tenk1 WHERE stringu1 = 'xxx'; ---查找不存在于MCV中的值

QUERY PLAN

----------------------------------------------------------

Seq Scan on tenk1 (cost=0.00..483.00 rows=15 width=244)

Filter: (stringu1 = 'xxx'::name)selectivity = (1 - sum(mvf)) / (num_distinct - num_mcv)

= (1 - (0.00333333 + 0.003 + 0.003 + 0.003 + 0.003 + 0.003 +

0.003 + 0.003 + 0.003 + 0.003)) / (676 - 10)

= 0.0014559

rows = 10000 * 0.0014559

= 15 (rounding off)复杂一点的范围比较(同时使用MCV和直方图)

SELECT null_frac, n_distinct, most_common_vals, most_common_freqs FROM pg_stats WHERE tablename='tenk1' AND attname='stringu1';

null_frac | 0

n_distinct | 676

most_common_vals | {EJAAAA,BBAAAA,CRAAAA,FCAAAA,FEAAAA,GSAAAA,JOAAAA,MCAAAA,NAAAAA,WGAAAA}

most_common_freqs | {0.00333333,0.003,0.003,0.003,0.003,0.003,0.003,0.003,0.003,0.003}

SELECT histogram_bounds FROM pg_stats WHERE tablename='tenk1' AND attname='stringu1';

histogram_bounds

--------------------------------------------------------------------------------

{AAAAAA,CQAAAA,FRAAAA,IBAAAA,KRAAAA,NFAAAA,PSAAAA,SGAAAA,VAAAAA,XLAAAA,ZZAAAA}

EXPLAIN SELECT * FROM tenk1 WHERE stringu1 < 'IAAAAA'; ---查找一个不存在于MCV中的值

QUERY PLAN

------------------------------------------------------------

Seq Scan on tenk1 (cost=0.00..483.00 rows=3077 width=244)

Filter: (stringu1 < 'IAAAAA'::name)selectivity = sum(relevant mvfs)

= 0.00333333 + 0.003 + 0.003 + 0.003 + 0.003 + 0.003

= 0.01833333selectivity = mcv_selectivity + histogram_selectivity * histogram_fraction

= 0.01833333 + ((2 + ('IAAAAA'-'FRAAAA')/('IBAAAA'-'FRAAAA')) / 10) * (1 - sum(mvfs))

= 0.01833333 + 0.298387 * 0.96966667

= 0.307669

rows = 10000 * 0.307669

= 3077 (rounding off)复制多条件联合查询的例子

EXPLAIN SELECT * FROM tenk1 WHERE unique1 < 1000 AND stringu1 = 'xxx';

QUERY PLAN

--------------------------------------------------------------------------------

Bitmap Heap Scan on tenk1 (cost=23.80..396.91 rows=1 width=244)

Recheck Cond: (unique1 < 1000)

Filter: (stringu1 = 'xxx'::name)

-> Bitmap Index Scan on tenk1_unique1 (cost=0.00..23.80 rows=1007 width=0)

Index Cond: (unique1 < 1000)selectivity = selectivity(unique1 < 1000) * selectivity(stringu1 = 'xxx')

= 0.100697 * 0.0014559

= 0.0001466

rows = 10000 * 0.0001466

= 1 (rounding off)一个使用JOIN的例子

EXPLAIN SELECT * FROM tenk1 t1, tenk2 t2

WHERE t1.unique1 < 50 AND t1.unique2 = t2.unique2;

QUERY PLAN

--------------------------------------------------------------------------------------

Nested Loop (cost=4.64..456.23 rows=50 width=488)

-> Bitmap Heap Scan on tenk1 t1 (cost=4.64..142.17 rows=50 width=244)

Recheck Cond: (unique1 < 50)

-> Bitmap Index Scan on tenk1_unique1 (cost=0.00..4.63 rows=50 width=0)

Index Cond: (unique1 < 50)

-> Index Scan using tenk2_unique2 on tenk2 t2 (cost=0.00..6.27 rows=1 width=244)

Index Cond: (unique2 = t1.unique2)selectivity = (0 + (50 - bucket[1].min)/(bucket[1].max - bucket[1].min))/num_buckets

= (0 + (50 - 0)/(993 - 0))/10

= 0.005035

rows = 10000 * 0.005035

= 50 (rounding off)SELECT tablename, null_frac,n_distinct, most_common_vals FROM pg_stats

WHERE tablename IN ('tenk1', 'tenk2') AND attname='unique2';

tablename | null_frac | n_distinct | most_common_vals

-----------+-----------+------------+------------------

tenk1 | 0 | -1 |

tenk2 | 0 | -1 |【*】选择率 = 两边的非空概率相乘,再除以最大JOIN条数。

selectivity = (1 - null_frac1) * (1 - null_frac2) * min(1/num_distinct1, 1/num_distinct2)

= (1 - 0) * (1 - 0) / max(10000, 10000)

= 0.0001

【*】JOIN的函数估算就是两边输入数量的笛卡尔积,再乘以选择率

rows = (outer_cardinality * inner_cardinality) * selectivity

= (50 * 10000) * 0.0001

= 50更详细的信息

边栏推荐

- 如何写出好代码 - 防御式编程

- websocket 工具的使用

- Case sharing | integrated construction of data operation and maintenance in the financial industry

- Notes on common management commands of openshift

- Multithreading (I) processes and threads

- 兄弟组件进行传值(显示有先后顺序)

- Le cours d'apprentissage de la machine 2022 de l'équipe Wunda arrive.

- Find in MySQL_ in_ Detailed explanation of set() function usage

- 【在优麒麟上使用Electron开发桌面应】

- [HCIA cloud] [1] definition of cloud computing, what is cloud computing, architecture and technical description of cloud computing, Huawei cloud computing products, and description of Huawei memory DD

猜你喜欢

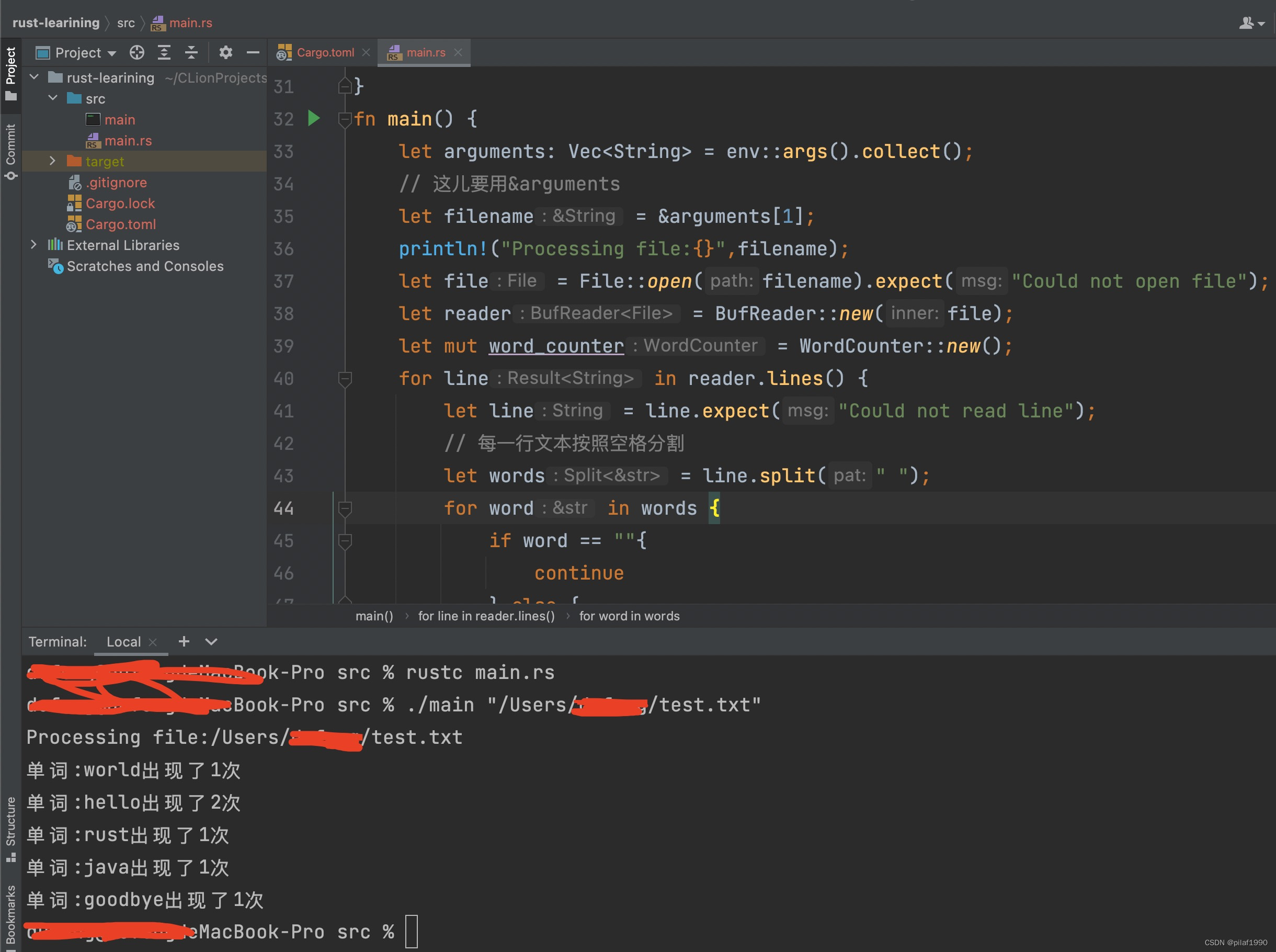

rust统计文件中单词出现的次数

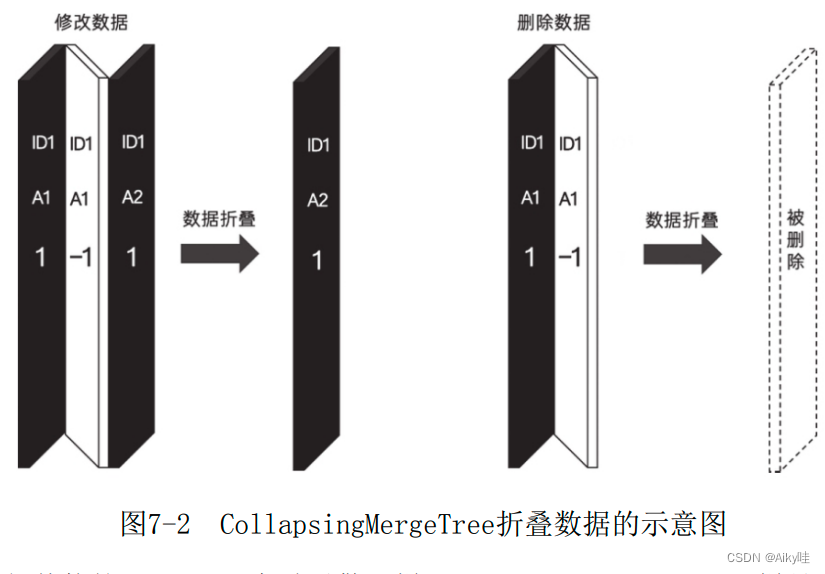

Reading notes of Clickhouse principle analysis and Application Practice (5)

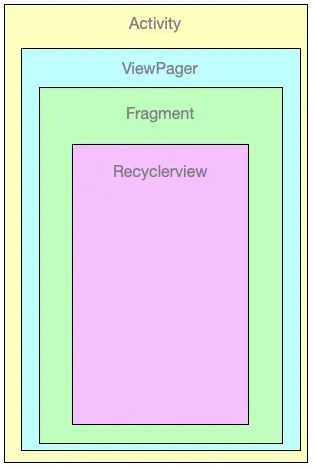

ViewPager + RecyclerView的内存泄漏

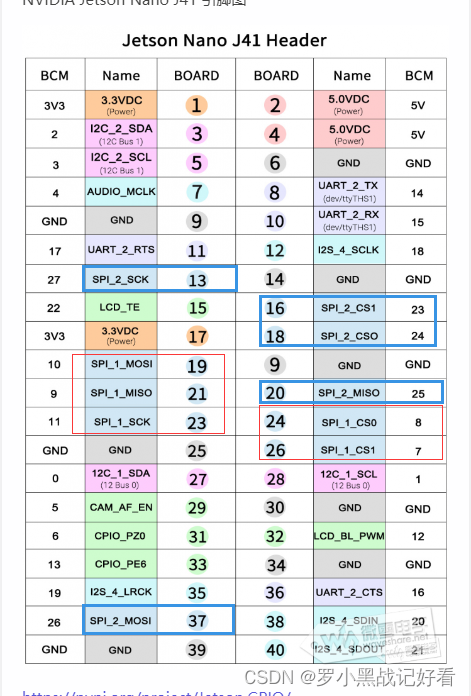

Can communication of nano

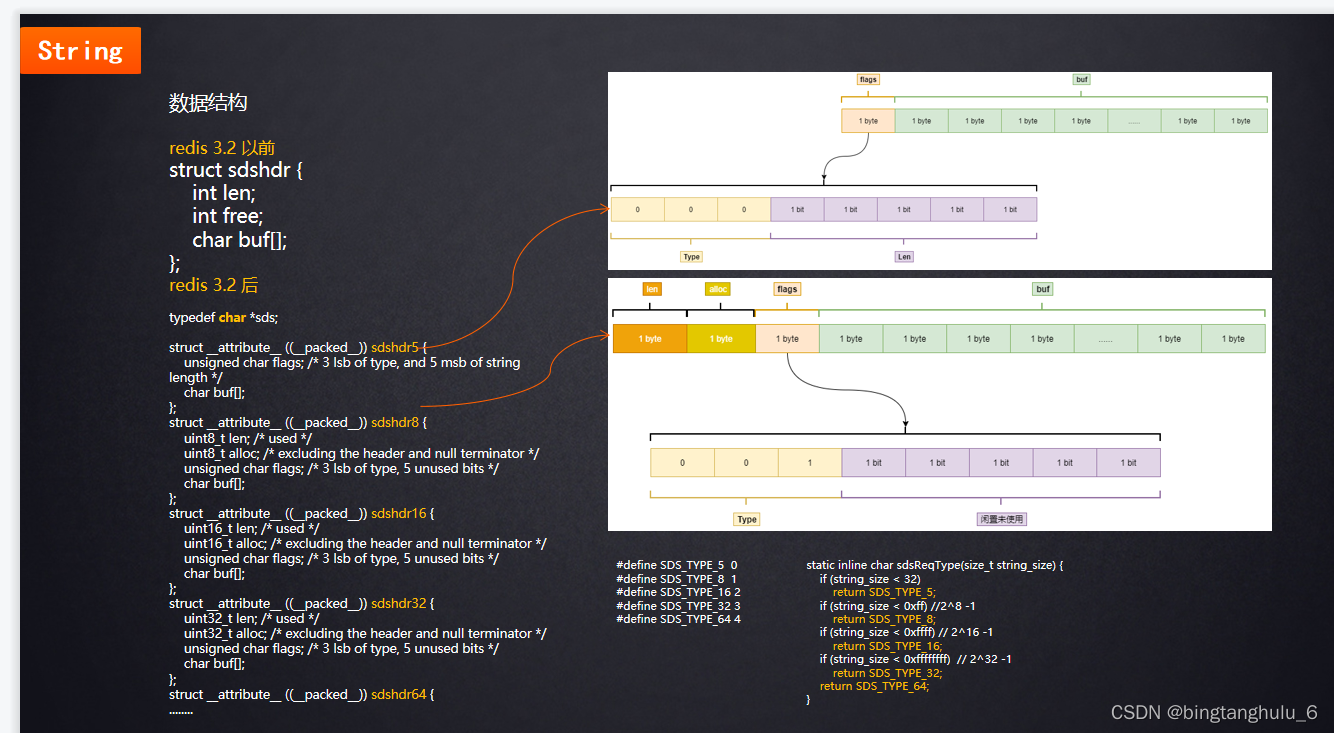

深入底层C源码讲透Redis核心设计原理

Solutions contents have differences only in line separators

Wu Enda team 2022 machine learning course, coming

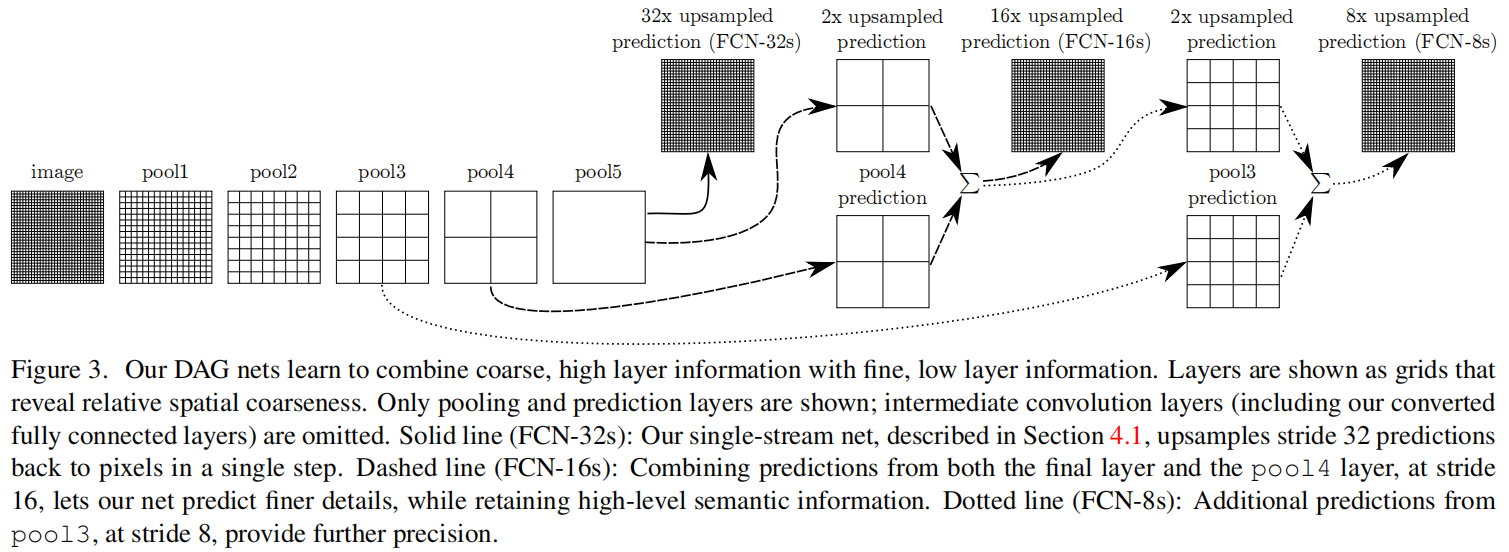

FCN: Fully Convolutional Networks for Semantic Segmentation

Vulnhub's darkhole_ two

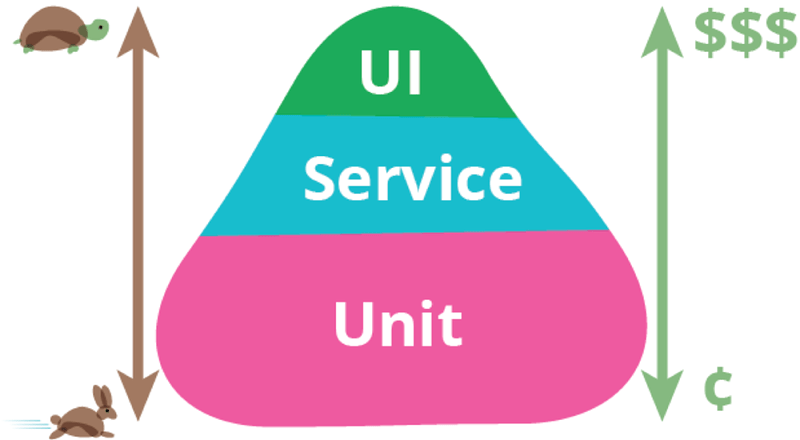

技术分享 | 接口测试价值与体系

随机推荐

案例分享|金融业数据运营运维一体化建设

Image classification, just look at me!

JDBC reads a large amount of data, resulting in memory overflow

sample_rate(采樣率),sample(采樣),duration(時長)是什麼關系

[utiliser Electron pour développer le Bureau sur youkirin devrait]

分享:中兴 远航 30 pro root 解锁BL magisk ZTE 7532N 8040N 9041N 刷机 刷面具原厂刷机包 root方法下载

Sibling components carry out value transfer (there is a sequence displayed)

第十一届中国云计算标准和应用大会 | 华云数据成为全国信标委云计算标准工作组云迁移专题组副组长单位副组长单位

SAP feature description

sample_ What is the relationship between rate, sample and duration

【HCIA-cloud】【1】云计算的定义、什么是云计算、云计算的架构与技术说明、华为云计算产品、华为内存DDR配置工具说明

【在优麒麟上使用Electron开发桌面应】

About Statistical Power(统计功效)

The 10th global Cloud Computing Conference | Huayun data won the "special contribution award for the 10th anniversary of 2013-2022"

The easycvr platform reports an error "ID cannot be empty" through the interface editing channel. What is the reason?

IDEA配置npm启动

[paddlepaddle] paddedetection face recognition custom data set

U-Net: Convolutional Networks for Biomedical Images Segmentation

Matlab built-in function how different colors, matlab subsection function different colors drawing

Electron installation problems