
Feature scaling normalization

2022-07-04 22:44:00 【Melody2050】

- Feature Scaling (How it really works?) Explained !!

- How To Calculate the Mean and Standard Deviation — Normalizing Datasets in Pytorch

- Normalization and Standardization

Purpose of feature scaling

For most machine learning and optimization algorithms, scaling the feature values to the same range improves model performance.

For example:

(a) Suppose there are two features: the first takes values from 1 to 10, and the second takes values from 1 to 10000. If the cost function is the squared error and it is minimized with gradient descent, the optimization will be dominated by the second feature, because its value range is much larger.

(b) The k-nearest neighbors algorithm uses Euclidean distance, so it is also dominated by the second feature. Decision trees, random forests, and XGBoost, by contrast, are barely affected by feature scaling, because they split on thresholds within one feature at a time.
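The distance effect in (b) can be sketched in a few lines of plain Python. The three sample points and the helper names `euclidean` and `min_max` are made up for illustration. The feature ranges (1 to 10 and 1 to 10000) follow the example above.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equal-length points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def min_max(point, lows, highs):
    """Rescale each coordinate to [0, 1] using assumed per-feature bounds."""
    return tuple((v - lo) / (hi - lo) for v, lo, hi in zip(point, lows, highs))

# Hypothetical samples: (feature 1 in [1, 10], feature 2 in [1, 10000])
query = (2.0, 5000.0)
same_f1 = (2.0, 5300.0)  # identical in feature 1, 3% of the range away in feature 2
far_f1 = (9.0, 5010.0)   # almost the whole range away in feature 1

# On raw values, feature 2 decides which point counts as the neighbor.
raw_same = euclidean(query, same_f1)
raw_far = euclidean(query, far_f1)

# After scaling both features to [0, 1], each feature contributes comparably.
lows, highs = (1.0, 1.0), (10.0, 10000.0)
q, s, f = (min_max(p, lows, highs) for p in (query, same_f1, far_f1))
scaled_same = euclidean(q, s)
scaled_far = euclidean(q, f)

print(raw_same > raw_far)        # raw: far_f1 looks closer
print(scaled_same < scaled_far)  # scaled: same_f1 is closer
```

On the raw values the nearest neighbor is the point that differs strongly in feature 1. After scaling, the ordering flips.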

Common feature scaling methods

- Standardization. Subtract the mean, then divide by the standard deviation.

- Normalization. Use the min and max to rescale the values to [0, 1].
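The two methods above can be sketched with the Python standard library. The function names `standardize` and `min_max_scale` and the sample data are illustrative. The sketch assumes the population standard deviation and a feature that is not constant, since a constant feature would cause a division by zero.

```python
import statistics

def standardize(values):
    """Subtract the mean, then divide by the (population) standard deviation."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)  # assumption: population std, not sample std
    return [(v - mean) / std for v in values]

def min_max_scale(values):
    """Rescale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]

data = [1.0, 2.0, 3.0, 4.0, 5.0]  # made-up feature column

z = standardize(data)
m = min_max_scale(data)

print(z)  # centered on 0 with unit standard deviation
print(m)  # [0.0, 0.25, 0.5, 0.75, 1.0]
```

In practice the mean, standard deviation, min, and max are usually computed on the training set only and then reused to transform validation and test data.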

边栏推荐

- MD5 tool class

- PMO: compare the sample efficiency of 25 molecular optimization methods

- 攻防世界 MISC 进阶区 Erik-Baleog-and-Olaf

- Recommendation of mobile app for making barcode

- Logo Camp d'entraînement section 3 techniques créatives initiales

- Alibaba launched a new brand "Lingyang" and is committed to becoming a "digital leader"

- Now MySQL cdc2.1 is parsing the datetime class with a value of 0000-00-00 00:00:00

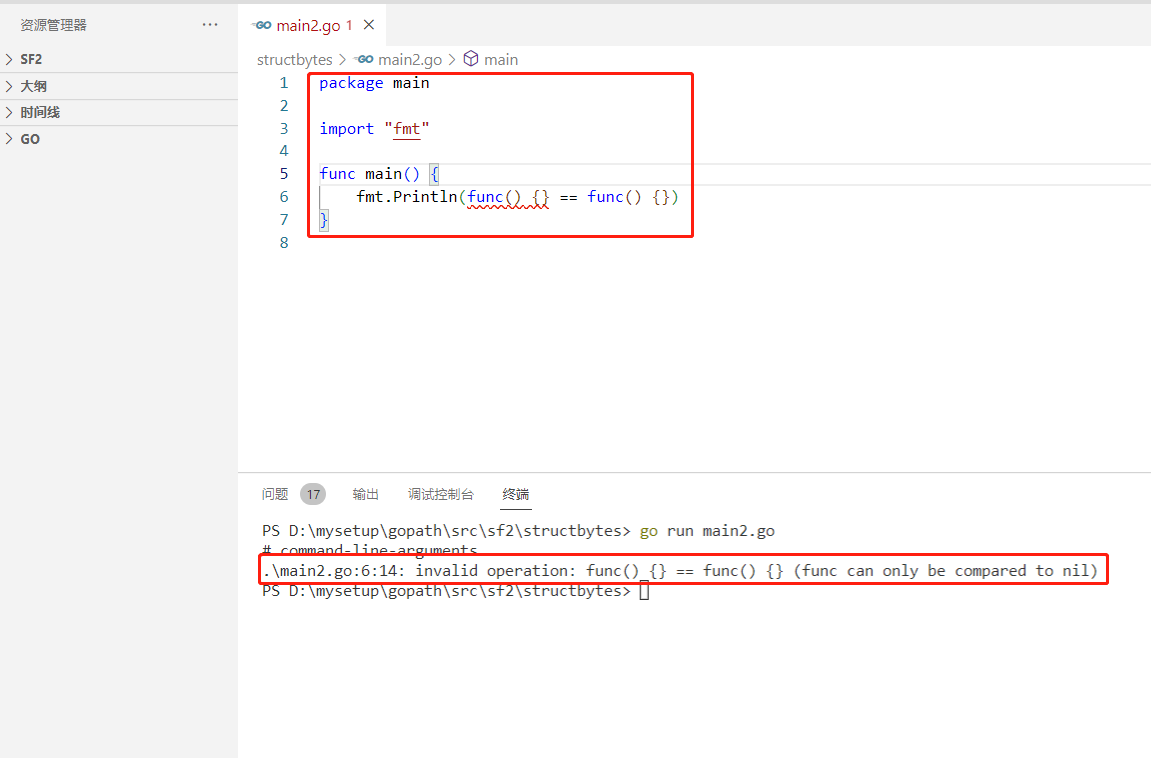

- 2022-07-04:以下go语言代码输出什么?A:true;B:false;C:编译错误。 package main import “fmt“ func main() { fmt.Pri

- Practice and principle of PostgreSQL join

- 高中物理:直线运动

猜你喜欢

都说软件测试很简单有手就行,但为何仍有这么多劝退的?

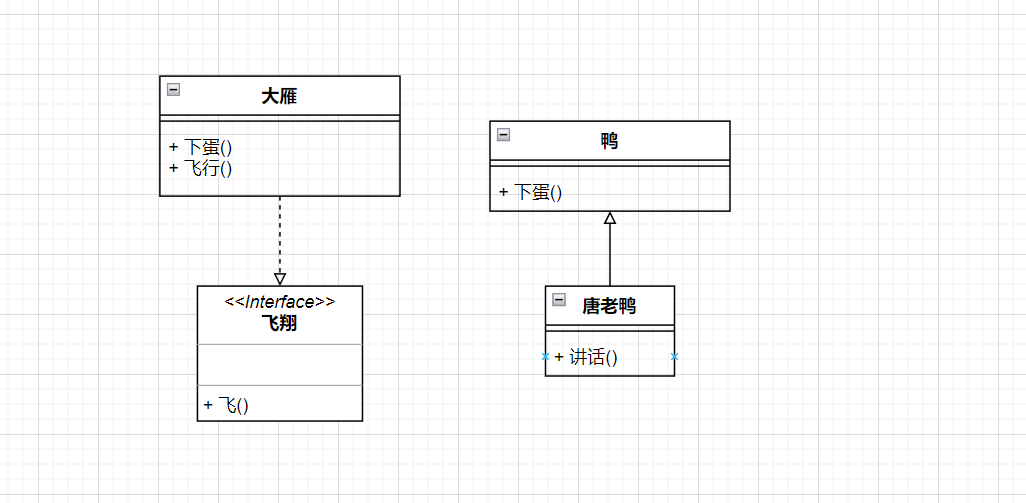

UML图记忆技巧

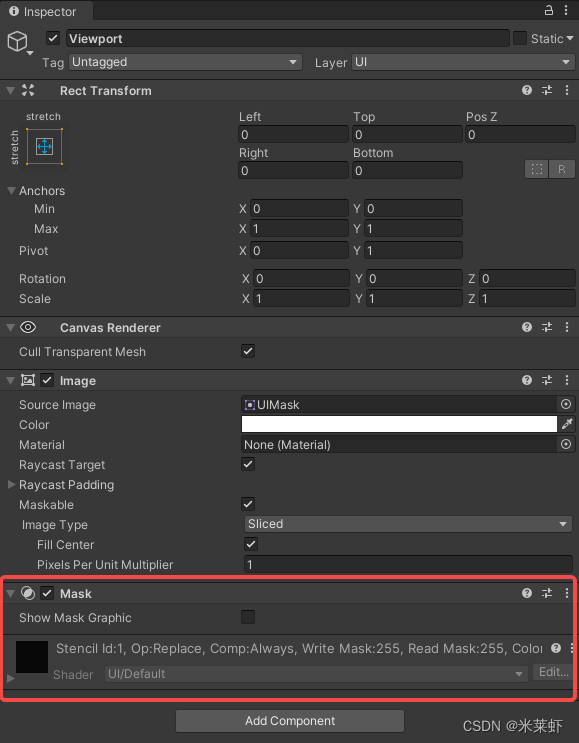

Unity修仙手游 | lua动态滑动功能(3种源码具体实现)

NFT Insider #64:电商巨头eBay提交NFT相关商标申请,毕马威将在Web3和元宇宙中投入3000万美元

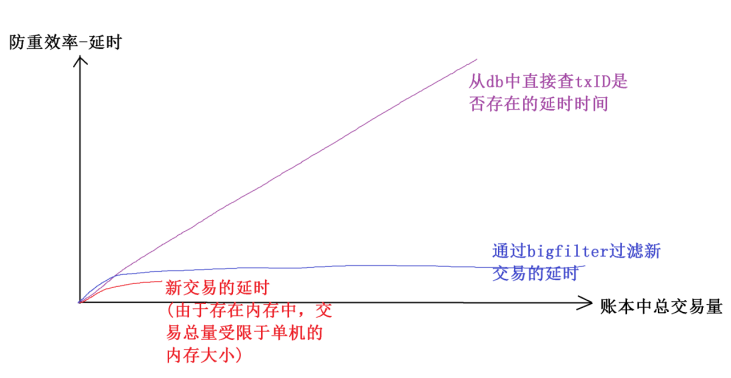

Introduction and application of bigfilter global transaction anti duplication component

业务太忙,真的是没时间搞自动化理由吗?

30余家机构联合发起数字藏品行业倡议,未来会如何前进?

2022-07-04:以下go语言代码输出什么?A:true;B:false;C:编译错误。 package main import “fmt“ func main() { fmt.Pri

Wake up day, how do I step by step towards the road of software testing

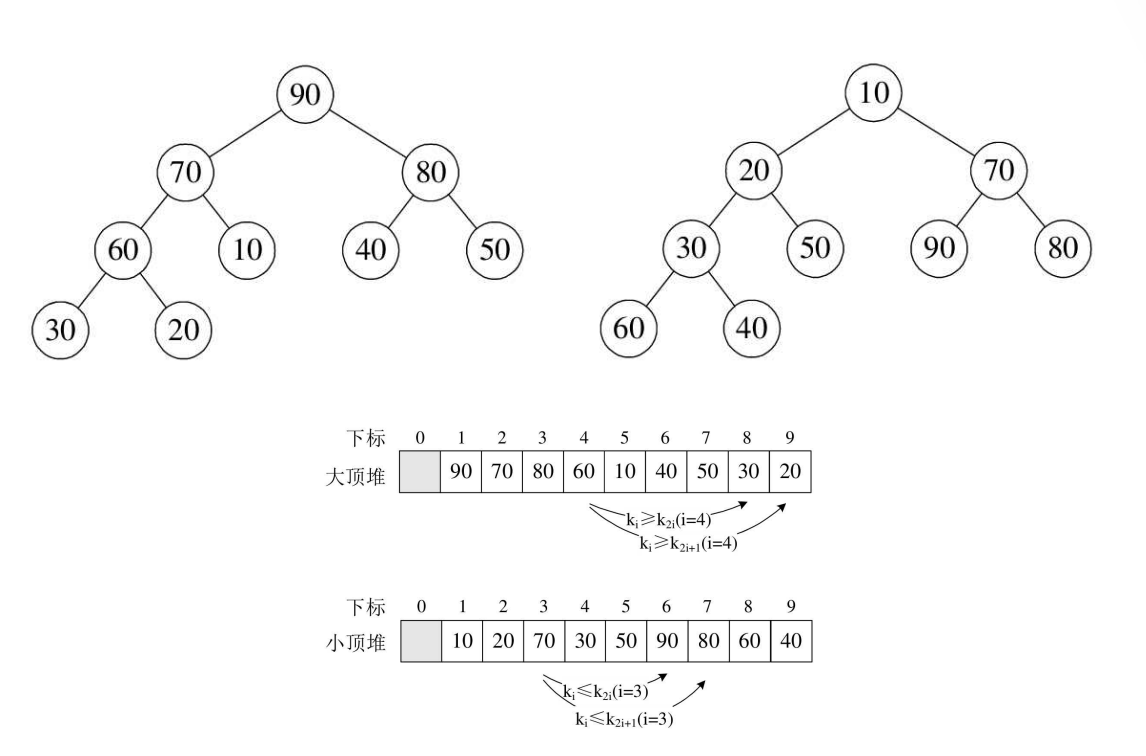

Detailed explanation of heap sort code

随机推荐

国产数据库乱象

MySQL Architecture - user rights and management

攻防世界 MISC 進階區 Erik-Baleog-and-Olaf

Solana chain application crema was shut down due to hacker attacks

Logo special training camp section II collocation relationship between words and graphics

[acwing] solution of the 58th weekly match

MySQL storage data encryption

[Yugong series] go teaching course 003-ide installation and basic use in July 2022

关于栈区、堆区、全局区、文字常量区、程序代码区

Close system call analysis - Performance Optimization

串口数据帧

攻防世界 MISC 进阶 glance-50

Prosperity is exhausted, things are right and people are wrong: where should personal webmasters go

How to reset the password of MySQL root account

Logo special training camp section 1 Identification logo and logo design ideas

Test will: bug classification and promotion solution

LOGO特训营 第二节 文字与图形的搭配关系

Logo Camp d'entraînement section 3 techniques créatives initiales

With this PDF, we finally got offers from eight major manufacturers, including Alibaba, bytek and Baidu

Attack and defense world misc advanced grace-50