"Dive into Deep Learning" Chapter 2: Preliminaries, 2.5 Automatic Differentiation: Notes and Exercise Answers


2022-07-06 02:31:00 【coder_sure】


2.5. Automatic differentiation

Author's GitHub: github link

Exercises

- Prove that the transpose of the transpose of a matrix $\mathbf{A}$ is $\mathbf{A}$, i.e., $(\mathbf{A}^\top)^\top = \mathbf{A}$.

- Given two matrices $\mathbf{A}$ and $\mathbf{B}$, prove that the sum of their transposes equals the transpose of their sum, i.e., $\mathbf{A}^\top + \mathbf{B}^\top = (\mathbf{A} + \mathbf{B})^\top$.

- Given an arbitrary square matrix $\mathbf{A}$, is $\mathbf{A} + \mathbf{A}^\top$ always symmetric? Why?

- We defined a tensor `X` of shape $(2, 3, 4)$ in this section. What is the output of `len(X)`?

- For a tensor `X` of arbitrary shape, does `len(X)` always correspond to the length of a particular axis of `X`? Which axis is it?

- Run `A/A.sum(axis=1)` and see what happens. Can you analyze the reason?

- Consider a tensor of shape $(2, 3, 4)$. What are the shapes of the summation outputs along axes 0, 1, and 2?

- Feed a tensor with 3 or more axes to the `linalg.norm` function and observe its output. What does this function compute for tensors of arbitrary shape?

Reference answers to the exercises

- Why is computing the second derivative more expensive than computing the first derivative?
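A minimal PyTorch sketch of where the extra work comes from: to obtain a second derivative, the first backward pass must itself be recorded as a graph (`create_graph=True` in `torch.autograd.grad`) and then differentiated again. The function $x^3$ and the point $x = 3$ are arbitrary choices for illustration.

```python
import torch

# f(x) = x**3, so f'(x) = 3x**2 and f''(x) = 6x; at x = 3 these are 27 and 18.
x = torch.tensor(3.0, requires_grad=True)
y = x ** 3

# First derivative. create_graph=True tells autograd to record the backward
# computation as a new graph so that it can be differentiated again.
(dy_dx,) = torch.autograd.grad(y, x, create_graph=True)

# Second derivative: a further backward pass through the recorded
# first-derivative graph.
(d2y_dx2,) = torch.autograd.grad(dy_dx, x)

print(dy_dx.item(), d2y_dx2.item())  # 27.0 18.0
```

The first derivative costs one forward and one backward pass; the second derivative needs those plus the bookkeeping for recording the backward pass and one more backward pass on top of it.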

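The second derivative is computed on top of the first derivative: autograd first has to compute the first derivative, recording that computation as a graph of its own, and then backpropagate through this new graph. All the work for the first derivative is therefore part of the work for the second derivative, so the second derivative always costs more.

- After running the backpropagation function, immediately run it again and see what happens.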
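A small experiment for this question, assuming the section's example $y = 2\mathbf{x}^\top\mathbf{x}$ with `x = torch.arange(4.0)`. The `second_call_failed` flag is a name introduced here only so the outcome can be checked.

```python
import torch

x = torch.arange(4.0, requires_grad=True)
y = 2 * torch.dot(x, x)  # y = 2 * x^T x; its gradient is 4x

y.backward()   # first backward pass; saved intermediate tensors are freed afterwards
print(x.grad)  # 4x, i.e. [0, 4, 8, 12]

second_call_failed = False
try:
    y.backward()  # second pass through the already-freed graph
except RuntimeError as err:
    second_call_failed = True
    print("RuntimeError:", err)

# Rebuild the graph and keep it alive on the first call.
x.grad.zero_()
y = 2 * torch.dot(x, x)
y.backward(retain_graph=True)
y.backward()   # now succeeds; the two gradients accumulate in x.grad
print(x.grad)  # 8x, i.e. [0, 8, 16, 24]
```

Zeroing `x.grad` before the second experiment matters, because PyTorch adds new gradients to whatever is already stored in `.grad`.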

An error is raised. In PyTorch, the forward pass builds a computational graph, and the graph (including the intermediate results saved for backpropagation) is released once backpropagation finishes. Because those intermediate results are gone, running backpropagation a second time raises an error. Passing `retain_graph=True` to the first `backward` call keeps the graph alive, so backpropagation can then be run twice; note that the gradients from the two runs accumulate in `.grad`.

- In the control-flow example, we computed the derivative of `d` with respect to `a`. What happens if we change the variable `a` to a random vector or matrix?
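A sketch of this experiment. The function `f` below is modeled on the section's control-flow example (the loop doubles `b` until its norm reaches 1000, then a branch depends on the sign of the sum); treat its exact body as an assumption. The seed and the vector length 3 are arbitrary.

```python
import torch

def f(a):
    # Both the number of loop iterations and the branch taken depend on a.
    b = a * 2
    while b.norm() < 1000:
        b = b * 2
    if b.sum() > 0:
        c = b
    else:
        c = 100 * b
    return c

torch.manual_seed(0)
a = torch.randn(size=(3,), requires_grad=True)  # a random vector instead of a scalar
d = f(a)  # d now has the same shape as a, so it is not a scalar

backward_failed = False
try:
    d.backward()  # no implicit gradient for non-scalar outputs
except RuntimeError as err:
    backward_failed = True
    print("RuntimeError:", err)

# An explicit all-ones `gradient` is equivalent to d.sum().backward().
d.backward(torch.ones_like(d))

# f is piecewise linear: d = k * a with one scalar k shared by every element,
# so every entry of a.grad equals k, i.e. a.grad == d / a.
print(a.grad)
print(d / a)
```

The failed call raises before any backpropagation runs, so the graph is still intact for the second call with an explicit `gradient`.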

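A runtime error is raised. In PyTorch, a non-scalar tensor cannot be implicitly differentiated with respect to another tensor; calling `backward()` without arguments is only allowed on a scalar output. To call `backward()` on a non-scalar, you must pass a `gradient` argument with the same shape as the output, for example `torch.ones_like(d)`, which is equivalent to calling `d.sum().backward()`.

- Redesign an example of computing the gradient of a function with control flow. Run it and analyze the result.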

```python
import torch

# When the norm of a is greater than 10, the gradient is a vector of all 1s;
# when the norm of a is not greater than 10, the gradient is a vector of all 2s.
def f(a):
    if a.norm() > 10:
        b = a
    else:
        b = 2 * a
    return b.sum()

a = torch.tensor([1.0, 2.0, 3.0, 4.0, 5.0], requires_grad=True)
d = f(a)
d.backward()
print(a.grad)  # norm(a) is about 7.42 <= 10, so every entry is 2
```

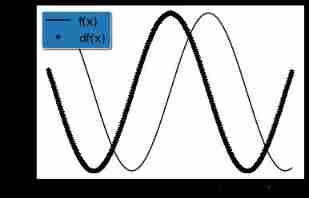
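To analyze the result, it helps to exercise both branches. This sketch reuses the same rule for `f`; the input `[10, 20, 30, 40, 50]` is an arbitrary choice whose norm exceeds 10.

```python
import torch

def f(a):
    # Gradient is 1 per element if ||a|| > 10, otherwise 2.
    if a.norm() > 10:
        b = a
    else:
        b = 2 * a
    return b.sum()

small = torch.tensor([1.0, 2.0, 3.0, 4.0, 5.0], requires_grad=True)       # norm about 7.42
large = torch.tensor([10.0, 20.0, 30.0, 40.0, 50.0], requires_grad=True)  # norm about 74.2

f(small).backward()
f(large).backward()
print(small.grad)  # all 2s: only the else branch (b = 2 * a) was recorded
print(large.grad)  # all 1s: only the if branch (b = a) was recorded
```

Autograd records only the operations that actually ran for a given input, so the gradient reflects the branch taken; the condition `a.norm() > 10` itself is not differentiated.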

- Let $f(x)=\sin(x)$. Plot $f(x)$ and $\frac{df(x)}{dx}$, where the latter is computed without using $f'(x)=\cos(x)$.

"Without using $f'(x)=\cos(x)$" means that we should not plot the known analytic derivative directly. Instead, we use automatic differentiation to compute the derivative at every sample point, save all of these `df` values, and plot $f'(x)$ from them.

```python
# Import the required libraries
import numpy as np
import torch
import matplotlib.pyplot as plt

# Definitions
x = np.arange(-5, 5, 0.02)  # sample x in [-5, 5) with a step of 0.02
f = np.sin(x)
df = []
for i in x:
    # Compute the derivative at each value of x with autograd
    v = torch.tensor(i, requires_grad=True)
    y = torch.sin(v)
    y.backward()
    df.append(v.grad.item())  # store a plain Python float for plotting

# Plotting
# Create plots with pre-defined labels.
fig, ax = plt.subplots()
ax.plot(x, f, 'k', label='f(x)')
ax.plot(x, df, 'k*', label='df(x)')
legend = ax.legend(loc='upper left', shadow=True, fontsize='x-large')
# Put a nicer background color on the legend.
legend.get_frame().set_facecolor('C0')
plt.show()
```
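The loop above builds one tiny graph per sample point. A vectorized alternative, sketched here as an option rather than the original approach, evaluates $\sin$ on all points at once and backpropagates from the sum; because each `y[i]` depends only on `x[i]`, the gradient of `y.sum()` with respect to `x[i]` is exactly $\frac{d\sin(x_i)}{dx_i}$.

```python
import torch

# All sample points in one tensor instead of one scalar tensor per point.
x = torch.arange(-5.0, 5.0, 0.02, requires_grad=True)
y = torch.sin(x)

# y is a vector; summing gives a scalar whose gradient w.r.t. x[i]
# is the derivative of sin at x[i].
y.sum().backward()
df = x.grad

# Sanity check only (cos is not used to produce df): largest deviation
# from the analytic derivative.
print(torch.max(torch.abs(df - torch.cos(x.detach()))).item())
```

For plotting, `x.detach().numpy()` and `df.numpy()` can be passed to `ax.plot` in place of the NumPy arrays used above.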


边栏推荐

- 【coppeliasim】6自由度路径规划

- 0211 embedded C language learning

- 论文笔记: 图神经网络 GAT

- 2022 edition illustrated network pdf

- 有没有sqlcdc监控多张表 再关联后 sink到另外一张表的案例啊?全部在 mysql中操作

- 机器学习训练与参数优化的一般过程 (讨论)

- 高数_向量代数_单位向量_向量与坐标轴的夹角

- 更换gcc版本后,编译出现make[1]: cc: Command not found

- [untitled] a query SQL execution process in the database

- 2022 eye health exhibition, vision rehabilitation exhibition, optometry equipment exhibition, eye care products exhibition, eye mask Exhibition

猜你喜欢

技术管理进阶——什么是管理者之体力、脑力、心力

Pat grade a 1033 to fill or not to fill

Audio and video engineer YUV and RGB detailed explanation

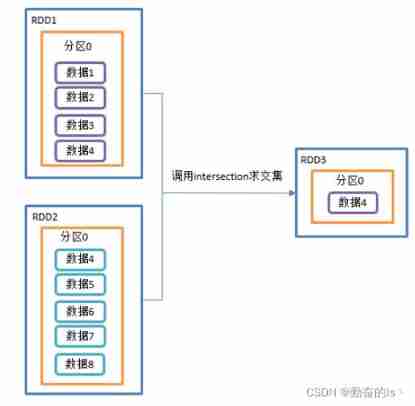

RDD conversion operator of spark

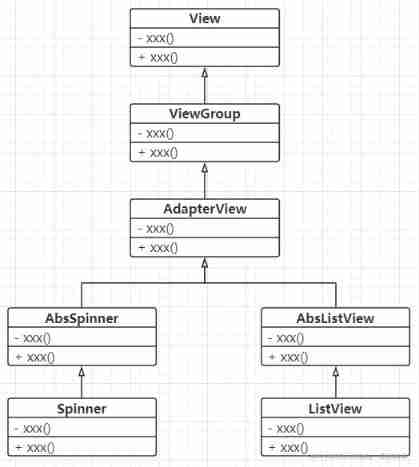

Use the list component to realize the drop-down list and address list

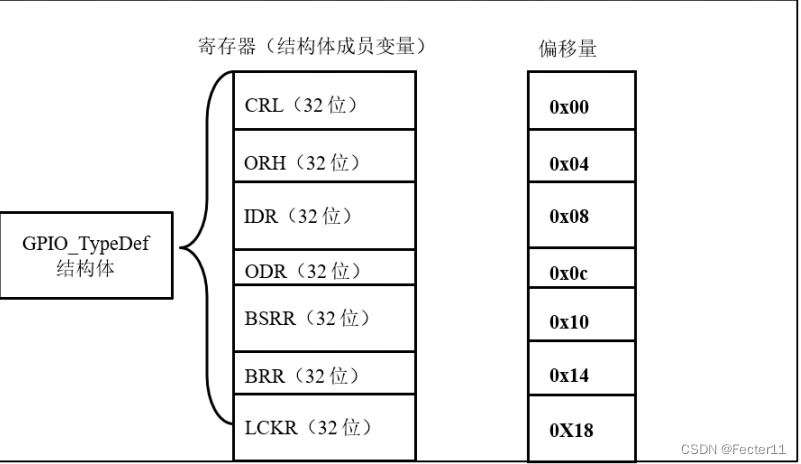

Zero foundation self-study STM32 - Review 2 - encapsulating GPIO registers with structures

一位博士在华为的22年

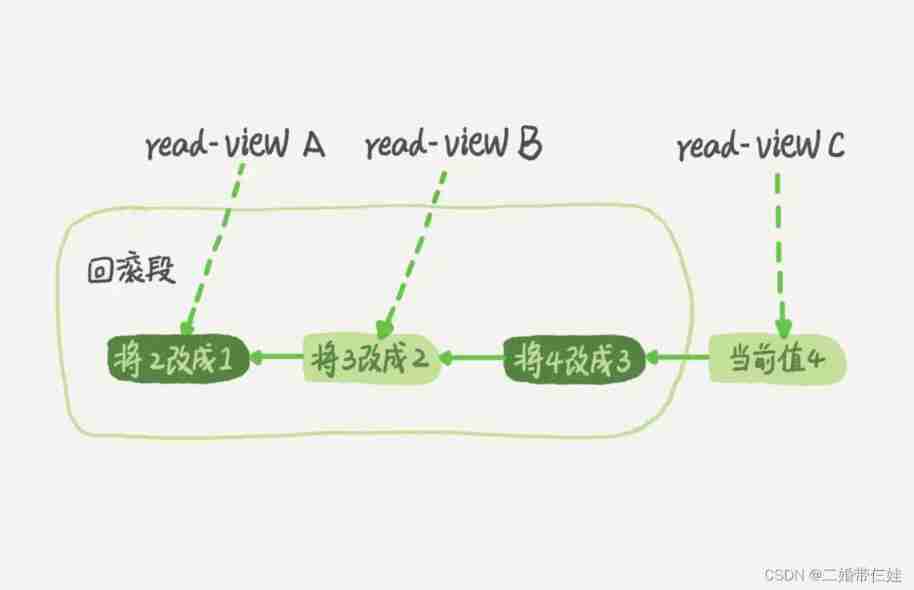

MySQL lethal serial question 1 -- are you familiar with MySQL transactions?

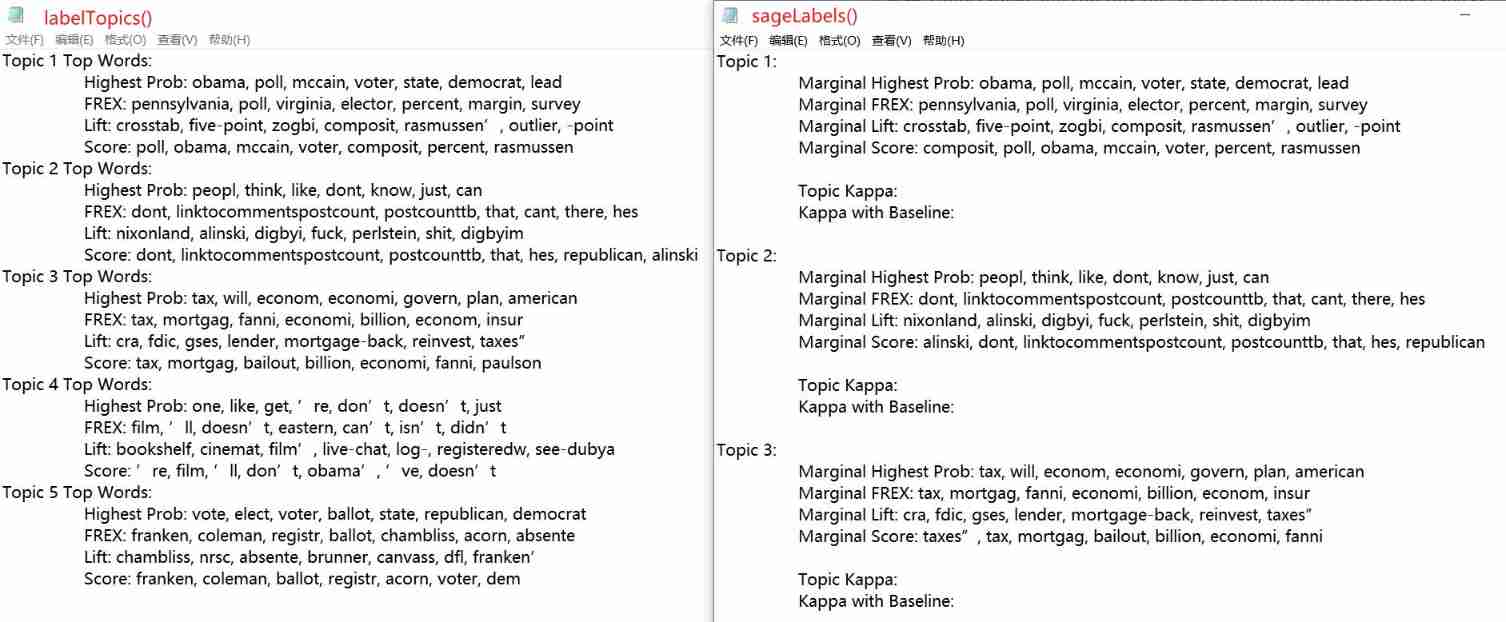

Structural theme model (I) STM package workflow

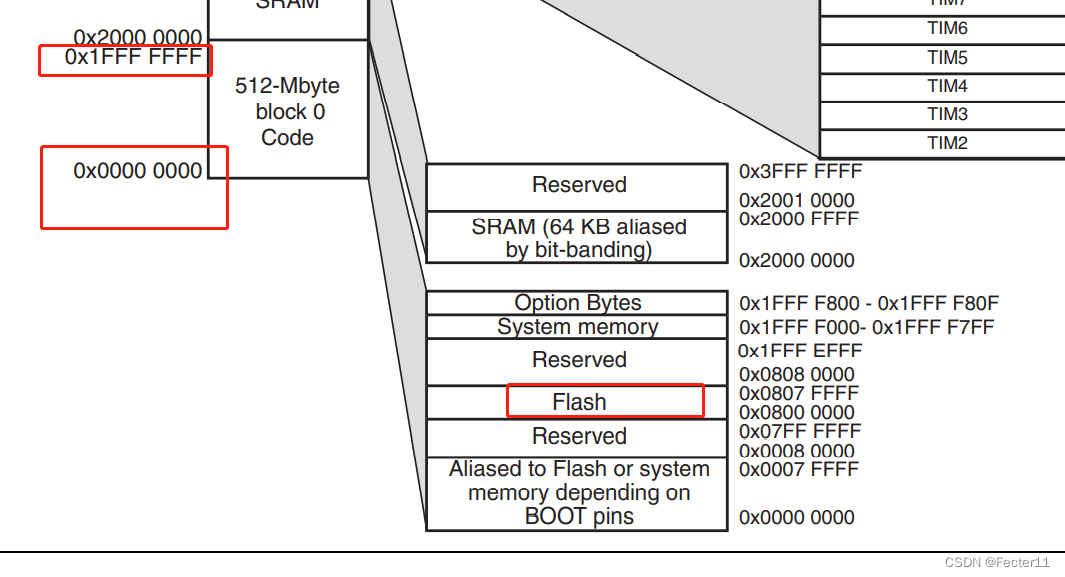

零基础自学STM32-野火——GPIO复习篇——使用绝对地址操作GPIO

随机推荐

Ue4- how to make a simple TPS role (II) - realize the basic movement of the role

有沒有sqlcdc監控多張錶 再關聯後 sink到另外一張錶的案例啊?全部在 mysql中操作

Global and Chinese markets for single beam side scan sonar 2022-2028: Research Report on technology, participants, trends, market size and share

Crawler (9) - scrape framework (1) | scrape asynchronous web crawler framework

2020.02.11

Template_ Quick sort_ Double pointer

零基础自学STM32-复习篇2——使用结构体封装GPIO寄存器

[untitled] a query SQL execution process in the database

[Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 8

Multi function event recorder of the 5th National Games of the Blue Bridge Cup

模板_快速排序_双指针

模板_求排列逆序对_基于归并排序

我把驱动换成了5.1.35,但是还是一样的错误,我现在是能连成功,但是我每做一次sql操作都会报这个

vs code保存时 出现两次格式化

力扣今日題-729. 我的日程安排錶 I

[Yunju entrepreneurial foundation notes] Chapter II entrepreneur test 9

好用的 JS 脚本

Global and Chinese market of wheelchair climbing machines 2022-2028: Research Report on technology, participants, trends, market size and share

米家、涂鸦、Hilink、智汀等生态哪家强?5大主流智能品牌分析

550 permission denied occurs when FTP uploads files, which is not a user permission problem