RDD partition rules in Spark

2022-07-06 02:04:00 【Diligent ls】

1. Creating an RDD from a collection

a. Without specifying the number of partitions

When an RDD is created from a collection and the number of partitions is not set by hand, the default number of partitions depends on the number of CPU cores configured for local mode:

local: 1 partition

local[*]: one partition per core of the machine

local[K]: K partitions
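The defaults listed above can be checked with a short program. This is only a sketch: the object name `DefaultPartitionCount` and the helper `partitionsFor` are made up for illustration, and it assumes that `spark.default.parallelism` has not been set, since that setting would override the core count.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object DefaultPartitionCount {
  // Hypothetical helper: starts a local context with the given master string
  // and returns the partition count of an RDD created without numSlices.
  def partitionsFor(master: String): Int = {
    val conf = new SparkConf().setMaster(master).setAppName("DefaultPartitionCount")
    val sc = new SparkContext(conf)
    try sc.makeRDD(Seq(1, 2, 3, 4)).getNumPartitions
    finally sc.stop() // stop the context so the next one can be created
  }

  def main(args: Array[String]): Unit = {
    Seq("local", "local[3]", "local[*]").foreach { master =>
      println(s"$master -> ${partitionsFor(master)} partitions")
    }
  }
}
```

The value printed for `local[*]` varies from machine to machine, because it follows the number of available cores.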

b. Specifying the number of partitions

object fenqu {

def main(args: Array[String]): Unit = {

val conf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("SparkCoreTest")

val sc: SparkContext = new SparkContext(conf)

//1)4 Data , Set up 4 Zones , Output :0 Partition ->1,1 Partition ->2,2 Partition ->3,3 Partition ->4

val rdd: RDD[Int] = sc.makeRDD(Array(1, 2, 3, 4), 4)

//2)4 Data , Set up 3 Zones , Output :0 Partition ->1,1 Partition ->2,2 Partition ->3,4

//val rdd: RDD[Int] = sc.makeRDD(Array(1, 2, 3, 4), 3)

//3)5 Data , Set up 3 Zones , Output :0 Partition ->1,1 Partition ->2、3,2 Partition ->4、5

//val rdd: RDD[Int] = sc.makeRDD(Array(1, 2, 3, 4, 5), 3)

rdd.saveAsTextFile("output")

sc.stop()

}

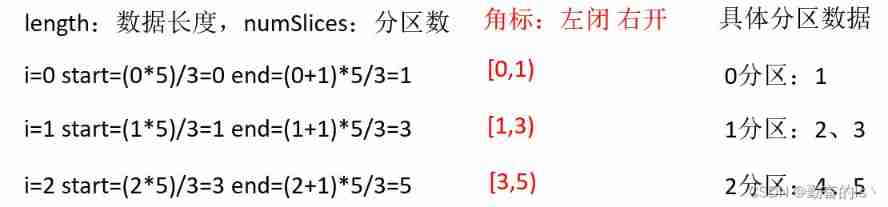

}The rules

Start of partition = (partition index * data length) / number of partitions

End of partition = ((partition index + 1) * data length) / number of partitions

Both are integer divisions, and each partition holds the elements in the half-open range [start, end).
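The two formulas can be tried out without a Spark cluster. The sketch below is plain Scala that applies the same arithmetic to an in-memory sequence; `SliceRule`, `positions` and `slice` are illustrative names chosen here, not Spark's public API.

```scala
object SliceRule {
  // Half-open index range [start, end) of every partition, using integer division.
  def positions(length: Int, numSlices: Int): Seq[(Int, Int)] =
    (0 until numSlices).map { i =>
      val start = ((i.toLong * length) / numSlices).toInt
      val end = (((i + 1).toLong * length) / numSlices).toInt
      (start, end)
    }

  // Cuts the data into numSlices pieces according to the ranges above.
  def slice[T](data: Seq[T], numSlices: Int): Seq[Seq[T]] =
    positions(data.length, numSlices).map { case (start, end) => data.slice(start, end) }

  def main(args: Array[String]): Unit = {
    slice(Seq(1, 2, 3, 4, 5), 3).zipWithIndex.foreach { case (part, i) =>
      println(s"partition $i -> ${part.mkString(",")}")
    }
  }
}
```

For 5 elements and 3 partitions the ranges are [0, 1), [1, 3) and [3, 5), which matches case 3) in the program above: 1, then 2,3, then 4,5.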

2. Creating an RDD by reading a file

a. Default

The default minimum number of partitions is the smaller of the default parallelism (the current number of cores) and 2, so it is usually 2.

b. Specified

1). How the number of partitions is calculated, for a 10-byte file read with 3 partitions requested:

totalSize = 10 (bytes)

goalSize = 10 / 3 = 3 (bytes), so each partition is meant to hold 3 bytes of data

Number of partitions = totalSize / goalSize = 10 / 3, which at first gives 3 partitions of 3, 3 and 4 bytes

The remaining 4 bytes are more than 1.1 times the 3-byte goal size. Under Hadoop's 1.1x split rule they are cut again, so an additional partition is created. The result is 4 partitions of 3, 3, 3 and 1 bytes.
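The calculation above can be replayed in a few lines of plain Scala. This is a simplified sketch of the split loop, not Hadoop's actual code: it assumes a single small file, ignores the HDFS block size and any configured minimum split size, and the names `SplitSizes` and `splitLengths` are invented for the example.

```scala
import scala.collection.mutable.ListBuffer

object SplitSizes {
  // Keep cutting full-size splits while the remainder exceeds 1.1 goal sizes.
  val SplitSlop = 1.1

  def splitLengths(totalSize: Long, minPartitions: Int): List[Long] = {
    val goalSize = totalSize / math.max(minPartitions, 1)
    val splitSize = math.max(goalSize, 1L) // simplification: block size is ignored
    val lengths = ListBuffer.empty[Long]
    var remaining = totalSize
    while (remaining.toDouble / splitSize > SplitSlop) {
      lengths += splitSize
      remaining -= splitSize
    }
    if (remaining != 0) lengths += remaining // whatever is left forms the last split
    lengths.toList
  }

  def main(args: Array[String]): Unit =
    println(splitLengths(10, 3).mkString(","))
}
```

With `totalSize = 10` and 3 requested partitions the loop cuts three 3-byte splits and leaves 1 byte for a fourth, the 3, 3, 3, 1 layout described above.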

2). Spark reads files the way Hadoop does, which is line by line. A line is never cut according to the number of bytes.

3). Read positions are calculated in units of offsets.

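4). Calculating the offset range of each partition

The example file holds the lines 1, 2, 3 and 4. Each of the first three lines occupies 3 bytes (the digit plus its line terminator) and the last line occupies 1 byte:

| Line | Content | Offsets |
|---|---|---|
| 1 | 1 | 0, 1, 2 |
| 2 | 2 | 3, 4, 5 |
| 3 | 3 | 6, 7, 8 |
| 4 | 4 | 9 |

| Partition | Offset range | Data read |
|---|---|---|
| 0 | [0, 3] | 1, 2 |
| 1 | [3, 6] | 3 |
| 2 | [6, 9] | 4 |
| 3 | [9, 9] | nothing |

A line is always read in full by the first partition whose range touches it, and a line that has already been read is not read again. Partition 0 therefore also reads line 2, because offset 3 falls inside its range, and partition 3 ends up empty.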
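The offset ranges above can be simulated in plain Scala. The sketch rests on two assumptions that the article does not state outright: the file content is `1\r\n2\r\n3\r\n4` (10 ASCII bytes, so character positions equal byte offsets), and a split reads every line that begins inside its range or exactly at its end offset, except that a split not starting at offset 0 leaves the line it starts on to the previous split. `LineReadSketch` and its methods are illustrative names only.

```scala
import scala.collection.mutable.ListBuffer

object LineReadSketch {
  // (start offset, text without terminator) of every line in the content.
  def linesWithOffsets(content: String): List[(Int, String)] = {
    val result = ListBuffer.empty[(Int, String)]
    var pos = 0
    while (pos < content.length) {
      val nl = content.indexOf('\n', pos)
      val next = if (nl < 0) content.length else nl + 1
      result += ((pos, content.substring(pos, next).stripLineEnd))
      pos = next
    }
    result.toList
  }

  // Lines assigned to the split that starts at `start` and spans `length` bytes.
  def readSplit(content: String, start: Int, length: Int): List[String] = {
    val end = start + length
    linesWithOffsets(content).collect {
      case (offset, text) if offset <= end && (start == 0 || offset > start) => text
    }
  }

  def main(args: Array[String]): Unit = {
    val content = "1\r\n2\r\n3\r\n4" // assumed sample file, 10 bytes
    val splits = Seq((0, 3), (3, 3), (6, 3), (9, 1))
    splits.zipWithIndex.foreach { case ((start, length), i) =>
      println(s"partition $i -> ${readSplit(content, start, length).mkString(",")}")
    }
  }
}
```

Under these assumptions partition 0 receives 1 and 2, partition 1 receives 3, partition 2 receives 4, and partition 3 receives nothing, which agrees with the offset ranges listed above.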

边栏推荐

- 安装php-zbarcode扩展时报错,不知道有没有哪位大神帮我解决一下呀 php 环境用的7.3

- 2022 PMP project management examination agile knowledge points (8)

- [Jiudu OJ 09] two points to find student information

- 抓包整理外篇——————状态栏[ 四]

- How to set an alias inside a bash shell script so that is it visible from the outside?

- FTP server, ssh server (super brief)

- 【Flask】静态文件与模板渲染

- Internship: unfamiliar annotations involved in the project code and their functions

- selenium 等待方式

- Extracting key information from TrueType font files

猜你喜欢

NiO related knowledge (II)

Redis-列表

Initialize MySQL database when docker container starts

Computer graduation design PHP campus restaurant online ordering system

Social networking website for college students based on computer graduation design PHP

PHP campus movie website system for computer graduation design

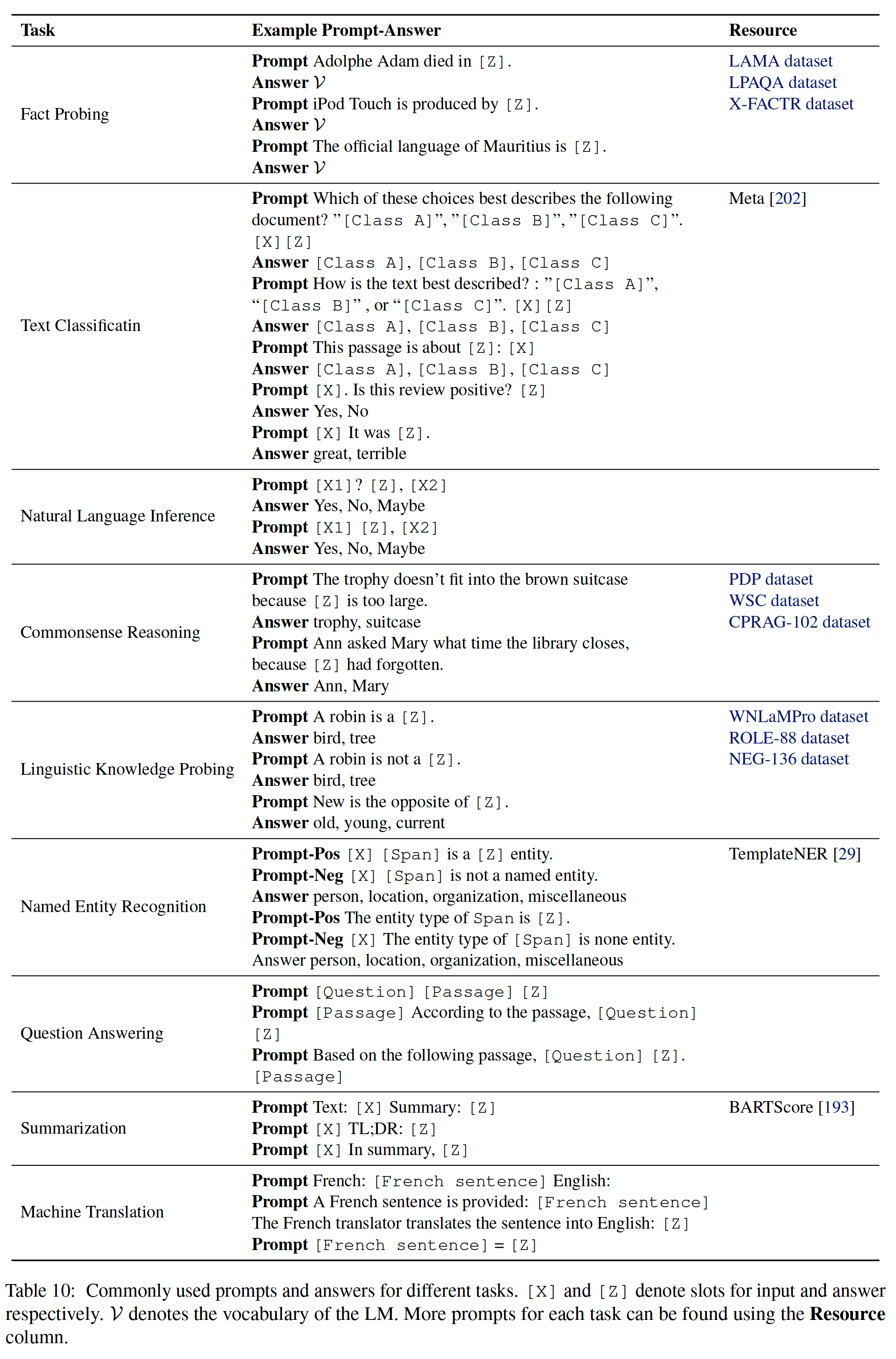

NLP第四范式:Prompt概述【Pre-train,Prompt(提示),Predict】【刘鹏飞】

![[Clickhouse] Clickhouse based massive data interactive OLAP analysis scenario practice](/img/3a/63f3e89ddf84f23f950ed9620b4405.jpg)

[Clickhouse] Clickhouse based massive data interactive OLAP analysis scenario practice

02.Go语言开发环境配置

![[flask] official tutorial -part2: Blueprint - view, template, static file](/img/bd/a736d45d7154119e75428f227af202.png)

[flask] official tutorial -part2: Blueprint - view, template, static file

随机推荐

Computer graduation design PHP campus restaurant online ordering system

2022年PMP项目管理考试敏捷知识点(8)

01.Go语言介绍

Redis daemon cannot stop the solution

Selenium element positioning (2)

Paddle framework: paddlenlp overview [propeller natural language processing development library]

【Flask】静态文件与模板渲染

GBase 8c数据库升级报错

Virtual machine network, networking settings, interconnection with host computer, network configuration

Redis list

Win10 add file extension

[the most complete in the whole network] |mysql explain full interpretation

Luo Gu P1170 Bugs Bunny and Hunter

Basic operations of databases and tables ----- non empty constraints

NLP第四范式:Prompt概述【Pre-train,Prompt(提示),Predict】【刘鹏飞】

leetcode3、实现 strStr()

Ali test open-ended questions

selenium 元素定位(2)

leetcode3、實現 strStr()

正则表达式:示例(1)