3D Vision 4: Getting Started with Gesture Recognition Using MediaPipe (Single Frame and Real-Time Video)

2022-07-06 01:23:00 【Tourists 26024】

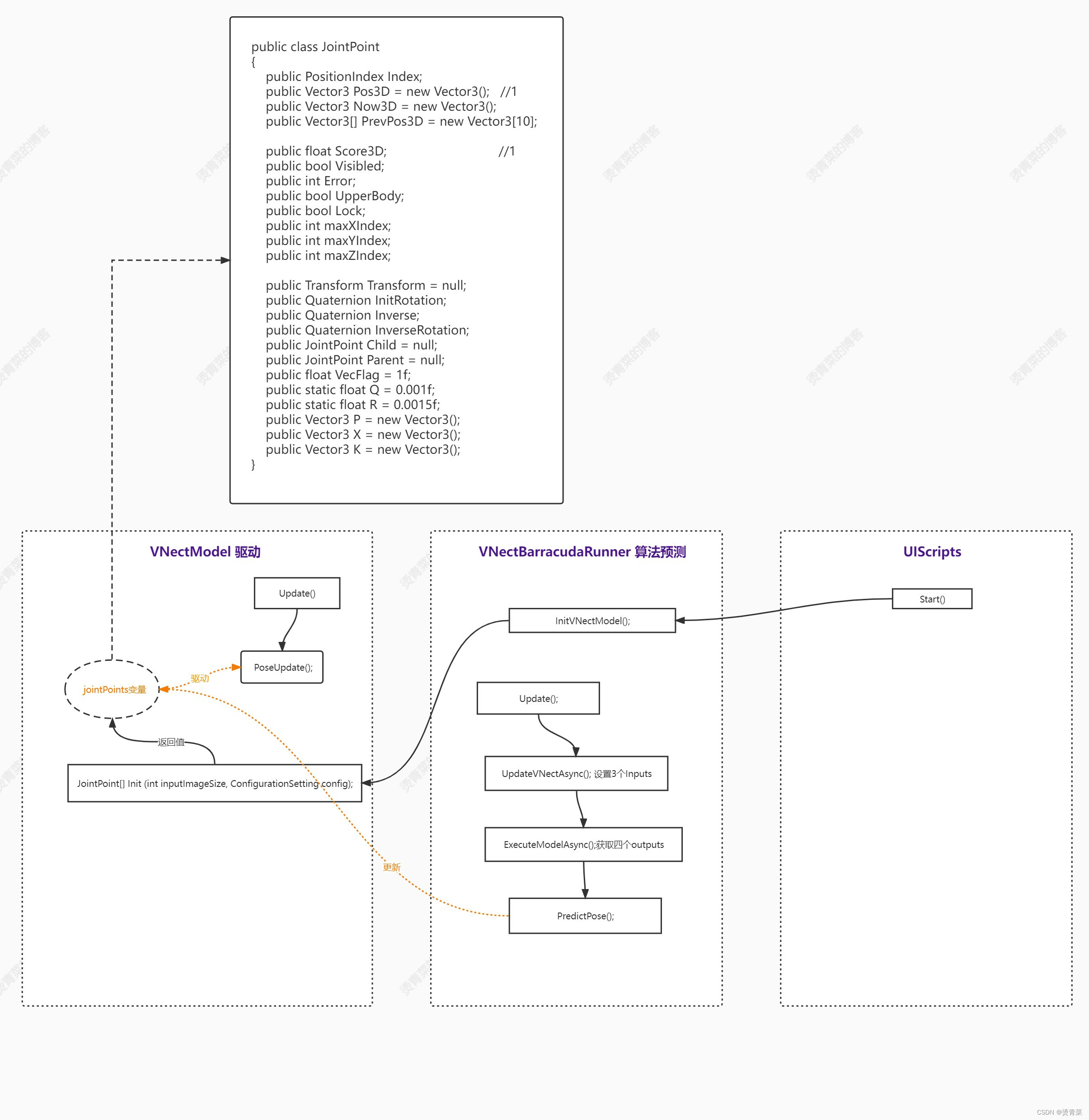

The previous post, 3D Vision 3: Human Pose Estimation, Algorithm Comparison and Results (MediaPipe and OpenPose), is at https://blog.csdn.net/XiaoyYidiaodiao/article/details/125571632?spm=1001.2014.3001.5502. This chapter covers gesture recognition with MediaPipe.

Single frame gesture recognition code

The key parts of the code are explained briefly first.

1. solutions.hands

import mediapipe as mp

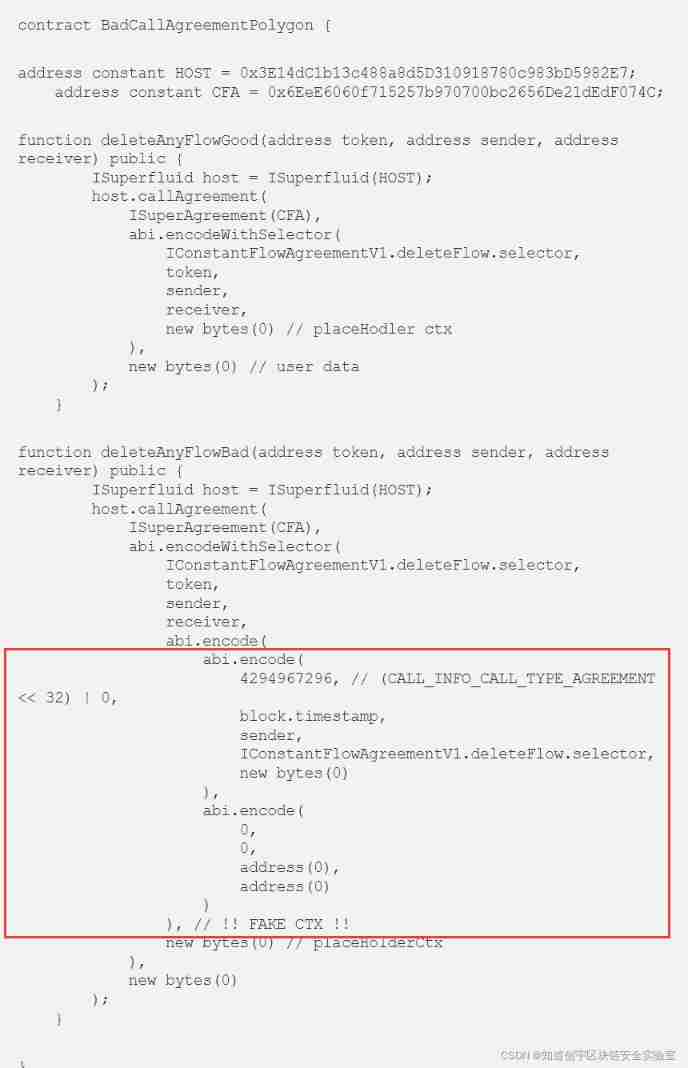

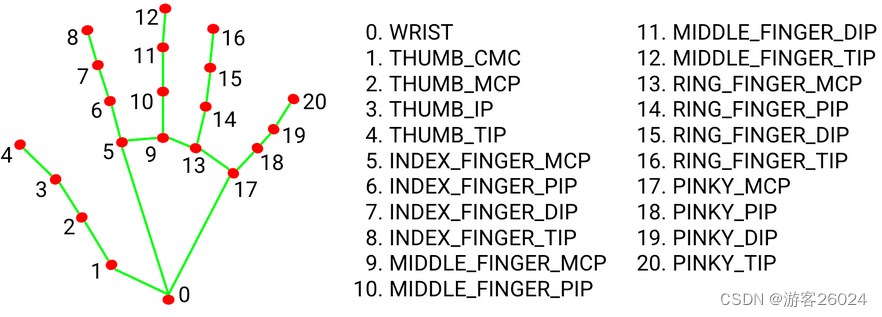

mp_hands = mp.solutions.hands

The MediaPipe hand module (.solutions.hands) models each hand as 21 keypoints, numbered 0 to 20, as shown in the figure above. From these keypoints you can compute joint angles and use them to decide which gesture is being made. Keypoint 8 (the index fingertip) is especially important, because HCI (human-computer interaction) applications are usually built around it.
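The joint-angle idea mentioned above can be sketched as follows. This is only a minimal illustration, not MediaPipe code: joint_angle and is_finger_straight are hypothetical helper names, the 160-degree threshold is an arbitrary assumption, and the points are assumed to be pixel coordinates (normalized x and y use different scales unless the image is square).

```python
import math


def joint_angle(a, b, c):
    """Return the angle at point b (in degrees) formed by points a, b, c."""
    # Vectors from the joint b towards its two neighbours.
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    n1 = math.hypot(*v1)
    n2 = math.hypot(*v2)
    if n1 == 0 or n2 == 0:
        raise ValueError("points must be distinct")
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (n1 * n2)
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.degrees(math.acos(cos_theta))


def is_finger_straight(mcp, pip, tip, threshold_deg=160.0):
    """Treat a finger as extended when the angle at its middle joint is wide.

    The threshold is an assumed value chosen for illustration only.
    """
    return joint_angle(mcp, pip, tip) >= threshold_deg


# Hypothetical pixel positions for keypoints 5, 6 and 8 of an index finger.
print(is_finger_straight((100, 300), (100, 250), (100, 180)))
print(is_finger_straight((100, 300), (100, 250), (150, 250)))
```

For the index finger, the three points would be keypoints 5, 6 and 8 converted to pixel coordinates; a real gesture classifier would combine such tests over several fingers.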

2. mp_hands.Hands()

static_image_mode: whether the input is treated as independent still images (True) or as continuous video frames (False);

max_num_hands: the maximum number of hands to detect; the more hands, the slower the recognition;

min_detection_confidence: the confidence threshold for hand detection;

min_tracking_confidence: the confidence threshold for tracking between frames;

hands = mp_hands.Hands(static_image_mode=True,
                       max_num_hands=2,
                       min_detection_confidence=0.5,
                       min_tracking_confidence=0.5)

3. mp.solutions.drawing_utils

Drawing

draw = mp.solutions.drawing_utils
draw.draw_landmarks(img, hand, mp_hands.HAND_CONNECTIONS)

Complete code

import cv2
import mediapipe as mp
import matplotlib.pyplot as plt

if __name__ == '__main__':
    mp_hands = mp.solutions.hands
    hands = mp_hands.Hands(static_image_mode=True,
                           max_num_hands=2,
                           min_detection_confidence=0.5,
                           min_tracking_confidence=0.5)
    draw = mp.solutions.drawing_utils

    img = cv2.imread("3.jpg")
    # Flip horizontally
    img = cv2.flip(img, 1)
    # BGR to RGB
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    results = hands.process(img)

    # Draw the keypoints and connections of every detected hand
    if results.multi_hand_landmarks:
        for hand in results.multi_hand_landmarks:
            draw.draw_landmarks(img, hand, mp_hands.HAND_CONNECTIONS)

    plt.imshow(img)
    plt.show()

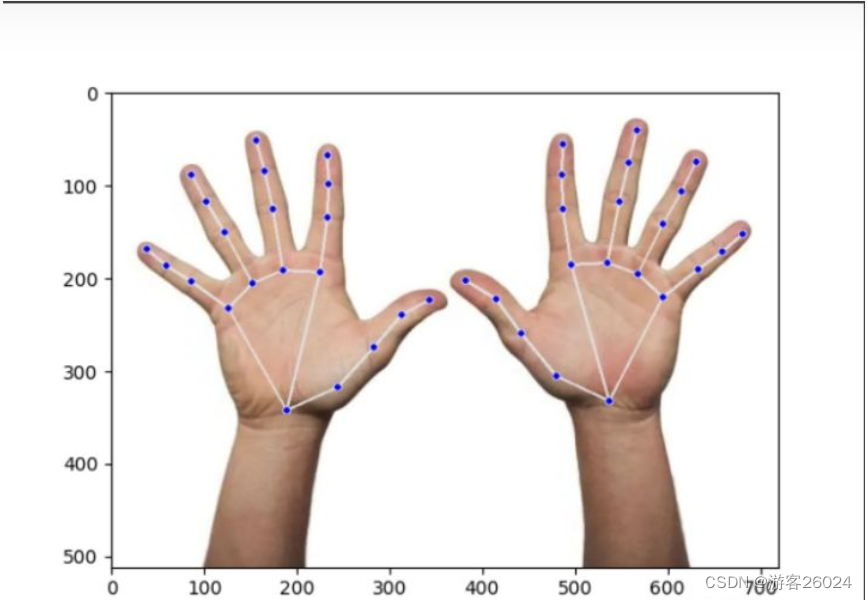

Running results

Output result parsing

import cv2
import mediapipe as mp
import matplotlib.pyplot as plt

if __name__ == "__main__":
    mp_hands = mp.solutions.hands
    hands = mp_hands.Hands(static_image_mode=False,
                           max_num_hands=2,
                           min_detection_confidence=0.5,
                           min_tracking_confidence=0.5)
    draw = mp.solutions.drawing_utils

    img = cv2.imread("3.jpg")
    img = cv2.flip(img, 1)
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    results = hands.process(img)
    print(results.multi_handedness)

Show the confidence and the left or right hand

print(results.multi_handedness)
# results.multi_handedness

The result is as follows, where label is the handedness (left or right hand) and score is the confidence.

[classification {

index: 0

score: 0.9686367511749268

label: "Left"

}

, classification {

index: 1

score: 0.9293265342712402

label: "Right"

}

]

Process finished with exit code 0

Get the label (left or right hand) or the confidence of the hand at index 1

print(results.multi_handedness[1].classification[0].label)
# results.multi_handedness[1].classification[0].label
print(results.multi_handedness[1].classification[0].score)
# results.multi_handedness[1].classification[0].score

The result is as follows:

Right

0.9293265342712402

Keypoint coordinates

Print the keypoint coordinates of every detected hand

print(results.multi_hand_landmarks)
# results.multi_hand_landmarks

The result is as follows. The z value is neither a true normalized coordinate nor a real distance; it is a depth relative to keypoint 0 (the wrist), so in that sense the output is 2.5D rather than true 3D.

[landmark {

x: 0.2627016007900238

y: 0.6694213151931763

z: 5.427047540251806e-07

}

landmark {

x: 0.33990585803985596

y: 0.6192424297332764

z: -0.03650109842419624

}

landmark {

x: 0.393616259098053

y: 0.5356684923171997

z: -0.052688632160425186

}

landmark {

x: 0.43515193462371826

y: 0.46728551387786865

z: -0.06730890274047852

}

landmark {

x: 0.47741779685020447

y: 0.4358704090118408

z: -0.08190854638814926

}

landmark {

x: 0.3136638104915619

y: 0.3786224126815796

z: -0.031268805265426636

}

landmark {

x: 0.32385849952697754

y: 0.2627469599246979

z: -0.05158748850226402

}

landmark {

x: 0.32598018646240234

y: 0.19216321408748627

z: -0.06765355914831161

}

landmark {

x: 0.32443925738334656

y: 0.13277988135814667

z: -0.08096525818109512

}

landmark {

x: 0.2579415738582611

y: 0.37382692098617554

z: -0.032878294587135315

}

landmark {

x: 0.2418910712003708

y: 0.24533957242965698

z: -0.052028004080057144

}

landmark {

x: 0.2304030954837799

y: 0.16545917093753815

z: -0.06916359812021255

}

landmark {

x: 0.2175263613462448

y: 0.10054746270179749

z: -0.08164216578006744

}

landmark {

x: 0.2122785747051239

y: 0.4006640613079071

z: -0.03896263241767883

}

landmark {

x: 0.16991624236106873

y: 0.29440340399742126

z: -0.06328895688056946

}

landmark {

x: 0.14282439649105072

y: 0.22940337657928467

z: -0.0850684642791748

}

landmark {

x: 0.12077423930168152

y: 0.17327186465263367

z: -0.09951525926589966

}

landmark {

x: 0.17542198300361633

y: 0.45321160554885864

z: -0.04842161759734154

}

landmark {

x: 0.11945687234401703

y: 0.3971719741821289

z: -0.07581596076488495

}

landmark {

x: 0.08262123167514801

y: 0.364059180021286

z: -0.0922597199678421

}

landmark {

x: 0.05292154848575592

y: 0.3294071555137634

z: -0.10204877704381943

}

, landmark {

x: 0.7470765113830566

y: 0.6501559019088745

z: 5.611524898085918e-07

}

landmark {

x: 0.6678823232650757

y: 0.5958800911903381

z: -0.02732565440237522

}

landmark {

x: 0.6151978969573975

y: 0.5073886513710022

z: -0.04038363695144653

}

landmark {

x: 0.5764827728271484

y: 0.4352443814277649

z: -0.05264268442988396

}

landmark {

x: 0.5317870378494263

y: 0.39630216360092163

z: -0.0660330131649971

}

landmark {

x: 0.6896227598190308

y: 0.36289966106414795

z: -0.0206490196287632

}

landmark {

x: 0.6775739192962646

y: 0.24553297460079193

z: -0.040212471038103104

}

landmark {

x: 0.6752403974533081

y: 0.1725052148103714

z: -0.0579310804605484

}

landmark {

x: 0.6765506267547607

y: 0.10879574716091156

z: -0.07280831784009933

}

landmark {

x: 0.7441056370735168

y: 0.35811278223991394

z: -0.027878733351826668

}

landmark {

x: 0.7618186473846436

y: 0.22912392020225525

z: -0.04549985006451607

}

landmark {

x: 0.7751038670539856

y: 0.14770638942718506

z: -0.06489630043506622

}

landmark {

x: 0.788221538066864

y: 0.07831943035125732

z: -0.08037586510181427

}

landmark {

x: 0.7893437743186951

y: 0.38241785764694214

z: -0.03979477286338806

}

landmark {

x: 0.8274303674697876

y: 0.27563461661338806

z: -0.06700978428125381

}

landmark {

x: 0.8543692827224731

y: 0.20750769972801208

z: -0.09000862389802933

}

landmark {

x: 0.877074122428894

y: 0.14460964500904083

z: -0.10605450719594955

}

landmark {

x: 0.8275361061096191

y: 0.4312361180782318

z: -0.05377437546849251

}

landmark {

x: 0.879731297492981

y: 0.3712681233882904

z: -0.08328921347856522

}

landmark {

x: 0.9155224561691284

y: 0.33470967411994934

z: -0.09889543056488037

}

landmark {

x: 0.9464077949523926

y: 0.297884464263916

z: -0.109173983335495

}

]

Count the number of hands

print(len(results.multi_hand_landmarks))
# len(results.multi_hand_landmarks)

The result is as follows:

2

Get the coordinates of keypoint 3 of the hand at index 1

print(results.multi_hand_landmarks[1].landmark[3])
# results.multi_hand_landmarks[1].landmark[3]

The result is as follows:

x: 0.5764827728271484

y: 0.4352443814277649

z: -0.05264268442988396

The x and y coordinates above are normalized by the image width and height, respectively. Multiply them by the width and height to get pixel coordinates in the image.

height, width, _ = img.shape
print("height:{},width:{}".format(height, width))
cx = results.multi_hand_landmarks[1].landmark[3].x * width
cy = results.multi_hand_landmarks[1].landmark[3].y * height
print("cx:{}, cy:{}".format(cx, cy))

The result is as follows:

height:512,width:720

cx:415.0675964355469, cy:222.84512329101562
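The conversion above can be wrapped in a small helper. This is a sketch under assumptions: to_pixel is a hypothetical name, and the clamping is an added precaution (landmarks near the image border can fall slightly outside the 0 to 1 range), not something the original code does. The sample values are the ones printed above.

```python
def to_pixel(x_norm, y_norm, width, height):
    """Convert normalized landmark coordinates to integer pixel coordinates."""
    # Clamp to the valid pixel range so the result can safely index the image.
    px = min(max(int(x_norm * width), 0), width - 1)
    py = min(max(int(y_norm * height), 0), height - 1)
    return px, py


# Keypoint 3 of the hand at index 1, in a 720 x 512 image.
print(to_pixel(0.5764827728271484, 0.4352443814277649, 720, 512))  # (415, 222)
```

Returning integers is convenient because OpenCV drawing functions such as cv2.circle expect integer pixel positions.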

Connections between keypoints

print(mp_hands.HAND_CONNECTIONS)
# mp_hands.HAND_CONNECTIONS

The result is as follows:

frozenset({(3, 4),

(0, 5),

(17, 18),

(0, 17),

(13, 14),

(13, 17),

(18, 19),

(5, 6),

(5, 9),

(14, 15),

(0, 1),

(9, 10),

(1, 2),

(9, 13),

(10, 11),

(19, 20),

(6, 7),

(15, 16),

(2, 3),

(11, 12),

(7, 8)})
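Each pair in this set is one bone of the hand skeleton. As a rough illustration of how the pairs can be used, the sketch below turns them into a neighbour lookup. The pairs are copied from the output above so the example runs without MediaPipe; build_adjacency is a hypothetical helper name.

```python
from collections import defaultdict

# Connection pairs copied from the printed output above.
HAND_CONNECTIONS = frozenset({
    (3, 4), (0, 5), (17, 18), (0, 17), (13, 14), (13, 17), (18, 19),
    (5, 6), (5, 9), (14, 15), (0, 1), (9, 10), (1, 2), (9, 13),
    (10, 11), (19, 20), (6, 7), (15, 16), (2, 3), (11, 12), (7, 8),
})


def build_adjacency(connections):
    """Map each keypoint index to the sorted list of its neighbours."""
    adjacency = defaultdict(set)
    for start, end in connections:
        # Connections are undirected, so record both directions.
        adjacency[start].add(end)
        adjacency[end].add(start)
    return {k: sorted(v) for k, v in sorted(adjacency.items())}


adjacency = build_adjacency(HAND_CONNECTIONS)
print(adjacency[0])  # neighbours of the wrist: [1, 5, 17]
print(adjacency[8])  # neighbours of the index fingertip: [7]
```

The wrist connects to the base of the thumb, the index finger and the little finger, while every fingertip has a single neighbour.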

Display left and right hand information

if results.multi_hand_landmarks:
    hand_info = ""
    for h_idx, hand in enumerate(results.multi_hand_landmarks):
        hand_info += str(h_idx) + ":" + str(results.multi_handedness[h_idx].classification[0].label) + ", "
    print(hand_info)

The result is as follows:

0:Left, 1:Right,

Single frame gesture recognition code optimization

Complete code

import cv2
import mediapipe as mp
import matplotlib.pyplot as plt

if __name__ == '__main__':
    mp_hands = mp.solutions.hands
    hands = mp_hands.Hands(static_image_mode=True,
                           max_num_hands=2,
                           min_detection_confidence=0.5,
                           min_tracking_confidence=0.5)
    draw = mp.solutions.drawing_utils

    img = cv2.imread("3.jpg")
    img = cv2.flip(img, 1)
    height, width, _ = img.shape
    img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    results = hands.process(img)

    if results.multi_hand_landmarks:
        handness_str = ''
        index_finger_tip_str = ''
        for h_idx, hand in enumerate(results.multi_hand_landmarks):
            # get the 21 keypoints of this hand
            hand_info = results.multi_hand_landmarks[h_idx]
            # draw the keypoints and the connections between them
            draw.draw_landmarks(img, hand_info, mp_hands.HAND_CONNECTIONS)
            # record whether this is the left or the right hand
            temp_handness = results.multi_handedness[h_idx].classification[0].label
            handness_str += "{}:{} ".format(h_idx, temp_handness)
            # depth of the wrist, used as the reference for every other keypoint
            cz0 = hand_info.landmark[0].z
            for idx, keypoint in enumerate(hand_info.landmark):
                cx = int(keypoint.x * width)
                cy = int(keypoint.y * height)
                cz = keypoint.z
                depth_z = cz0 - cz
                # the circle radius grows with depth_z
                radius = int(6 * (1 + depth_z))
                if idx == 0:
                    # wrist
                    img = cv2.circle(img, (cx, cy), radius * 2, (0, 0, 255), -1)
                elif idx == 8:
                    # index fingertip
                    img = cv2.circle(img, (cx, cy), radius * 2, (193, 182, 255), -1)
                    index_finger_tip_str += '{}:{:.2f} '.format(h_idx, depth_z)
                elif idx in [1, 5, 9, 13, 17]:
                    img = cv2.circle(img, (cx, cy), radius, (16, 144, 247), -1)
                elif idx in [2, 6, 10, 14, 18]:
                    img = cv2.circle(img, (cx, cy), radius, (1, 240, 255), -1)
                elif idx in [3, 7, 11, 15, 19]:
                    img = cv2.circle(img, (cx, cy), radius, (140, 47, 240), -1)
                elif idx in [4, 12, 16, 20]:
                    img = cv2.circle(img, (cx, cy), radius, (223, 155, 60), -1)
        img = cv2.putText(img, handness_str, (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 0, 0), 2)
        img = cv2.putText(img, index_finger_tip_str, (10, 80), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 0, 255), 2)

    plt.imshow(img)
    plt.show()

I did not use the Morandi color palette this time. A friend told me that the Morandi colors I used before were not as easy to see as plain primary colors.
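The code above sizes each circle with radius = int(6 * (1 + depth_z)). The sketch below isolates that mapping and adds clamping, since cv2.circle rejects a negative radius. keypoint_radius is a hypothetical helper, and the min_radius and max_radius limits are assumed values, not part of the original code.

```python
def keypoint_radius(depth_z, base=6, min_radius=1, max_radius=30):
    """Scale a circle radius by relative depth, clamped to a safe range."""
    # Same mapping as the code above: points nearer the camera than the
    # wrist (positive depth_z) get a larger circle.
    radius = int(base * (1 + depth_z))
    return max(min_radius, min(max_radius, radius))


# Wrist z and index fingertip z of the first hand in the output shown earlier.
cz0 = 5.427047540251806e-07
cz8 = -0.08096525818109512
print(keypoint_radius(cz0 - cz8))  # 6
```

With the typical depth values shown earlier the clamp never triggers; it only protects against outliers.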

Run the program

Video gesture recognition

1. Gesture recognition from the camera

Simplified code

import cv2
import mediapipe as mp

mp_hands = mp.solutions.hands
hands = mp_hands.Hands(static_image_mode=False,
                       max_num_hands=2,
                       min_tracking_confidence=0.5,
                       min_detection_confidence=0.5)
draw = mp.solutions.drawing_utils


def process_frame(img):
    # mirror the frame
    img = cv2.flip(img, 1)
    # MediaPipe expects RGB input, while OpenCV frames are BGR
    img_rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    results = hands.process(img_rgb)
    if results.multi_hand_landmarks:
        for hand in results.multi_hand_landmarks:
            draw.draw_landmarks(img, hand, mp_hands.HAND_CONNECTIONS)
    return img


if __name__ == '__main__':
    cap = cv2.VideoCapture()
    cap.open(0)
    while cap.isOpened():
        success, frame = cap.read()
        if not success:
            raise ValueError("Failed to read a frame from the camera")
        frame = process_frame(frame)
        cv2.imshow("hand", frame)
        # press q or Esc to quit
        if cv2.waitKey(1) in [ord('q'), 27]:
            break
    cap.release()
    cv2.destroyAllWindows()

Optimized code

import cv2
import mediapipe as mp

mp_hands = mp.solutions.hands
hands = mp_hands.Hands(static_image_mode=False,
                       max_num_hands=2,
                       min_tracking_confidence=0.5,
                       min_detection_confidence=0.5)
draw = mp.solutions.drawing_utils


def process_frame(img):
    # mirror the frame
    img = cv2.flip(img, 1)
    height, width, _ = img.shape
    # MediaPipe expects RGB input, while OpenCV frames are BGR
    img_rgb = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)
    results = hands.process(img_rgb)
    handness_str = ''
    index_finger_tip_str = ''
    if results.multi_hand_landmarks:
        for h_idx, hand in enumerate(results.multi_hand_landmarks):
            draw.draw_landmarks(img, hand, mp_hands.HAND_CONNECTIONS)
            # get the 21 keypoints of this hand
            hand_info = results.multi_hand_landmarks[h_idx]
            # record whether this is the left or the right hand
            temp_handness = results.multi_handedness[h_idx].classification[0].label
            handness_str += "{}:{} ".format(h_idx, temp_handness)
            # depth of the wrist, used as the reference for every other keypoint
            cz0 = hand_info.landmark[0].z
            for idx, keypoint in enumerate(hand_info.landmark):
                cx = int(keypoint.x * width)
                cy = int(keypoint.y * height)
                cz = keypoint.z
                depth_z = cz0 - cz
                # the circle radius grows with depth_z
                radius = int(6 * (1 + depth_z))
                if idx == 0:
                    img = cv2.circle(img, (cx, cy), radius * 2, (0, 0, 255), -1)
                elif idx == 8:
                    img = cv2.circle(img, (cx, cy), radius * 2, (193, 182, 255), -1)
                    index_finger_tip_str += '{}:{:.2f} '.format(h_idx, depth_z)
                elif idx in [1, 5, 9, 13, 17]:
                    img = cv2.circle(img, (cx, cy), radius, (16, 144, 247), -1)
                elif idx in [2, 6, 10, 14, 18]:
                    img = cv2.circle(img, (cx, cy), radius, (1, 240, 255), -1)
                elif idx in [3, 7, 11, 15, 19]:
                    img = cv2.circle(img, (cx, cy), radius, (140, 47, 240), -1)
                elif idx in [4, 12, 16, 20]:
                    img = cv2.circle(img, (cx, cy), radius, (223, 155, 60), -1)
        img = cv2.putText(img, handness_str, (25, 50), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 0, 0), 2)
        img = cv2.putText(img, index_finger_tip_str, (25, 100), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 0, 255), 2)
    return img


if __name__ == '__main__':
    cap = cv2.VideoCapture()
    cap.open(0)
    while cap.isOpened():
        success, frame = cap.read()
        if not success:
            raise ValueError("Failed to read a frame from the camera")
        frame = process_frame(frame)
        cv2.imshow("hand", frame)
        # press q or Esc to quit
        if cv2.waitKey(1) in [ord('q'), 27]:
            break
    cap.release()
    cv2.destroyAllWindows()

This code runs correctly; the results are not shown here.

2. Video real-time gesture recognition

Simplified complete code

import cv2

from tqdm import tqdm

import mediapipe as mp

mp_hands = mp.solutions.hands

hands = mp_hands.Hands(static_image_mode=False,

max_num_hands=2,

min_tracking_confidence=0.5,

min_detection_confidence=0.5)

draw = mp.solutions.drawing_utils

def process_frame(img):

img = cv2.flip(img, 1)

height, width, _ = img.shape

results = hands.process(img)

if results.multi_hand_landmarks:

for h_idx, hand in enumerate(results.multi_hand_landmarks):

draw.draw_landmarks(img, hand, mp_hands.HAND_CONNECTIONS)

return img

def out_video(input):

file = input.split("/")[-1]

output = "out-" + file

print("It will start processing video: {}".format(input))

cap = cv2.VideoCapture(input)

frame_count = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))

frame_size = (cap.get(cv2.CAP_PROP_FRAME_WIDTH), cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

# create VideoWriter, VideoWriter_fourcc is video decode

fourcc = cv2.VideoWriter_fourcc(*'mp4v')

fps = cap.get(cv2.CAP_PROP_FPS)

out = cv2.VideoWriter(output, fourcc, fps, (int(frame_size[0]), int(frame_size[1])))

with tqdm(range(frame_count)) as pbar:

while cap.isOpened:

success, frame = cap.read()

if not success:

break

frame = process_frame(frame)

out.write(frame)

pbar.update(1)

pbar.close()

cv2.destroyAllWindows()

out.release()

cap.release()

print("{} finished!".format(output))

if __name__ == '__main__':

video_dirs = "7.mp4"

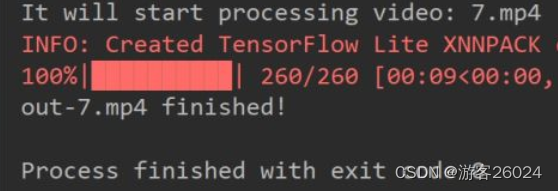

out_video(video_dirs)Running results

I picked this video from the Internet at random for the experiment.

Gesture recognition

Optimized complete code

import cv2

import time

from tqdm import tqdm

import mediapipe as mp

mp_hands = mp.solutions.hands

hands = mp_hands.Hands(static_image_mode=False,

max_num_hands=2,

min_tracking_confidence=0.5,

min_detection_confidence=0.5)

draw = mp.solutions.drawing_utils

def process_frame(img):

t0 = time.time()

img = cv2.flip(img, 1)

height, width, _ = img.shape

results = hands.process(img)

handness_str = ''

index_finger_tip_str = ''

if results.multi_hand_landmarks:

for h_idx, hand in enumerate(results.multi_hand_landmarks):

draw.draw_landmarks(img, hand, mp_hands.HAND_CONNECTIONS)

# get 21 key points of hand

hand_info = results.multi_hand_landmarks[h_idx]

# log left and right hands info

temp_handness = results.multi_handedness[h_idx].classification[0].label

handness_str += "{}:{} ".format(h_idx, temp_handness)

cz0 = hand_info.landmark[0].z

for idx, keypoint in enumerate(hand_info.landmark):

cx = int(keypoint.x * width)

cy = int(keypoint.y * height)

cz = keypoint.z

depth_z = cz0 - cz

# radius about depth_z

radius = int(6 * (1 + depth_z))

if idx == 0:

img = cv2.circle(img, (cx, cy), radius * 2, (0, 0, 255), -1)

elif idx == 8:

img = cv2.circle(img, (cx, cy), radius * 2, (193, 182, 255), -1)

index_finger_tip_str += '{}:{:.2f} '.format(h_idx, depth_z)

elif idx in [1, 5, 9, 13, 17]:

img = cv2.circle(img, (cx, cy), radius, (16, 144, 247), -1)

elif idx in [2, 6, 10, 14, 18]:

img = cv2.circle(img, (cx, cy), radius, (1, 240, 255), -1)

elif idx in [3, 7, 11, 15, 19]:

img = cv2.circle(img, (cx, cy), radius, (140, 47, 240), -1)

elif idx in [4, 12, 16, 20]:

img = cv2.circle(img, (cx, cy), radius, (223, 155, 60), -1)

img = cv2.putText(img, handness_str, (25, 100), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 0, 0), 2)

img = cv2.putText(img, index_finger_tip_str, (25, 150), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (255, 0, 255), 2)

fps = 1 / (time.time() - t0)

img = cv2.putText(img, "FPS:" + str(int(fps)), (25, 50), cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 255, 0), 2)

return img

def out_video(input):

file = input.split("/")[-1]

output = "out-optim-" + file

print("It will start processing video: {}".format(input))

cap = cv2.VideoCapture(input)

frame_count = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))

frame_size = (cap.get(cv2.CAP_PROP_FRAME_WIDTH), cap.get(cv2.CAP_PROP_FRAME_HEIGHT))

# create VideoWriter, VideoWriter_fourcc is video decode

fourcc = cv2.VideoWriter_fourcc(*'mp4v')

fps = cap.get(cv2.CAP_PROP_FPS)

out = cv2.VideoWriter(output, fourcc, fps, (int(frame_size[0]), int(frame_size[1])))

with tqdm(range(frame_count)) as pbar:

while cap.isOpened:

success, frame = cap.read()

if not success:

break

frame = process_frame(frame)

out.write(frame)

pbar.update(1)

pbar.close()

cv2.destroyAllWindows()

out.release()

cap.release()

print("{} finished!".format(output))

if __name__ == '__main__':

video_dirs = "7.mp4"

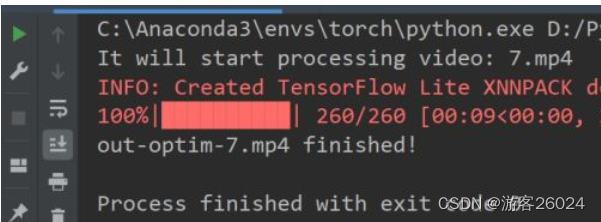

out_video(video_dirs)Running results
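The FPS value drawn by process_frame in the optimized code is computed from a single frame, so the number on screen can jump around. One possible refinement, shown here only as a sketch, is to smooth it with an exponential moving average. FpsMeter is a hypothetical class and alpha=0.1 is an assumed smoothing weight; neither comes from the original code.

```python
class FpsMeter:
    """Exponentially smoothed frames-per-second estimate."""

    def __init__(self, alpha=0.1):
        self.alpha = alpha  # weight given to the newest measurement
        self.fps = None     # no estimate until the first update

    def update(self, elapsed_seconds):
        # Guard against a zero interval, which would divide by zero.
        instant = 1.0 / max(elapsed_seconds, 1e-6)
        if self.fps is None:
            self.fps = instant
        else:
            self.fps = self.alpha * instant + (1 - self.alpha) * self.fps
        return self.fps


meter = FpsMeter(alpha=0.5)
print(meter.update(0.1))   # first frame took 0.1 s
print(meter.update(0.05))  # second frame took 0.05 s
```

In process_frame, the meter would be created once outside the function and updated with time.time() - t0 at the end of each frame.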

Gesture recognition optimization

unfinished ...
