当前位置:网站首页>Introduction and application of bigfilter global transaction anti duplication component

Introduction and application of bigfilter global transaction anti duplication component

2022-07-04 22:18:00 【Chang'an chain open source community】

We will use three articles to explain the work of Chang'an chain in improving the anti duplication ability of transactions , This is the third part .

All three chapters mainly include the following contents :

1. Chang'an chain trading pool and anti duplication trading optimization ;

2. The cuckoo filter of Chang'an chain transaction ;

3. bigfilter Introduction and application of global transaction anti duplication component .

One 、 background

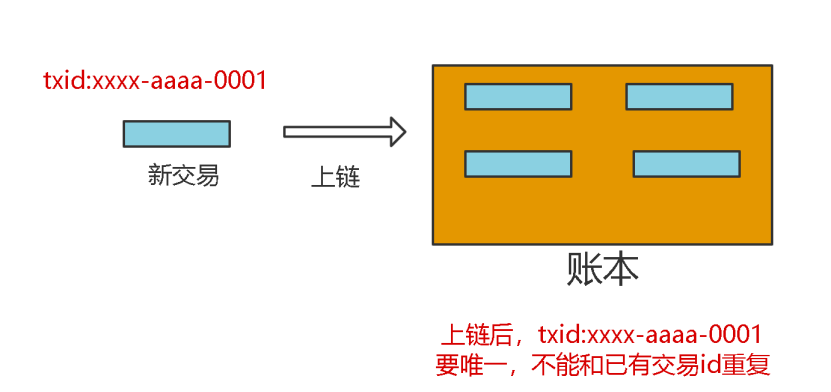

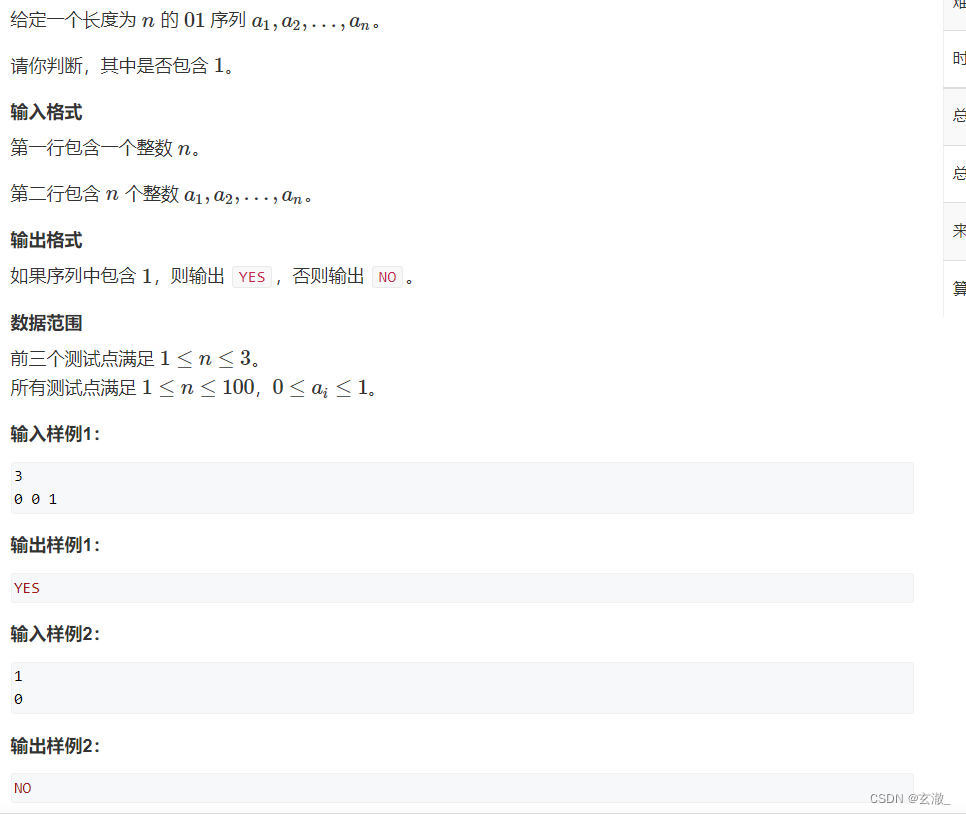

All new transactions in the blockchain ledger need a transaction id, transaction id It has to be globally unique , Cannot conflict with existing transactions in the ledger ( equally ), Because the transactions in the blockchain ledger are increasing , So there will be more and more transactions accumulated in the ledger .

chart 1

New transactions should be stored in the ledger , It is necessary to check the transactions of new transactions id Whether it already exists in the account book . This check is called transaction filtering ( Anti weight ). The corresponding mathematical model is a new element newTxID Whether there is a collection leadger_txIDs in . The mathematical model is very simple , Combined with blockchain scenario , Demand is :

1. txID Generate rules , Must be decentralized , Generated arbitrarily by the user , No restrictions ;

2. txID Can't repeat .

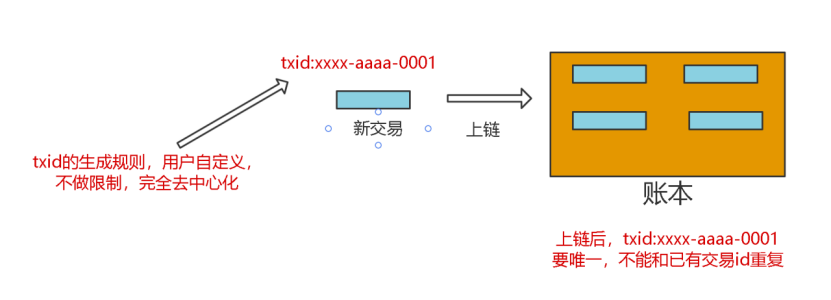

Seemingly simple requirements , But it's actually very difficult . What is the specific difficulty ?

chart 2

Two 、 Difficulty analysis

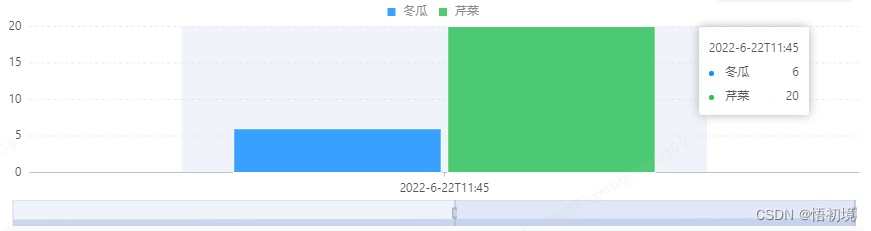

difficulty 1: Transaction filtering is required to be fast .

technical , Verify whether an element is in a collection , The fastest way is to use hash sets ; Algorithm , Time complexity O(1), Spatial complexity O(n) Build a collection Set, Each element first determines whether there is , Do a hash operation .

But collections are built in memory , If thousands 、 Tens of thousands txid It is acceptable to store in memory , But if it is 10 One hundred million txid, This magnitude is too large , Memory may not be able to store ; What's more? , Transactions in the blockchain are only increasing , The collection will be bigger and bigger , It will burst the memory .

difficulty 2: There are many transactions .

Transactions in the blockchain are only increasing . If the number of transactions accumulated in the ledger reaches 10 Billion , What do I do ? In memory 10 Billion may not be saved . above-mentioned , The time complexity of hashing is O(1), But the spatial complexity is O(n);10 One hundred million TxID Put it in memory ,10^9*32B=32GB( Suppose a txid from 2 individual uuid, common 32Byte).

difficulty 3: Single machine resources are limited .

Single server memory is limited . If there is 50 Billion 、100 Billion 、 even to the extent that 1000 Billion transactions , A single machine can't be saved ,100 Billion will occupy 320G Memory .

difficulty 4: Transactions are not lost .

Transactions cannot be written only to memory , Because data will be lost in case of power failure , So you need persistence .

3、 ... and 、 Technical solution analysis

1. Algorithm

Through the hash function, we will TxID Mapping to a certain value is a good choice , At the same time, you cannot directly use the original string txID Store in collection .

2. Storage efficiency

If you just take txid In memory , It takes up a lot of space .

Change the way of thinking :

Through a function , hold txid Mapping to 0-n An integer in the integer interval of , such as txid->interN; Then through a function interN Map to a bit of a binary number bit On ; Through the above two steps , You can put some txid Map to a bit of a binary number bit On , This is equivalent to putting a pile of txid, Stored in a binary number , Judge a txid Whether there is , Just operate on two functions + One and operation is enough , This is it. The bloon filter The prototype of the .

such ,100 One hundred million txid, It only needs 10^10 individual bit position , It only needs 1200MB( about ) Storage space ; And direct deposit needs 320000MB, Efficiency comparison 266 times .

3. Node expansion

The storage space and computing resources of a single machine are limited , In order to store more data and make use of more resources in parallel at the same time, we need to add another function , hold txid Map to a server in some servers ; meanwhile , Support batch processing txid Whether there is , Also need to be able to do sharding And aggregation .

4. Persistence

The fastest way to write is WAL. Because the seek time of mechanical disk , Including disk rotation + moving head , The time of random data reading and writing is much longer than that of sequential data reading and writing . If we put txid Written in WAL in , It's the fastest 、 A scheme without losing data ; At the same time, in order to filter faster , You need to use a bloom filter , also bitmap Data should reside in memory .

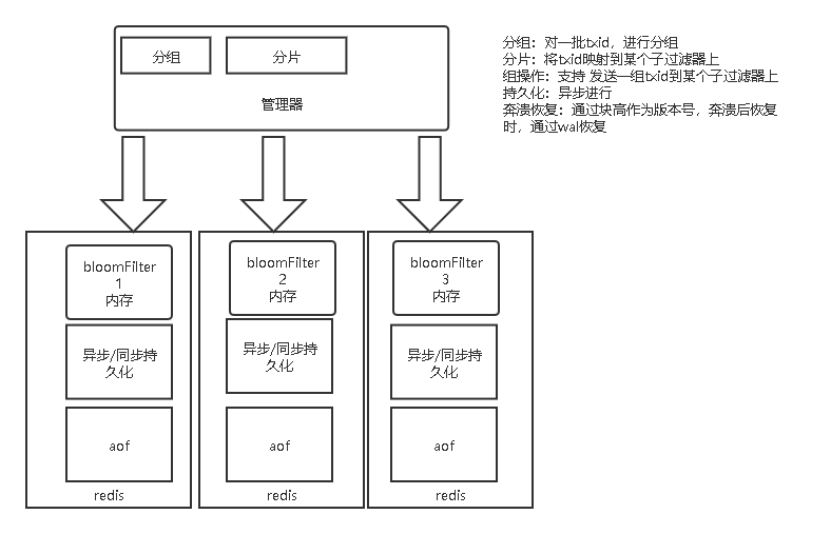

Four 、 Chang'an chain solution -bigfilter

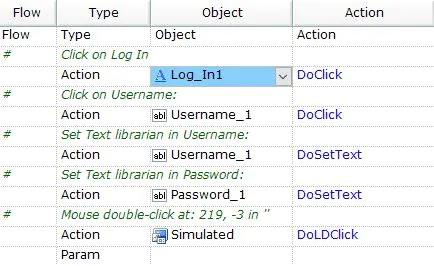

Based on the above background 、 problem 、 Difficulties and technical analysis we designed and implemented bigfilter.bigfilter The functions are as follows :

1. Filter new transactions faster . The bottom layer uses The bloon filter , Data stored in memory , With redis-bloom Plug in form .

2. Support massive transactions . Manage multiple through one manager redis-bloom node , Complete the data sharding And aggregation .

3. Persistence . adopt wal Write the new deal log in , At the same time redis Memory and aof Persistent storage , Do not lose data 、 It can recover automatically after crash .

4. Batch filtering . Support writing a batch of new transactions to bigfilter in , It also supports batch judgment of whether a batch of new transactions are bigfilter And achieve parallel computing .

chart 3

5、 ... and 、 Realization effect

We start from the storage space 、 Anti weight stability 、 Read and write performance 、 Four dimensions of accuracy are used to evaluate the effect .

Storage space : It refers to the implementation of transaction filtering , Memory used 、 Disk and other space size .

Storage comparison | disk | Memory | remarks | The number of deals |

bigfilter | 10GB | 5G | 100 Billion | |

leveldb | 500GB | txid Direct deposit | 100 Billion | |

Redis set | >1TB | 1TB | With set Mode storage | 100 Billion |

You can see ,bigfilter It takes up the least memory and disk space , Than directly storing the original txid The efficiency of the method is dozens of times 、 Even a hundred times the gap .

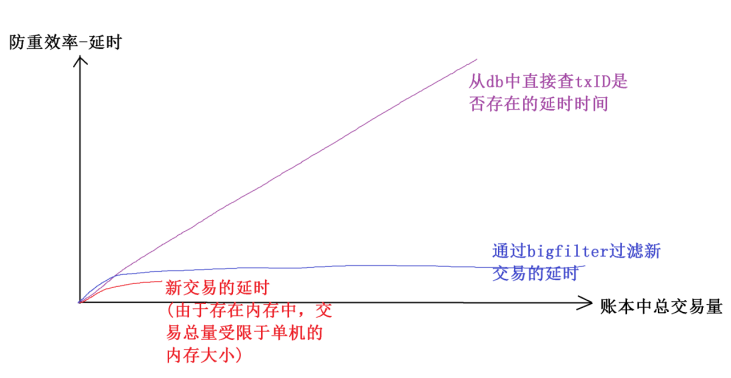

Anti weight stability : It refers to the increasing number of transactions accumulated in the account book , Whether the time delay required for a new transaction filtering will increase significantly with the increase of the total transaction volume in the ledger .

chart 4

You can see ,bigfilter As the total volume of transactions increases , The delay time for filtering new transactions is basically the same . And based on db Do transaction filtering , As the total volume of transactions increases, the delay also increases ; Memory based filtering , Due to the limitation of single machine memory, it cannot support too much transaction volume .

Read and write performance : It refers to the delay in writing some new transactions into the ledger and filtering whether some new transactions are in the ledger .

In our tests :

1. In trade id, Completely decentralized , Without restricting the generation of rules ;

2. Already exists in the ledger 50 Billion transactions .

Read and write performance - Batch filtering , Judge 1 Whether 10000 new transactions are 50 There are hundreds of millions of transactions ( Filter ) need 30ms, On average, every transaction 3us.

Read and write performance - Batch write , write in 1 Ten thousand transactions , need 10ms, On average, every transaction 1us.

Accuracy rate : Refers to a new transaction , Not in the account book , But after the anti weight calculation in the filter , Sometimes it is misjudged as This new deal txID In the account book , Get rid of this misjudgment , The accuracy percentage of the transaction weight prevention obtained .

In different configurations , The performance is different , because bigfilter It is a distributed component that supports multiple node storage . Theoretically speaking , When the total trading volume is certain , More nodes , The higher the accuracy of transaction filtering . In our test environment , stay 50 Billion in total trading volume , You can achieve 99% The accuracy of .

6、 ... and 、 summary

test result :

1. Storage space .50 Billion transactions ,redis aof Occupy 10G. Memory footprint 5G.

2. Anti weight stability . Basically stable , Specific view .

3. Read and write performance - Batch filtering . Judge 1 Whether 10000 new transactions are 50 There are hundreds of millions of transactions ( Filter ) need 30ms, On average, every transaction 3us.

4. Read and write performance - Batch write . write in 1 Ten thousand transactions , need 10ms. On average, every transaction 1us.

RECOMMEND

Recommended reading

Changan chain ChainMaker Anti duplication optimization of trading pool transactions

Changan chain ChainMaker v2.2.x Performance pursuit of Vm-Docker-Go Optimize

Tips

More Chang'an chain open source projects QA, You can log in to the open source community 、 Technical document library view .

Download the source code

https://git.chainmaker.org.cn/chainmaker/chainmaker-go

Consult the documentation

https://docs.chainmaker.org.cn/

Changan chain ChainMaker Case collection

http://www.wenjuan.com/s/UZBZJvhFGte/

“ Changan chain ChainMaker” It is the first independent and controllable blockchain software and hardware technology system in China , It is jointly developed by microchip Research Institute, enterprises and universities , With full autonomy 、 High performance 、 Strong privacy 、 The outstanding characteristics of wide cooperation . Chang'an chain is oriented to large-scale node networking 、 High transaction processing performance 、 Strong data security, privacy and other next-generation blockchain technology requirements , Integrate the special acceleration chip hardware of blockchain and the bottom software platform that can be assembled , To build high performance 、 High credibility 、 High security digital infrastructure provides new solutions , Provide strong blockchain technical support for Chang'an chain ecological alliance . The name “ Changan chain ”, Metaphorical meaning “ lasting political stability 、 Create brilliance again 、 Link to the world “.

边栏推荐

- What is the stock account opening process? Is it safe to use flush mobile stock trading software?

- 短视频系统源码,点击屏幕空白处键盘不自动收起

- Bizchart+slider to realize grouping histogram

- New intersectionobserver usage notes

- 传智教育|如何转行互联网高薪岗位之一的软件测试?(附软件测试学习路线图)

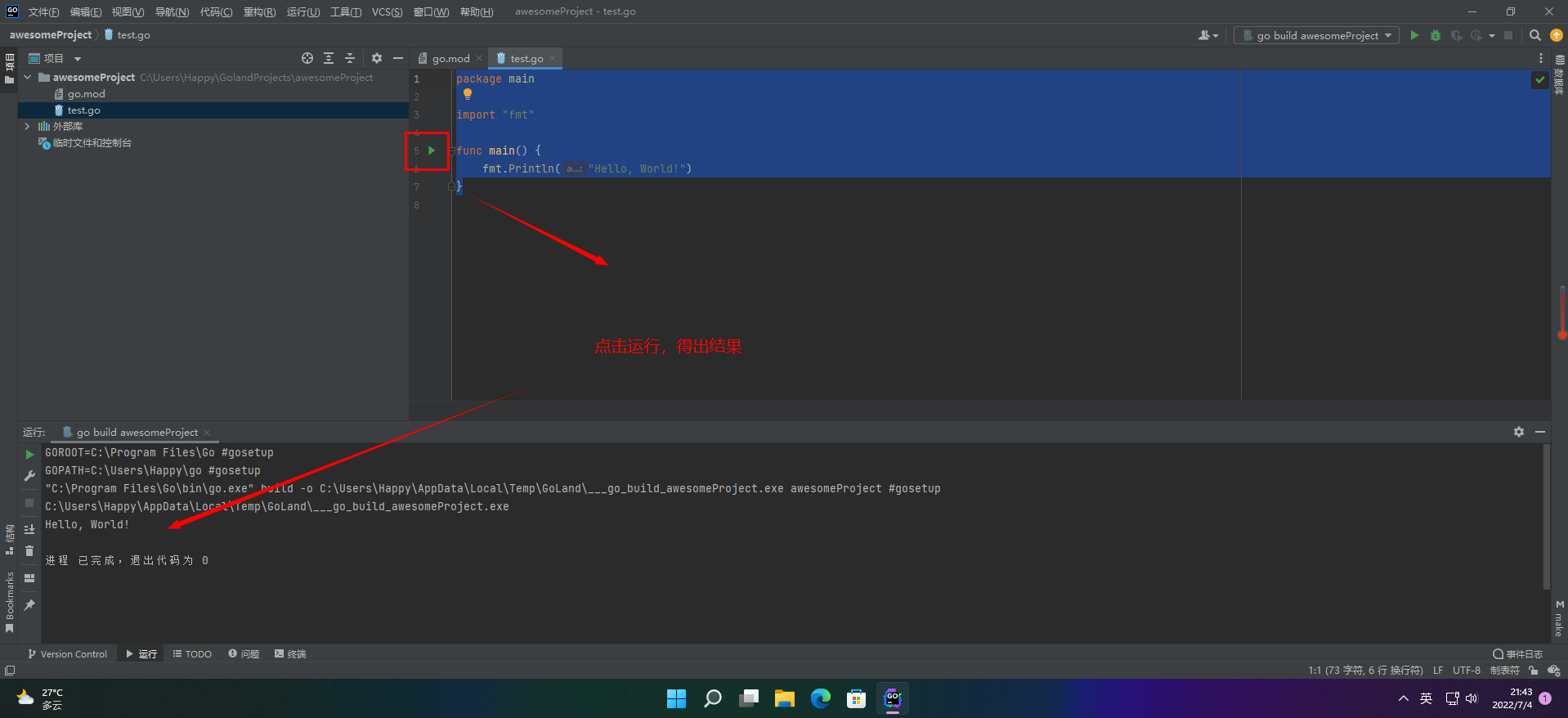

- 【愚公系列】2022年7月 Go教学课程 003-IDE的安装和基本使用

- Redis has three methods for checking big keys, which are necessary for optimization

- ACM Multimedia 2022 | 视觉语言预训练模型中社会偏见的反事实衡量和消除

- 置信区间的画法

- KDD2022 | 什么特征进行交互才是有效的?

猜你喜欢

Redis has three methods for checking big keys, which are necessary for optimization

【Acwing】第58场周赛 题解

bizchart+slider实现分组柱状图

Common open source codeless testing tools

A large number of virtual anchors in station B were collectively forced to refund: revenue evaporated, but they still owe station B; Jobs was posthumously awarded the U.S. presidential medal of freedo

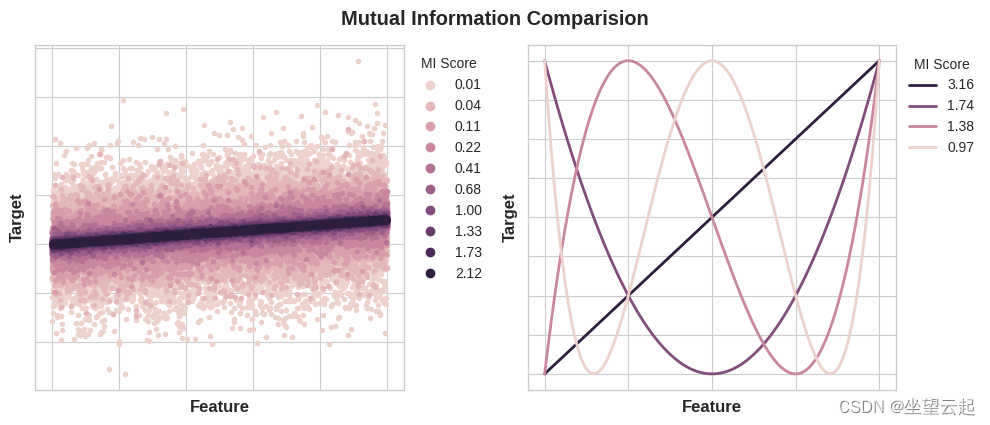

Machine learning notes mutual information

How to transfer to software testing, one of the high paying jobs in the Internet? (software testing learning roadmap attached)

【愚公系列】2022年7月 Go教学课程 003-IDE的安装和基本使用

Use blocconsumer to build responsive components and monitor status at the same time

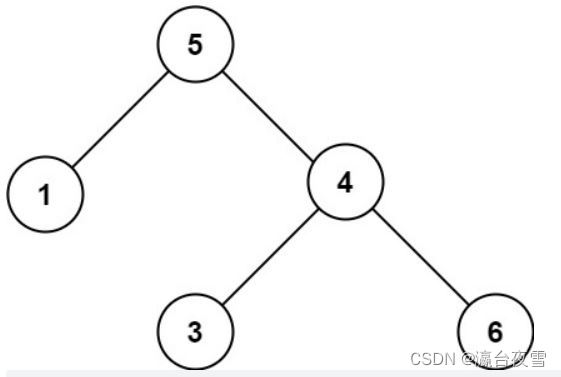

力扣98:验证二叉搜索树

随机推荐

WebGIS框架---kalrry

php短视频源码,点赞时会有大拇指动画飘起

GTEST from ignorance to proficiency (3) what are test suite and test case

i. Mx6ull driver development | 24 - platform based driver model lights LED

PostgreSQL服务端编程聚合和分组

【米哈游2023届秋招】开启【校招唯一专属内推码EYTUC】

保证接口数据安全的10种方案

QT - plot other problems

283. 移动零-c与语言辅助数组法

Visual task scheduling & drag and drop | scalph data integration based on Apache seatunnel

【C语言进阶篇】数组&&指针&&数组笔试题

Common shortcut keys for hbuilder x

Force buckle 3_ 383. Ransom letter

Convolutional neural network model -- lenet network structure and code implementation

AscendEX 上线 Walken (WLKN) - 一款卓越领先的“Walk-to-Earn”游戏

Xiangjiang Kunpeng joined the shengteng Wanli partnership program and continued to write a new chapter of cooperation with Huawei

MongoDB中的索引操作总结

PostgreSQLSQL高级技巧透视表

30余家机构联合发起数字藏品行业倡议,未来会如何前进?

Why do you have to be familiar with industry and enterprise business when doing Bi development?