
Play with gRPC - a deep dive into concepts and principles

2022-07-04 22:08:00 【InfoQ】

- 《Play with gRPC: gRPC communication in practice with Go》

- 《Play with gRPC: communication between different programming languages》

- 《A guide to the similarities and differences between the HTTP and RPC protocols》

- 《Starting from 1: extending the RPC capabilities of a Go back-end business system》

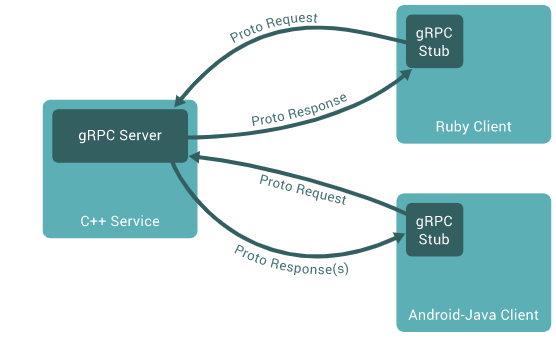

1 Basic architecture of gRPC

- Service: the service being provided

- Client: the gRPC client

- gRPC Server: the server-side gRPC interface

- gRPC Stub: the client-side gRPC interface

- Proto Request / Proto Response(s): the intermediate files (code / protocol)

2 Protocol Buffers

2.1 What is Protocol Buffers?

- Support for multiple programming languages

- Serialized data is small

- Fast deserialization

- Serialization and deserialization code are automatically generated

2.2 How are Protocol Buffers and gRPC related?

2.3 Basic Protocol Buffers syntax

Message structures are defined in a .proto file:

    message Person {
      string name = 1;
      int32 id = 2;
      bool has_ponycopter = 3;
    }
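To make the "serialized data is small" point concrete, here is a minimal Python sketch that hand-encodes the Person message above in the Protocol Buffers wire format (varint tags, varint values, and length-delimited strings). The helpers `encode_varint` and `encode_person` are hypothetical names for illustration, and the sketch assumes proto3 semantics, where fields holding their default value are omitted (the source does not show a `syntax` line). Real code would use the classes generated by protoc instead.

```python
def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative values")
    out = bytearray()
    while True:
        byte = value & 0x7F
        value >>= 7
        if value:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def encode_person(name: str, id_: int, has_ponycopter: bool) -> bytes:
    """Hand-encode Person {name = 1; id = 2; has_ponycopter = 3}."""
    out = bytearray()
    if name:  # assumption: proto3 omits fields that hold their default value
        data = name.encode("utf-8")
        out += encode_varint((1 << 3) | 2)  # field 1, wire type 2 (length-delimited)
        out += encode_varint(len(data))
        out += data
    if id_:
        out += encode_varint((2 << 3) | 0)  # field 2, wire type 0 (varint)
        out += encode_varint(id_)
    if has_ponycopter:
        out += encode_varint((3 << 3) | 0)  # field 3, wire type 0 (varint)
        out += encode_varint(1)
    return bytes(out)


payload = encode_person("Ann", 150, True)
print(payload.hex(" "))  # 0a 03 41 6e 6e 10 96 01 18 01
print(len(payload))      # 10
```

The whole message fits in 10 bytes because field names never appear on the wire; only the field numbers and values do.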

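Once the data structures are specified, the protoc compiler generates data access classes in the chosen language, with accessors for each field such as name() and set_name().

    // The greeter service definition.
    service Greeter {
      // Sends a greeting
      rpc SayHello (HelloRequest) returns (HelloReply) {}
    }

    // The request message containing the user's name.
    message HelloRequest {
      string name = 1;
    }

    // The response message containing the greetings
    message HelloReply {
      string message = 1;
    }

With the gRPC plugin, protoc also generates client and server code from a service definition like this one.

3 The four gRPC service method types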

3.1 Unary RPC

rpc SayHello(HelloRequest) returns (HelloResponse);

3.2 Server streaming RPC

rpc LotsOfReplies(HelloRequest) returns (stream HelloResponse);

3.3 Client streaming RPC

rpc LotsOfGreetings(stream HelloRequest) returns (HelloResponse);

3.4 Bidirectional streaming RPC

rpc BidiHello(stream HelloRequest) returns (stream HelloResponse);
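The four method types differ only in whether the request, the response, or both are streams. The following Python sketch models those call shapes with plain functions and generators. It does not use the gRPC library; the handler names mirror the rpc names above, and the greeting text and the number of replies are made up for illustration.

```python
from typing import Iterable, Iterator


def say_hello(request: str) -> str:
    """Unary: one request in, one response out."""
    return f"Hello, {request}"


def lots_of_replies(request: str) -> Iterator[str]:
    """Server streaming: one request in, a stream of responses out."""
    for i in range(3):
        yield f"Hello #{i}, {request}"


def lots_of_greetings(requests: Iterable[str]) -> str:
    """Client streaming: a stream of requests in, one response out."""
    return "Hello, " + " and ".join(requests)


def bidi_hello(requests: Iterable[str]) -> Iterator[str]:
    """Bidirectional streaming: both sides are streams.

    In this sketch the server simply answers each request as it arrives.
    """
    for request in requests:
        yield f"Hello, {request}"


print(say_hello("Ann"))
print(list(lots_of_replies("Ann")))
print(lots_of_greetings(iter(["Ann", "Bob"])))
print(list(bidi_hello(iter(["Ann", "Bob"]))))
```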

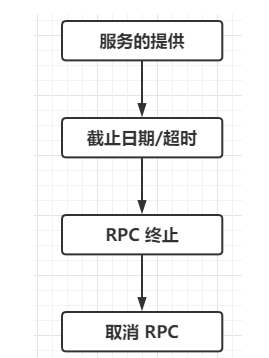

4 The gRPC life cycle

4.1 Service methods

4.2 Deadlines / Timeouts

An RPC that does not complete before its deadline is terminated with a DEADLINE_EXCEEDED error.

4.3 RPC termination

4.4 Cancelling an RPC

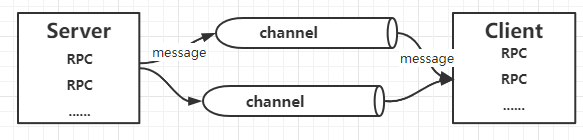

5 How gRPC communicates

5.1 HTTP/2

- The HTTP/2 specification was published in May 2015. It aims to solve some of the scalability problems of its predecessor and improves on the design of HTTP/1.1 in many ways, most importantly by providing a semantic mapping over connections.

- Establishing an HTTP connection is expensive. You must establish a TCP connection, secure it with TLS, and exchange headers and settings. HTTP/1.1 simplifies this by treating connections as long-lived, reusable objects: connections are kept idle so that new requests to the same destination can be sent over an existing idle connection. Although connection reuse alleviates the problem, a connection can only handle one request at a time; requests and connections are coupled 1:1. If a large message is being sent, a new request must either wait for it to complete (resulting in head-of-line blocking) or, more often, pay the price of starting another connection.

- HTTP/2 extends the concept of persistent connections further by providing a semantic layer above the connection: the stream. A stream can be thought of as a series of semantically connected messages, called frames. A stream may be short-lived, for example a unary stream requesting a user's status (in HTTP/1.1 this might be equivalent to GET /users/1234/status). Increasingly often, streams are long-lived: the receiver can establish a long-lived stream and receive user status messages continuously in real time, rather than making separate requests to the /users/1234/status endpoint. The main advantage of streams is connection concurrency, that is, the ability to interleave messages on a single connection.
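As a rough illustration of interleaving, the sketch below splits two messages into frames tagged with a stream ID, sends them round-robin over one simulated connection, and reassembles them per stream on the receiving side. The frame size, stream IDs, and function names are assumptions chosen for illustration and do not reflect the real HTTP/2 frame layout.

```python
from collections import defaultdict
from itertools import zip_longest


def to_frames(stream_id: int, message: bytes, frame_size: int = 4):
    """Split one message into (stream_id, chunk) frames."""
    return [(stream_id, message[i:i + frame_size])
            for i in range(0, len(message), frame_size)]


def interleave(*streams):
    """Round-robin frames from several streams onto one connection."""
    wire = []
    for group in zip_longest(*streams):
        wire.extend(frame for frame in group if frame is not None)
    return wire


def demultiplex(wire):
    """Reassemble each stream's message from the interleaved frames."""
    messages = defaultdict(bytes)
    for stream_id, chunk in wire:
        messages[stream_id] += chunk
    return dict(messages)


# A large message on stream 1 and a small one on stream 3 share the connection.
wire = interleave(to_frames(1, b"large message body"), to_frames(3, b"ping"))
print(wire)
print(demultiplex(wire))
```

The small message on stream 3 goes out as the second frame on the wire, so it does not have to wait for the large message on stream 1 to finish.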

- Flow control

- However, concurrent streams harbor some subtle pitfalls. Consider two streams, A and B, on the same connection. Stream A receives a large amount of data, far more than it can process in a short time. Eventually the receiver's buffer fills up, and the TCP receive window limits the sender. This is fairly standard behavior for TCP, but it is bad for the streams, because neither stream will receive any more data. Ideally, stream B should be unaffected by stream A's slow processing.

- HTTP/2 solves this problem by providing a flow control mechanism as part of the stream specification. Flow control limits the amount of outstanding data per stream (and per connection). It operates as a credit system: the receiver allocates a "budget" and the sender "spends" it. More specifically, the receiver allocates some buffer size (the budget) and the sender fills (spends) the buffer. The receiver uses WINDOW_UPDATE frames to notify the sender of additional available buffer. When the receiver stops advertising additional buffer, the sender must stop sending messages once the buffer (its budget) is exhausted.

- With flow control, concurrent streams are guaranteed independent buffer allocation. Combined with round-robin request sending, streams of all sizes, processing speeds, and durations can be multiplexed on a single connection without having to worry about cross-stream problems.
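The credit system described above can be sketched in a few lines of Python. This is a simplified model under stated assumptions: it tracks only per-stream budgets (the connection-level window is left out), the class name `StreamWindow` is hypothetical, and the 8-byte initial window is artificially small so the effect is visible.

```python
class StreamWindow:
    """Sender-side view of one stream's flow-control budget."""

    def __init__(self, initial_window: int):
        self.available = initial_window  # bytes the sender may still send

    def send(self, data: bytes) -> bytes:
        """Send as much of data as the budget allows; return the unsent rest."""
        n = min(len(data), self.available)
        self.available -= n  # spend the budget
        return data[n:]

    def window_update(self, increment: int) -> None:
        """The receiver advertised more buffer (models a WINDOW_UPDATE frame)."""
        self.available += increment


# Two streams on one connection, each with its own budget.
a = StreamWindow(initial_window=8)
b = StreamWindow(initial_window=8)

rest_a = a.send(b"x" * 20)       # stream A exhausts its budget
rest_b = b.send(b"hello")        # stream B is unaffected
print(len(rest_a), a.available)  # 12 0
print(len(rest_b), b.available)  # 0 3

a.window_update(8)               # the receiver has drained A's buffer
rest_a = a.send(rest_a)
print(len(rest_a), a.available)  # 4 0
```

Stream A stalls with 12 bytes unsent until the receiver grants more credit, while stream B sends its whole message immediately.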

- Smarter proxies

- The concurrency properties of HTTP/2 allow proxies to be more performant. For example, consider an HTTP/1.1 load balancer that accepts and forwards spiky traffic: when a spike occurs, the proxy either spins up more connections to handle the load or queues the requests. The former, opening new connections, is usually preferred (to a point); the cost of these new connections is paid not only in time spent waiting on system calls and sockets, but also in time spent underutilizing the connection while TCP slow start occurs.

- By contrast, consider an HTTP/2 proxy configured to multiplex 100 streams per connection. A spike of requests will still cause new connections to be started, but only 1/100 as many as for its HTTP/1.1 counterpart. More generally: if n HTTP/1.1 requests are sent to a proxy, n HTTP/1.1 requests must go out; each request is a single meaningful request/payload of data, and requests are 1:1 with connections. In contrast, n requests sent to an HTTP/2 proxy require n streams, but do not require n connections.
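The 1/100 figure is simple arithmetic, sketched below in Python. The function name `connections_needed` and the spike size of 1000 requests are hypothetical values chosen for illustration.

```python
import math


def connections_needed(requests: int, streams_per_connection: int) -> int:
    """Connections required to carry the given number of concurrent requests."""
    return math.ceil(requests / streams_per_connection)


spike = 1000  # hypothetical number of concurrent requests in a spike

# HTTP/1.1: one request per connection.
print(connections_needed(spike, 1))
# HTTP/2 proxy configured for 100 streams per connection.
print(connections_needed(spike, 100))
```

For this spike the HTTP/1.1 proxy needs 1000 connections and the HTTP/2 proxy needs 10.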

5.2 gRPC and HTTP/2

6 Summary

边栏推荐

- Enlightenment of maker thinking in Higher Education

- Shutter textfield example

- Exclusive interview of open source summer | new committer Xie Qijun of Apache iotdb community

- gtest从一无所知到熟练运用(1)gtest安装

- Nat. Commun.| 机器学习对可突变的治疗性抗体的亲和力和特异性进行共同优化

- 面试题 01.08. 零矩阵

- 大厂的广告系统升级,怎能少了大模型的身影

- 283. 移动零-c与语言辅助数组法

- Shutter WebView example

- i.MX6ULL驱动开发 | 24 - 基于platform平台驱动模型点亮LED

猜你喜欢

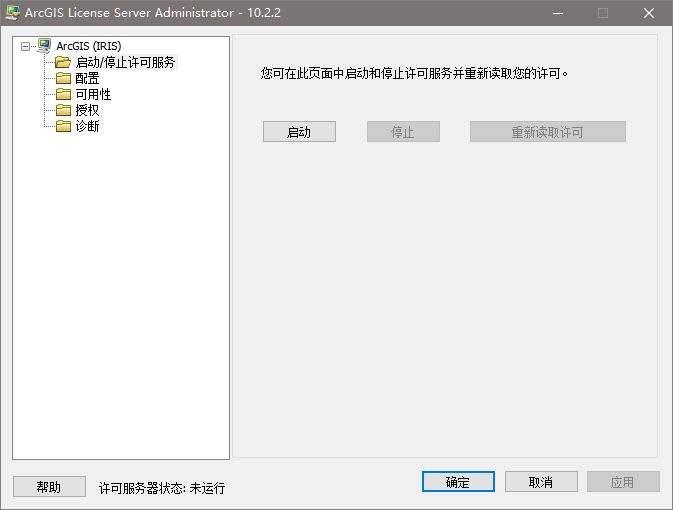

ArcGIS 10.2.2 | solution to the failure of ArcGIS license server to start

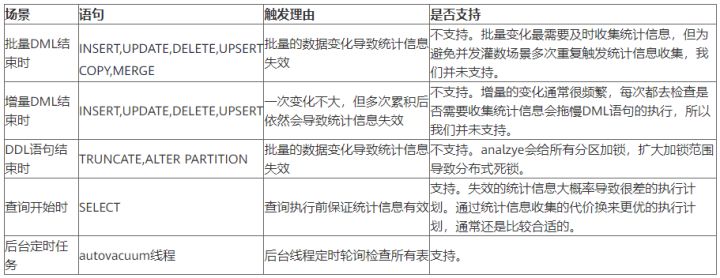

Master the use of auto analyze in data warehouse

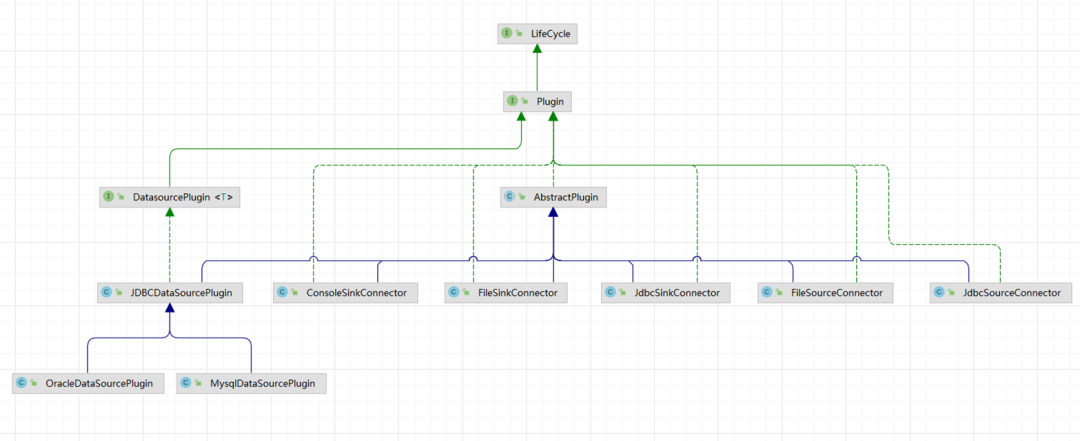

可视化任务编排&拖拉拽 | Scaleph 基于 Apache SeaTunnel的数据集成

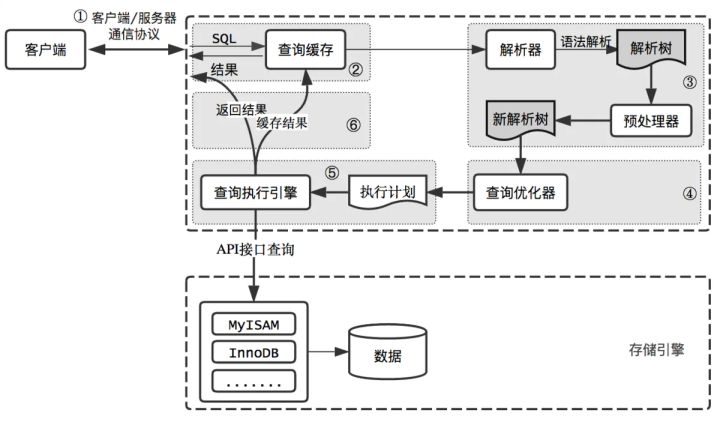

How is the entered query SQL statement executed?

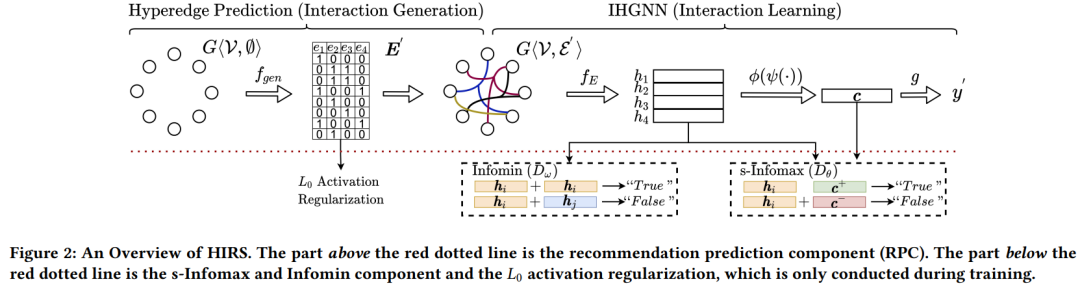

KDD2022 | 什么特征进行交互才是有效的?

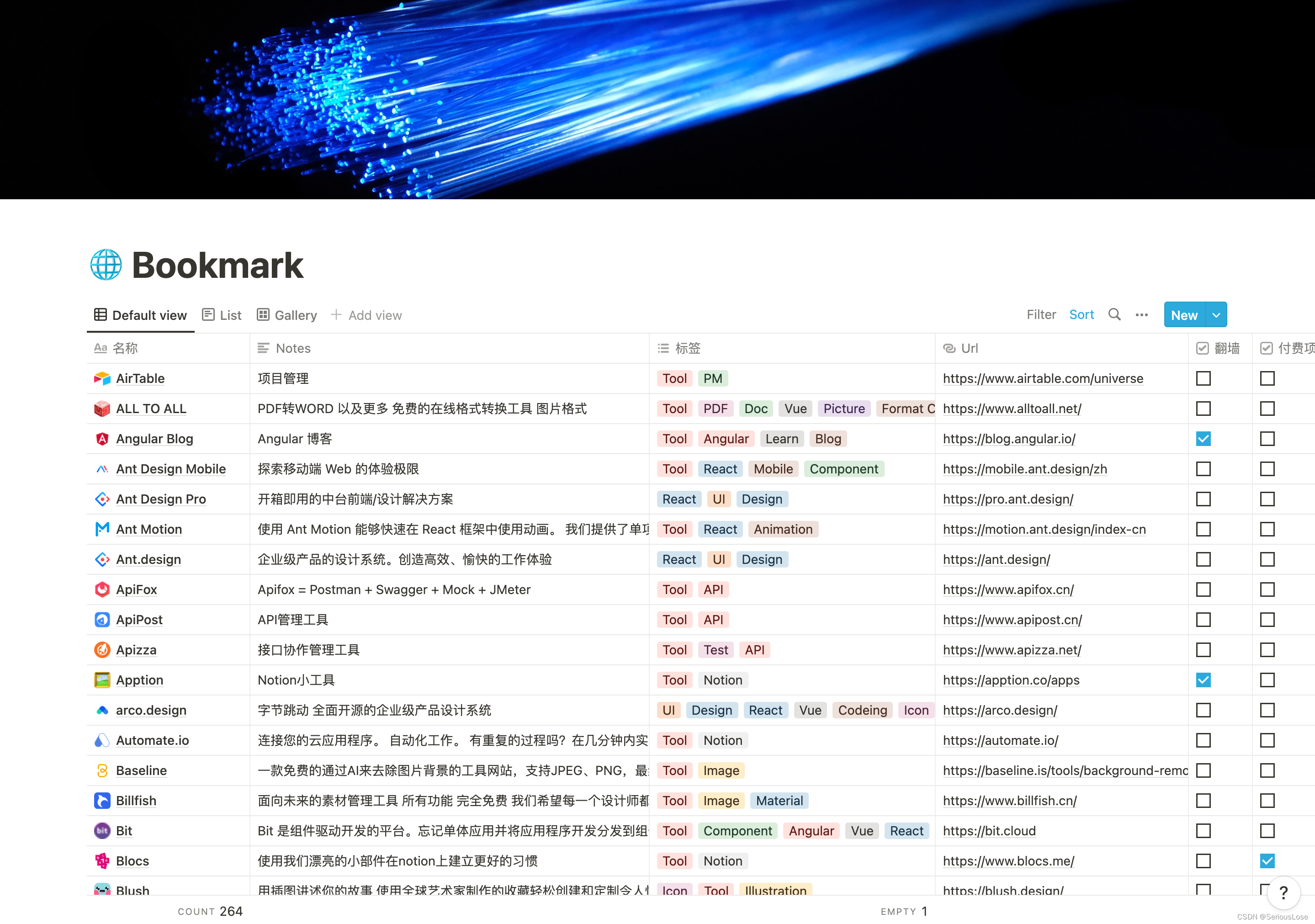

Bookmark

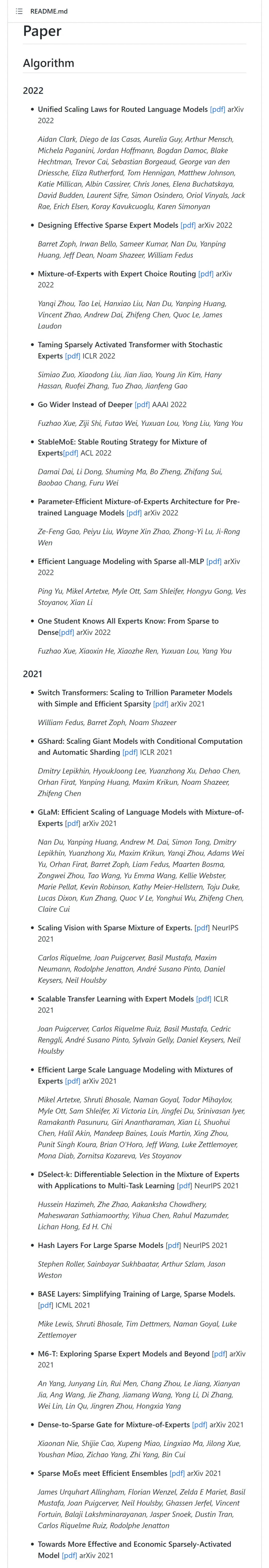

Sorting and sharing of selected papers, systems and applications related to the most comprehensive mixed expert (MOE) model in history

Redis has three methods for checking big keys, which are necessary for optimization

使用 BlocConsumer 同时构建响应式组件和监听状态

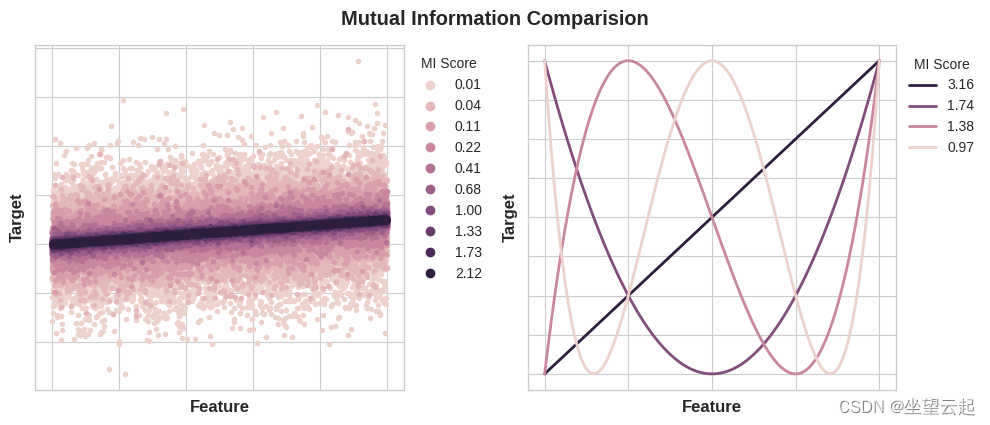

机器学习笔记 - 互信息Mutual Information

随机推荐

Exclusive interview of open source summer | new committer Xie Qijun of Apache iotdb community

Deveco device tool 3.0 release brings five capability upgrades to make intelligent device development more efficient

QT - double buffer plot

Super detailed tutorial, an introduction to istio Architecture Principle and practical application

复数在数论、几何中的用途 - 曹则贤

并列图的画法,多排多列

常用的开源无代码测试工具

Enlightenment of maker thinking in Higher Education

Delphi soap WebService server-side multiple soapdatamodules implement the same interface method, interface inheritance

HDU - 1078 fatmouse and cheese (memory search DP)

HUAWEI nova 10系列发布 华为应用市场筑牢应用安全防火墙

The drawing method of side-by-side diagram, multi row and multi column

Why do you have to be familiar with industry and enterprise business when doing Bi development?

Interviewer: what is XSS attack?

El tree combined with El table, tree adding and modifying operations

并发优化总结

开源之夏专访|Apache IoTDB社区 新晋Committer谢其骏

A large number of virtual anchors in station B were collectively forced to refund: revenue evaporated, but they still owe station B; Jobs was posthumously awarded the U.S. presidential medal of freedo

Visual task scheduling & drag and drop | scalph data integration based on Apache seatunnel

sqlserver对数据进行加密、解密