Spark in Practice 1: Setting Up a Spark Runtime Environment in Single-Node Local Mode

2022-07-03 12:39:00 【星哥玩云】

Preface:

Spark itself is written in Scala and runs on the JVM.

Java version: Java 6 or higher.

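1 Download Spark

http://spark.apache.org/downloads.html

You can pick whichever version you need. The one I chose is:

http://d3kbcqa49mib13.cloudfront.net/spark-1.1.0-bin-hadoop1.tgz

If you are the ambitious kind of programmer, you can also get the source code yourself: http://github.com/apache/spark.

Note: I am running everything on Linux. If you do not have a Linux machine, you can install Linux in a virtual machine.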

2 Extract the archive and enter the directory

tar -zvxf spark-1.1.0-bin-hadoop1.tgz

cd spark-1.1.0-bin-hadoop1/

3 Start the shell

./bin/spark-shell

A lot of log output scrolls by, and at the end you are left at the scala> prompt.

4 A first try

Run the following statements one after another:

val lines = sc.textFile("README.md")

lines.count()

lines.first()

val pythonLines = lines.filter(line => line.contains("Python"))

scala> lines.first()
res0: String = ## Interactive Python Shel
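To see what these operations compute without starting Spark, here is a minimal sketch that uses an ordinary Scala List in place of the RDD. The sample lines are made up for illustration and are not the real contents of README.md. One difference to keep in mind: on a real RDD, filter is a lazy transformation, while count and first are actions that trigger the computation.

```scala
// Stand-in for sc.textFile("README.md"): a plain in-memory list.
// These sample lines are hypothetical, not the real README contents.
val lines = List(
  "# Apache Spark",
  "Spark is a fast and general cluster computing system.",
  "## Interactive Scala Shell",
  "## Interactive Python Shell"
)

val total = lines.size        // plays the role of lines.count()
val firstLine = lines.head    // plays the role of lines.first()

// Same predicate as in the shell statements above
val pythonLines = lines.filter(line => line.contains("Python"))

println(total)
println(firstLine)
println(pythonLines.head)
```

The snippet can be pasted into any Scala REPL, including spark-shell itself, since it does not touch sc.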

--- Explanation: what is sc?

sc is the SparkContext object that the shell creates for you by default.

For example:

scala> sc
res13: org.apache.spark.SparkContext = org.apache.spark.SparkContext@...

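Here everything runs locally. As a preview of distributed execution: the driver program uses its SparkContext to coordinate executors running on worker nodes.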

5 A standalone program

We finish this section with one example.

To get it running, follow the steps below.

-------------- The directory structure is:

/usr/local/spark-1.1.0-bin-hadoop1/test$ find .
.
./src
./src/main
./src/main/scala
./src/main/scala/example.scala
./simple.sbt

The contents of simple.sbt are:

name := "Simple Project"

version := "1.0"

scalaVersion := "2.10.4"

libraryDependencies += "org.apache.spark" %% "spark-core" % "1.1.0"

The contents of example.scala are:

import org.apache.spark.SparkConf
import org.apache.spark.SparkContext
import org.apache.spark.SparkContext._

object example {
  def main(args: Array[String]): Unit = {
    // Master URL "local" and application name "My App"
    val conf = new SparkConf().setMaster("local").setAppName("My App")
    // Build the context from conf so the settings above are actually used
    val sc = new SparkContext(conf)
    sc.stop()
    //System.exit(0)
    //sys.exit()
    println("this system exit ok!!!")
  }
}

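local: a cluster URL that tells Spark how to connect to a cluster. Here it is local, which means Spark runs on the local machine in a single thread and does not need to connect to any cluster.

My App: the name of the application.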
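As a small sketch of how the master URL selects the run mode, the snippet below builds a few SparkConf objects and prints the value each one stores. It assumes the spark-core 1.1.0 dependency from simple.sbt is on the classpath. The local[2] and local[*] master strings are standard Spark values that the original example does not use, and the object name MasterUrlSketch is hypothetical.

```scala
import org.apache.spark.SparkConf

object MasterUrlSketch {
  // "local": one worker thread on this machine (what the example above uses)
  val single = new SparkConf().setMaster("local").setAppName("My App")
  // "local[2]": two worker threads on this machine
  val twoThreads = new SparkConf().setMaster("local[2]").setAppName("My App")
  // "local[*]": as many worker threads as there are logical cores
  val allCores = new SparkConf().setMaster("local[*]").setAppName("My App")

  def main(args: Array[String]): Unit = {
    // setMaster stores its argument under the "spark.master" key
    println(single.get("spark.master"))
    println(twoThreads.get("spark.master"))
    println(allCores.get("spark.master"))
  }
}
```

Nothing here starts a SparkContext, so it only shows how the setting is recorded, not how the job is scheduled.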

Then run: sbt package

Once that succeeds, run:

./bin/spark-submit --class "example" ./target/scala-2.10/simple-project_2.10-1.0.jar

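The result:

The program's println output, this system exit ok!!!, should appear among the log lines, which shows that it really did run successfully!

The end!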
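The example above only starts and stops a SparkContext. A minimal sketch of a standalone program that repeats the work from the shell session in section 4 might look like the following. It assumes the program is launched from the Spark directory so that the relative path README.md resolves; the object name LineCount, the helper containsPython, and the application name are hypothetical.

```scala
import org.apache.spark.SparkConf
import org.apache.spark.SparkContext

object LineCount {
  // Same predicate as in the shell session, pulled out as a plain function
  def containsPython(line: String): Boolean = line.contains("Python")

  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setMaster("local").setAppName("Line Count")
    val sc = new SparkContext(conf)
    try {
      // Path is relative to the working directory (an assumption)
      val lines = sc.textFile("README.md")
      val pythonLines = lines.filter(containsPython _)
      println("total lines: " + lines.count())
      println("lines containing Python: " + pythonLines.count())
    } finally {
      // Always release the context, even if reading the file fails
      sc.stop()
    }
  }
}
```

It would be packaged with sbt package and submitted with spark-submit in the same way as example, passing --class "LineCount" instead.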

边栏推荐

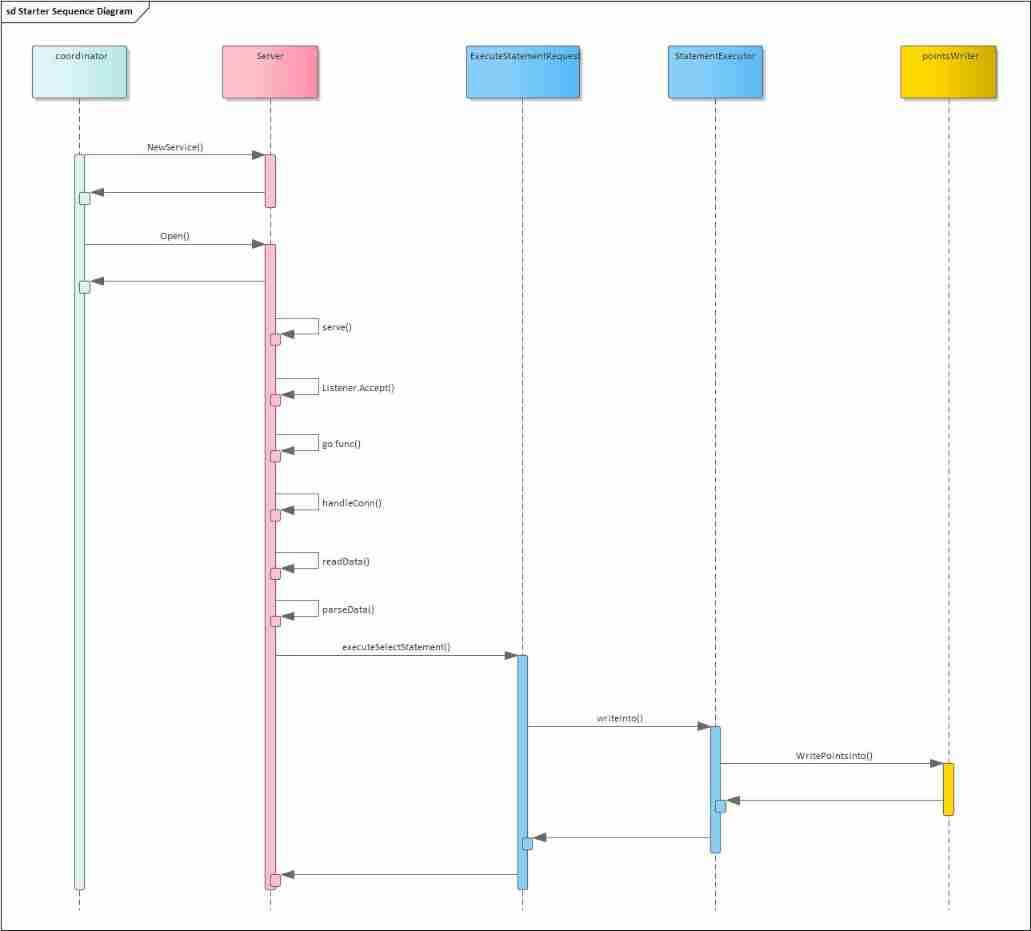

- 2022-02-14 analysis of the startup and request processing process of the incluxdb cluster Coordinator

- 106. 如何提高 SAP UI5 应用路由 url 的可读性

- Solve system has not been booted with SYSTEMd as init system (PID 1) Can‘t operate.

- [network counting] Chapter 3 data link layer (2) flow control and reliable transmission, stop waiting protocol, backward n frame protocol (GBN), selective retransmission protocol (SR)

- 2022-02-13 plan for next week

- Cadre de logback

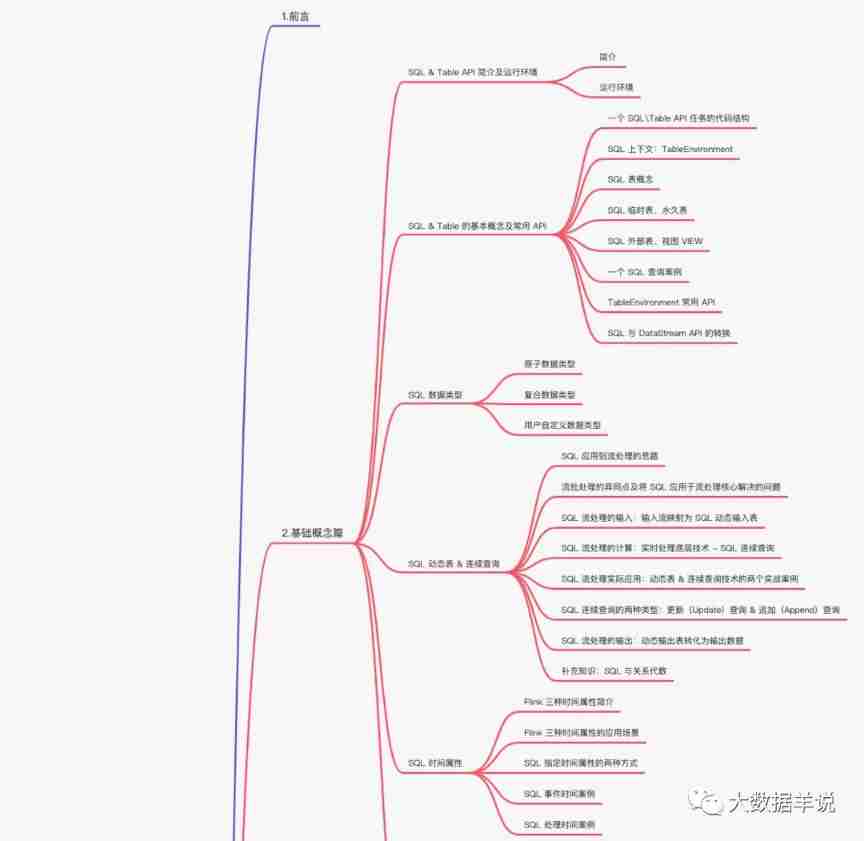

- 18W word Flink SQL God Road manual, born in the sky

- Task5: multi type emotion analysis

- 01 three solutions to knapsack problem (greedy dynamic programming branch gauge)

- [Database Principle and Application Tutorial (4th Edition | wechat Edition) Chen Zhibo] [Chapter IV exercises]

猜你喜欢

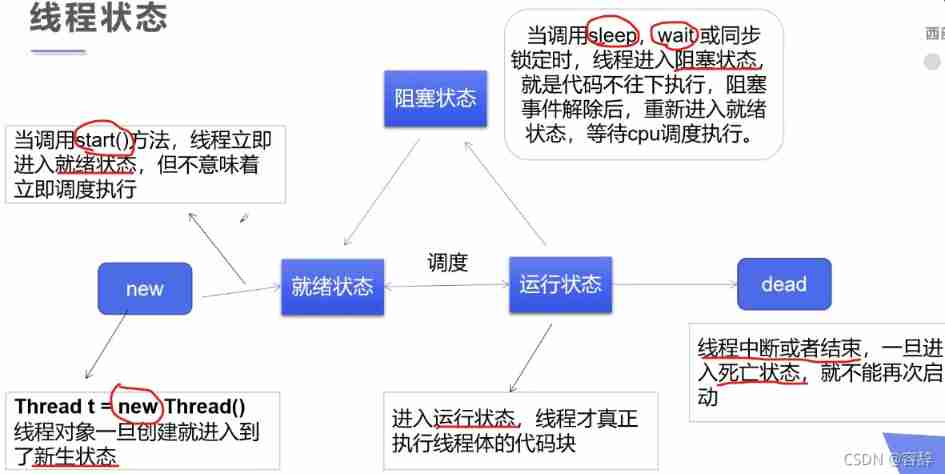

Detailed explanation of multithreading

Flink SQL knows why (13): is it difficult to join streams? (next)

mysql更新时条件为一查询

高效能人士的七个习惯

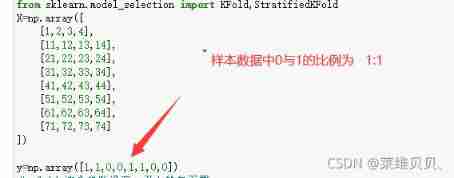

The difference between stratifiedkfold (classification) and kfold (regression)

18W word Flink SQL God Road manual, born in the sky

2022-02-14 analysis of the startup and request processing process of the incluxdb cluster Coordinator

Huffman coding experiment report

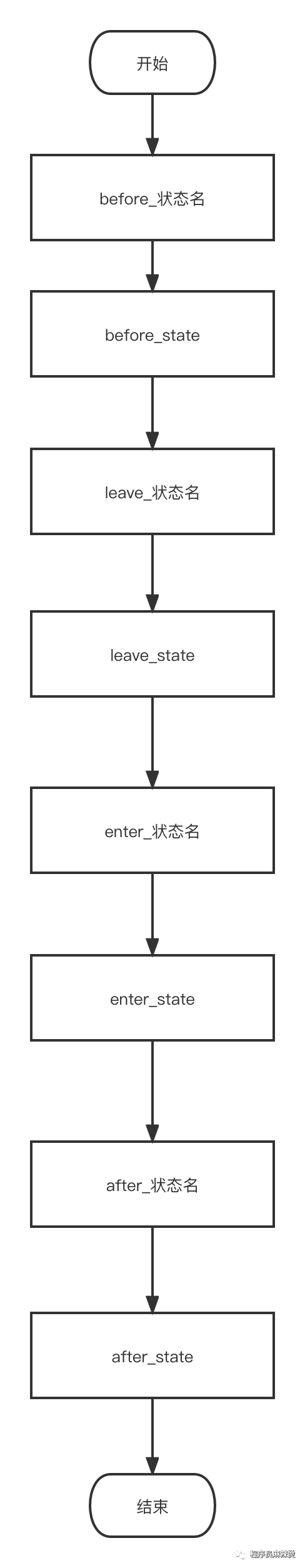

有限状态机FSM

My creation anniversary: the fifth anniversary

随机推荐

Sitescms v3.0.2 release, upgrade jfinal and other dependencies

Dojo tutorials:getting started with deferrals source code and example execution summary

Flink SQL knows why (XV): changed the source code and realized a batch lookup join (with source code attached)

剑指 Offer 11. 旋转数组的最小数字

MySQL

[combinatorics] permutation and combination (multiple set permutation | multiple set full permutation | multiple set incomplete permutation all elements have a repetition greater than the permutation

DQL basic query

C graphical tutorial (Fourth Edition)_ Chapter 13 entrustment: delegatesamplep245

[colab] [7 methods of using external data]

Sword finger offer 17 Print from 1 to the maximum n digits

【数据库原理及应用教程(第4版|微课版)陈志泊】【第六章习题】

Today's sleep quality record 77 points

【習題五】【數據庫原理】

The difference between session and cookie

【数据库原理及应用教程(第4版|微课版)陈志泊】【第七章习题】

2022-02-11 heap sorting and recursion

【Colab】【使用外部数据的7种方法】

人身变声器的原理

Mysql database basic operation - regular expression

CVPR 2022 image restoration paper