
[CV] Object Detection: Common Terms and the Derivation of the mAP Evaluation Metric

2022-07-06 09:47:00 【Demeanor 78】

Computer vision | Machine vision | Machine learning | Deep learning

Editor's recommendation

mAP (mean Average Precision) is an important, hand-designed evaluation metric that measures recognition accuracy in object detection. This article first introduces several common terms in object detection and then works its way up to today's main subject, mAP.

The focus here is mAP. For the parts that are covered only briefly, you can search our official account for more detailed articles (most topics are covered there; if something is missing, I will add it later).

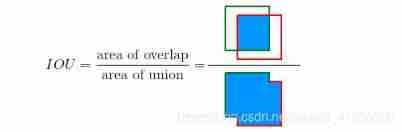

IoU (Intersection over Union)

IoU is the area of the intersection between a predicted box (Prediction) and the annotated ground-truth box (Ground truth), divided by the area of their union.
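A minimal Python sketch of the IoU computation, assuming boxes are given as `(x1, y1, x2, y2)` corner coordinates with continuous values. The function name `iou` is chosen for illustration; some evaluation code adds 1 to widths and heights when coordinates are integer pixel indices, and this sketch does not.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    # Corners of the intersection rectangle
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])
    # Clamp at 0 so that non-overlapping boxes give an empty intersection
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


print(iou((0, 0, 2, 2), (1, 1, 3, 3)))  # intersection 1, union 7 -> 1/7
```

Two 2×2 squares overlapping in a 1×1 corner share an area of 1 out of a union of 4 + 4 − 1 = 7, so their IoU is only about 0.14 even though they visibly touch.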

Confidence Score

The Confidence Score is the probability (Probability), predicted by a classifier (Classifier), that an anchor box (Anchor Box) contains an object. Setting a Confidence Threshold filters out (hides) predicted objects whose score is below the threshold.

The Confidence Score and IoU together determine whether a detection is a True Positive or a False Positive.

In object detection, a detection is considered a True Positive only when all three of the following conditions hold:

1. Confidence Score > Confidence Threshold;

2. The predicted category matches the category of the ground truth (Ground truth);

3. The IoU between the predicted bounding box (Bounding box) and the ground truth is greater than the set threshold.

A detection that passes condition 1 but fails condition 2 or condition 3 is considered a False Positive.

When several predictions correspond to the same ground truth, only the one with the highest confidence score is considered a True Positive; the rest are considered False Positives.
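The three conditions and the duplicate rule can be sketched for a single image as follows. The input format (`(score, class_id, box)` tuples for detections, `(class_id, box)` for ground truths) and the name `label_detections` are assumptions for illustration. It follows the usual greedy scheme: detections are processed from the highest score down, each is compared with the best-overlapping ground truth of its own class, and if that ground truth has already been taken the detection counts as a False Positive.

```python
def iou(a, b):
    """IoU of boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def label_detections(detections, ground_truths, iou_thresh=0.5, conf_thresh=0.5):
    """Return (score, is_tp) for every detection whose score exceeds conf_thresh."""
    # Condition 1: drop low-confidence detections, then rank the rest by score
    kept = sorted((d for d in detections if d[0] > conf_thresh),
                  key=lambda d: d[0], reverse=True)
    matched = [False] * len(ground_truths)
    results = []
    for score, cls, box in kept:
        best_iou, best_j = 0.0, -1
        for j, (gt_cls, gt_box) in enumerate(ground_truths):
            if gt_cls != cls:  # Condition 2: categories must match
                continue
            overlap = iou(box, gt_box)
            if overlap > best_iou:
                best_iou, best_j = overlap, j
        # Condition 3, plus "at most one True Positive per ground truth"
        if best_j >= 0 and best_iou > iou_thresh and not matched[best_j]:
            matched[best_j] = True
            results.append((score, True))
        else:
            results.append((score, False))
    return results


gts = [(0, (0, 0, 10, 10))]
dets = [(0.9, 0, (0, 0, 10, 10)),   # exact hit -> TP
        (0.8, 0, (1, 1, 10, 10)),   # IoU 0.81, but the GT is already matched -> FP
        (0.7, 1, (0, 0, 10, 10)),   # wrong class -> FP
        (0.3, 0, (0, 0, 10, 10))]   # below the confidence threshold -> dropped
print(label_detections(dets, gts))
# [(0.9, True), (0.8, False), (0.7, False)]
```

Processing in descending score order is what makes "the highest-confidence prediction wins" fall out naturally: by the time a lower-scoring duplicate is examined, its ground truth is already marked as matched.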

Positive & Negative Samples

For classification problems: positive samples are samples of the category we want to classify correctly. In principle, any non-positive sample can serve as a negative sample, but in practice negatives should be chosen with the real application scenario in mind.

For detection problems: a typical two-stage detection framework first generates a set of candidate boxes (anchor boxes) according to certain rules, selects some of them as positive samples and some as negative samples, and discards the rest. Selection strategies differ between frameworks, but most are based on IoU (anchors with an IoU of 0.5 or more are commonly treated as positives, and positives are usually far outnumbered by negatives). One-stage detection frameworks follow the same idea.
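A rough sketch of IoU-based anchor labelling. The function name and the thresholds `pos_thresh=0.5` / `neg_thresh=0.4` are illustrative assumptions: each framework picks its own values (the RPN in Faster R-CNN, for example, uses 0.7 and 0.3) and often adds extra rules, such as forcing the best-matching anchor of each ground truth to be positive, which are left out here.

```python
def iou(a, b):
    """IoU of boxes given as (x1, y1, x2, y2)."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def assign_anchors(anchors, gt_boxes, pos_thresh=0.5, neg_thresh=0.4):
    """Label each anchor 1 (positive), 0 (negative) or -1 (ignored) by its best IoU."""
    labels = []
    for anchor in anchors:
        best = max((iou(anchor, gt) for gt in gt_boxes), default=0.0)
        if best >= pos_thresh:
            labels.append(1)    # overlaps an object well enough: positive
        elif best < neg_thresh:
            labels.append(0)    # mostly background: negative
        else:
            labels.append(-1)   # ambiguous overlap: discarded from training
    return labels


gts = [(0, 0, 10, 10)]
anchors = [(0, 0, 10, 10), (0, 0, 10, 4.5), (20, 20, 30, 30)]
print(assign_anchors(anchors, gts))  # [1, -1, 0]
```

The gap between the two thresholds is what produces the "rest will be discarded" group: anchors that are neither clearly an object nor clearly background are simply not used.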

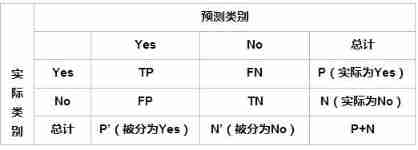

TP, FP, FN and TN (classification counts taken from the confusion matrix)

TP (True Positives): the number of predicted boxes whose IoU with a Ground truth (the "true value" of the data: the object's category and its real bounding box) is greater than the threshold (usually 0.5); each Ground Truth is counted only once;

FP (False Positives): the number of predicted boxes whose IoU with the Ground truth is less than or equal to the threshold, plus redundant detections of a Ground truth that has already been matched;

FN (False Negatives): the number of Ground truths that exist but were not detected.

In theory, everything else would be TN (True Negatives).

P.S. In general object detection there are no real negative examples to count (the background is not annotated as objects), so TN naturally does not exist.
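Once every detection has been labelled True or False Positive by the rules above, the counts follow directly. A small sketch, with the hypothetical name `count_tp_fp_fn`; note that there is no TN counter, for the reason just given.

```python
def count_tp_fp_fn(is_tp_flags, num_ground_truths):
    """Count TP, FP and FN from per-detection TP flags and the number of ground truths."""
    tp = sum(1 for flag in is_tp_flags if flag)
    fp = len(is_tp_flags) - tp        # every kept detection that is not a TP
    fn = num_ground_truths - tp       # ground truths that no detection matched
    # No TN: unannotated background offers no countable "negative objects"
    return tp, fp, fn


print(count_tp_fp_fn([True, False, True, False], num_ground_truths=3))  # (2, 2, 1)
```

Because each ground truth can be matched at most once, TP never exceeds the number of ground truths, so FN is never negative.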

Accuracy (ACC), Precision (P) and Recall (R)

Accuracy: the proportion of correctly predicted samples (positives predicted as positive plus negatives predicted as negative) among all samples. The formula is:

Accuracy = (TP+TN)/(TP+FP+TN+FN);

Precision: the proportion of true positives among all samples predicted as positive. The formula is:

Precision = TP/(TP+FP);

Recall: the proportion of true positives among all actual positive samples. The formula is:

Recall = TP/(TP+FN).
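The three formulas translate directly into code. In this sketch, returning 0.0 when a ratio is undefined (a zero denominator) is a convention chosen here rather than a standard, and `tn` defaults to 0 because, as noted above, TN is usually unavailable in detection.

```python
def precision_recall_accuracy(tp, fp, fn, tn=0):
    """Precision, Recall and Accuracy from confusion-matrix counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    return precision, recall, accuracy


p, r, acc = precision_recall_accuracy(tp=8, fp=2, fn=4, tn=6)
print(p, r, acc)  # 0.8 0.6666666666666666 0.7
```

With 8 hits, 2 false alarms and 4 misses, precision is 8/10 and recall is 8/12: the detector is usually right when it fires, but still misses a third of the objects.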

From the formulas above, we would ideally like both P (Precision) and R (Recall) to be as high as possible, but in some cases the two conflict: raising one lowers the other. Different scenarios place different emphasis on P and R, so the F1-Measure or the P-R curve can be used to weigh them together.

F-Measure (F-Score) evaluation metric

F-Measure:

$$F_\beta = \frac{(1+\beta^2)\,P\,R}{\beta^2 P + R}$$

where β is a parameter, P is precision and R is recall.

The F-Measure is the weighted harmonic mean of precision (Precision) and recall (Recall). It is a common evaluation standard in IR (information retrieval) and is often used to evaluate the quality of classification models.

When β = 1, it becomes the F1-Measure:

$$F_1 = \frac{2PR}{P + R}$$

Different scenarios weight precision and recall differently; adjusting the value of β makes the F-Measure fit our requirements.

Let us analyze how the parameter β (which ranges from 0 to +∞) affects the F-Measure.

When β = 0, F = P: it reduces to precision;

When β > 1, recall has the greater influence. In the limit β → ∞, the R in the denominator and the 1 in the numerator become negligible, so F = R and only recall matters;

When 0 < β < 1, precision has the greater influence. In the limit β → 0, the β²P in the denominator and the β² in the numerator become negligible, so F = P and only precision matters.
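A short sketch of the F-Measure formula that also lets you check the limiting cases numerically. The name `f_beta` is hypothetical, and returning 0.0 when the denominator vanishes (for example when P = R = 0) is a convention chosen here.

```python
def f_beta(precision, recall, beta=1.0):
    """Weighted harmonic mean of precision and recall (the F-Measure)."""
    b2 = beta ** 2
    denom = b2 * precision + recall
    if denom == 0:
        return 0.0  # undefined case, e.g. P = R = 0; 0.0 is a convention here
    return (1 + b2) * precision * recall / denom


print(f_beta(0.8, 0.4))              # F1 = 0.64 / 1.2, about 0.533
print(f_beta(0.8, 0.4, beta=0.0))    # about 0.8: reduces to precision
print(f_beta(0.8, 0.4, beta=100.0))  # about 0.4: dominated by recall
```

Note that F1 (about 0.533) sits closer to the smaller of the two values than their arithmetic mean (0.6) does; that is the harmonic mean penalizing an imbalance between P and R.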

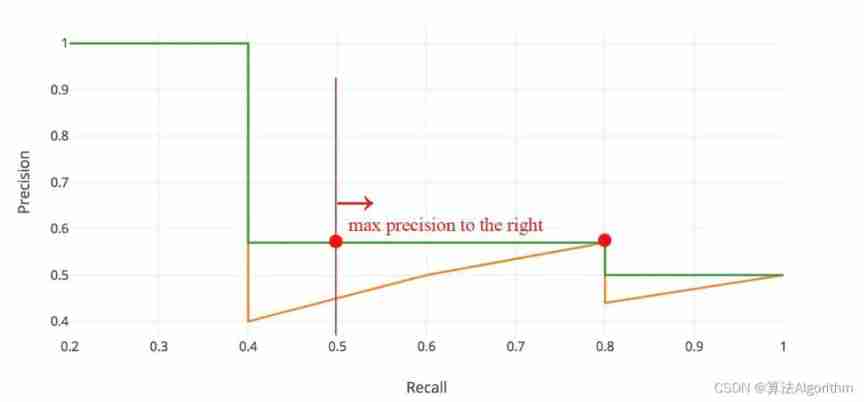

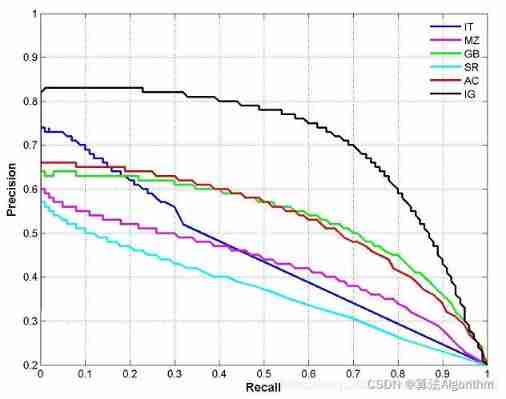

P-R curve

The vertical axis is Precision and the horizontal axis is Recall. A single pair of Precision and Recall values says something about a detector's quality, but it depends on the chosen threshold and makes models hard to compare, which is why the P-R curve is introduced.

By varying the confidence threshold we obtain many (Precision, Recall) pairs. Plotting Recall on the X axis and Precision on the Y axis gives the Precision-Recall curve, or P-R curve for short.
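Rather than re-running the evaluation once per threshold, a common shortcut is to sort the detections by score once and treat each prefix of the ranking as the result of setting the threshold just at that detection's score. The sketch below assumes `(score, is_tp)` pairs already pooled over the whole dataset for one class; the function name `pr_curve` is hypothetical and ties in score are not treated specially.

```python
def pr_curve(scored_flags, num_ground_truths):
    """Sweep the confidence threshold over ranked detections; return (recalls, precisions)."""
    # Lowering the threshold past each detection's score adds that detection to the kept set
    ranked = sorted(scored_flags, key=lambda x: x[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truths)
        precisions.append(tp / (tp + fp))
    return recalls, precisions


flags = [(0.9, True), (0.8, False), (0.7, True), (0.6, False)]
rec, prec = pr_curve(flags, num_ground_truths=3)
print(rec)   # recall: 1/3, 1/3, 2/3, 2/3
print(prec)  # precision: 1.0, 0.5, 2/3, 0.5
```

Recall can only grow as the threshold drops, while precision moves up and down; that zigzag is what the interpolation step of AP smooths out.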

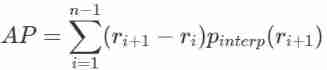

AP (Average Precision)

Under the evaluation standard used since 2010 (PASCAL VOC from VOC2010 on), averaging the Precision values over the recall levels of the Precision-Recall curve yields a single numerical metric, AP (Average Precision), which measures the trained model's detection ability for the category of interest.

Before computing AP, the P-R curve is first interpolated (interpolation) to smooth it and reduce the effect of its jitter.

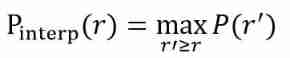

Given a recall value r, the interpolated precision P_interp is the largest Precision value at any recall r' ≥ r:

$$P_{\text{interp}}(r) = \max_{r' \ge r} P(r')$$
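Because the maximum is taken over all recalls at or to the right of r, the interpolation can be computed in a single right-to-left pass over precisions ordered by increasing recall. A sketch with the hypothetical name `interpolate_precision`:

```python
def interpolate_precision(precisions):
    """P_interp at each point = max precision at the same or higher recall.

    Input must be ordered by increasing recall.
    """
    interp = list(precisions)
    # Scan from the highest recall down, carrying the running maximum leftwards
    for i in range(len(interp) - 2, -1, -1):
        interp[i] = max(interp[i], interp[i + 1])
    return interp


print(interpolate_precision([1.0, 0.5, 2 / 3, 0.5]))
# [1.0, 0.666..., 0.666..., 0.5]
```

The result is monotonically non-increasing, which turns the jagged P-R curve into a staircase.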

The animated figure below shows the effect of interpolation:

Under the new standard, AP can also be defined as the area of the polygon enclosed by the interpolated precision-recall curve, the X axis and the Y axis. This is called the AUC (Area Under Curve):

$$AP = \sum_{i=1}^{n-1} (r_{i+1} - r_i)\, P_{\text{interp}}(r_{i+1})$$

where r1, r2, …, rn are, in ascending order, the recall values at the first point of each interpolated Precision segment.
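A sketch of this all-point AP computation, written in the style of the PASCAL VOC 2010+ evaluation code: sentinel points are added at recall 0 and recall 1, precision is interpolated, and the rectangles (r_{i+1} − r_i)·P_interp(r_{i+1}) are summed wherever recall changes. The function name and list-based input are assumptions; recalls must be non-decreasing, as produced by sweeping the confidence threshold.

```python
def average_precision(recalls, precisions):
    """All-point interpolated AP: area under the interpolated P-R curve."""
    # Sentinels: start at recall 0, end at recall 1 with precision 0
    mrec = [0.0] + list(recalls) + [1.0]
    mpre = [0.0] + list(precisions) + [0.0]
    # Interpolate: each precision becomes the max precision at the same or higher recall
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas (r_{i+1} - r_i) * P_interp(r_{i+1}) wherever recall changes
    ap = 0.0
    for i in range(1, len(mrec)):
        if mrec[i] != mrec[i - 1]:
            ap += (mrec[i] - mrec[i - 1]) * mpre[i]
    return ap


print(average_precision([1 / 3, 1 / 3, 2 / 3, 2 / 3], [1.0, 0.5, 2 / 3, 0.5]))
# 1/3 * 1.0 + 1/3 * 2/3 = 5/9, about 0.556
```

The last third of the recall axis contributes nothing here because the detector never reaches recall above 2/3, so missed objects pull AP down directly.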

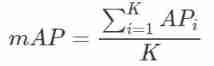

mAP (Mean Average Precision)

In multi-class object detection, a P-R curve can be drawn for each category. The mean of the AP over all categories (i.e., the sum of all categories' AP divided by the number of categories) is the mAP, which measures the trained model's detection ability across all categories.

Suppose there are K categories (K > 1); then mAP is computed as:

$$mAP = \frac{1}{K} \sum_{i=1}^{K} AP_i$$
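Putting the pieces together, here is a sketch of mAP as the plain mean of per-class AP at a single IoU threshold. To run on its own it recomputes each class's AP with all-point interpolation from `(score, is_tp)` pairs; the dictionary input format, the function names and the choice to skip classes without ground-truth objects are all assumptions for illustration.

```python
def average_precision(scored_flags, num_ground_truths):
    """All-point interpolated AP for one class from (score, is_tp) pairs."""
    ranked = sorted(scored_flags, key=lambda x: x[0], reverse=True)
    tp = fp = 0
    mrec, mpre = [0.0], [0.0]          # sentinel at recall 0
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        mrec.append(tp / num_ground_truths)
        mpre.append(tp / (tp + fp))
    mrec.append(1.0)                   # sentinel at recall 1
    mpre.append(0.0)
    for i in range(len(mpre) - 2, -1, -1):   # interpolate precision
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i] - mrec[i - 1]) * mpre[i]
               for i in range(1, len(mrec)) if mrec[i] != mrec[i - 1])


def mean_average_precision(per_class):
    """per_class maps class name -> (list of (score, is_tp), number of ground truths)."""
    # Assumption: classes with no ground-truth objects are skipped
    aps = [average_precision(flags, n_gt)
           for flags, n_gt in per_class.values() if n_gt > 0]
    if not aps:
        raise ValueError("no class has ground-truth objects")
    return sum(aps) / len(aps)


per_class = {
    "cat": ([(0.9, True), (0.8, False), (0.7, True), (0.6, False)], 3),
    "dog": ([(0.95, True)], 1),
}
print(mean_average_precision(per_class))  # (5/9 + 1) / 2 = 7/9, about 0.778
```

Every class weighs the same in the mean regardless of how many objects it has, so a rare class with poor AP pulls mAP down just as much as a common one.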

Summary

AP and mAP are both hand-designed evaluation metrics, used as headline numbers by benchmarks such as PASCAL VOC and COCO (COCO reports a metric it simply calls AP, which is already averaged over all categories and over IoU thresholds from 0.50 to 0.95). Beginners need not dig into why they were designed this way: first understand what they are for, and the reasoning will become clearer as you go deeper.

—THE END—

Past highlights

It is suitable for beginners to download the route and materials of artificial intelligence ( Image & Text + video ) Introduction to machine learning series download Chinese University Courses 《 machine learning 》( Huang haiguang keynote speaker ) Print materials such as machine learning and in-depth learning notes 《 Statistical learning method 》 Code reproduction album

AI Basic download machine learning communication qq Group 955171419, Please scan the code to join wechat group :

边栏推荐

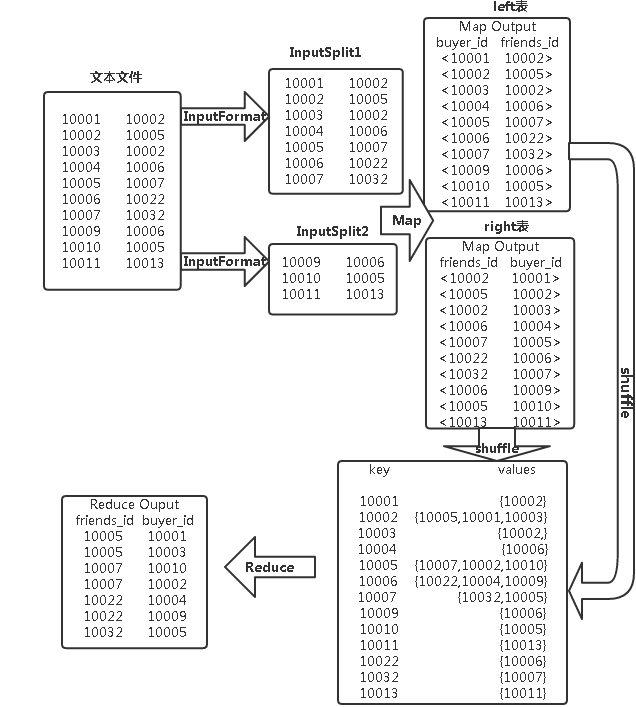

- MapReduce instance (VII): single table join

- 嵌入式開發中的防禦性C語言編程

- 068. Find the insertion position -- binary search

- Global and Chinese market for annunciator panels 2022-2028: Research Report on technology, participants, trends, market size and share

- Cap theory

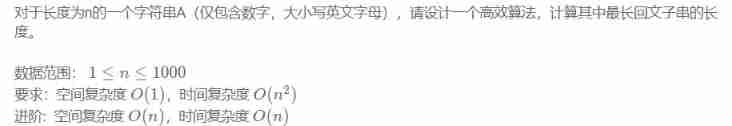

- 018. Valid palindromes

- [deep learning] semantic segmentation: thesis reading (neurips 2021) maskformer: per pixel classification is not all you need

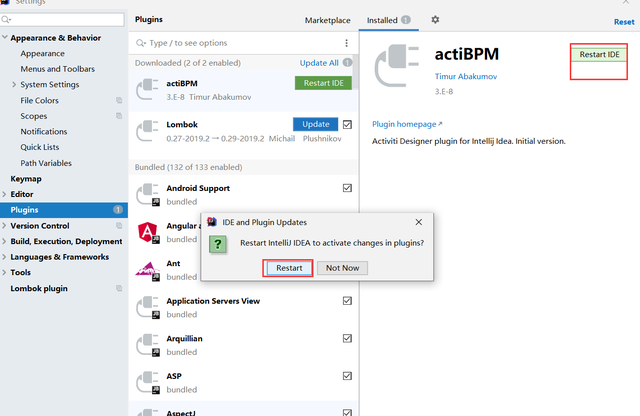

- 工作流—activiti7环境搭建

- 一大波開源小抄來襲

- 51单片机进修的一些感悟

猜你喜欢

一大波開源小抄來襲

Mapreduce实例(七):单表join

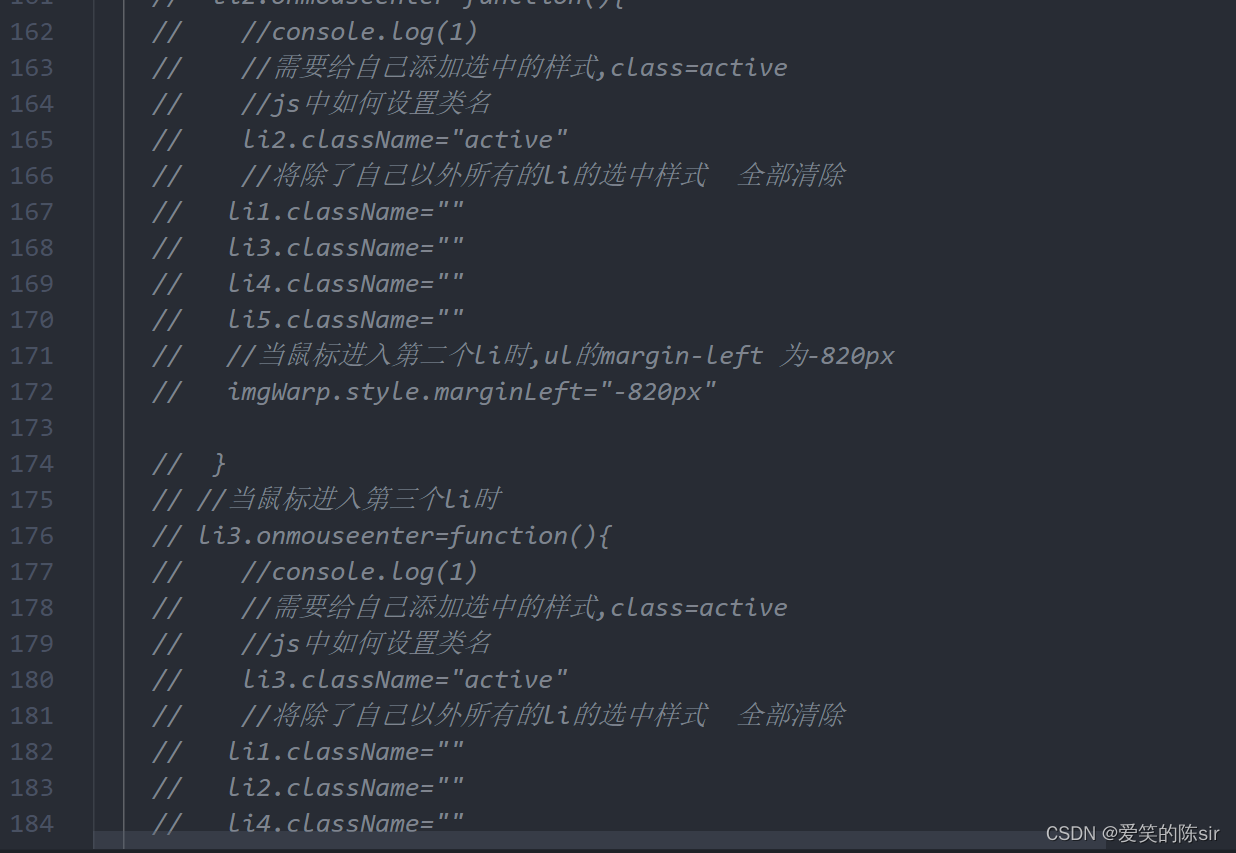

英雄联盟轮播图手动轮播

C#/. Net phase VI 01C Foundation_ 01: running environment, process of creating new C program, strict case sensitivity, meaning of class library

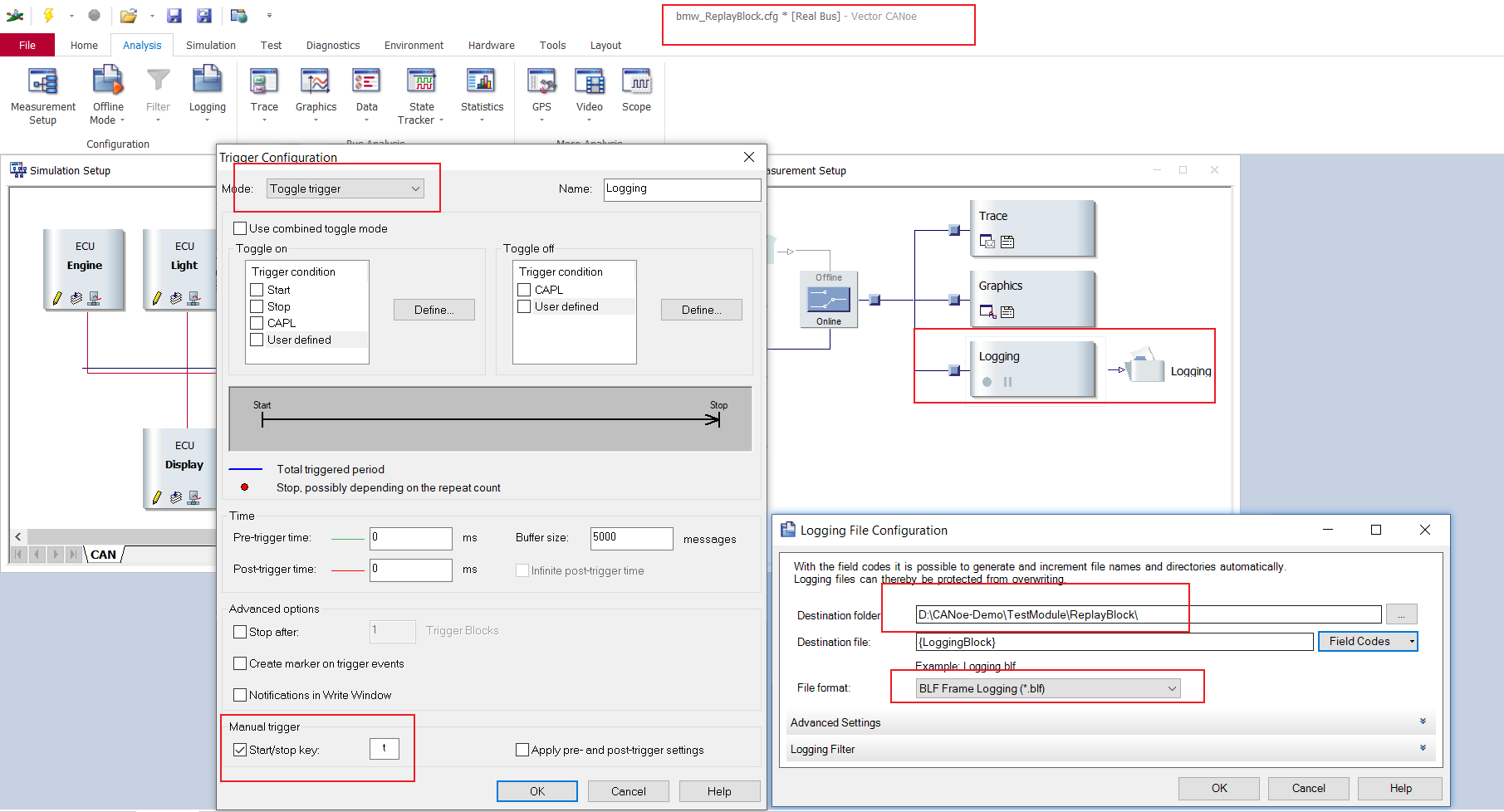

CANoe的数据回放(Replay Block),还是要结合CAPL脚本才能说的明白

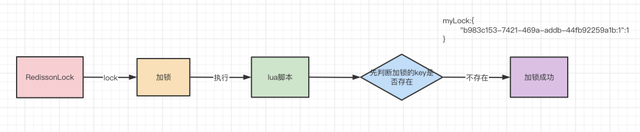

Redis distributed lock implementation redison 15 questions

Teach you how to write the first MCU program hand in hand

Nc17 longest palindrome substring

嵌入式开发比单片机要难很多?谈谈单片机和嵌入式开发设计经历

Workflow - activiti7 environment setup

随机推荐

Single chip microcomputer realizes modular programming: Thinking + example + system tutorial (the degree of practicality is appalling)

MapReduce working mechanism

max-flow min-cut

Redis distributed lock implementation redison 15 questions

Compilation of libwebsocket

单片机如何从上电复位执行到main函数?

CAP理论

Interview shock 62: what are the precautions for group by?

【深度學習】語義分割-源代碼匯總

[deep learning] semantic segmentation: paper reading: (CVPR 2022) mpvit (cnn+transformer): multipath visual transformer for dense prediction

CAPL 脚本打印函数 write ,writeEx ,writeLineEx ,writeToLog ,writeToLogEx ,writeDbgLevel 你真的分的清楚什么情况下用哪个吗?

Learning SCM is of great help to society

Elk project monitoring platform deployment + deployment of detailed use (II)

软件负载均衡和硬件负载均衡的选择

Function description of shell command parser

Research and implementation of hospital management inpatient system based on b/s (attached: source code paper SQL file)

Cap theory

Yarn organizational structure

五月刷题26——并查集

MapReduce instance (VI): inverted index