[PCL self-study: Feature 9] Globally Aligned Spatial Distribution (GASD) descriptor (continuously updated)

2022-07-05 04:31:00 【Stanford rabbit】

I. Introduction to the Globally Aligned Spatial Distribution (GASD) descriptor

This article describes the Globally Aligned Spatial Distribution (GASD) global descriptor, which is used for efficient object recognition and pose estimation.

GASD is based on estimating a reference frame for the whole point cloud that represents an object instance. This reference frame is used to align the cloud with the canonical coordinate system. A descriptor is then computed for the aligned point cloud from the spatial distribution of its 3D points. The descriptor can also be extended with the color distribution over the whole aligned point cloud. The global alignment transforms of matched point clouds are used to compute the pose of the object. For more information, see the original paper.

【Theoretical basis】

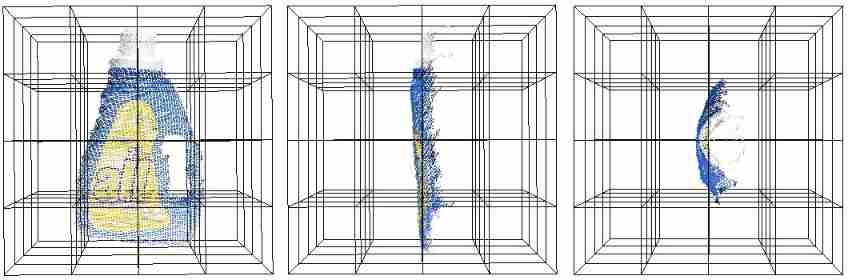

The Globally Aligned Spatial Distribution (GASD) global description method takes as input a 3D point cloud that represents a partial view of a given object. The first step is to estimate a reference frame for the point cloud (a coordinate system built from the point cloud itself). This frame is used to compute the transform that aligns the cloud with the canonical coordinate system, which makes the descriptor pose invariant. After alignment, a shape descriptor is computed from the spatial distribution of the 3D points. The color distribution of the point cloud can also be taken into account, which yields a shape and color descriptor with higher discriminative power. Finally, objects are recognized by matching the descriptors of query and training partial views **(as I understand it, the query descriptor is computed from the point cloud to be matched, and the training descriptor is computed from the known object point cloud)**. The pose of each recognized object is also computed from the alignment transforms of the matched query and training partial views.

The reference frame of the point cloud is estimated with Principal Component Analysis (PCA). Given a set of 3D points $P_i$ that represents a partial view of an object, with $i \in \{1, \dots, n\}$, the first step is to compute their centroid $\overline{P}$, which is the origin of the reference frame. The covariance matrix $C$ is then computed from $P_i$ and $\overline{P}$ as follows:

$$C = \frac{1}{n}\sum_{i=1}^{n}\left(P_i - \overline{P}\right)\left(P_i - \overline{P}\right)^T$$

Next, the eigenvalues $\lambda_j$ of $C$ and their corresponding eigenvectors $v_j$ are obtained. With the eigenvalues sorted in ascending order, the eigenvector $v_1$ associated with the smallest eigenvalue is used as the $z$ axis of the reference frame. If the angle between $v_1$ and the viewing direction lies within $[-90^{\circ}, 90^{\circ}]$, $v_1$ is negated. This ensures that the $z$ axis always points toward the viewer. The $x$ axis of the reference frame is the eigenvector $v_3$ associated with the largest eigenvalue. The $y$ axis is given by $v_2 = v_1 \times v_3$ (cross product).

From the reference frame, a transformation matrix $[R \mid t]$ can be computed that aligns it with the canonical coordinate system. All points $P_i$ of the partial view are then transformed with $[R \mid t]$, which is defined as follows:

$$\begin{bmatrix} R & t \\ 0 & 1 \end{bmatrix} = \begin{bmatrix} v_3^T & -v_3^T\,\overline{P} \\ v_2^T & -v_2^T\,\overline{P} \\ v_1^T & -v_1^T\,\overline{P} \\ 0 & 1 \end{bmatrix}$$

Once the point cloud has been aligned using the reference frame, a pose-invariant global shape descriptor can be computed from it. The axis-aligned bounding cube of the point cloud, centered on the origin, is divided into an $m_s \times m_s \times m_s$ regular grid. For each grid cell, a histogram with $l_s$ bins is computed. If $l_s = 1$, each histogram bin stores the number of points that belong to its corresponding cell of the 3D regular grid. If $l_s > 1$, a histogram of the normalized distances between each sample and the cloud centroid is computed for each cell.

The contribution of each sample to the histogram is normalized by the total number of points in the cloud. Optionally, interpolation can be used to distribute the value of each sample over adjacent cells, which avoids boundary effects that would otherwise cause abrupt changes in the histogram when a sample moves from one cell to another. The descriptor is then obtained by concatenating the computed histograms.
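The simplest case described above ($l_s = 1$, no interpolation) can be sketched as follows. This is a simplified illustration, not PCL's implementation: `shapeHistogram` is a hypothetical name, the cell ordering (x-major, then y, then z) is an assumption, and the points are expected to be already aligned to the canonical frame.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <cstddef>
#include <vector>

using Vec3 = std::array<double, 3>;

// Returns m_s^3 normalized point counts, with m_s = 2 * halfGridSize.
std::vector<float> shapeHistogram(const std::vector<Vec3>& aligned, std::size_t halfGridSize) {
    const std::size_t m = 2 * halfGridSize;
    std::vector<float> hist(m * m * m, 0.0f);
    if (aligned.empty()) return hist;

    // Half extent of the bounding cube centered on the origin.
    double half = 0.0;
    for (const Vec3& p : aligned)
        for (std::size_t k = 0; k < 3; ++k) half = std::max(half, std::fabs(p[k]));
    if (half == 0.0) half = 1.0;  // degenerate cloud: all points at the origin

    // Each sample contributes 1/n, so the histogram sums to 1.
    const float weight = 1.0f / static_cast<float>(aligned.size());
    for (const Vec3& p : aligned) {
        std::size_t idx[3];
        for (std::size_t k = 0; k < 3; ++k) {
            const double u = (p[k] + half) / (2.0 * half) * static_cast<double>(m);
            // Points on the upper face of the cube fall into the last cell.
            idx[k] = std::min<std::size_t>(static_cast<std::size_t>(u), m - 1);
        }
        hist[(idx[0] * m + idx[1]) * m + idx[2]] += weight;
    }
    return hist;
}
```

Without interpolation a point that sits near a cell boundary contributes to exactly one cell, which is the boundary effect that the optional interpolation is meant to smooth out.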

Color information can also be incorporated into the descriptor to increase its discriminative power. The color component of the descriptor is computed on an $m_c \times m_c \times m_c$ grid, similar to the one used for the shape component, but the color histogram of each cell is built from the colors of the points that belong to it. Point cloud colors are represented in HSV space, and the hue values are accumulated in histograms with $l_c$ bins. As in the shape component, the contributions are normalized by the number of points, and interpolation of the histogram samples can also be applied. The shape and color components are concatenated to form the final descriptor.
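A minimal sketch of the hue binning for a single point is shown below. It uses the standard RGB to HSV hue formula; `hueDegrees` and `hueBin` are hypothetical helpers, and PCL's own conversion may differ in details such as how grey points (undefined hue) are handled.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>

// Hue in degrees, in [0, 360), for RGB components given in [0, 1].
double hueDegrees(double r, double g, double b) {
    const double mx = std::max(r, std::max(g, b));
    const double mn = std::min(r, std::min(g, b));
    const double delta = mx - mn;
    if (delta == 0.0) return 0.0;  // grey: hue is undefined, 0 is used by convention here
    double h;
    if (mx == r)      h = std::fmod((g - b) / delta, 6.0);
    else if (mx == g) h = (b - r) / delta + 2.0;
    else              h = (r - g) / delta + 4.0;
    h *= 60.0;
    if (h < 0.0) h += 360.0;
    return h;
}

// Index of the hue bin in a histogram with `bins` bins (l_c in the text).
std::size_t hueBin(double hue, std::size_t bins) {
    const double scaled = hue * static_cast<double>(bins) / 360.0;
    return std::min<std::size_t>(static_cast<std::size_t>(scaled), bins - 1);
}
```

With $l_c = 12$ each bin covers 30 degrees of hue, so pure red, green and blue fall into bins 0, 4 and 8.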

Query and training descriptors are matched with a nearest-neighbor search (KD-tree). Then, for each matched object instance, a coarse pose is computed from the alignment transforms obtained from the reference frames of the respective query and training partial views. Given the transforms $[R_q \mid t_q]$ and $[R_t \mid t_t]$ of the query and training partial views, respectively, the coarse pose $[R_c \mid t_c]$ of the object is obtained.

The coarse pose $[R_c \mid t_c]$ is then refined with the Iterative Closest Point (ICP) algorithm. For details on ICP, see the related blog post.

II. Analysis of the Globally Aligned Spatial Distribution (GASD) sample code

GASD is implemented in PCL as part of the pcl_features library. The default parameter values for GASD with color information are: $m_s = 6$ (half size of 3), $l_s = 1$, $m_c = 4$ (half size of 2) and $l_c = 12$, with no histogram interpolation (INTERP_NONE). This results in an array of 984 float values, which is stored in the pcl::GASDSignature984 point type. The default parameter values for GASD with shape information only are: $m_s = 8$ (half size of 4), $l_s = 1$ and trilinear histogram interpolation (INTERP_TRILINEAR). This results in an array of 512 float values, which can be stored in the pcl::GASDSignature512 point type. Quadrilinear histogram interpolation (INTERP_QUADRILINEAR) can also be used.
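The two descriptor lengths are consistent with the grid and histogram sizes given above: each component contributes one histogram per grid cell. The following small check illustrates the arithmetic. The function names are hypothetical and the code only reproduces the counting, not anything PCL does internally.

```cpp
#include <cstddef>

// Number of cells in an m x m x m grid, with m = 2 * halfGridSize.
constexpr std::size_t gridCells(std::size_t halfGridSize) {
    return (2 * halfGridSize) * (2 * halfGridSize) * (2 * halfGridSize);
}

// Length of one descriptor component: one histogram of `histBins` bins per cell.
constexpr std::size_t componentLength(std::size_t halfGridSize, std::size_t histBins) {
    return gridCells(halfGridSize) * histBins;
}

// Shape + color defaults: 6^3 * 1 + 4^3 * 12 = 216 + 768 = 984.
constexpr std::size_t kShapeColorLength = componentLength(3, 1) + componentLength(2, 12);
// Shape-only defaults: 8^3 * 1 = 512.
constexpr std::size_t kShapeOnlyLength = componentLength(4, 1);

static_assert(kShapeColorLength == 984, "matches pcl::GASDSignature984");
static_assert(kShapeOnlyLength == 512, "matches pcl::GASDSignature512");
```

Changing a grid or histogram size therefore changes the descriptor length, so the output point type has to be chosen to match the parameters.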

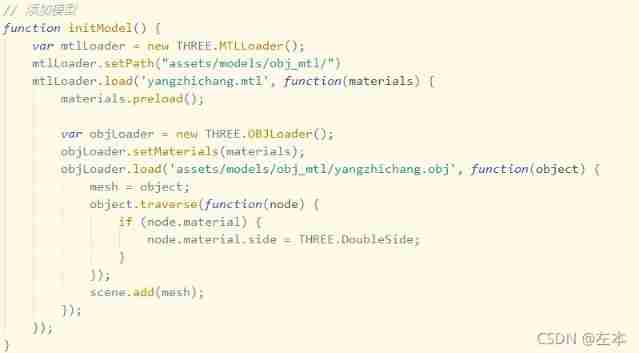

The following code snippet estimates the GASD shape + color descriptor for an input colored point cloud.

```cpp
#include <pcl/point_types.h>
#include <pcl/features/gasd.h>

int main ()
{
  pcl::PointCloud<pcl::PointXYZRGBA>::Ptr cloud (new pcl::PointCloud<pcl::PointXYZRGBA>);

  // Read a PCD file or build the point cloud here (omitted)

  // Create the GASD estimation class and pass the input cloud to it
  pcl::GASDColorEstimation<pcl::PointXYZRGBA, pcl::GASDSignature984> gasd;
  gasd.setInputCloud (cloud);

  // Output: a shape + color GASD descriptor made of 984 float values
  pcl::PointCloud<pcl::GASDSignature984> descriptor;

  // Compute the descriptor
  gasd.compute (descriptor);

  // Get the alignment transform (getTransform takes no arguments)
  Eigen::Matrix4f trans = gasd.getTransform ();

  // Unpack the histogram bins
  for (std::size_t i = 0; i < std::size_t (descriptor[0].descriptorSize ()); ++i)
  {
    descriptor[0].histogram[i];
  }
}
```

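The following code snippet estimates only the GASD shape descriptor for an input point cloud. Compared with the previous snippet, the estimator changes from pcl::GASDColorEstimation to pcl::GASDEstimation, the point type from pcl::PointXYZRGBA to pcl::PointXYZ, and the descriptor type from pcl::GASDSignature984 to pcl::GASDSignature512.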

```cpp
#include <pcl/point_types.h>
#include <pcl/features/gasd.h>

int main ()
{
  pcl::PointCloud<pcl::PointXYZ>::Ptr cloud (new pcl::PointCloud<pcl::PointXYZ>);

  // Read a PCD file or build the point cloud here (omitted)

  // Create the GASD estimation class and pass the input cloud to it
  pcl::GASDEstimation<pcl::PointXYZ, pcl::GASDSignature512> gasd;
  gasd.setInputCloud (cloud);

  // Output: a shape-only GASD descriptor made of 512 float values
  pcl::PointCloud<pcl::GASDSignature512> descriptor;

  // Compute the descriptor
  gasd.compute (descriptor);

  // Get the alignment transform (getTransform takes no arguments)
  Eigen::Matrix4f trans = gasd.getTransform ();

  // Unpack the histogram bins
  for (std::size_t i = 0; i < std::size_t (descriptor[0].descriptorSize ()); ++i)
  {
    descriptor[0].histogram[i];
  }
}
```

Summary: this article briefly introduced what GASD does and how it works, and analyzed the example code from the official website. This concludes the introduction of all PCL Feature modules.

【About the blogger】

Stanford rabbit, male, Master of Mechanical Engineering from Tianjin University. Since graduating I have worked on optical 3D imaging and point cloud processing. The 3D processing library I use at work is an internal company library and is not generally applicable, so I taught myself the open-source PCL library and the mathematics behind it. I would like to share this self-study process with everyone.

My knowledge is limited and I am not yet in a position to give guidance. If you have any questions, please leave a message in the comments section so that everyone can discuss them.

If any senior colleagues have job openings, you are welcome to send me a private message.

边栏推荐

- About the project error reporting solution of mpaas Pb access mode adapting to 64 bit CPU architecture

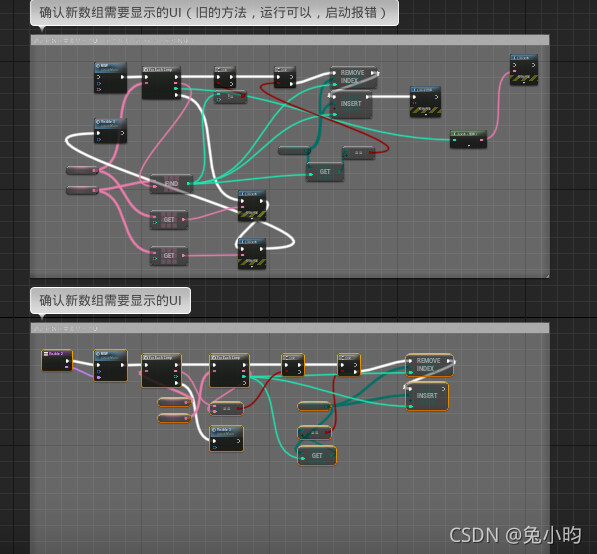

- [phantom engine UE] realize the animation production of mapping tripod deployment

- 网络安全-记录web漏洞修复

- 函数(易错)

- After the deployment of web resources, the navigator cannot obtain the solution of mediadevices instance (navigator.mediadevices is undefined)

- Managed service network: application architecture evolution in the cloud native Era

- Interview related high-frequency algorithm test site 3

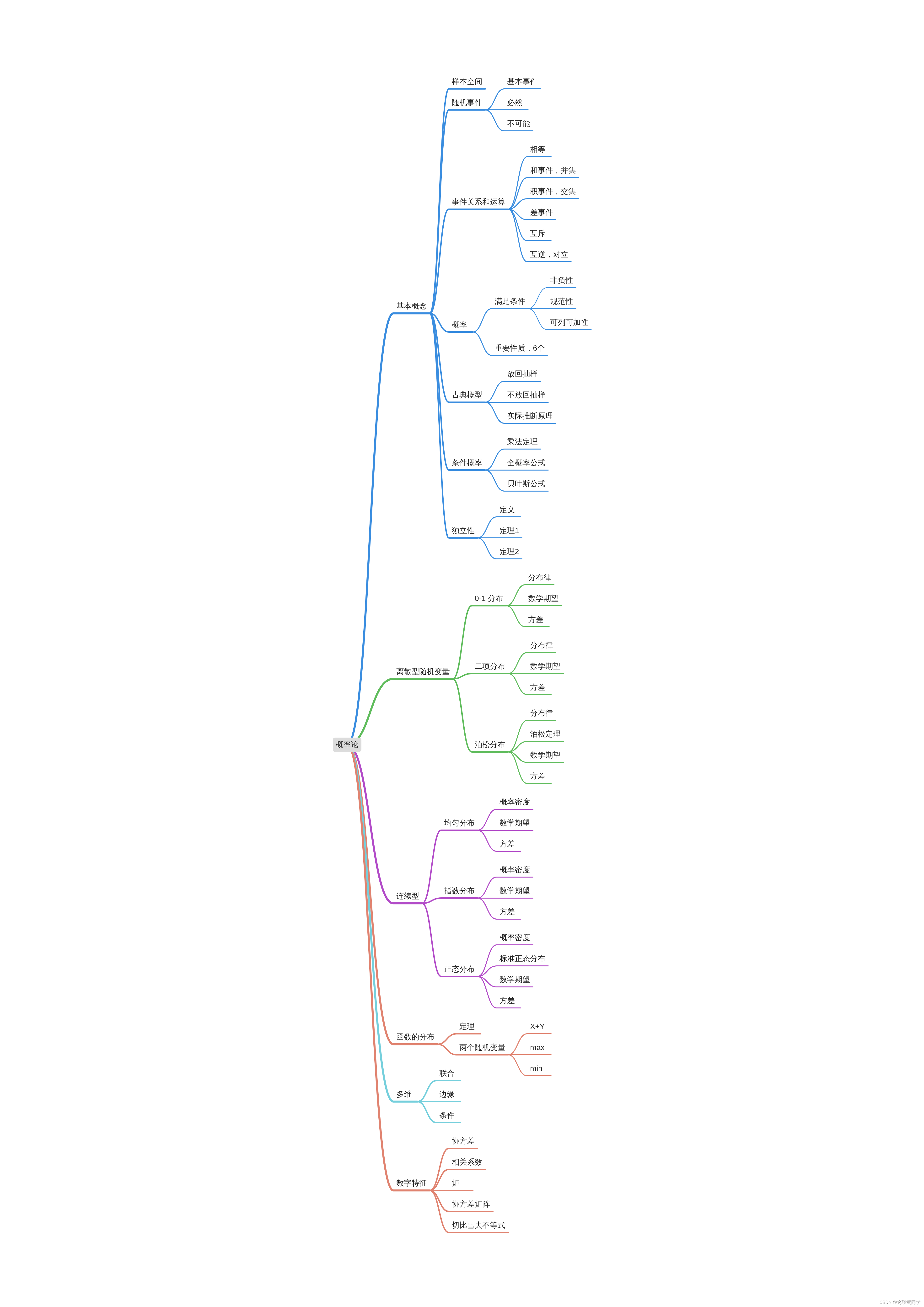

- Key review route of probability theory and mathematical statistics examination

- 防护电路中的元器件

- A solution to the problem that variables cannot change dynamically when debugging in keil5

猜你喜欢

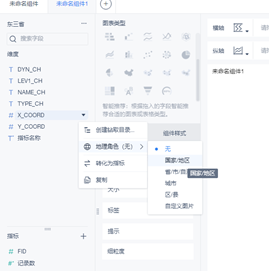

【FineBI】使用FineBI制作自定义地图过程

【虚幻引擎UE】运行和启动的区别,常见问题分析

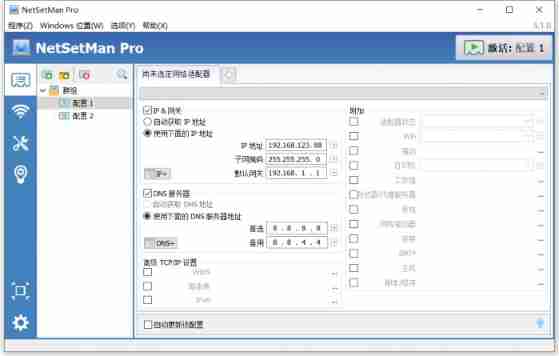

NetSetMan pro (IP fast switching tool) official Chinese version v5.1.0 | computer IP switching software download

Fuel consumption calculator

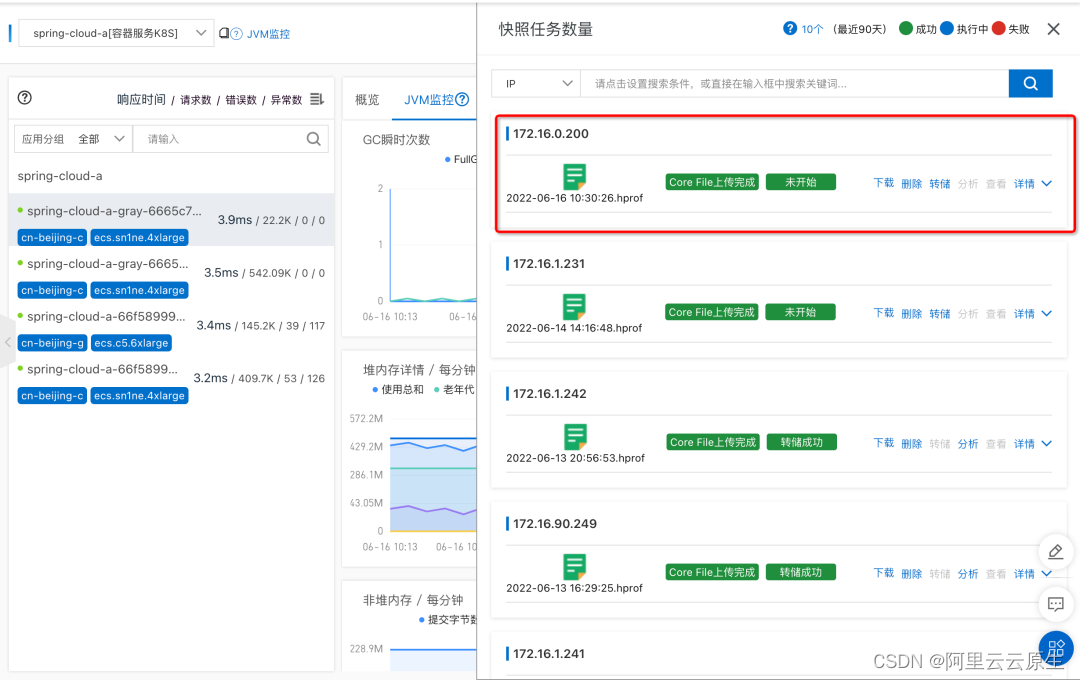

直播预告 | 容器服务 ACK 弹性预测最佳实践

网络安全-记录web漏洞修复

Threejs Internet of things, 3D visualization of farms (II)

Kwai, Tiktok, video number, battle content payment

概率论与数理统计考试重点复习路线

Is there a sudden failure on the line? How to make emergency diagnosis, troubleshooting and recovery

随机推荐

官宣!第三届云原生编程挑战赛正式启动!

windows下Redis-cluster集群搭建

[uniapp] system hot update implementation ideas

TPG x AIDU|AI领军人才招募计划进行中!

【FineBI】使用FineBI制作自定义地图过程

Neural networks and deep learning Chapter 6: Circular neural networks reading questions

Neural networks and deep learning Chapter 5: convolutional neural networks reading questions

指针函数(基础)

Sequence diagram of single sign on Certification Center

学习MVVM笔记(一)

How can CIOs use business analysis to build business value?

2022-2028 global and Chinese FPGA prototype system Market Research Report

Raki's notes on reading paper: code and named entity recognition in stackoverflow

Network security - record web vulnerability fixes

Construction d'un Cluster redis sous Windows

Web开发人员应该养成的10个编程习惯

Introduction to RT thread kernel (5) -- memory management

Stage experience

[Chongqing Guangdong education] 2408t Chinese contemporary literature reference test in autumn 2018 of the National Open University

Fonction (sujette aux erreurs)