
Grain Mall - Distributed Advanced P129~P339 (end)

2022-07-06 19:13:00 【Hu Yuqiao】

Grain Mall – Distributed Advanced P129~P339

Video address :https://www.bilibili.com/video/BV1np4y1C7Yf?p=339&vd_source=510ec700814c4e5dc4c4fda8f06c10e8

Code address :https://gitee.com/empirefree/gullimall/tree/%E5%B0%9A%E7%A1%85%E8%B0%B7–%E9%AB%98%E7%BA%A7%E7%AF%87/

Personal summary: I learned a lot of architecture design ideas, but I did not actually type out most of the code. Completing the whole project from the front end through the services to the back end takes a lot of time, so I suggest targeted learning. If you end up using one of these design ideas later, come back and review it.

【 Grain Mall – Distributed Foundation P1~P27】: https://blog.csdn.net/Empire_ing/article/details/118860147

【 Grain Mall – Distributed Foundation P28~P101】https://mp.weixin.qq.com/s/5kvXjLNyVn-GBhNMWyJdpg


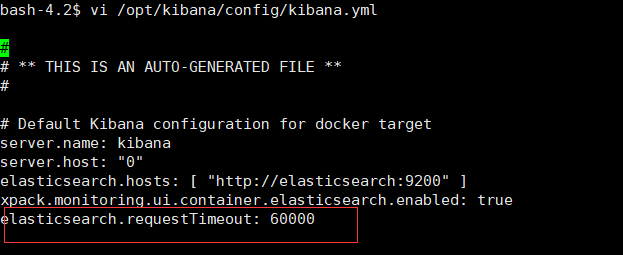

1. Kibana – Startup errors

Kibana suddenly started reporting a timeout error; raising the timeout to 60000 and restarting solved it. Then, while configuring Kibana to restart automatically, I found that Docker had IPv4 forwarding disabled; after enabling it, everything worked.

1.1.Kibana–Request Timeout after 30000ms

Advice online says to set it to 40000, but it still timed out for me; the server is probably too weak. Changing it to 60000 fixed it. (Another option is to give ES more memory, but my server does not have enough, and ES would not start with a larger setting. So I left ES alone and raised the Kibana timeout as a workaround.)

docker exec -it kibana /bin/bash

bash-4.2$ vi /opt/kibana/config/kibana.yml

elasticsearch.requestTimeout: 60000

Note: to check whether Kibana timed out, run docker logs kibana; the last log lines show whether Kibana started successfully and, if not, why it failed.

1.2 Docker–IPv4 forwarding is disabled. Networking will not work

Re-enable forwarding, then restart Docker and the services running in it.

# 1. Configure forwarding

vim /etc/sysctl.conf

net.ipv4.ip_forward=1

# 2. Restart the service , Make the configuration work

systemctl restart network

# 3. Check the result; if it prints "net.ipv4.ip_forward = 1", forwarding is enabled

sysctl net.ipv4.ip_forward

# 4. restart docker service

service docker restart

# 5. View the containers that have been run

docker ps -a

Note: if restarting network fails with "Unit network.service could not be found", do the following:

# 1. Install network-scripts

yum install network-scripts -y

# 2. Check the network status; once it is normal, restart network

systemctl status network

After these steps, Kibana loads normally.

2. Performance testing – JMeter

P129~P140 cover putting products on the shelf with ES integration and the local Nginx deployment. Because I use a public cloud server, I did not set up the domain name and only skimmed this part. That said, the product-listing logic and the walkthrough of nginx.conf go into a lot of depth and are worth studying. The JMeter stress test content starts here.

2.1. Basic introduction

- Performance considerations (items shown in bold in the original post are what developers should watch; the rest can be left to operations):

Database (indexes, schema design, splitting databases and tables, etc.), application (avoid frequent database operations and frequent third-party API calls, etc.), middleware (Tomcat, Nginx), network and operating system performance.

- Application types

- CPU-intensive: the application computes heavily

- IO-intensive: the application reads and writes frequently

# Free a port that is already in use when a service starts (Windows)

netstat -ano | findstr "8080"

taskkill /F /pid 3572

2.2. JMeter error – Address already in use

When JMeter hits local port 10000, it reports that the address is already in use. The fix is as follows:

1. Open the Registry Editor and go to Computer\HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\Tcpip\Parameters

2. Right-click Parameters, create a new DWORD (32-bit) value named MaxUserPort, enter 65534, base decimal

3. Right-click Parameters, create a new DWORD (32-bit) value named TCPTimedWaitDelay, enter 30, base decimal

4. Restart the computer.

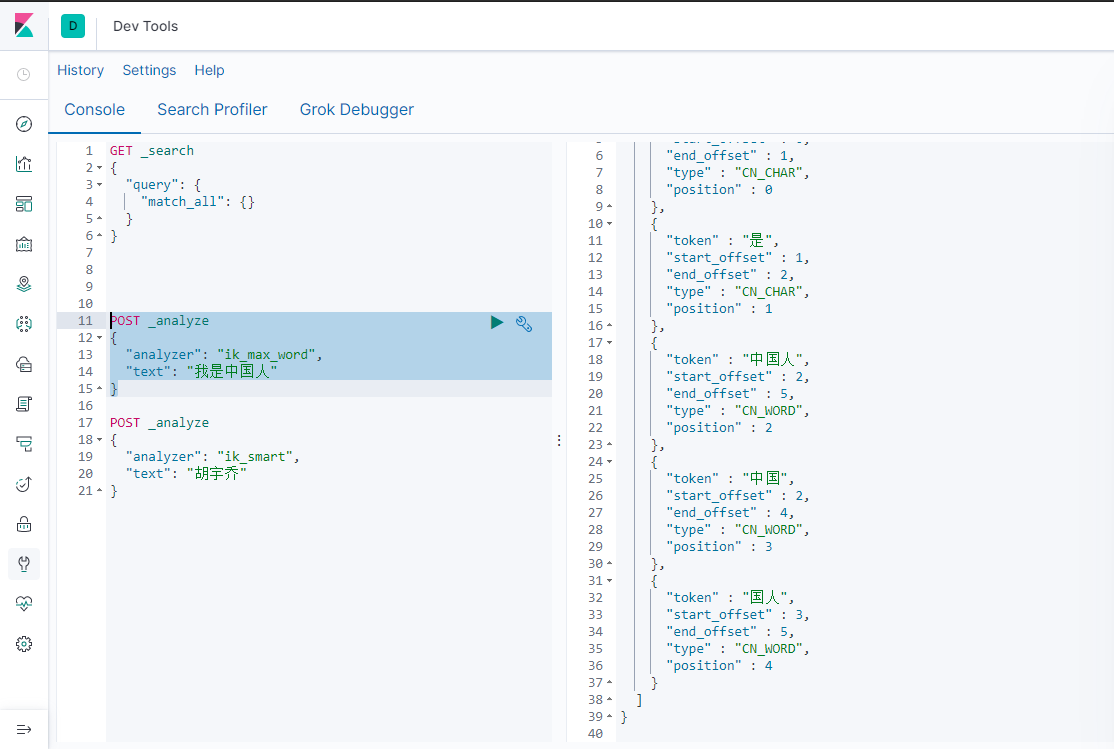

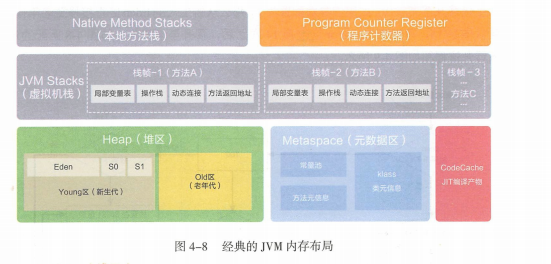

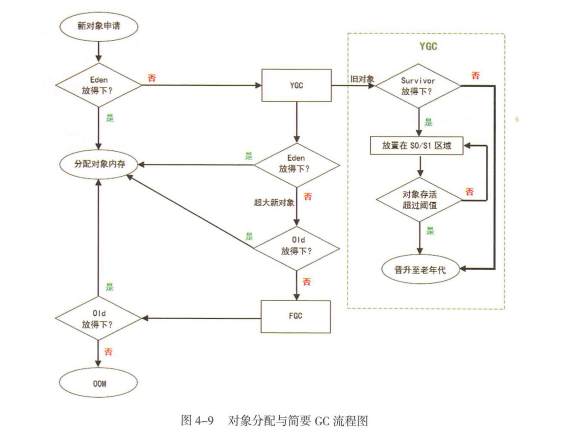

2.3 JVM garbage collection – a brief walkthrough

The JVM memory layout covers how Java threads request, allocate, and manage memory. The heap is shared by all threads, so thread-safety issues are unavoidable; in practice the JVM and concurrent programming are inseparable.

Here is a brief introduction to the JVM garbage collection process; I will organize the JVM details later.

1. A new object requests space in the Eden area of the heap. If it fits, it is placed there; if not, a minor GC runs (YGC, a collection of the young generation; garbage collection algorithms: MS, MC, CP, GC). Objects that survive the YGC are placed in survivor0 or survivor1. When survivor0 is full, another YGC moves its survivors to survivor1. Objects that survive many collections are promoted to the old generation (each time an object survives a YGC its age increases by 1; at the threshold of 15 it is promoted). If Eden still cannot hold the new object after the YGC, the object goes to the old generation; if the old generation cannot hold it either, an OOM error is reported.

2. When objects are moved out of survivor0/1, they go to the old generation if it has room. If it does not, a full GC (FGC) runs; if they still cannot be moved to the old generation after the FGC, an OOM error is reported.

3. After an FGC, the object is placed in the old generation if it fits; otherwise an OOM (out of memory) error is reported.

3. Caching – local, Redis

3.1 Local cache

A local cache is similar to a global variable: it is never garbage collected and lives until the process ends. The code is as follows:

List<Student> menu = Stream.of(

new Student(" that ", 721, true, Student.GradeType.THREE),

new Student(" Chen er ", 637, true, Student.GradeType.THREE),

new Student(" Zhang San ", 666, true, Student.GradeType.THREE),

new Student(" Li Si ", 531, true, Student.GradeType.TWO),

new Student(" Wang Wu ", 483, false, Student.GradeType.THREE),

new Student(" Zhao Liu ", 367, true, Student.GradeType.THREE),

new Student(" Sun Qi ", 1499, false, Student.GradeType.ONE)).collect(Collectors.toList());

@GetMapping("/testLocalCache")

public void testLocalCache(){

if (!CollectionUtils.isEmpty(menu)){

menu.remove(0);

System.out.println(JSON.toJSONString(menu));

} else {

menu.add(new Student(" Hu Yuqiao ", 1499, false, Student.GradeType.ONE));

System.out.println(JSON.toJSONString(menu));

}

}

3.2 Redis cache

1. Redis basic concepts:

Cache breakdown: many requests hit one Redis key just as that key expires, so they all fall through to the database. Solution: make the concurrent requests take a lock; the first one queries the database and writes the result to Redis, and the later ones, once they get the lock, read from Redis.

Cache avalanche: many requests hit Redis, but a large number of keys expire at the same time, so they all fall through to the database. Solution: add a random offset to each key's base expiration time.

Cache penetration: requests for a key that exists in neither Redis nor the database, similar to a malicious attack. Solution: cache a null value with a short expiration.
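
The avalanche and penetration mitigations above can be sketched without a real Redis. This is only an illustration under stated assumptions: `CacheSketch`, its in-memory map, and the TTL constants are all hypothetical, and the locking needed for cache breakdown is left out.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ThreadLocalRandom;
import java.util.function.Function;

/** In-memory stand-in for Redis, used only to illustrate the two mitigations. */
final class CacheSketch {
    static final long BASE_TTL_MS = 60_000;  // hypothetical base expiration
    static final long NULL_TTL_MS = 5_000;   // short expiration for a cached "not found"

    private static final class Entry {
        final String value;      // null means "known to be missing"
        final long expiresAt;
        Entry(String value, long expiresAt) { this.value = value; this.expiresAt = expiresAt; }
    }

    private final Map<String, Entry> store = new ConcurrentHashMap<>();
    int dbHits = 0;  // counts database lookups (not thread-safe; fine for a sketch)

    /** Avalanche: spread expirations by adding 0..maxJitterMs of random time. */
    static long withJitter(long baseTtlMs, long maxJitterMs) {
        return baseTtlMs + ThreadLocalRandom.current().nextLong(maxJitterMs + 1);
    }

    /** Penetration: cache a null marker so repeated misses stop reaching the database. */
    String get(String key, Function<String, String> db, long nowMs) {
        Entry e = store.get(key);
        if (e != null && e.expiresAt > nowMs) {
            return e.value;
        }
        dbHits++;
        String v = db.apply(key);
        long ttl = (v == null) ? NULL_TTL_MS : withJitter(BASE_TTL_MS, 10_000);
        store.put(key, new Entry(v, nowMs + ttl));
        return v;
    }
}
```

The caller passes the current time explicitly so the expiration behavior can be checked without sleeping.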

2. Redis serialization – TypeReference

Redis values are usually stored as <String, JSON string>, so after reading a value you have to convert it back to the original object. Besides converting to a specific class, you can also use TypeReference, which handles generic types.

```java

// 1. Redis value -> a specific class

Student student = JSONObject.parseObject(result, Student.class);

// 2. Redis value -> a generic Map or List

Map<String, List<Student>> resultMap = JSONObject.parseObject(result, new TypeReference<Map<String, List<Student>>>(){});

```
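
Why the trailing `{}` is needed: an anonymous subclass records its generic type argument in its class metadata, where it can be read back at runtime. The sketch below shows that trick with a hypothetical `TypeRef` class; it is not fastjson's actual implementation.

```java
import java.lang.reflect.ParameterizedType;
import java.lang.reflect.Type;

/** Hypothetical, simplified illustration of the idea behind a TypeReference class. */
abstract class TypeRef<T> {
    private final Type type;

    protected TypeRef() {
        // "new TypeRef<X>() {}" creates an anonymous subclass whose generic
        // superclass is TypeRef<X>, so X survives type erasure and can be read here.
        ParameterizedType superType = (ParameterizedType) getClass().getGenericSuperclass();
        this.type = superType.getActualTypeArguments()[0];
    }

    public Type type() {
        return type;
    }
}
```

A parser that receives such an object can inspect `type()` to learn that it should build, say, a `Map<String, List<Student>>` rather than a raw `Map`.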

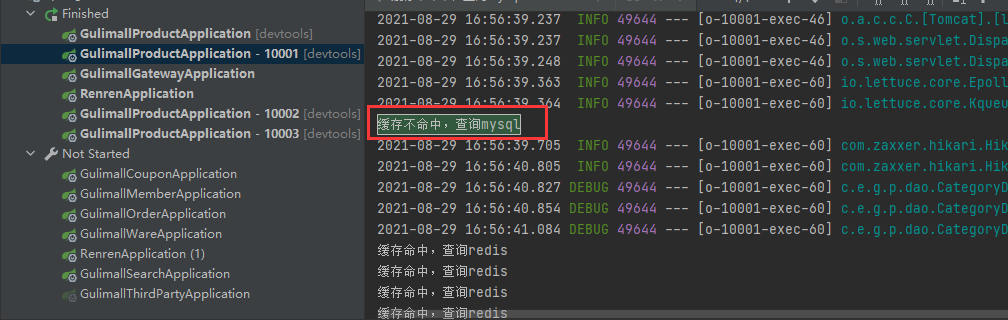

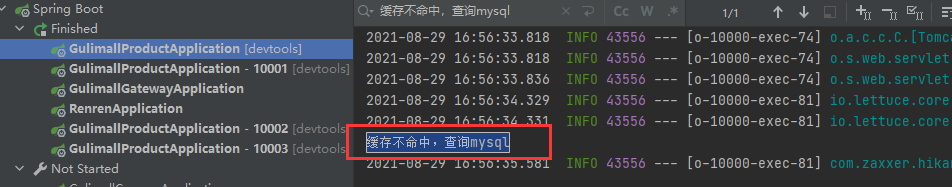

3. Redis – distributed lock

Single (monolithic) project: synchronized on an object, or a static synchronized method, is enough. Every request hits the same process, so the database is not queried repeatedly.

Distributed projects:

synchronized does not help, because each instance runs independently. You can see that both the 10000 and the 10001 instance queried the database.

So it has to be replaced with a Redis distributed lock, with Redis controlling access across instances.

Things to watch out for (both acquiring with setIfAbsent and releasing with del must be atomic):

1. The value must be unique and must have an expiration time. If every process used the same fixed value, a process releasing its lock could delete a lock held by another process, so each process must set its own unique value. The expiration time covers the case where the service goes down after acquiring the lock: without it the key would stay forever, and nobody could get the lock even after a restart. The code to acquire the lock is therefore:

```java

Boolean lock = stringRedisTemplate.opsForValue().setIfAbsent("lock", uuid, 30, TimeUnit.SECONDS);

```

2. Deleting the value: use a Lua script to make the delete atomic. If the expiration set above is too short, the first process's lock can expire before that process reaches its delete step. Another process then acquires the lock, and the first process's plain delete removes that other process's lock. More processes then find they can acquire the lock, and under high concurrency the database is again queried more than once, as above. With the Lua script, the compare and the delete run as one atomic operation: Redis sees that the stored value no longer belongs to the first process (its key has expired) and deletes nothing. The code to release the lock is therefore:

```java

String script = "if redis.call('get',KEYS[1]) == ARGV[1] then return redis.call('del',KEYS[1]) else return 0 end";

Long lockResult = stringRedisTemplate.execute(new DefaultRedisScript<>(script, Long.class),

Arrays.asList("lock"), uuid);

```

The complete code is as follows :

/** * Author: HuYuQiao * Description: redis-- Set up 、 Remove distributed lock -- Guaranteed atomicity */

@Override

public Map<String, List<Catelog2Vo>> getCatelogJsonFromDBWithRedisLock(){

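// 1. Acquire the distributed lock; the key expires automatically after 30 seconds

String uuid = UUID.randomUUID().toString();

Boolean lock = stringRedisTemplate.opsForValue().setIfAbsent("lock", uuid, 30, TimeUnit.SECONDS);

// setIfAbsent returns a Boolean that may be null, so avoid unboxing it directly

if (Boolean.TRUE.equals(lock)){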

System.out.println(" Get distributed lock successfully ..");

Map<String, List<Catelog2Vo>> data = null;

try {

data = getCatelogJsonFromDb();

System.out.println(" Check the database successfully ");

} finally {

String script = "if redis.call('get',KEYS[1]) == ARGV[1] then return redis.call('del',KEYS[1]) else return 0 end";

Long lockResult = stringRedisTemplate.execute(new DefaultRedisScript<>(script, Long.class),

Arrays.asList("lock"), uuid);

System.out.println(lockResult);

}

System.out.println(" Return to success ");

return data;

} else {

System.out.println(" Failed to acquire a distributed lock ..");

try {

TimeUnit.SECONDS.sleep(1);

} catch (InterruptedException e) {

e.printStackTrace();

}

return getCatelogJsonFromDBWithRedisLock();

}

}
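
To see why the value has to be unique and why the delete has to compare first, here is a minimal in-memory sketch. `LockSketch` is hypothetical: a `ConcurrentHashMap` stands in for Redis, `putIfAbsent` plays the role of setIfAbsent, and `remove(key, value)` plays the role of the Lua compare-and-delete.

```java
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

/** In-memory stand-in for the Redis lock key; expiration is triggered by hand. */
final class LockSketch {
    private final ConcurrentMap<String, String> redis = new ConcurrentHashMap<>();

    /** Like setIfAbsent: succeeds only if nobody currently holds the key. */
    boolean tryLock(String key, String owner) {
        return redis.putIfAbsent(key, owner) == null;
    }

    /** Like the Lua script: delete only if the stored value is still ours, atomically. */
    boolean unlock(String key, String owner) {
        return redis.remove(key, owner);
    }

    /** Simulates the key expiring on its own. */
    void expire(String key) {
        redis.remove(key);
    }

    static String newOwner() {
        return UUID.randomUUID().toString();
    }
}
```

If process A's key expires and process B takes the lock, A's late `unlock` finds B's value, returns false, and leaves B's lock in place.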

3.2 Redisson distributed locks

3.2.1 Basic configuration

The previous section implemented a distributed lock directly on Redis; this section uses Redisson to do the same job.

1. Set up the environment: the dependency and a config class

<!-- https://mvnrepository.com/artifact/org.redisson/redisson -->

<dependency>

<groupId>org.redisson</groupId>

<artifactId>redisson</artifactId>

<version>3.16.1</version>

</dependency>

package com.empirefree.gulimall.product.config;

import org.redisson.Redisson;

import org.redisson.api.RedissonClient;

import org.redisson.config.Config;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import java.io.IOException;

/** * @program: renren-fast * @description: * @author: huyuqiao * @create: 2021/09/05 11:28 */

@Configuration

public class MyRedissonConfig {

@Bean(destroyMethod="shutdown")

public RedissonClient redisson() throws IOException {

Config config = new Config();

/* config.useClusterServers() .addNodeAddress("127.0.0.1:7004", "127.0.0.1:7001");*/

config.useSingleServer().setAddress("redis://82.156.202.23:6379");

return Redisson.create(config);

}

}

2. Redisson comes with a watchdog mechanism that does two things (for locks acquired without an explicit lease time):

2.1. Lock renewal: while the Redisson instance holding the lock is alive, the watchdog keeps extending the key's expiration, by default back to 30 seconds.

2.2. Release after the instance goes away: this prevents a deadlock when an instance goes down (the plain Redis version above avoided deadlock through its expiration time). For example, if service A goes down while holding the lock, the watchdog stops renewing, the key expires after 30 seconds (the default), and service B can acquire the lock.

Note: the watchdog renews at an interval of [watchdog timeout, 30 seconds by default] / 3. If you instead pass your own lease time and it is too short, the key can expire before the business logic finishes, and by the time that logic reaches unlock the lock may already belong to another caller.

@Autowired

private RedissonClient redissonClient;

@ResponseBody

@RequestMapping("/hello")

public String hello(){

RLock lock = redissonClient.getLock("my-lock");

lock.lock();

try {

System.out.println(" Locking success ...." + Thread.currentThread().getId());

TimeUnit.SECONDS.sleep(30);

} catch (Exception e) {

e.printStackTrace();

} finally {

System.out.println(" Release the lock ..." + Thread.currentThread().getId());

lock.unlock();

}

return "hello";

}

3.2.2 Three blocking primitives in Redisson

1. RReadWriteLock (read-write lock)

The read lock is a shared lock: everyone can read at once. The write lock is an exclusive lock: while one caller writes, everyone else waits, so reads block while a write is in progress. That is:

A reads, B reads: no blocking

A reads, B writes: B waits for A to finish reading, then writes

A writes, B writes: blocking, B waits for A to finish writing

A writes, B reads: B waits for A to finish writing
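
The four cases above can be checked locally with the JDK's `ReentrantReadWriteLock`, which follows the same shared/exclusive rules within one JVM. This is a sketch with a hypothetical helper name (`canAcquire`); it is not Redisson code.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.locks.Lock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

final class ReadWriteDemo {
    /**
     * Thread A holds the "held" lock ("read" or "write"). Returns whether a second
     * thread B can take the "wanted" lock immediately, i.e. without blocking.
     */
    static boolean canAcquire(String held, String wanted) {
        ReentrantReadWriteLock rw = new ReentrantReadWriteLock();
        Lock a = held.equals("read") ? rw.readLock() : rw.writeLock();
        Lock b = wanted.equals("read") ? rw.readLock() : rw.writeLock();
        a.lock();
        ExecutorService other = Executors.newSingleThreadExecutor();
        try {
            return other.submit(() -> {
                boolean ok = b.tryLock();  // false means B would have to wait
                if (ok) {
                    b.unlock();
                }
                return ok;
            }).get();
        } catch (Exception e) {
            throw new IllegalStateException(e);
        } finally {
            other.shutdown();
            a.unlock();
        }
    }
}
```

Only the read/read combination succeeds at once; the other three return false, which corresponds to B blocking in the list above.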

@Autowired

private RedissonClient redissonClient;

@Autowired

private StringRedisTemplate stringRedisTemplate;

@GetMapping("/write")

@ResponseBody

public String writeValue(){

RReadWriteLock lock = redissonClient.getReadWriteLock("rw-lock");

String s = "";

RLock rLock = lock.writeLock();

try {

rLock.lock();

s = UUID.randomUUID().toString();

TimeUnit.SECONDS.sleep(30);

stringRedisTemplate.opsForValue().set("writeValue", s);

} catch (Exception e) {

e.printStackTrace();

} finally {

rLock.unlock();

}

return s;

}

@GetMapping("/read")

@ResponseBody

public String readValue(){

RReadWriteLock lock = redissonClient.getReadWriteLock("rw-lock");

String s = "";

RLock rLock = lock.readLock();

rLock.lock();

try {

s = stringRedisTemplate.opsForValue().get("writeValue");

} catch (Exception e) {

e.printStackTrace();

} finally {

rLock.unlock();

}

return s;

}

2. RSemaphore (semaphore)

Acquisition is either blocking or non-blocking (non-blocking returns false right away if nothing is available).

Blocking acquire: each acquire decrements the count by 1; at 0 it blocks until a permit is released

Non-blocking acquire: each acquire decrements the count by 1; at 0 it returns false

Release: each release increments the count by 1; there is no upper limit
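
The same counting behavior can be tried locally with `java.util.concurrent.Semaphore`. This is a single-JVM sketch with hypothetical names, not the distributed RSemaphore itself.

```java
import java.util.concurrent.Semaphore;

final class ParkingDemo {
    private final Semaphore spaces;

    ParkingDemo(int initialSpaces) {
        this.spaces = new Semaphore(initialSpaces);
    }

    /** Non-blocking: takes a permit if one is free, otherwise returns false at once. */
    boolean park() {
        return spaces.tryAcquire();
    }

    /** Returns a permit; nothing stops the count from exceeding the initial value. */
    void go() {
        spaces.release();
    }

    int free() {
        return spaces.availablePermits();
    }
}
```

Because release has no upper limit, calling `go()` more often than `park()` quietly grows the number of spaces, which is worth keeping in mind when a semaphore is used for rate limiting.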

@GetMapping("/park")

@ResponseBody

public String park() throws InterruptedException {

RSemaphore park = redissonClient.getSemaphore("park");

// park.acquire(); // Block waiting

boolean b = park.tryAcquire(); // Non blocking , Go straight back to false

return "ok" + b;

}

@GetMapping("/go")

@ResponseBody

public String go(){

RSemaphore park = redissonClient.getSemaphore("park");

park.release();

return "ok";

}

3. RCountDownLatch

The name splits into countDown and latch:

countDown: decrements the count by 1 on each call

Latch: like a gate. await() blocks until the count has been decremented all the way to 0, then lets the waiter through
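
A local sketch of the same behavior with the JDK's `CountDownLatch` (hypothetical class and method names; a timeout is used so the example cannot hang):

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

final class DoorDemo {
    /** Counts down `departures` times on a latch of size `count`; reports whether the door opened. */
    static boolean doorOpens(int count, int departures) {
        CountDownLatch door = new CountDownLatch(count);
        for (int i = 0; i < departures; i++) {
            new Thread(door::countDown).start();
        }
        try {
            // A plain await() blocks until the count reaches 0; the timeout keeps the sketch finite.
            return door.await(500, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }
}
```

With a count of 5, five departures open the door, while four leave the waiter blocked until the timeout.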

@GetMapping("/lockDoor")

@ResponseBody

public String lockDoor() throws InterruptedException {

RCountDownLatch door = redissonClient.getCountDownLatch("door");

door.trySetCount(5);

door.await();

return " be on holiday ..";

}

@GetMapping("/gogogo/{id}")

@ResponseBody

public String gogaogao(@PathVariable("id") Long id) throws InterruptedException {

RCountDownLatch door = redissonClient.getCountDownLatch("door");

door.countDown();

return id + " go ....";

}

3.3 @Cacheable cache

3.3.1 Use

1. Add the dependency

<dependency>

<groupId>org.springframework.boot</groupId>

<artifactId>spring-boot-starter-cache</artifactId>

</dependency>

2. Annotate the startup class with @EnableCaching

3. Annotate the method to cache with @Cacheable({"category"})

3.4 OAuth2 Social login


Flow: the front end specifies the redirect address; after the user authorizes with the third party, the third party redirects the page back with a code, and the back end uses that code to fetch the user's third-party information.

3.5 Distributed session sharing

Problem 1: the session id is stored in a cookie. Across different domain names this runs into cross-domain issues (CORS, solvable on either the front end or the back end), and it can expose the site to CSRF attacks. You can switch to JWT token verification instead.

Problem 2: with a cluster of multiple servers, sessions have to be shared across them.

That is: integrating Spring Session with Redis solves session sharing between a parent domain and its subdomains, but not between entirely different domain names. That leads to SSO (single sign-on).

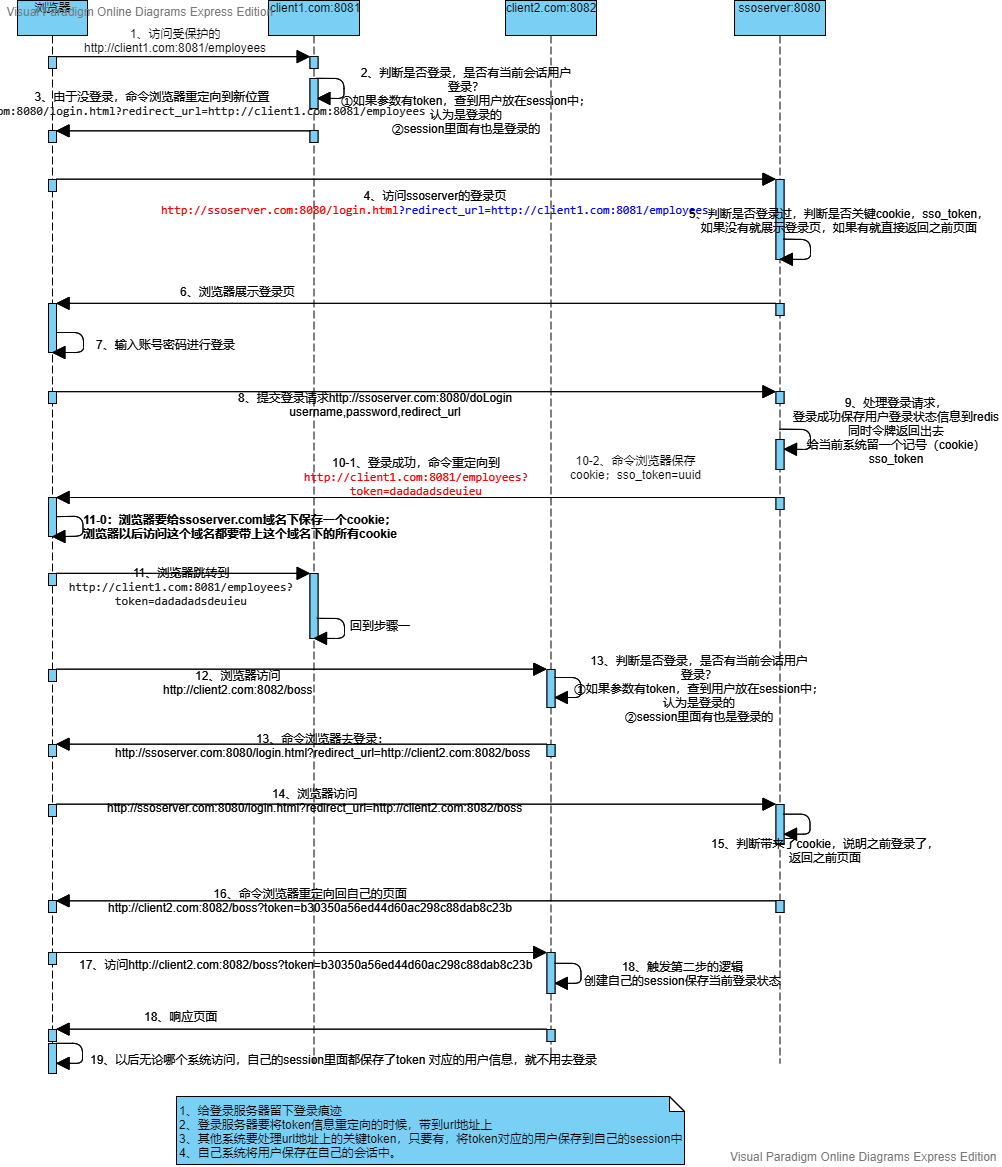

3.6 SSO Single sign on

Reference link :https://www.yuque.com/zhangshuaiyin/guli-mall

The steps above break down as follows:

Steps 1~6: configure different domain names in the local hosts file to represent the clients and the SSO server. When a client goes to the SSO server it carries redirect_url, so the server can read it and send the user back (this is the initial, not-logged-in stage).

Steps 7~11: the user logs in on the page returned by the server. After receiving the credentials, the server has to redirect back to the client (the client passed redirect_url on the way in). If the client still sees itself as not logged in, it redirects to the server again, producing an endless 302 redirect loop. So the server returns a token that the client can check; it also keeps a copy in Redis and a copy in a cookie (the core point: when another domain later goes to the SSO server, it brings this cookie and skips login). Once the client receives the token, it no longer needs to redirect to the server.

Note: the client has two jobs. First, carry redirect_url on the initial visit to the SSO server so that the server can redirect back later. Second, check the parameters the SSO server sends back, to prevent the 302 loop.

The server has two jobs. First, extract the client's redirect address and redirect to it. Second, save the login information. With those in place, single sign-on works.

Steps 12~19: besides returning the token to the client and saving the data in Redis, the server also keeps a cookie, which lets other clients skip login when they are redirected later. After receiving the token, the client can use RestTemplate to call the server and fetch the user information stored in Redis.

Note: the key point here is that the server must create and keep the cookie.

4. RabbitMQ message queue

4.1 Basic knowledge

4.1.1 Features:

1. Asynchronous processing: traditional asynchronous calls may still have to wait for the asynchronous result, which takes time; putting the work straight into a message queue removes that wait

2. Application decoupling: direct interfaces between modules tend to change often; going through a message queue reduces coupling, so modules do not interfere with each other

3. Traffic peak shaving: seckill (flash sale) events and message callbacks produce bursts of requests; putting them in a queue lets the back end work through them at its own pace, which reduces pressure on the servers

4.1.2 Message service

A message service has two key concepts:

1. Message broker: the message middleware server

2. Destination: where messages are sent. There are two forms: queue (point-to-point messaging) and topic (publish/subscribe)

Message services come in two kinds of implementation:

1. JMS: a Java API, using the queue and topic models above

2. AMQP: a wire-level network protocol with 5 message models (direct, fanout, topic, headers, system; the last four refine the publish/subscribe model)

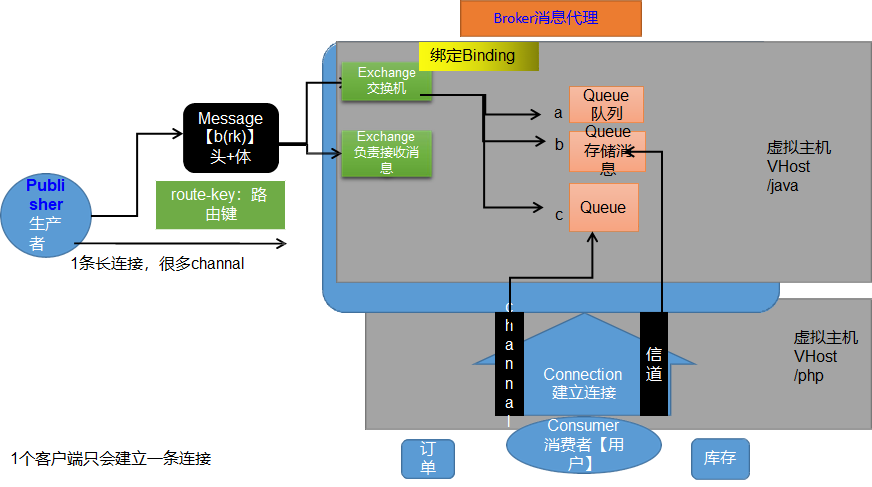

4.1.3 RabbitMQ internal flow

The overall flow is as follows:

1. Producers and consumers first open a long-lived Connection to the message broker. A Connection contains many Channels and is multiplexed. If a consumer goes down, RabbitMQ keeps the message and stops delivering to that consumer. The producer then sends a Message, which consists of a header (including the routing key, which decides which queue the message goes to) and a body (the actual content).

2. A message is sent to a virtual host (several services may each have their own queues on one broker, so they need to be isolated). Inside the virtual host, the Exchange routes the message to a Queue, and the Queue delivers it to the consumer.

4.2 Basic use

# install rabbitmq

docker run -d --name rabbitmq -p 5671:5671 -p 5672:5672 -p 4369:4369 -p 25672:25672 -p 15671:15671 -p 15672:15672 rabbitmq:management

docker update rabbitmq --restart=always

4.2.1 RabbitMQ exchange types

1. Direct mode: one-to-one; the Exchange delivers to the Queue whose binding matches the given routing key exactly

2. Fanout mode: delivers to every Queue bound to the Exchange

3. Topic mode: matches the routing key against a pattern (in XXX.#, # stands for zero or more words; in *.XXX, * stands for exactly one word)
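
The topic wildcard rules can be written down as a small matcher. This is an illustrative sketch with a hypothetical class name, not RabbitMQ's implementation; it assumes words are separated by dots, as in AMQP routing keys.

```java
final class TopicMatcher {
    /** Returns true if the routing key matches the binding pattern. */
    static boolean matches(String pattern, String routingKey) {
        return match(pattern.split("\\."), 0, routingKey.split("\\."), 0);
    }

    private static boolean match(String[] p, int i, String[] k, int j) {
        if (i == p.length) {
            return j == k.length;  // pattern used up: the key must be used up too
        }
        if (p[i].equals("#")) {
            // '#' may absorb zero or more words: try every possible amount.
            for (int next = j; next <= k.length; next++) {
                if (match(p, i + 1, k, next)) {
                    return true;
                }
            }
            return false;
        }
        if (j == k.length) {
            return false;  // pattern still expects a word, but the key has none left
        }
        // '*' matches exactly one word of any content; otherwise the words must be equal.
        return (p[i].equals("*") || p[i].equals(k[j])) && match(p, i + 1, k, j + 1);
    }
}
```

For example, `order.#` matches `order`, `order.created`, and `order.created.eu`, while `*.created` matches `order.created` but neither `created` nor `eu.order.created`.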

4.3 Message confirmation mechanism

4.3.1 The basic flow


1. From the sender to the broker there is a confirmCallback (publisher-side confirmation)

2. From the Exchange to the Queue there is a returnCallback (publisher-side confirmation)

3. From the Queue to the consumer there is the ack mechanism (consumer-side confirmation)

5. Distributed transactions

5.1 Basic overview

5.1.1 CAP principle

C (Consistency): the data in the distributed system is the same everywhere at the same moment

A (Availability): after some nodes fail, the cluster as a whole can still respond to client requests

P (Partition tolerance): communication between the subsystems of a distributed system may fail, and the system has to tolerate that

Note: at most 2 of the 3 can be guaranteed. Partition tolerance is generally kept, so the real choice is between consistency and availability.

CP: with the Raft algorithm, a leader is elected through timeouts and heartbeats. When the distributed system fails and no leader has been elected yet, it cannot respond to the outside, which means the system is unavailable.

AP (the usual default, to keep availability at 99.9...9%): after a failure the system still responds normally. The data may become inconsistent, but it can be repaired by later business processing (for example, A calls B to store an order, A rolls back but B's order is not rolled back; B's order can be deleted afterwards).

5.1.2 BASE theory

CAP's consistency is strong consistency (an update is visible to requests immediately), which is hard to guarantee for some distributed business scenarios, so the BASE theory was introduced.

Principles:

1. Basically available: responses may be a little slower than usual, or the user is sent to a 404 page. (Weak consistency: the request may have to wait or may not be served.)

2. Soft state: an intermediate state, for example adding a "paying" state between "unpaid" and "paid"

3. Eventual consistency: updated data becomes visible after a while; for example, going from "paying" to "paid" can take some time

5.1.3 Distributed transaction scheme

1. 2PC: also called two-phase commit. The transactions first go through a phase that checks whether each of them can commit, then through the commit phase; if any transaction cannot commit, everything is rolled back

2. Flexible transactions – TCC

1. Rigid transactions: strictly follow the ACID principles

2. Flexible transactions: follow the eventual consistency of the BASE theory; for a certain period the data on different nodes may be inconsistent, but in the end it must become consistent
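
The two-phase commit from item 1 above can be sketched as a tiny coordinator. Everything here is hypothetical (the `Participant` interface, `RecordingParticipant`, `TwoPhaseCoordinator`); real implementations also have to handle timeouts and coordinator crashes, which this sketch ignores.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical participant: one service or database taking part in the transaction. */
interface Participant {
    boolean prepare();  // phase 1: "can you commit?"
    void commit();      // phase 2, if everyone voted yes
    void rollback();    // phase 2, if anyone voted no
}

/** Test double that simply records what happened to it. */
final class RecordingParticipant implements Participant {
    private final boolean vote;
    String state = "idle";

    RecordingParticipant(boolean vote) { this.vote = vote; }

    public boolean prepare() { state = vote ? "prepared" : "refused"; return vote; }
    public void commit() { state = "committed"; }
    public void rollback() { state = "rolled back"; }
}

final class TwoPhaseCoordinator {
    /** Returns true if every participant committed, false if the transaction was rolled back. */
    static boolean run(List<Participant> participants) {
        List<Participant> prepared = new ArrayList<>();
        for (Participant p : participants) {
            if (!p.prepare()) {
                // One "no" vote aborts everything that had already prepared.
                prepared.forEach(Participant::rollback);
                return false;
            }
            prepared.add(p);
        }
        participants.forEach(Participant::commit);
        return true;
    }
}
```

The participant that refuses has nothing to undo, so only the ones that already prepared are rolled back.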

5.1.4 SpringBoot- Integrate Seata

1. Start seata-server-0.7.1.bat (if nacos is configured in its registry.conf, the server registers itself with nacos)

2. For each service taking part in the transaction, configure its database and transaction settings. The main (initiating) transaction enables global transaction management (put file.conf and registry.conf into the service, then modify file.conf)

3. If the main transaction throws an error, the transactions it has already called are rolled back together

Note: this style of transaction management requires locking and is time-consuming, so it is not suitable for high concurrency; it suits back-office management systems. Flexible transactions such as TCC instead send a message to a message queue and let the queue's consumer perform the rollback.

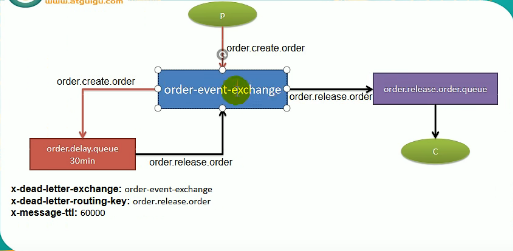

5.1.5 RabbitMq Delay queue

Scheduled scan: if an order is placed just after a scan, the next scan does not yet see it as expired, so it is only handled by the scan after that

TTL: time to live

Delay queue: an order that expires in 60 minutes is routed by the exchange into a queue with a 60-minute delay (also called the dead-letter queue). Each message waits there for 60 minutes and is then forwarded to the processing queue
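
The delay-queue idea can be simulated in memory to make the timing concrete. This is a sketch under assumptions: `DelayQueueSketch` is hypothetical, time is passed in as plain minutes, and no RabbitMQ API is involved.

```java
import java.util.ArrayDeque;
import java.util.PriorityQueue;
import java.util.Queue;

final class DelayQueueSketch {
    private static final class Pending {
        final String orderId;
        final long dueAtMinute;
        Pending(String orderId, long dueAtMinute) { this.orderId = orderId; this.dueAtMinute = dueAtMinute; }
    }

    private final long ttlMinutes;
    // Messages waiting out their TTL, ordered by when they fall due.
    private final PriorityQueue<Pending> delayQueue =
            new PriorityQueue<>((x, y) -> Long.compare(x.dueAtMinute, y.dueAtMinute));
    // Messages whose TTL has run out and that are ready to be consumed.
    private final Queue<String> processingQueue = new ArrayDeque<>();

    DelayQueueSketch(long ttlMinutes) { this.ttlMinutes = ttlMinutes; }

    void publish(String orderId, long nowMinute) {
        delayQueue.add(new Pending(orderId, nowMinute + ttlMinutes));
    }

    /** Moves every message whose TTL has run out into the processing queue. */
    void advanceTo(long nowMinute) {
        while (!delayQueue.isEmpty() && delayQueue.peek().dueAtMinute <= nowMinute) {
            processingQueue.add(delayQueue.poll().orderId);
        }
    }

    /** Next order to check for payment, or null if none is due yet. */
    String poll() { return processingQueue.poll(); }
}
```

Unlike the scheduled scan, each order becomes visible exactly when its own 60 minutes are up, regardless of when it was placed.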

5.1.6 common problem

1. Lost messages (for example: first store each message as "to be sent"; when the queue confirms, mark it as delivered; when the consumer confirms, mark it as confirmed; if a step fails midway and the status is not updated, a scheduled job compensates):

1. Use the publisher -> broker confirmation mechanism and manual acknowledgement for broker -> consumer

2. Record every sent message in the database

2. Duplicate messages

1. Add an anti-duplication table to the database so that message handling is idempotent; for example, use a JWT token

3. Message backlog

1. Bring more consumers online, or save the messages to the database first and process them slowly and asynchronously later
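
The anti-duplication idea from item 2 above, reduced to its core: remember which message ids were already handled and skip repeats. In this sketch a concurrent set stands in for the database table (which would typically enforce uniqueness with a unique index); `IdempotentConsumer` is a hypothetical name.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

final class IdempotentConsumer {
    // Stand-in for the anti-duplication table.
    private final Set<String> processed = ConcurrentHashMap.newKeySet();
    private int handled = 0;

    /** Returns true if the message was handled, false if it was a duplicate and skipped. */
    boolean onMessage(String messageId) {
        if (!processed.add(messageId)) {
            return false;  // already seen: redelivery must not repeat the side effects
        }
        handled++;  // the real business logic would run here
        return true;
    }

    int handledCount() {
        return handled;
    }
}
```

The check and the insert happen in one `add` call, so two concurrent deliveries of the same id cannot both pass.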

5.2 Order service

5.2.1 Symmetric / asymmetric encryption

1. Symmetric encryption: the encrypting and decrypting parties use the same secret key

2. Asymmetric encryption: the encrypting party keeps its own private key and the decrypting party's public key; the decrypting party keeps its own private key and the encrypting party's public key
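
Both schemes can be tried with the JDK's built-in `javax.crypto` classes. This is a round-trip sketch only: keys are generated on the spot and thrown away, and the algorithm choices (AES-GCM, RSA-OAEP) are assumptions rather than what the course or any payment provider uses.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.SecureRandom;
import javax.crypto.Cipher;
import javax.crypto.KeyGenerator;
import javax.crypto.SecretKey;
import javax.crypto.spec.GCMParameterSpec;

final class CryptoSketch {
    /** Symmetric: the same AES key encrypts and decrypts. */
    static String aesRoundTrip(String text) {
        try {
            KeyGenerator kg = KeyGenerator.getInstance("AES");
            kg.init(128);
            SecretKey key = kg.generateKey();
            byte[] iv = new byte[12];
            new SecureRandom().nextBytes(iv);

            Cipher enc = Cipher.getInstance("AES/GCM/NoPadding");
            enc.init(Cipher.ENCRYPT_MODE, key, new GCMParameterSpec(128, iv));
            byte[] cipherText = enc.doFinal(text.getBytes(StandardCharsets.UTF_8));

            Cipher dec = Cipher.getInstance("AES/GCM/NoPadding");
            dec.init(Cipher.DECRYPT_MODE, key, new GCMParameterSpec(128, iv));
            return new String(dec.doFinal(cipherText), StandardCharsets.UTF_8);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Asymmetric: the public key encrypts, only the matching private key decrypts. */
    static String rsaRoundTrip(String text) {
        try {
            KeyPairGenerator kpg = KeyPairGenerator.getInstance("RSA");
            kpg.initialize(2048);
            KeyPair receiver = kpg.generateKeyPair();

            Cipher enc = Cipher.getInstance("RSA/ECB/OAEPWithSHA-256AndMGF1Padding");
            enc.init(Cipher.ENCRYPT_MODE, receiver.getPublic());
            byte[] cipherText = enc.doFinal(text.getBytes(StandardCharsets.UTF_8));

            Cipher dec = Cipher.getInstance("RSA/ECB/OAEPWithSHA-256AndMGF1Padding");
            dec.init(Cipher.DECRYPT_MODE, receiver.getPrivate());
            return new String(dec.doFinal(cipherText), StandardCharsets.UTF_8);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

In the asymmetric case the sender only ever needs the receiver's public key, which is why each party can publish its public key and keep its private key to itself.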

5.2.2 Intranet penetration

This means using a public address to stand in for your own machine (software: Peanut Shell, Zhexi)

5.3 Seckill service

5.3.1 Basic configuration

1. Seckill should run as its own microservice. Its data is split into a session table (sms_seckill_session) and a table relating sessions to products (sms_seckill_sku_relation).

2. In the IDEA run configuration for the service, add a memory limit

5.3.2 Timing task

At a fixed time every day, add the previous day's seckill products to the inventory

Cron expression: second minute hour day-of-month month day-of-week year (day-of-month and day-of-week can conflict, so one of them should use the ? wildcard; the year field is a Quartz option, and Spring's @Scheduled takes only the first six fields)

1,3,5 * * * * ? : run at seconds 1, 3, and 5 of every minute

7-20 * * * * ? : run every second from second 7 to second 20 of every minute
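
To make the list and range syntax concrete, the sketch below expands a single cron field into the values it selects. `CronFieldSketch` is hypothetical and handles only `*`, `?`, comma lists, and ranges; step values such as `0/5` are left out.

```java
import java.util.TreeSet;

final class CronFieldSketch {
    /** Expands one cron field ("*", "1,3,5", "7-20") into the values it selects within [min, max]. */
    static TreeSet<Integer> expand(String field, int min, int max) {
        TreeSet<Integer> out = new TreeSet<>();
        for (String part : field.split(",")) {
            if (part.equals("*") || part.equals("?")) {
                for (int v = min; v <= max; v++) {
                    out.add(v);
                }
            } else if (part.contains("-")) {
                String[] range = part.split("-");
                int from = Integer.parseInt(range[0]);
                int to = Integer.parseInt(range[1]);
                for (int v = from; v <= to; v++) {
                    out.add(v);
                }
            } else {
                out.add(Integer.parseInt(part));
            }
        }
        return out;
    }
}
```

Applied to the seconds field (range 0 to 59), `1,3,5` selects three values and `7-20` selects fourteen, matching the two examples above.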

5.3.3 High concurrency problem

1. Deploy the seckill service separately

2. Encrypt the seckill link

3. Preheat the inventory and deduct stock quickly

4. Separate static and dynamic content with Nginx

5. Intercept malicious requests

6. Stagger the traffic peak: add a verification code, go through the shopping cart

7. Rate limiting & circuit breaking & degradation: limit the number of requests, block, redirect to another page

8. Queue peak shaving: successful seckill requests enter a queue, and stock is deducted gradually

5.3.4 Seckill design

1. Seckill link encryption: every product is encoded with the same encryption scheme, and the server verifies both the seckill time window and the random code

2. Send the create-order request to MQ and process it gradually afterwards

5.4 Sentinel (skimmed; not used in practice)

5.4.1 Basic concepts

Circuit breaking: when calls to a service keep failing, further direct calls to it are cut off

Degradation: when server resources are tight, shut down an infrequently used service

Rate limiting: similar to Semaphore, CyclicBarrier, and CountDownLatch, which let only a specified number of threads through

5.5 Sleuth (skimmed)

6. Summary

The three keys to high concurrency:

- Caching

- Asynchrony

- Queuing

边栏推荐

- How are you in the first half of the year occupied by the epidemic| Mid 2022 summary

- Test technology stack arrangement -- self cultivation of test development engineers

- wx小程序学习笔记day01

- test about BinaryTree

- LeetCode-1279. Traffic light intersection

- 三面蚂蚁金服成功拿到offer,Android开发社招面试经验

- Noninvasive and cuff free blood pressure measurement for telemedicine [translation]

- 渲大师携手向日葵,远控赋能云渲染及GPU算力服务

- AcWing 3537. Tree lookup complete binary tree

- Based on butterfly species recognition

猜你喜欢

![A wearable arm device for night and sleeveless blood pressure measurement [translation]](/img/fd/947a38742ab1c4009ec6aa7405a573.png)

A wearable arm device for night and sleeveless blood pressure measurement [translation]

Pytorch common loss function

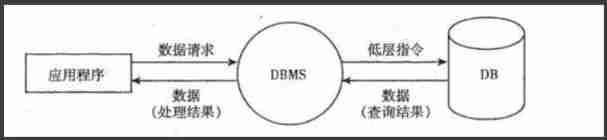

Simple understanding of MySQL database

Handwritten online chat system (principle part 1)

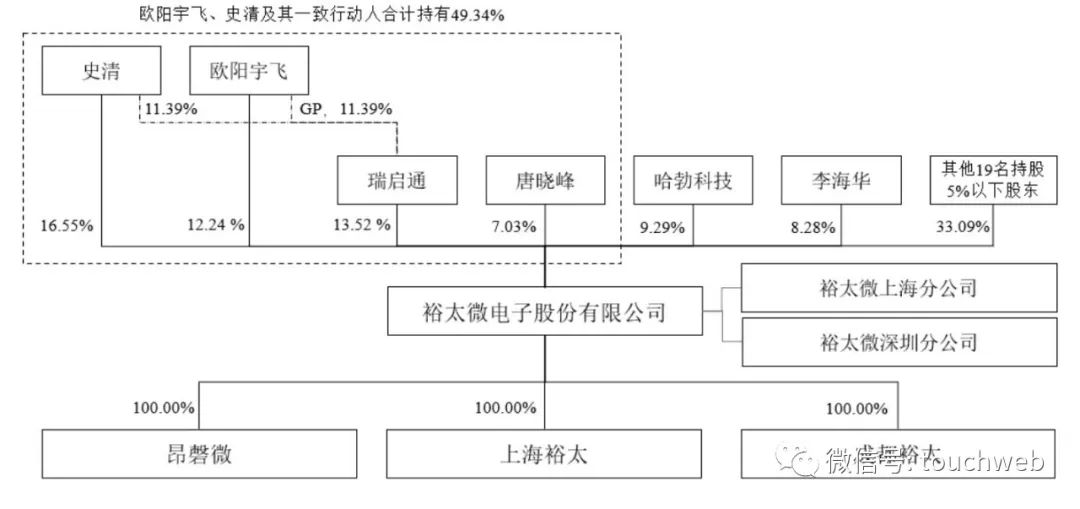

裕太微冲刺科创板:拟募资13亿 华为与小米基金是股东

涂鸦智能在香港双重主板上市:市值112亿港元 年营收3亿美元

Three years of Android development, Android interview experience and real questions sorting of eight major manufacturers during the 2022 epidemic

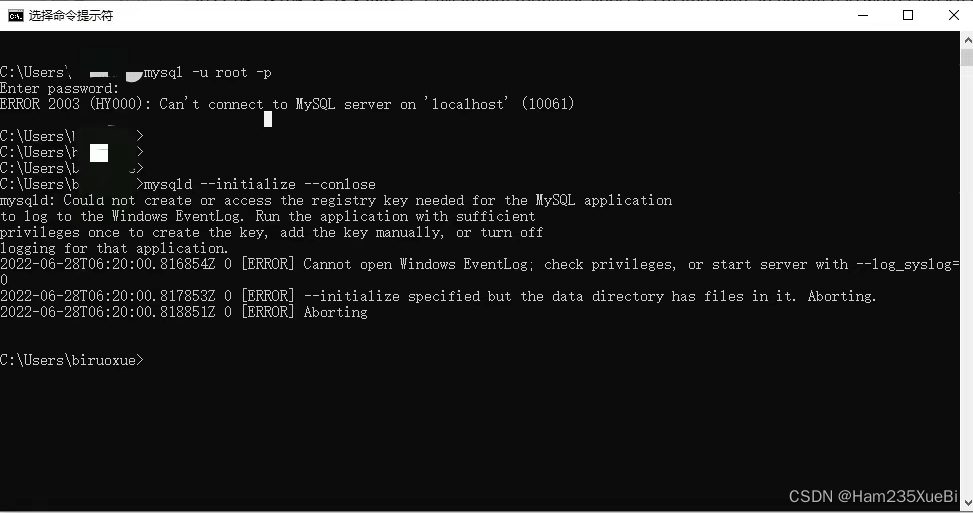

安装Mysql报错:Could not create or access the registry key needed for the...

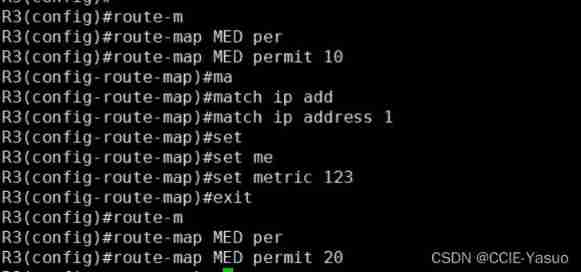

CCNP Part 11 BGP (III) (essence)

三年Android开发,2022疫情期间八家大厂的Android面试经历和真题整理

随机推荐

C language daily practice - day 22: Zero foundation learning dynamic planning

The nearest library of Qinglong panel

黑马--Redis篇

test about BinaryTree

Understanding disentangling in β- VAE paper reading notes

Openmv4 learning notes 1 --- one click download, background knowledge of image processing, lab brightness contrast

安装Mysql报错:Could not create or access the registry key needed for the...

Computer network: sorting out common network interview questions (I)

R语言使用dt函数生成t分布密度函数数据、使用plot函数可视化t分布密度函数数据(t Distribution)

R语言使用order函数对dataframe数据进行排序、基于单个字段(变量)进行降序排序(DESCENDING)

驼峰式与下划线命名规则(Camel case With hungarian notation)

上海部分招工市場對新冠陽性康複者拒絕招錄

openmv4 学习笔记1----一键下载、图像处理背景知识、LAB亮度-对比度

RT-Thread 组件 FinSH 使用时遇到的问题

Interface test tool - postman

[depth first search] Ji suanke: Square

Unlock 2 live broadcast themes in advance! Today, I will teach you how to complete software package integration Issues 29-30

ModuleNotFoundError: No module named ‘PIL‘解决方法

Interview assault 63: how to remove duplication in MySQL?

上海部分招工市场对新冠阳性康复者拒绝招录