
YOLOv5-Lite: ncnn + int8 deployment and quantization, real-time even on a Raspberry Pi

2022-07-08 02:19:00 【pogg_】

The copyright of this article belongs to GiantPandaCV. Please do not reprint without permission.

Preface: You may remember the article I wrote two months ago, a detailed tutorial on yolov4-tiny + ncnn + int8 quantization:

https://zhuanlan.zhihu.com/p/372278785

Afterwards I planned to write a yolov5 + ncnn + int8 quantization tutorial, but quantizing yolov5 ran into trouble: the model was slower after quantization, and its accuracy dropped so badly that the screen filled with detection boxes. After many attempts, every one ended in failure.

In the end I decided to take a different route to quantizing yolov5. First, even if the smallest model, yolov5s, did speed up after quantization, it still would not meet my speed requirements. Second, whichever forward inference framework is used, the Focus layer needs extra splicing operations, which I find too cumbersome.

So I made a series of lightweight changes to yolov5 that simplify its network structure and give a real speedup (for example, at least three times faster on the ARM-based Raspberry Pi series, and about twice as fast on x86 Intel processors):

The model structure is described at: https://zhuanlan.zhihu.com/p/400545131

This post continues the quantization work of the earlier yolov4 article and covers ncnn deployment and quantization for yolov5.

1. Environment preparation

Two main tools are needed:

The ncnn inference framework

Link: https://github.com/Tencent/ncnn

YOLOv5-Lite source code and weights

Link: https://github.com/ppogg/YOLOv5-Lite

The model's performance is as follows:

There are many online tutorials on building and installing ncnn. I recommend working in a Linux environment; Windows also works, but may have more pitfalls.

2. ONNX model export

git clone https://github.com/ppogg/YOLOv5-Lite.git

python models/export.py --weights weights/yolov5-lite.pt --img 640 --batch 1

python -m onnxsim weights/yolov5-lite.onnx weights/yolov5-lite-sim.onnx

This step usually goes smoothly ~

3. Convert to an ncnn model

./onnx2ncnn yolov5-lite-sim.onnx yolov5-lite.param yolov5-lite.bin

./ncnnoptimize yolov5-lite.param yolov5-lite.bin yolov5-lite-opt.param yolov5-lite-opt.bin 65536

This step does not get stuck either, and the models are produced without trouble. At this point there is an fp32 model and an fp16 model (the ncnnoptimize output, written with the 65536 flag), four files in total:

To support dynamically sized input images, the reshape operations in yolov5-lite-opt.param need to be modified:

Change the size in all three reshape layers shown above to -1:

Nothing else needs to be changed.
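As a rough illustration of this edit, the Python sketch below rewrites the first size parameter of every Reshape layer in an ncnn .param file to -1. It assumes the usual ncnn text layout, where each layer line starts with the layer type and the Reshape sizes are stored under the 0=, 1= and 2= keys, and it assumes that the 0= key is the one holding the fixed grid size. The helper name and the sample layer lines are hypothetical, so compare them with your own yolov5-lite-opt.param before relying on it.

```python
import re

# Matches the "0=<number>" key only when it is a separate token,
# so keys such as "10=..." are left alone.
FIRST_SIZE_KEY = re.compile(r"(?<!\S)0=-?\d+")


def make_reshape_dynamic(param_text: str) -> str:
    """Return the .param text with every Reshape layer's 0= size set to -1."""
    lines = []
    for line in param_text.splitlines():
        fields = line.split()
        if fields and fields[0] == "Reshape":
            line = FIRST_SIZE_KEY.sub("0=-1", line)
        lines.append(line)
    result = "\n".join(lines)
    # Keep a trailing newline if the input had one.
    return result + "\n" if param_text.endswith("\n") else result


# Hypothetical layer lines, only to show the transformation.
sample = (
    "Reshape reshape_a 1 1 blob_in_a blob_out_a 0=6400 1=85 2=3\n"
    "Convolution conv_a 1 1 blob_x blob_y 0=16 1=3\n"
)
print(make_reshape_dynamic(sample))
```

To apply it to a real file, read yolov5-lite-opt.param as text, pass it through make_reshape_dynamic, and write the result back, keeping a backup of the original.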

4. Post-processing modifications

Two things need to change in ncnn's official yolov5.cpp.

The anchor information is in models/yolov5-lite.yaml; if you clustered anchors on your own dataset, update it accordingly:

The output layer IDs are found in the Permute layers and also need to be updated accordingly:

After modification:

With only the points above changed (the Focus layer code can also be removed if you wish), run make again and you can test.
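One way to look up the output IDs is to list the output blobs of the Permute layers in the .param file. The sketch below assumes the standard ncnn layer line layout (layer type, layer name, input count, output count, input blob names, output blob names, then parameters). The function name and the sample lines are made up for illustration and do not come from the real model.

```python
def permute_output_blobs(param_text: str) -> list:
    """Collect the output blob names of every Permute layer, in file order."""
    blobs = []
    for line in param_text.splitlines():
        fields = line.split()
        if len(fields) >= 4 and fields[0] == "Permute":
            n_in, n_out = int(fields[2]), int(fields[3])
            start = 4 + n_in  # output names follow the input names
            blobs.extend(fields[start:start + n_out])
    return blobs


# Hypothetical excerpt of a .param file.
sample = """\
Convolution conv_a 1 1 blob_x blob_y 0=16 1=3
Permute permute_a 1 1 blob_r1 blob_out1 0=1
Permute permute_b 1 1 blob_r2 blob_out2 0=1
"""
print(permute_output_blobs(sample))
```

The names it prints are the ones to pass to the extractor calls in yolov5.cpp, one per detection head.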

The detection results of the fp16 model are as follows:

5. Int8 quantization

For a more detailed tutorial, see my Zhihu post on yolov4-tiny (listed in the references below); many details are not repeated here.

A few additional points:

- For the calibration dataset, use the 5,000 images of coco_val;

- The mean and norm values must match those used when the original model was trained, and the values in yolov5ss.cpp must match as well;

- Calibration takes quite a long time, so please be patient.
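Since ncnn normalizes each pixel as (pixel - mean) * norm, the norm value is simply the reciprocal of the divisor used in training. The sketch below formats the mean= and norm= arguments from a training-time mean and divisor. The function name is hypothetical, and the example input assumes a model trained on pixels scaled to the 0..1 range with no mean subtraction, which is how upstream YOLOv5 preprocesses images; treat that as an assumption and confirm it for your own model.

```python
def ncnn2table_args(mean, std):
    """Format mean=/norm= arguments for ncnn2table.

    mean and std are per-channel values in 0..255 pixel units.
    ncnn computes (pixel - mean) * norm, so norm = 1 / std.
    """
    norm = [1.0 / s for s in std]

    def fmt(values):
        return ",".join(f"{v:g}" for v in values)

    return f"mean=[{fmt(mean)}] norm=[{fmt(norm)}]"


# Assumed preprocessing: pixel / 255 with no mean subtraction.
print(ncnn2table_args([0, 0, 0], [255, 255, 255]))
# -> mean=[0,0,0] norm=[0.00392157,0.00392157,0.00392157]
```

Whatever values you end up with, the same numbers should appear in the ncnn2table command and in the substract_mean_normalize call of the C++ demo.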

Run the following commands:

find images/ -type f > imagelist.txt

./ncnn2table yolov5-lite-opt.param yolov5-lite-opt.bin imagelist.txt yolov5-lite.table mean=[104,117,123] norm=[0.017,0.017,0.017] shape=[640,640,3] pixel=BGR thread=8 method=kl

./ncnn2int8 yolov5-lite-opt.param yolov5-lite-opt.bin yolov5-lite-opt-int8.param yolov5-lite-opt-int8.bin yolov5-lite.table
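The find command lists every file under images/, including any stray non-image files. As an optional alternative, this Python sketch writes imagelist.txt with image files only. The function name and the set of accepted extensions are assumptions chosen for illustration.

```python
from pathlib import Path

# Extensions treated as calibration images (an assumption; adjust as needed).
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def write_image_list(image_dir, list_path):
    """Write one image path per line to list_path and return the count."""
    paths = sorted(
        p for p in Path(image_dir).rglob("*")
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
    Path(list_path).write_text("".join(f"{p}\n" for p in paths))
    return len(paths)


# Example call, matching the file names used in the commands above:
# write_image_list("images", "imagelist.txt")
```

The returned count is a quick check that the calibration set has the expected number of images before starting the long ncnn2table run.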

The quantized model is as follows:

The quantized model is about 1.7 MB, which should satisfy any obsession with small model size;

Here the quantized shufflev2-yolov5 model is tested:

There is a slight loss of accuracy after quantization, but it stays within an acceptable range. A model cannot be quantized with no accuracy loss at all. For large targets with distinctive features, shufflev2-yolov5 keeps roughly the same score (in practice it still drops a little), but for distant, small targets the score falls by 10% to 30%. Nothing can be done about that, so please keep your expectations of the model realistic.

With the first three warm-up runs discarded and the Raspberry Pi running above 45 °C, the benchmark after quantization is as follows:

# Fourth run

[email protected]:~/Downloads/ncnn/build/benchmark $ ./benchncnn 8 4 0

loop_count = 8

num_threads = 4

powersave = 0

gpu_device = -1

cooling_down = 1

v5lite-s min = 90.86 max = 93.53 avg = 91.56

v5lite-s-int8 min = 83.15 max = 84.17 avg = 83.65

v5lite-s-416 min = 154.51 max = 155.59 avg = 155.09

yolov4-tiny min = 298.94 max = 302.47 avg = 300.69

nanodet_m min = 86.19 max = 142.79 avg = 99.61

squeezenet min = 59.89 max = 60.75 avg = 60.41

squeezenet_int8 min = 50.26 max = 51.31 avg = 50.75

mobilenet min = 73.52 max = 74.75 avg = 74.05

mobilenet_int8 min = 40.48 max = 40.73 avg = 40.63

mobilenet_v2 min = 72.87 max = 73.95 avg = 73.31

mobilenet_v3 min = 57.90 max = 58.74 avg = 58.34

shufflenet min = 40.67 max = 41.53 avg = 41.15

shufflenet_v2 min = 30.52 max = 31.29 avg = 30.88

mnasnet min = 62.37 max = 62.76 avg = 62.56

proxylessnasnet min = 62.83 max = 64.70 avg = 63.90

efficientnet_b0 min = 94.83 max = 95.86 avg = 95.35

efficientnetv2_b0 min = 103.83 max = 105.30 avg = 104.74

regnety_400m min = 76.88 max = 78.28 avg = 77.46

blazeface min = 13.99 max = 21.03 avg = 15.37

googlenet min = 144.73 max = 145.86 avg = 145.19

googlenet_int8 min = 123.08 max = 124.83 avg = 123.96

resnet18 min = 181.74 max = 183.07 avg = 182.37

resnet18_int8 min = 103.28 max = 105.02 avg = 104.17

alexnet min = 162.79 max = 164.04 avg = 163.29

vgg16 min = 867.76 max = 911.79 avg = 889.88

vgg16_int8 min = 466.74 max = 469.51 avg = 468.15

resnet50 min = 333.28 max = 338.97 avg = 335.71

resnet50_int8 min = 239.71 max = 243.73 avg = 242.54

squeezenet_ssd min = 179.55 max = 181.33 avg = 180.74

squeezenet_ssd_int8 min = 131.71 max = 133.34 avg = 132.54

mobilenet_ssd min = 151.74 max = 152.67 avg = 152.32

mobilenet_ssd_int8 min = 85.51 max = 86.19 avg = 85.77

mobilenet_yolo min = 327.67 max = 332.85 avg = 330.36

mobilenetv2_yolov3 min = 221.17 max = 224.84 avg = 222.60

Quantization gives a speedup of about 5-10%. I do not have any rv- or rk-series boards, so tests on other boards will have to come from friends in the community ~

As for why the earlier yolov5s became slower after quantization, with a severe accuracy drop as well, the only explanation I have is the Focus layer: it breaks easily if anything is slightly misaligned and takes a lot of effort to handle, so I simply removed it.
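The 5-10% figure can be checked against the benchncnn output. The sketch below parses the avg column and computes the latency reduction of the int8 model relative to the fp model; the two input lines are copied from the log above, while the function names are hypothetical.

```python
import re

# One benchncnn result line: "<name> min = a max = b avg = c"
RESULT_LINE = re.compile(
    r"^\s*(\S+)\s+min\s*=\s*([\d.]+)\s+max\s*=\s*([\d.]+)\s+avg\s*=\s*([\d.]+)"
)


def parse_benchncnn(log: str) -> dict:
    """Map each model name to its average latency in milliseconds."""
    averages = {}
    for line in log.splitlines():
        match = RESULT_LINE.match(line)
        if match:
            averages[match.group(1)] = float(match.group(4))
    return averages


def latency_reduction_percent(fp_ms: float, int8_ms: float) -> float:
    """Percentage by which the int8 latency is lower than the fp latency."""
    return (fp_ms - int8_ms) / fp_ms * 100.0


log = """
v5lite-s min = 90.86 max = 93.53 avg = 91.56
v5lite-s-int8 min = 83.15 max = 84.17 avg = 83.65
"""
avgs = parse_benchncnn(log)
reduction = latency_reduction_percent(avgs["v5lite-s"], avgs["v5lite-s-int8"])
print(f"{reduction:.1f}% lower latency")  # -> 8.6% lower latency
```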

Summary:

This article is a deployment and quantization tutorial for yolov5-lite (the s model);

It analyzes why the earlier yolov5s quantization broke down so easily;

The ncnn fp16 model keeps the same accuracy as the native torch model;

[Figure above: left, the original torch model; right, the fp16 model]

The accuracy of the ncnn int8 model drops slightly, and the speedup on the Raspberry Pi is only 5-10%; other boards have not been tested yet;

[Figure above: left, the original torch model; right, the int8 model]

For scenes in small spaces, such as face detection in an elevator, the resolution is typically 240*180. There the s model takes 55-60 ms per forward pass on the Raspberry Pi, so even on a Raspberry Pi with 0.1 T of compute it is basically real-time!
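As a quick sanity check on the real-time claim, per-frame latency converts to frame rate as 1000 / latency in milliseconds. The snippet below is only that arithmetic, applied to the 55-60 ms range quoted above; it ignores capture and drawing overhead.

```python
def fps(latency_ms: float) -> float:
    """Frames per second for a given per-frame latency in milliseconds."""
    return 1000.0 / latency_ms


for ms in (55, 60):
    print(f"{ms} ms -> {fps(ms):.1f} FPS")
# -> 55 ms -> 18.2 FPS
# -> 60 ms -> 16.7 FPS
```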

Project address: https://github.com/ppogg/YOLOv5-Lite

Feel free to use it for free ~

Update, 2021-08-20: ----------------------------------------------------------

I have finished adapting the Android version.

This is my Redmi phone, with a Qualcomm Snapdragon 730G processor. The test results are as follows:

Detection results of the quantized int8 model:

Outdoor scene detection:

References:

[1] nihui: A detailed record of implementing Ultralytics YOLOv5 object detection in ncnn

[2] pogg: NCNN+Int8+YOLOv4 quantized model and real-time inference

[3] pogg: ncnn+opencv+yolov5 camera detection

[4] https://github.com/ultralytics/yolov5

边栏推荐

- 分布式定时任务之XXL-JOB

- LeetCode精选200道--链表篇

- 需要思考的地方

- Le chemin du poisson et des crevettes

- JVM memory and garbage collection-3-object instantiation and memory layout

- In depth understanding of the se module of the attention mechanism in CV

- Clickhouse principle analysis and application practice "reading notes (8)

- 很多小夥伴不太了解ORM框架的底層原理,這不,冰河帶你10分鐘手擼一個極簡版ORM框架(趕快收藏吧)

- Strive to ensure that domestic events should be held as much as possible, and the State General Administration of sports has made it clear that offline sports events should be resumed safely and order

- Redismission source code analysis

猜你喜欢

VR/AR 的产业发展与技术实现

MQTT X Newsletter 2022-06 | v1.8.0 发布,新增 MQTT CLI 和 MQTT WebSocket 工具

![[knowledge map paper] attnpath: integrate the graph attention mechanism into knowledge graph reasoning based on deep reinforcement](/img/36/8aef4c9fcd9dd8a8227ac8ca57eb26.jpg)

[knowledge map paper] attnpath: integrate the graph attention mechanism into knowledge graph reasoning based on deep reinforcement

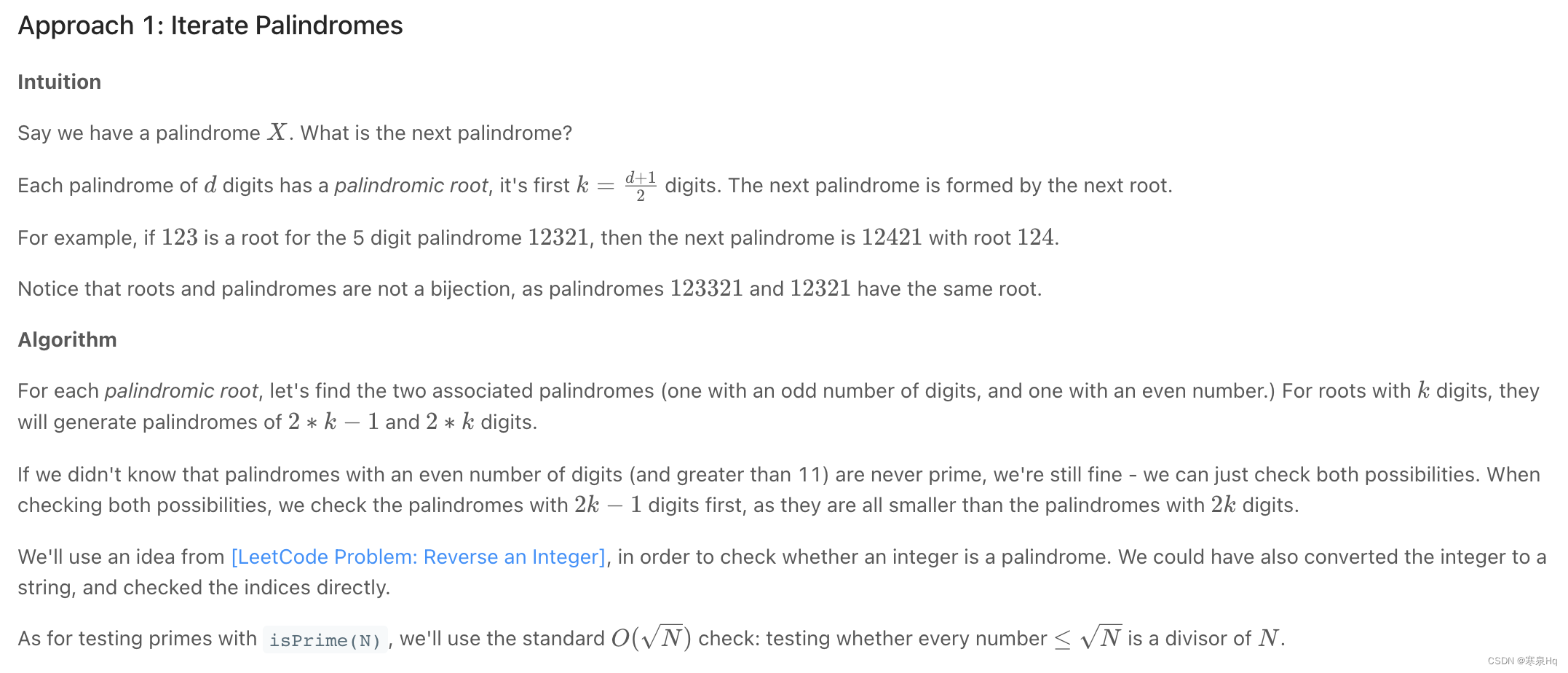

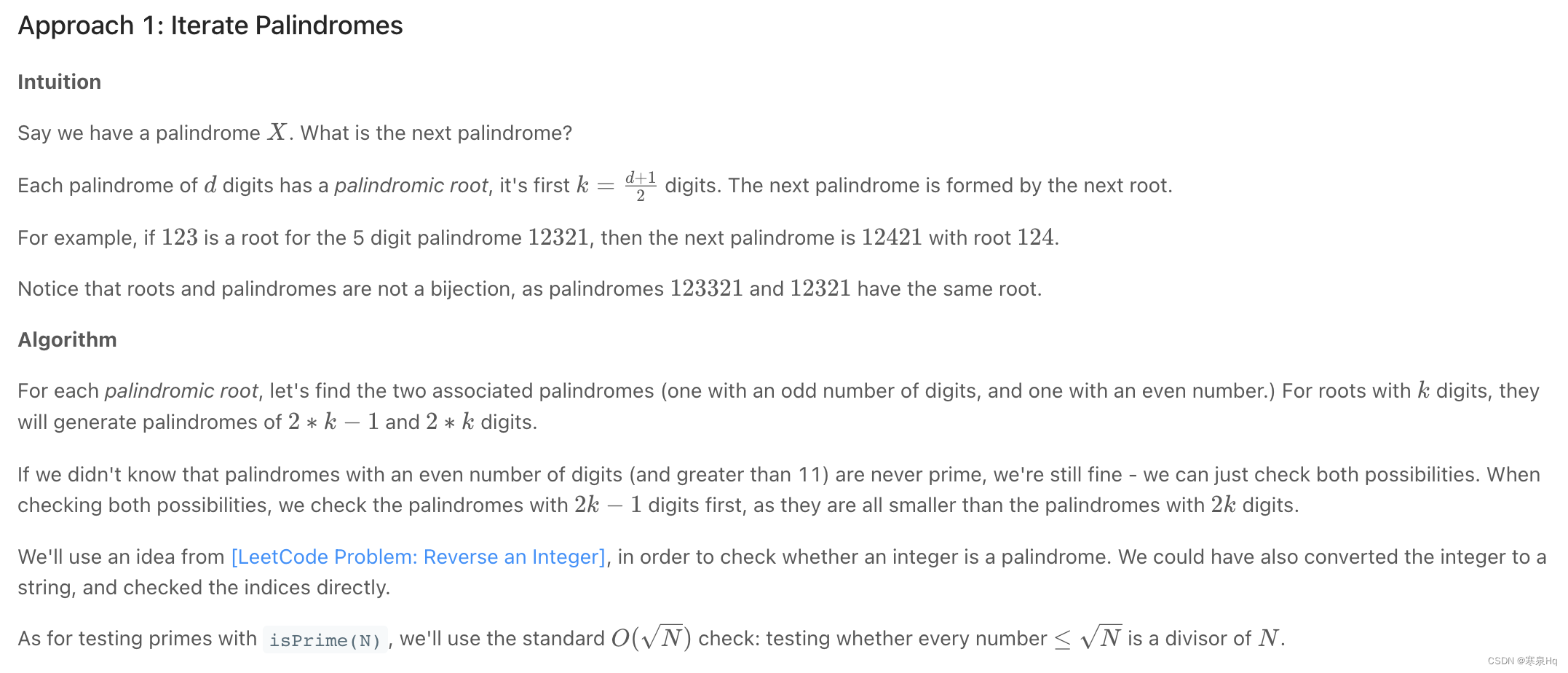

leetcode 866. Prime Palindrome | 866. 回文素数

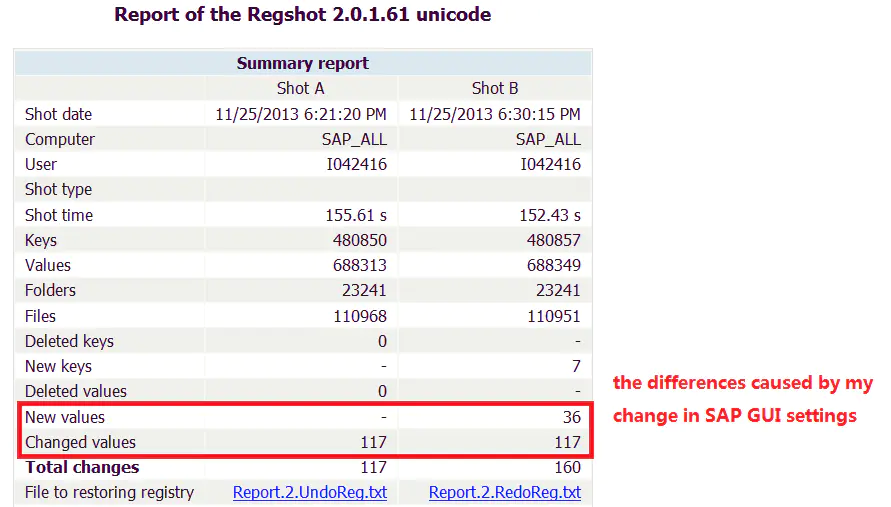

谈谈 SAP 系统的权限管控和事务记录功能的实现

分布式定时任务之XXL-JOB

leetcode 866. Prime Palindrome | 866. prime palindromes

OpenGL/WebGL着色器开发入门指南

Neural network and deep learning-5-perceptron-pytorch

Disk rust -- add a log to the program

随机推荐

The bank needs to build the middle office capability of the intelligent customer service module to drive the upgrade of the whole scene intelligent customer service

What are the types of system tests? Let me introduce them to you

Deeppath: a reinforcement learning method of knowledge graph reasoning

谈谈 SAP 系统的权限管控和事务记录功能的实现

See how names are added to namespace STD from cmath file

如何用Diffusion models做interpolation插值任务?——原理解析和代码实战

银行需要搭建智能客服模块的中台能力,驱动全场景智能客服务升级

企业培训解决方案——企业培训考试小程序

leetcode 866. Prime Palindrome | 866. prime palindromes

VIM use

Deep understanding of cross entropy loss function

Emqx 5.0 release: open source Internet of things message server with single cluster supporting 100million mqtt connections

[knowledge map paper] Devine: a generative anti imitation learning framework for knowledge map reasoning

阿锅鱼的大度

In depth analysis of ArrayList source code, from the most basic capacity expansion principle, to the magic iterator and fast fail mechanism, you have everything you want!!!

Why did MySQL query not go to the index? This article will give you a comprehensive analysis

Yolo fast+dnn+flask realizes streaming and streaming on mobile terminals and displays them on the web

Keras' deep learning practice -- gender classification based on inception V3

Industrial Development and technological realization of vr/ar

JVM memory and garbage collection-3-object instantiation and memory layout