Abstract : This article first briefly introduces Grouping Sets Usage of , And then to Spark SQL As an entry point , In depth analysis of Grouping Sets Implementation mechanism .

This article is shared from Huawei cloud community 《 In depth understanding of SQL Medium Grouping Sets sentence 》, author : Yuan Runzi .

Preface

SQL in Group By Everyone is familiar with the sentence , Group data according to specified rules , Often and Aggregate functions Use it together .

such as , Consider having a table dealer, The data in the table is as follows :

If you execute SQL sentence SELECT id, sum(quantity) FROM dealer GROUP BY id ORDER BY id, We will get the following results :

+---+-------------+

| id|sum(quantity)|

+---+-------------+

|100| 32|

|200| 33|

|300| 13|

+---+-------------+

Above SQL Statement means to press id Columns to group , Then pair quantity To sum .

Group By In addition to the simple usage above , There are more advanced uses , Common is Grouping Sets、RollUp and Cube, They are OLAP More commonly used when . among ,RollUp and Cube It's all about Grouping Sets Based on , therefore , I understand Grouping Sets, I understand RollUp and Cube .

This article first briefly introduces Grouping Sets Usage of , And then to Spark SQL As an entry point , In depth analysis of Grouping Sets Implementation mechanism .

Spark SQL yes Apache Spark A sub module of big data processing framework , Used to process structured information . It can be SQL Sentence translation multiple tasks in Spark Execute on cluster , Allow users to pass directly through SQL To process data , Greatly improved usability .

Grouping Sets brief introduction

Spark SQL In official documents SQL Syntax One section right Grouping Sets The statement is described below :

Groups the rows for each grouping set specified after GROUPING SETS. (... Some examples ) This clause is a shorthand for a

UNION ALLwhere each leg of theUNION ALLoperator performs aggregation of each grouping set specified in theGROUPING SETSclause. (... Some examples )

That is to say ,Grouping Sets The function of the statement is to specify several grouping set As Group By Grouping rules , Then combine the results . Its effect and , First of all, separate these grouping set Conduct Group By After grouping , Re pass Union All Combine the results , It's the same .

such as , about dealer surface ,Group By Grouping Sets ((city, car_model), (city), (car_model), ()) and Union All((Group By city, car_model), (Group By city), (Group By car_model), Global aggregation ) The effect is the same :

First look at Grouping Sets Implementation results of version :

spark-sql> SELECT city, car_model, sum(quantity) AS sum FROM dealer

> GROUP BY GROUPING SETS ((city, car_model), (city), (car_model), ())

> ORDER BY city, car_model;

+--------+------------+---+

| city| car_model|sum|

+--------+------------+---+

| null| null| 78|

| null|Honda Accord| 33|

| null| Honda CRV| 10|

| null| Honda Civic| 35|

| Dublin| null| 33|

| Dublin|Honda Accord| 10|

| Dublin| Honda CRV| 3|

| Dublin| Honda Civic| 20|

| Fremont| null| 32|

| Fremont|Honda Accord| 15|

| Fremont| Honda CRV| 7|

| Fremont| Honda Civic| 10|

|San Jose| null| 13|

|San Jose|Honda Accord| 8|

|San Jose| Honda Civic| 5|

+--------+------------+---+

Look again Union All Implementation results of version :

spark-sql> (SELECT city, car_model, sum(quantity) AS sum FROM dealer GROUP BY city, car_model) UNION ALL

> (SELECT city, NULL as car_model, sum(quantity) AS sum FROM dealer GROUP BY city) UNION ALL

> (SELECT NULL as city, car_model, sum(quantity) AS sum FROM dealer GROUP BY car_model) UNION ALL

> (SELECT NULL as city, NULL as car_model, sum(quantity) AS sum FROM dealer)

> ORDER BY city, car_model;

+--------+------------+---+

| city| car_model|sum|

+--------+------------+---+

| null| null| 78|

| null|Honda Accord| 33|

| null| Honda CRV| 10|

| null| Honda Civic| 35|

| Dublin| null| 33|

| Dublin|Honda Accord| 10|

| Dublin| Honda CRV| 3|

| Dublin| Honda Civic| 20|

| Fremont| null| 32|

| Fremont|Honda Accord| 15|

| Fremont| Honda CRV| 7|

| Fremont| Honda Civic| 10|

|San Jose| null| 13|

|San Jose|Honda Accord| 8|

|San Jose| Honda Civic| 5|

+--------+------------+---+

The query results of the two versions are exactly the same .

Grouping Sets Implementation plan of

In terms of execution results ,Grouping Sets Version and Union All Version of SQL It is equivalent. , but Grouping Sets The version is more concise .

that ,Grouping Sets It's just Union All An abbreviation of , Or grammar sugar ?

In order to further explore Grouping Sets Whether the underlying implementation of is consistent with Union All It's consistent , We can take a look at the implementation plan of the two .

First , We go through explain extended Check it out. Union All Version of Optimized Logical Plan:

spark-sql> explain extended (SELECT city, car_model, sum(quantity) AS sum FROM dealer GROUP BY city, car_model) UNION ALL(SELECT city, NULL as car_model, sum(quantity) AS sum FROM dealer GROUP BY city) UNION ALL (SELECT NULL as city, car_model, sum(quantity) AS sum FROM dealer GROUP BY car_model) UNION ALL (SELECT NULL as city, NULL as car_model, sum(quantity) AS sum FROM dealer) ORDER BY city, car_model;

== Parsed Logical Plan ==

...

== Analyzed Logical Plan ==

...

== Optimized Logical Plan ==

Sort [city#93 ASC NULLS FIRST, car_model#94 ASC NULLS FIRST], true

+- Union false, false

:- Aggregate [city#93, car_model#94], [city#93, car_model#94, sum(quantity#95) AS sum#79L]

: +- Project [city#93, car_model#94, quantity#95]

: +- HiveTableRelation [`default`.`dealer`, ..., Data Cols: [id#92, city#93, car_model#94, quantity#95], Partition Cols: []]

:- Aggregate [city#97], [city#97, null AS car_model#112, sum(quantity#99) AS sum#81L]

: +- Project [city#97, quantity#99]

: +- HiveTableRelation [`default`.`dealer`, ..., Data Cols: [id#96, city#97, car_model#98, quantity#99], Partition Cols: []]

:- Aggregate [car_model#102], [null AS city#113, car_model#102, sum(quantity#103) AS sum#83L]

: +- Project [car_model#102, quantity#103]

: +- HiveTableRelation [`default`.`dealer`, ..., Data Cols: [id#100, city#101, car_model#102, quantity#103], Partition Cols: []]

+- Aggregate [null AS city#114, null AS car_model#115, sum(quantity#107) AS sum#86L]

+- Project [quantity#107]

+- HiveTableRelation [`default`.`dealer`, ..., Data Cols: [id#104, city#105, car_model#106, quantity#107], Partition Cols: []]

== Physical Plan ==

...

From the above Optimized Logical Plan It can be seen clearly Union All Implementation logic of version :

Execute each subquery statement , Calculate the query results . among , The logic of each query statement is like this :

stay HiveTableRelation Node pair

dealerTable scanning .stay Project The node selects the columns related to the query statement results , For example, for sub query statements

SELECT NULL as city, NULL as car_model, sum(quantity) AS sum FROM dealer, Just keepquantityJust column .stay Aggregate Node to complete

quantityColumn pair aggregation . In the above Plan in ,Aggregate Immediately following is the column used for grouping , such asAggregate [city#902]It means according tocityColumn to group .

stay Union The node completes the union of each sub query result .

Last , stay Sort The node finishes sorting the data , Above Plan in

Sort [city#93 ASC NULLS FIRST, car_model#94 ASC NULLS FIRST]It means according tocityandcar_modelSort columns in ascending order .

Next , We go through explain extended Check it out. Grouping Sets Version of Optimized Logical Plan:

spark-sql> explain extended SELECT city, car_model, sum(quantity) AS sum FROM dealer GROUP BY GROUPING SETS ((city, car_model), (city), (car_model), ()) ORDER BY city, car_model;

== Parsed Logical Plan ==

...

== Analyzed Logical Plan ==

...

== Optimized Logical Plan ==

Sort [city#138 ASC NULLS FIRST, car_model#139 ASC NULLS FIRST], true

+- Aggregate [city#138, car_model#139, spark_grouping_id#137L], [city#138, car_model#139, sum(quantity#133) AS sum#124L]

+- Expand [[quantity#133, city#131, car_model#132, 0], [quantity#133, city#131, null, 1], [quantity#133, null, car_model#132, 2], [quantity#133, null, null, 3]], [quantity#133, city#138, car_model#139, spark_grouping_id#137L]

+- Project [quantity#133, city#131, car_model#132]

+- HiveTableRelation [`default`.`dealer`, org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe, Data Cols: [id#130, city#131, car_model#132, quantity#133], Partition Cols: []]

== Physical Plan ==

...

from Optimized Logical Plan Look at ,Grouping Sets The version should be much simpler ! The specific execution logic is as follows :

stay HiveTableRelation Node pair

dealerTable scanning .stay Project The node selects the columns related to the query statement results .

Next Expand Nodes are the key , After the data passes through this node , There's more

spark_grouping_idColumn . from Plan You can see that ,Expand The node containsGrouping SetsEvery one of them grouping set Information , such as[quantity#133, city#131, null, 1]The corresponding is(city)this grouping set. and , Every grouping set Correspondingspark_grouping_idThe values of the columns are fixed , such as(city)Correspondingspark_grouping_idby1.stay Aggregate Node to complete

quantityColumn pair aggregation , The grouping rule iscity, car_model, spark_grouping_id. Be careful , Data after Aggregate After node ,spark_grouping_idThe column was deleted !Last , stay Sort The node finishes sorting the data .

from Optimized Logical Plan Look at , although Union All Version and Grouping Sets The effect of the version is consistent , But there are huge differences in their underlying implementations .

among ,Grouping Sets Version of Plan The most important thing is Expand node , at present , We only know that after the data passes through it , There's more spark_grouping_id Column . And from the end result ,spark_grouping_id It's just Spark SQL Internal implementation details of , Not for users . that :

Expand What is the implementation logic of , Why can we achieve

Union AllThe effect of ?Expand What is the output data of the node ?

spark_grouping_idWhat is the function of columns ?

adopt Physical Plan, We found that Expand The operator name corresponding to the node is also Expand:

== Physical Plan ==

AdaptiveSparkPlan isFinalPlan=false

+- Sort [city#138 ASC NULLS FIRST, car_model#139 ASC NULLS FIRST], true, 0

+- Exchange rangepartitioning(city#138 ASC NULLS FIRST, car_model#139 ASC NULLS FIRST, 200), ENSURE_REQUIREMENTS, [plan_id=422]

+- HashAggregate(keys=[city#138, car_model#139, spark_grouping_id#137L], functions=[sum(quantity#133)], output=[city#138, car_model#139, sum#124L])

+- Exchange hashpartitioning(city#138, car_model#139, spark_grouping_id#137L, 200), ENSURE_REQUIREMENTS, [plan_id=419]

+- HashAggregate(keys=[city#138, car_model#139, spark_grouping_id#137L], functions=[partial_sum(quantity#133)], output=[city#138, car_model#139, spark_grouping_id#137L, sum#141L])

+- Expand [[quantity#133, city#131, car_model#132, 0], [quantity#133, city#131, null, 1], [quantity#133, null, car_model#132, 2], [quantity#133, null, null, 3]], [quantity#133, city#138, car_model#139, spark_grouping_id#137L]

+- Scan hive default.dealer [quantity#133, city#131, car_model#132], HiveTableRelation [`default`.`dealer`, ..., Data Cols: [id#130, city#131, car_model#132, quantity#133], Partition Cols: []]

With the previous questions , Next, let's go deep into Spark SQL Of Expand Operator source code to find the answer .

Expand The realization of operators

Expand The operator is in Spark SQL The implementation in the source code is ExpandExec class (Spark SQL The names of operator implementation classes in are XxxExec The format of , among Xxx Is the specific operator name , such as Project The implementation class of the operator is ProjectExec), The core code is as follows :

/**

* Apply all of the GroupExpressions to every input row, hence we will get

* multiple output rows for an input row.

* @param projections The group of expressions, all of the group expressions should

* output the same schema specified bye the parameter `output`

* @param output The output Schema

* @param child Child operator

*/

case class ExpandExec(

projections: Seq[Seq[Expression]],

output: Seq[Attribute],

child: SparkPlan)

extends UnaryExecNode with CodegenSupport {

...

// Key points 1, take child.output, That is, the output data of the upstream operator schema,

// Bound to an expression array exprs, To calculate the output data

private[this] val projection =

(exprs: Seq[Expression]) => UnsafeProjection.create(exprs, child.output)

// doExecute() Method is Expand Where the operator executes logic

protected override def doExecute(): RDD[InternalRow] = {

val numOutputRows = longMetric("numOutputRows")

// Processing the output data of upstream operators ,Expand The input data of the operator starts from iter Iterator gets

child.execute().mapPartitions { iter =>

// Key points 2,projections Corresponding Grouping Sets Each of them grouping set The expression of ,

// Expression output data schema by this.output, such as (quantity, city, car_model, spark_grouping_id)

// The logic here is to generate a UnsafeProjection object , Through the apply The method will lead to Expand The output data of the operator

val groups = projections.map(projection).toArray

new Iterator[InternalRow] {

private[this] var result: InternalRow = _

private[this] var idx = -1 // -1 means the initial state

private[this] var input: InternalRow = _

override final def hasNext: Boolean = (-1 < idx && idx < groups.length) || iter.hasNext

override final def next(): InternalRow = {

// Key points 3, For each record of input data , Are reused N Time , among N The size of corresponds to projections Size of array ,

// That is to say Grouping Sets In the specified grouping set The number of

if (idx <= 0) {

// in the initial (-1) or beginning(0) of a new input row, fetch the next input tuple

input = iter.next()

idx = 0

}

// Key points 4, For each record of input data , adopt UnsafeProjection Calculate the output data ,

// Every grouping set Corresponding UnsafeProjection Will be the same input Do a calculation

result = groups(idx)(input)

idx += 1

if (idx == groups.length && iter.hasNext) {

idx = 0

}

numOutputRows += 1

result

}

}

}

}

...

}

ExpandExec The implementation of is not complicated , Want to understand how it works , The key is to understand what is mentioned in the above source code 4 A key point .

Key points 1 and Key points 2 It's the foundation , Key points 2 Medium groups It's a UnsafeProjection[N] An array type , Each of them UnsafeProjection On behalf of Grouping Sets Specified in the statement grouping set, It's defined like this :

// A projection that returns UnsafeRow.

abstract class UnsafeProjection extends Projection {

override def apply(row: InternalRow): UnsafeRow

}

// The factory object for `UnsafeProjection`.

object UnsafeProjection

extends CodeGeneratorWithInterpretedFallback[Seq[Expression], UnsafeProjection] {

// Returns an UnsafeProjection for given sequence of Expressions, which will be bound to

// `inputSchema`.

def create(exprs: Seq[Expression], inputSchema: Seq[Attribute]): UnsafeProjection = {

create(bindReferences(exprs, inputSchema))

}

...

}

UnsafeProjection It is similar to the function of column projection , among , apply Method is based on the parameters passed during creation exprs and inputSchema, Column projection of input records , Get the output record .

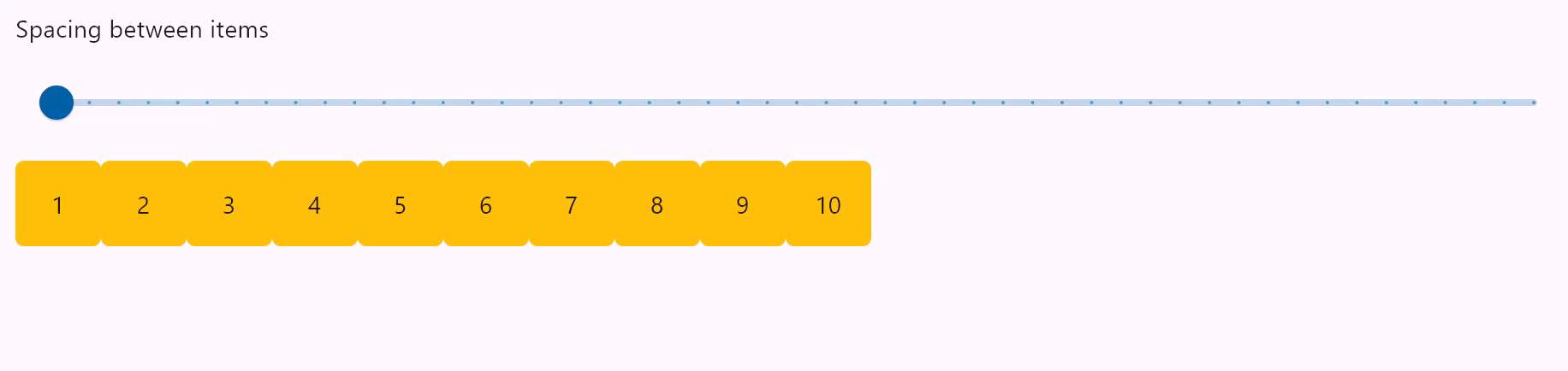

such as , Ahead GROUPING SETS ((city, car_model), (city), (car_model), ()) Example , Its corresponding groups That's true :

among ,AttributeReference Expression of type , At the time of calculation , It will directly reference the value of the corresponding column of the input data ;Iteral Expression of type , At the time of calculation , The value is fixed .

Key points 3 and Key points 4 yes Expand The essence of operators ,ExpandExec Through these two paragraphs of logic , Record every input , Expand (Expand) become N Output records .

Key points 4ingroups(idx)(input)Equate togroups(idx).apply(input).

Or the front GROUPING SETS ((city, car_model), (city), (car_model), ()) As an example , The effect is this :

Come here , We have made it clear Expand How the operator works , Look back at the previous mentioned 3 A question , It's not difficult to answer :

Expand What is the implementation logic of , Why can we achieve

Union AllThe effect of ?if

Union AllIt is to aggregate first and then unite , that Expand Is to unite first and then aggregate .Expand utilizegroupsInside N An expression evaluates each input record , Expanded into N Output records . When we aggregate later , Can achieve andUnion AllThe same effect .Expand What is the output data of the node ?

stay schema On ,Expand The output data will be more than the input data

spark_grouping_idColumn ; On the record number , Is the number of input data records N times .spark_grouping_idWhat is the function of columns ?spark_grouping_idFor each grouping set Number , such , Even in Expand The stage combines the data first , stay Aggregate Stage ( holdspark_grouping_idAdd to the grouping rule ) It can also ensure that the data can be in accordance with each grouping set Aggregate separately , Ensure the correctness of the results .

Query performance comparison

From the above, we can see that ,Grouping Sets and Union All Two versions of SQL Statements have the same effect , But there are huge differences in their implementation plans . below , We will compare the performance differences between the two versions .

spark-sql After execution SQL The time-consuming information will be printed after the statement , We have two versions of SQL Separately 10 Time , Get the following information :

// Grouping Sets Version execution 10 Time consuming information

// SELECT city, car_model, sum(quantity) AS sum FROM dealer GROUP BY GROUPING SETS ((city, car_model), (city), (car_model), ()) ORDER BY city, car_model;

Time taken: 0.289 seconds, Fetched 15 row(s)

Time taken: 0.251 seconds, Fetched 15 row(s)

Time taken: 0.259 seconds, Fetched 15 row(s)

Time taken: 0.258 seconds, Fetched 15 row(s)

Time taken: 0.296 seconds, Fetched 15 row(s)

Time taken: 0.247 seconds, Fetched 15 row(s)

Time taken: 0.298 seconds, Fetched 15 row(s)

Time taken: 0.286 seconds, Fetched 15 row(s)

Time taken: 0.292 seconds, Fetched 15 row(s)

Time taken: 0.282 seconds, Fetched 15 row(s)

// Union All Version execution 10 Time consuming information

// (SELECT city, car_model, sum(quantity) AS sum FROM dealer GROUP BY city, car_model) UNION ALL (SELECT city, NULL as car_model, sum(quantity) AS sum FROM dealer GROUP BY city) UNION ALL (SELECT NULL as city, car_model, sum(quantity) AS sum FROM dealer GROUP BY car_model) UNION ALL (SELECT NULL as city, NULL as car_model, sum(quantity) AS sum FROM dealer) ORDER BY city, car_model;

Time taken: 0.628 seconds, Fetched 15 row(s)

Time taken: 0.594 seconds, Fetched 15 row(s)

Time taken: 0.591 seconds, Fetched 15 row(s)

Time taken: 0.607 seconds, Fetched 15 row(s)

Time taken: 0.616 seconds, Fetched 15 row(s)

Time taken: 0.64 seconds, Fetched 15 row(s)

Time taken: 0.623 seconds, Fetched 15 row(s)

Time taken: 0.625 seconds, Fetched 15 row(s)

Time taken: 0.62 seconds, Fetched 15 row(s)

Time taken: 0.62 seconds, Fetched 15 row(s)

You can work out ,Grouping Sets Version of SQL The average time taken is 0.276s;Union All Version of SQL The average time taken is 0.616s, It's the former 2.2 times !

therefore ,Grouping Sets Version of SQL It is not only more concise in expression , It is also more efficient in performance .

RollUp and Cube

Group By In advanced usage , also RollUp and Cube Two are commonly used .

First , Let's take a look at RollUp sentence .

Spark SQL In official documents SQL Syntax One section right RollUp The statement is described below :

Specifies multiple levels of aggregations in a single statement. This clause is used to compute aggregations based on multiple grouping sets.

ROLLUPis a shorthand forGROUPING SETS. (... Some examples )

In official documents , hold RollUp Described as Grouping Sets Abbreviation , The equivalence rule is :RollUp(A, B, C) == Grouping Sets((A, B, C), (A, B), (A), ()).

such as ,Group By RollUp(city, car_model) Is equivalent to Group By Grouping Sets((city, car_model), (city), ()).

below , We go through expand extended look down RollUp edition SQL Of Optimized Logical Plan:

spark-sql> explain extended SELECT city, car_model, sum(quantity) AS sum FROM dealer GROUP BY ROLLUP(city, car_model) ORDER BY city, car_model;

== Parsed Logical Plan ==

...

== Analyzed Logical Plan ==

...

== Optimized Logical Plan ==

Sort [city#2164 ASC NULLS FIRST, car_model#2165 ASC NULLS FIRST], true

+- Aggregate [city#2164, car_model#2165, spark_grouping_id#2163L], [city#2164, car_model#2165, sum(quantity#2159) ASsum#2150L]

+- Expand [[quantity#2159, city#2157, car_model#2158, 0], [quantity#2159, city#2157, null, 1], [quantity#2159, null, null, 3]], [quantity#2159, city#2164, car_model#2165, spark_grouping_id#2163L]

+- Project [quantity#2159, city#2157, car_model#2158]

+- HiveTableRelation [`default`.`dealer`, ..., Data Cols: [id#2156, city#2157, car_model#2158, quantity#2159], Partition Cols: []]

== Physical Plan ==

...

From the above Plan It can be seen that ,RollUp The underlying implementation is also used Expand operator , explain RollUp It's really based on Grouping Sets Realized . and Expand [[quantity#2159, city#2157, car_model#2158, 0], [quantity#2159, city#2157, null, 1], [quantity#2159, null, null, 3]] Also shows RollUp Conform to the equivalence rule .

below , We follow the same line of thinking , look down Cube sentence .

Spark SQL In official documents SQL Syntax One section right Cube The statement is described below :

CUBEclause is used to perform aggregations based on combination of grouping columns specified in theGROUP BYclause.CUBEis a shorthand forGROUPING SETS. (... Some examples )

Again , Official documents put Cube Described as Grouping Sets Abbreviation , The equivalence rule is :Cube(A, B, C) == Grouping Sets((A, B, C), (A, B), (A, C), (B, C), (A), (B), (C), ()).

such as ,Group By Cube(city, car_model) Is equivalent to Group By Grouping Sets((city, car_model), (city), (car_model), ()).

below , We go through expand extended look down Cube edition SQL Of Optimized Logical Plan:

spark-sql> explain extended SELECT city, car_model, sum(quantity) AS sum FROM dealer GROUP BY CUBE(city, car_model) ORDER BY city, car_model;

== Parsed Logical Plan ==

...

== Analyzed Logical Plan ==

...

== Optimized Logical Plan ==

Sort [city#2202 ASC NULLS FIRST, car_model#2203 ASC NULLS FIRST], true

+- Aggregate [city#2202, car_model#2203, spark_grouping_id#2201L], [city#2202, car_model#2203, sum(quantity#2197) ASsum#2188L]

+- Expand [[quantity#2197, city#2195, car_model#2196, 0], [quantity#2197, city#2195, null, 1], [quantity#2197, null, car_model#2196, 2], [quantity#2197, null, null, 3]], [quantity#2197, city#2202, car_model#2203, spark_grouping_id#2201L]

+- Project [quantity#2197, city#2195, car_model#2196]

+- HiveTableRelation [`default`.`dealer`, ..., Data Cols: [id#2194, city#2195, car_model#2196, quantity#2197], Partition Cols: []]

== Physical Plan ==

...

From the above Plan It can be seen that ,Cube The bottom layer is also used Expand operator , explain Cube It's based on Grouping Sets Realization , And it also conforms to the equivalence rule .

therefore ,RollUp and Cube It can be seen as Grouping Sets The grammar sugar of , The underlying implementation and performance are the same .

Last

This paper focuses on Group By Advanced usage Groupings Sets The function and underlying implementation of the statement .

although Groupings Sets The function of , adopt Union All Can also be realized , But the former is not the grammatical sugar of the latter , Their underlying implementation is completely different .Grouping Sets It adopts the idea of combining first and then aggregating , adopt spark_grouping_id Columns to ensure the correctness of data ;Union All Then we adopt the idea of first aggregation and then combination .Grouping Sets stay SQL Statement expression and performance have greater advantages .

Group By The other two advanced uses of RollUp and Cube It can be seen as Grouping Sets The grammar sugar of , Their bottom layer is based on Expand Operator implementation , In terms of performance and direct use Grouping Sets It's the same , But in SQL More concise expression .

Reference resources

[1] Spark SQL Guide, Apache Spark

[2] apache spark 3.3 Version source code , Apache Spark, GitHub

Click to follow , The first time to learn about Huawei's new cloud technology ~

Detailed explanation SQL in Groupings Sets More articles about the function of statements and the underlying implementation logic

- Go into detail SQL Medium Null

Go into detail SQL Medium Null NULL In the computer and programming world, it means the unknown , Not sure . Although the Chinese translation is “ empty ”, But it's empty (null) Not that empty (empty). Null It represents an unknown state , The future state , For example, in Xiao Ming's pocket ...

- Detailed explanation SQL Medium GROUP BY sentence

Here's how to introduce SQL In the sentence GROUP BY sentence ,GROUP BY Statement is used in conjunction with the aggregate function , Group result sets according to one or more columns . Hope to learn from you SQL Statements help . SQL GROUP BY grammar SELEC ...

- Detailed explanation Python The usage of loop statements in

One . brief introduction Python Conditions and loop statements for , Determine the control process of the program , Reflect the diversity of structure . It is important to understand ,if.while.for And what goes with them else. elif.break.continue and pass sentence ...

- [Android Rookie zone ] SQLite Operation details --SQL grammar

The article is completely excerpted from : Peking blue bird [Android Rookie zone ] SQLite Operation details --SQL grammar :http://home.bdqn.cn/thread-49363-1-1.html SQLite The library can solve ...

- [ Re posting ]【Oracle】 Detailed explanation Oracle in NLS_LANG Use of variables

[Oracle] Detailed explanation Oracle in NLS_LANG Use of variables https://www.cnblogs.com/HDK2016/p/6880560.html NLS_LANG=LANGUAGE_TERR ...

- use IDEA Detailed explanation Spring Medium IoC and DI( Very thorough , Come in and have a look )

use IDEA Detailed explanation Spring Medium IoC and DI One .Spring IoC Basic concepts of Inversion of control (IoC) It's a more abstract concept , It is mainly used to reduce the coupling problem of computer programs , yes Spring The core of the framework . Dependency injection (DI) yes ...

- Detailed explanation Linux Medium cat Text output command usage

Make a system > LINUX > Detailed explanation Linux Medium cat Text output command usage Linux Command manuals Release time :2016-01-14 14:14:35 author : Zhang Ying I want to comment on This article ...

- jQuery: Detailed explanation jQuery In the event ( Two )

The last one said jQuery In the event , In depth learning DOM Knowledge about event binding , This article mainly discusses in depth jQuery Composite events in events . Event bubbling and event removal . Next chapter jQuery: Detailed explanation jQuery In the event ( One ) ...

- Graphic, Unity3D in Material Of Tiling and Offset What's going on?

Graphic, Unity3D in Material Of Tiling and Offset What's going on? Tiling and Offset summary Tiling Express UV The scaling factor of the coordinates ,Offset Express UV The starting position of the coordinates . This is, of course, an alternative ...

- 【 turn 】 Detailed explanation C# Reflection in

Link to the original post here : Detailed explanation C# Reflection in Reflection (Reflection) 2008 year 01 month 02 Japan Wednesday 11:21 Two real-world examples : 1.B super : We probably did it when we had a physical examination B Super ,B Super can detect you inside through your belly ...

Random recommendation

- Linux2.6 Kernel stack family --TCP agreement 1. send out

Introducing tcp Before sending a function, we need to introduce a key structure sk_buff, stay linux in ,sk_buff Structure represents a message : Then see sending function source code , There's no focus on the fragmentation of hardware support - Gather : /* sendmsg System call in ...

- turn --android Toast Complete works of ( Five situations ) Build your own Toast

Toast Used to show users some help / Tips . Now I do 5 Medium effect , To illustrate Toast A powerful , Define your own Toast. 1. Default effect Code Toast.makeText(getApplicationCo ...

- Getting Error "Invalid Argument to LOCATOR.CONTROL: ORG_LOCATOR_CONTROL='' in Material Requirements Form ( file ID 1072379.1)

APPLIES TO: Oracle Work in Process - Version 11.5.10.2 and later Information in this document applie ...

- Android example - Call the system APP(XE10+ millet 2)

Related information : Group number 383675978 Example source code : unit Unit1; interface uses System.SysUtils, System.Types, System.UITypes, Sys ...

- Shortest path (Floyd The template questions )

subject :http://acm.sdut.edu.cn/sdutoj/showproblem.php?pid=2143&cid=1186 #include<stdio.h> #incl ...

- AIR Detection network

package com.juyou.util.net { import flash.events.StatusEvent; import flash.net.URLRequest; import ai ...

- Linux Of selinux

SELinux Operation mode Discipline (Subject):SELinux order , So you can put 『 The main body 』 Follow process In the equal sign : The goal is (Object): Whether the main program can access 『 Target resources 』 One ...

- 【 shortest path 】 poj 2387

#include <iostream> #include <stdlib.h> #include <limits.h> #include <string.h& ...

- stay ubuntu linux Write your own python Script

stay ubuntu linux Write your own simple bash Script . Realization function : Enter a simple command in the terminal ( With pmpy For example (play music python), In order to distinguish what I said before bash Script added py suffix ), Come on ...

- shell test and find Command instance parsing

shell test and find Command instance parsing Let's say \build\core\product.mk Learn the relevant parts define _find-android-products-files $(shell t ...