当前位置:网站首页>MongoDB 遇见 spark(进行整合)

MongoDB 遇见 spark(进行整合)

2022-07-07 11:17:00 【cui_yonghua】

基础篇(能解决工作中80%的问题):

进阶篇:

其它:

一. 与HDFS相比,MongoDB的优势

1、在存储方式上,HDFS以文件为单位,每个文件大小为 64M~128M, 而mongo则表现的更加细颗粒化;

2、MongoDB支持HDFS没有的索引概念,所以在读取速度上更快;

3、MongoDB更加容易进行修改数据;

4、HDFS响应级别为分钟,而MongoDB响应类别为毫秒;

5、可以利用MongoDB强大的 Aggregate功能进行数据筛选或预处理;

6、如果使用MongoDB,就不用像传统模式那样,到Redis内存数据库计算后,再将其另存到HDFS上。

二. 大数据的分层架构

MongoDB可以替换HDFS, 作为大数据平台中最核心的部分,可以分层如下:

第1层:MongoDB或者HDFS;

第2层:资源管理 如 YARN、Mesos、K8S;

第3层:计算引擎 如 MapReduce、Spark;

第4层:程序接口 如 Pig、Hive、Spark SQL、Spark Streaming、Data Frame等

参考:

mongo-python-driver: https://github.com/mongodb/mongo-python-driver/

三. 源码介绍

mongo-spark/examples/src/test/python/introduction.py

# -*- coding: UTF-8 -*-

#

# Copyright 2016 MongoDB, Inc.

#

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# To run this example use:

# ./bin/spark-submit --master "local[4]" \

# --conf "spark.mongodb.input.uri=mongodb://127.0.0.1/test.coll?readPreference=primaryPreferred" \

# --conf "spark.mongodb.output.uri=mongodb://127.0.0.1/test.coll" \

# --packages org.mongodb.spark:mongo-spark-connector_2.11:2.0.0 \

# introduction.py

from pyspark.sql import SparkSession

if __name__ == "__main__":

spark = SparkSession.builder.appName("Python Spark SQL basic example").getOrCreate()

logger = spark._jvm.org.apache.log4j

logger.LogManager.getRootLogger().setLevel(logger.Level.FATAL)

# Save some data

characters = spark.createDataFrame([("Bilbo Baggins", 50), ("Gandalf", 1000), ("Thorin", 195), ("Balin", 178), ("Kili", 77), ("Dwalin", 169), ("Oin", 167), ("Gloin", 158), ("Fili", 82), ("Bombur", None)], ["name", "age"])

characters.write.format("com.mongodb.spark.sql").mode("overwrite").save()

# print the schema

print("Schema:")

characters.printSchema()

# read from MongoDB collection

df = spark.read.format("com.mongodb.spark.sql").load()

# SQL

df.registerTempTable("temp")

centenarians = spark.sql("SELECT name, age FROM temp WHERE age >= 100")

print("Centenarians:")

centenarians.show()

边栏推荐

- 10 张图打开 CPU 缓存一致性的大门

- 迅为iTOP-IMX6ULL开发板Pinctrl和GPIO子系统实验-修改设备树文件

- 详细介绍六种开源协议(程序员须知)

- 在字符串中查找id值MySQL

- 【Presto Profile系列】Timeline使用

- 2022-07-07 Daily: Ian Goodfellow, the inventor of Gan, officially joined deepmind

- [untitled]

- 【学习笔记】AGC010

- Star Enterprise Purdue technology layoffs: Tencent Sequoia was a shareholder who raised more than 1billion

- [untitled]

猜你喜欢

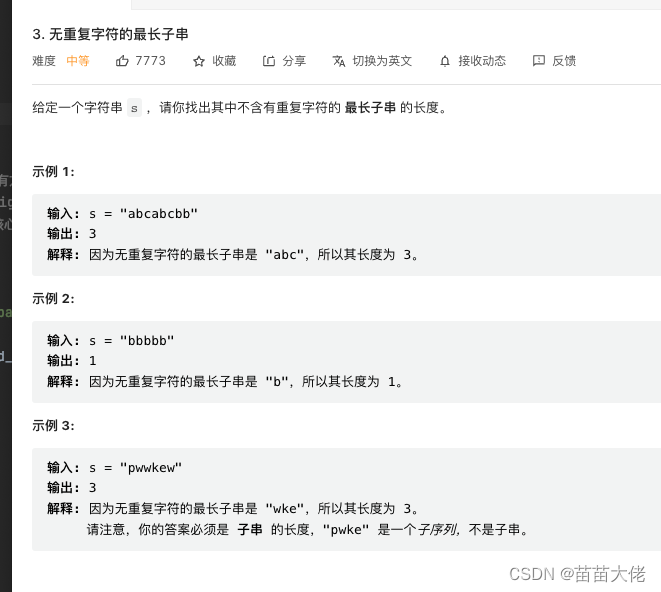

leecode3. 无重复字符的最长子串

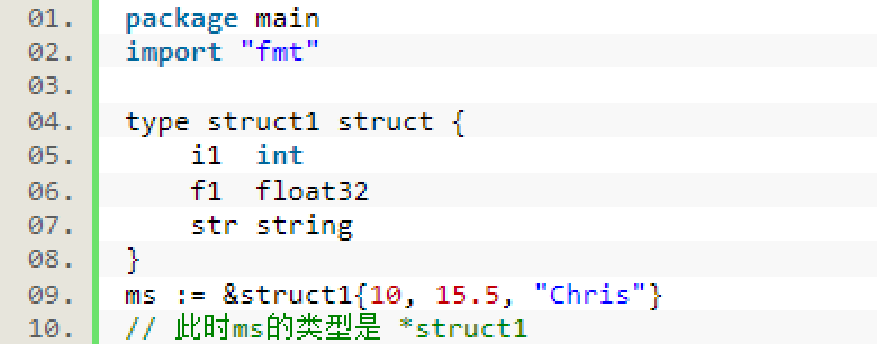

Go language learning notes - structure

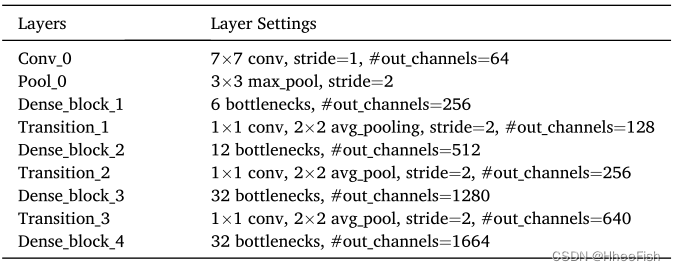

Isprs2021/ remote sensing image cloud detection: a geographic information driven method and a new large-scale remote sensing cloud / snow detection data set

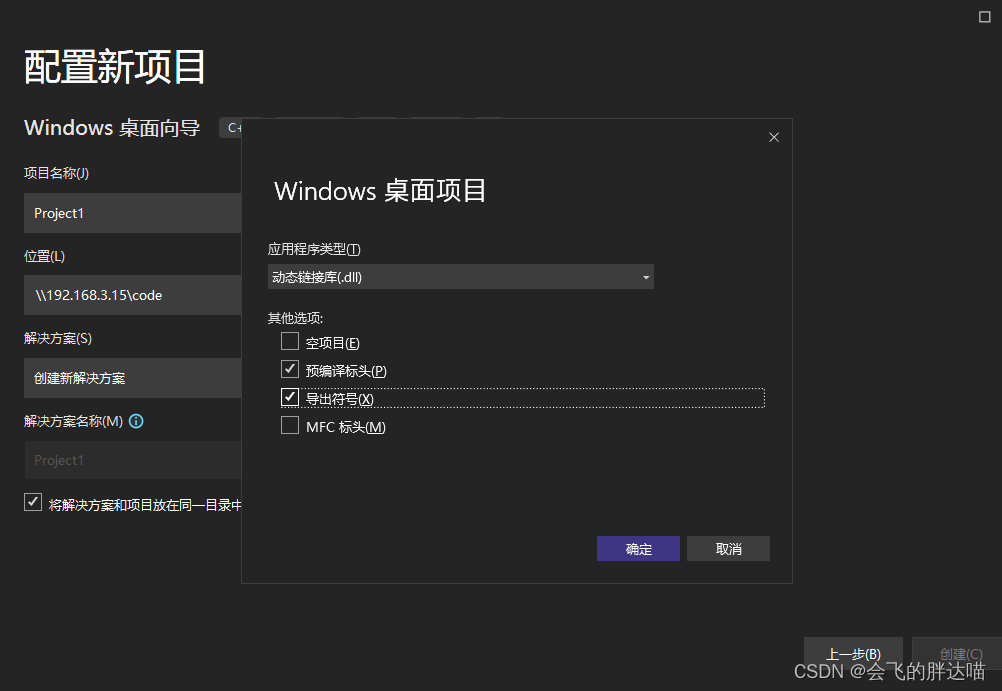

error LNK2019: 无法解析的外部符号

“新红旗杯”桌面应用创意大赛2022

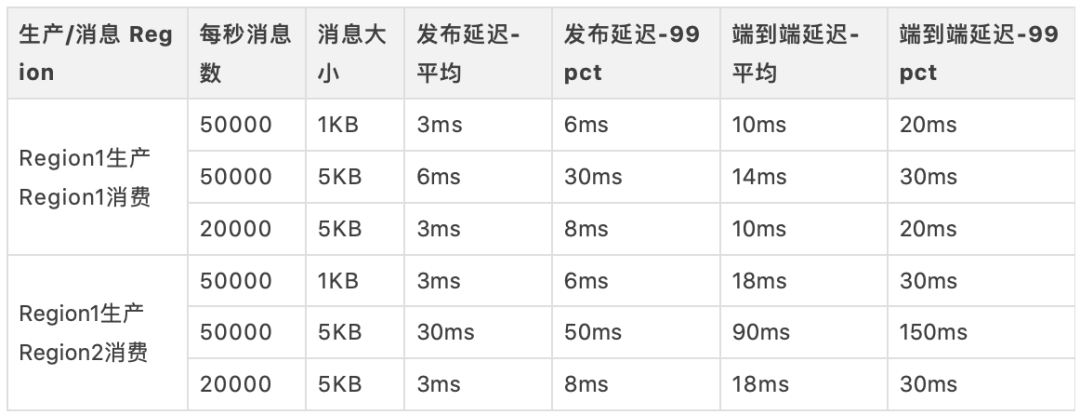

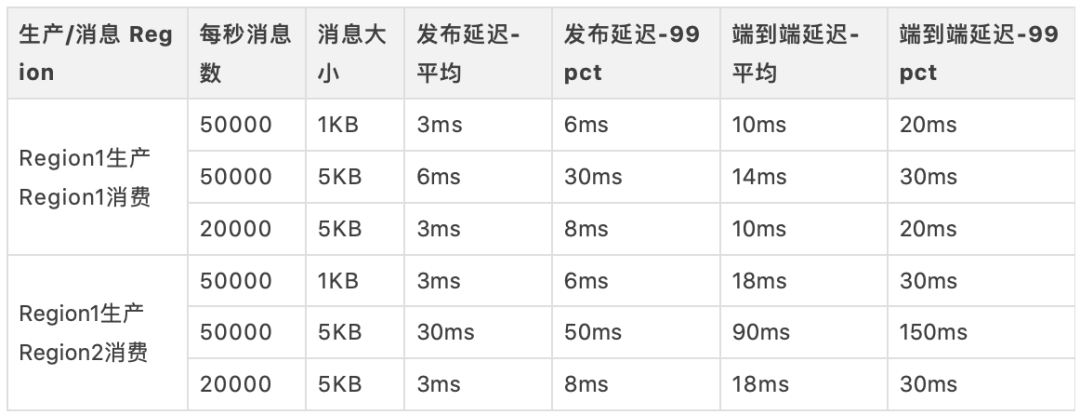

Blog recommendation | Apache pulsar cross regional replication scheme selection practice

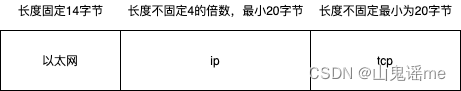

PACP学习笔记一:使用 PCAP 编程

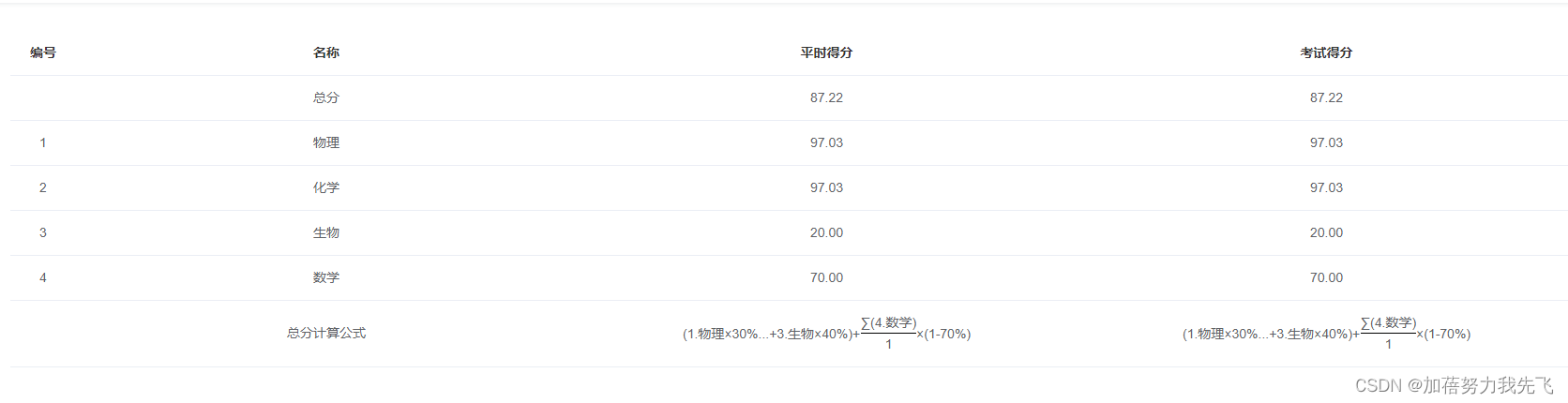

将数学公式在el-table里面展示出来

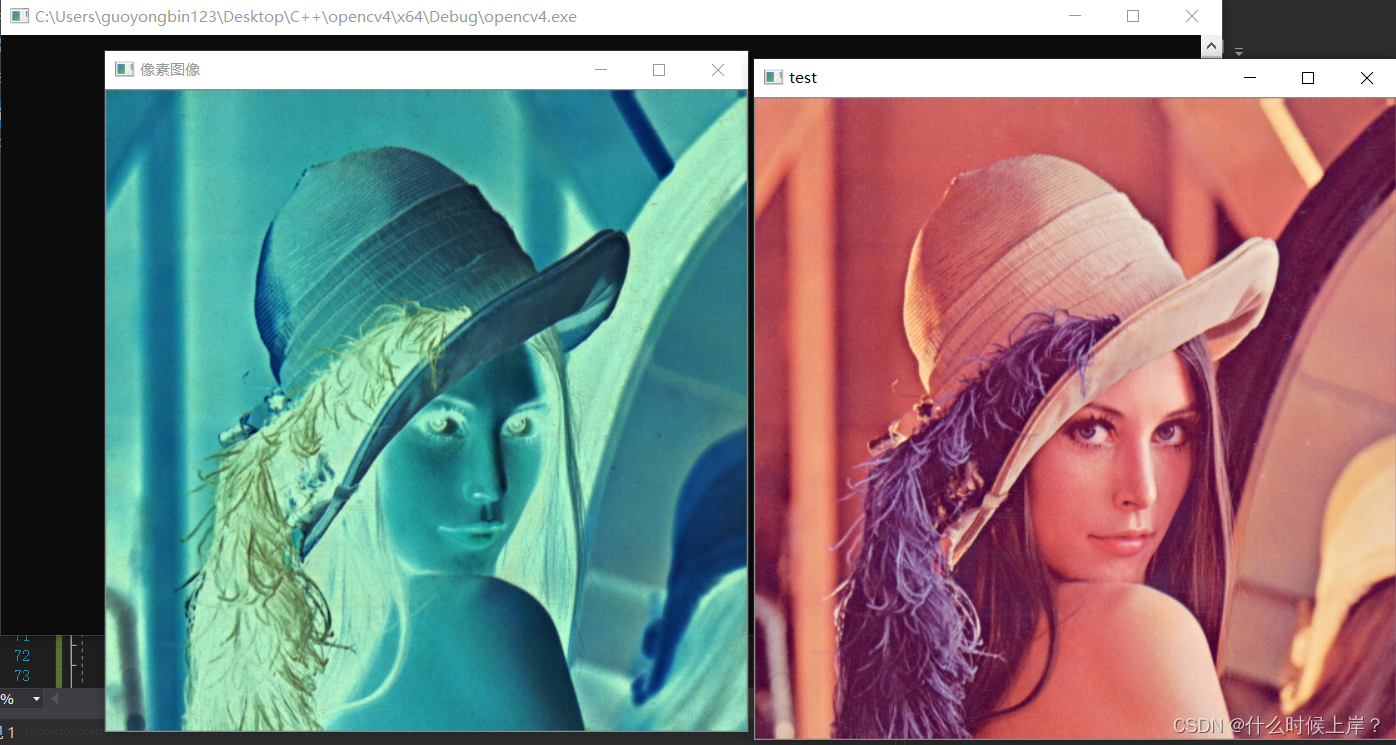

Image pixel read / write operation

博文推荐|Apache Pulsar 跨地域复制方案选型实践

随机推荐

Day26 IP query items

《开源圆桌派》第十一期“冰与火之歌”——如何平衡开源与安全间的天然矛盾?

测试下摘要

Grep of three swordsmen in text processing

regular expression

基于鲲鹏原生安全,打造安全可信的计算平台

2022 polymerization process test question simulation test question bank and online simulation test

Awk of three swordsmen in text processing

HZOJ #240. Graphic printing IV

.Net下极限生产力之efcore分表分库全自动化迁移CodeFirst

Cloud detection 2020: self attention generation countermeasure network for cloud detection in high-resolution remote sensing images

. Net ultimate productivity of efcore sub table sub database fully automated migration codefirst

Leetcode skimming: binary tree 20 (search in binary search tree)

在字符串中查找id值MySQL

Star Enterprise Purdue technology layoffs: Tencent Sequoia was a shareholder who raised more than 1billion

解决缓存击穿问题

PCAP学习笔记二:pcap4j源码笔记

红杉中国完成新一期90亿美元基金募集

CMU15445 (Fall 2019) 之 Project#2 - Hash Table 详解

Layer pop-up layer closing problem