Some superficial understanding of word2vec

2022-07-07 10:29:00 【strawberry47】

Recently a friend asked me how word2vec works, so I reviewed the relevant material and am writing down some thoughts before I forget them.

word2vec is a method for obtaining word vectors. It is an improvement on NNLM (the neural network language model).

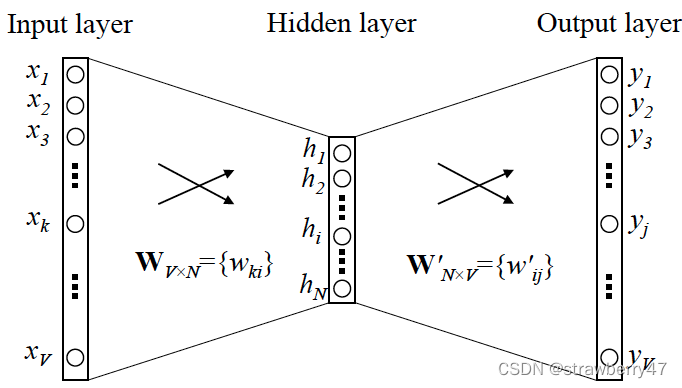

The model being trained is essentially a neural network with a single hidden layer.

It comes in two forms: ① skip-gram, which predicts the surrounding words from the middle word; ② CBOW, which predicts the middle word from the surrounding words.

Note that these two forms are only two different ways of training. In both cases, what is kept at the end is the weight matrix from the input layer to the hidden layer, and it is used as the word vectors.
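To make the "input -> hidden weights are the word vectors" point concrete, here is a minimal, untrained sketch of a CBOW forward pass in plain Python. The vocabulary, the sizes, and the names `W_in`, `W_out` and `cbow_forward` are made up for illustration. Averaging the context vectors in the hidden layer is the usual CBOW formulation, not something taken from a particular library.

```python
import math
import random

random.seed(0)

vocab = ["the", "weather", "is", "really", "nice", "today"]
word_to_id = {w: i for i, w in enumerate(vocab)}
V, D = len(vocab), 4  # vocabulary size, hidden (embedding) size

# W_in: input -> hidden weights, one row per word. These rows are the word vectors.
W_in = [[random.uniform(-0.5, 0.5) for _ in range(D)] for _ in range(V)]
# W_out: hidden -> output weights, one row per word.
W_out = [[random.uniform(-0.5, 0.5) for _ in range(D)] for _ in range(V)]


def cbow_forward(context_words):
    """Return one probability per vocabulary word, given the context words."""
    ids = [word_to_id[w] for w in context_words]
    # Multiplying a one-hot vector by W_in just selects a row, so index directly
    # and average the selected rows to get the hidden layer.
    hidden = [sum(W_in[i][d] for i in ids) / len(ids) for d in range(D)]
    scores = [sum(h * w for h, w in zip(hidden, row)) for row in W_out]
    # Softmax, shifted by the max score for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


probs = cbow_forward(["the", "is", "really", "nice"])
word_vector = W_in[word_to_id["weather"]]  # what would be kept after training
```

With random weights the probabilities are meaningless. Training would adjust `W_in` and `W_out` so that the true middle word gets a high probability, and afterwards only the rows of `W_in` are used.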

Take CBOW training as an example. Suppose the corpus is the sentence "今天的天气真好" ("It's a fine day today"). The input to the model is the one-hot vectors of the six characters "今 天 的 天 真 好", and the output is a set of probabilities over the vocabulary. We want the probability of the missing character "气" to be the largest.
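The way such training examples are cut out of a sentence can be sketched as follows. This assumes the example sentence is "今天的天气真好" ("It's a fine day today") split into single characters, with a window of 4 so that the whole sentence serves as context. The function names are hypothetical.

```python
def cbow_examples(tokens, window):
    """Yield (context, target) pairs: the context is used to predict the target."""
    for i, target in enumerate(tokens):
        left = tokens[max(0, i - window):i]
        right = tokens[i + 1:i + 1 + window]
        yield left + right, target


def skipgram_examples(tokens, window):
    """Yield (center, neighbor) pairs: the center is used to predict each neighbor."""
    for context, center in cbow_examples(tokens, window):
        for word in context:
            yield center, word


tokens = list("今天的天气真好")  # split the sentence into single characters
examples = list(cbow_examples(tokens, window=4))
context, target = examples[4]  # index 4 is the position of "气"
print(context, "->", target)
```

Each token in `context` would then be turned into a one-hot vector before being fed to the network. The same windows give skip-gram its examples, only with the direction of prediction reversed.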

In practice, you usually just call the gensim library: pass in the corpus and it trains the word vectors.

Some small training tricks: negative sampling and hierarchical softmax (which uses a Huffman tree).
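As a rough illustration of negative sampling: instead of computing a softmax over the whole vocabulary, each training step scores the true target against a handful of randomly drawn "negative" words. The sketch below shows only the drawing step. The helper name and the toy corpus are hypothetical, while the 0.75 exponent on the word counts follows the original word2vec implementation.

```python
import random
from collections import Counter


def make_negative_sampler(tokens, power=0.75, seed=0):
    """Return a function that draws k negative words, skipping the true target."""
    counts = Counter(tokens)
    words = list(counts)
    # Raising counts to the 0.75 power flattens the distribution,
    # so rare words are drawn somewhat more often than their raw frequency.
    weights = [counts[w] ** power for w in words]
    rng = random.Random(seed)

    def sample(target, k):
        negatives = []
        while len(negatives) < k:
            word = rng.choices(words, weights=weights, k=1)[0]
            if word != target:
                negatives.append(word)
        return negatives

    return sample


tokens = "the weather is really nice today and the weather was nice yesterday".split()
sample = make_negative_sampler(tokens)
negatives = sample(target="weather", k=5)
```

During training, only the output weights of the target and of these `k` negatives are updated, which is much cheaper than touching every word in the vocabulary.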

References: "[NLP] Understanding Word Vectors in Seconds: The Essence of Word2vec"; "A Summary of word2vec" (a blog post written by a senior labmate)

边栏推荐

- HDU-2196 树形DP学习笔记

- Study summary of postgraduate entrance examination in September

- [higherhrnet] higherhrnet detailed heat map regression code of higherhrnet

- 01 use function to approximate cosine function (15 points)

- EasyExcel读取写入简单使用

- 移动端通过设置rem使页面内容及字体大小自动调整

- Elegant controller layer code

- Study summary of postgraduate entrance examination in October

- Multisim--软件相关使用技巧

- OpenGL glLightfv 函数的应用以及光源的相关知识

猜你喜欢

LLVM之父Chris Lattner:為什麼我們要重建AI基礎設施軟件

【acwing】789. 数的范围(二分基础)

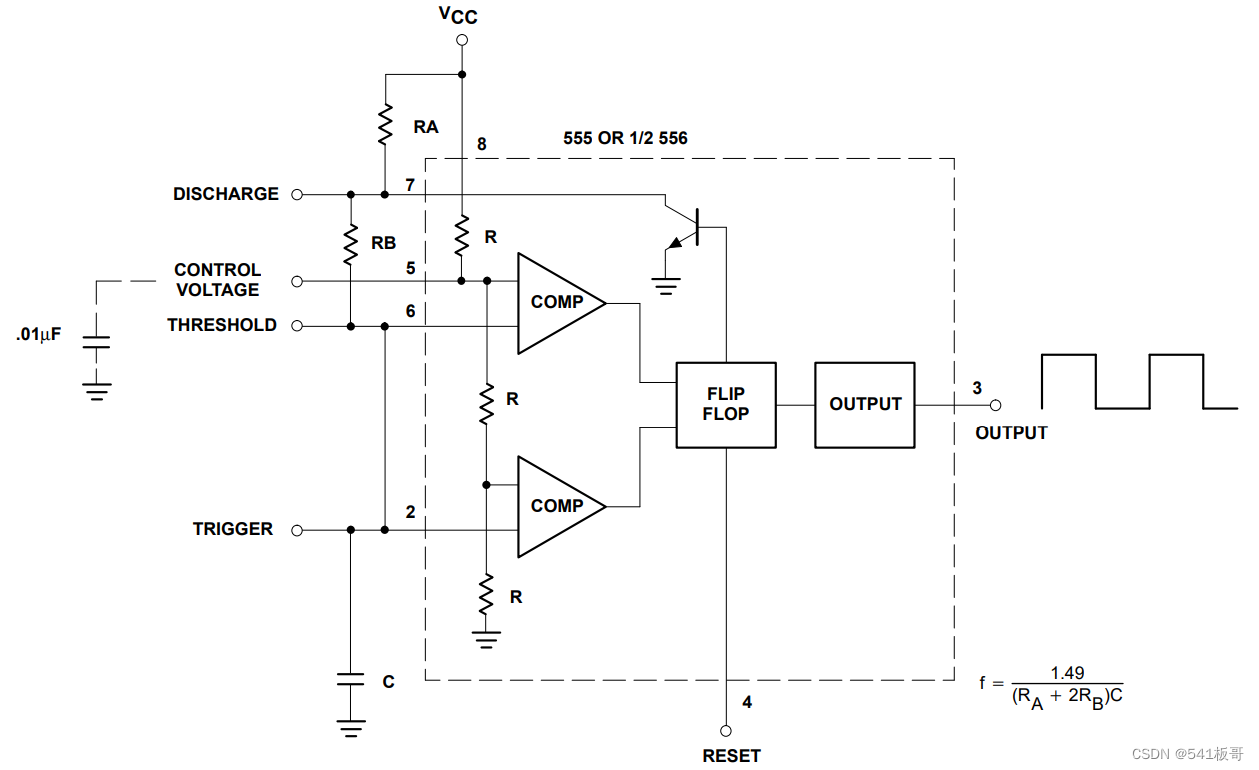

555电路详解

The story of Plato and his three disciples: how to find happiness? How to find the ideal partner?

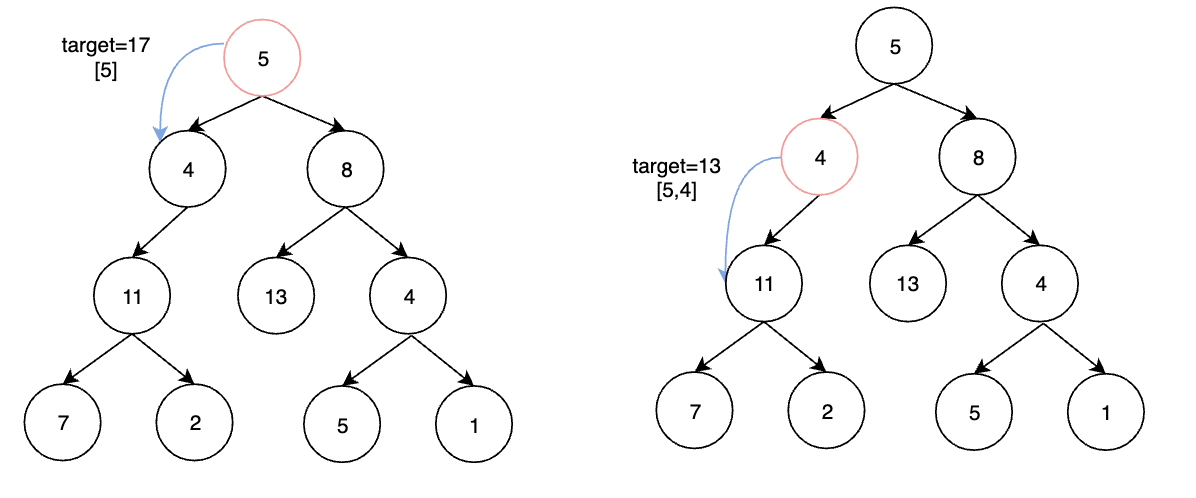

Leetcode exercise - 113 Path sum II

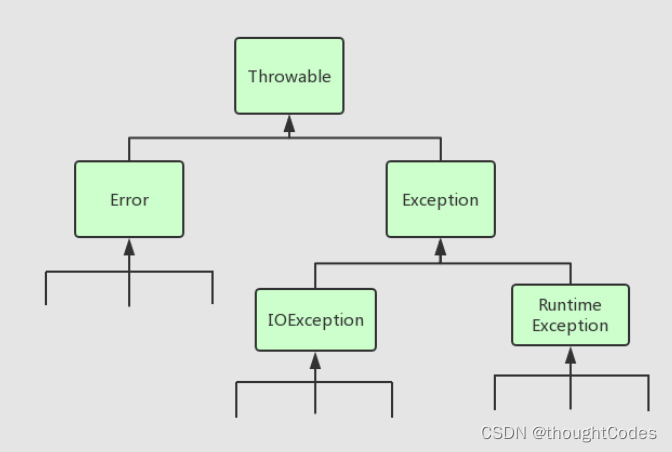

Talking about the return format in the log, encapsulation format handling, exception handling

Chris LATTNER, the father of llvm: why should we rebuild AI infrastructure software

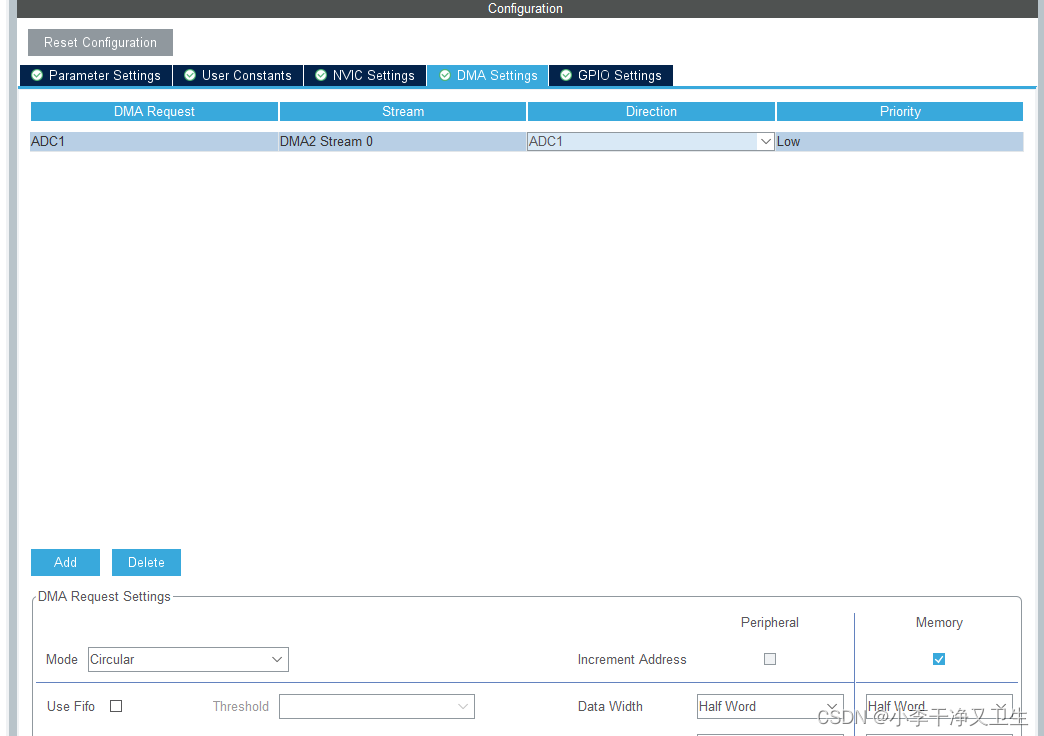

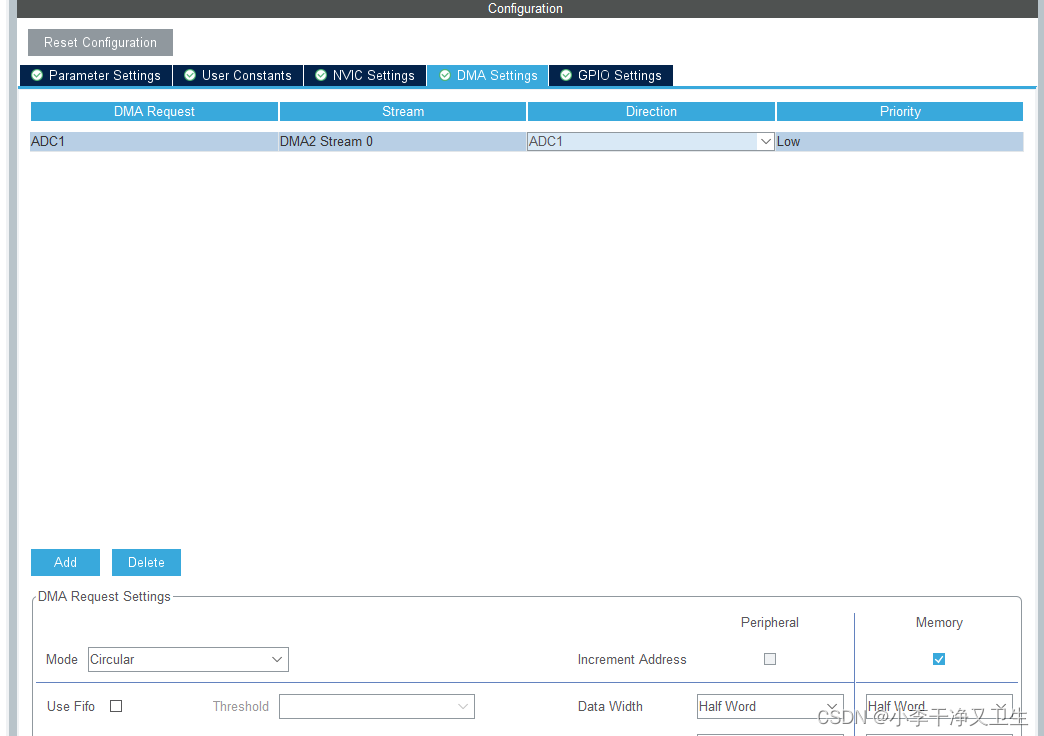

The Hal library is configured with a general timer Tim to trigger ADC sampling, and then DMA is moved to the memory space.

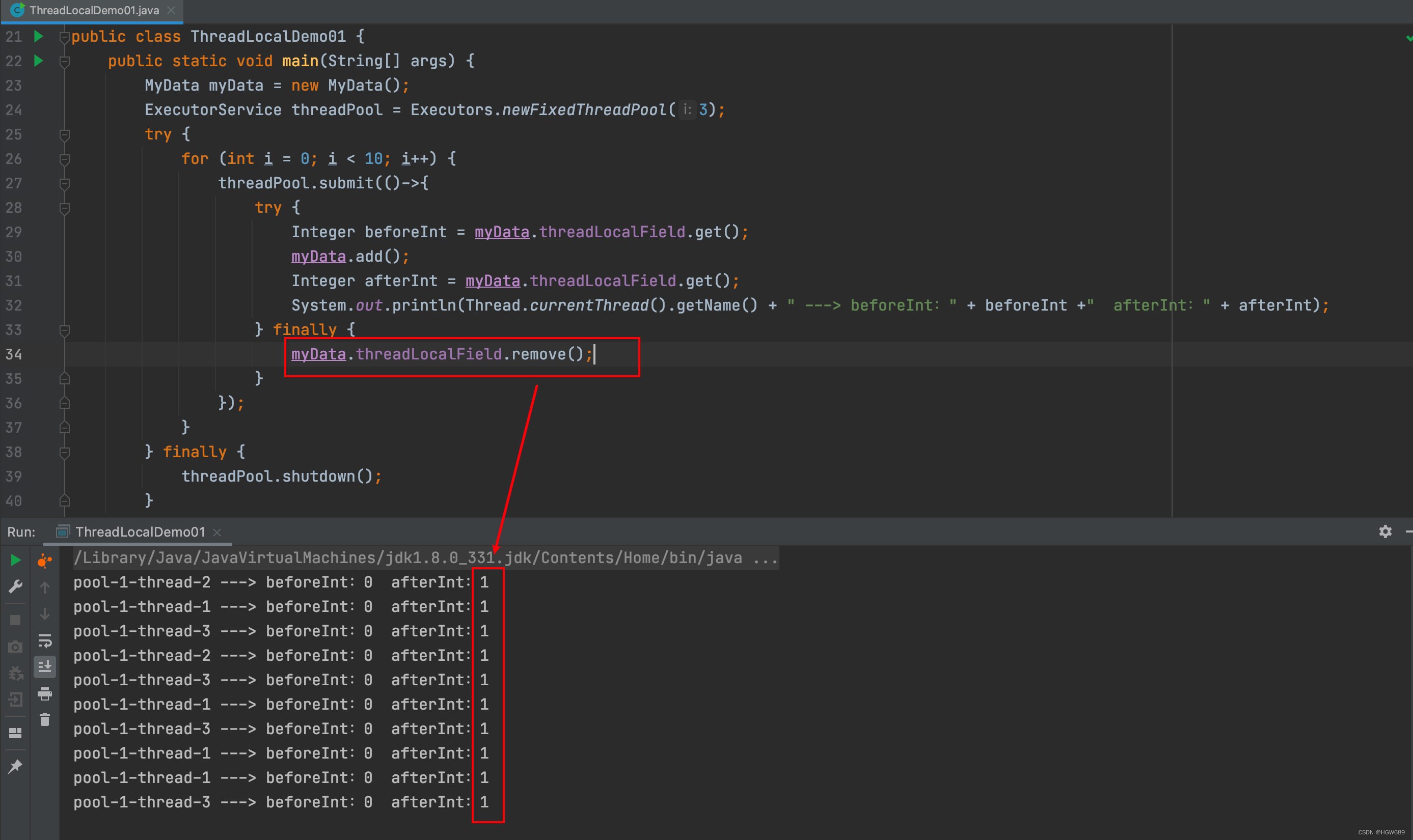

ThreadLocal会用可不够

HAL库配置通用定时器TIM触发ADC采样,然后DMA搬运到内存空间。

随机推荐

leetcode-304:二维区域和检索 - 矩阵不可变

Some properties of leetcode139 Yang Hui triangle

Sword finger offer 38 Arrangement of strings [no description written]

How to cancel automatic saving of changes in sqlyog database

HAL库配置通用定时器TIM触发ADC采样,然后DMA搬运到内存空间。

优雅的 Controller 层代码

柏拉图和他的三个弟子的故事:如何寻找幸福?如何寻找理想伴侣?

JMeter installation

The story of Plato and his three disciples: how to find happiness? How to find the ideal partner?

Study summary of postgraduate entrance examination in August

BigDecimal数值比较

LLVM之父Chris Lattner:为什么我们要重建AI基础设施软件

IO model review

Jump to the mobile terminal page or PC terminal page according to the device information

Embedded background - chip

Socket通信原理和实践

Hdu-2196 tree DP learning notes

Review of the losers in the postgraduate entrance examination

.NET配置系统

Study summary of postgraduate entrance examination in September