Debiasing word embeddings | On word embeddings and bias removal

2022-07-01 17:42:00 【LolitaAnn】

This article shares my notes. The content mainly comes from Andrew Ng's (Wu Enda) deep learning course. [1]

The existence of stereotypes

Word embeddings have a major influence on how well our models generalize, so we should also make sure they are free of unintended forms of bias, such as sexism, racism, and religious discrimination.

Personally, I find the word "discrimination" a little strong; here we can think of it as stereotyping.

For example:

My father is a doctor, my mother is _______.

My father is a company employee, my mother is _______.

Boys like _______. Girls like _______.

The first blank is likely to be filled with "nurse", the second with "housewife", the third with "Transformers", and the fourth with "Barbie dolls".

What is this? It is the so-called gender stereotype. Stereotypes like these are also tied to socioeconomic status.

The learning algorithm itself holds no stereotypes, but the text written by humans does, and word embeddings are "very good" at learning them.

So we want to modify the learning algorithm to reduce, or ideally eliminate, these unintended types of bias.

Over many decades, over many centuries, I think humanity has made progress in reducing these types of bias. And I think maybe, fortunately for AI, we actually have better ideas for quickly reducing the bias in AI than for quickly reducing the bias in the human race. Although I think we are by no means done for AI as well, and there is still a lot of research and hard work to be done to reduce these types of biases in our learning algorithms.

Removing stereotypes from word embeddings

We follow the method of arXiv:1607.06520 [2].

It consists of three main steps:

- Identify bias direction.

- Neutralize: For every word that is not definitional, project to get rid of bias.

- Equalize pairs.

Suppose we already have a well-trained word embedding.

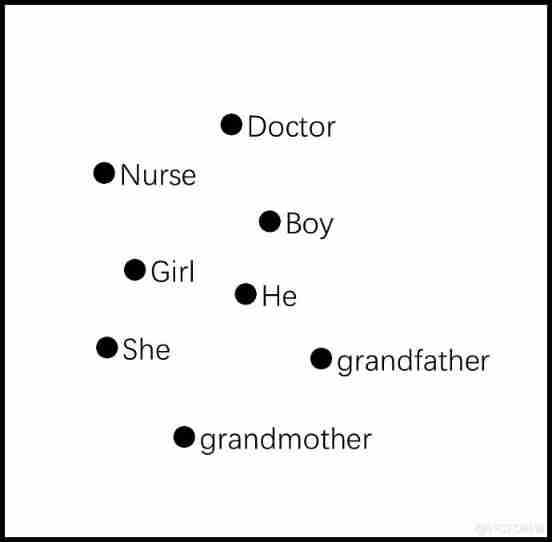

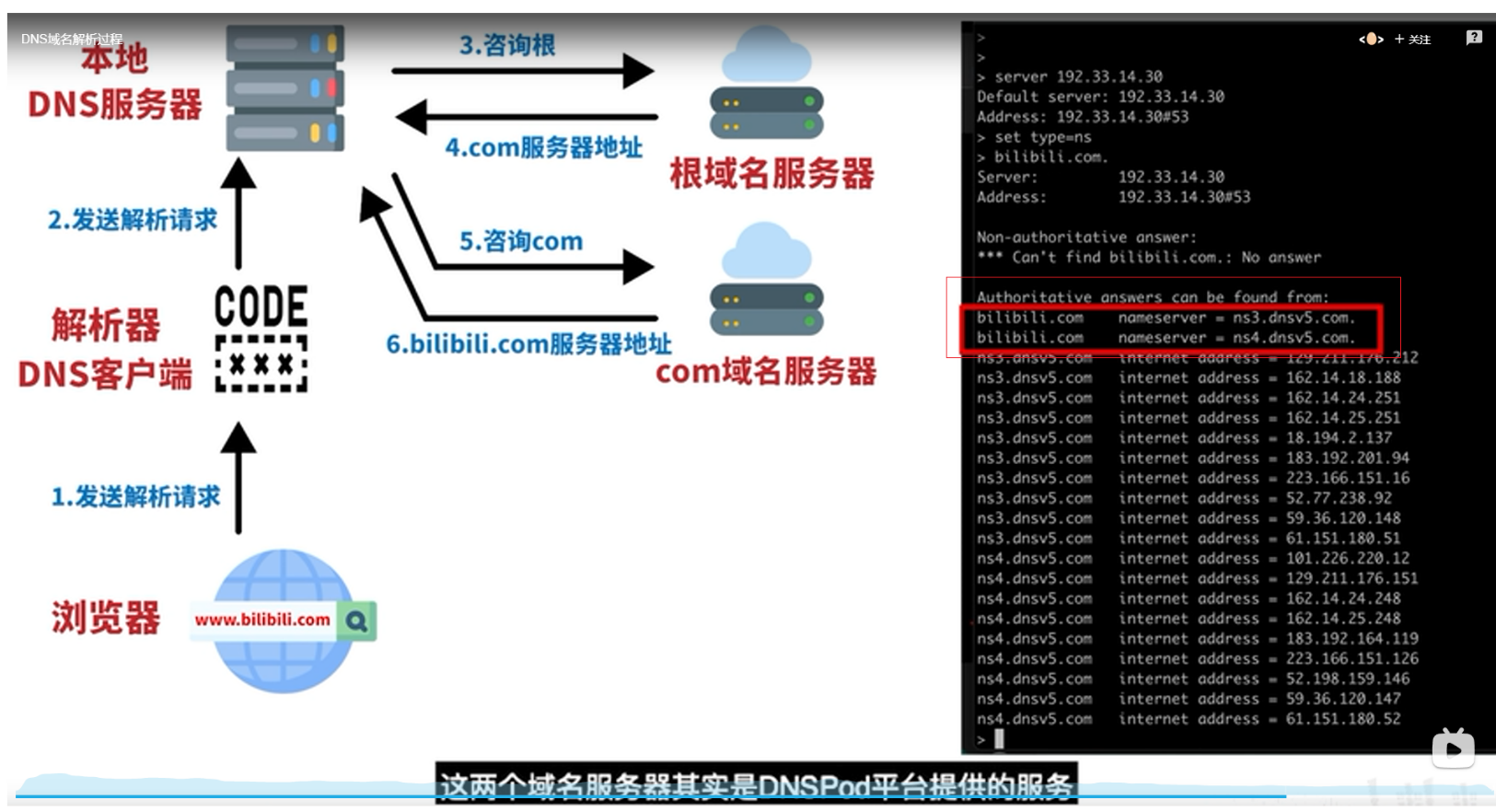

As before, the embedding uses 300-dimensional features, which we project onto a two-dimensional plane for visualization. The figures show how these words are distributed on that plane.

1. Identify the bias direction

To find the main direction of the stereotype between two words, we use a property of word embeddings mentioned earlier: subtracting two word vectors yields the main dimension along which they differ.

After these subtractions, you will find that the differences lie mainly along the gender dimension.

Then we average these difference vectors.

Averaging gives the main direction of the stereotype bias. We can then also find the directions that are unrelated to this particular bias.

Note: in this example we treat the bias direction ("gender") as a one-dimensional space, and the remaining, unrelated directions form a 299-dimensional subspace. This is a simplification of the original paper, which uses principal component analysis to find a bias subspace. For details, see the references at the end of the article.
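A minimal sketch of step 1 in the simplified one-dimensional setting: subtract the vectors of each definitional pair, average the differences, and normalize the result to unit length. The pairs he/she and male/female and the 3-dimensional vectors are hypothetical toy data, not taken from a trained embedding, and this averaging is the simplified version described above rather than the paper's full method.

```python
import math


def sub(u, v):
    """Element-wise difference u - v."""
    return [a - b for a, b in zip(u, v)]


def normalize(v):
    """Scale v to unit Euclidean length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def bias_direction(emb, pairs):
    """Average the difference vectors of definitional pairs, then normalize."""
    diffs = [sub(emb[a], emb[b]) for a, b in pairs]
    dim = len(diffs[0])
    avg = [sum(d[i] for d in diffs) / len(diffs) for i in range(dim)]
    return normalize(avg)


# Hypothetical 3-dimensional toy vectors; the first axis plays the role of "gender".
emb = {
    "he": [1.0, 0.2, 0.5],
    "she": [-1.0, 0.2, 0.5],
    "male": [0.9, -0.3, 0.1],
    "female": [-0.9, -0.3, 0.1],
}
g = bias_direction(emb, [("he", "she"), ("male", "female")])
print(g)  # approximately [1.0, 0.0, 0.0]
```

In the toy data the pair members differ only in the first coordinate, so the averaged direction points straight along that axis; with real embeddings the differences are noisier, which is why averaging over several pairs helps.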

2. Neutralize

Some words are clearly gender-specific, while others should be free of any gender distinction.

Gender-specific words include grandmother and grandfather; gender-neutral words include nurse and doctor. We should neutralize the gender-neutral words, that is, remove their component along the bias direction (the horizontal distance in the figure).
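A minimal sketch of the neutralize step, assuming a one-dimensional bias direction g as in the simplified setting above. It subtracts the projection of e onto g, computing e_debiased = e - (e·g / ‖g‖²) g, so the result is orthogonal to g. The vectors are hypothetical toy values.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def neutralize(e, g):
    """Remove the component of e along the bias direction g.

    Computes e - (e.g / g.g) * g, so the result is orthogonal to g.
    """
    scale = dot(e, g) / dot(g, g)
    return [ei - scale * gi for ei, gi in zip(e, g)]


# Hypothetical toy vectors: the first axis is the "gender" direction.
g = [1.0, 0.0, 0.0]
nurse = [-0.6, 0.8, 0.3]  # leans toward the "female" side of the axis

nurse_debiased = neutralize(nurse, g)
print(nurse_debiased)          # [0.0, 0.8, 0.3]
print(dot(nurse_debiased, g))  # 0.0
```

Only the component along g changes; the other 299 dimensions of a real embedding (two in this toy) are left untouched.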

3. Equalize pairs

The second step dealt with gender-neutral words. So what is the problem with gender-specific words?

The figure above clearly shows that nurse is noticeably closer to girl than to boy. So when generating text that mentions nurse, girl would be more likely to appear. We therefore need to balance these distances through computation.

After the computation, we move the gender-specific pair so that each gender-neutral word is equidistant from the two words of the pair.
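A minimal sketch of the equalize step. It follows my reading of the equalize formula in [2], simplified to a single pair and a one-dimensional bias direction g, and it assumes all embeddings are normalized to unit length. With μ the midpoint of the pair, μ_B its projection onto g, and ν = μ − μ_B, each word w of the pair is replaced by ν + sqrt(1 − ‖ν‖²)·(w_B − μ_B)/‖w_B − μ_B‖. The vectors are hypothetical toy values, and nurse is assumed to have been neutralized already.

```python
import math


def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def project(v, g):
    """Component of v along the bias direction g."""
    s = dot(v, g) / dot(g, g)
    return [s * x for x in g]


def distance(u, v):
    """Euclidean distance between two vectors."""
    d = [a - b for a, b in zip(u, v)]
    return math.sqrt(dot(d, d))


def equalize(w1, w2, g):
    """Equalize a gender-specific pair (w1, w2), assuming unit-length inputs.

    Both outputs share the same bias-free part nu and have bias components of
    equal size and opposite sign, so any vector orthogonal to g ends up
    equidistant from them. Assumes w1 and w2 differ along g.
    """
    mu = [(a + b) / 2 for a, b in zip(w1, w2)]
    mu_b = project(mu, g)
    nu = [m - mb for m, mb in zip(mu, mu_b)]  # shared, bias-free part
    scale = math.sqrt(max(0.0, 1.0 - dot(nu, nu)))  # keeps outputs unit length
    result = []
    for w in (w1, w2):
        d = [wb - mb for wb, mb in zip(project(w, g), mu_b)]
        n = math.sqrt(dot(d, d))
        result.append([x + scale * y / n for x, y in zip(nu, d)])
    return result[0], result[1]


# Hypothetical unit-length toy vectors; the first axis is the "gender" direction.
g = [1.0, 0.0, 0.0]
boy = [0.8, 0.6, 0.0]
girl = [-0.6, 0.0, 0.8]
nurse = [0.0, 0.6, 0.8]  # already neutralized: orthogonal to g

print(round(distance(nurse, boy), 4), round(distance(nurse, girl), 4))  # 1.1314 0.8485
boy_eq, girl_eq = equalize(boy, girl, g)
print(round(distance(nurse, boy_eq), 4), round(distance(nurse, girl_eq), 4))  # 1.0 1.0
```

Before equalization nurse is closer to girl than to boy, matching the situation in the figure; afterwards the two distances are equal, and the equalized vectors still have unit length.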

边栏推荐

- Object. fromEntries()

- Research Report on China's enzyme Market Forecast and investment strategy (2022 Edition)

- DRF --- response rewrite

- Reflective XSS vulnerability

- [C language supplement] judge which day tomorrow is (tomorrow's date)

- Redis -- data type and operation

- 换掉UUID,NanoID更快更安全!

- Common design parameters of solid rocket motor

- 【牛客网刷题系列 之 Verilog快速入门】~ 优先编码器电路①

- Leetcode records - sort -215, 347, 451, 75

猜你喜欢

整形数组合并【JS】

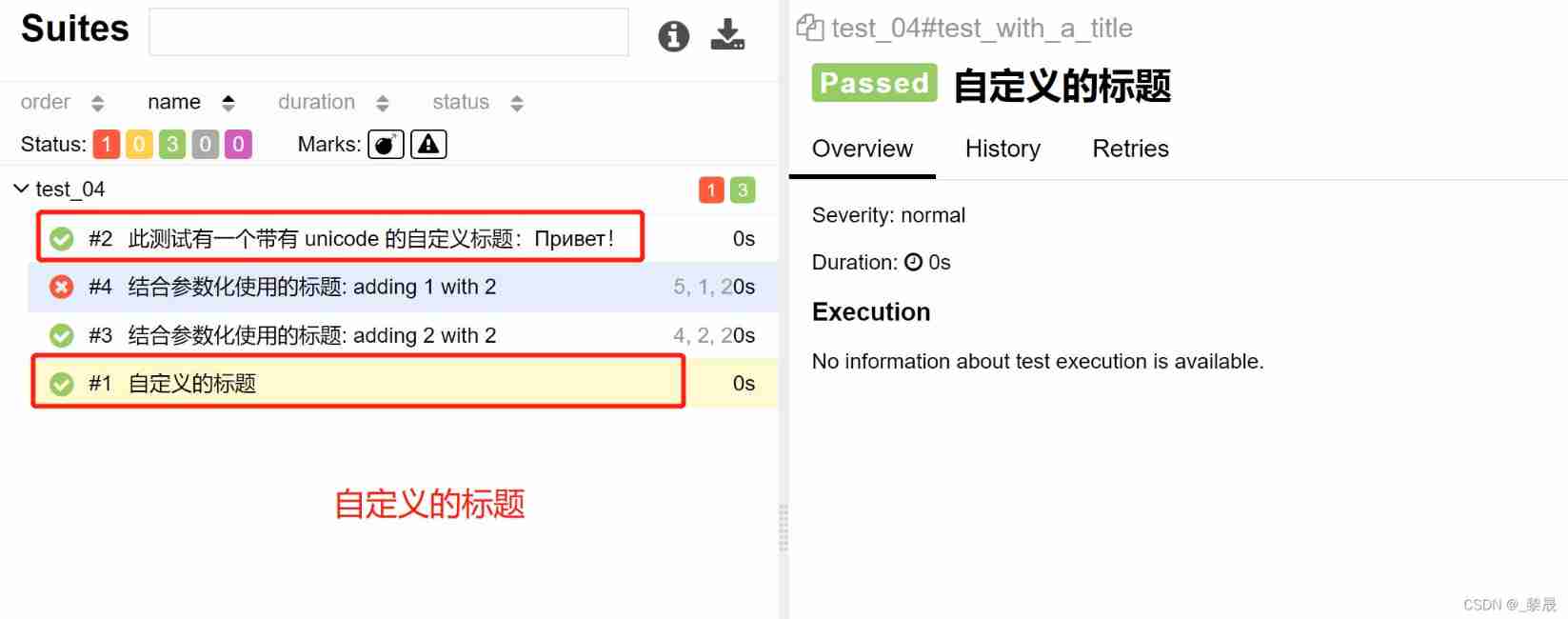

Pytest learning notes (13) -allure of allure Description () and @allure title()

SQL注入漏洞(Mysql与MSSQL特性)

Penetration practice vulnhub range Keyring

Good looking UI mall source code has been scanned, no back door, no encryption

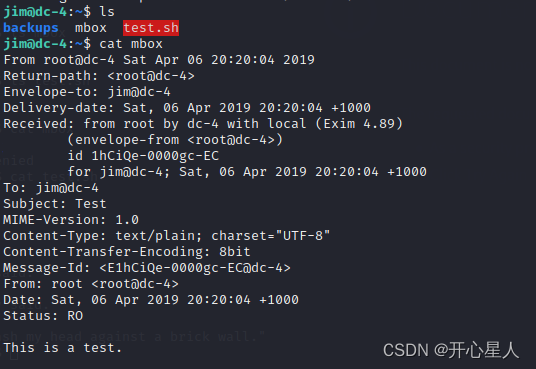

【Try to Hack】vulnhub DC4

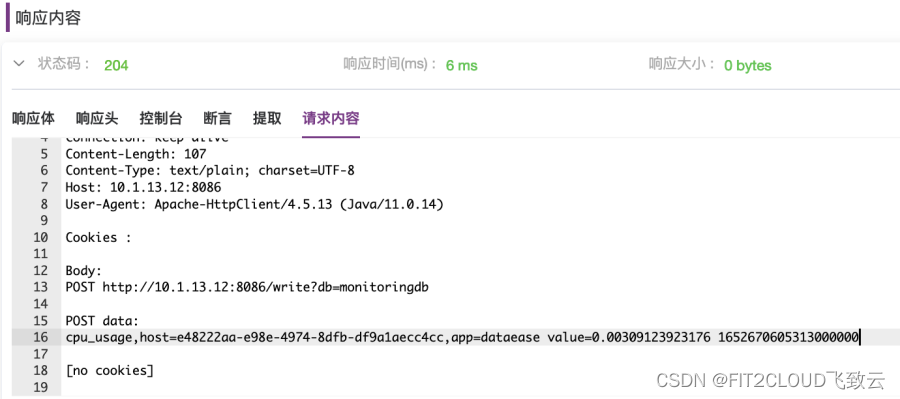

How to use JMeter function and mockjs function in metersphere interface test

DNS

Rotation order and universal lock of unity panel

Heavy disclosure! Hundreds of important information systems have been invaded, and the host has become a key attack target

随机推荐

麦趣尔:媒体报道所涉两批次产品已下架封存,受理消费者诉求

Product service, operation characteristics

Redis -- data type and operation

存在安全隐患 起亚召回部分K3新能源

为什么你要考虑使用Prisma

[splishsplash] about how to receive / display user parameters, MVC mode and genparam on GUI and JSON

Mysql database - Advanced SQL statement (2)

LeetCode中等题之TinyURL 的加密与解密

《中国智慧环保产业发展监测与投资前景研究报告(2022版)》

【Try to Hack】vulnhub DC4

阿里云李飞飞:中国云数据库在很多主流技术创新上已经领先国外

中国超高分子量聚乙烯产业调研与投资前景报告(2022版)

Report on research and investment prospects of China's silicon nitride ceramic substrate industry (2022 Edition)

[Verilog quick start of Niuke network question brushing series] ~ priority encoder circuit ①

[wrung Ba wrung Ba is 20] [essay] why should I learn this in college?

Integer array merge [JS]

SQL注入漏洞(Mysql与MSSQL特性)

DNS

Gold, silver and four want to change jobs, so we should seize the time to make up

反射型XSS漏洞