
CS231n Notes (Part 2): For Complete Beginners

2022-07-05 16:43:00 【Small margin, rush】

Contents

Transfer learning and object localization & detection

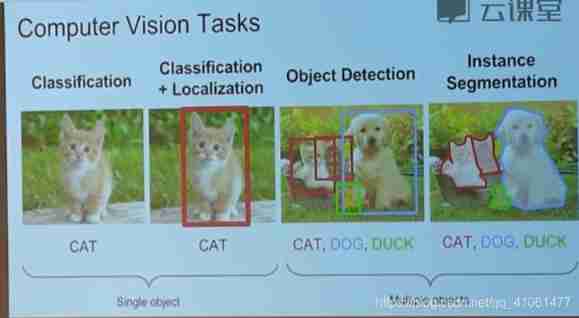

Object segmentation & Semantic segmentation

Transfer learning and object localization & detection

Can object detection be treated as a regression problem? For different images the network may have to output 4 bounding boxes for one and 8 for another. Because the number of outputs is not fixed, detection cannot be framed directly as a fixed-size regression problem.

Transfer learning

In practice, few datasets are large enough, so few people train a network from scratch. The common approach is to pre-train a CNN on a very large dataset and then use the pre-trained network either as the initialization for fine-tuning or as a feature extractor.

- CNN as a feature extractor. Take a CNN pre-trained on ImageNet, remove the last fully connected layer (the layer used for classification), and use the rest of the network as a feature extractor.

- Fine-tuning the CNN. Replace and retrain the classifier on top of the network, and also continue training the pre-trained weights on the new data by backpropagating into them.
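The difference between the two strategies comes down to which weights receive gradient updates. The sketch below is a toy illustration, not a real CNN: the hypothetical `W_backbone` (one linear layer plus ReLU) stands in for a pre-trained network with its last FC layer removed, and a new softmax head is trained on top. With `fine_tune=False` the backbone stays frozen (feature extractor); with `fine_tune=True` gradients also flow into it.

```python
import numpy as np


def relu(x):
    return np.maximum(x, 0.0)


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def train_head(X, y, W_backbone, num_classes, fine_tune=False,
               lr=0.1, steps=300, seed=0):
    """Train a new linear classifier on top of a hypothetical pre-trained backbone.

    W_backbone is a stand-in for the pre-trained CNN minus its last FC layer.
    fine_tune=False: backbone frozen, used only as a feature extractor.
    fine_tune=True:  the backbone weights are updated as well.
    """
    rng = np.random.default_rng(seed)
    W_backbone = W_backbone.copy()
    W_head = 0.01 * rng.standard_normal((W_backbone.shape[1], num_classes))
    b_head = np.zeros(num_classes)
    onehot = np.eye(num_classes)[y]
    n = X.shape[0]
    for _ in range(steps):
        pre = X @ W_backbone
        feats = relu(pre)                         # the "extracted features"
        probs = softmax(feats @ W_head + b_head)
        dlogits = (probs - onehot) / n            # gradient of mean cross-entropy
        if fine_tune:
            # Backpropagate through the head and the ReLU into the backbone.
            dpre = (dlogits @ W_head.T) * (pre > 0)
            W_backbone -= lr * (X.T @ dpre)
        W_head -= lr * (feats.T @ dlogits)
        b_head -= lr * dlogits.sum(axis=0)
    return W_backbone, W_head, b_head


def predict(X, W_backbone, W_head, b_head):
    return np.argmax(relu(X @ W_backbone) @ W_head + b_head, axis=1)
```

In the frozen case the backbone returned is identical to the one passed in; only the head changes.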

Transfer learning scenarios:

- The new dataset is small and very similar to the original dataset. Fine-tuning the CNN is not recommended; instead, use the pre-trained CNN as a feature extractor and train a linear classifier on its features.

- The new dataset is large and very similar to the original dataset. Because there is enough new data, you can fine-tune the whole network.

- The new dataset is small and very different from the original dataset. Because the dataset is small, it is still best to train only a linear classifier. But because the data differ from the original dataset, it works better to train that classifier on features taken from earlier (shallower) layers of the pre-trained network.

- The new dataset is large and very different from the original dataset. Because there is enough new data, you can afford to retrain the network.
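The four scenarios form a 2×2 table, which can be written as a small lookup. This is only a rule of thumb: reducing "large" and "similar" to booleans is a simplification, and the function name and returned strings are illustrative.

```python
def transfer_strategy(large_dataset: bool, similar_to_original: bool) -> str:
    """Map the four transfer-learning scenarios above to a suggested strategy."""
    if not large_dataset and similar_to_original:
        return "frozen CNN features + linear classifier"
    if large_dataset and similar_to_original:
        return "fine-tune the whole network"
    if not large_dataset and not similar_to_original:
        return "linear classifier on earlier-layer features"
    return "retrain the network"
```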

Object localization & detection

Besides assigning a class label to the image, we also draw a box around where the object is. In classification + localization, the number of output boxes is known in advance; in object detection it is not. That is the key difference between the two tasks: because the number of objects to detect varies, detection cannot directly use the regression framework.
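Because classification + localization has a fixed number of outputs (here one object), it can be trained as classification plus regression. A minimal sketch, assuming the network emits `C` class scores and 4 box numbers and that the two losses are simply added with a hypothetical weight `box_weight`:

```python
import numpy as np


def localization_loss(class_scores, box_pred, true_class, true_box, box_weight=1.0):
    """Multi-task loss for classifying and localizing ONE object.

    class_scores: (C,) raw scores; box_pred / true_box: (4,) box coordinates.
    """
    shifted = class_scores - class_scores.max()          # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum())
    cls_loss = -log_probs[true_class]                    # softmax cross-entropy
    box_loss = np.sum((box_pred - true_box) ** 2)        # L2 regression loss
    return float(cls_loss + box_weight * box_loss)
```

For detection this fixed output shape breaks down, since the number of boxes changes per image.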

Sliding window: OverFeat

Slide windows of several sizes across many positions in the image and run the classifier on each window.
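A minimal sketch of enumerating the windows, assuming square windows and a single hypothetical `stride` for all sizes. Each window would then be cropped and classified; in practice OverFeat shares this computation by applying the network convolutionally rather than looping over crops.

```python
def sliding_windows(img_w, img_h, window_sizes, stride):
    """Yield (x0, y0, x1, y1) windows of several sizes slid across the image."""
    for size in window_sizes:
        for y0 in range(0, img_h - size + 1, stride):
            for x0 in range(0, img_w - size + 1, stride):
                yield (x0, y0, x0 + size, y0 + size)
```

The number of windows grows quickly with image size and the number of scales, which is why running a full CNN per window is expensive.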

R-CNN

- Pre-train a CNN.

- Build the training set: first apply the Selective Search algorithm to propose 2000 to 3000 candidate boxes.

- Preprocess each candidate region and feed it to the CNN to extract image features. The features are sent to SVM classifiers, which compute the classification loss. At the same time, the features are also sent to a regressor, which computes an L2 loss on the bounding-box offsets.

- Train with backpropagation.
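The notes don't define the "offsets" the regressor predicts. The sketch below uses the parameterization from the R-CNN paper (center shift scaled by proposal size, log-ratio of width and height), with boxes given as `(center_x, center_y, w, h)`; the function names are illustrative.

```python
import numpy as np


def encode_offsets(proposal, gt):
    """Regression targets mapping a proposal box onto a ground-truth box."""
    px, py, pw, ph = proposal
    gx, gy, gw, gh = gt
    return np.array([(gx - px) / pw, (gy - py) / ph,
                     np.log(gw / pw), np.log(gh / ph)])


def decode_offsets(proposal, t):
    """Apply predicted offsets t to a proposal to get a refined box."""
    px, py, pw, ph = proposal
    tx, ty, tw, th = t
    return np.array([px + tx * pw, py + ty * ph, pw * np.exp(tw), ph * np.exp(th)])


def offset_l2_loss(pred_t, proposal, gt):
    """L2 loss between predicted offsets and the target offsets."""
    return float(np.sum((pred_t - encode_offsets(proposal, gt)) ** 2))
```

Scaling by the proposal size makes the targets independent of how large the box is in pixels.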

Fast R-CNN

Fast R-CNN addresses R-CNN's slow training and prediction: instead of running the CNN on every candidate region, it extracts features from the whole image once and then selects the candidate regions on the resulting feature map.

- Like R-CNN, use Selective Search to generate 2000-plus candidate boxes.

- Feed the whole image directly into the CNN to extract features.

- Map the 2000 boxes onto the feature map produced by the CNN's last layer.
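A sketch of the mapping step, followed by the pooling Fast R-CNN applies next (RoI pooling, not described above), which turns every projected region into a fixed-size grid. Assumptions: a single-channel feature map, a hypothetical total `stride` (image pixels per feature cell), and boxes lying inside the image.

```python
import numpy as np


def roi_max_pool(feature_map, box, stride, out_size=2):
    """Project an image-space box onto the feature map, then max-pool to out_size x out_size.

    feature_map: (H, W) array; box: (x0, y0, x1, y1) in image pixels.
    """
    x0, y0, x1, y1 = (int(round(c / stride)) for c in box)
    x1, y1 = max(x1, x0 + 1), max(y1, y0 + 1)   # keep at least one feature cell
    region = feature_map[y0:y1, x0:x1]
    h, w = region.shape
    ys = np.linspace(0, h, out_size + 1).astype(int)
    xs = np.linspace(0, w, out_size + 1).astype(int)
    out = np.zeros((out_size, out_size))
    for i in range(out_size):
        for j in range(out_size):
            # Each output cell takes the max over its sub-window (at least 1x1).
            cell = region[ys[i]:max(ys[i + 1], ys[i] + 1),
                          xs[j]:max(xs[j + 1], xs[j] + 1)]
            out[i, j] = cell.max()
    return out
```

Whatever the box size, the output shape is the same, so all candidate regions can share the downstream fully connected layers.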

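Faster R-CNN

Faster R-CNN replaces Selective Search with a Region Proposal Network (RPN) that generates the candidate regions directly from the CNN feature map.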

SSD

The idea of SSD is to divide the image into a grid and derive several base boxes from the center of each grid cell. A neural network then classifies all of these base boxes in a single pass and regresses offsets for each of them.
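Generating the base (default) boxes is plain geometry. A minimal sketch, assuming a square image, one grid of `grid_size × grid_size` cells, and a hypothetical list of `(w, h)` box shapes reused at every cell (real SSD uses several grids at different scales):

```python
def default_boxes(grid_size, image_size, box_sizes):
    """Return (cx, cy, w, h) base boxes centred on every grid cell.

    The result has grid_size**2 * len(box_sizes) boxes.
    """
    cell = image_size / grid_size
    boxes = []
    for row in range(grid_size):
        for col in range(grid_size):
            cx, cy = (col + 0.5) * cell, (row + 0.5) * cell   # cell centre
            for (w, h) in box_sizes:
                boxes.append((cx, cy, w, h))
    return boxes
```

For each of these boxes the network predicts class scores plus 4 offsets, so the whole output has a fixed size and is computed in one forward pass.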

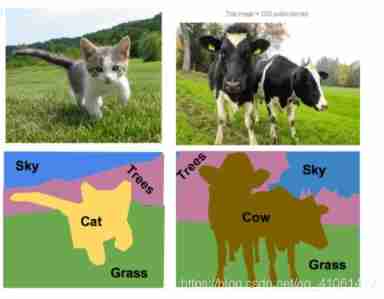

Object segmentation & Semantic segmentation

Semantic segmentation classifies every pixel in the image. It does not distinguish between individual objects and only cares about pixels. Running the network at full image resolution is costly, so a common design is a framework that first downsamples and then upsamples.
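"Classify every pixel" means the loss is an ordinary softmax cross-entropy computed at each pixel and averaged. A minimal sketch, assuming the network outputs a `(C, H, W)` score map and labels are an `(H, W)` array of class ids:

```python
import numpy as np


def pixelwise_cross_entropy(scores, labels):
    """Mean softmax cross-entropy over all pixels.

    scores: (C, H, W) class scores; labels: (H, W) integer class ids.
    """
    shifted = scores - scores.max(axis=0, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))
    H, W = labels.shape
    rows, cols = np.meshgrid(np.arange(H), np.arange(W), indexing="ij")
    # Pick the log-probability of the true class at every pixel.
    return float(-log_probs[labels, rows, cols].mean())


def predict_mask(scores):
    """Per-pixel predicted class: argmax over the class axis."""
    return scores.argmax(axis=0)
```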

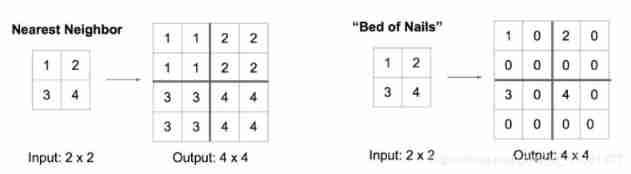

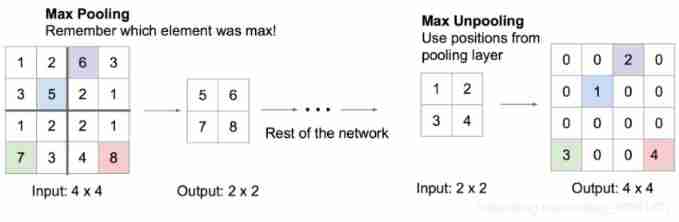

Downsampling can use convolution layers and pooling; upsampling uses unpooling or transposed convolution.
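Transposed convolution upsamples by letting each input value "stamp" a scaled copy of the kernel into the output, with positions spaced `stride` apart and overlapping stamps summed. A single-channel sketch, assuming a square kernel and no padding:

```python
import numpy as np


def conv_transpose2d(x, kernel, stride=2):
    """Single-channel transposed convolution without padding.

    Output size per axis: (n - 1) * stride + k.
    """
    H, W = x.shape
    k = kernel.shape[0]
    out = np.zeros(((H - 1) * stride + k, (W - 1) * stride + k))
    for i in range(H):
        for j in range(W):
            # Add the kernel, scaled by this input value, at the strided position.
            out[i * stride:i * stride + k, j * stride:j * stride + k] += x[i, j] * kernel
    return out
```

With a 2×2 kernel of ones and stride 2, a 2×2 input becomes a 4×4 output in which each value fills its own 2×2 block; with a 3×3 kernel the stamps overlap and are summed.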

Another method is called max unpooling. During the earlier max pooling, it records the index of each maximum value; when unpooling, it puts each value back at its recorded index and fills all other positions with 0.
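The record-then-restore idea can be sketched in two functions, assuming non-overlapping 2×2 pooling on a single-channel map whose sides are divisible by the pool size:

```python
import numpy as np


def max_pool_with_indices(x, size=2):
    """Non-overlapping max pooling that also remembers where each max came from."""
    H, W = x.shape
    out = np.zeros((H // size, W // size))
    idx = np.zeros((H // size, W // size, 2), dtype=int)
    for i in range(H // size):
        for j in range(W // size):
            win = x[i * size:(i + 1) * size, j * size:(j + 1) * size]
            r, c = np.unravel_index(np.argmax(win), win.shape)
            out[i, j] = win[r, c]
            idx[i, j] = (i * size + r, j * size + c)   # position in the input
    return out, idx


def max_unpool(pooled, idx, out_shape):
    """Put each value back at its recorded position; everything else stays 0."""
    out = np.zeros(out_shape)
    for i in range(pooled.shape[0]):
        for j in range(pooled.shape[1]):
            r, c = idx[i, j]
            out[r, c] = pooled[i, j]
    return out
```

In an encoder-decoder network the values passed to `max_unpool` would be the decoder's feature map, while the indices come from the matching pooling layer in the encoder.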

Object segmentation: Mask R-CNN

The goal is to go one step further than object detection and segment each object at the pixel level.

The image is first processed by a CNN to produce a feature map; an RPN then generates candidate regions, which are projected onto that feature map. Up to this point it is the same as Faster R-CNN. After that there are two branches: one, identical to Faster R-CNN, predicts the class and bounding box of each candidate; the other, similar to semantic segmentation, classifies each pixel inside the candidate region.
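For the mask branch, the Mask R-CNN paper predicts one small mask per class for each candidate region, applies a per-pixel sigmoid, and uses only the mask of the ground-truth class in a binary cross-entropy loss. A sketch of that loss for a single region, assuming `(K, m, m)` mask logits and an `(m, m)` 0/1 target:

```python
import numpy as np


def mask_loss(mask_logits, gt_class, gt_mask):
    """Per-region mask loss in the style of Mask R-CNN.

    mask_logits: (K, m, m), one mask per class; gt_mask: (m, m) of 0/1.
    """
    logits = mask_logits[gt_class]              # only the ground-truth class's mask
    probs = 1.0 / (1.0 + np.exp(-logits))       # per-pixel sigmoid
    eps = 1e-12                                 # avoid log(0)
    bce = -(gt_mask * np.log(probs + eps) + (1 - gt_mask) * np.log(1 - probs + eps))
    return float(bce.mean())                    # average over pixels
```

Because each class has its own sigmoid mask, classes do not compete per pixel; the class decision is left to the other branch.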

边栏推荐

- 一些认知的思考

- Spring Festival Limited "forget trouble in the year of the ox" gift bag waiting for you to pick it up~

- OneForAll安装使用

- 漫画:什么是服务熔断?

- Single merchant v4.4 has the same original intention and strength!

- 迁移/home分区

- Enter a command with the keyboard

- tf. sequence_ Mask function explanation case

- Pspnet | semantic segmentation and scene analysis

- Record a 'very strange' troubleshooting process of cloud security group rules

猜你喜欢

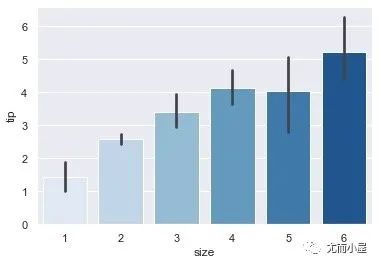

Seaborn绘制11个柱状图

Benji Banas membership pass holders' second quarter reward activities update list

Enter a command with the keyboard

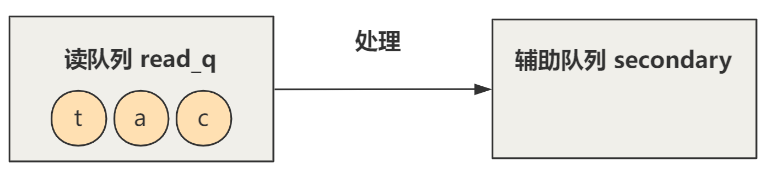

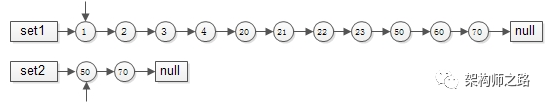

Summary of methods for finding intersection of ordered linked list sets

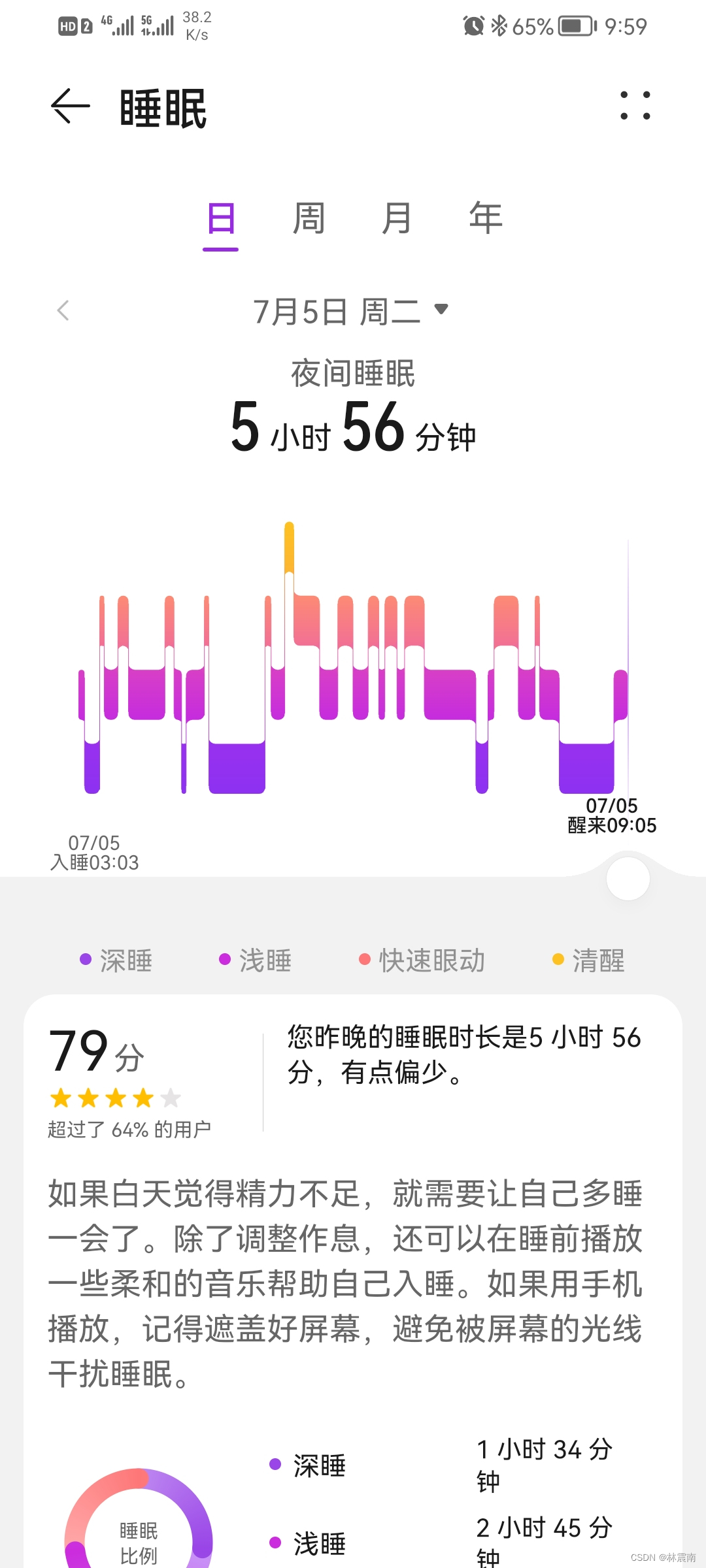

今日睡眠质量记录79分

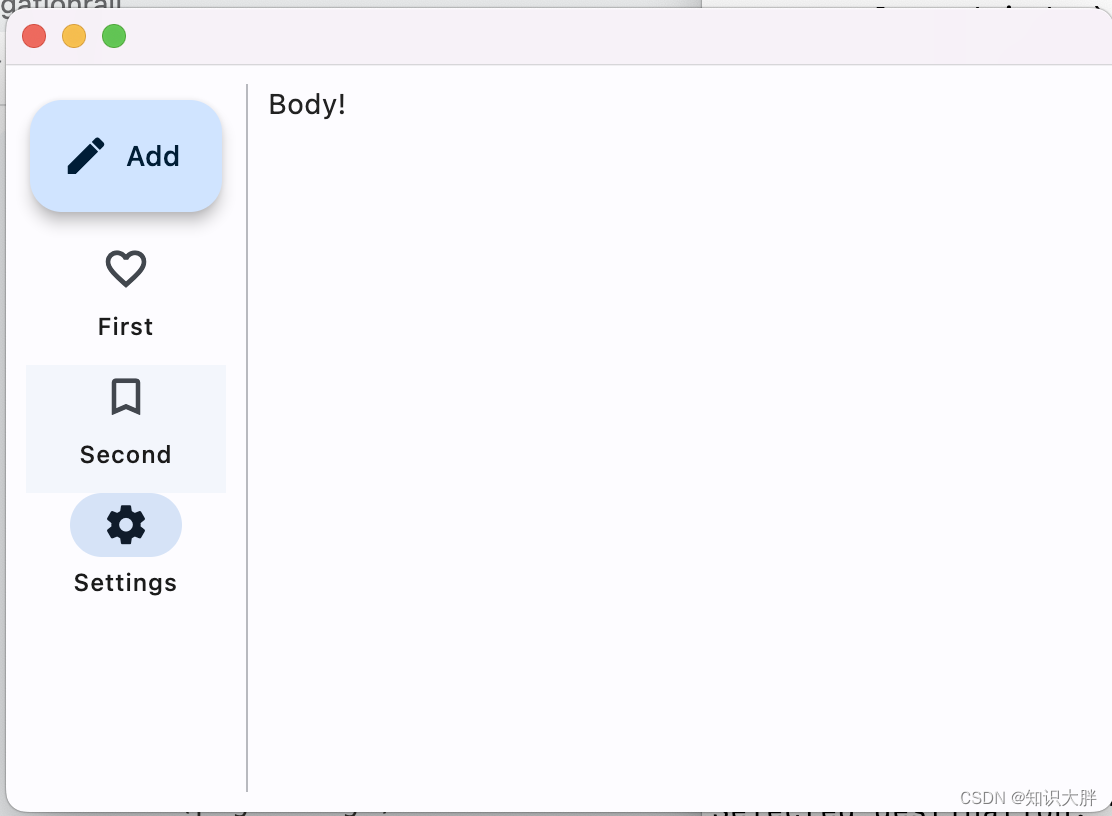

Fleet tutorial 09 basic introduction to navigationrail (tutorial includes source code)

【深度学习】深度学习如何影响运筹学?

Android privacy sandbox developer preview 3: privacy, security and personalized experience

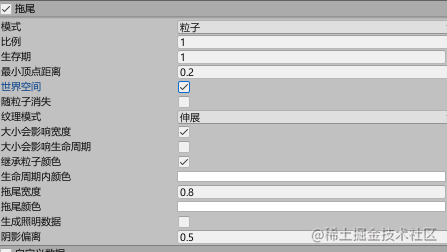

养不起真猫,就用代码吸猫 -Unity 粒子实现画猫咪

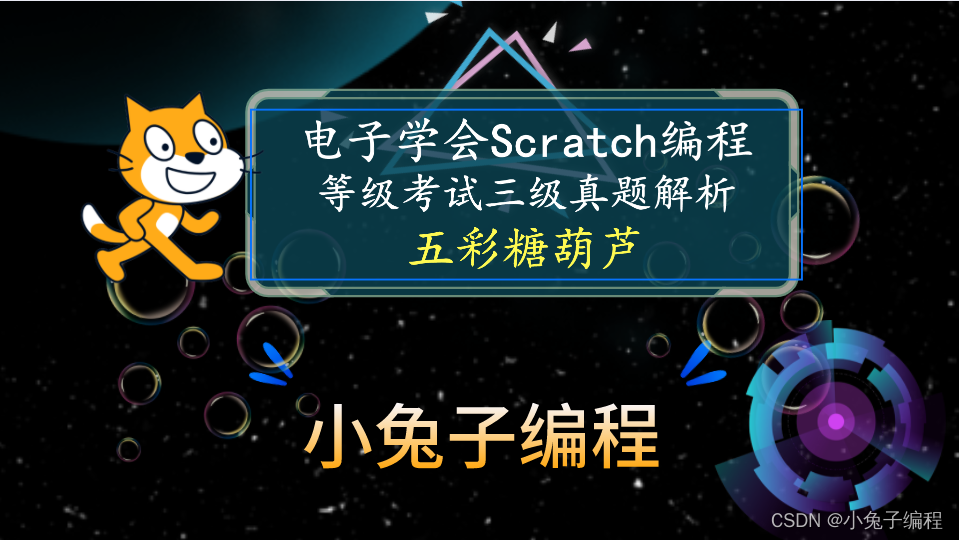

scratch五彩糖葫芦 电子学会图形化编程scratch等级考试三级真题和答案解析2022年6月

随机推荐

Can you help me see what the problem is? [ERROR] Could not execute SQL stateme

记一次'非常诡异'的云安全组规则问题排查过程

You should have your own persistence

践行自主可控3.0,真正开创中国人自己的开源事业

Flet教程之 11 Row组件在水平数组中显示其子项的控件 基础入门(教程含源码)

Pspnet | semantic segmentation and scene analysis

Reduce the cost by 40%! Container practice of redis multi tenant cluster

如何安装mysql

Solve the Hanoi Tower problem [modified version]

Dare not buy thinking

[deep learning] how does deep learning affect operations research?

Jarvis OJ Webshell分析

Seaborn draws 11 histograms

How to use FRP intranet penetration +teamviewer to quickly connect to the intranet host at home when mobile office

阿掌的怀念

10分钟帮你搞定Zabbix监控平台告警推送到钉钉群

Benji Banas membership pass holders' second quarter reward activities update list

How to set the WiFi password of the router on the computer

Jarvis OJ Flag

Win11如何给应用换图标?Win11给应用换图标的方法