当前位置:网站首页>[slam] orb-slam3 parsing - track () (3)

[slam] orb-slam3 parsing - track () (3)

2022-07-06 03:20:00 【iwander。】

After building the latest frame Frame after , Begin SLAM Main course Tracking It's the process . This function is very long , There's a lot of if-else It's very annoying .Track() What did you do ?

First turn on the active map To find out ; then IMU Preintegration Not yet , It needs to be supplemented here ; Next, you have to decide whether to track the map creation state normally or the initialization state , If not initialized, go initialization ; If initialized , to Inter frame matching , That is, the initial value of the current frame pose is obtained by short-term data association , First try and Adjacent frames match , No, just the latest Key frame matching , Not yet relocation , If you still can't, open a new map and run the odometer again ; then and local Map matching , That is, the mid-term data association makes the pose more accurate . Whether it's with the previous frame , Match with key frame or local map , Although the details are different , But I've been looking for something , Find a match , Optimize the pose 3 Stride . Last , if necessary establish KeyFrame Words , Then create a .

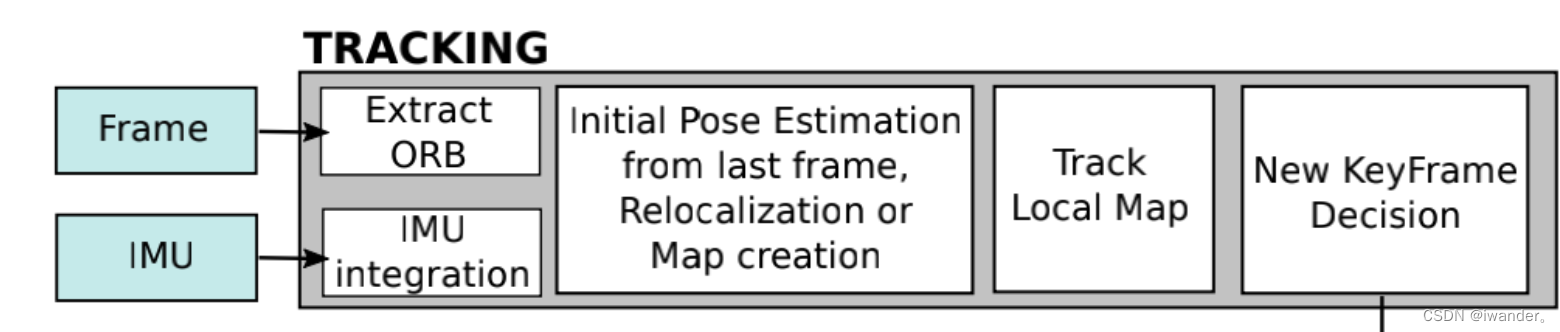

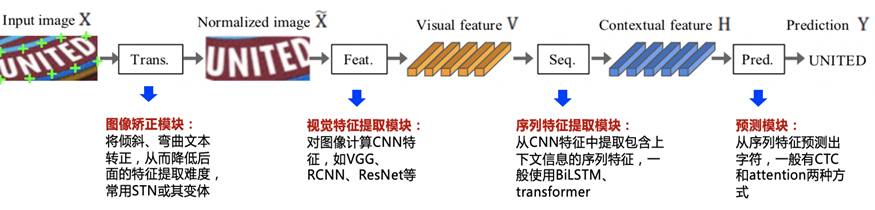

Let's take a look at the classic flow chart in the paper ( part ), Include :Frame->Extract ORB Corresponding Frame The construction of ( See link ),IMU integration( see 3.1); Next, find the current frame pose Initial value of , Corresponding to the short-term data association of the paper , By matching with the previous frame ( see 3.3) Or match with the latest key frame ( see 3.2) Realization ; Next is Track Local Map( see 3.5)

Improve pose accuracy , Corresponding to the interim data association of the paper ; Finally, increase KeyFrame 了 ( see 3.6).

Step1: obtain Altas Multi graph system active map

First use pCurrentMap The pointer handles active map Take it out , without , then CreateMapInAtlas() perhaps mpSystem->ResetActiveMap().

Map* pCurrentMap = mpAtlas->GetCurrentMap();

if(mState!=NO_IMAGES_YET)

{

// The timestamp is out of order

if(mLastFrame.mTimeStamp>mCurrentFrame.mTimeStamp)

{

unique_lock<mutex> lock(mMutexImuQueue);

mlQueueImuData.clear();

CreateMapInAtlas();

return;

}

// The timestamp is not disordered

else if(mCurrentFrame.mTimeStamp>mLastFrame.mTimeStamp+1.0)

{

if(mpAtlas->isInertial())

{

if(mpAtlas->isImuInitialized())

{

if(!pCurrentMap->GetIniertialBA2()) mpSystem->ResetActiveMap();

else CreateMapInAtlas();

}

else mpSystem->ResetActiveMap();

return;

}

}

}Step2:IMU Preintegration

mCurrentFrame.SetNewBias(mpLastKeyFrame->GetImuBias());

PreintegrateIMU();Step3: initialization

if(mState==NOT_INITIALIZED)

{

if(mSensor==System::STEREO || mSensor==System::RGBD || mSensor==System::IMU_STEREO || mSensor==System::IMU_RGBD) StereoInitialization();

else MonocularInitialization();

if(mState!=OK) // If rightly initialized, mState=OK

{

mLastFrame = Frame(mCurrentFrame);

return;

}

if(mpAtlas->GetAllMaps().size() == 1) mnFirstFrameId = mCurrentFrame.mnId;

}Step4:( Between adjacent frames ) Short term data matching acquisition pose initial value

This blog skips the pure positioning mode , Let's look at the positioning + Drawing (!mbOnlyTracking) The pattern of . stay 【SLAM】ORB-SLAM3 analysis —— review (1) When introducing the paper , We remember ORB-SLAM3 One of the main characteristics of ① Short term data matching ,② Interim data matching and ③ Long term data matching , This part corresponds to short-term data matching , That is to use the feature matching relationship between adjacent frames to obtain the pose of the latest frame . Of course, the error here is great , So it can only be used as the current frame pose Initial value of .

// Initial camera pose estimation using motion model or relocalization (if tracking is lost)

if(!mbOnlyTracking)

{

...

}This part encounters 3 In this case , Namely mState very OK, Just lost , I lost it , Let's have a look at :

(1) situation 1: State very OK(mState==OK)

// State OK

// Local Mapping is activated. This is the normal behaviour, unless you explicitly activate the "only tracking" mode.

if(mState==OK)

{

// Local Mapping might have changed some MapPoints tracked in last frame

// to update Local Mapping Inside MapPoints

CheckReplacedInLastFrame();

if((!mbVelocity && !pCurrentMap->isImuInitialized()) || mCurrentFrame.mnId<mnLastRelocFrameId+2)

{

// If There is no inter frame speed and the current frame IMU Not initialized , Or just finished relocating , Then with KeyFrame Match between frames

bOK = TrackReferenceKeyFrame();

}

else

{

// First match a wave with a constant speed model , If you fail, you have to pay back TrackReferenceKeyFrame()

bOK = TrackWithMotionModel();

if(!bOK) bOK = TrackReferenceKeyFrame();

}

// If TrackReferenceKeyFrame() and TrackWithMotionModel() It's not done

if (!bOK)

{

//VI In mode , If it has just been repositioned, it has not been long , At this time, I can track failures , That's really hopeless

if ( mCurrentFrame.mnId<=(mnLastRelocFrameId+mnFramesToResetIMU) &&

(mSensor==System::IMU_MONOCULAR || mSensor==System::IMU_STEREO || mSensor == System::IMU_RGBD))

{

mState = LOST;

}

// If the current frame is map There's a lot of KeyFrames, It can also be rescued

else if(pCurrentMap->KeyFramesInMap()>10)

{

mState = RECENTLY_LOST;

mTimeStampLost = mCurrentFrame.mTimeStamp;

}

// Not saved ,8BQ 了

else mState = LOST;

}

}(2) situation 2: Just lost , You can also rescue (mState==RECENTLY_LOST)

else if (mState == RECENTLY_LOST)

{

Verbose::PrintMess("Lost for a short time", Verbose::VERBOSITY_NORMAL);

bOK = true;

if((mSensor == System::IMU_MONOCULAR || mSensor == System::IMU_STEREO || mSensor == System::IMU_RGBD))

{

//VI In mode , use IMU Data rescue wave

if(pCurrentMap->isImuInitialized()) PredictStateIMU();

else bOK = false;

if (mCurrentFrame.mTimeStamp-mTimeStampLost>time_recently_lost)

{

mState = LOST;

Verbose::PrintMess("Track Lost...", Verbose::VERBOSITY_NORMAL);

bOK=false;

}

}

else

{

//Visual In mode ,Relocalization

bOK = Relocalization();

if(mCurrentFrame.mTimeStamp-mTimeStampLost>3.0f && !bOK)

{

mState = LOST;

Verbose::PrintMess("Track Lost...", Verbose::VERBOSITY_NORMAL);

bOK=false;

}

}

}(3) situation 3: I lost it , Reopen this one (mState==LOST)

else if (mState == LOST)

{

Verbose::PrintMess("A new map is started...", Verbose::VERBOSITY_NORMAL);

if (pCurrentMap->KeyFramesInMap()<10)

{

mpSystem->ResetActiveMap();

Verbose::PrintMess("Reseting current map...", Verbose::VERBOSITY_NORMAL);

}else

CreateMapInAtlas();

if(mpLastKeyFrame) mpLastKeyFrame = static_cast<KeyFrame*>(NULL);

Verbose::PrintMess("done", Verbose::VERBOSITY_NORMAL);

return;

}

}Step5:( And local map Of ) Interim data matching makes pose More precise

if(!mCurrentFrame.mpReferenceKF) mCurrentFrame.mpReferenceKF = mpReferenceKF;

if(bOK) bOK = TrackLocalMap();

if(bOK) mState = OK;

else if (mState == OK)

{

if (mSensor == System::IMU_MONOCULAR || mSensor == System::IMU_STEREO || mSensor == System::IMU_RGBD)

{

Verbose::PrintMess("Track lost for less than one second...", Verbose::VERBOSITY_NORMAL);

if(!pCurrentMap->isImuInitialized() || !pCurrentMap->GetIniertialBA2())

{

mpSystem->ResetActiveMap();

}

mState=RECENTLY_LOST;

}

else mState=RECENTLY_LOST; // visual to lost

mTimeStampLost = mCurrentFrame.mTimeStamp;

}Step6: Update every few frames IMU Preintegration

// Save frame if recent relocalization, since they are used for IMU reset (as we are making copy, it should be once mCurrFrame is completely modified)

if((mCurrentFrame.mnId<(mnLastRelocFrameId+mnFramesToResetIMU)) && (mCurrentFrame.mnId > mnFramesToResetIMU) &&

(mSensor == System::IMU_MONOCULAR || mSensor == System::IMU_STEREO || mSensor == System::IMU_RGBD) && pCurrentMap->isImuInitialized())

{

// TODO check this situation

Verbose::PrintMess("Saving pointer to frame. imu needs reset...", Verbose::VERBOSITY_NORMAL);

Frame* pF = new Frame(mCurrentFrame);

pF->mpPrevFrame = new Frame(mLastFrame);

// Load preintegration

pF->mpImuPreintegratedFrame = new IMU::Preintegrated(mCurrentFrame.mpImuPreintegratedFrame);

}

if(pCurrentMap->isImuInitialized())

{

if(bOK)

{

// every mnFramesToResetIMU The frame is about ResetFrameIMU() once

if(mCurrentFrame.mnId==(mnLastRelocFrameId+mnFramesToResetIMU))

{

ResetFrameIMU();

}

// If the current frame's idx and mnLastRelocFrameId Far away , that mLastBias It should be the current frame bias

else if(mCurrentFrame.mnId>(mnLastRelocFrameId+30))

mLastBias = mCurrentFrame.mImuBias;

}

}Step7: Save the speed of the constant speed model

That is, the relative pose of the current frame and the previous frame , In the next cycle, multiply the relative pose by the pose of the previous frame to find the pose of the latest frame . Be careful Step7-10 All in the same if Inside .

if(bOK || mState==RECENTLY_LOST)

{

// Update motion model

if(mLastFrame.isSet() && mCurrentFrame.isSet())

{

Sophus::SE3f LastTwc = mLastFrame.GetPose().inverse();

mVelocity = mCurrentFrame.GetPose() * LastTwc;

mbVelocity = true;

}

else mbVelocity = false;Step8: Empty the containers for storing matching data and temporary road marking containers

// Clean VO matches

for(int i=0; i<mCurrentFrame.N; i++)

{

MapPoint* pMP = mCurrentFrame.mvpMapPoints[i];

if(pMP)

if(pMP->Observations()<1)

{

mCurrentFrame.mvbOutlier[i] = false;

mCurrentFrame.mvpMapPoints[i]=static_cast<MapPoint*>(NULL);

}

}

// Delete temporal MapPoints

for(list<MapPoint*>::iterator lit = mlpTemporalPoints.begin(), lend = mlpTemporalPoints.end(); lit!=lend; lit++)

{

MapPoint* pMP = *lit;

delete pMP;

}

mlpTemporalPoints.clear();Step9: Create keyframes

bool bNeedKF = NeedNewKeyFrame();

// Check if we need to insert a new keyframe

// if(bNeedKF && bOK)

if(bNeedKF && (bOK || (mInsertKFsLost && mState==RECENTLY_LOST &&

(mSensor == System::IMU_MONOCULAR || mSensor == System::IMU_STEREO || mSensor == System::IMU_RGBD))))

CreateNewKeyFrame();Step10: Clear the outer point in the current frame road punctuation

// We allow points with high innovation (considererd outliers by the Huber Function)

// pass to the new keyframe, so that bundle adjustment will finally decide

// if they are outliers or not. We dont want next frame to estimate its position

// with those points so we discard them in the frame. Only has effect if lastframe is tracked

for(int i=0; i<mCurrentFrame.N;i++)

{

if(mCurrentFrame.mvpMapPoints[i] && mCurrentFrame.mvbOutlier[i])

mCurrentFrame.mvpMapPoints[i]=static_cast<MapPoint*>(NULL);

}Step11: If you lose it , Reopen

// Reset if the camera get lost soon after initialization

if(mState==LOST)

{

if(pCurrentMap->KeyFramesInMap()<=10)

{

mpSystem->ResetActiveMap();

return;

}

if (mSensor == System::IMU_MONOCULAR || mSensor == System::IMU_STEREO || mSensor == System::IMU_RGBD)

if (!pCurrentMap->isImuInitialized())

{

Verbose::PrintMess("Track lost before IMU initialisation, reseting...", Verbose::VERBOSITY_QUIET);

mpSystem->ResetActiveMap();

return;

}

CreateMapInAtlas();

return;

}Step12: Pass some auxiliary data

if(!mCurrentFrame.mpReferenceKF) mCurrentFrame.mpReferenceKF = mpReferenceKF;

mLastFrame = Frame(mCurrentFrame);

}

if(mState==OK || mState==RECENTLY_LOST)

{

// Store frame pose information to retrieve the complete camera trajectory afterwards.

if(mCurrentFrame.isSet())

{

Sophus::SE3f Tcr_ = mCurrentFrame.GetPose() * mCurrentFrame.mpReferenceKF->GetPoseInverse();

mlRelativeFramePoses.push_back(Tcr_);

mlpReferences.push_back(mCurrentFrame.mpReferenceKF);

mlFrameTimes.push_back(mCurrentFrame.mTimeStamp);

mlbLost.push_back(mState==LOST);

}

else

{

// This can happen if tracking is lost

mlRelativeFramePoses.push_back(mlRelativeFramePoses.back());

mlpReferences.push_back(mlpReferences.back());

mlFrameTimes.push_back(mlFrameTimes.back());

mlbLost.push_back(mState==LOST);

}

}3.1 IMU Preintegration

This part of the reference blog :

https://zhuanlan.zhihu.com/p/374372286

https://blog.csdn.net/qq_39266065/article/details/115703313

https://github.com/electech6/ORB_SLAM3_detailed_comments

https://blog.csdn.net/weixin_37835423/article/details/122370251?spm=1001.2014.3001.5502 recommend

This part involves complex formula derivation , See the reference link above for details .

If the current is the first frame , Or not IMU data , It would be return.

void Tracking::PreintegrateIMU()

{

// Step 1. Get the pre integration data to be processed between two frames , Make a collection

// The previous frame does not exist , It means that there is no imu data , No pre integration

if(!mCurrentFrame.mpPrevFrame)

{

Verbose::PrintMess("non prev frame ", Verbose::VERBOSITY_NORMAL);

mCurrentFrame.setIntegrated();

return;

}

mvImuFromLastFrame.clear();

mvImuFromLastFrame.reserve(mlQueueImuData.size());

// No, imu data , No pre integration

if(mlQueueImuData.size() == 0)

{

Verbose::PrintMess("Not IMU data in mlQueueImuData!!", Verbose::VERBOSITY_NORMAL);

mCurrentFrame.setIntegrated();

return;

}Then, the IMU Take out all the data .

while(true)

{

// Data is not yet available , Will wait for a while , until mlQueueImuData There is imu data . There is no need to wait at first

bool bSleep = false;

{

unique_lock<mutex> lock(mMutexImuQueue);

if(!mlQueueImuData.empty())

{

// Get the first one imu Data as starting data

IMU::Point* m = &mlQueueImuData.front();

cout.precision(17);

// imu The starting data will be earlier than the timestamp of the previous frame of the current frame , If there is a difference 0.001 Then abandon this imu data

if(m->t<mCurrentFrame.mpPrevFrame->mTimeStamp-mImuPer)

{

mlQueueImuData.pop_front();

}

// Also the last one imu The data timestamp can't deal with the redundant time interval of the current frame 0.001

else if(m->t<mCurrentFrame.mTimeStamp-mImuPer)

{

mvImuFromLastFrame.push_back(*m);

mlQueueImuData.pop_front();

}

else

{

// Get the... Between two frames imu Put data in mvImuFromLastFrame in , Get the processing data of the later pre integration

mvImuFromLastFrame.push_back(*m);

break;

}

}

else

{

break;

bSleep = true;

}

}

if(bSleep) usleep(500);

}Carry out median integral processing between two frames . Here we make a special analysis of the beginning and end , It is essentially linear interpolation .

// m individual imu Group data will have m-1 Pre product components

const int n = mvImuFromLastFrame.size()-1;

if(n==0) return;

// structure imu The preprocessor , And initialize the calibration data

IMU::Preintegrated* pImuPreintegratedFromLastFrame = new IMU::Preintegrated(mLastFrame.mImuBias,mCurrentFrame.mImuCalib);

// According to the different positions of pre integration, do different median integration processing

/**

* According to the above imu Frame filtering ,IMU The sequence with the image frame is as follows :

* Frame---IMU0---IMU1---IMU2---IMU3---IMU4---------------IMUx---Frame---IMUx+1

* T_------T0-----T1-----T2-----T3-----T4-----------------Tx-----_T------Tx+1

* A_------A0-----A1-----A2-----A3-----A4-----------------Ax-----_T------Ax+1

* W_------W0-----W1-----W2-----W3-----W4-----------------Wx-----_T------Wx+1

* T_ and _T Represents the timestamp of the previous image frame and the current image frame respectively ,A( Acceleration data ),W( Gyroscope data ), Empathy

*/

for(int i=0; i<n; i++)

{

float tstep;

Eigen::Vector3f acc, angVel;

// The first frame of data, but not the last two frames ,imu The total number of frames is greater than 2

if((i==0) && (i<(n-1)))

{

// Get two adjacent segments imu Time interval of

float tab = mvImuFromLastFrame[i+1].t-mvImuFromLastFrame[i].t;

// Get current imu Time interval to the previous frame

float tini = mvImuFromLastFrame[i].t-mCurrentFrame.mpPrevFrame->mTimeStamp;

// Set the current time imu Acceleration a0, Acceleration at the next moment a1, The time interval tab by t10,tini t0p

// Under normal circumstances, in order to find the previous frame to the current time imu An average acceleration of , however imu Time will not fall at the moment of the last frame , Compensation is needed , Ask for a0 The amount of acceleration change from time to the previous frame

// With this change, add it to a0 After that, you can express the acceleration of the previous frame . among a0 - (a1-a0)*(tini/tab) Add a1 Then divide by 2 It's the average acceleration during this period

// among tstep Express a1 Time interval to the previous frame ,a0 - (a1-a0)*(tini/tab) In this formula tini It can be positive or negative, indicating the sequence of time ,(a1-a0) Is the same , In many cases, this formula still holds

acc = (mvImuFromLastFrame[i].a+mvImuFromLastFrame[i+1].a-

(mvImuFromLastFrame[i+1].a-mvImuFromLastFrame[i].a)*(tini/tab))*0.5f;

// The calculation process is similar to acceleration

angVel = (mvImuFromLastFrame[i].w+mvImuFromLastFrame[i+1].w-

(mvImuFromLastFrame[i+1].w-mvImuFromLastFrame[i].w)*(tini/tab))*0.5f;

tstep = mvImuFromLastFrame[i+1].t-mCurrentFrame.mpPrevFrame->mTimeStamp;

}

else if(i<(n-1))

{

// There is no frame interference in the intermediate data , Normal calculation

acc = (mvImuFromLastFrame[i].a+mvImuFromLastFrame[i+1].a)*0.5f;

angVel = (mvImuFromLastFrame[i].w+mvImuFromLastFrame[i+1].w)*0.5f;

tstep = mvImuFromLastFrame[i+1].t-mvImuFromLastFrame[i].t;

}

// Until the penultimate imu At the moment , The calculation process is similar to the first moment , Both frames and imu The relationship of time

else if((i>0) && (i==(n-1)))

{

float tab = mvImuFromLastFrame[i+1].t-mvImuFromLastFrame[i].t;

float tend = mvImuFromLastFrame[i+1].t-mCurrentFrame.mTimeStamp;

acc = (mvImuFromLastFrame[i].a+mvImuFromLastFrame[i+1].a-

(mvImuFromLastFrame[i+1].a-mvImuFromLastFrame[i].a)*(tend/tab))*0.5f;

angVel = (mvImuFromLastFrame[i].w+mvImuFromLastFrame[i+1].w-

(mvImuFromLastFrame[i+1].w-mvImuFromLastFrame[i].w)*(tend/tab))*0.5f;

tstep = mCurrentFrame.mTimeStamp-mvImuFromLastFrame[i].t;

}

// For two data, use the first moment , There should be no such situation ,, You should try it later

else if((i==0) && (i==(n-1)))

{

acc = mvImuFromLastFrame[i].a;

angVel = mvImuFromLastFrame[i].w;

tstep = mCurrentFrame.mTimeStamp-mCurrentFrame.mpPrevFrame->mTimeStamp;

}

// Step 3. Perform pre integral calculation in turn

// It must exist , One is relative to the previous key frame , One is relative to the previous frame

mpImuPreintegratedFromLastKF->IntegrateNewMeasurement(acc,angVel,tstep);

pImuPreintegratedFromLastFrame->IntegrateNewMeasurement(acc,angVel,tstep);

}

// Record the image frame of the current pre integration

mCurrentFrame.mpImuPreintegratedFrame = pImuPreintegratedFromLastFrame;

mCurrentFrame.mpImuPreintegrated = mpImuPreintegratedFromLastKF;

mCurrentFrame.mpLastKeyFrame = mpLastKeyFrame;

mCurrentFrame.setIntegrated();

//Verbose::PrintMess("Preintegration is finished!! ", Verbose::VERBOSITY_DEBUG);

} We enter IntegrateNewMeasurement(acc,angVel,tstep) Take a look at the function , This part directly refers to the annotated code in the reference link , It did 2 thing ,IMU Pre integration and recurrence of error transfer matrix , About the latter , I am currently

I don't dare to scribble before I fully understand ,ctrl C+V Great way :

void Preintegrated::IntegrateNewMeasurement(const Eigen::Vector3f &acceleration, const Eigen::Vector3f &angVel, const float &dt)

{

// preservation imu data , Using the results of median integration, a pre product classification is constructed and saved in mvMeasurements in

mvMeasurements.push_back(integrable(acceleration, angVel, dt));

// Position is updated firstly, as it depends on previously computed velocity and rotation.

// Velocity is updated secondly, as it depends on previously computed rotation.

// Rotation is the last to be updated.

// Matrices to compute covariance

// Step 1. Construct covariance matrix

// Transfer matrix of noise matrix , This part is used to calculate i To j-1 Historical noise or covariance

Eigen::Matrix<float, 9, 9> A;

A.setIdentity();

// Transfer matrix of noise matrix , This part is used to calculate j-1 New noise or covariance , The numbers in these two matrices are at the current moment , The calculation is mainly for the next moment

Eigen::Matrix<float, 9, 6> B;

B.setZero();

// Consider the acceleration after offset 、 angular velocity

Eigen::Vector3f acc(acceleration(0) - b.bax, acceleration(1) - b.bay, acceleration(2) - b.baz);

Eigen::Vector3f accW(angVel(0) - b.bwx, angVel(1) - b.bwy, angVel(2) - b.bwz);

// Record the average acceleration and angular velocity

avgA = (dT * avgA + dR * acc * dt) / (dT + dt);

avgW = (dT * avgW + accW * dt) / (dT + dt);

// Update delta position dP and velocity dV (rely on no-updated delta rotation)

// According to no updated dR To update dP And dV eq.(38)

dP = dP + dV * dt + 0.5f * dR * acc * dt * dt;

dV = dV + dR * acc * dt;

// Compute velocity and position parts of matrices A and B (rely on non-updated delta rotation)

// according to η_ij = A * η_i,j-1 + B_j-1 * η_j-1 Medium A Matrix and B The matrix updates the velocity and displacement

Eigen::Matrix<float, 3, 3> Wacc = Sophus::SO3f::hat(acc);

A.block<3, 3>(3, 0) = -dR * dt * Wacc;

A.block<3, 3>(6, 0) = -0.5f * dR * dt * dt * Wacc;

A.block<3, 3>(6, 3) = Eigen::DiagonalMatrix<float, 3>(dt, dt, dt);

B.block<3, 3>(3, 3) = dR * dt;

B.block<3, 3>(6, 3) = 0.5f * dR * dt * dt;

// Update position and velocity jacobians wrt bias correction

// Because over time , It is impossible to recalculate the Jacobian matrix every time , So it needs to be done J(k+1) = j(k) + (~) Such things , Decomposition method and AB The matrix is the same

// The author of the paper is right forster On the basis of the formula in this paper, a deformation is made , Then update recursively , See https://github.com/UZ-SLAMLab/ORB_SLAM3/issues/212

JPa = JPa + JVa * dt - 0.5f * dR * dt * dt;

JPg = JPg + JVg * dt - 0.5f * dR * dt * dt * Wacc * JRg;

JVa = JVa - dR * dt;

JVg = JVg - dR * dt * Wacc * JRg;

// Update delta rotation

// Step 2. Constructors , According to the updated bias Perform angular integration

IntegratedRotation dRi(angVel, b, dt);

// Force normalization to make it conform to the format of rotation matrix

dR = NormalizeRotation(dR * dRi.deltaR);

// Compute rotation parts of matrices A and B

// Add AB matrix

A.block<3, 3>(0, 0) = dRi.deltaR.transpose();

B.block<3, 3>(0, 0) = dRi.rightJ * dt;

// small amount delta For the initial 0, After the update, it is usually 0, Therefore, a small amount of updates are omitted

// Update covariance

// Step 3. Update covariance ,frost Chapter of the classic pre integral thesis 63 A formula , The noise (ηa, ηg) Yes dR dV dP Influence

C.block<9, 9>(0, 0) = A * C.block<9, 9>(0, 0) * A.transpose() + B * Nga * B.transpose(); // B The matrix of the 9*6 matrix Nga 6*6 Diagonal matrix ,3 Square of gyroscope noise ,3 The square of the accelerometer noise

// This part starts with 0 matrix , As the number of integrals increases , Add random walk every time , Biased information matrix

C.block<6, 6>(9, 9) += NgaWalk;

// Update rotation jacobian wrt bias correction

// Calculate the offset Jacobian matrix ,r Yes bg The derivative of ,∂ΔRij/∂bg = (ΔRjj-1) * ∂ΔRij-1/∂bg - Jr(j-1)*t

// The author of the paper is right forster On the basis of the formula in this paper, a deformation is made , Then update recursively , See https://github.com/UZ-SLAMLab/ORB_SLAM3/issues/212

// ? Why update first JPa、JPg、JVa、JVg The last update JRg? answer : Here must be updated first dRi Can be updated to this value , But why JPg and JVg Dependent on the previous JRg Values are updated ?

JRg = dRi.deltaR.transpose() * JRg - dRi.rightJ * dt;

// Total integrated time

// Total update time

dT += dt;

}3.2 TrackReferenceKeyFrame()

Match with the key frame to solve the current frame pose , We need to find out the descriptors of the feature points of the two frames , Then get the matching relationship between the feature points of the two frames , And then 3D-2D Re projection error solves the current frame pose .

bool Tracking::TrackReferenceKeyFrame()

{

// Compute Bag of Words vector

// Step 1: Converts the descriptor of the current frame to BoW vector

mCurrentFrame.ComputeBoW();

// We perform first an ORB matching with the reference keyframe

// If enough matches are found we setup a PnP solver

ORBmatcher matcher(0.7,true);

vector<MapPoint*> vpMapPointMatches;

// Step 2: Through word bag BoW Accelerate the feature point matching between the current frame and the reference frame

int nmatches = matcher.SearchByBoW(mpReferenceKF,mCurrentFrame,vpMapPointMatches);

// The number of matches is less than 15, Think the tracking failed

if(nmatches<15) return false;

// Step 3: Take the pose of the previous frame as the initial value of the pose of the current frame

mCurrentFrame.mvpMapPoints = vpMapPointMatches;

mCurrentFrame.SetPose(mLastFrame.GetPose()); // Use the last one Tcw Set the initial value , stay PoseOptimization It can converge faster

// Step 4: By optimizing the 3D-2D To get the position and pose by using the re projection error

Optimizer::PoseOptimization(&mCurrentFrame);

// Discard outliers

// Step 5: Eliminate the outer points in the optimized matching points

// The reason why the outer points are eliminated after optimization , It is because there are marks for these outer points in the process of optimization

int nmatchesMap = 0;

for(int i =0; i<mCurrentFrame.N; i++)

{

//if(i >= mCurrentFrame.Nleft) break;

if(mCurrentFrame.mvpMapPoints[i])

{

// If the corresponding feature point is an outer point

if(mCurrentFrame.mvbOutlier[i])

{

// Clear its traces in the current frame

MapPoint* pMP = mCurrentFrame.mvpMapPoints[i];

mCurrentFrame.mvpMapPoints[i]=static_cast<MapPoint*>(NULL);

mCurrentFrame.mvbOutlier[i]=false;

if(i < mCurrentFrame.Nleft) pMP->mbTrackInView = false;

else pMP->mbTrackInViewR = false;

pMP->mbTrackInView = false;

pMP->mnLastFrameSeen = mCurrentFrame.mnId;

nmatches--;

}

else if(mCurrentFrame.mvpMapPoints[i]->Observations()>0)

// Matched interior point count ++

nmatchesMap++;

}

}

if (mSensor == System::IMU_MONOCULAR || mSensor == System::IMU_STEREO || mSensor == System::IMU_RGBD) return true;

else return nmatchesMap>=10; // The number of successful tracking exceeds 10 I think the tracking is successful , Otherwise, the tracking fails

}3.2.1 Get current frame descriptor

void Frame::ComputeBoW()

{

// Judge whether it has been calculated before , After calculation, skip

if(mBowVec.empty())

{

// Will describe mDescriptors Convert to DBOW Required input format

vector<cv::Mat> vCurrentDesc = Converter::toDescriptorVector(mDescriptors);

// The descriptors of feature points are converted into word bag vectors mBowVec And eigenvectors mFeatVec

mpORBvocabulary->transform(vCurrentDesc, // Current descriptor vector

mBowVec, // Output , Word bag vector , What is recorded is word id And its corresponding weight TF-IDF value

mFeatVec, // Output , Record node id And its corresponding image feature The corresponding index

4); //4 Represents the number of layers from the leaf node forward

}

}3.2.2 Feature matching using word bag ORBmatcher::SearchByBoW()

The most important purpose of this step is to use the current frame 2D Point descriptor and key frame 2D Descriptors of points match , And the key frame 2D Point and 3D Points are one-to-one correspondence , In this way, we can finally find the current frame 2D Points and keyframes 3D Matching relationship of points .

Get key frame feature points and descriptors , Initialize the container for storing the matching points of the current frame ;

// Get the map point of the key frame

const vector<MapPoint*> vpMapPointsKF = pKF->GetMapPointMatches();

// And normal frames F The index of feature points is consistent

vpMapPointMatches = vector<MapPoint*>(F.N,static_cast<MapPoint*>(NULL));

// Take out the word bag feature vector of the key frame

const DBoW2::FeatureVector &vFeatVecKF = pKF->mFeatVec;

int nmatches=0;

// Histogram for statistics of angular rotation difference of feature points

vector<int> rotHist[HISTO_LENGTH];

for(int i=0;i<HISTO_LENGTH;i++) rotHist[i].reserve(500);

// take 0~360 Convert the number of to 0~HISTO_LENGTH The coefficient of

const float factor = 1.0f/HISTO_LENGTH;Of course, make sure you belong to the same node ( Specific layer ) Of ORB Feature matching :

// We perform the matching over ORB that belong to the same vocabulary node (at a certain level)

DBoW2::FeatureVector::const_iterator KFit = vFeatVecKF.begin();

DBoW2::FeatureVector::const_iterator Fit = F.mFeatVec.begin();

DBoW2::FeatureVector::const_iterator KFend = vFeatVecKF.end();

DBoW2::FeatureVector::const_iterator Fend = F.mFeatVec.end();

while(KFit != KFend && Fit != Fend)

{

// Step 1: Take out the parts belonging to the same node Of ORB Characteristic point ( Only belong to the same node, It's a little... It's possible )

// first Element is node id, Traverse

if(KFit->first == Fit->first)

{

...

}

else if(KFit->first < Fit->first)

{

// alignment

KFit = vFeatVecKF.lower_bound(Fit->first);

}

else

{

// alignment

Fit = F.mFeatVec.lower_bound(KFit->first);

}If it belongs to the same layer , Then traverse each feature point of the key frame , Then compare the feature points on each current frame with it , Find the one with the smallest descriptor distance , Let's go to the ellipsis in the code above :

// Step 2: Traverse KF It belongs to node Characteristics of

for(size_t iKF=0; iKF<vIndicesKF.size(); iKF++)

{

// Key frame the index of feature points in this node

const unsigned int realIdxKF = vIndicesKF[iKF];

// Take out KF Map point corresponding to this feature in

MapPoint* pMP = vpMapPointsKF[realIdxKF];

if(!pMP) continue;

if(pMP->isBad()) continue;

// Take out the key frame KF The descriptor corresponding to this feature in

const cv::Mat &dKF= pKF->mDescriptors.row(realIdxKF);

int bestDist1=256; // The best distance ( Minimum distance )

int bestIdxF =-1 ;

int bestDist2=256; // Second best distance ( The penultimate distance )

int bestDist1R=256;

int bestIdxFR =-1 ;

int bestDist2R=256;

// Step 3: Traverse F It belongs to node Characteristics of , Find the best match

for(size_t iF=0; iF<vIndicesF.size(); iF++)

{

// there realIdxF It refers to the index of feature points in this node of ordinary frame

const unsigned int realIdxF = vIndicesF[iF];

// If map points exist , It means that this point has been matched , No longer match , Speed up

if(vpMapPointMatches[realIdxF]) continue;

// Take out the normal frame F The descriptor corresponding to this feature in

const cv::Mat &dF = F.mDescriptors.row(realIdxF);

// Calculate the distance of the descriptor

const int dist = DescriptorDistance(dKF,dF);

// Traverse , Record the best distance 、 The index corresponding to the best distance 、 Second best distance, etc

// If dist < bestDist1 < bestDist2, to update bestDist1 bestDist2

if(realIdxF < F.Nleft && dist<bestDist1){

bestDist2=bestDist1;

bestDist1=dist;

bestIdxF=realIdxF;

}

else if(realIdxF < F.Nleft && dist<bestDist2) bestDist2=dist;

if(realIdxF >= F.Nleft && dist<bestDist1R){

bestDist2R=bestDist1R;

bestDist1R=dist;

bestIdxFR=realIdxF;

}

else if(realIdxF >= F.Nleft && dist<bestDist2R) bestDist2R=dist;

}Next , Starting to eliminate outliers , First, according to the threshold , Then there is angle consistency :

// Step 4: According to the threshold and Angle voting eliminates mismatches

// Step 4.1: The first level is screening : The matching distance must be less than the set threshold

if(bestDist1<=TH_LOW)

{

// Step 4.2: The second level is screening : The best match is obviously better than the second best match , Then the best match is really reliable

if(static_cast<float>(bestDist1)<mfNNratio*static_cast<float>(bestDist2))

{

// Step 4.3: Record the corresponding map points that successfully match the feature points ( From keyframes )

vpMapPointMatches[bestIdxF]=pMP;

// there realIdxKF Is the feature point of the currently traversed key frame id

const cv::KeyPoint &kp =

(!pKF->mpCamera2) ? pKF->mvKeysUn[realIdxKF] :

(realIdxKF >= pKF -> NLeft) ? pKF -> mvKeysRight[realIdxKF - pKF -> NLeft]

: pKF -> mvKeys[realIdxKF];

// Step 4.4: Calculate the histogram of the rotation angle difference of the matching point

if(mbCheckOrientation)

{

cv::KeyPoint &Fkp = (!pKF->mpCamera2 || F.Nleft == -1) ? F.mvKeys[bestIdxF] :

(bestIdxF >= F.Nleft) ? F.mvKeysRight[bestIdxF - F.Nleft] : F.mvKeys[bestIdxF];

// The angle changes of all feature points should be consistent , Get the most accurate angle change value through histogram statistics

float rot = kp.angle-Fkp.angle;

if(rot<0.0) rot+=360.0f;

int bin = round(rot*factor);// take rot Assigned to bin Group , rounding , In fact, it is dispersed into the corresponding histogram Group

if(bin==HISTO_LENGTH) bin=0;

assert(bin>=0 && bin<HISTO_LENGTH);

rotHist[bin].push_back(bestIdxF);

}

nmatches++;

}

}

}

KFit++;

Fit++;Eliminate the outer points according to the angle :

// Step 5 Eliminate mismatched points according to the direction

if(mbCheckOrientation)

{

// index

int ind1=-1;

int ind2=-1;

int ind3=-1;

// Filter out the top three with the largest number of rotation angle differences in the histogram interval bin The index of

ComputeThreeMaxima(rotHist,HISTO_LENGTH,ind1,ind2,ind3);

for(int i=0; i<HISTO_LENGTH; i++)

{

// If the rotation angle change of feature points belongs to these three groups , The retention

if(i==ind1 || i==ind2 || i==ind3)

continue;

// Eliminate the matching pairs that are not in the first three , Because they don't meet “ Main stream rotation direction ”

for(size_t j=0, jend=rotHist[i].size(); j<jend; j++)

{

vpMapPointMatches[rotHist[i][j]]=static_cast<MapPoint*>(NULL);

nmatches--;

}

}

}

return nmatches;

}3.2.3 3D-2D Re projection for pose

Refer to the connection :https://blog.csdn.net/Walking_roll/article/details/119817174

int Optimizer::PoseOptimization(Frame *pFrame)

{

// Step 1: Here we first construct a big boss--g2o Sparse optimizer , BlockSolver_6_3 Express : The posture is 6 dimension , The signpost point is 3 dimension

g2o::SparseOptimizer optimizer;// Graph model

g2o::BlockSolver_6_3::LinearSolverType * linearSolver;// Linear solver declaration

// Create a linear solver

linearSolver = new g2o::LinearSolverDense<g2o::BlockSolver_6_3::PoseMatrixType>();

// Create a matrix solver and initialize with the above linear solver

g2o::BlockSolver_6_3 * solver_ptr = new g2o::BlockSolver_6_3(linearSolver);

// Create a total solver , And initialized with the above matrix solver , You can see that there is LM Algorithm

g2o::OptimizationAlgorithmLevenberg* solver = new g2o::OptimizationAlgorithmLevenberg(solver_ptr);

// Setup solver

optimizer.setAlgorithm(solver);

// In the input frame , Effective , Participate in the optimization process 2D-3D Point to point

int nInitialCorrespondences=0;

// Step 2: Add vertex : The pose of the current frame to be optimized

g2o::VertexSE3Expmap * vSE3 = new g2o::VertexSE3Expmap();// Create a vertex

vSE3->setEstimate(Converter::toSE3Quat(pFrame->mTcw));// Into quaternions + The form of translation vector

// Set up id

vSE3->setId(0);

// Variables to be optimized , So it can't be fixed

vSE3->setFixed(false);

optimizer.addVertex(vSE3);// Add vertices to the optimizer

// Number of map points , That is, the number of map points to be added to the optimizer . Used to calculate the error edge ( Reprojection error )

const int N = pFrame->N;

vector<ORB_SLAM3::EdgeSE3ProjectXYZOnlyPose*> vpEdgesMono;

vector<ORB_SLAM3::EdgeSE3ProjectXYZOnlyPoseToBody *> vpEdgesMono_FHR;

vector<size_t> vnIndexEdgeMono, vnIndexEdgeRight;

vpEdgesMono.reserve(N);

vpEdgesMono_FHR.reserve(N);

vnIndexEdgeMono.reserve(N);

vnIndexEdgeRight.reserve(N);

vector<g2o::EdgeStereoSE3ProjectXYZOnlyPose*> vpEdgesStereo;

vector<size_t> vnIndexEdgeStereo;

vpEdgesStereo.reserve(N);

vnIndexEdgeStereo.reserve(N);

// The following involves the knowledge of removing outliers from Chi square distribution , There is no explanation here

// The degree of freedom is 2 Chi square distribution of , The significance level is 0.05, Corresponding critical threshold 5.991

const float deltaMono = sqrt(5.991);

// The degree of freedom is 3 Chi square distribution of , The significance level is 0.05, Corresponding critical threshold 7.815

const float deltaStereo = sqrt(7.815);

// Step 3: Add a unary edge ( Because this function only optimizes the current pose )

{

// Lock map points . Because the system is multithreaded , Therefore, when fetching data, it is necessary to lock to ensure thread safety .

// On the other hand , You need to use map points to construct vertices and edges , Therefore, we do not want some map points to be rewritten in the process of construction, resulting in inconsistency or even segment errors

unique_lock<mutex> lock(MapPoint::mGlobalMutex);

// Traverse all map points in the current map

for(int i=0; i<N; i++)

{

MapPoint* pMP = pFrame->mvpMapPoints[i];

// If this map point exists

if(pMP)

{

if(!pFrame->mpCamera2)

{

// Monocular situation

if(pFrame->mvuRight[i]<0)

{

nInitialCorrespondences++;

pFrame->mvbOutlier[i] = false;// Default that this map point is not an outer point

// Observation of this map point

const cv::KeyPoint &kpUn = pFrame->mvKeysUn[i];

Eigen::Matrix<double,2,1> obs(kpUn.pt.x, kpUn.pt.y);

// New edge , This side only optimizes the pose Pose

ORB_SLAM3::EdgeSE3ProjectXYZOnlyPose* e = new ORB_SLAM3::EdgeSE3ProjectXYZOnlyPose();

e->setVertex(0, dynamic_cast<g2o::OptimizableGraph::Vertex*>(optimizer.vertex(0)));

e->setMeasurement(obs);// Set the measured value

// The confidence of this point , It is related to the layer where the feature points are located . Use information matrix ( The inverse of the covariance matrix ) To express .

const float invSigma2 = pFrame->mvInvLevelSigma2[kpUn.octave];

e->setInformation(Eigen::Matrix2d::Identity()*invSigma2);

// The robust kernel function is used here

g2o::RobustKernelHuber* rk = new g2o::RobustKernelHuber;

e->setRobustKernel(rk);

rk->setDelta(deltaMono);

// Set camera internal parameters

e->pCamera = pFrame->mpCamera;

// Spatial location of map points , As the initial value of the iteration

cv::Mat Xw = pMP->GetWorldPos();

e->Xw[0] = Xw.at<float>(0);

e->Xw[1] = Xw.at<float>(1);

e->Xw[2] = Xw.at<float>(2);

optimizer.addEdge(e);// Add this side to the optimizer

vpEdgesMono.push_back(e);// The recording edge belongs to monocular situation

vnIndexEdgeMono.push_back(i);// Index of records

}

else // Stereo observation

{

nInitialCorrespondences++;

pFrame->mvbOutlier[i] = false;

// There is one more right target coordinate

const cv::KeyPoint &kpUn = pFrame->mvKeysUn[i];

const float &kp_ur = pFrame->mvuRight[i];

Eigen::Matrix<double,3,1> obs(kpUn.pt.x, kpUn.pt.y, kp_ur);

// The new node , Note that here is also only to optimize the posture

g2o::EdgeStereoSE3ProjectXYZOnlyPose* e = new g2o::EdgeStereoSE3ProjectXYZOnlyPose();

e->setVertex(0, dynamic_cast<g2o::OptimizableGraph::Vertex*>(optimizer.vertex(0)));

e->setMeasurement(obs);

// The degree of confidence mainly depends on the layer where the left eye feature point is located

const float invSigma2 = pFrame->mvInvLevelSigma2[kpUn.octave];

Eigen::Matrix3d Info = Eigen::Matrix3d::Identity()*invSigma2;

e->setInformation(Info);

g2o::RobustKernelHuber* rk = new g2o::RobustKernelHuber;

e->setRobustKernel(rk);

rk->setDelta(deltaStereo);

// Get coordinates of the internal reference map points as initial values

e->fx = pFrame->fx;

e->fy = pFrame->fy;

e->cx = pFrame->cx;

e->cy = pFrame->cy;

e->bf = pFrame->mbf;

cv::Mat Xw = pMP->GetWorldPos();

e->Xw[0] = Xw.at<float>(0);

e->Xw[1] = Xw.at<float>(1);

e->Xw[2] = Xw.at<float>(2);

optimizer.addEdge(e);

vpEdgesStereo.push_back(e);

vnIndexEdgeStereo.push_back(i);

}

}

}

}

}

// If there are not enough matching points , Then I have to give up

if(nInitialCorrespondences<3) return 0;

// Step 4: Start optimizing , A total of four optimizations , Each optimization iteration 10 Time , After each optimization , Divide observations into outlier and inlier,outlier Do not participate in the next optimization

// Threshold value calculated based on Chi square test ( Suppose the measurement has a deviation of one pixel )

const float chi2Mono[4]={5.991,5.991,5.991,5.991}; // Monocular

const float chi2Stereo[4]={7.815,7.815,7.815, 7.815}; // Binocular

const int its[4]={10,10,10,10};// Four iterations , Number of iterations per iteration

// bad Number of map points

int nBad=0;

// A total of four optimizations

for(size_t it=0; it<4; it++)

{

// The re projection error is calculated .

vSE3->setEstimate(Converter::toSE3Quat(pFrame->mTcw));

// It is actually initializing the optimizer , Parameters here 0 Even if you don't fill in , The default is 0, That's right level by 0 Optimize the edges of

optimizer.initializeOptimization(0);

// Start optimizing , Optimize 10 Time

optimizer.optimize(its[it]);

nBad=0;

// Optimization is over , Start traversing each error edge involved in optimization ( Monocular ), In fact, it is the re projection error

for(size_t i=0, iend=vpEdgesMono.size(); i<iend; i++)

{

ORB_SLAM3::EdgeSE3ProjectXYZOnlyPose* e = vpEdgesMono[i];

const size_t idx = vnIndexEdgeMono[i];

// The following is chi square test

// Since chi square test is performed for all observations after each optimization outlier and inlier Distinguish , Therefore, it was previously judged as outlier It's possible to become inlier, vice versa

// If this error edge comes from outlier

if(pFrame->mvbOutlier[idx]) e->computeError();

// Namely error*\Omega*error, The error of this point is characterized ( After considering confidence )

const float chi2 = e->chi2();

// The test failed

if(chi2>chi2Mono[it])

{

pFrame->mvbOutlier[idx]=true;

e->setLevel(1); // Set to outlier , level 1 Corresponding to the outer point , In the above process, we set it as not optimized

nBad++;

}

else

{

pFrame->mvbOutlier[idx]=false;

e->setLevel(0); // Set to inlier, level 0 Corresponds to the interior point , In the above process, we just want to optimize these relationships

}

if(it==2) e->setRobustKernel(0); // Except for the first two optimizations RobustKernel outside , The rest of the optimizations don't need -- Because the error of re projection has decreased significantly

}

// ……

// Step 5 Get the optimized pose of the current frame

g2o::VertexSE3Expmap* vSE3_recov = static_cast<g2o::VertexSE3Expmap*>(optimizer.vertex(0));

g2o::SE3Quat SE3quat_recov = vSE3_recov->estimate();

cv::Mat pose = Converter::toCvMat(SE3quat_recov);

pFrame->SetPose(pose);// Set the optimized posture

// And return the number of interior points

return nInitialCorrespondences-nBad;

}3.3 TrackWithMotionModel()

It is very similar to the previous one , It is also necessary to have an initial estimation of the pose of the current frame , You need to find the matching relationship of the corresponding points , use 3D-2D Seeking position and pose , Only the matching object becomes the last frame 3D O 'clock . And for IMU The situation of ,

Direct use IMU The pose obtained by integration is given to the current frame , So the speed will be faster .

bool Tracking::TrackWithMotionModel()

{

// Minimum distance < 0.9* Second small distance The match is successful , Check the rotation

ORBmatcher matcher(0.9,true);

// Update last frame pose according to its reference keyframe

// Create "visual odometry" points if in Localization Mode

// Step 1: Update the pose of the previous frame ; For binocular or RGB-D The camera , Temporary map points will also be generated according to the depth value

UpdateLastFrame();

// Step 2: according to IMU Or the constant speed model obtains the initial pose of the current frame .

if (mpAtlas->isImuInitialized() && (mCurrentFrame.mnId>mnLastRelocFrameId+mnFramesToResetIMU))

{

// Predict state with IMU if it is initialized and it doesnt need reset

// IMU Initialization complete also It takes a long time to relocate, so it doesn't need to be reset IMU, use IMU To estimate the pose , There is no one in the back

PredictStateIMU();

return true;

}

else

{

// According to the previously estimated speed , Use the constant speed model to get the initial pose of the current frame .

mCurrentFrame.SetPose(mVelocity * mLastFrame.GetPose());

}

// Clear the map points of the current frame

fill(mCurrentFrame.mvpMapPoints.begin(),mCurrentFrame.mvpMapPoints.end(),static_cast<MapPoint*>(NULL));

// Project points seen in previous frame

// Set the matching radius in the search process

int th;

if(mSensor==System::STEREO)

th=7;

else

th=15;

// Step 3: Use the map points of the previous frame for projection matching , If there are not enough matching points , Expand the search radius and do it again

int nmatches = matcher.SearchByProjection(mCurrentFrame,mLastFrame,th,mSensor==System::MONOCULAR || mSensor==System::IMU_MONOCULAR);

// If few matches, uses a wider window search

// If there are too few matching points , Expand the search radius and do it again

if(nmatches<20)

{

Verbose::PrintMess("Not enough matches, wider window search!!", Verbose::VERBOSITY_NORMAL);

fill(mCurrentFrame.mvpMapPoints.begin(),mCurrentFrame.mvpMapPoints.end(),static_cast<MapPoint*>(NULL));

nmatches = matcher.SearchByProjection(mCurrentFrame,mLastFrame,2*th,mSensor==System::MONOCULAR || mSensor==System::IMU_MONOCULAR);

Verbose::PrintMess("Matches with wider search: " + to_string(nmatches), Verbose::VERBOSITY_NORMAL);

}

// This is different from ORB-SLAM2 The way

if(nmatches<20)

{

Verbose::PrintMess("Not enough matches!!", Verbose::VERBOSITY_NORMAL);

if (mSensor == System::IMU_MONOCULAR || mSensor == System::IMU_STEREO || mSensor == System::IMU_RGBD)

return true;

else

return false;

}

// Optimize frame pose with all matches

// Step 4: utilize 3D-2D Projection relation , Optimize the pose of the current frame

Optimizer::PoseOptimization(&mCurrentFrame);

// Discard outliers

// Step 5: Eliminate the outer and outer points of map points

int nmatchesMap = 0;

for(int i =0; i<mCurrentFrame.N; i++)

{

if(mCurrentFrame.mvpMapPoints[i])

{

if(mCurrentFrame.mvbOutlier[i])

{

// If a map point is judged to be an outer point after optimization , Clear all its relationships

MapPoint* pMP = mCurrentFrame.mvpMapPoints[i];

mCurrentFrame.mvpMapPoints[i]=static_cast<MapPoint*>(NULL);

mCurrentFrame.mvbOutlier[i]=false;

if(i < mCurrentFrame.Nleft){

pMP->mbTrackInView = false;

}

else{

pMP->mbTrackInViewR = false;

}

pMP->mnLastFrameSeen = mCurrentFrame.mnId;

nmatches--;

}

else if(mCurrentFrame.mvpMapPoints[i]->Observations()>0)

// Accumulate the number of map points successfully matched

nmatchesMap++;

}

}

// In pure positioning mode : If very few map points are successfully tracked , So here mbVO The flag will be set

if(mbOnlyTracking)

{

mbVO = nmatchesMap<10;

return nmatches>20;

}

if (mSensor == System::IMU_MONOCULAR || mSensor == System::IMU_STEREO || mSensor == System::IMU_RGBD)

return true;

else

return nmatchesMap>=10; // Matches more than 10 One point is considered to be successful tracking

}3.3.1 Update the pose of the previous frame and add road markings UpdateLastFrame

The key frame of the previous frame is known , The relative pose relationship between the key frame of the previous frame and the previous frame is known , Then the pose of the previous frame can be obtained . Supplementary road markings are for RGBD And binocular situation , Because the depth value of feature points

In the build Frame It's time to find out , Monocular mode has no depth information of feature points .

Step 1: Use the reference key frame to update the pose of the previous frame in the world coordinate system

void Tracking::UpdateLastFrame()

{

// Update pose according to reference keyframe

// The reference key frame of the previous normal frame , Note that the reference key frame is used here ( Accurate posture ) Instead of the normal frame of the previous frame

KeyFrame* pRef = mLastFrame.mpReferenceKF;

// ref_keyframe To lastframe Position and pose transformation of

Sophus::SE3f Tlr = mlRelativeFramePoses.back();

// Calculate the pose in the world coordinate system of the previous frame

// l:last, r:reference, w:world

// Tlw = Tlr*Trw

mLastFrame.SetPose(Tlr * pRef->GetPose());

// If the previous frame is a keyframe , Or monocular / Monocular inertia ,SLAM Mode , The exit

if(mnLastKeyFrameId==mLastFrame.mnId || mSensor==System::MONOCULAR || mSensor==System::IMU_MONOCULAR || !mbOnlyTracking)

return;Step 2: For binocular or rgbd The camera , Generate new temporary map points for the previous frame

// Note that these map points are only used for tracking , Don't add to the map , It will be deleted after tracking

// Create "visual odometry" MapPoints

// We sort points according to their measured depth by the stereo/RGB-D sensor

// Step 2.1: Get the feature points with effective depth value in the previous frame ( Not necessarily map points )

vector<pair<float,int> > vDepthIdx;

const int Nfeat = mLastFrame.Nleft == -1? mLastFrame.N : mLastFrame.Nleft;

vDepthIdx.reserve(Nfeat);

for(int i=0; i<Nfeat;i++)

{

float z = mLastFrame.mvDepth[i];

if(z>0)

{

// vDepthIdx The first element is the depth of a point , The second element is the corresponding feature point id

vDepthIdx.push_back(make_pair(z,i));

}

}

// If there is no point with effective depth in the previous frame , So just quit

if(vDepthIdx.empty()) return;

// Sort by depth from small to large

sort(vDepthIdx.begin(),vDepthIdx.end());

// We insert all close points (depth<mThDepth)

// If less than 100 close points, we insert the 100 closest ones.

// Step 2.2: Find out the parts that are not map points

int nPoints = 0;

for(size_t j=0; j<vDepthIdx.size();j++)

{

int i = vDepthIdx[j].second;

bool bCreateNew = false;

// If this point does not correspond to the map point in the previous frame , Or not observed after creation , Then generate a temporary map point

MapPoint* pMP = mLastFrame.mvpMapPoints[i];

if(!pMP)

bCreateNew = true;

else if(pMP->Observations()<1)

// Map points are not observed after they are created , I don't think it's reliable , You also need to recreate

bCreateNew = true;

if(bCreateNew)

{

// Step 2.3: Points to be created , Package as map points . Just to improve binocular and RGBD Tracking success rate , No complex attributes are added , Because it will be thrown away later

// Back projection into the world coordinate system

Eigen::Vector3f x3D;

if(mLastFrame.Nleft == -1){

mLastFrame.UnprojectStereo(i, x3D);

}

else{

x3D = mLastFrame.UnprojectStereoFishEye(i);

}

// Add to the map points of the previous frame

MapPoint* pNewMP = new MapPoint(x3D,mpAtlas->GetCurrentMap(),&mLastFrame,i);

mLastFrame.mvpMapPoints[i]=pNewMP;

// Marked as temporarily added MapPoint, After the CreateNewKeyFrame All will be deleted before

mlpTemporalPoints.push_back(pNewMP);

nPoints++;

}

else

{

// Because sort from near to far , Record the number of map points that do not need to be created

nPoints++;

}

// Step 2.4: If the quality of map points is poor , Stop creating map points

// To stop adding temporary map points, the following conditions must be met at the same time :

// 1、 The depth of the current point has exceeded the set depth threshold (35 Double baseline )

// 2、nPoints Has more than 100 A little bit , It means that the distance is far away , May not be accurate , Stop and exit

if(vDepthIdx[j].first>mThDepth && nPoints>100) break;

}

}3.3.2 use IMU Estimate the current state quantity PredictStateIMU()

The state quantity at the current time is added by the state quantity at the previous time IMU The pre product component goes to world Tie it down and estimate .

/**

* @brief When the tracking is unsuccessful , Initialized with imu Data tracking processing , adopt IMU Forecast status

* Two places to use :

* 1. Calculation speed of uniform model , But the pose of the current frame is not assigned ;

* 2. When the tracking is lost, it is not directly determined to be lost , Use this function to predict the pose of the current frame and see if it can be dragged back , Instead of repositioning in pure vision

*

* @return true

* @return false

*/

bool Tracking::PredictStateIMU()

{

if(!mCurrentFrame.mpPrevFrame)

{

Verbose::PrintMess("No last frame", Verbose::VERBOSITY_NORMAL);

return false;

}

// Summarize when the map is updated , That is to say mbMapUpdated by true

// 1. Loop back or merge

// 2. Local map LocalBundleAdjustment

// 3. IMU Three stage initialization

// The following code is as like as two peas. , It's just that the relative frames are different in calculation , The map has been updated relative to the previous key frame , On the contrary, relative to the previous frame

// When the map is updated, the keyframes and are updated MP, So it's more accurate than keyframes

// Without updating , Closer to the previous frame , The calculation error is smaller

// When the map is updated , And the last image key frame exists

if(mbMapUpdated && mpLastKeyFrame)

{

const Eigen::Vector3f twb1 = mpLastKeyFrame->GetImuPosition();

const Eigen::Matrix3f Rwb1 = mpLastKeyFrame->GetImuRotation();

const Eigen::Vector3f Vwb1 = mpLastKeyFrame->GetVelocity();

const Eigen::Vector3f Gz(0, 0, -IMU::GRAVITY_VALUE);

const float t12 = mpImuPreintegratedFromLastKF->dT;

// Calculate the pose of the current frame in the world coordinate system , The principle is to use the position and posture of pre integration ( The value of the pre integral does not change ) And the pose of the previous frame ( Will change iteratively ) updated

// rotate R_wb2 = R_wb1 * R_b1b2

Eigen::Matrix3f Rwb2 = IMU::NormalizeRotation(Rwb1 * mpImuPreintegratedFromLastKF->GetDeltaRotation(mpLastKeyFrame->GetImuBias()));

// Displacement

Eigen::Vector3f twb2 = twb1 + Vwb1*t12 + 0.5f*t12*t12*Gz+ Rwb1*mpImuPreintegratedFromLastKF->GetDeltaPosition(mpLastKeyFrame->GetImuBias());

// Speed

Eigen::Vector3f Vwb2 = Vwb1 + t12*Gz + Rwb1 * mpImuPreintegratedFromLastKF->GetDeltaVelocity(mpLastKeyFrame->GetImuBias());

// Set the camera pose of the world coordinate system of the current frame

mCurrentFrame.SetImuPoseVelocity(Rwb2,twb2,Vwb2);

// Record bias

mCurrentFrame.mImuBias = mpLastKeyFrame->GetImuBias();

mCurrentFrame.mPredBias = mCurrentFrame.mImuBias;

return true;

}

// When the map is not updated

else if(!mbMapUpdated)

{

const Eigen::Vector3f twb1 = mLastFrame.GetImuPosition();

const Eigen::Matrix3f Rwb1 = mLastFrame.GetImuRotation();

const Eigen::Vector3f Vwb1 = mLastFrame.GetVelocity();

const Eigen::Vector3f Gz(0, 0, -IMU::GRAVITY_VALUE);

// mpImuPreintegratedFrame Is the previous frame of the current frame , Not necessarily keyframes

const float t12 = mCurrentFrame.mpImuPreintegratedFrame->dT;

Eigen::Matrix3f Rwb2 = IMU::NormalizeRotation(Rwb1 * mCurrentFrame.mpImuPreintegratedFrame->GetDeltaRotation(mLastFrame.mImuBias));

Eigen::Vector3f twb2 = twb1 + Vwb1*t12 + 0.5f*t12*t12*Gz+ Rwb1 * mCurrentFrame.mpImuPreintegratedFrame->GetDeltaPosition(mLastFrame.mImuBias);

Eigen::Vector3f Vwb2 = Vwb1 + t12*Gz + Rwb1 * mCurrentFrame.mpImuPreintegratedFrame->GetDeltaVelocity(mLastFrame.mImuBias);

mCurrentFrame.SetImuPoseVelocity(Rwb2,twb2,Vwb2);

mCurrentFrame.mImuBias = mLastFrame.mImuBias;

mCurrentFrame.mPredBias = mCurrentFrame.mImuBias;

return true;

}

return false;

}

3.3.3 Use projection to find the matching relationship between the feature points of the current frame and the previous frame SearchByProjection

According to the initial value of the current frame pose , Put the last frame 3D The point is projected onto the current frame , Then, compare all feature points with this in the grid where the projection point is located 3D Distance of point descriptor , Choose the smallest one . Then compare the two

The included angle of the orientation , And put it in the corresponding square diagram . After all matching relationships are found , Their orientation should be consistent , So find the points in the top three directions of the histogram , This is the last remaining matching result .

Step1&2, Compare the two frames pose Work it out , Judge the direction of movement , And initialize the container

int ORBmatcher::SearchByProjection(Frame &CurrentFrame, const Frame &LastFrame, const float th, const bool bMono)

{

int nmatches = 0;

// Rotation Histogram (to check rotation consistency)

// Step 1 Create a rotation histogram , Used to detect rotation consistency

vector<int> rotHist[HISTO_LENGTH];

for(int i=0;i<HISTO_LENGTH;i++)

rotHist[i].reserve(500);

const float factor = 1.0f/HISTO_LENGTH;

// Step 2 Calculate the translation vector of the current frame and the previous frame

// Camera pose of the current frame

const Sophus::SE3f Tcw = CurrentFrame.GetPose();

const Eigen::Vector3f twc = Tcw.inverse().translation();

const Sophus::SE3f Tlw = LastFrame.GetPose();

const Eigen::Vector3f tlc = Tlw * twc;

// Judge forward or backward

const bool bForward = tlc(2)>CurrentFrame.mb && !bMono; // Non monocular case , If Z Greater than baseline , It means that the camera is obviously moving forward

const bool bBackward = -tlc(2)>CurrentFrame.mb && !bMono; // Non monocular case , If -Z Less than baseline , It means that the camera is obviously backward Step3&4, Put the last frame 3D The point is projected onto the current frame , See which grid it falls into , Then pull out all the points in this grid

// Step 3 For each map point in the previous frame , Project the model through the camera , Get the pixel coordinates projected to the current frame

for(int i=0; i<LastFrame.N; i++)

{

MapPoint* pMP = LastFrame.mvpMapPoints[i];

if(pMP)

{

if(!LastFrame.mvbOutlier[i])

{

// Valid for the previous frame MapPoints Project to the current frame coordinate system

Eigen::Vector3f x3Dw = pMP->GetWorldPos();

Eigen::Vector3f x3Dc = Tcw * x3Dw;

const float xc = x3Dc(0);

const float yc = x3Dc(1);

const float invzc = 1.0/x3Dc(2);

if(invzc<0) continue;

// Project into the current frame

Eigen::Vector2f uv = CurrentFrame.mpCamera->project(x3Dc);

if(uv(0)<CurrentFrame.mnMinX || uv(0)>CurrentFrame.mnMaxX) continue;

if(uv(1)<CurrentFrame.mnMinY || uv(1)>CurrentFrame.mnMaxY) continue;

// It is considered that the scale information of map points before and after projection is unchanged

int nLastOctave = (LastFrame.Nleft == -1 || i < LastFrame.Nleft) ? LastFrame.mvKeys[i].octave

: LastFrame.mvKeysRight[i - LastFrame.Nleft].octave;

// Search in a window. Size depends on scale

// Monocular :th = 7, Binocular :th = 15

float radius = th*CurrentFrame.mvScaleFactors[nLastOctave]; // The larger the scale , The larger the search

// Record candidate match points id

vector<size_t> vIndices2;

// Step 4 Judge the search scale range according to the forward and backward direction of the camera .

// It can be understood as follows , For example, a dot with a certain area , On a certain scale n Next, it is a feature point

// As the camera moves forward , The area of the dot increases , On a certain scale m Next, it is a feature point , As the area increases , It needs to be detected at a higher scale

// When the camera goes back , The area of the dot decreases , On a certain scale m Next, it is a feature point , As the area decreases , It needs to be detected at a lower scale

if(bForward) // Forward , Then the point of interest of the previous frame is at the scale nLastOctave<=nCurOctave

vIndices2 = CurrentFrame.GetFeaturesInArea(uv(0),uv(1), radius, nLastOctave);

else if(bBackward) // back off , Then the point of interest of the previous frame is at the scale 0<=nCurOctave<=nLastOctave

vIndices2 = CurrentFrame.GetFeaturesInArea(uv(0),uv(1), radius, 0, nLastOctave);

else // stay [nLastOctave-1, nLastOctave+1] Mid search

vIndices2 = CurrentFrame.GetFeaturesInArea(uv(0),uv(1), radius, nLastOctave-1, nLastOctave+1);

if(vIndices2.empty()) continue;How to find the lattice ?

vector<size_t> Frame::GetFeaturesInArea(

const float &x, const float &y, const float &r,

const int minLevel,const int maxLevel, const bool bRight) const

{

// Store search results vector

vector<size_t> vIndices;

vIndices.reserve(N);

float factorX = r, factorY = r;

// Step 1 The calculated radius is r Of the grid columns and rows where the left and right upper and lower boundaries of the circle are located id

// Find radius is r The grid column coordinates of the left boundary of the circle . This place is a little winding , Slowly understand :

// (mnMaxX-mnMinX)/FRAME_GRID_COLS: Indicates that each grid in the column direction can be divided into several pixels on average ( Certainly more than 1)

// mfGridElementWidthInv=FRAME_GRID_COLS/(mnMaxX-mnMinX) It's the countdown above , It means that each pixel can be divided into several grid columns ( Definitely less than 1)

// (x-mnMinX-r), It can be seen from the left edge of the image mnMinX To radius r The number of pixel columns occupied by the left boundary area of the circle

// Multiply the two , Is to find that the radius is r In which grid column is the left boundary of the circle

// Guarantee nMinCellX Result greater than or equal to 0

const int nMinCellX = max(0,(int)floor((x-mnMinX-factorX)*mfGridElementWidthInv));

// If the grid column of the left boundary of the finally obtained circle exceeds the set upper limit , Then it means that the calculation is wrong , No characteristic points meeting the requirements can be found , Returns an empty vector

if(nMinCellX>=FRAME_GRID_COLS) return vIndices;

// Calculate the index of the right boundary grid column where the circle is located

const int nMaxCellX = min((int)FRAME_GRID_COLS-1,(int)ceil((x-mnMinX+factorX)*mfGridElementWidthInv));

// If the grid of the right boundary of the calculated circle is illegal , It indicates that this feature point is not good , Direct return null vector

if(nMaxCellX<0) return vIndices;

// The following operations are similar , Calculate the grid row where the upper and lower boundaries of the circle are located id

const int nMinCellY = max(0,(int)floor((y-mnMinY-factorY)*mfGridElementHeightInv));

if(nMinCellY>=FRAME_GRID_ROWS) return vIndices;

const int nMaxCellY = min((int)FRAME_GRID_ROWS-1,(int)ceil((y-mnMinY+factorY)*mfGridElementHeightInv));

if(nMaxCellY<0) return vIndices;

// Check whether the pyramid level range of the image to be searched meets the requirements

//? Suspected bug.(minLevel>0) Later conditions (maxLevel>=0) Certainly

//? Change it to const bool bCheckLevels = (minLevel>=0) || (maxLevel>=0);

const bool bCheckLevels = (minLevel>0) || (maxLevel>=0);

// Step 2 Traverse all meshes in the circular area , Find the candidate feature points that meet the conditions , And its index Put it in the output

for(int ix = nMinCellX; ix<=nMaxCellX; ix++)

{

for(int iy = nMinCellY; iy<=nMaxCellY; iy++)

{

// Get all the feature points in this grid in Frame::mvKeysUn Index in

const vector<size_t> vCell = (!bRight) ? mGrid[ix][iy] : mGridRight[ix][iy];

// If there are no characteristic points in this grid , Then skip this grid and move on to the next

if(vCell.empty()) continue;

// If there are characteristic points in this grid , Then traverse all the feature points in the image grid

for(size_t j=0, jend=vCell.size(); j<jend; j++)

{

// Read this feature point first according to the index

const cv::KeyPoint &kpUn = (Nleft == -1) ? mvKeysUn[vCell[j]] : (!bRight) ? mvKeys[vCell[j]] : mvKeysRight[vCell[j]];

if(bCheckLevels)

{

// cv::KeyPoint::octave What is represented in is the data extracted from which layer of the pyramid

// Ensure that the feature points are at the pyramid level minLevel and maxLevel Between , If not, skip

if(kpUn.octave<minLevel) continue;

if(maxLevel>=0) if(kpUn.octave>maxLevel) continue;

}

// clear through , Calculate the distance from the candidate feature point to the center of the circle , Check whether it is within this circular area

const float distx = kpUn.pt.x-x;

const float disty = kpUn.pt.y-y;

// If x Direction and y The distance in both directions is within the specified radius , Store it index Are candidate feature points

if(fabs(distx)<factorX && fabs(disty)<factorY) vIndices.push_back(vCell[j]);

}

}

}

return vIndices;

}Step5 Traverse the candidate matching points in the lattice , Find the best matching point with the smallest descriptor distance Step 6 Classify according to the rotation angle difference of matching points

// Step 6 Calculate the histogram of the rotation angle difference of the matching point

if(mbCheckOrientation)

{

cv::KeyPoint kpLF = (LastFrame.Nleft == -1) ? LastFrame.mvKeysUn[i]

: (i < LastFrame.Nleft) ? LastFrame.mvKeys[i]

: LastFrame.mvKeysRight[i - LastFrame.Nleft];

cv::KeyPoint kpCF = (CurrentFrame.Nleft == -1) ? CurrentFrame.mvKeysUn[bestIdx2]

: (bestIdx2 < CurrentFrame.Nleft) ? CurrentFrame.mvKeys[bestIdx2]

: CurrentFrame.mvKeysRight[bestIdx2 - CurrentFrame.Nleft];

float rot = kpLF.angle-kpCF.angle;

if(rot<0.0) rot+=360.0f;

int bin = round(rot*factor);

if(bin==HISTO_LENGTH) bin=0;

rotHist[bin].push_back(bestIdx2);

}

Of course , For the right eye , Re projection is also required , Find the grid , Calculate descriptors to find matching points , The operation of classifying according to the angle difference .

if(CurrentFrame.Nleft != -1){

...

}

}

}

} Step 7 Perform rotation consistency detection , Eliminate inconsistent matches

if(mbCheckOrientation)

{

int ind1=-1;

int ind2=-1;

int ind3=-1;

ComputeThreeMaxima(rotHist,HISTO_LENGTH,ind1,ind2,ind3);

for(int i=0; i<HISTO_LENGTH; i++)

{

// For quantity is not before 3 The points are right , To eliminate

if(i!=ind1 && i!=ind2 && i!=ind3)

{

for(size_t j=0, jend=rotHist[i].size(); j<jend; j++)

{

CurrentFrame.mvpMapPoints[rotHist[i][j]]=static_cast<MapPoint*>(NULL);

nmatches--;

}

}

}

}

return nmatches;

}

3.4 relocation Relocalization()

If you match the previous frame according to the constant speed model , Or use word bags and KeyFrame Match , Or use IMU Integral data , If these three moves cannot predict the current posture , That leaves only the last trick : relocation .

First, find several alternative loopback frames according to the word bag , Reuse MLPnP The algorithm estimates the pose , If it is estimated, then BA Optimize posture .

Step 1: Calculate the number of feature points in the current frame Bow mapping

bool Tracking::Relocalization()

{

Verbose::PrintMess("Starting relocalization", Verbose::VERBOSITY_NORMAL);

// Compute Bag of Words Vector

mCurrentFrame.ComputeBoW();Step 2: Find a candidate keyframe group similar to the current frame

// Relocalization is performed when tracking is lost

// Track Lost: Query KeyFrame Database for keyframe candidates for relocalisation

vector<KeyFrame*> vpCandidateKFs = mpKeyFrameDB->DetectRelocalizationCandidates(&mCurrentFrame, mpAtlas->GetCurrentMap());

if(vpCandidateKFs.empty()) return false;

const int nKFs = vpCandidateKFs.size();

// We perform first an ORB matching with each candidate

// If enough matches are found we setup a PnP solver

ORBmatcher matcher(0.75,true);

// Solver for each keyframe

vector<MLPnPsolver*> vpMLPnPsolvers;

vpMLPnPsolvers.resize(nKFs);

// The matching relationship between each key frame and the feature points in the current frame

vector<vector<MapPoint*> > vvpMapPointMatches;

vvpMapPointMatches.resize(nKFs);

// Discard the marking of a key frame

vector<bool> vbDiscarded;

vbDiscarded.resize(nKFs);

// Number of valid candidate keyframes

int nCandidates=0;Step 3: Traverse all candidate keyframes , adopt BoW Make a quick match , Initialize with matching results PnP Solver

for(int i=0; i<nKFs; i++)

{

KeyFrame* pKF = vpCandidateKFs[i];

if(pKF->isBad()) vbDiscarded[i] = true;

else

{

// The current frame and candidate key frames are used BoW Make a quick match , The matching results are recorded in vvpMapPointMatches,nmatches Indicates the number of matches

int nmatches = matcher.SearchByBoW(pKF,mCurrentFrame,vvpMapPointMatches[i]);

// If the number of matches with the current frame is less than 15, Then you can only abandon this key frame

if(nmatches<15)

{

vbDiscarded[i] = true;

continue;

}

else

{

// If the matching number is enough , Initialize with matching results MLPnPsolver

// ? Why MLPnP? Because the fisheye camera model is considered , Decouple certain relationships ?

// Reference paper 《MLPNP-A REAL-TIME MAXIMUM LIKELIHOOD SOLUTION TO THE PERSPECTIVE-N-POINT PROBLEM》

MLPnPsolver* pSolver = new MLPnPsolver(mCurrentFrame,vvpMapPointMatches[i]);

// The constructor is called once , Here reset the parameters

pSolver->SetRansacParameters(

0.99, // Maximum probability value of the model , Default 0.9

10, // The minimum threshold of the interior point , Default 8

300, // Maximum number of iterations , Default 300

6, // The minimum set , Six points are sampled each time , That is, the minimum set should be set to 6, The paper says I > 5

0.5, // The theoretical minimum number of interior points , Here is the proportion of the total , therefore epsilon Is the proportion , The default is 0.4

5.991); // Chi square test threshold //This solver needs at least 6 points

vpMLPnPsolvers[i] = pSolver;

nCandidates++; // 1.0 New version

}

}

}Step 4: Through a series of operations , Until you find the key frame that can match

// Alternatively perform some iterations of P4P RANSAC

// Until we found a camera pose supported by enough inliers

// Enough interior points can be matched PNP Algorithm ,MLPnP You need at least 6 A little bit

// A flag indicating whether a matching key frame has been found

bool bMatch = false;

ORBmatcher matcher2(0.9,true);

// Why is it so complicated ? answer : It's fear of false closed loop

while(nCandidates>0 && !bMatch)

{

// Traverse all current candidate keyframes

for(int i=0; i<nKFs; i++)

{

// Ignore the abandoned

if(vbDiscarded[i]) continue;

// Perform 5 Ransac Iterations

// Inner point marker

vector<bool> vbInliers;

// Internal points

int nInliers;

// Express RANSAC There are no more iterations available -- In other words, the data is not good enough ,RANSAC I've tried my best ...

bool bNoMore;

// Step 4.1: adopt MLPnP The algorithm estimates the attitude , iteration 5 Time

MLPnPsolver* pSolver = vpMLPnPsolvers[i];

Eigen::Matrix4f eigTcw;

// PnP The entry function of the algorithm

bool bTcw = pSolver->iterate(5,bNoMore,vbInliers,nInliers, eigTcw);

// If Ransac reachs max. iterations discard keyframe

// bNoMore by true It means that it has exceeded RANSAC Maximum number of iterations , Just discard the current keyframe

if(bNoMore)

{

vbDiscarded[i]=true;

nCandidates--;

}

// If a Camera Pose is computed, optimize

if(bTcw)

{

// Step 4.2: If MLPnP Calculate the posture , Carry out BA Optimize

Sophus::SE3f Tcw(eigTcw);

mCurrentFrame.SetPose(Tcw);

// Tcw.copyTo(mCurrentFrame.mTcw);

// MLPnP in RANSAC Set of interior points after

set<MapPoint*> sFound;

const int np = vbInliers.size();

// Traverse all interior points

for(int j=0; j<np; j++)

{

if(vbInliers[j])

{

mCurrentFrame.mvpMapPoints[j]=vvpMapPointMatches[i][j];

sFound.insert(vvpMapPointMatches[i][j]);

}

else mCurrentFrame.mvpMapPoints[j]=NULL;

}

// Only optimize posture , Do not optimize the coordinates of map points , Returns the number of interior points

int nGood = Optimizer::PoseOptimization(&mCurrentFrame);

// If the number of interior points after optimization is small , Skipped the current candidate keyframe , But it doesn't give up the relocation of the current frame

if(nGood<10) continue;

// Delete the map point corresponding to the outer point , Here it is directly set as null pointer

for(int io =0; io<mCurrentFrame.N; io++)

if(mCurrentFrame.mvbOutlier[io])

mCurrentFrame.mvpMapPoints[io]=static_cast<MapPoint*>(NULL);

// If few inliers, search by projection in a coarse window and optimize again

// Step 4.3: If there are fewer interior points , Then the previously unmatched points are matched by projection , Then optimize the solution

// The previous matching relationship is obtained by the word bag matching process

if(nGood<50)

{

// The unmatched map points in the key frame are projected into the current frame by projection , Generate a new match