Study notes on solver.prototxt file parameters

2022-07-05 07:47:00 【Fall in love with wx】

Reference: https://blog.csdn.net/xygl2009/article/details/77484522 (many thanks to the author!)

# -*- coding: UTF-8 -*-

train_net: "examples/YOLO_Helmet/train.prototxt" # Network definition used for training

test_net: "examples/YOLO_Helmet/test.prototxt" # Network definition used for testing

test_iter: 4952 # Number of test iterations (batches) per test pass. For example, if the test-stage batch size is 100 and the test set has 10000 images, one pass needs 10000/100 = 100 iterations, so test_iter = 100

test_interval: 2000 # Run a test pass every 2000 training iterations. A common choice is to test once after each training epoch.

base_lr: 0.0005 # Base learning rate. The learning rate is adjusted during gradient-descent optimization, and the adjustment strategy is set with lr_policy.

display: 10 # Print training information every 10 iterations

max_iter: 120000 # Maximum number of iterations

lr_policy: "multistep" # With "multistep" you also need to set stepvalue entries. It is similar to "step", but "step" changes the rate at uniform, equally spaced intervals, while "multistep" changes it at the iterations listed in stepvalue.

gamma: 0.5 # Factor the learning rate is multiplied by at each stepvalue

weight_decay: 0.00005 # Weight decay (a regularization term), used to reduce overfitting

snapshot: 2000 # Save a model snapshot every 2000 iterations

snapshot_prefix: "models/MobileNet/mobilenet_yolov3_deploy" # Path prefix for saved snapshot files

solver_mode: GPU # Run on the GPU (the alternative is CPU)

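debug_info: false # Do not print extra debugging information

snapshot_after_train: true # Save a snapshot when training finishes

test_initialization: false # Do not run a test pass before the first training iteration

average_loss: 10 # Display the loss averaged over the last 10 forward passes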

stepvalue: 25000

stepvalue: 50000

stepvalue: 75000

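iter_size: 4 # Gradients are accumulated over iter_size forward/backward passes. After batchsize*iter_size images have been processed, ApplyUpdate is called once and performs one gradient-descent step according to the learning rate and the solver method (SGD, AdaGrad, etc.)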

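type: "RMSProp" # Solver (optimization method) type

eval_type: "detection" # Evaluate the test net as a detection task

ap_version: "11point" # Compute average precision with the 11-point interpolation method

show_per_class_result: true # Also print the result for each class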

边栏推荐

- STM32 knowledge points

- Close of office 365 reading

- Global and Chinese market of core pallets 2022-2028: Research Report on technology, participants, trends, market size and share

- Global and Chinese markets for recycled boilers 2022-2028: Research Report on technology, participants, trends, market size and share

- [professional literacy] core conferences and periodicals in the field of integrated circuits

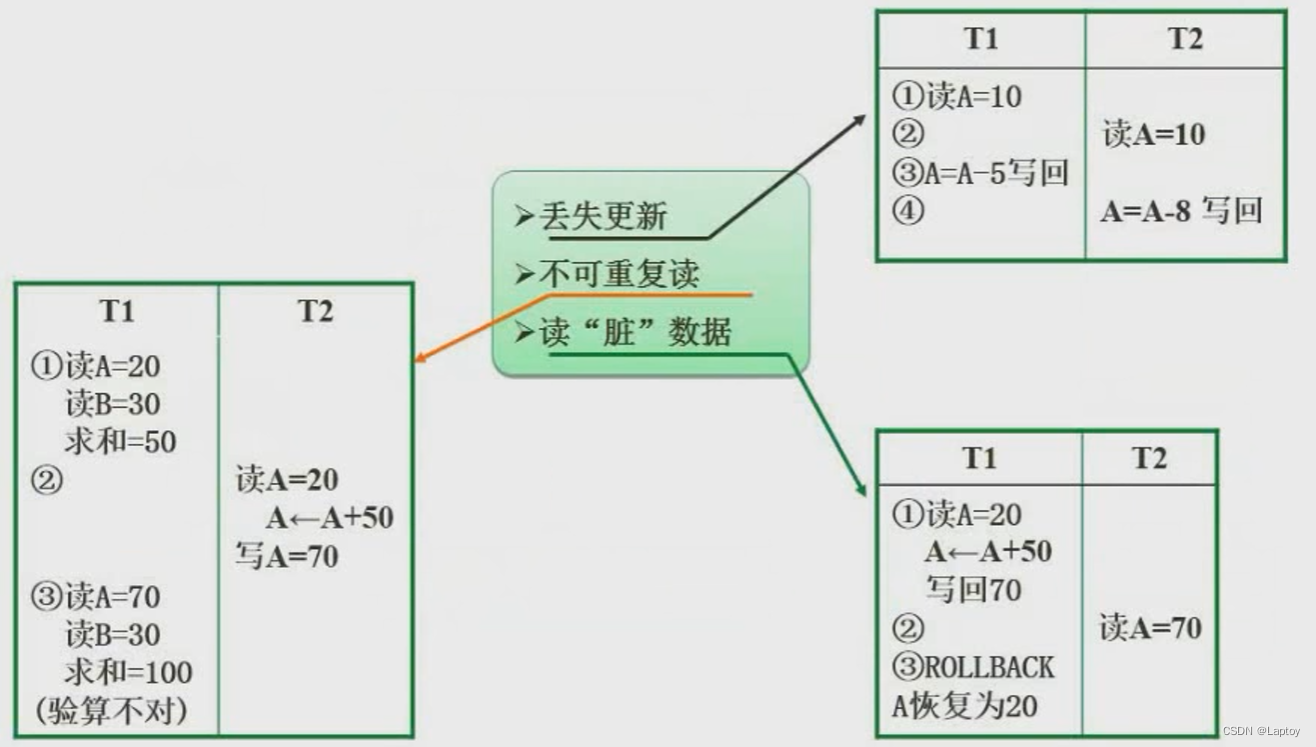

- Software designer: 03 database system

- Numpy——1. Creation of array

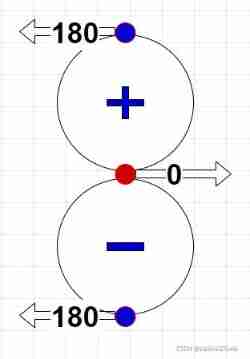

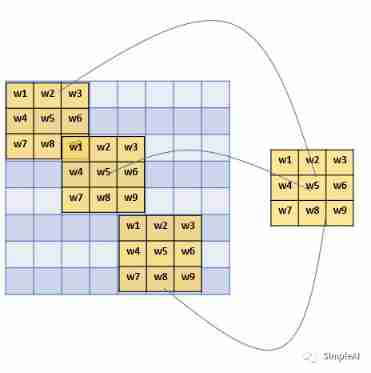

- From then on, I understand convolutional neural network (CNN)

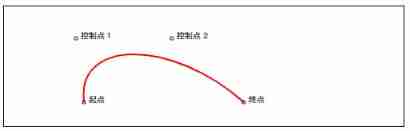

- What is Bezier curve? How to draw third-order Bezier curve with canvas?

- How to realize audit trail in particle counter software

猜你喜欢

Altium designer 19.1.18 - Import frame

Opendrive arc drawing script

软件设计师:03-数据库系统

From then on, I understand convolutional neural network (CNN)

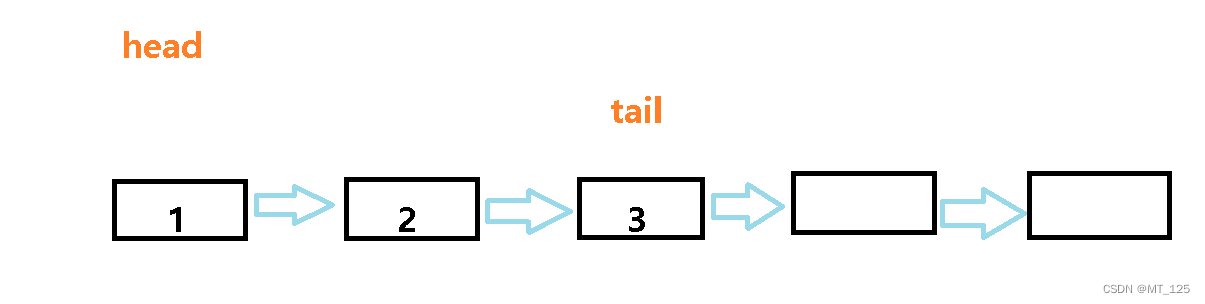

I implement queue with C I

What is Bezier curve? How to draw third-order Bezier curve with canvas?

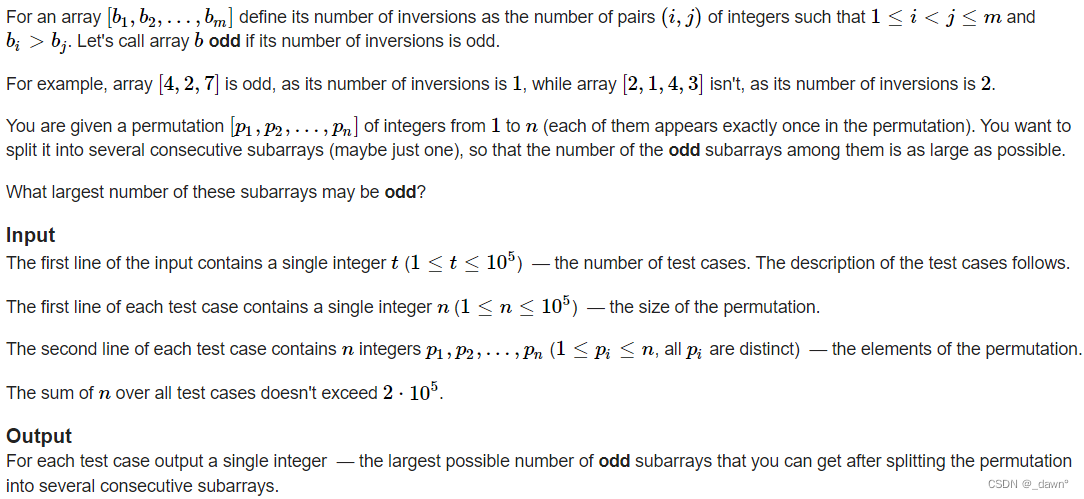

Daily Practice:Codeforces Round #794 (Div. 2)(A~D)

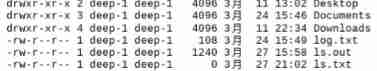

Deepin get file (folder) list

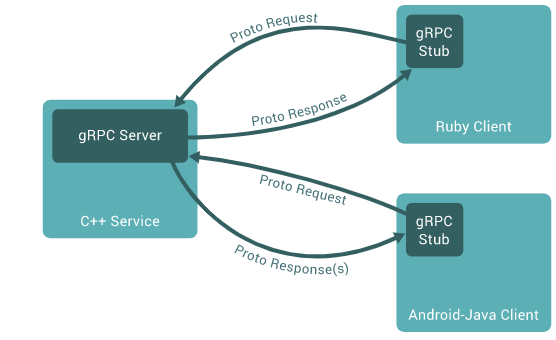

Play with grpc - go deep into concepts and principles

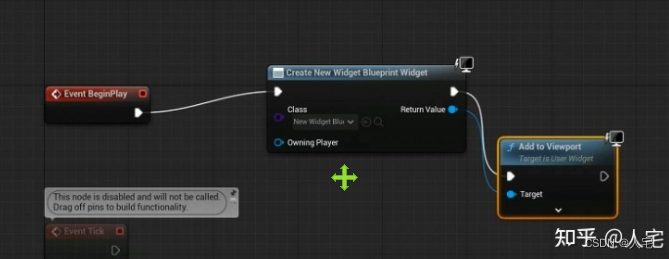

Ue5 hot update - remote server automatic download and version detection (simplehotupdate)

随机推荐

Anaconda pyhton multi version switching

NSIS search folder

Temperature sensor DS18B20 principle, with STM32 routine code

TCP and UDP

MySql——存储引擎

Extended application of single chip microcomputer-06 independent key

Selenium element positioning

Idea common settings

Deepin, help ('command ') output saved to file

Day08 ternary operator extension operator character connector symbol priority

A series of problems in offline installation of automated test environment (ride)

Altium Designer 19.1.18 - 更改铺铜的透明度

·Practical website·

QT excellent articles

Altium designer 19.1.18 - clear information generated by measuring distance

Use of orbbec Astra depth camera of OBI Zhongguang in ROS melody

Altium Designer 19.1.18 - 导入板框

The folder directly enters CMD mode, with the same folder location

GBK error in web page Chinese display (print, etc.), solution

Deepin get file (folder) list