当前位置:网站首页>Natural language processing (NLP) roadmap - KDnuggets

Natural language processing (NLP) roadmap - KDnuggets

2020-11-09 00:40:00 【On jdon】

because In the past ten years big data The development of . Enterprises now need to analyze a large amount of data from various sources every day .

natural language processing (NLP) It's the field of artificial intelligence , Dedicated to processing and using text and voice data to create intelligent machines and insights .

Pretreatment technology

To prepare text data for reasoning , Some of the most common techniques are :

- Tokenization : Used to split input text into its constituent words ( Mark ). such , It's easier to convert our data into digital format .

- Stop words remove : Used to remove all prepositions from our text ( for example ,“ One ”,“ This ” etc. ), These prepositions can only be regarded as noise sources in our data ( Because they don't carry any additional words ) The information in our data ).

- Word stem : Finally, it is used to remove all affixes from the data ( Such as prefixes or suffixes ). such , actually , For our algorithm , Think of it as actually having a similar meaning ( for example , Insightful opinions ) It's much easier to use proper words for .

standards-of-use Python NLP library ( for example NLTK and Spacy), All of these preprocessing techniques can be easily applied to different types of text .

in addition , In order to infer the grammar and text structure of a language , We can use parts of speech such as (POS) Tags and shallow parsing ( chart 1) Technology like that . actually , Using these technologies , We can use lexical categories of words ( Based on the context of phrase grammar ) Mark each word explicitly .

modeling technique

- Speech pack

Bag of Words It's a kind of natural language processing and Computer vision technology , The goal is to create new features for training classifiers ( chart 2). This technique is implemented by constructing a histogram that counts all the words in the document ( Regardless of word order and grammar rules ).

One of the main problems that may limit the effectiveness of this technique is the presence of prepositions in our text , pronouns , Articles, etc . actually , All of these can be thought of as words that often appear in our text , Even if you don't really know what the main features and themes of our documents are .

To solve this type of problem , Commonly referred to as “ The term frequency - Anti document frequency ”(TFIDF) Technology .TFIDF The purpose of this paper is to adjust the frequency of word count in text by considering the frequency of each word appearing in a large number of texts . then , Using this technology , We're going to reward words that are very common in text but rarely in other texts ( Increase the frequency value proportionally ), At the same time, for the words that appear frequently in the text and other texts ( Scale down the frequency value ) To punish ( For example, prepositions , Pronouns, etc ).

- Potential Dirichlet distribution (LDA)

Potential Dirichlet distribution (LDA) It's a topic modeling technique . Topic modeling is an area of research , Focus on finding ways to cluster documents , In order to find potential distinguishing markers which can characterize their characteristics according to their contents ( chart 3). therefore , Topic modeling can also be seen as drop Dimension Technology , Because it allows us to reduce the initial data to a limited set of clusters .

Potential Dirichlet distribution (LDA) It's an unsupervised learning technology , It is used to find potential topics that can represent different documents and cluster similar documents together . The algorithm will Considered to exist N Topics as input , Then group the different documents into N Document clusters closely related to each other .

LDA With other clustering techniques ( for example K Mean clustering ) The difference is that LDA It's a soft clustering technique ( Each document is assigned to clusters based on probability distribution ). for example , Documents can be assigned to clusters A, Because the possibility that the algorithm determines that the document belongs to this category is 80%, Some features embedded in this document are still taken into account ( rest 20%) More likely to belong to the second cluster B.

- Word embedding

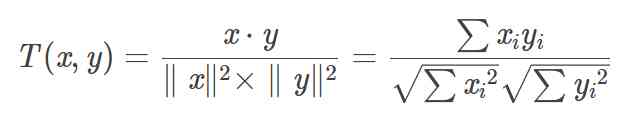

Word embedding is one of the most common ways to encode words into digital vectors , Then we can input it into our machine learning model for reasoning . Word embedding aims to transform our words into vector space reliably , So that similar words are represented by similar vectors .

Now , There's something to create Word There are three main techniques for surface embedding :Word2Vec, glove and fastText. All three techniques use shallow neural networks to create the required word embedding .

- Sentiment analysis

Emotional analysis is a kind of NLP technology , Usually used to understand some form of text is about the positive side of the subject , Negative or neutral emotions . for example , Trying to find out about a subject , General public opinion of a product or company ( Through online reviews , Tweets, etc ) when , This can be particularly useful .

In emotional analysis , Emotion in a text is usually expressed as -1( Negative emotion ) and 1( Positive emotions ) Between the value of the , It's called polarity .

Affective analysis can be regarded as an unsupervised learning technique , Because we don't usually provide handmade tags for data . To overcome this obstacle , We use pre marked dictionaries ( A collection of words ), The dictionary is used to quantify the emotions of a large number of words in different contexts . Some examples of widely used words in affective analysis are TextBlob and VADER.

- Transformer

Represents the latest NLP Model , In order to analyze text data .BERT and GTP3 It's something well known Transformers Model Example .

Creating Transformer Before , Recursive neural network (RNN) It is the most effective way to analyze text data in order to make prediction , But it's hard to reliably exploit long-term dependencies , for example , Our network may find it difficult to understand that words entered in previous iterations may be useful for the current iteration .

With the help of a method called “ attention ” (Attention) The mechanism of , Successfully overcome this limitation ( The mechanism Used to determine which parts of the text need to be focused and given more attention ). Besides ,Transformers Make parallel processing of text data easy , Not sequential processing ( So it improves the execution speed ).

Now , With the help of Hugging Face library , It's easy to be in Python To realize Transfer .

版权声明

本文为[On jdon]所创,转载请带上原文链接,感谢

边栏推荐

- 梁老师小课堂|谈谈模板方法模式

- Introduction to nmon

- Python features and building environment

- How to make scripts compatible with both Python 2 and python 3?

- Salesforce connect & external object

- Concurrent linked queue: a non blocking unbounded thread safe queue

- A few lines of code can easily transfer traceid across systems, so you don't have to worry about losing the log!

- 链表

- 如何将 PyTorch Lightning 模型部署到生产中

- salesforce零基础学习(九十八)Salesforce Connect & External Object

猜你喜欢

移动大数据自有网站精准营销精准获客

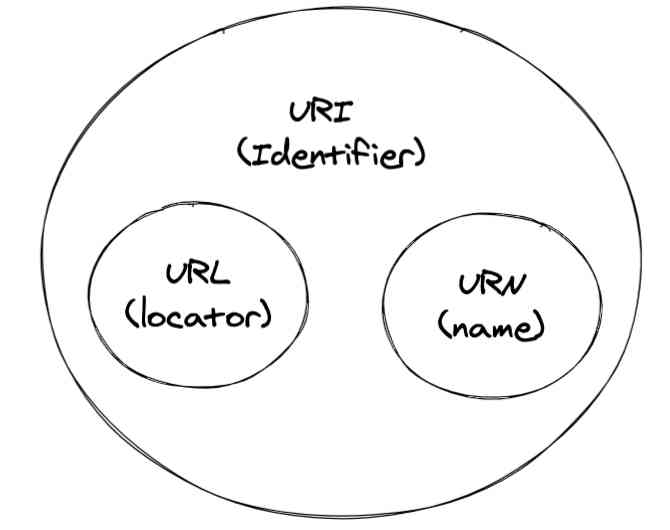

Programmers should know the URI, a comprehensive understanding of the article

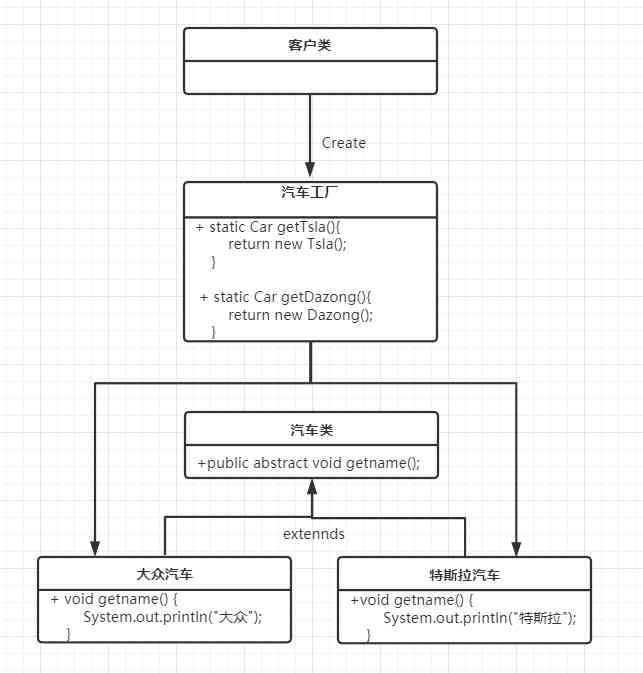

Factory pattern pattern pattern (simple factory, factory method, abstract factory pattern)

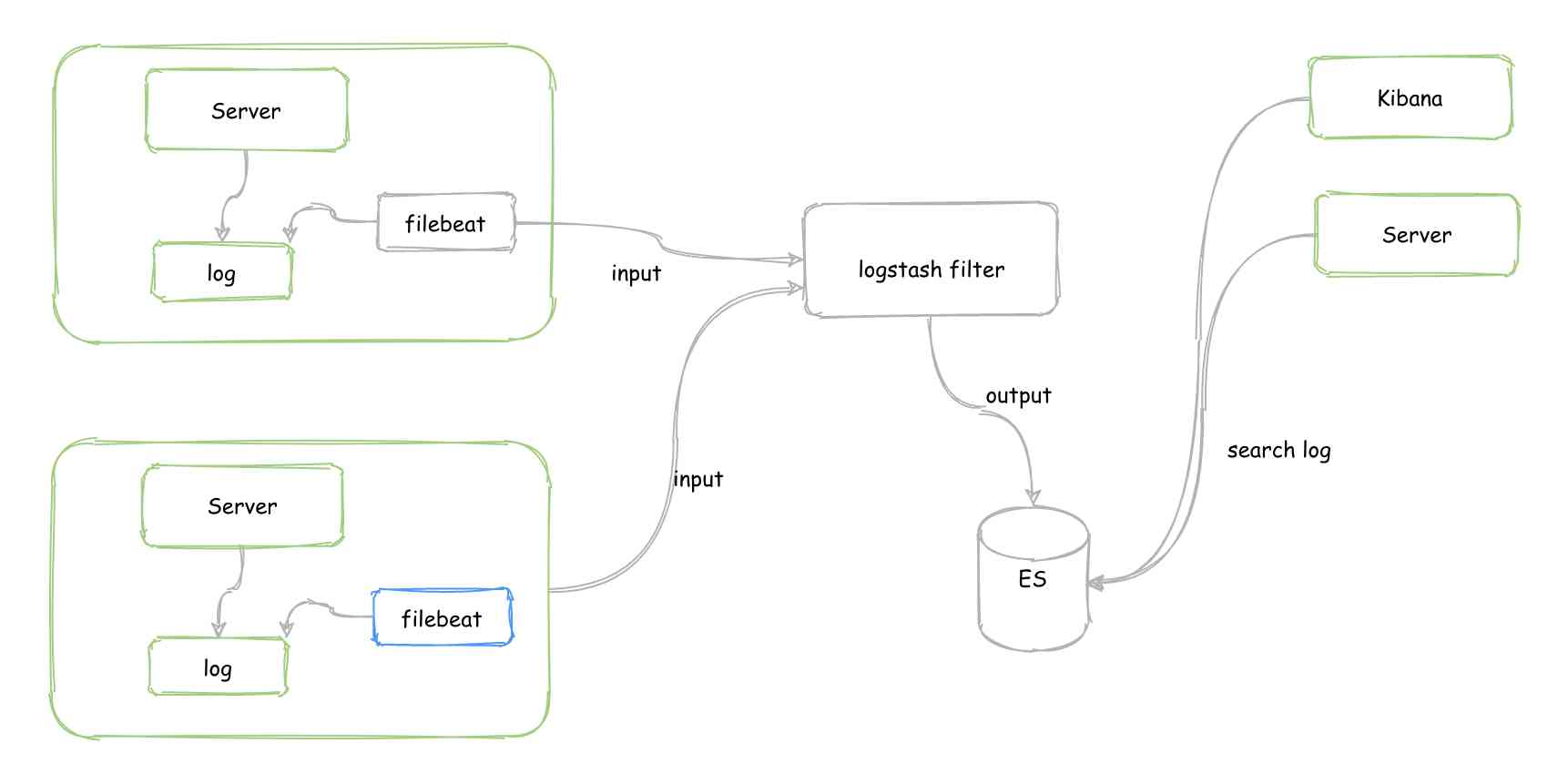

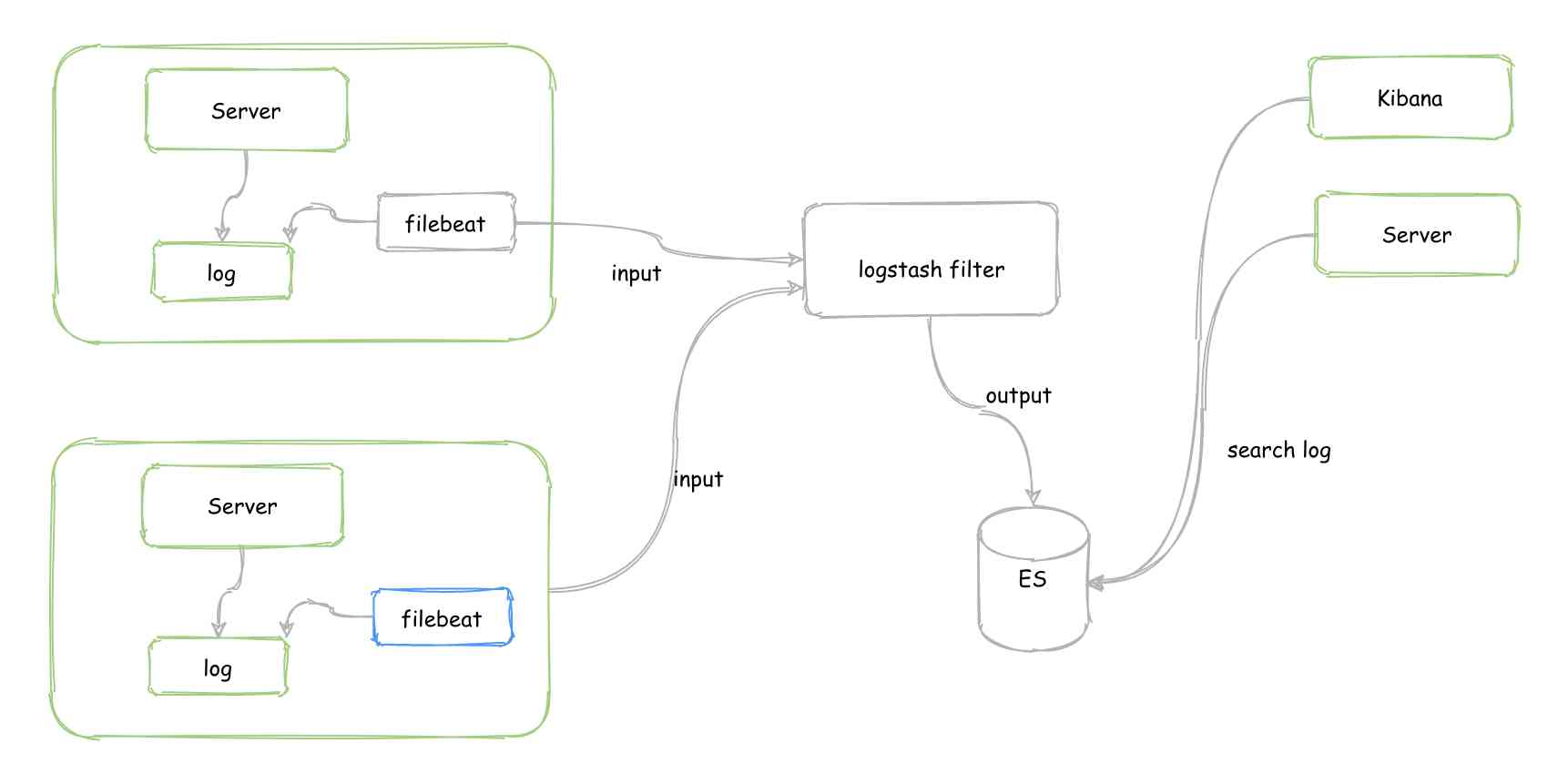

A few lines of code can easily transfer traceid across systems, so you don't have to worry about losing the log!

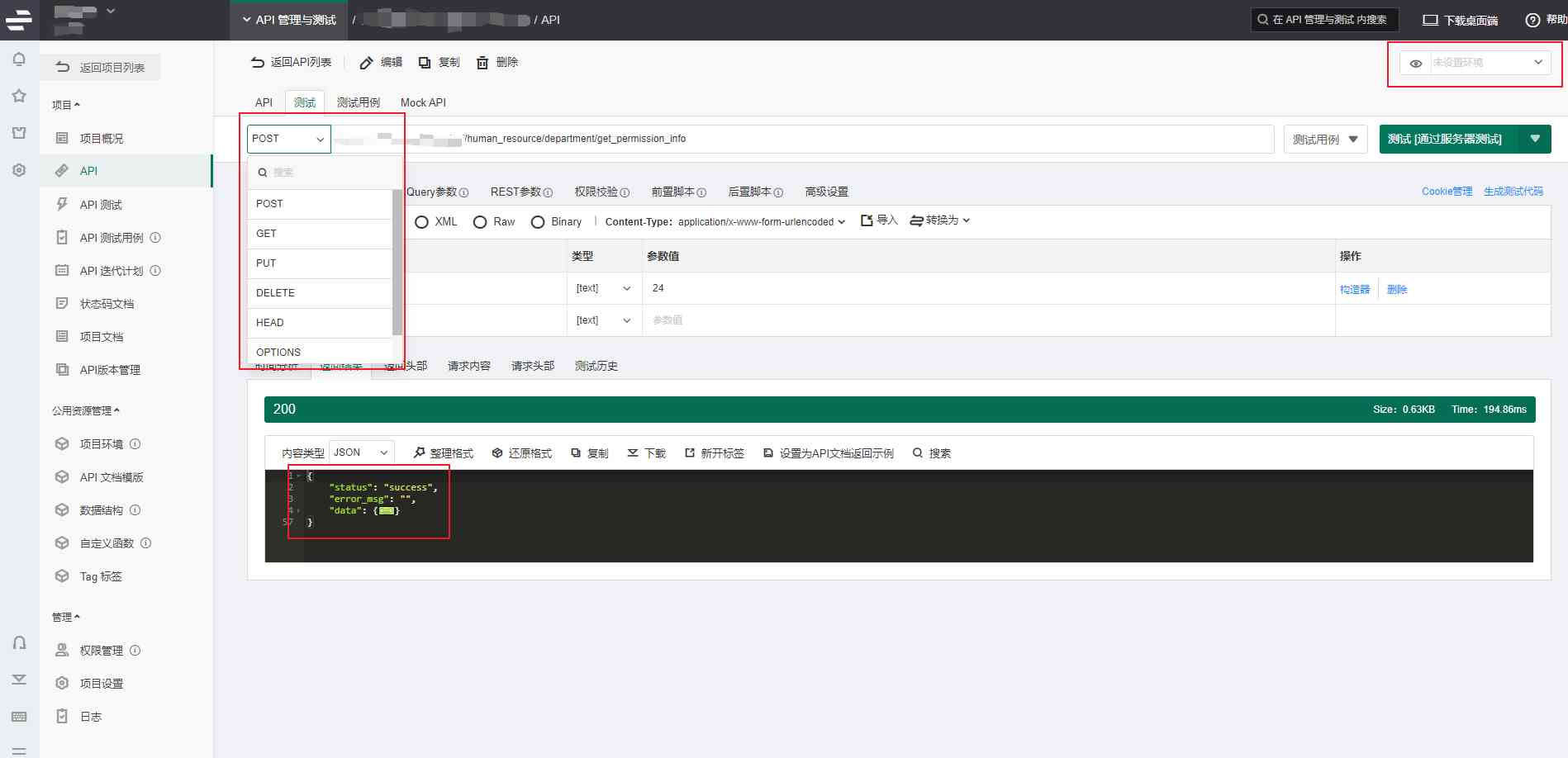

The interface testing tool eolinker makes post request

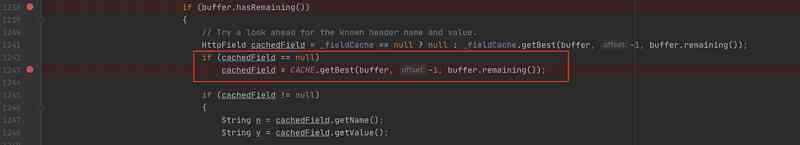

APP 莫名崩溃,开始以为是 Header 中 name 大小写的锅,最后发现原来是容器的错!

How to make scripts compatible with both Python 2 and python 3?

几行代码轻松实现跨系统传递 traceId,再也不用担心对不上日志了!

23张图,带你入门推荐系统

VIM Introduction Manual, (vs Code)

随机推荐

VIM Introduction Manual, (vs Code)

Realization of file copy

装饰器(一)

Platform in architecture

Five phases of API life cycle

Concurrent linked queue: a non blocking unbounded thread safe queue

Combine theory with practice to understand CORS thoroughly

Introduction to nmon

Installation record of SAP s / 4hana 2020

Chapter 5 programming

Leetcode-15: sum of three numbers

FC 游戏机的工作原理是怎样的?

The vowels in the inverted string of leetcode

Decorator (2)

SQL语句的执行

作业2020.11.7-8

STC转STM32第一次开发

Bifrost 之 文件队列(一)

Tips in Android Development: requires permission android.permission write_ Settings solution

1.操作系统是干什么的?