
Dynamic ReLU: Microsoft's refreshing new work, possibly the best ReLU improvement | ECCV 2020

2020-11-08 21:03:00 [Xiaofei's Algorithm Engineering Notes]

> The paper proposes dynamic ReLU, which dynamically adjusts the corresponding piecewise activation function according to the input. Compared with ReLU and its variants, it brings a significant performance improvement with only a small amount of extra computation, and it can be embedded seamlessly into current mainstream models.

Source: Xiaofei's Algorithm Engineering Notes (WeChat official account)

Paper: Dynamic ReLU

- Paper: https://arxiv.org/abs/2003.10027

- Code: https://github.com/Islanna/DynamicReLU

Introduction

ReLU is an important milestone in deep learning: simple yet powerful, it greatly improves the performance of neural networks. Many improved versions exist, such as Leaky ReLU and PReLU, but the final parameters of both these variants and the original are fixed. The paper therefore asks a natural question: would it be better to adjust the ReLU parameters according to the input features?

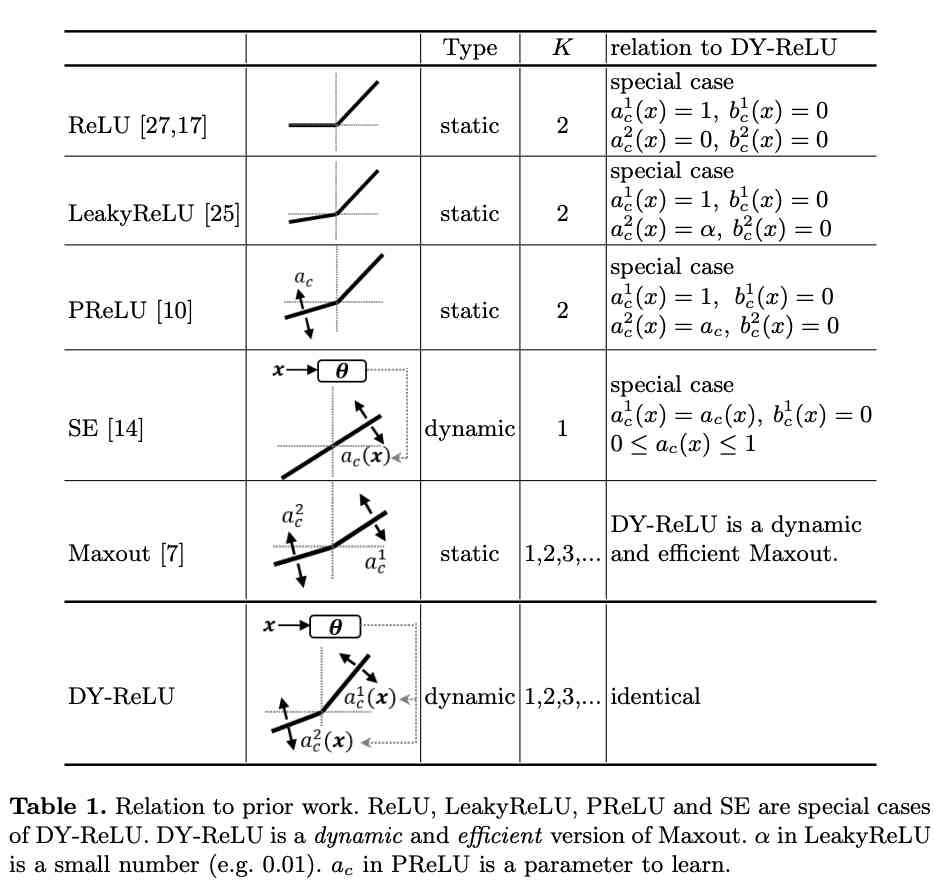

Based on this idea, the paper proposes dynamic ReLU (DY-ReLU). As shown in Figure 2, DY-ReLU is a piecewise function $f_{\theta(x)}(x)$ whose parameters are produced by a hyperfunction $\theta(x)$ from the input $x$. The hyperfunction $\theta(x)$ aggregates context from all channels of the input to adapt the activation function $f_{\theta(x)}(x)$, which significantly improves the representational power of the network at a small extra computational cost. The paper also provides three forms of DY-ReLU with different sharing mechanisms across spatial positions and channels. The different forms suit different tasks, and the experiments confirm that DY-ReLU brings solid improvements in both keypoint detection and image classification.

Definition and Implementation of Dynamic ReLU

Definition

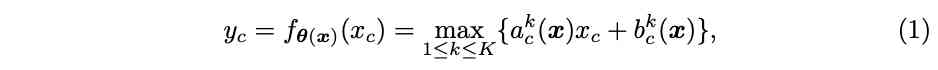

The original ReLU is defined as $y=\max\{x, 0\}$, where $x$ is the input vector. For the $c$-th channel of the input, $x_c$, the activation is $y_c=\max\{x_c, 0\}$. ReLU can be written in the general piecewise linear form $y_c=\max_k\{a^k_c x_c+b^k_c\}$. The paper extends this form into a dynamic ReLU whose coefficients $a^k_c$ and $b^k_c$ adapt to the whole input $x=\{x_c\}$:

$$y_c = f_{\theta(x)}(x_c) = \max_{1\le k\le K}\{a^k_c(x)\,x_c + b^k_c(x)\}$$

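The coefficients $(a^k_c, b^k_c)$ are the output of the hyperfunction $\theta(x)$:

$$[a^1_1,\dots,a^1_C,\dots,a^K_1,\dots,a^K_C,\ b^1_1,\dots,b^1_C,\dots,b^K_1,\dots,b^K_C]^T = \theta(x)$$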

$K$ is the number of linear functions and $C$ is the number of channels. The activation parameters $(a^k_c, b^k_c)$ depend not only on $x_c$ but also on the other channels $x_{j\ne c}$.
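The piecewise form above can be sketched in a few lines of plain Python. This is only an illustration: the function name `dy_relu` and the nested-list layout are assumptions made here, and the coefficients are passed in by hand rather than produced by $\theta(x)$.

```python
def dy_relu(x, a, b):
    """Apply y_c = max_k(a[k][c] * x[c] + b[k][c]) to each channel c.

    x: list of C channel values.
    a, b: K x C nested lists of slopes and intercepts (hypothetical layout).
    """
    num_functions = len(a)
    return [
        max(a[k][c] * x_c + b[k][c] for k in range(num_functions))
        for c, x_c in enumerate(x)
    ]


# Static ReLU as a special case: K = 2, slopes (1, 0), intercepts (0, 0).
x = [-2.0, 0.5, 3.0]
a = [[1.0, 1.0, 1.0], [0.0, 0.0, 0.0]]
b = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]
relu_out = dy_relu(x, a, b)
```

With these fixed coefficients every channel reduces to $\max\{x_c, 0\}$; DY-ReLU differs only in that `a` and `b` are recomputed for every input.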

Implementation of hyper function $\theta(x)$

The paper implements the hyperfunction with a lightweight network similar to the SE module. For an input $x$ of size $C\times H\times W$, global average pooling first squeezes the spatial dimensions, then two fully connected layers (with a ReLU between them) process the result, and finally a normalization layer constrains the output to between -1 and 1. The normalization layer is $2\sigma(x) - 1$, where $\sigma$ is the Sigmoid function. The subnetwork outputs $2KC$ elements in total, which are the residuals of $a^{1:K}_{1:C}$ and $b^{1:K}_{1:C}$, written $\Delta a^k_c$ and $\Delta b^k_c$. The final output is the sum of the initial value and the residual:

$$a^k_c(x) = \alpha^k + \lambda_a \Delta a^k_c(x), \qquad b^k_c(x) = \beta^k + \lambda_b \Delta b^k_c(x)$$

$\alpha^k$ and $\beta^k$ are the initial values of $a^k_c$ and $b^k_c$, and $\lambda_a$ and $\lambda_b$ are scalars that control the magnitude of the residuals. For $K=2$, the default initial values are $\alpha^1=1$ and $\alpha^2=\beta^1=\beta^2=0$, which correspond to the original ReLU, and the scalars default to $\lambda_a=1.0$ and $\lambda_b=0.5$.
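The pipeline described above (pooling, two fully connected layers, $2\sigma(x)-1$ normalization, then initial value plus scaled residual) might look roughly like the following standard-library sketch. The helper names, the hidden width, and the order in which the $2KC$ outputs are laid out are all assumptions for illustration; the official code may organize them differently.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def linear(weights, bias, inputs):
    """Fully connected layer; weights has one row per output unit."""
    return [sum(w * v for w, v in zip(row, inputs)) + b_i
            for row, b_i in zip(weights, bias)]


def hyper_function(x, w1, b1, w2, b2, alpha=(1.0, 0.0), beta=(0.0, 0.0),
                   lambda_a=1.0, lambda_b=0.5):
    """Map an input x (C x H x W nested lists) to coefficients a, b (K x C)."""
    K, C = len(alpha), len(x)
    # Global average pooling: one number per channel.
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]
    # Two fully connected layers with a ReLU in between.
    hidden = [max(h, 0.0) for h in linear(w1, b1, pooled)]
    raw = linear(w2, b2, hidden)  # 2*K*C values
    # Normalize each residual into (-1, 1) with 2*sigmoid(z) - 1.
    res = [2.0 * sigmoid(z) - 1.0 for z in raw]
    # Assumed layout: all slope residuals first, then all intercept residuals.
    a = [[alpha[k] + lambda_a * res[k * C + c] for c in range(C)]
         for k in range(K)]
    b = [[beta[k] + lambda_b * res[(K + k) * C + c] for c in range(C)]
         for k in range(K)]
    return a, b


def zeros(rows, cols):
    return [[0.0] * cols for _ in range(rows)]


# Toy input with C = 2 channels, H = W = 2, and a hidden width of 1.
x = [[[1.0, 2.0], [3.0, 4.0]], [[-1.0, 0.0], [1.0, 0.0]]]
# With all-zero weights the residuals vanish and the defaults give plain ReLU.
a, b = hyper_function(x, zeros(1, 2), [0.0], zeros(8, 1), [0.0] * 8)
```

With the default scalars, each slope stays within 1 of its initial value and each intercept within 0.5, so the activation starts as ReLU and drifts from it only as far as the residuals allow.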

Relation to Prior Work

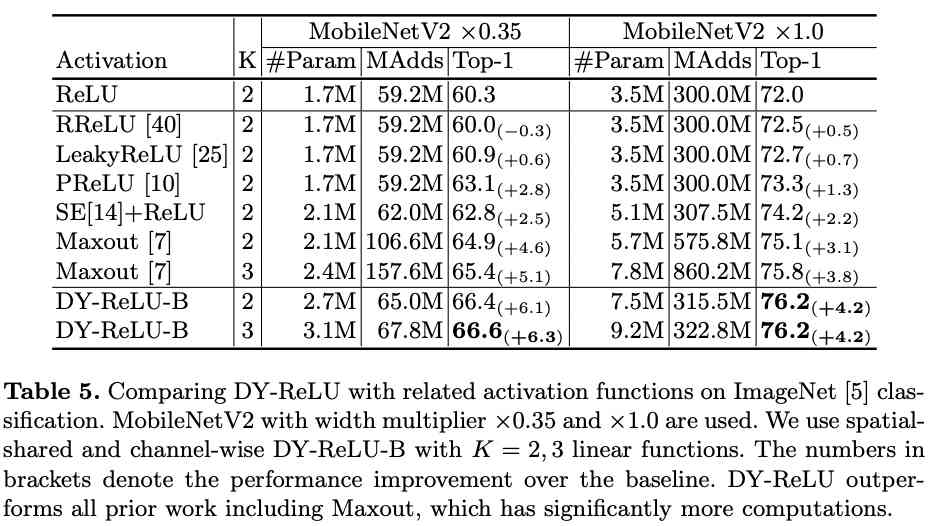

DY-ReLU covers a wide range of functions. Table 1 shows how DY-ReLU relates to the original ReLU and its variants. With specific learned parameters, DY-ReLU is equivalent to ReLU, LeakyReLU, or PReLU. When $K=1$ and the bias $b^1_c=0$, it is equivalent to the SE module. DY-ReLU can also be viewed as a dynamic and efficient Maxout operator: the $K$ convolutions of Maxout are replaced by $K$ dynamic linear transformations, and the maximum is then taken in the same way.
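The special cases listed above can be checked with a scalar version of the piecewise maximum. The function names and the 0.01 negative slope are illustrative choices, not values taken from the paper; note that the max form matches LeakyReLU and PReLU only when the negative slope is at most 1.

```python
def piecewise_max(x, slopes, intercepts):
    """Scalar form of max_k(a^k * x + b^k)."""
    return max(a * x + b for a, b in zip(slopes, intercepts))


def relu(x):
    # K = 2 with slopes (1, 0) and zero intercepts.
    return piecewise_max(x, (1.0, 0.0), (0.0, 0.0))


def leaky_relu(x, negative_slope=0.01):
    # Same as ReLU, but the second slope is a small fixed constant.
    # PReLU has the same form with a learned negative_slope.
    return piecewise_max(x, (1.0, negative_slope), (0.0, 0.0))


def se_scale(x, gate):
    # K = 1 with zero intercept: the activation is a pure channel rescaling.
    return piecewise_max(x, (gate,), (0.0,))
```

For example, `relu(-3.0)` gives `0.0`, while `se_scale(4.0, 0.25)` simply rescales the input to `1.0`.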

Variations of Dynamic ReLU

The paper provides three forms of DY-ReLU, which differ in how parameters are shared across spatial positions and channels:

DY-ReLU-A

Parameters are shared across both spatial positions and channels (spatial and channel-shared). The computation is shown in Figure 2a. Only $2K$ parameters need to be output, so this form is the cheapest to compute and also the least expressive.

DY-ReLU-B

Parameters are shared across spatial positions only (spatial-shared and channel-wise). The computation is shown in Figure 2b, and $2KC$ parameters are output.

DY-ReLU-C

Parameters are shared across neither spatial positions nor channels (spatial and channel-wise). Every element of every channel has its own activation function $\max_k\{a^k_{c,h,w} x_{c,h,w} + b^k_{c,h,w}\}$. This form is highly expressive, but it needs far too many parameters ($2KCHW$); producing them directly with fully connected layers, as in the previous forms, would add too much computation. The paper therefore factorizes the problem, as shown in Figure 2c: spatial positions are handled by a separate attention branch, and the channel-wise parameters $[a^{1:K}_{1:C}, b^{1:K}_{1:C}]$ are multiplied by the spatial attention $[\pi_{1:HW}]$. The attention is computed simply with a $1\times 1$ convolution followed by normalization, where the normalization is a constrained softmax function:

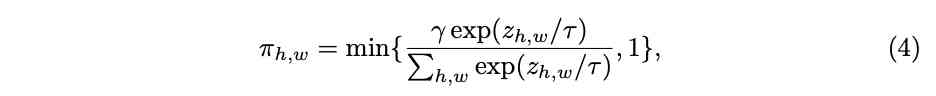

$\gamma$ is a scalar used to average the attention; the paper sets it to $\frac{HW}{3}$. $\tau$ is the temperature, which is set to a large value (10) at the start of training to prevent the attention from becoming too sparse.
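As a rough sketch of the spatial attention, the snippet below assumes the constrained softmax takes the form $\pi_{h,w} = \min\{\gamma \cdot \mathrm{softmax}(z/\tau)_{h,w},\ 1\}$, where $z$ holds the $H \times W$ scores from the $1\times 1$ convolution. The function name and the flat-list input are assumptions for illustration.

```python
import math


def spatial_attention(logits, tau=10.0, gamma=None):
    """Constrained softmax over a flat list of H*W attention scores."""
    if gamma is None:
        gamma = len(logits) / 3.0  # the HW / 3 setting described above
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp((z - peak) / tau) for z in logits]
    total = sum(exps)
    # Scale the softmax by gamma, then clip each weight at 1.
    return [min(gamma * e / total, 1.0) for e in exps]


uniform = spatial_attention([0.0] * 6)
peaked = spatial_attention([5.0, 0.0, 0.0, 0.0, 0.0, 0.0], tau=0.1)
```

With uniform scores every position receives $\gamma / HW = 1/3$. With a small temperature, almost all the weight lands on one position and is clipped at 1, which is the sparse behavior the large initial $\tau$ is meant to avoid.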

Experimental Results

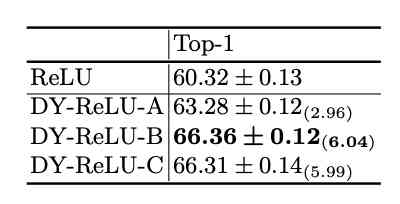

Comparison experiments on image classification.

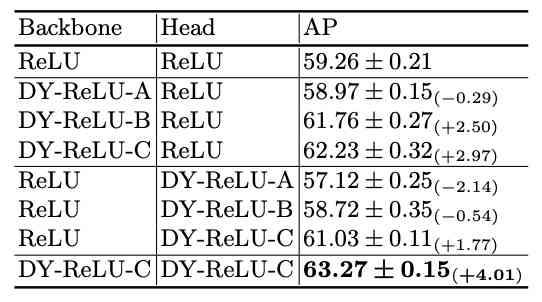

Comparison experiments on keypoint detection.

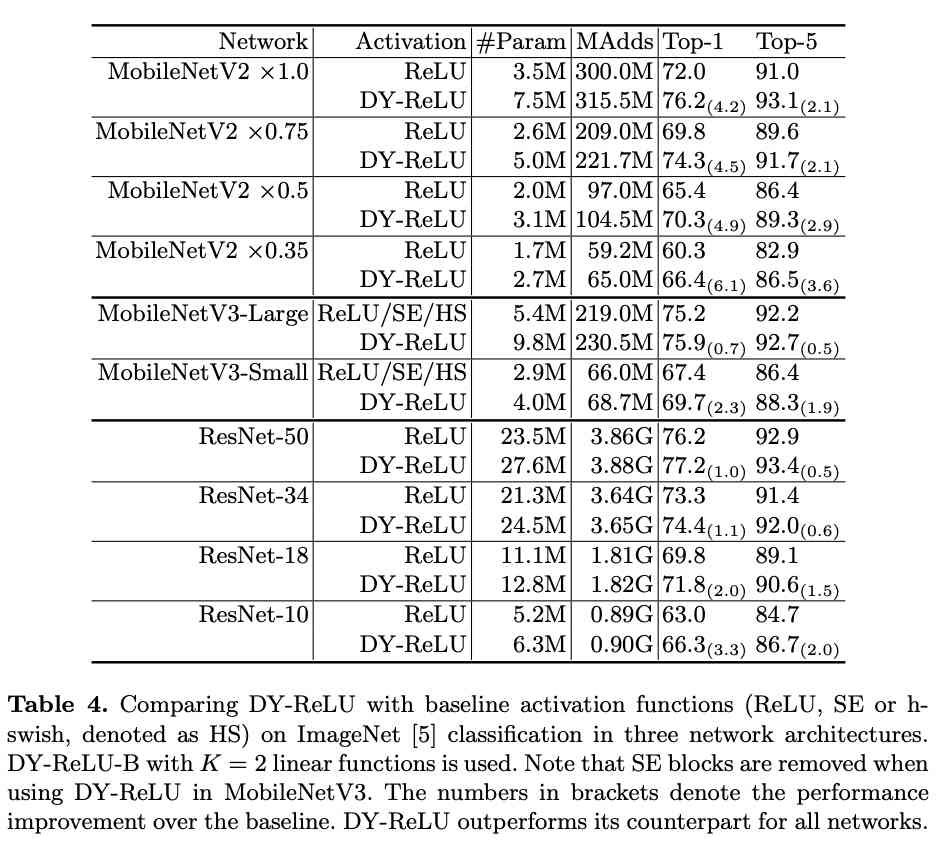

Comparison with ReLU on ImageNet from several angles.

Comparison with other activation functions.

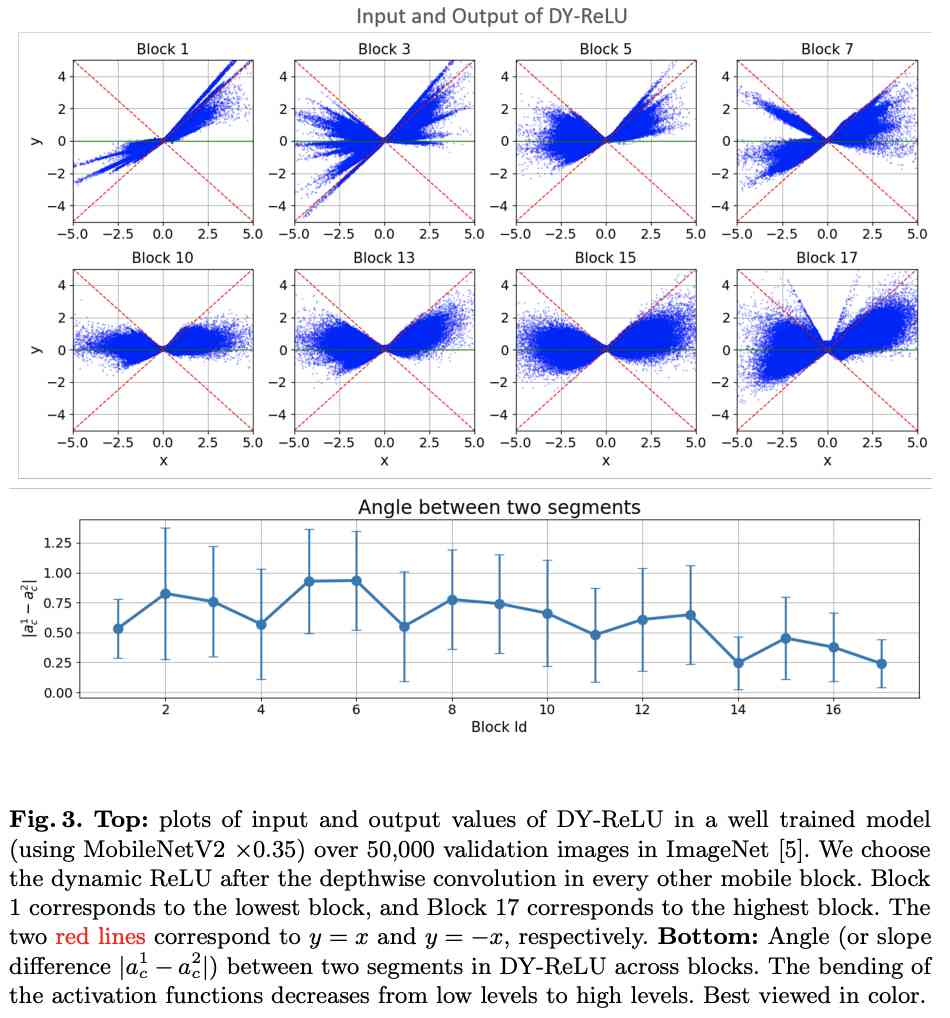

Visualization of the input, output, and slope changes of DY-ReLU in different blocks, which shows its dynamic behavior.

Conclusion

The paper proposes dynamic ReLU, which dynamically adjusts the corresponding piecewise activation function according to the input. Compared with ReLU and its variants, it delivers a large performance improvement for only a small amount of extra computation, and it can be embedded seamlessly into current mainstream models. A related work, APReLU, is also a dynamic ReLU with a very similar subnetwork structure, but because of the $\max_{1\le k \le K}$ in DY-ReLU, its range of possible functions and its effectiveness are greater than those of APReLU.

> If this article helped you, please give it a like or a share.

For more content, follow the WeChat official account [Xiaofei's Algorithm Engineering Notes].

版权声明

本文为[Xiaofei's notes on algorithm Engineering]所创,转载请带上原文链接,感谢

边栏推荐

猜你喜欢

The interface testing tool eolinker makes post request

为什么需要使用API管理平台

装饰器(二)

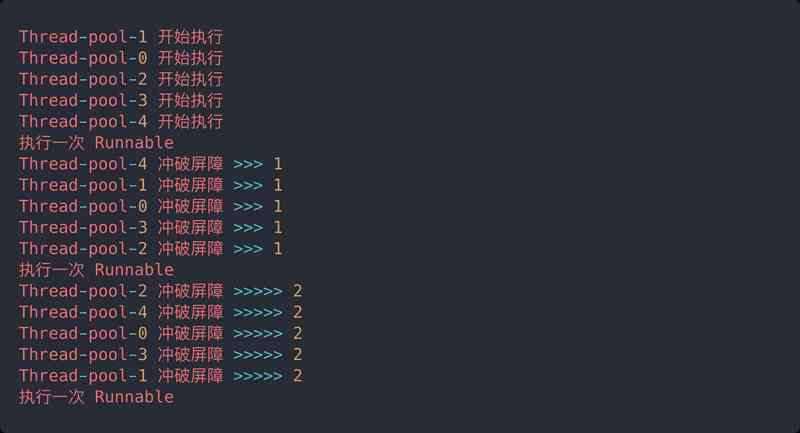

CountDownLatch 瞬间炸裂!同基于 AQS,凭什么 CyclicBarrier 可以这么秀?

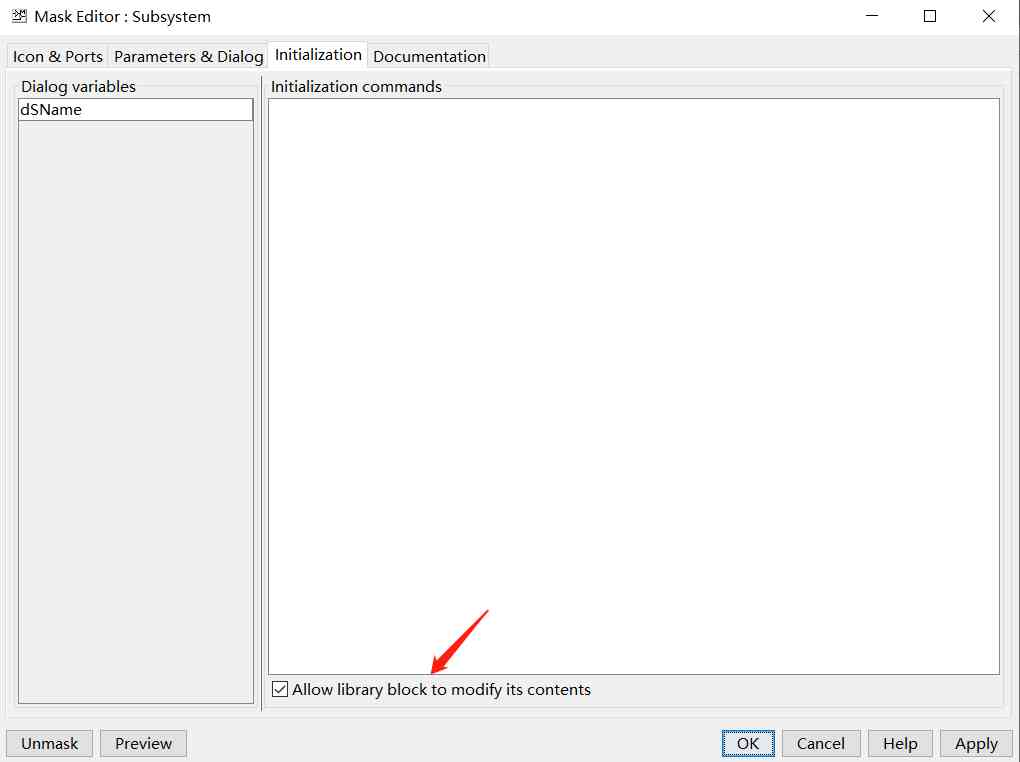

Package subsystem in Simulink

Opencv solves the problem of ippicv download failure_ 2019_ lnx_ intel64_ general_ 20180723.tgz offline Download

Development and deployment of image classifier application with fastai

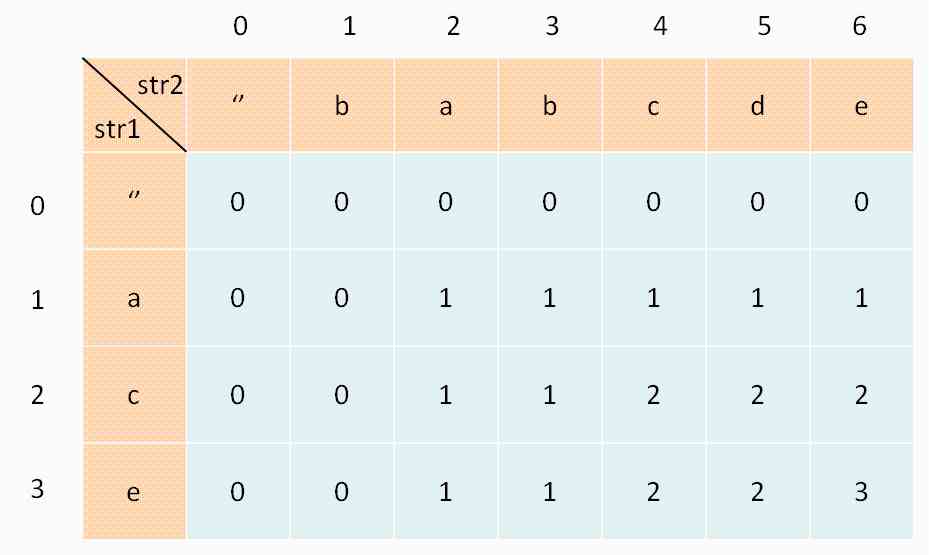

Classical dynamic programming: longest common subsequence

解决IE、firefox浏览器下JS的new Date()的值为Invalid Date、NaN-NaN的问题

Process thread coroutine

随机推荐

简明 VIM 练级攻略

C/C++学习日记:原码、反码和补码

Countdownlatch explodes instantly! Based on AQS, why can cyclicbarrier be so popular?

第一部分——第1章概述

WordPress网站程序和数据库定时备份到七牛云图文教程

用两个栈实现队列

Countdownlatch explodes instantly! Based on AQS, why can cyclicbarrier be so popular?

解决go get下载包失败问题

选择排序

Programmers should know the URI, a comprehensive understanding of the article

npm install 无响应解决方案

. net core cross platform resource monitoring library and dotnet tool

使用Fastai开发和部署图像分类器应用

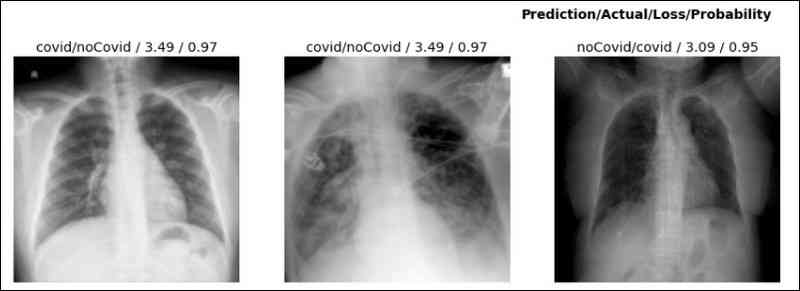

Using GaN based oversampling technique to improve the accuracy of model for mortality prediction of unbalanced covid-19

Why need to use API management platform

The interface testing tool eolinker makes post request

If the programming language as martial arts unique! C++ is Jiu Yin Jing. What about programmers?

Tasks of the first week of information security curriculum design (analysis of 7 instructions)

快来看看!AQS 和 CountDownLatch 有怎么样的关系?

Infix expression to suffix expression