
Classification performance on VGG16 after converting a video classification dataset into an image classification dataset

2022-06-11 06:13:00 【Glutinous rice balls】

Dataset processing

Recently I have been running experiments that extract frames from a video classification dataset to turn it into an image classification dataset, and then train and evaluate an existing model on it.

The post I referred to describes the whole process of building a video classification model in detail, but what I needed from it was mainly the dataset processing. So my experiment borrows that post's data preparation, with changes to some individual parts and some notes of my own.

To keep things simple, I only use the first 10 classes of UCF101. The goal is mainly to test how well a video dataset works as an image classification dataset after frame extraction, so other factors are ignored for now.

Point one: label every video

The original blog post derives each label from the video's class name. The label can also be taken from the class number listed after each video, and then there is no need for a separate step that converts text labels into numeric labels.

The original author's approach (label by class name):

```python
# Create labels for the training videos; each 'video_name' entry looks like
# 'ApplyEyeMakeup/v_ApplyEyeMakeup_g08_c01.avi 1'
train_video_tag = []  # label list
for i in range(train.shape[0]):  # shape[0] = number of rows, one iteration per video
    # The class name is the part of the entry before the first '/'
    train_video_tag.append(train['video_name'][i].split('/')[0])
train['tag'] = train_video_tag  # add a 'tag' column holding the labels
train.head()  # show the first 5 rows
```

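Labeling by number:

```python
# Create labels for the training videos
train_video_tag = []
for i in range(train.shape[0]):
    # The class number follows the '.avi' extension (e.g. ' 1');
    # int() ignores the leading space and gives a numeric label
    train_video_tag.append(int(train['video_name'][i].split('avi')[1]))
train['tag'] = train_video_tag
train.head()
```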

Which one to choose depends on what you need. I followed the original author's approach, which turned out to be the longer route.
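The two labeling options can be compared on a single entry. This is a minimal sketch that assumes each entry follows UCF101's `trainlist01.txt` format: the relative video path, a space, then the class number. The function names are hypothetical.

```python
def label_by_class_name(entry: str) -> str:
    """Class name = the folder part before the first '/'."""
    return entry.split('/')[0]


def label_by_number(entry: str) -> int:
    """Class number = the text after 'avi'; int() ignores the leading space."""
    return int(entry.split('avi')[1])


entry = 'ApplyEyeMakeup/v_ApplyEyeMakeup_g08_c01.avi 1'
print(label_by_class_name(entry))  # ApplyEyeMakeup
print(label_by_number(entry))      # 1
```

Splitting on the last space with `entry.rsplit(' ', 1)[1]` returns the same number. It has the advantage of not depending on the file extension.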

Point two: extract frames from the training videos

This is the core code. The original blogger's code had indentation problems and would not run, so I rewrote all of it with the help of another reference post, and it now works.

```python
import os

import cv2
from tqdm import tqdm

VIDEO_ROOT = 'Desktop/UCF10/videos_10/'    # folder with the videos of the 10 classes
EXTRACT_FOLDER = 'Desktop/UCF10/train_1/'  # where the frame pictures are stored
EXTRACT_FREQUENCY = 50  # keep one frame out of every 50 (at 25 fps, one frame every 2 s)


def extract_frames(video_path, dst_folder, video_name):
    """Save every EXTRACT_FREQUENCY-th frame of one video; return how many were saved."""
    video = cv2.VideoCapture()  # open the video file
    if not video.open(video_path):
        print("can not open the video:", video_path)
        return 0  # skip this video instead of exiting the whole script
    count = 1  # number of the current frame, starting from 1
    saved = 0
    while True:
        _, frame = video.read()  # read the video frame by frame
        if frame is None:  # end of the video
            break
        if count % EXTRACT_FREQUENCY == 0:
            # Name each frame after its video, e.g. v_ApplyEyeMakeup_g08_c01.avi_frame50.jpg
            save_path = os.path.join(dst_folder, video_name + "_frame%d.jpg" % count)
            cv2.imwrite(save_path, frame)  # save the frame
            saved += 1
        count += 1
    video.release()
    return saved


def main():
    # To regenerate everything, delete the old frame folder first:
    # import shutil; shutil.rmtree(EXTRACT_FOLDER, ignore_errors=True)
    os.makedirs(EXTRACT_FOLDER, exist_ok=True)
    # `train` is the data frame built above; tqdm shows a progress bar
    for i in tqdm(range(train.shape[0])):
        videoFile = train['video_name'][i]       # e.g. 'ApplyEyeMakeup/v_ApplyEyeMakeup_g08_c01.avi 1'
        relative_path = videoFile.split(' ')[0]  # drop the class number after the space
        video_name = relative_path.split('/')[1]
        n_saved = extract_frames(VIDEO_ROOT + relative_path, EXTRACT_FOLDER, video_name)
        print("Totally save {:d} pics".format(n_saved))


if __name__ == '__main__':
    main()
```
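With `EXTRACT_FREQUENCY = 50`, frames are counted from 1 and only frames 50, 100, 150, ... are kept. At 25 fps that is one frame every 2 seconds, so short clips yield only a few images. The hypothetical helper below (not part of the original code) lists which frames a clip of a given length would contribute.

```python
def sampled_frames(total_frames: int, every: int = 50, fps: float = 25.0):
    """Return (frame_number, time_in_seconds) for each frame the extraction loop keeps.

    Frame numbers start at 1, as in the loop, so frames `every`, `2 * every`, ... are kept.
    """
    return [(n, n / fps) for n in range(every, total_frames + 1, every)]


# A 7-second clip at 25 fps has 175 frames
print(sampled_frames(175))  # [(50, 2.0), (100, 4.0), (150, 6.0)]
```

Lowering `every` gives more images per video. Neighbouring frames, however, look very similar to each other.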

Point three: save the frame names and their labels in a .csv file. This file makes it easy to load the frames we need in the next step.

```python
import os
from glob import glob

import pandas as pd
from tqdm import tqdm

# Get the paths of all extracted frames
images = glob("Desktop/UCF10/train_1_50/*.jpg")

train_image = []
train_class = []
for i in tqdm(range(len(images))):
    # Image name without the folder, e.g. v_ApplyEyeMakeup_g08_c01.avi_frame50.jpg
    # (splitting on '50\\' only works for this folder name with Windows separators)
    name = os.path.basename(images[i])
    train_image.append(name)
    # Label = the class name between 'v_' and the next '_', e.g. ApplyEyeMakeup
    train_class.append(name.split('v_')[1].split('_')[0])

# Save the image names and their labels in a data frame
train_data = pd.DataFrame()
train_data['image'] = train_image
train_data['class'] = train_class

# Write the data frame to a csv file
train_data.to_csv('Desktop/UCF10/train_new.csv', header=True, index=False)
```

In this step, adjust the string splitting for the image name and the label to match your own paths. Copying the original author's code as-is did not work for me.
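The split strings depend on your own paths. One option is to put the parsing in a small function and check it on a sample path before processing every image. This sketch uses a hypothetical helper that accepts both `/` and `\` separators.

```python
import re


def parse_frame_path(path: str):
    """Split a frame path into (image_name, class_label).

    Handles both '/' and '\\' separators, so it does not depend on the
    folder name or the operating system.
    """
    name = re.split(r'[\\/]', path)[-1]            # file name only
    label = name.split('v_', 1)[1].split('_')[0]   # text between 'v_' and the next '_'
    return name, label


print(parse_frame_path('Desktop/UCF10/train_1_50\\v_ApplyEyeMakeup_g08_c01.avi_frame50.jpg'))
# ('v_ApplyEyeMakeup_g08_c01.avi_frame50.jpg', 'ApplyEyeMakeup')
```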

Read the csv file back:

```python
import pandas as pd

train = pd.read_csv('Desktop/UCF10/train_new.csv')
train.head()  # show the first 5 rows
```

Point four: use this .csv file to load the frames and save them as a NumPy array. I changed the approach here and stored all the pictures in a single .npy file.
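The post does not include the code for this step. The sketch below shows one possible way to do it and is not the author's method. It assumes the frames are in the `train_1_50` folder from point three and that every frame is resized to a fixed size. `IMAGE_SIZE = (32, 32)` is an assumption that matches a CIFAR-10-style VGG16. The loader is passed in as a parameter, so the stacking logic can be reused with another image library.

```python
import csv
import os

import numpy as np

IMAGE_DIR = 'Desktop/UCF10/train_1_50/'  # frame folder used in point three
IMAGE_SIZE = (32, 32)  # assumption: (width, height) your model expects


def read_image_list(csv_path):
    """Return the 'image' and 'class' columns of train_new.csv as two lists."""
    with open(csv_path, newline='') as f:
        rows = list(csv.DictReader(f))
    return [row['image'] for row in rows], [row['class'] for row in rows]


def load_with_cv2(path):
    """Read one frame with OpenCV (BGR channel order) and resize it to IMAGE_SIZE."""
    import cv2  # imported here so frames_to_array can be used with another loader
    img = cv2.imread(path)
    if img is None:  # cv2.imread returns None instead of raising on a bad path
        raise FileNotFoundError(path)
    return cv2.resize(img, IMAGE_SIZE)


def frames_to_array(image_names, image_dir=IMAGE_DIR, load_fn=load_with_cv2):
    """Load every listed frame and stack them into one (N, H, W, C) uint8 array."""
    frames = [load_fn(os.path.join(image_dir, name)) for name in image_names]
    return np.stack(frames).astype(np.uint8)


# Usage (not run here):
# names, labels = read_image_list('Desktop/UCF10/train_new.csv')
# X = frames_to_array(names)   # rows follow the csv order, so they line up with train['class']
# np.save('Desktop/UCF10/X_all', X)  # hypothetical file name; np.save adds .npy
```

Keeping the csv order matters. Point five pairs `X` with `train['class']` row by row.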

Point five: split the data into training and validation sets with their labels

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Read the .csv file with the image names and their class labels
train = pd.read_csv('train_new.csv')

# Target labels
y = train['class']

# X is the image array from point four (one row per csv entry, in the same order).
# Split 80/20 into training and validation sets; stratify=y keeps the class proportions.
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=42, test_size=0.2, stratify=y)
```

The rest of the original article builds a VGG16-based classification model and creates target-variable columns for the training and validation sets. I only need the datasets, so I simply save the results above.
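`stratify=y` asks `train_test_split` to keep each class's share the same in both parts. The stdlib-only sketch below illustrates that idea by shuffling each class separately and taking 20% of it for the test set. It is a hypothetical illustration, not scikit-learn's actual algorithm.

```python
import random
from collections import defaultdict


def stratified_split(labels, test_size=0.2, seed=42):
    """Return (train_idx, test_idx) so each class keeps about the same share in both parts."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    train_idx, test_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)                      # shuffle within each class
        n_test = round(len(indices) * test_size)  # per-class test count
        test_idx.extend(indices[:n_test])
        train_idx.extend(indices[n_test:])
    return sorted(train_idx), sorted(test_idx)


train_idx, test_idx = stratified_split(['A'] * 10 + ['B'] * 5)
print(len(train_idx), len(test_idx))  # 12 3
```

Here class A (10 samples) contributes 2 test samples and class B (5 samples) contributes 1. The test set therefore has the same 2:1 ratio as the full data.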

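Save the training and validation sets:

```python
import os

import numpy as np

os.makedirs("Desktop/UCF10/train1", exist_ok=True)  # np.save does not create folders
os.makedirs("Desktop/UCF10/test1", exist_ok=True)

# np.save appends the .npy extension. If y_train / y_test hold text labels, they are
# saved as object arrays, so load them with np.load(..., allow_pickle=True).
np.save("Desktop/UCF10/train1/Xtr01", X_train)
np.save("Desktop/UCF10/train1/Ytr01", y_train)
np.save("Desktop/UCF10/test1/Xte01", X_test)
np.save("Desktop/UCF10/test1/Yte01", y_test)
```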

Finally, convert the text labels into numeric labels. If you labeled the videos by number, you can skip this step.
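A minimal sketch of this conversion follows. It assumes the labels are class-name strings such as `ApplyEyeMakeup`, and the helper name is hypothetical. Each distinct name is mapped to an integer in alphabetical order, which is the same ordering scikit-learn's `LabelEncoder` uses for its `classes_`.

```python
def encode_labels(labels):
    """Map each distinct text label to an integer 0..K-1 (classes sorted alphabetically)."""
    classes = sorted(set(labels))
    class_to_index = {name: i for i, name in enumerate(classes)}
    return [class_to_index[name] for name in labels], classes


codes, classes = encode_labels(['Archery', 'ApplyEyeMakeup', 'Archery', 'ApplyLipstick'])
print(codes)    # [2, 0, 2, 1]
print(classes)  # ['ApplyEyeMakeup', 'ApplyLipstick', 'Archery']
```

Build the mapping once from all labels and apply it to both the training and the validation labels. Otherwise the same class could receive different numbers in the two sets.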

Testing the dataset on the model

The hyperparameters of my existing VGG16 model were set for training on CIFAR-10: learning_rate = 0.02, batch_size = 100, epoch = 1, momentum = 0.5.

With the processed dataset as input, however, the loss was NaN, the accuracy after one iteration was only about 11%, and every test gave the same accuracy.

So I adjusted the hyperparameters to learning_rate = 0.002, batch_size = 20, epoch = 1, momentum = 0.5 and tested again to get the loss and accuracy.
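The post does not say why the loss became NaN. A common cause is a learning rate that is too large, which makes training diverge until the numbers overflow. The toy sketch below illustrates that mechanism on a made-up one-dimensional quadratic loss. The curvature of 200 was chosen only to make the numbers easy to follow; this is not VGG16. It runs SGD with momentum 0.5 at the post's two learning rates, using the update form of PyTorch's `torch.optim.SGD`. The post does not state which framework the author used.

```python
def run_momentum_sgd(lr, momentum=0.5, curvature=200.0, steps=2000):
    """Minimize the toy loss f(w) = curvature * w**2 / 2 with SGD + momentum; return the final loss.

    Update rule (heavy-ball form):
        v = momentum * v + grad
        w = w - lr * v
    """
    w, v = 1.0, 0.0
    for _ in range(steps):
        grad = curvature * w
        v = momentum * v + grad
        w = w - lr * v
    return curvature * w * w / 2


print(run_momentum_sgd(lr=0.02))   # not finite: the iterates blow up
print(run_momentum_sgd(lr=0.002))  # close to 0: the iterates converge
```

For this update rule the iteration is stable only when 0 < lr × curvature < 2 × (1 + momentum), which is 3 for momentum 0.5. With lr = 0.02 the product is 4 and training diverges; with lr = 0.002 it is 0.4 and training converges. A real network's curvature is unknown, so this shows only the mechanism, not the exact threshold for the author's model.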

You can then compare the true labels with the predicted labels.

Looking at individual labels does not tell you much, though. As my teacher pointed out, what really matters is the test accuracy.
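Test accuracy is just the fraction of samples whose predicted label matches the true label. The small stdlib sketch below computes it from two label lists. The function name is hypothetical; most frameworks provide their own equivalent.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label equals the true label."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if len(y_true) == 0:
        raise ValueError("no samples")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


print(accuracy([0, 1, 2, 2, 9], [0, 1, 1, 2, 3]))  # 0.6
```

For reference, with 10 roughly balanced classes, always guessing the same class scores about 1/10 = 10%. The 11% seen with the original hyperparameters is therefore close to chance level.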

边栏推荐

- What happened to the young man who loved to write code -- approaching the "Yao Guang young man" of Huawei cloud

- Cocoatouch framework and building application interface

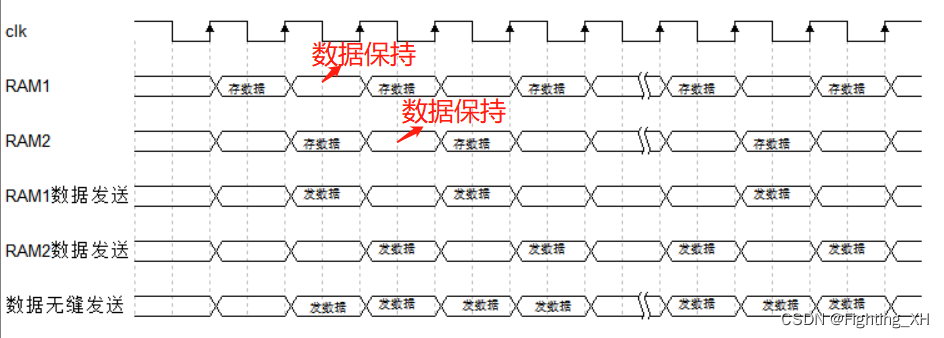

- FPGA面试题目笔记(三)——跨时钟域中握手信号同步的实现、任意分频、进制转换、RAM存储器等、原码反码和补码

- Print sparse arrays and restore

- Sword finger offer 50: the first character that appears only once

- Functional interface lambda, elegant code development

- 山东大学项目实训之examineListActivity

- Sword finger offer 04: find in 2D array

- Méthode de la partie du tableau

- Servlet

猜你喜欢

Yonghong Bi product experience (I) data source module

![Chapter 1 of machine learning [series] linear regression model](/img/e2/1f092d409cb57130125b0d59c8fd27.jpg)

Chapter 1 of machine learning [series] linear regression model

FPGA设计——乒乓操作实现与modelsim仿真

![Chapter 2 of machine learning [series] logistic regression model](/img/8f/b4c302c0309f5c91c7a40e682f9269.jpg)

Chapter 2 of machine learning [series] logistic regression model

verilog实现双目摄像头图像数据采集并modelsim仿真,最终matlab进行图像显示

FPGA面试题目笔记(二)——同步异步D触发器、静动态时序分析、分频设计、Retiming

Squid agent

Cenos7 builds redis-3.2.9 and integrates jedis

![[must see for game development] 3-step configuration p4ignore + wonderful Q & A analysis (reprinted from user articles)](/img/4c/42933ac0fde18798ed74a23279c826.jpg)

[must see for game development] 3-step configuration p4ignore + wonderful Q & A analysis (reprinted from user articles)

Servlet

随机推荐

数组部分方法

What should the cross-border e-commerce evaluation team do?

verilog实现双目摄像头图像数据采集并modelsim仿真,最终matlab进行图像显示

FIFO最小深度计算的题目合集

autojs,读取一行删除一行,停止自己外的脚本

MySQL implements over partition by (sorting the data in the group after grouping)

CCF 2013 12-5 I‘m stuck

Goodbye 2021 Hello 2022

This is probably the most comprehensive project about Twitter information crawler search on the Chinese Internet

Fix [no Internet, security] problem

Shuffleerror:error in shuffle in fetcher solution

Detailed steps for installing mysql-5.6.16 64 bit green version

[must see for game development] 3-step configuration p4ignore + wonderful Q & A analysis (reprinted from user articles)

JS -- reference type

Quartz2d drawing technology

The difference between call and apply and bind

使用Meshlab对CAD模型采样点云,并在PCL中显示

Using idea to add, delete, modify and query database

Completabilefuture asynchronous task choreography usage and explanation

Analyze the capacity expansion mechanism of ArrayList