当前位置:网站首页>SSD technical features

SSD technical features

2022-07-06 12:23:00 【wx5caecf2ed0645】

NOR and NAND Are a kind of flash memory technology ,NOR yes Intel company-developed , It's a bit like memory , Allow direct access to any memory unit through address , The disadvantage is that : Low density ( Small capacity ), Writing and erasing are slow .NAND Developed by Toshiba , It has a high density ( Large capacity ), The speed of writing and erasing is very fast , But it must be through specific IO The interface can only be accessed after address translation , Some are similar to disks .

We are now widely used U disc ,SD card ,SSD All belong to NAND type , Manufacturers will flash memory Encapsulate into different interfaces , such as Intel Of SSD That is to say SATA The interface of , Access and general SATA It's like a disk , There are also some enterprise flash cards , such as FusionIO, Then the package is PCIe Interface .

SLC and MLC

SLC It is a unipolar unit ,MLC It is a multi-level unit , The difference between the two is the amount of data stored in each unit ( density ),SLC Only one bit is stored in each cell , Contains only 0 and 1 Two voltage symbols ,MLC Each unit can store two digits , Contains four voltage symbols (00,01,10,11). obviously ,MLC Storage capacity ratio SLC Big , however SLC Simpler and more reliable ,SLC The speed of reading and writing is faster than MLC faster , and SLC Than MLC More durable ,MLC Each unit can be erased 1w Time , and SLC Erasable 10w Time , therefore , Enterprise flash memory products are generally selected SLC, This is also why enterprise products are much more expensive than household products .

SSD The technical characteristics of

SSD Compared with traditional disks , The first is that there is no mechanical device , The second is to change from magnetic medium to dielectric . stay SSD There's a FTL(Flash Transalation Layer), It is equivalent to the controller in the disk , The main function is to do address mapping , take flash memory The physical address of is mapped to that of the disk LBA Logical address , And provide OS Make transparent access .

SSD There is no seek time and delay time of traditional disk , therefore SSD It can provide very high random reading ability , This is its biggest advantage ,SLC Type of SSD Usually more than 35000 Of IOPS, Tradition 15k Of SAS disk , At most, it can only reach 160 individual IOPS, This is almost an astronomical number for traditional disks .SSD The advantage of continuous reading ability over ordinary disks is not obvious , Because continuous reading for traditional disks , No seek time is required ,15k Of SAS disk , The throughput of continuous reading can reach 130MB, and SLC Type of SSD You can achieve 170-200MB, We see in terms of throughput ,SSD Although higher than traditional disks , But the advantage is not obvious .

SSD The write operation of is quite special ,SSD The minimum write unit of is 4KB, Called page (page), When writing a blank position, you can press 4KB Units written , But if you need to rewrite a unit , An additional erase is required (erase) action , The unit of erasure is generally 128 individual page(512KB), Each erasure unit is called a block (block). If to a blank page When writing information , You can write directly without erasing , But if you need to overwrite a storage unit (page) The data of , The entire block Read in cache , And then modify the data , And erase the whole block The data of , Finally, the whole block write in , Obviously ,SSD Rewriting data is expensive ,SSD This characteristic of , We call it erase-before-write.

After testing ,SLC SSD The random write performance of can reach 3000 About IOPS, Continuous write throughput can reach 170-200MB, This data is still much higher than traditional disks . however , With SSD Keep writing , When more and more data needs to be rewritten , The performance of writing will gradually decline . After our test ,SLC It is obviously better than MLC, After a long write ,MLC Write at random IO It's going down a lot , and SLC Performance is relatively stable . To solve this problem , Various manufacturers have many strategies to prevent write performance degradation .

wear leveling

because SSD There is “ Write wear ” The problem of , When a unit is rewritten repeatedly for a long time ( such as Oracle redo), It will not only cause performance problems of writing , And it will be greatly shortened SSD The service life of , Therefore, we must design a load balancing algorithm to ensure SSD Each unit of can be used in a balanced way , This is it. wear leveling, It is called loss equalization algorithm .

Wear leveling It's also SSD Inside FTL Realized , It achieves the purpose of balancing losses through data migration .Wear leveling Depend on SSD Part of the reserved space , The basic principle is SSD Two... Are set in block pool, One is free block pool( Free pool ), One is data pool (data block pool), When you need to rewrite something page when ( If the original position is written , You must erase the entire block, Then you can write data ), Do not write to the original location ( Actions that do not require erasure ), Instead, take new ones out of the free pool block, Merge the existing data and the data to be rewritten into a new block, Write new blanks together block, The original block Identified as invalid state ( Waiting to be erased and recycled ), new block Then enter the data pool . Background tasks will be scheduled from data block Get invalid data from block, After erasure, it is recycled to the free pool . The advantage of doing so is , First, it will not erase the same block, Second, the writing speed will be faster ( The action of erasing is omitted ).

Wear leveling Divided into two : Dynamic loss equalization and static loss equalization , The principles of both are consistent , The difference is that dynamic algorithms only deal with dynamic data , For example, data migration will be triggered when data is rewritten , It has no effect on static data , Static algorithm can balance static data , When the background task finds a static data block with low consumption , Migrate it to other database blocks , Put these blocks into the free pool for use . From the perspective of equilibrium effect , Static algorithm is better than dynamic algorithm , Because almost all of them block Can be used in a balanced way ,SSD Your life will be greatly extended , But the disadvantage of static algorithm is when data is migrated , It may lead to write performance degradation .

Write to enlarge

because SSD Of erase-before-write Characteristics of , So there is a concept of write amplification , For example, you want to rewrite 4K The data of , You must first erase the entire block (512KB) The data in is read into the cache , After rewriting , Write the whole block together , At this time, you actually write 512KB The data of , The write amplification factor is 128. The best case of write amplification is 1, There is no amplification .

Wear leveling The algorithm can effectively alleviate the problem of write amplification , However, unreasonable algorithms will still lead to write amplification , For example, the user needs to write 4k Data time , Find out free block pool There is no blank block, At this time, it must be in data block pool Select a containing invalid data block, Read into the cache first , After rewriting , Write the whole block together , use wear leveling The algorithm will still have the problem of write amplification .

By providing SSD Reserve more space , It can significantly alleviate the performance problems caused by write amplification . According to our test results ,MLC SSD After a long random write , Performance degradation is obvious ( Write at random IOPS Even reduced to 300). If wear leveling Reserve more space , Can significantly improve MLC SSD Performance degradation after long write operations , And the more space reserved , The more obvious the performance improvement . By comparison ,SLC SSD The performance of is much more stable (IOPS After a long period of random writing , Random writing can be stable at 3000 IOPS), I think so SLC SSD The capacity is usually small (32G and 64G), And for wear leveling The reason why the space is relatively large .

database IO Characteristic analysis

IO There are four types : Read continuously , random block read , Random writing and continuous writing , Continuous reading and writing IO size It's usually bigger (128KB-1MB), It mainly measures throughput , And random reading and writing IO size The relatively small ( Less than 8KB), The main measure is IOPS And response time . The full table scan in the database is a continuous read IO, Index access is a typical random read IO, Log files are written continuously IO, Data files are written randomly IO.

The database system is designed based on the traditional disk access characteristics , The biggest feature is that the log file adopts sequential logging, Log files in the database , It is required that it must be written to disk when the transaction is committed , The demand for response time is very high , So it is designed to be written in sequence , It can effectively reduce the time spent on disk seek , Reduce latency . Log files are written in sequence , Although the physical location is continuous , But it is different from the traditional continuous write type , Of the log file IO size Very small ( Usually less than 4K), Every IO Between is independent ( The magnetic head must be raised to seek again , And wait for the disk to rotate to the corresponding position ), And the interval is very short , Database access log buffer( cache ) and group commit The way ( Submit in bulk ) To improve IO size Size , And reduce IO The number of times , Thus, the response delay is smaller , Therefore, the sequential writing of log files can be regarded as “ Random writing of consecutive positions ”, The bottleneck is still IOPS, Not throughput .

The data file adopts in place update The way , It means that the modification of the data file is written to the original location , Data files are different from log files , Not in business commit Write data file when , Only when the database finds dirty buffer Too much or need to be done checkpoint In action , Will refresh these dirty buffer Go to the right place , It's an asynchronous process , Usually , Random write pairs of data files IO The requirements are not particularly high , As long as meet checkpoint and dirty buffer Just meet your requirements .

SSD Of IO Characteristic analysis

1. Random reading ability is very good , Continuous reading performance is average , But more than ordinary SAS Good disk .

2. There is no delay time for disk seek , There is little difference in response latency between random writes and continuous writes .

3.erase-before-write characteristic , Cause write amplification , Affect the performance of writing .

4. Write wear characteristics , use wear leveling Algorithm prolongs life , But at the same time, it will affect the performance of reading .

5. Read and write IO Response delays are not equal ( Reading is much better than writing ), And ordinary disk read and write IO The difference in response latency is small .

6. Continuous write performance is better than random write , such as 1M Write in sequence 128 individual 8K It's much better to write immediately , Because random writing will bring a lot of erasure .

be based on SSD The above characteristics of , If you put all the databases in SSD On , There may be the following problems :

1. Log files sequential logging Will repeatedly erase the same position , Although there are loss equalization algorithms , But writing for a long time will still lead to performance degradation .

2. Data files in place update A large number of random writes will be generated ,erase-before-write Write amplification will occur .

3. Database read-write hybrid application , There are a lot of random writes , At the same time, it will affect the performance of reading , Produce a lot of IO Delay .

be based on SSD Database optimization rules :

be based on SSD The optimization of is to solve erase-before-write The resulting write amplification problem , Different types of IO Separate , Reduce the performance impact of write operations .

1. take sequential logging It is amended as follows In-page logging, Avoid repeatedly erasing the same position .

2. A large number of in-place update Random writes are merged into a few sequential writes .

3. utilize SSD High random reading and writing ability , Reduce writing and increase reading , So as to improve the overall performance .

In-page logging

In-page logging Is based on SSD To the database sequential logging An optimization method of , In the database sequential logging It is very beneficial to traditional disks , It can greatly improve the response time , But for SSD It's a nightmare , Because you need to erase the same position repeatedly , and wear leveling Although the algorithm can balance the load , But it will still affect the performance , And produce a lot of IO Delay . therefore In-page logging Merge logs and data , Change log sequential write to random write , be based on SSD For random writing and continuous writing IO Characteristics of little difference in response delay , Avoid repeatedly erasing the same position , Improve overall performance .

In-page logging The basic principle : stay data buffer in , There is one in-memory log sector Structure , Be similar to log buffer, Every log sector Is with the data block Corresponding . stay data buffer in ,data and log Not merged , It's just data block and log sector A correspondence is established between , You can put a data block Of log separate from . however , stay SSD At the bottom flash memory in , Data and logs are stored in the same block( Erase unit ), Every block Both contain data page and log page.

When log information needs to be written (log buffer Insufficient space or transaction commit ), Log information will be written to flash memory Corresponding block in , That is to say, the log information is distributed in many different block Medium , And each block The log information in is append write, So there is no need to erase . When a block Medium log sector When it's full , At this time, an action will occur , Will the whole block Read out the information in , Then apply block Medium log sector, You can get the latest data , And then the whole block write in , At this time ,block Medium log sector It's blank .

stay in-page logging In the method ,data buffer Medium dirty block No need to write to flash memory Medium , Even if the dirty buffer Need to be exchanged , There is no need to write them flash memory in . When you need to read the latest data , As long as block Merge the data and log information in , You can get the latest data .

In-page logging Method , Put the log and data in the same erasure unit , Less right flash Repeated erasure in the same position , And there's no need to dirty block Write to flash in , A lot less in-place update Random write and erase actions . Although when reading , You need to make a merge The operation of , But because data and logs are stored together , and SSD The random reading ability of is very high ,in-page logging It can improve the overall performance .

SSD As writing cache—append write

SSD It can be used as a disk write cache, because SSD Continuous write performance is better than random write , such as :1M Write in sequence 128 individual 8K Random writing is much better , We can combine a large number of random writes into a small number of sequential writes , increase IO Size , Reduce IO( erase ) The number of times , Improve write performance . This method is related to many NoSQL Of the product append write similar , That is, do not rewrite the data , Just append data , Merge when necessary .

The basic principle : When dirty block When you need to write to a data file , Do not directly update the original data file , But first IO Merge , There will be many 8K Of dirty block Merge into one 512KB Write unit of , And USES the append write The way to write to a cache file in ( Save in SSD On ), Avoid erasing , Improved write performance .cache file The data in is written sequentially in a circular manner , When cache file When there is not enough space , The background process will cache file The data in is written to the real data file ( Save on disk ), This is the second time IO Merge , take cache file Merge the data in , Integrate into a small number of sequential writes , For disks , The final IO yes 1M Write in the order of , Sequential writes only affect throughput , The throughput of disk will not become a bottleneck , take IOPS The bottleneck of is transformed into the bottleneck of throughput , Thus, the overall system capability is improved .

When reading data , You must first read cache file, and cache file The data in is stored out of order , For quick retrieval cache file Data in , Generally, it will be cache file Build an index , The index will be queried first when reading data , If you hit the query cache file, If you don't hit , Read again data file( Normal disk ), therefore , This method is actually more than just writing cache, It also plays a role in reading cache The role of .

SSD It is not suitable to put the log file of the database , Although the log file is also append write, But because of the log file IO size The relatively small , And must be written synchronously , Cannot merge , Yes SSD Come on , Requires a lot of erasure . We have also tried to put redo log Put it in SSD On , in consideration of SSD Random writing of can also achieve 3000 IOPS, And the response delay is much lower than that of disk , But it depends on SSD Of itself wear leveling Whether the algorithm is excellent , And the log file must be stored independently , If the writing of log files is a bottleneck , It can also be regarded as a solution . Usually , I still suggest that log files be placed on ordinary disks , instead of SSD.

SSD As a reading cache—flashcache

Because most databases are read more and write less , therefore SSD As a database flashcache It is the simplest of the optimization schemes , It can be fully utilized SSD Advantages of reading performance , Again, avoid SSD Write performance problems . There are many ways to do this , When reading data , Write data at the same time SSD, You can also print out the data buffer when , Write to SSD. When reading data , First, in the buffer Query in , And then in flashcache Query in , Last read datafile.

SSD As flashcache And memcache As external to the database cache The biggest difference is ,SSD Data is not lost after power failure , This also caused another thought , When the database fails and restarts ,flashcache Is the data in valid or invalid ? If it works , Then we must always ensure flashcache Consistency of data in , If it's invalid , that flashcache There is also a problem of preheating ( This is related to memcache The problem after power failure is the same ). at present , as far as I am concerned , Basically, it is considered invalid , Because to keep flashcache Consistency of data in , Very difficult .

flashcache As the second level between memory and disk cache, In addition to the performance improvement , From a cost perspective ,SSD The price of is between memory and disk Between , As a layer between the two cache, You can find a balance between performance and price .

summary

With SSD The price keeps falling , Capacity and performance continue to improve ,SSD Replacing disks is just a matter of time .

边栏推荐

- Arduino get random number

- Time slice polling scheduling of RT thread threads

- Walk into WPF's drawing Bing Dwen Dwen

- imgcat使用心得

- Rough analysis of map file

- ESP learning problem record

- Who says that PT online schema change does not lock the table, or deadlock

- (五)R语言入门生物信息学——ORF和序列分析

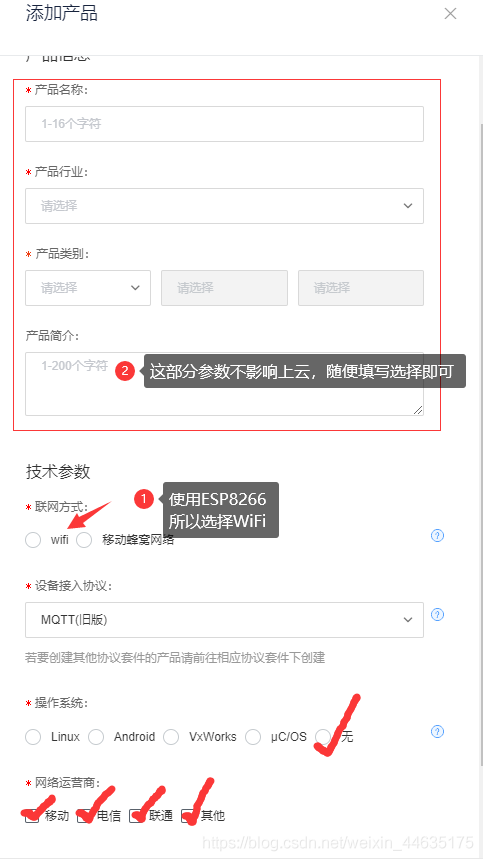

- ESP8266连接onenet(旧版MQTT方式)

- Types de variables JS et transformations de type communes

猜你喜欢

ES6 grammar summary -- Part 2 (advanced part es6~es11)

Learning notes of JS variable scope and function

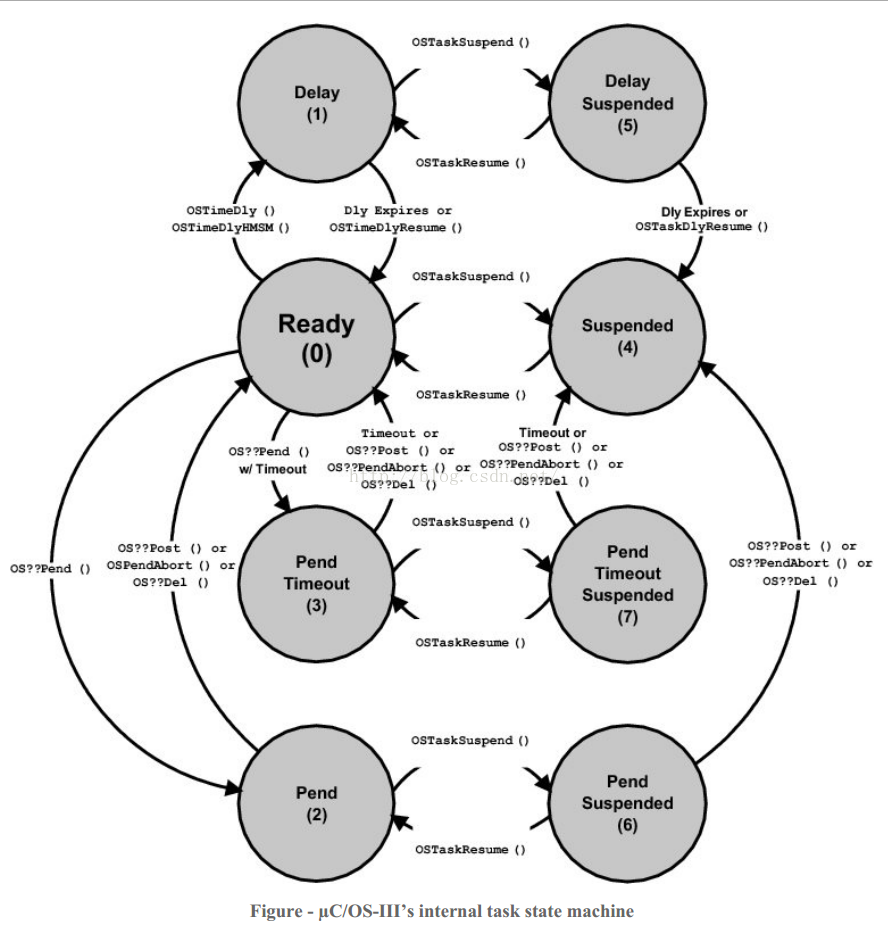

Characteristics, task status and startup of UCOS III

JS数组常用方法的分类、理解和运用

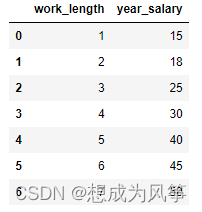

E-commerce data analysis -- salary prediction (linear regression)

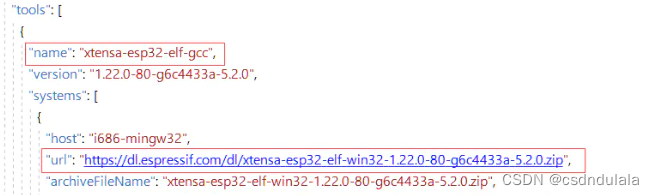

【ESP32学习-1】Arduino ESP32开发环境搭建

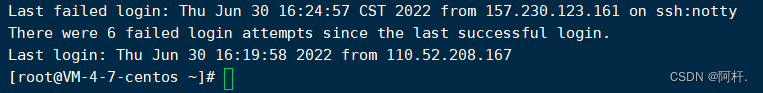

Remember an experience of ECS being blown up by passwords - closing a small black house, changing passwords, and changing ports

ESP8266连接onenet(旧版MQTT方式)

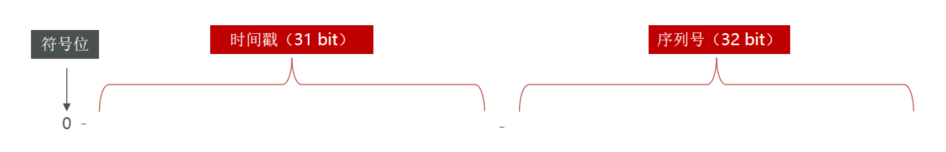

基於Redis的分布式ID生成器

Basic operations of databases and tables ----- modifying data tables

随机推荐

JS变量类型以及常用类型转换

[Leetcode15]三数之和

(五)R语言入门生物信息学——ORF和序列分析

[golang] leetcode intermediate - fill in the next right node pointer of each node & the k-smallest element in the binary search tree

MySQL時間、時區、自動填充0的問題

2021.11.10汇编考试

如何给Arduino项目添加音乐播放功能

About using @controller in gateway

MP3mini播放模块arduino<DFRobotDFPlayerMini.h>函数详解

CUDA C programming authoritative guide Grossman Chapter 4 global memory

Talking about the startup of Oracle Database

Oppo vooc fast charging circuit and protocol

Types de variables JS et transformations de type communes

Learning notes of JS variable scope and function

Remember an experience of ECS being blown up by passwords - closing a small black house, changing passwords, and changing ports

Feature of sklearn_ extraction. text. CountVectorizer / TfidVectorizer

Gateway 根据服务名路由失败,报错 Service Unavailable, status=503

编译原理:源程序的预处理及词法分析程序的设计与实现(含代码)

C language callback function [C language]

Missing value filling in data analysis (focus on multiple interpolation method, miseforest)